Learning from machine learning (Saturday, philosophical)

Machine learning keeps drawing new enthusiasts into its orbit, and a few years ago I became one of them. I represent one of the more peripheral groups: an economist who works with data in practice. Data has always been a problem in economics (and it still is), so it was easy to buy into the “big data” mantra. From big data it was an easy step, following Gartner, to machine learning in 2016.

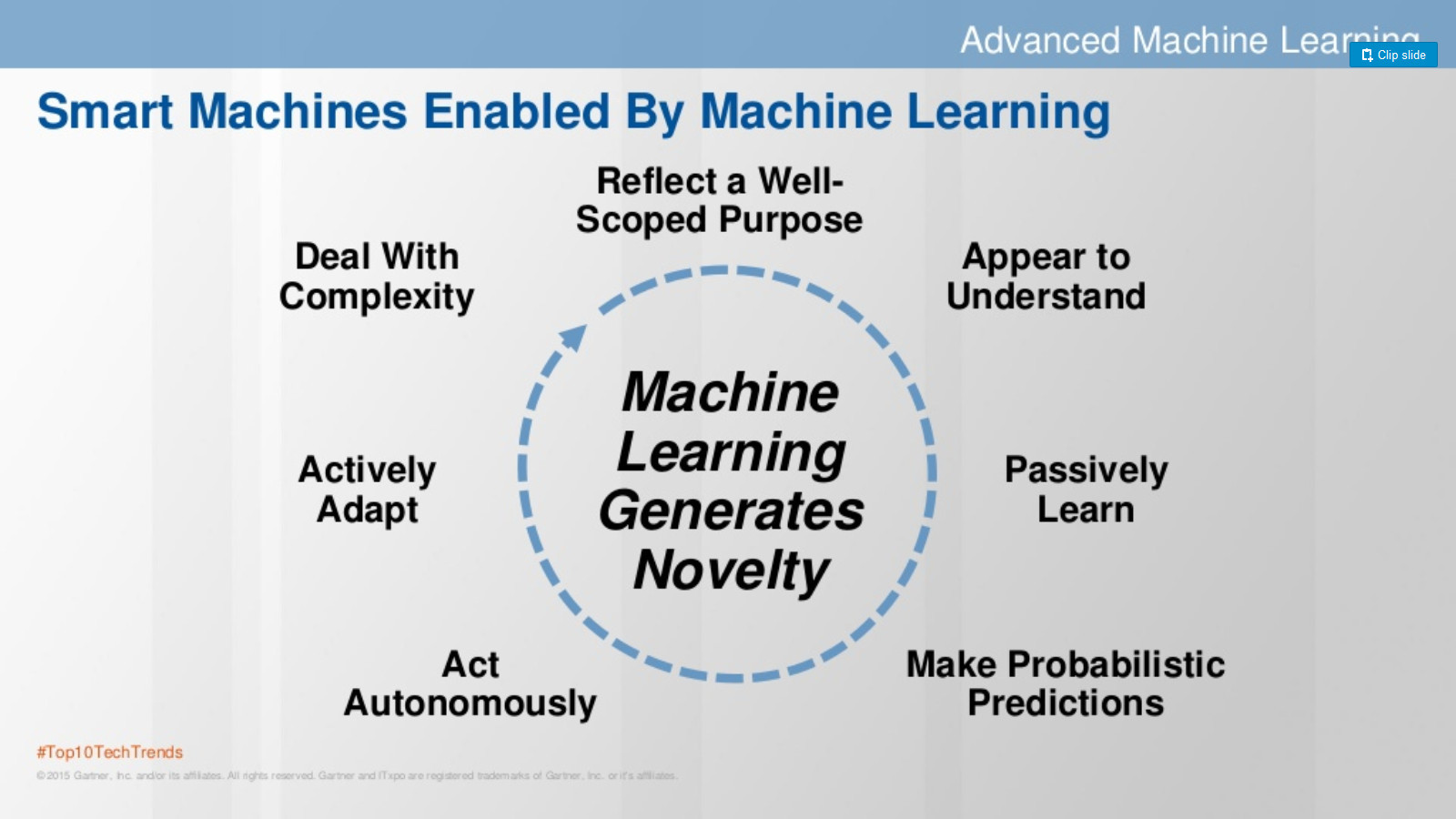

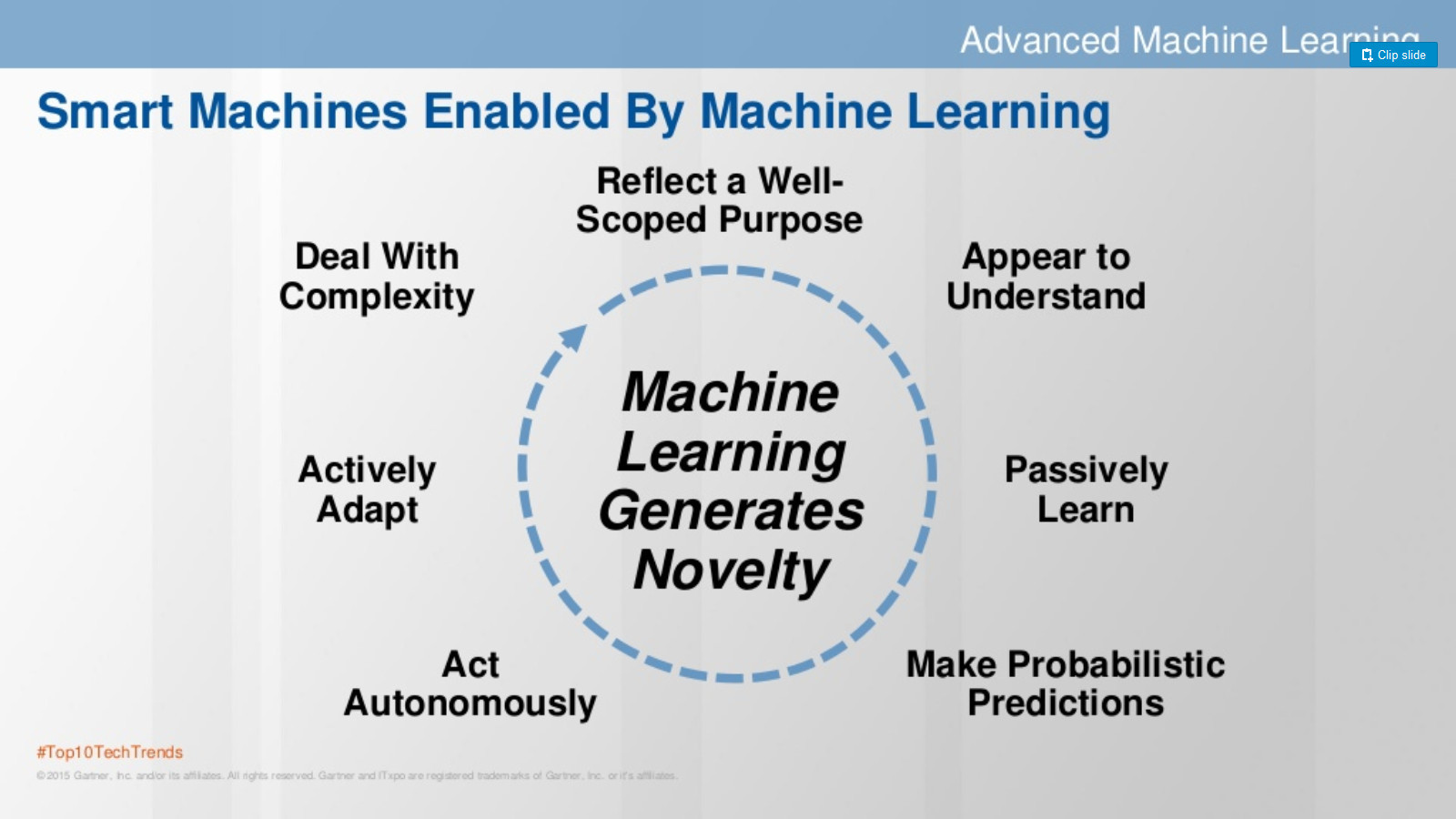

The more you deal with this topic, the more interesting it becomes, especially in light of ongoing predictions about the coming era of robots, smart machines, etc. It is not surprising that such machines will be created: evolution shows that humans learn to extend themselves, forming a human-machine symbiosis. Sometimes you walk past your fence and see a nail sticking out. How hard it is to drive it in without a hammer; with a hammer, one blow and it's done. So it is not surprising that similar "helpers" for mental activity are appearing.

Throughout my study of the topic, I kept thinking that machine learning seems to explain how our mind works. Below I list the lessons about people that I learned while studying machine learning. I do not claim to be right, and I apologize if all this is obvious; I will be glad if the material is amusing, or if there are counter-examples that let me go back to believing in the “incomprehensible”. By the way, HSE has a course where machine learning is used to understand how the brain works.

1) An ensemble of the simplest algorithms can make sense of an unimaginable heap of data with good accuracy. This reminds me of the recently departed Marvin Minsky, who wrote a whole book (The Society of Mind) about mindless agents that together form our mind. This interpretation makes it possible to imagine that all the magic of our mind is nothing more than a collection of simple models trained by experience.
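A minimal sketch of why an ensemble of simple models can be accurate, under the strong (and in practice unrealistic) assumption that the models err independently: if each of n voters is right with probability p > 0.5, the majority vote is right far more often. The function name and the numbers are illustrative assumptions, not taken from any particular algorithm mentioned above.

```python
from math import comb

def majority_vote_accuracy(n_models: int, p_correct: float) -> float:
    """Probability that a strict majority of n independent models is correct.

    Assumes an odd number of models (no ties) and independent errors.
    """
    if n_models % 2 == 0:
        raise ValueError("use an odd number of models to avoid ties")
    need = n_models // 2 + 1  # smallest strict majority
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(need, n_models + 1)
    )

# Each "stupid agent" is right only 60% of the time.
for n in (1, 11, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

Correlated errors shrink this gain considerably, which is why ensemble methods such as bagging and random forests try to make their members differ from one another.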

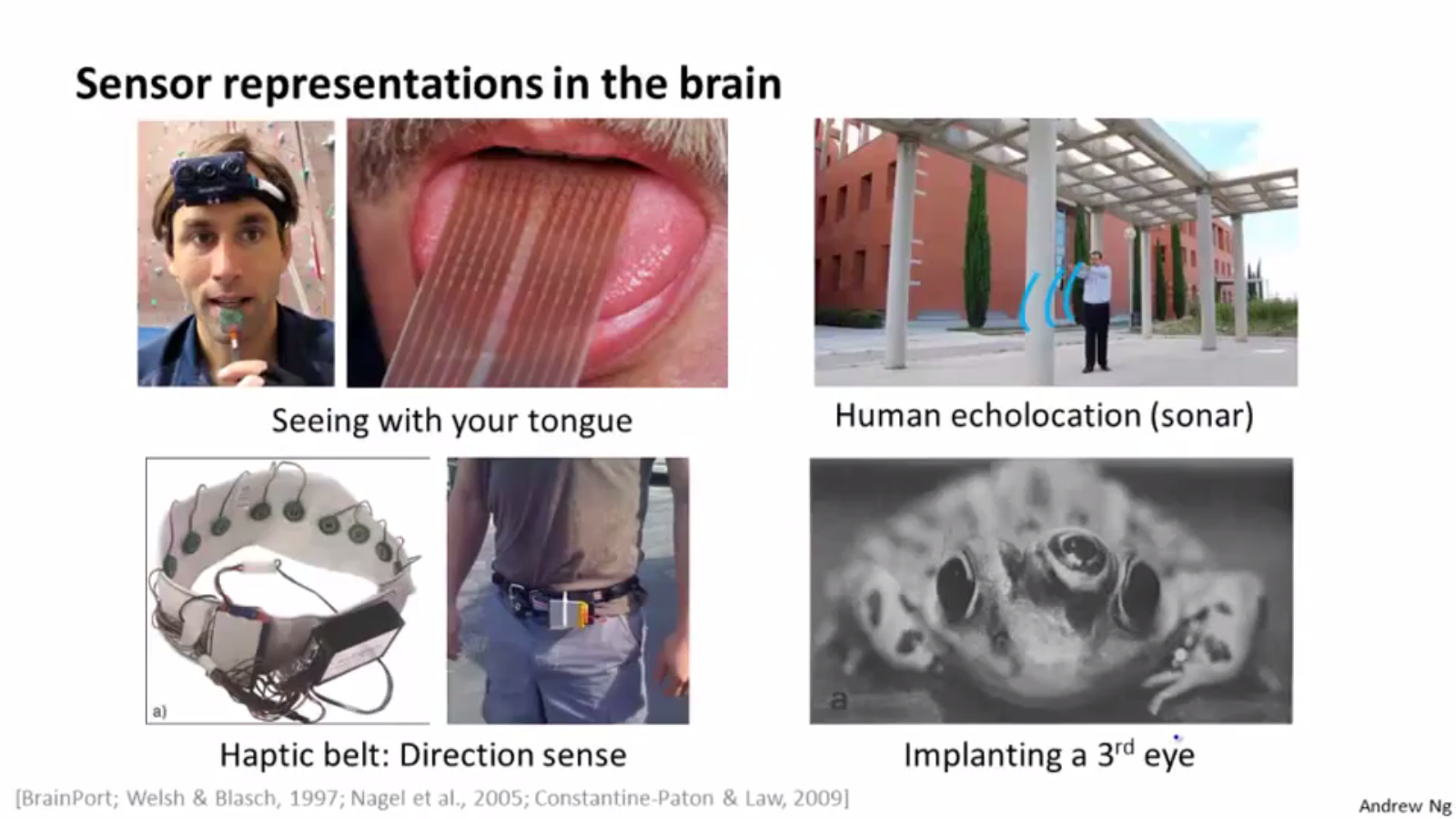

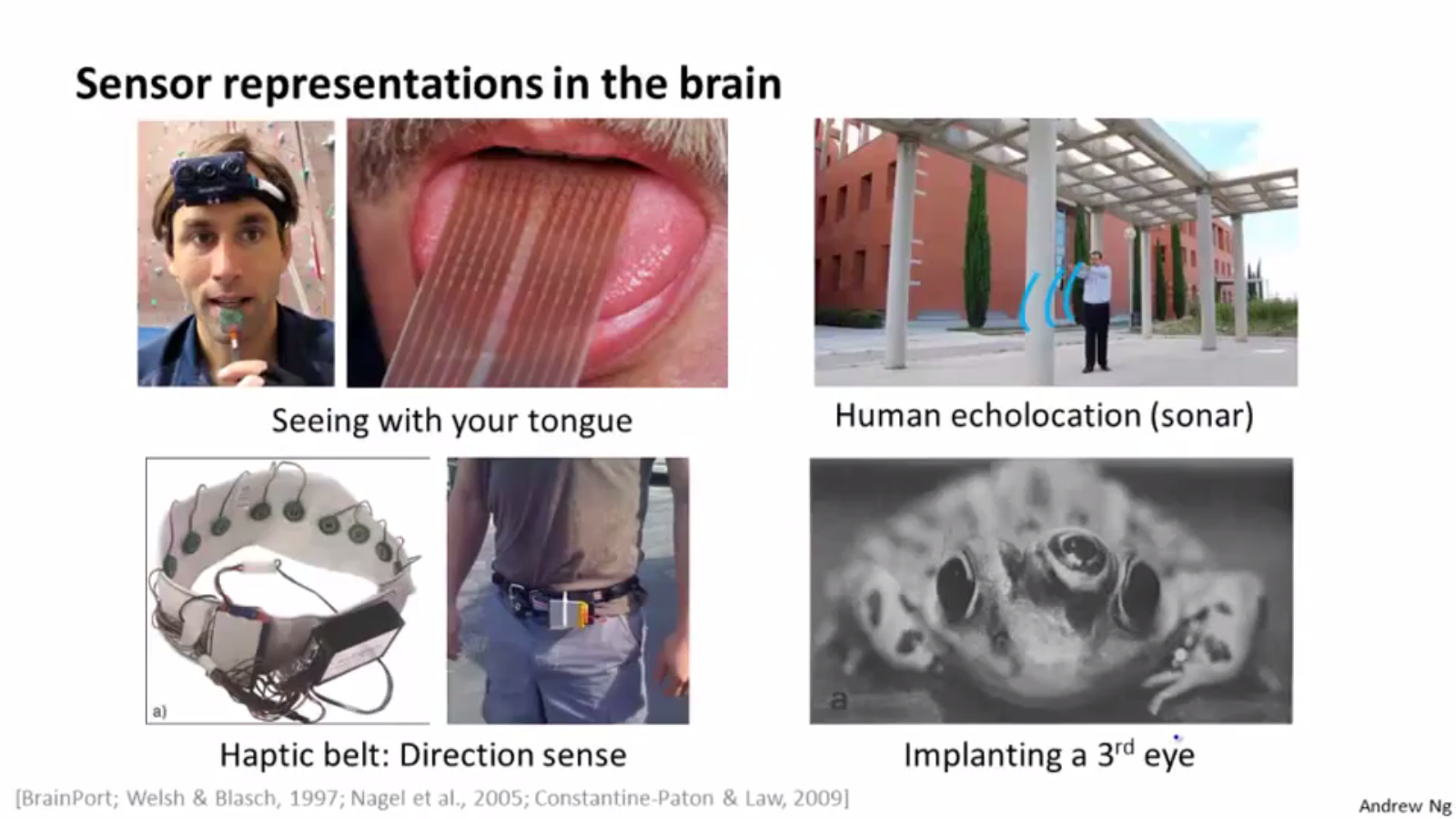

2) Speaking of learning from experience, I recall one of Andrew Ng’s favorite slides:

On it, Andrew stresses that the brain can train another region (one that has no model for the task) to take over lost functions, for example, to see with the tongue.

3) Brain researchers know that the foundations of the mind are laid when a person is very young. Fantastic changes occur: from mere sounds a child begins to understand speech and to tell parents from strangers. Little children still beat computers, but this only shows that our mind seems to consist of very good models, trained on a huge amount of information (for example, 3 years of continuous images) on giant biological neural networks (86 billion neurons).
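To get a feel for “3 years of continuous images”, here is a back-of-envelope count. The 12 waking hours per day and 10 frames per second are purely illustrative assumptions, not figures from the text or from vision research.

```python
# Back-of-envelope: how many "frames" a child sees in 3 years.
# ASSUMPTIONS (illustrative only): 12 waking hours/day, 10 frames/second.
YEARS = 3
DAYS_PER_YEAR = 365
WAKING_HOURS_PER_DAY = 12   # assumption
FRAMES_PER_SECOND = 10      # assumption

seconds_awake = YEARS * DAYS_PER_YEAR * WAKING_HOURS_PER_DAY * 3600
frames = seconds_awake * FRAMES_PER_SECOND
print(f"{frames:,} frames")  # prints 473,040,000 frames
```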

4) It seems that some models can, to some extent, be explained and changed: there is a kind of “sandbox” area (“let's think about why this is so”, corrective methods such as psychoanalysis, etc.), while others go into unconscious “production” (habits and automatic actions: driving a car after years of practice, breathing, etc.). A classic example of how “production” works is the case of Clive Wearing. The man completely lost the ability to form new memories. "If you’re watching for what you’ve ever seen..." The model, trained in bygone days, is still in memory and makes predictions each time he sees something anew. The model remained in memory; the data about the past did not.
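The “model stays, data is gone” point can be illustrated with any fitted model: once the parameters are estimated, the training data can be discarded and predictions still work. A minimal sketch with an ordinary least-squares line; the data points and the `fit_line` helper are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    b = cov / var
    a = mean_y - b * mean_x
    return a, b

# "Past experience" (illustrative data, exactly y = 1 + 2x).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

a, b = fit_line(xs, ys)
del xs, ys                  # the data about the past is gone...

prediction = a + b * 10.0   # ...but the model still predicts: 21.0
print(prediction)
```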

5) Probably, many people are too lazy to build or update their models and live in “production”, on ready-made models built on historical data that are no longer relevant. That is why it is so hard to change a person: naturally, he will (unconsciously) trust a model trained on a gigantic amount of past information more than new, reliable data of smaller volume.
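One way to formalize why a model built on a huge history resists a small amount of new evidence is Bayesian updating with a Beta prior. This is a sketch under assumed numbers: the prior is treated as equivalent to 10,000 past observations with a 90% success rate, and the new, reliable data are 20 observations with only 2 successes.

```python
def beta_posterior_mean(prior_successes, prior_failures, new_successes, new_trials):
    """Posterior mean of a Bernoulli rate under a Beta(prior_successes, prior_failures) prior."""
    alpha = prior_successes + new_successes
    beta = prior_failures + (new_trials - new_successes)
    return alpha / (alpha + beta)

# ASSUMED numbers: 10,000 "past" observations at 90%, 20 new ones at 10%.
old_view = beta_posterior_mean(9000, 1000, 0, 0)
updated = beta_posterior_mean(9000, 1000, 2, 20)
new_data_only = 2 / 20

print(old_view, round(updated, 3), new_data_only)  # 0.9 0.898 0.1
```

The new data say 10%, yet the updated belief barely moves from 90%. Discounting old pseudo-counts (for example, multiplying them by a forgetting factor before each update) is one common way to let newer data count for more.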

6) Animals live only on models in “production”: they cannot analyze a model and change it (ceteris paribus) without retraining. They will salivate at a light bulb switching on, even when there is no food.
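Classical conditioning of the kind Pavlov studied is often modelled with the Rescorla-Wagner rule, in which the associative strength V of a cue moves toward the outcome λ by ΔV = αβ(λ - V). A minimal single-cue sketch; the learning rate of 0.3 and the trial counts are arbitrary assumptions. It mirrors the point above: the light keeps triggering the response after the food stops, and only repeated extinction trials (retraining) weaken it.

```python
def rescorla_wagner(v, outcomes, rate=0.3):
    """Update associative strength v over trials: v += rate * (lam - v).

    `rate` stands in for the product alpha*beta; 0.3 is an arbitrary choice.
    """
    history = []
    for lam in outcomes:          # lam = 1.0 if food follows the light, else 0.0
        v += rate * (lam - v)
        history.append(v)
    return v, history

# Acquisition: light paired with food on 20 trials.
v, _ = rescorla_wagner(0.0, [1.0] * 20)
print(round(v, 3))               # strong association: the light alone "predicts" food

# Extinction: light without food; the association decays only trial by trial.
v_ext, hist = rescorla_wagner(v, [0.0] * 5)
print([round(x, 2) for x in hist])
```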

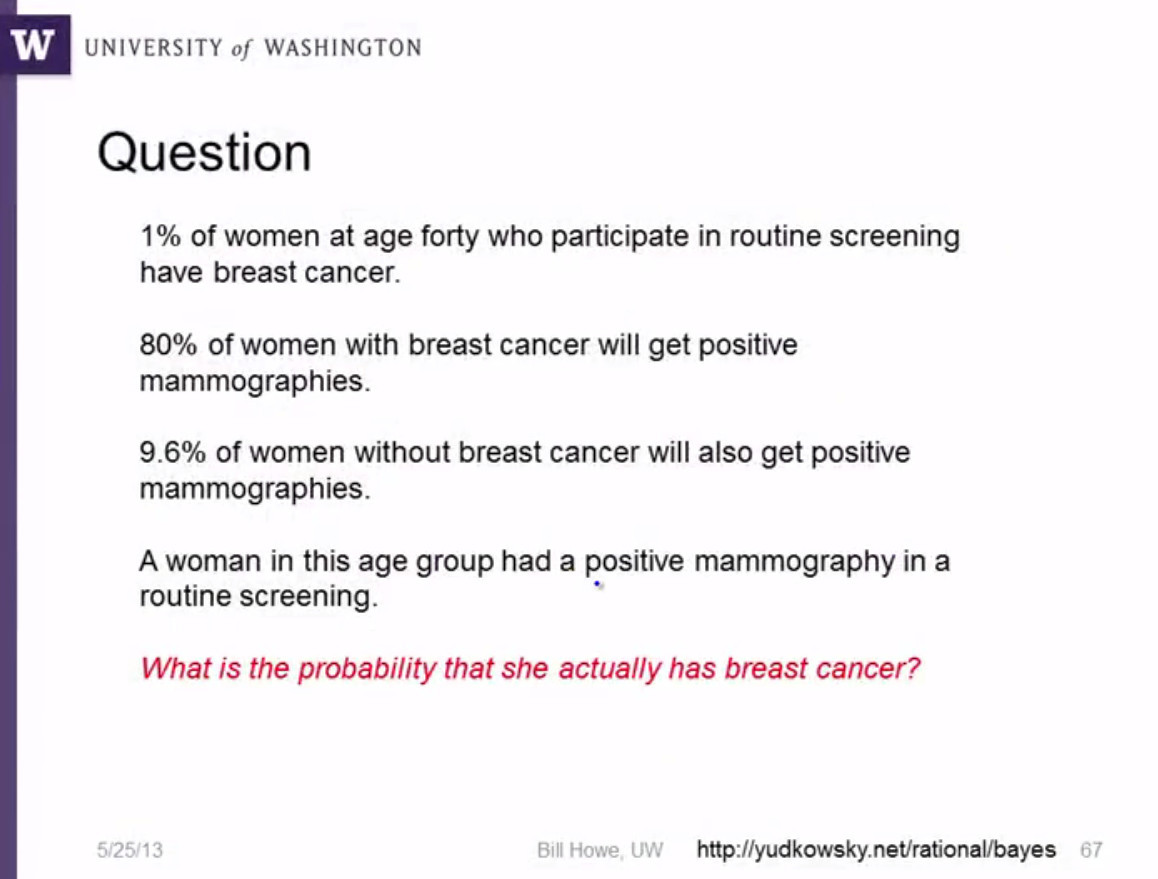

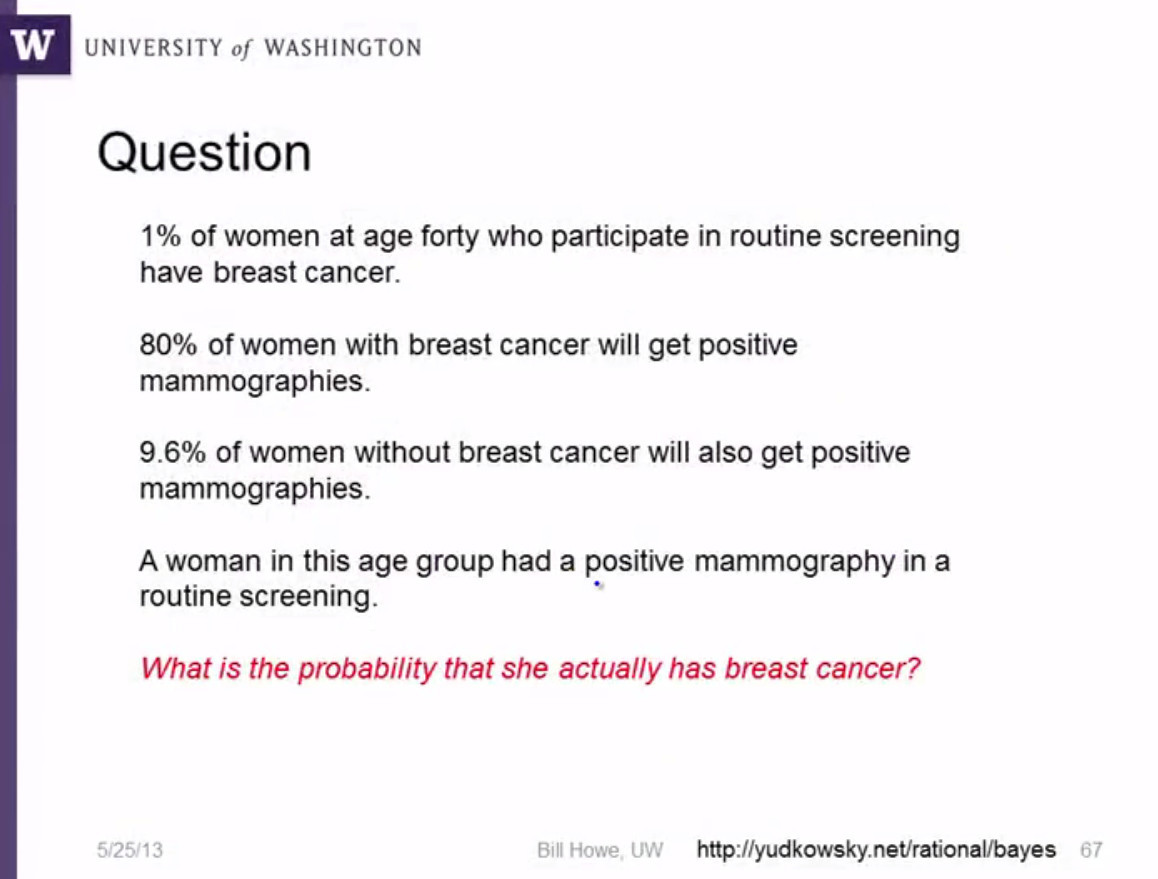

7) The brain often loses to the machine (analytics), especially if you do not think carefully. For example, a classic of the genre from Bill Howe's course Practical Predictive Analytics: Models and Methods:

Only 15% (!) of doctors answer correctly (the answer is 7.8%).
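The 7.8% answer is what Bayes' rule gives for the common textbook version of the mammography problem. The inputs below (prevalence 1%, sensitivity 80%, false-positive rate 9.6%) are an assumption about the problem shown in the course; they are the standard figures that yield exactly this answer.

```python
def posterior_positive(prevalence, sensitivity, false_positive_rate):
    """P(disease | positive test) by Bayes' rule."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_positive_rate
    return true_pos / (true_pos + false_pos)

# ASSUMED inputs: the common textbook version of the mammography problem.
p = posterior_positive(prevalence=0.01, sensitivity=0.80, false_positive_rate=0.096)
print(f"{p:.1%}")  # 7.8%
```

The counter-intuitive part is the base rate: with 1% prevalence, false positives from the 99% who are healthy outnumber the true positives roughly 12 to 1.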

8) Intuition is complex models that cannot be explained. Intuition is always based on data and models. The best example comes from Malcolm Gladwell’s book “Blink”: a tennis coach knows the outcome of a serve “before the racket even makes contact with the ball”. That is, his model, trained on years of observation, gives a very accurate prediction of whether the ball will land in the court, using only the data available before the hit.

9) In general, one can make the following generalization: “A person is smart in a given area to the extent that he has built an algorithm trained in that area.” As a rule, this takes a lot of observation. The same Gladwell says that about 10,000 hours of practice are needed.

10) Why can't some people get smarter even with a lot of experience? Note that this is often how a fool is defined: “someone who makes the same mistake (on the same data) over and over.” Probably, models differ in complexity, and people differ in their ability to train them.
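The idea that models differ in complexity can be made concrete with a classic example: a single-layer perceptron (a linear model) learns the AND function but can never fit XOR, however many passes over the data it gets, because XOR is not linearly separable. A minimal sketch; the epoch count and learning rate are arbitrary choices.

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Classic perceptron on 2-D inputs; returns (w1, w2, bias)."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            predicted = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            error = target - predicted           # -1, 0 or +1
            w1 += lr * error * x1
            w2 += lr * error * x2
            b += lr * error
    return w1, w2, b

def count_errors(model, samples):
    """Number of training samples the linear model still gets wrong."""
    w1, w2, b = model
    return sum((1 if w1 * x1 + w2 * x2 + b > 0 else 0) != t for (x1, x2), t in samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(count_errors(train_perceptron(AND), AND))  # 0: simple enough for a linear model
print(count_errors(train_perceptron(XOR), XOR))  # > 0: more experience does not help
```

Adding a hidden layer (a more complex model) is what lets a network fit XOR; more epochs alone do not.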

11) Training models requires both positive and negative examples. You cannot teach an algorithm or a person with examples of only one type. A person will not learn if he is always scolded, or always told that everything is OK.

12) Neither machine nor human learning predicts with 100% certainty. A 25% error rate means a miss in one case out of four. So there is no need to be surprised that even an ideal algorithm with such an error rate is often wrong (examples: exchange rate forecasts, this year's GDP, Dota 2 matches, etc.). Nor is it surprising that very smart people are often wrong. By the way, averaging over a large number of predictions helps: it is better to judge a forecaster by many predictions than by a single observation.
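The point about judging by many predictions rather than one can be quantified with the binomial distribution. Assuming, for illustration, a forecaster who is right 75% of the time, independently from one prediction to the next, this sketch computes how likely they are to look worse than a coin flip, that is, to be right on fewer than half of n predictions.

```python
from math import comb

def prob_looks_worse_than_chance(n_predictions, p_correct=0.75):
    """P(fewer than half of n independent predictions are correct)."""
    return sum(
        comb(n_predictions, k) * p_correct**k * (1 - p_correct) ** (n_predictions - k)
        for k in range(n_predictions + 1)
        if 2 * k < n_predictions
    )

for n in (1, 10, 100):
    print(n, prob_looks_worse_than_chance(n))
```

With a single prediction, a genuinely good forecaster looks bad one time in four; over 100 predictions this almost never happens.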

13) Love, music, art, etc. are clearly associated with a trained model (please understand this in the right sense) that converts the data coming from the object of admiration into a probability of "success", the activation of connections that we "like", etc.

14) Machine learning is built on minimizing prediction error, which matches the very definition of an intelligent person (mind): the one who gives the correct answer, that is, has the minimum error.
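Item 14 in code: “learning” as minimizing prediction error. A minimal sketch, assuming a one-parameter model y = w·x, mean squared error as the loss, and plain gradient descent; the data, step size, and helper names are illustrative.

```python
def fit_by_gradient_descent(xs, ys, lr=0.01, steps=1000):
    """Minimize mean squared error of y = w * x with gradient descent."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * x * (w*x - y))
        grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w

def mse(w, xs, ys):
    """Mean squared prediction error of y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # generated by y = 2x
w = fit_by_gradient_descent(xs, ys)
print(round(w, 6), mse(w, xs, ys) < 1e-12)
```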

15) The ability to explain why an algorithm works is secondary, both for a person and for a machine. In this regard, the Buddha's parable of the poisoned arrow comes to mind:

Once Māluṅkyaputta got up from his afternoon meditation, went to the Buddha and said:

“Sir, when I was all alone meditating, this thought occurred to me: there are problems the Blessed One has left unexplained. Namely, (1) is the universe eternal ...”

An excerpt from the Buddha’s answer:

“... suppose a man is wounded by a poisoned arrow, and he should say: ‘I will not let this arrow be taken out until I know who shot me; whether he is a Kṣatriya (of the warrior caste) or a Brāhmaṇa (of the priestly caste) or a Vaiśya (of the trading caste) or a Śūdra (of the low caste); what his name and family may be; whether he is tall, short, or of medium stature; whether his complexion is black, brown, or golden; from which village, town or city he comes. I will not let this arrow be taken out until I know the kind of bow with which I was shot; the kind of bowstring used; the type of arrow; ... what material the point of the arrow was made of.’

... Therefore, Māluṅkyaputta, bear in mind what I have not explained as unexplained. What are the things that I have not explained? Whether the universe is eternal or not, and so on, I have not explained. Why, Māluṅkyaputta, have I not explained them? Because it is not useful; it is not conducive to aversion, detachment, cessation, tranquillity, deep penetration, full realization. That is why I have not told you about them.”

Source: https://habr.com/ru/post/279095/
