Vision-based SLAM: stereo and depth-SLAM

After a short break, we continue the series of lesson articles on SLAM. In previous releases, we prepared the software environment and also worked with monocular SLAM . Under the cut - a lesson on the use of SLAM based on stereo camera and camera depth. We will talk about setting up packages and equipment and give tips on using two ROS packages: the now traditional RTAB-Map and the fresh, delicious ElasticFusion.

RTAB-Map (Real-Time Appearance-Based Mapping) is a visual graph-based SLAM algorithm based on a closure detector. The detector uses the bag-of-words algorithm to determine the degree of similarity of new camera images to images of locations already visited. Each such closure adds a new edge to the camera positions, after which the graph is optimized. To achieve real-time, the algorithm uses a clever way to manage memory, limiting the number of locations used. The article developers described in detail how it all works.

We covered RTAB-Map setup using the Kinect sensor in the first lesson , so here we’ll work on setting up this package for use with a stereo camera. As such, we will use two ordinary USB webcams.

')

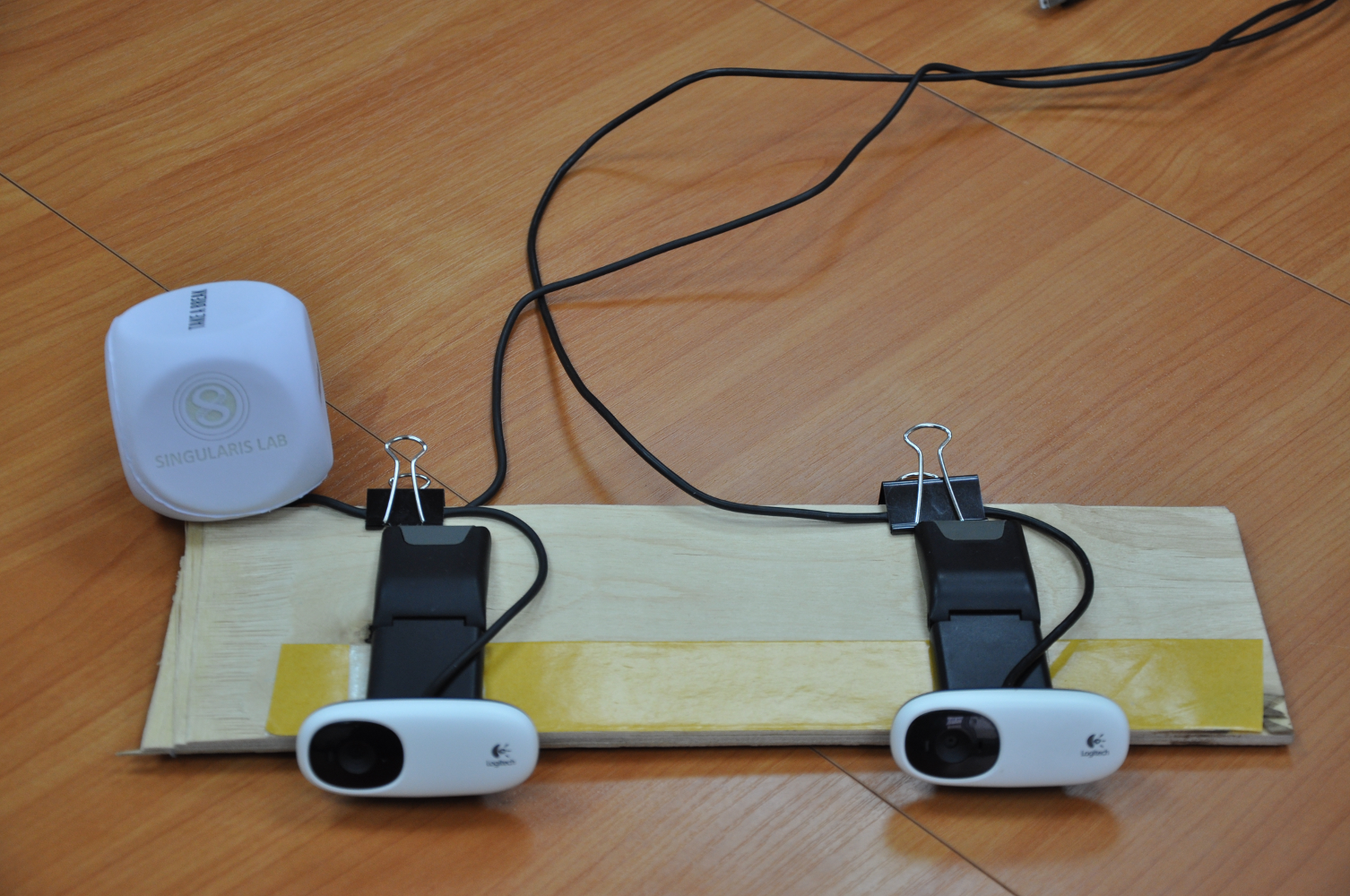

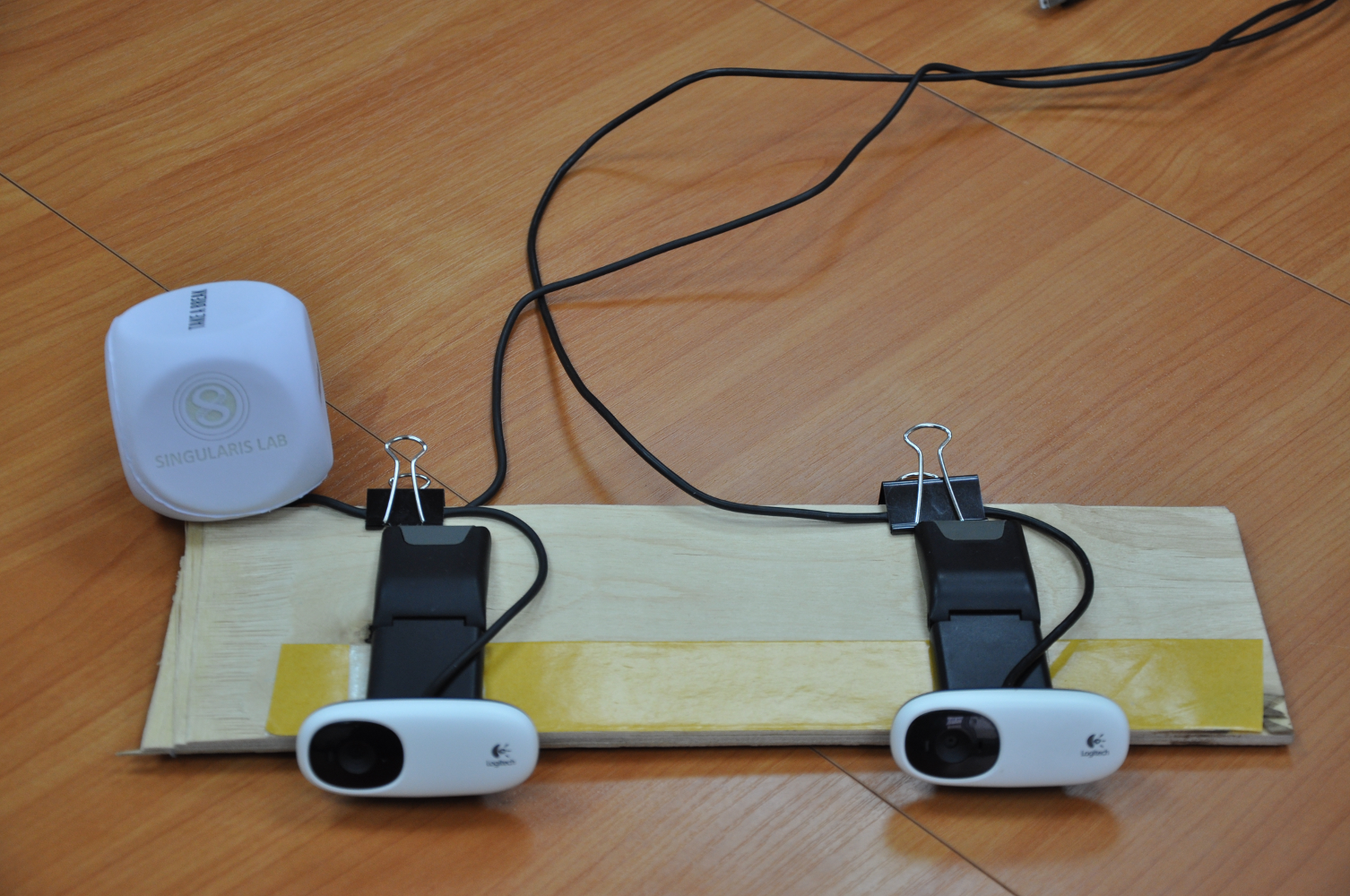

For correct operation, the two cameras must be firmly fixed relative to each other (for this you can use webcams on a clothespin :). It is desirable that the cameras were the same model and did not have the ability to manually adjust the focus. We will use this horizontal “stereo camera”:

We will use the usb_cam package to get the video stream from the cameras. Create a stereo_camera.launch file with the following content:

where / dev / video0 should be replaced with the identifier corresponding to the left / right camera.

If the following error appears at startup:

then this means that USB bandwidth is not enough for some reason. You need to try to connect the cameras to different USB hubs, this often solves the problem.

You can make sure that both cameras are working using the ROS utility rqt_image_view . The utility should show videos for the / stereo / left / image_raw and / stereo / right / image_raw topics.

The ROS camera_calibration utility supports horizontal stereo camera calibration. Before starting, you need to prepare a calibration sample - print a chess marker. Run the utility as follows:

Where

--size 8x6 indicates the number of inner corners of the chess pattern (8x6 corresponds to the pattern of 9x7 black squares);

- square 0.054 - size (sides) of a square chess pattern in meters;

--approximate = 0.01 - in our case, non-specialized cameras are used, so it is necessary to specify a parameter that sets the allowable time for desynchronization between cameras in seconds.

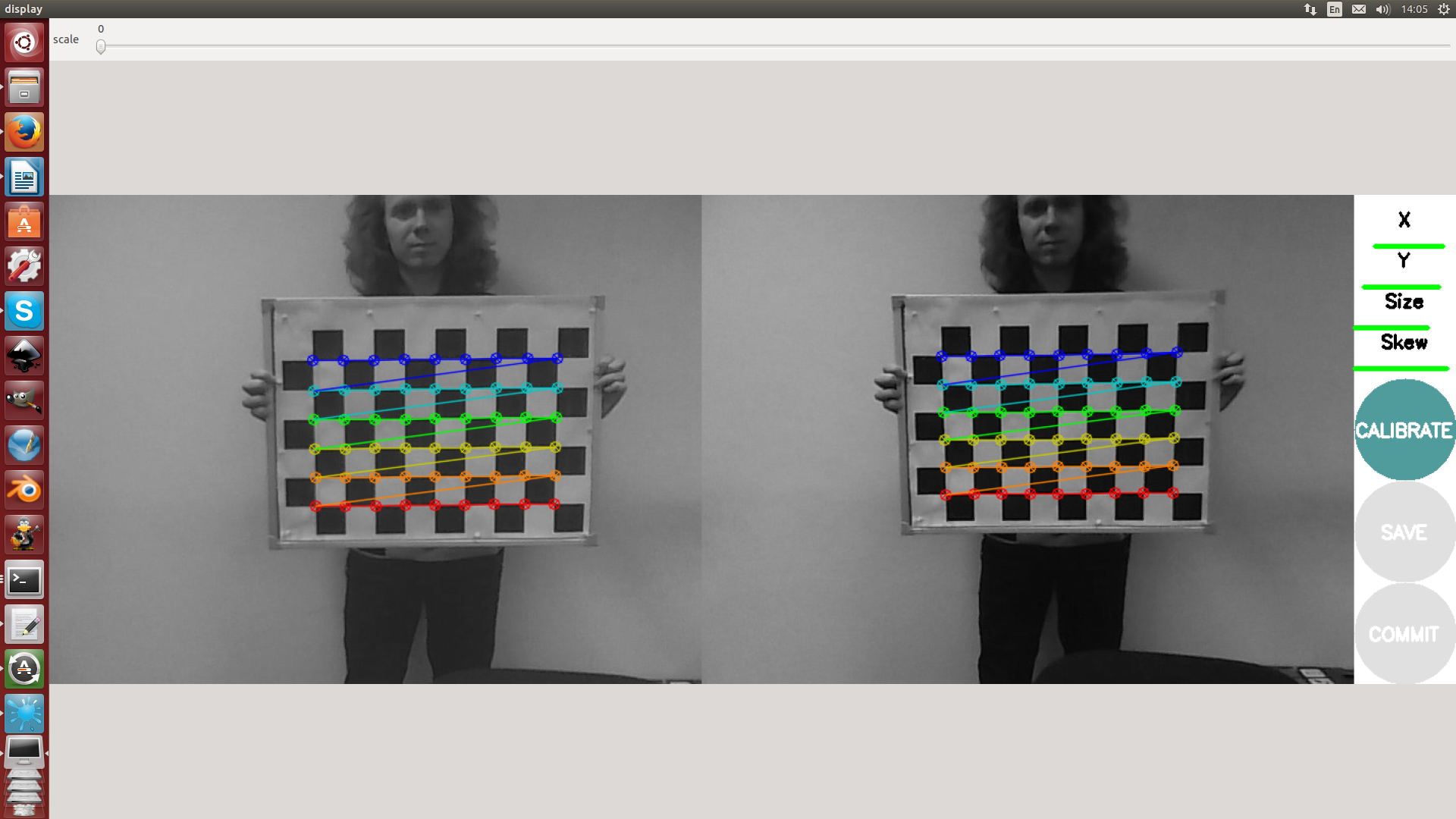

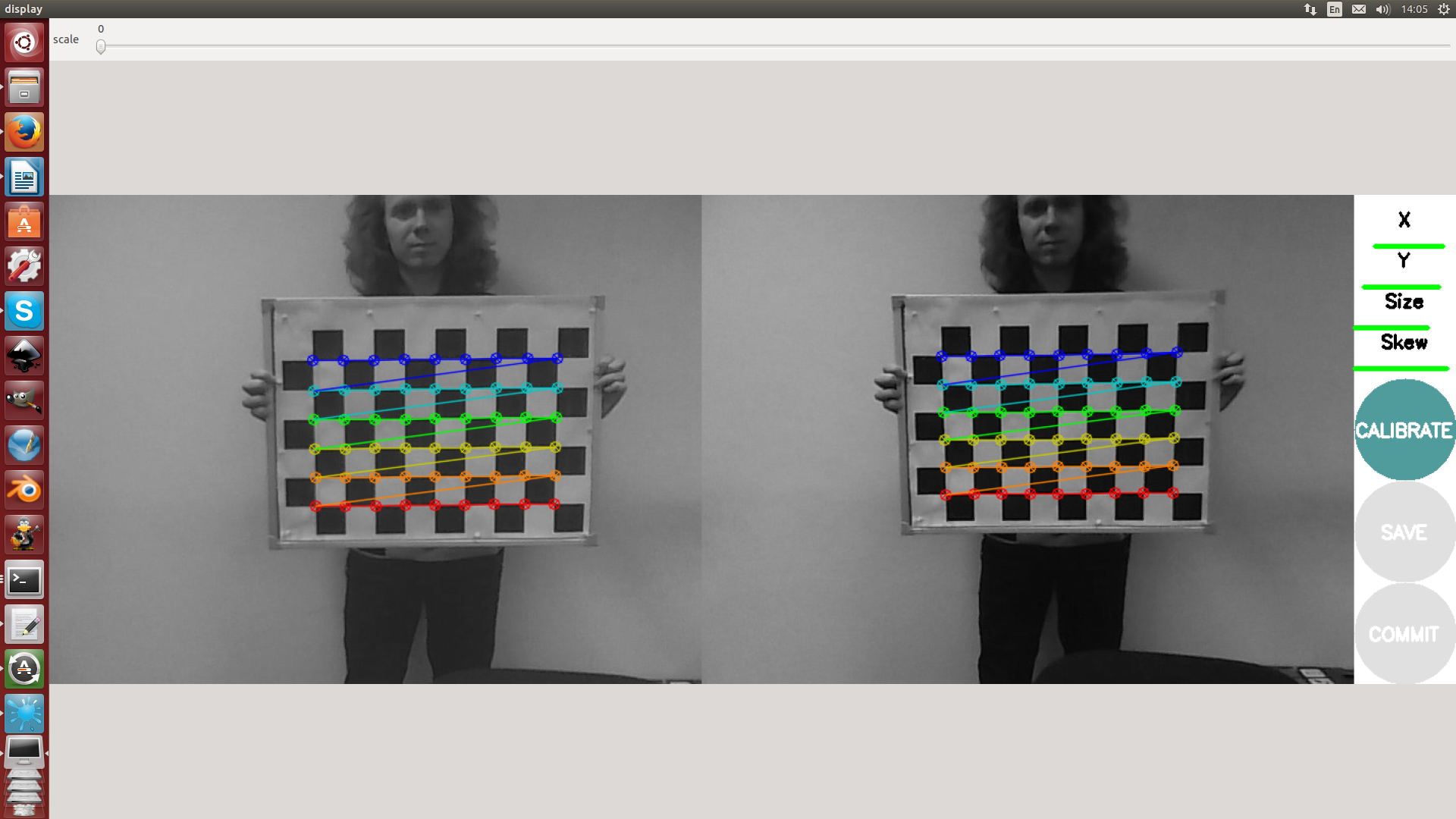

The process of calibrating a stereo pair using this utility does not differ much from the calibration of a single camera, given in the first article . Upon completion of the calibration, you must click the “commit” button to save the calibration data.

After calibrating the cameras, you can proceed to processing the stereo image. The stereo_image_proc package rectifies camera images and builds a disparity map. The launch is as follows:

for testing, you can run the image_view utility with the following parameters:

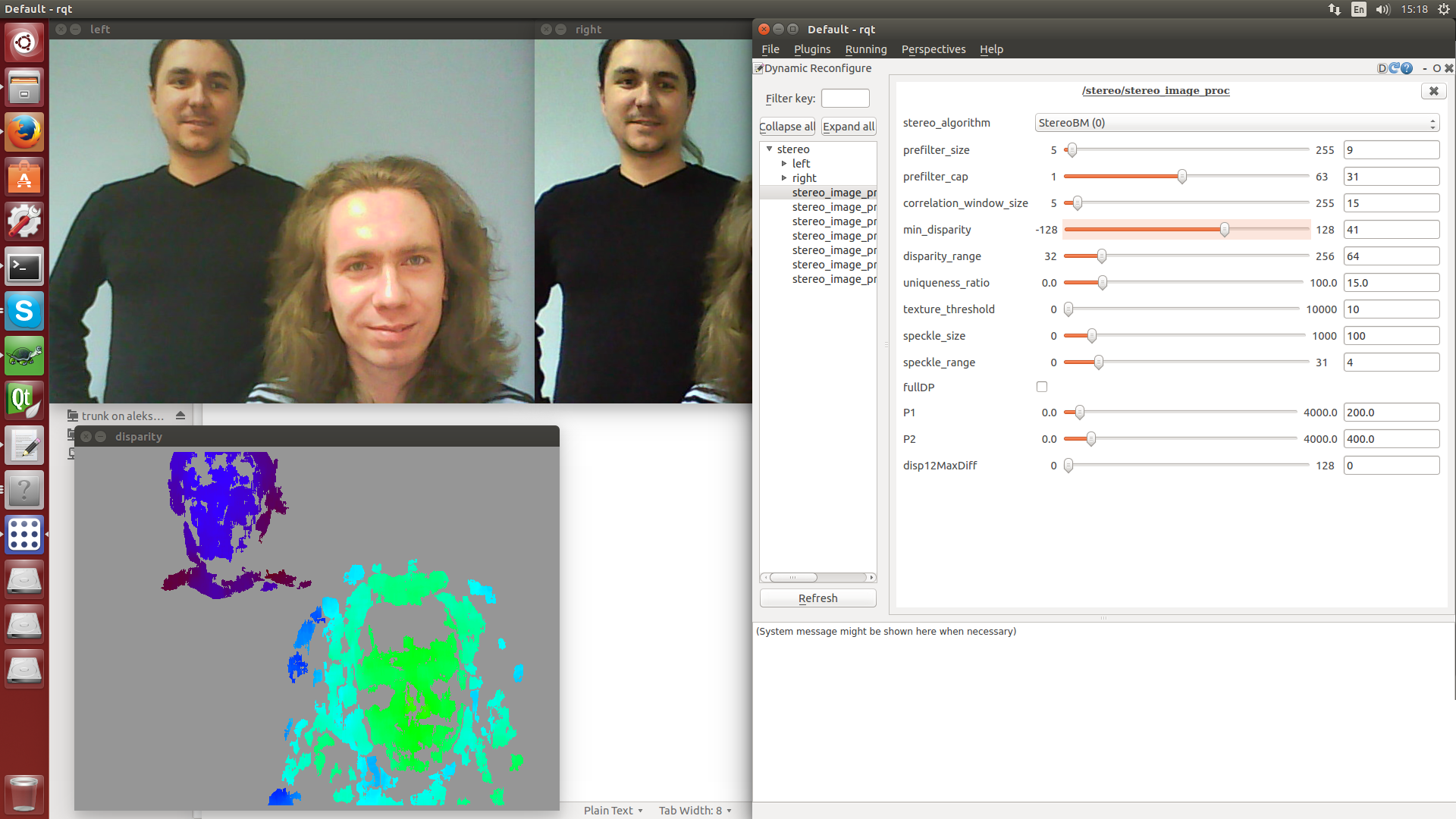

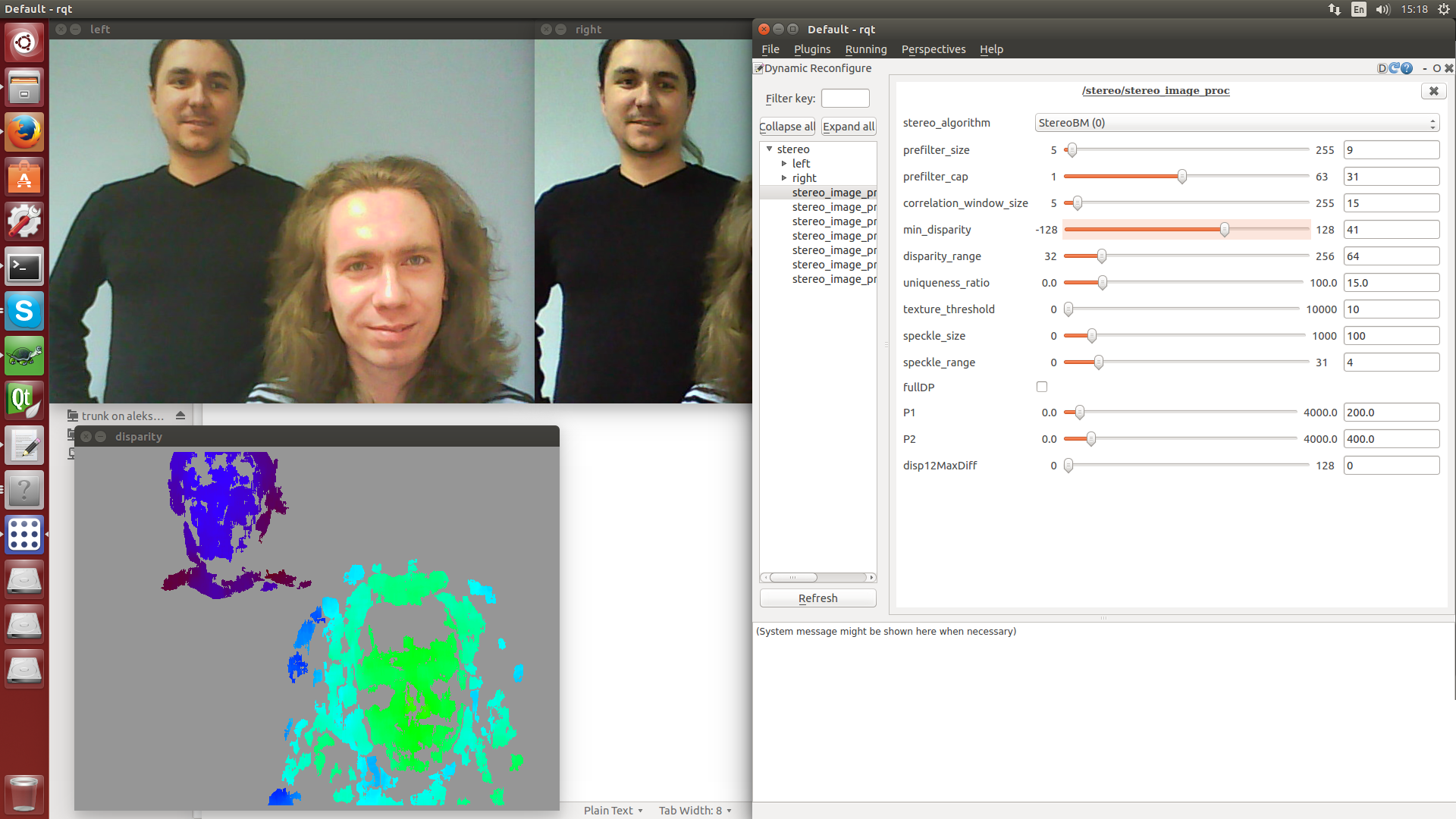

The parameters of the stereo image processing algorithm can be configured using the dynamic_reconfigure utility. To run, you need to run the command:

and select Dynamic reconfigure in the Plugins → Configuration menu.

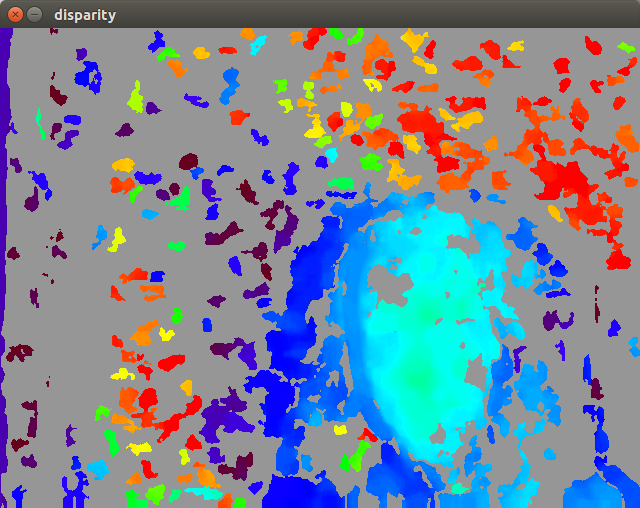

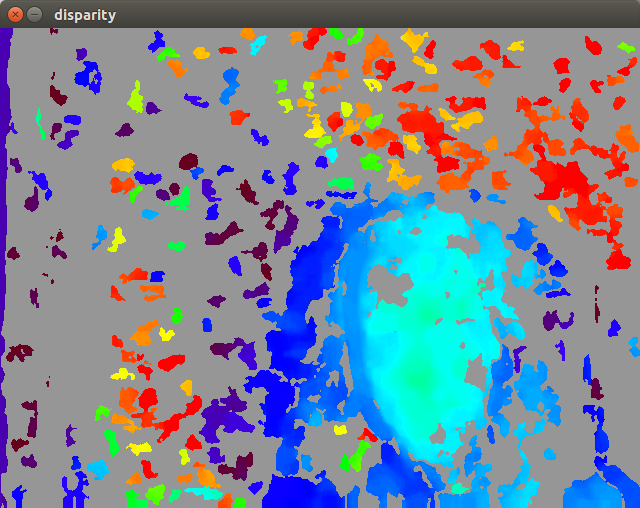

stereo_image_proc supports two processing algorithms: StereoBM (faster, first picture) and StereoSGBM (semi-global block matching, better, slower, second picture).

After the camera has been successfully calibrated and we have an adequate disparity map, we can launch the RTAB-Map. Prepare the launch file rtabmap.launch :

This file was created based on the standard configuration file RTAB-Map for the Bumblebee camera. It sets the orientation of the camera coordinate system relative to the global coordinate system, runs the odometry modules ( stereo_odometry in our case), SLAM ( rtabmap ) and visualizations ( rtabmapviz or rviz ).

If an error is displayed at startup:

you need to swap the left and right cameras in the file stereo_camera.launch and calibrate them again.

Run the RTAB-Map (this command should be run from the folder containing rtabmap.launch ):

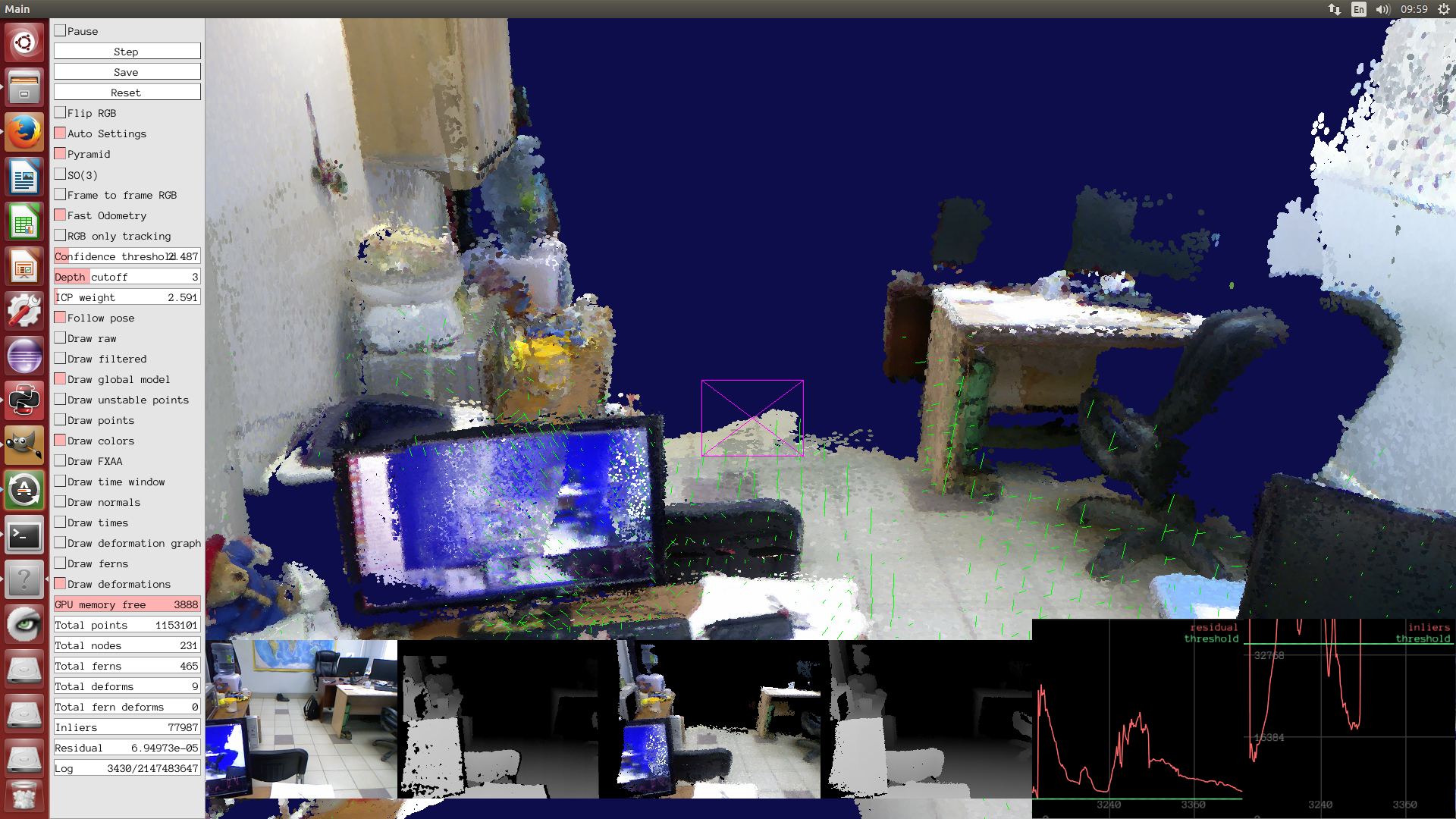

If everything went well, we will see the RTAB-Map window:

RTAB-Map provides the following parameters to configure the operation of the algorithm (they can be changed in the launch file or sent via the command line):

After working with monocular SLAM, being able to use two cameras is just a blessing, since there are no problems with determining the map scale and localization. If you have a stereo camera or at least two identical mono cameras, use them and stereo SLAM. However, if you want to achieve a good quality card, then you must get a real stereo camera mounted in one package. Two scotch webcams are a fun solution from the category “I am an engineer to my mother” to write a lesson on SLAM, but for real tasks you need real equipment.

ElasticFusion allows you to build dense 3D-models of the environment based on the surface (from the surface element). The algorithm does not use the graph of visited locations and fully relies only on the constructed map when localizing and searching for closures. To search for closures, the algorithm randomly selects small parts of the map with which new frames are subsequently compared. After finding the closure, the map section is deformed in accordance with the error. All other interesting things can be seen in the article developers . Immediately, we note that the algorithm is very picky about hardware: for normal operation, an nVidia video card with a performance of over 3.5 TFlOPS is required, as well as a CPU like an Intel Core i5 - i7.

Clone the ElasticFusion repository to a convenient folder:

The project has a lot of dependencies, and among them is CUDA. If you have already installed a video card driver, and this is not CUDA, then, unfortunately, you will have to dance a little with a tambourine. Dancing includes blacklisting of the video card drivers, installation with lightdm stopped, and all sorts of other nasty things that can be read in detail, for example, here . Perform all these procedures very carefully and with the understanding of what you are doing, otherwise you can easily say goodbye to the system.

The easiest way is to assemble ElasticFusion with all dependencies at once with the help of a script kindly prepared by the developers. Go to the repository and run the script:

The magic script will do almost everything for us. “Almost” - because out of the box, ElasticFusion only works with OpenNI2 drivers. We use the first version of Kinect, and, fortunately, there is an easy way to add its support to OpenNI2. To do this, first collect libfreenect (clone it into the same folder as ElasticFusion, side by side):

Then add a link to the freenect driver in OpenNI2:

Hooray, now we can run this miracle of technology, if we have Kinect connected:

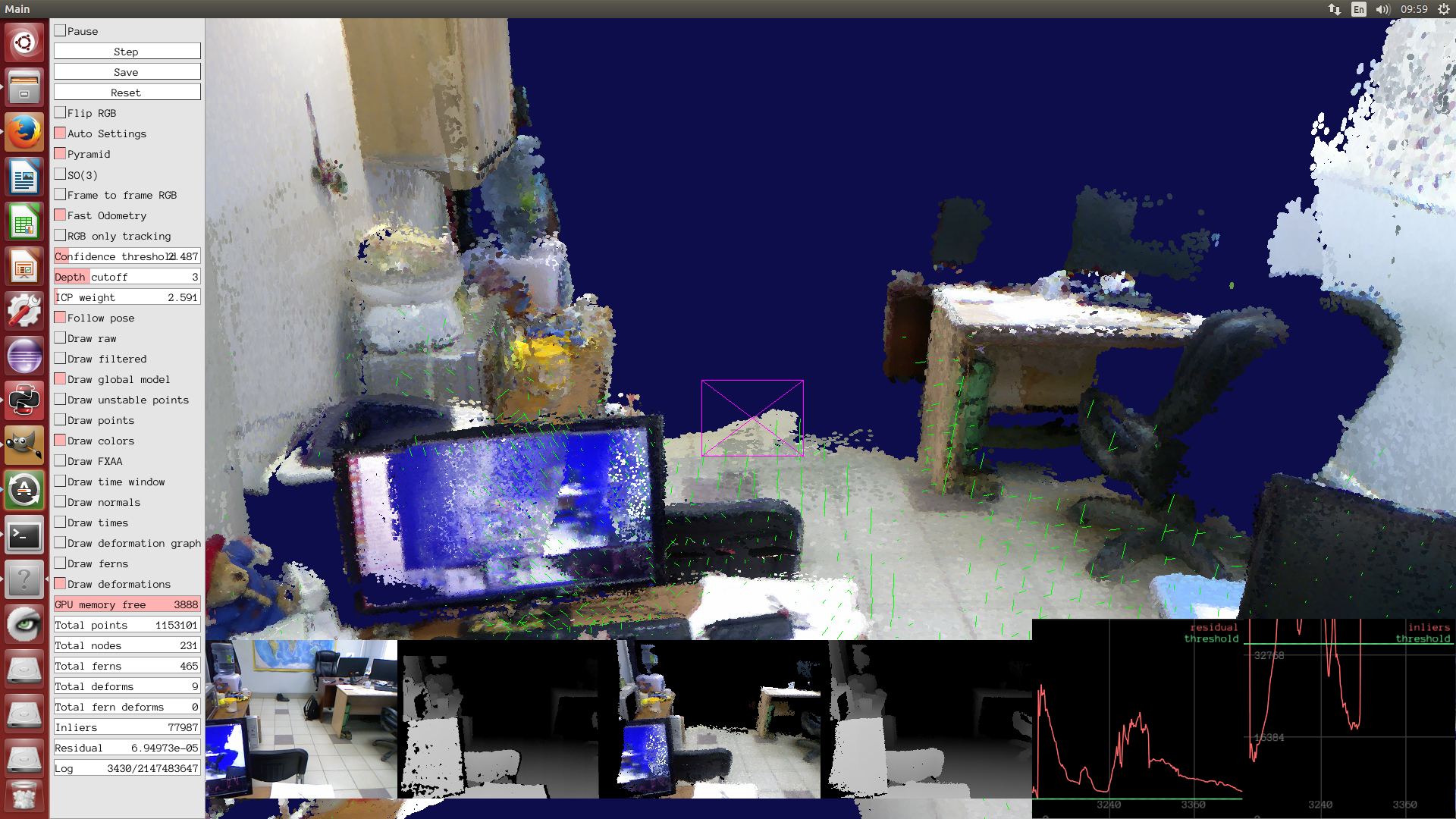

If everything is good, then this window will appear:

The algorithm provides a packet of parameters for tweaking that can be passed on the command line (and some can be rotated directly in the GUI). So far we have launched ElasticFusion with default parameters. How well it will work depends on the configuration of your computing hardware, as well as on the depth camera used. The algorithm can work only in real-time; in general, you may face such problems:

We also recommend that you enable relocation ( -rl ) so that if you lose tracking, the algorithm can recover itself, and also include frame-to-frame RGB tracking ( -ftf ), which makes the camera move more smoothly. In addition, you need to configure the percentage of using two trackers used by the algorithm, which is done by setting the -i parameter.

For our hardware (Core i5 + GeForce GTX Titan) and Kinect of the first version, we selected the following parameters, which allow us to achieve a very good operation of the algorithm:

The -d parameter here sets the limit in meters beyond which the depth values obtained from the sensor will not be used. As a result, we received such a reconstruction of one of the rooms of our office:

Very often, SLAM algorithms that use depth maps work with dense (dense) maps. This inevitably entails significant requirements for computing resources, so the use of this approach, for example, on small-sized robots is difficult. In addition, the scope of such sensors is not too large (of course, if you do not use the expensive 3D LiDAR), so the scope is still a little narrowed. If you are not very limited in computing resources, and you need to solve the problem of navigation in the room - then projects like ElasticFusion will suit you perfectly.

There are also less resource-intensive algorithms, for example, the same RTAB-Map can work with depth cameras. This solution is a compromise and will fit perfectly into the navigation system of a less intelligent robot.

This concludes the series of lessons on visual SLAM. Feel free to clarify / ask / add / disprove / dispute, it is always nice for us :)

Article 1: Setting up the environment for working with SLAM

Article 2: Monocular SLAM

ElasticFusion page on the developer site

RTAB-Map site

RTAB-Map + stereo camera

Principle of operation

RTAB-Map (Real-Time Appearance-Based Mapping) is a visual graph-based SLAM algorithm based on a closure detector. The detector uses the bag-of-words algorithm to determine the degree of similarity of new camera images to images of locations already visited. Each such closure adds a new edge to the camera positions, after which the graph is optimized. To achieve real-time, the algorithm uses a clever way to manage memory, limiting the number of locations used. The article developers described in detail how it all works.

Launch

We covered RTAB-Map setup using the Kinect sensor in the first lesson , so here we’ll work on setting up this package for use with a stereo camera. As such, we will use two ordinary USB webcams.

')

Adjustment and calibration of the stereo pair in ROS

For correct operation, the two cameras must be firmly fixed relative to each other (for this you can use webcams on a clothespin :). It is desirable that the cameras were the same model and did not have the ability to manually adjust the focus. We will use this horizontal “stereo camera”:

Setting up cameras in ROS

We will use the usb_cam package to get the video stream from the cameras. Create a stereo_camera.launch file with the following content:

<launch> <node name="left" ns="stereo" pkg="usb_cam" type="usb_cam_node" output="screen" > <param name="video_device" value="/dev/video0"/> <param name="image_width" value="640"/> <param name="image_height" value="480"/> <param name="camera_frame_id" value="camera_link"/> </node> <node name="right" ns="stereo" pkg="usb_cam" type="usb_cam_node" output="screen" > <param name="video_device" value="/dev/video1"/> <param name="image_width" value="640"/> <param name="image_height" value="480"/> <param name="camera_frame_id" value="camera_link"/> </node> </launch> where / dev / video0 should be replaced with the identifier corresponding to the left / right camera.

If the following error appears at startup:

[ERROR] [1455879780.737902972]: VIDIOC_STREAMON error 28, No space left on device

then this means that USB bandwidth is not enough for some reason. You need to try to connect the cameras to different USB hubs, this often solves the problem.

You can make sure that both cameras are working using the ROS utility rqt_image_view . The utility should show videos for the / stereo / left / image_raw and / stereo / right / image_raw topics.

Calibration

The ROS camera_calibration utility supports horizontal stereo camera calibration. Before starting, you need to prepare a calibration sample - print a chess marker. Run the utility as follows:

rosrun camera_calibration cameracalibrator.py --size 8x6 --square 0.054 right:=/stereo/right/image_raw left:=/stereo/left/image_raw left_camera:=/stereo/left right_camera:=/stereo/right --approximate=0.01 Where

--size 8x6 indicates the number of inner corners of the chess pattern (8x6 corresponds to the pattern of 9x7 black squares);

- square 0.054 - size (sides) of a square chess pattern in meters;

--approximate = 0.01 - in our case, non-specialized cameras are used, so it is necessary to specify a parameter that sets the allowable time for desynchronization between cameras in seconds.

The process of calibrating a stereo pair using this utility does not differ much from the calibration of a single camera, given in the first article . Upon completion of the calibration, you must click the “commit” button to save the calibration data.

Stereo image processing

After calibrating the cameras, you can proceed to processing the stereo image. The stereo_image_proc package rectifies camera images and builds a disparity map. The launch is as follows:

rosrun stereo_image_proc stereo_image_proc __ns:=stereo _approximate_sync:=true for testing, you can run the image_view utility with the following parameters:

rosrun image_view stereo_view stereo:=/stereo image:=image_rect_color _approximate_sync:=true The parameters of the stereo image processing algorithm can be configured using the dynamic_reconfigure utility. To run, you need to run the command:

rqt and select Dynamic reconfigure in the Plugins → Configuration menu.

stereo_image_proc supports two processing algorithms: StereoBM (faster, first picture) and StereoSGBM (semi-global block matching, better, slower, second picture).

RTAB-Map

After the camera has been successfully calibrated and we have an adequate disparity map, we can launch the RTAB-Map. Prepare the launch file rtabmap.launch :

rtabmap.launch

<?xml version="1.0"?> <launch> <arg name="pi/2" value="1.5707963267948966" /> <arg name="optical_rotate" value="0 0 0 -$(arg pi/2) 0 -$(arg pi/2)" /> <node pkg="tf" type="static_transform_publisher" name="camera_base_link" args="$(arg optical_rotate) base_link camera_link 100" /> <!-- Choose visualization --> <arg name="rtabmapviz" default="true" /> <arg name="rviz" default="false" /> <!-- Corresponding config files --> <arg name="rtabmapviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd_gui.ini" /> <arg name="rviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd.rviz" /> <arg name="frame_id" default="base_link"/> <!-- Fixed frame id, you may set "base_link" or "base_footprint" if they are published --> <arg name="time_threshold" default="0"/> <!-- (ms) If not 0 ms, memory management is used to keep processing time on this fixed limit. --> <arg name="optimize_from_last_node" default="false"/> <!-- Optimize the map from the last node. Should be true on multi-session mapping and when time threshold is set --> <arg name="database_path" default="~/.ros/rtabmap.db"/> <arg name="rtabmap_args" default="--delete_db_on_start"/> <arg name="stereo_namespace" default="/stereo"/> <arg name="left_image_topic" default="$(arg stereo_namespace)/left/image_rect_color" /> <arg name="right_image_topic" default="$(arg stereo_namespace)/right/image_rect" /> <!-- using grayscale image for efficiency --> <arg name="left_camera_info_topic" default="$(arg stereo_namespace)/left/camera_info" /> <arg name="right_camera_info_topic" default="$(arg stereo_namespace)/right/camera_info" /> <arg name="approximate_sync" default="true"/> <!-- if timestamps of the stereo images are not synchronized --> <arg name="compressed" default="false"/> <arg name="subscribe_scan" default="false"/> <!-- Assuming 2D scan if set, rtabmap will do 3DoF mapping instead of 6DoF --> <arg name="scan_topic" default="/scan"/> <arg name="visual_odometry" default="true"/> <!-- Generate visual odometry --> <arg name="odom_topic" default="/odom"/> <!-- Odometry topic used if visual_odometry is false --> <arg name="namespace" default="rtabmap"/> <arg name="wait_for_transform" default="0.1"/> <!-- Odometry parameters: --> <arg name="strategy" default="0" /> <!-- Strategy: 0=BOW (bag-of-words) 1=Optical Flow --> <arg name="feature" default="2" /> <!-- Feature type: 0=SURF 1=SIFT 2=ORB 3=FAST/FREAK 4=FAST/BRIEF 5=GFTT/FREAK 6=GFTT/BRIEF 7=BRISK --> <arg name="estimation" default="0" /> <!-- Motion estimation approach: 0:3D->3D, 1:3D->2D (PnP) --> <arg name="nn" default="3" /> <!-- Nearest neighbor strategy : 0=Linear, 1=FLANN_KDTREE (SIFT, SURF), 2=FLANN_LSH, 3=BRUTEFORCE (ORB/FREAK/BRIEF/BRISK) --> <arg name="max_depth" default="10" /> <!-- Maximum features depth (m) --> <arg name="min_inliers" default="20" /> <!-- Minimum visual correspondences to accept a transformation (m) --> <arg name="inlier_distance" default="0.1" /> <!-- RANSAC maximum inliers distance (m) --> <arg name="local_map" default="1000" /> <!-- Local map size: number of unique features to keep track --> <arg name="odom_info_data" default="true" /> <!-- Fill odometry info messages with inliers/outliers data. --> <arg name="variance_inliers" default="true"/> <!-- Variance from inverse of inliers count --> <!-- Nodes --> <group ns="$(arg namespace)"> <!-- Odometry --> <node if="$(arg visual_odometry)" pkg="rtabmap_ros" type="stereo_odometry" name="stereo_odometry" output="screen" args="--udebug"> <remap from="left/image_rect" to="$(arg left_image_topic)"/> <remap from="right/image_rect" to="$(arg right_image_topic)"/> <remap from="left/camera_info" to="$(arg left_camera_info_topic)"/> <remap from="right/camera_info" to="$(arg right_camera_info_topic)"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <param name="wait_for_transform_duration" type="double" value="$(arg wait_for_transform)"/> <param name="approx_sync" type="bool" value="$(arg approximate_sync)"/> <param name="Odom/Strategy" type="string" value="$(arg strategy)"/> <param name="Odom/FeatureType" type="string" value="$(arg feature)"/> <param name="OdomBow/NNType" type="string" value="$(arg nn)"/> <param name="Odom/EstimationType" type="string" value="$(arg estimation)"/> <param name="Odom/MaxDepth" type="string" value="$(arg max_depth)"/> <param name="Odom/MinInliers" type="string" value="$(arg min_inliers)"/> <param name="Odom/InlierDistance" type="string" value="$(arg inlier_distance)"/> <param name="OdomBow/LocalHistorySize" type="string" value="$(arg local_map)"/> <param name="Odom/FillInfoData" type="string" value="true"/> <param name="Odom/VarianceFromInliersCount" type="string" value="$(arg variance_inliers)"/> </node> <!-- Visual SLAM (robot side) --> <!-- args: "delete_db_on_start" and "udebug" --> <node name="rtabmap" pkg="rtabmap_ros" type="rtabmap" output="screen" args="$(arg rtabmap_args)"> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_stereo" type="bool" value="true"/> <param name="subscribe_laserScan" type="bool" value="$(arg subscribe_scan)"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <param name="wait_for_transform_duration" type="double" value="$(arg wait_for_transform)"/> <param name="database_path" type="string" value="$(arg database_path)"/> <param name="stereo_approx_sync" type="bool" value="$(arg approximate_sync)"/> <remap from="left/image_rect" to="$(arg left_image_topic)"/> <remap from="right/image_rect" to="$(arg right_image_topic)"/> <remap from="left/camera_info" to="$(arg left_camera_info_topic)"/> <remap from="right/camera_info" to="$(arg right_camera_info_topic)"/> <remap from="scan" to="$(arg scan_topic)"/> <remap unless="$(arg visual_odometry)" from="odom" to="$(arg odom_topic)"/> <param name="Rtabmap/TimeThr" type="string" value="$(arg time_threshold)"/> <param name="RGBD/OptimizeFromGraphEnd" type="string" value="$(arg optimize_from_last_node)"/> <param name="LccBow/MinInliers" type="string" value="10"/> <param name="LccBow/InlierDistance" type="string" value="$(arg inlier_distance)"/> <param name="LccBow/EstimationType" type="string" value="$(arg estimation)"/> <param name="LccBow/VarianceFromInliersCount" type="string" value="$(arg variance_inliers)"/> <!-- when 2D scan is set --> <param if="$(arg subscribe_scan)" name="RGBD/OptimizeSlam2D" type="string" value="true"/> <param if="$(arg subscribe_scan)" name="RGBD/LocalLoopDetectionSpace" type="string" value="true"/> <param if="$(arg subscribe_scan)" name="LccIcp/Type" type="string" value="2"/> <param if="$(arg subscribe_scan)" name="LccIcp2/CorrespondenceRatio" type="string" value="0.25"/> </node> <!-- Visualisation RTAB-Map --> <node if="$(arg rtabmapviz)" pkg="rtabmap_ros" type="rtabmapviz" name="rtabmapviz" args="$(arg rtabmapviz_cfg)" output="screen"> <param name="subscribe_depth" type="bool" value="false"/> <param name="subscribe_stereo" type="bool" value="true"/> <param name="subscribe_laserScan" type="bool" value="$(arg subscribe_scan)"/> <param name="subscribe_odom_info" type="bool" value="$(arg visual_odometry)"/> <param name="frame_id" type="string" value="$(arg frame_id)"/> <param name="wait_for_transform_duration" type="double" value="$(arg wait_for_transform)"/> <remap from="left/image_rect" to="$(arg left_image_topic)"/> <remap from="right/image_rect" to="$(arg right_image_topic)"/> <remap from="left/camera_info" to="$(arg left_camera_info_topic)"/> <remap from="right/camera_info" to="$(arg right_camera_info_topic)"/> <remap from="scan" to="$(arg scan_topic)"/> <remap unless="$(arg visual_odometry)" from="odom" to="$(arg odom_topic)"/> </node> </group> <!-- Visualization RVIZ --> <node if="$(arg rviz)" pkg="rviz" type="rviz" name="rviz" args="$(arg rviz_cfg)"/> <node if="$(arg rviz)" pkg="nodelet" type="nodelet" name="points_xyzrgb" args="standalone rtabmap_ros/point_cloud_xyzrgb"> <remap from="left/image" to="$(arg left_image_topic)"/> <remap from="right/image" to="$(arg right_image_topic)"/> <remap from="left/camera_info" to="$(arg left_camera_info_topic)"/> <remap from="right/camera_info" to="$(arg right_camera_info_topic)"/> <remap from="cloud" to="voxel_cloud" /> <param name="decimation" type="double" value="2"/> <param name="voxel_size" type="double" value="0.02"/> <param name="approx_sync" type="bool" value="$(arg approximate_sync)"/> </node> </launch> This file was created based on the standard configuration file RTAB-Map for the Bumblebee camera. It sets the orientation of the camera coordinate system relative to the global coordinate system, runs the odometry modules ( stereo_odometry in our case), SLAM ( rtabmap ) and visualizations ( rtabmapviz or rviz ).

If an error is displayed at startup:

[FATAL] The stereo baseline (-0.177000) should be positive

you need to swap the left and right cameras in the file stereo_camera.launch and calibrate them again.

Run the RTAB-Map (this command should be run from the folder containing rtabmap.launch ):

roslaunch rtabmap.launch If everything went well, we will see the RTAB-Map window:

Setting parameters and possible problems

RTAB-Map provides the following parameters to configure the operation of the algorithm (they can be changed in the launch file or sent via the command line):

- strategy - odometry algorithm: 0 = bag-of-words 1 = optical flow

- feature - feature detector type: 0 = SURF 1 = SIFT 2 = ORB 3 = FAST / FREAK 4 = FAST / BRIEF 5 = GFTT / FREAK 6 = GFTT / BRIEF 7 = BRISK. Best of all, in our opinion, the ORB shows itself, it is used by default.

- estimation - operation of odometry: 0: 3D, 1: 2D.

- max_depth - maximum feature depth used from disparity map in meters.

- inlier_distance - maximum distance between features for RANSAC in meters.

- local_map - the maximum number of features stored in the map.

When to use SLAM with a stereo camera?

After working with monocular SLAM, being able to use two cameras is just a blessing, since there are no problems with determining the map scale and localization. If you have a stereo camera or at least two identical mono cameras, use them and stereo SLAM. However, if you want to achieve a good quality card, then you must get a real stereo camera mounted in one package. Two scotch webcams are a fun solution from the category “I am an engineer to my mother” to write a lesson on SLAM, but for real tasks you need real equipment.

ElasticFusion + RGB-D camera

Principle of operation

ElasticFusion allows you to build dense 3D-models of the environment based on the surface (from the surface element). The algorithm does not use the graph of visited locations and fully relies only on the constructed map when localizing and searching for closures. To search for closures, the algorithm randomly selects small parts of the map with which new frames are subsequently compared. After finding the closure, the map section is deformed in accordance with the error. All other interesting things can be seen in the article developers . Immediately, we note that the algorithm is very picky about hardware: for normal operation, an nVidia video card with a performance of over 3.5 TFlOPS is required, as well as a CPU like an Intel Core i5 - i7.

Install and Run

Clone the ElasticFusion repository to a convenient folder:

git clone https://github.com/mp3guy/ElasticFusion.git The project has a lot of dependencies, and among them is CUDA. If you have already installed a video card driver, and this is not CUDA, then, unfortunately, you will have to dance a little with a tambourine. Dancing includes blacklisting of the video card drivers, installation with lightdm stopped, and all sorts of other nasty things that can be read in detail, for example, here . Perform all these procedures very carefully and with the understanding of what you are doing, otherwise you can easily say goodbye to the system.

The easiest way is to assemble ElasticFusion with all dependencies at once with the help of a script kindly prepared by the developers. Go to the repository and run the script:

cd ElasticFusion ./build.sh The magic script will do almost everything for us. “Almost” - because out of the box, ElasticFusion only works with OpenNI2 drivers. We use the first version of Kinect, and, fortunately, there is an easy way to add its support to OpenNI2. To do this, first collect libfreenect (clone it into the same folder as ElasticFusion, side by side):

git clone https://github.com/OpenKinect/libfreenect.git cd libfreenect mkdir build cd build cmake .. -DBUILD_OPENNI2_DRIVER=ON make Then add a link to the freenect driver in OpenNI2:

ln -s lib/OpenNI2-FreenectDriver/libFreenectDriver.so ../../ElasticFusion/deps/OpenNI2/Bin/x64-Release/OpenNI2/Drivers/ Hooray, now we can run this miracle of technology, if we have Kinect connected:

cd ElasticFusion/GUI ./ElasticFusion If everything is good, then this window will appear:

Setting parameters and possible problems

The algorithm provides a packet of parameters for tweaking that can be passed on the command line (and some can be rotated directly in the GUI). So far we have launched ElasticFusion with default parameters. How well it will work depends on the configuration of your computing hardware, as well as on the depth camera used. The algorithm can work only in real-time; in general, you may face such problems:

- slow “filling” of the card with surfels. It is solved using a more powerful video adapter, as well as setting parameters:

- setting a smaller value Surfel confidence threshold (parameter -c ), for example, in 2 instead of default 10;

- enabling fast odometry ( -fo );

- There are a couple of options that can be viewed on the project page, but we do not recommend using them - the quality drops dramatically.

- unstable finding closures. It is solved by adjusting the parameters of the circuit for finding closures ( -ic, -cv, -pt ).

We also recommend that you enable relocation ( -rl ) so that if you lose tracking, the algorithm can recover itself, and also include frame-to-frame RGB tracking ( -ftf ), which makes the camera move more smoothly. In addition, you need to configure the percentage of using two trackers used by the algorithm, which is done by setting the -i parameter.

For our hardware (Core i5 + GeForce GTX Titan) and Kinect of the first version, we selected the following parameters, which allow us to achieve a very good operation of the algorithm:

./ElasticFusion -c 2 -d 4 -i 5 -fo -ftf -rl -ic 1000 -cv 1e-02 -pt 150 The -d parameter here sets the limit in meters beyond which the depth values obtained from the sensor will not be used. As a result, we received such a reconstruction of one of the rooms of our office:

When to use SLAM with depth camera?

Very often, SLAM algorithms that use depth maps work with dense (dense) maps. This inevitably entails significant requirements for computing resources, so the use of this approach, for example, on small-sized robots is difficult. In addition, the scope of such sensors is not too large (of course, if you do not use the expensive 3D LiDAR), so the scope is still a little narrowed. If you are not very limited in computing resources, and you need to solve the problem of navigation in the room - then projects like ElasticFusion will suit you perfectly.

There are also less resource-intensive algorithms, for example, the same RTAB-Map can work with depth cameras. This solution is a compromise and will fit perfectly into the navigation system of a less intelligent robot.

Conclusions and general recommendations for use

- Using a stereo camera or an RGB-D sensor eliminates the main problem of monocular SLAM algorithms - the fundamental impossibility of determining the scale of the map.

- In general, the requirements of algorithms for computing resources do not depend on what you use - stereo or camera depth.

- If you need to build a scene map with great depth (for example, outdoors), then you need a stereo camera (or LiDAR, which is significantly more expensive).

- If possible, use stereo cameras already produced in the same package. This is the only normal way to get a reliable disparity map.

This concludes the series of lessons on visual SLAM. Feel free to clarify / ask / add / disprove / dispute, it is always nice for us :)

Sources and references

Article 1: Setting up the environment for working with SLAM

Article 2: Monocular SLAM

ElasticFusion page on the developer site

RTAB-Map site

Source: https://habr.com/ru/post/279035/

All Articles