How Cloud@mail.ru saved all * my files and what came of it

One day I saw this banner and decided that a free terabyte wouldn't hurt, especially since my archive of photos and documents was sitting on a single one-terabyte hard drive. I admit I was very wary of installing a program with the mail.ru logo on my computer, but the lure of a freebie won. I signed up, got the space, installed the client, set it up and forgot about it.

And a few months ago the inevitable happened: the hard drive holding the archive died. Fortunately, by that time all the files had been copied to the cloud, and nothing was lost.

After buying a new disk, I reinstalled the cloud client and waited for my files to download. But after a couple of minutes I saw that nothing was appearing on the disk, while files were rapidly being deleted from the Cloud.


At the end of the article there are UPD, UPD2, UPD3 and UPD4, which describe the reasons for this behavior.

TL;DR: false alarm. The files and synchronization are fine, but the user interface and the work of tech support need improvement.

As it turned out after talking to tech support, this is the client's standard behavior: whatever folder you point it at, it starts synchronizing that folder to the cloud, removing from the cloud everything that is not in the folder.

Downloading files via WebDAV is not possible either.

All that remains is downloading files through the web interface. Files there can be downloaded one at a time, or you can select several files or folders and download them as one archive, which is quite convenient. The only restriction is that the archive cannot exceed 4 GB.

I tried to go this way, but quickly realized that this is a very inconvenient option:

- The 4 GB limit means that if you have a terabyte in the cloud, you will need to download at least 250 archives.

- Each archive must be created manually by selecting folders, considering their total size and marking those that have already been downloaded.

- Sometimes archives do not open for an unknown reason.

- The folder structure is lost.

I still needed the files, so I decided to write my own tool and learn something new along the way. And to enjoy solving the problem, of course.

First of all, I needed to understand how to get the list of folders and files. Initially I planned to simply parse the pages, pull the information about folders and files out of them and build a tree. But once I opened the page source, I immediately saw that the entire document interface is built with JavaScript, which, if you think about it, is quite logical.

That left me with two options: hook up Selenium and still build the tree from HTML, or work out the internal API that the script uses.

I chose the second way, as the most reasonable one - why should I parse something using third-party tools if there is already a ready-made API?

Fortunately, the script was not obfuscated and not even compressed - the original variable and function names and developer comments were available to me, which made the task much easier.

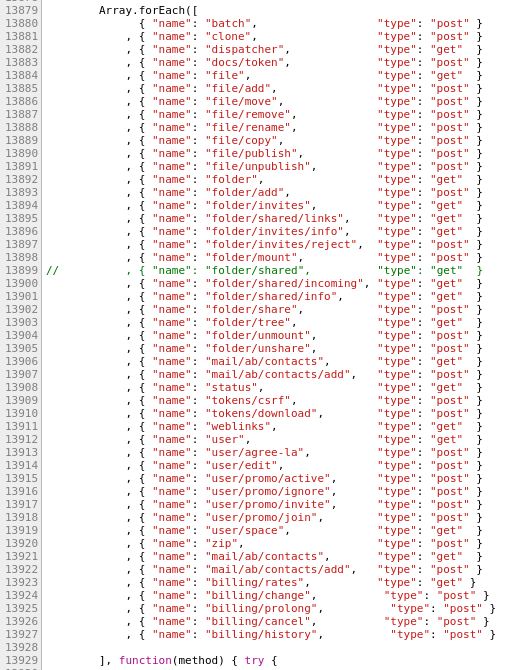

After a few minutes of studying, I saw that all available API methods are described in an array:

That's why I don’t spend time on beautiful formatting in my code - somebody will surely break it.

I reasoned that to get the list of folders and files in a directory, you need to call the folder method. To do this, send a GET request to https://cloud.mail.ru/api/v2/folder. Open the page in the browser and see the following answer:

    {"body":"user","time":1457097026874,"status":403}

Obviously, you need to log in to the portal. Log in, repeat the request and see another error:

    {"email":"me@mail.ru","body":"token","time":1457097187300,"status":403}

No wonder: a token is required to execute API requests. There are two suitable methods in the list: tokens/csrf and tokens/download. A request to https://cloud.mail.ru/api/v2/tokens/download returns exactly the same token error, which means we need a csrf token. We request it, add the parameter ?token=X9ccJNwYeowQTakZC1yGHsWzb7q6bTpP to the call to the folder method and get a new error:

    {"email":"me@mail.ru","body":{"error":"invalid args"},"time":1457097695182,"status":400}

Here I had to read the source again to find out what arguments this method takes. It turned out that the folder whose contents we want must be passed in the home parameter.
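Gluing the query string together by hand works for home=/, but real folder names may contain spaces and non-ASCII characters. Below is a minimal sketch of building the request URL. The helper name apiUrl is hypothetical and not part of the author's application, and the sketch assumes the server decodes percent-encoded parameters in the usual way:

```php
<?php
/**
 * Build the URL for an internal API method such as "folder" or "dispatcher".
 * $token is the csrf token; $params holds method-specific arguments
 * (for "folder" that is "home"). Hypothetical helper, for illustration only.
 */
function apiUrl(string $method, string $token, array $params = []): string
{
    // Base URL as it appears in the requests described in the article.
    $base = 'https://cloud.mail.ru/api/v2/';

    // http_build_query percent-encodes every value, so paths with spaces
    // or non-ASCII characters produce a valid query string.
    $query = http_build_query(['token' => $token] + $params, '', '&', PHP_QUERY_RFC3986);

    return $base . $method . '?' . $query;
}

echo apiUrl('folder', 'X9ccJNwYeowQTakZC1yGHsWzb7q6bTpP', ['home' => '/']), PHP_EOL;
```

The slash in home comes out as %2F, which is the percent-encoded form of the same value.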

So, in response to a request to the URL https://cloud.mail.ru/api/v2/folder?token=X9ccJNwYeowQTakZC1yGHsWzb7q6bTpP&home=/ the following object is returned:

    {
        "email": "me@mail.ru",
        "body": {
            "count": {"folders": 1, "files": 1},
            "tree": "363831373562653330303030",
            "name": "/",
            "grev": 17,
            "size": 978473730,
            "sort": {"order": "asc", "type": "name"},
            "kind": "folder",
            "rev": 9,
            "type": "folder",
            "home": "/",
            "list": [{
                "count": {"folders": 1, "files": 3},
                "tree": "363831373562653330303030",
                "name": "",
                "grev": 17,
                "size": 492119223,
                "kind": "folder",
                "rev": 16,
                "type": "folder",
                "home": "/"
            }, {
                "mtime": 1456774311,
                "virus_scan": "pass",
                "name": ".mp4",
                "size": 486354507,
                "hash": "C2AD142BDF1E4F9FD50E06026BCA578198BFC36E",
                "kind": "file",
                "type": "file",
                "home": "/.mp4"
            }]
        },
        "time": 1457097848869,
        "status": 200
    }

Information about files and directories: just what we need!
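Only a few fields of this response matter for downloading: type tells folders from files, and home holds the full path. As an illustration (this is not the author's code), a decoded response could be split as follows. The function name and the /photos folder are made up; the field names are taken from the response above:

```php
<?php
/**
 * Split a decoded "folder" response into sub-folder paths and file entries.
 * Hypothetical helper; field names follow the sample response.
 */
function splitListing(array $response): array
{
    $folders = [];
    $files = [];

    foreach ($response['body']['list'] ?? [] as $item) {
        $type = $item['type'] ?? '';
        if ($type === 'folder') {
            $folders[] = $item['home'];
        } elseif ($type === 'file') {
            $files[] = ['path' => $item['home'], 'size' => $item['size']];
        }
    }

    return ['folders' => $folders, 'files' => $files];
}

// A trimmed response with one folder and one file; "/photos" is an invented name.
$json = '{"body":{"list":['
    . '{"type":"folder","home":"/photos","size":492119223},'
    . '{"type":"file","home":"/.mp4","size":486354507}'
    . ']},"status":200}';

print_r(splitListing(json_decode($json, true)));
```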

The API has proven workable and the way it operates is clear, so it is time to write the program. I decided to write a console application in PHP, because I know this language well. The Console component from Symfony is ideal for this task. I had already written console commands for Laravel, which are built on this very component, but the level of abstraction there is quite high and I had never worked with the component directly, so I decided it was time to get to know it better.

I will not retell the documentation; it is quite detailed and very simple. Knowing nothing about the component, in a few hours I wrote these simple interfaces:

This is what the application looks like while downloading files.

And this is what it shows on completion: a small table (100 rows at most) with information about the downloaded files. It is of no practical use and was made solely for learning purposes.

A console application can contain several commands, which are called like this: php app.php command argument --option. But for my purposes only one command is needed, and I would like to start the download like this: php app.php argument --option. This is easily achieved by following the instructions in the component documentation.

So, the console application is ready; it displays information from pre-prepared fixtures and is even covered with tests. It's time to implement actually getting the information about files and folders from the cloud.

Here too I did not reinvent the wheel and used the excellent Guzzle library. It makes sending HTTP requests very convenient, and it uses the PSR-7 interfaces.

When you log in from the main mail.ru page, a POST request is sent to https://auth.mail.ru/cgi-bin/auth, containing the Login and Password fields. Here is the authorization method in my application.

    /**
     * @throws InvalidCredentials
     */
    private function auth()
    {
        $expectedTitle = sprintf(' - %s - Mail.Ru', $this->login);

        // Submit the login form; Guzzle follows the redirects to the mailbox.
        $authResponse = $this->http->post(
            static::AUTH_DOMAIN . '/cgi-bin/auth',
            [
                'form_params' => [
                    'Login' => $this->login,
                    'Password' => $this->password,
                ]
            ]
        );

        try {
            // Silence warnings about invalid HTML:
            // http://php.net/manual/en/domdocument.loadhtml.php#95463
            libxml_use_internal_errors(true);
            $this->dom->loadHTML((string) $authResponse->getBody());
            $actualTitle = $this->dom->getElementsByTagName('title')->item(0)->textContent;
        } catch (\Exception $e) {
            throw new InvalidCredentials;
        }

        // The mailbox page title contains the login; anything else means failure.
        if ($actualTitle !== $expectedTitle) {
            throw new InvalidCredentials;
        }
    }

Since the authorization request is answered with several redirects that eventually lead to the user's mailbox, I decided to simply check the page title to determine whether the authorization was successful.

The check is so simple that it currently fails if there are unread messages in the mailbox, because their number is displayed in the page title. But I do not use the mailbox, so for my purposes it is enough.
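One possible way to make the check survive an unread-message counter is to test whether the title contains the login instead of comparing the whole string. This is only a sketch, under the assumption that the title still mentions the login when a counter is present; the function name and the sample titles are invented for illustration:

```php
<?php
/**
 * Return true when the page <title> mentions the given login.
 * Unlike a strict comparison, this tolerates extra text in the title.
 * Hypothetical helper, not the author's implementation.
 */
function titleMentionsLogin(string $html, string $login): bool
{
    // Grab the contents of the first <title> element, if any.
    if (!preg_match('~<title[^>]*>(.*?)</title>~is', $html, $matches)) {
        return false; // no title at all: treat as a failed login
    }

    $title = html_entity_decode(trim($matches[1]), ENT_QUOTES | ENT_HTML5, 'UTF-8');

    // Case-insensitive substring search for the login.
    return stripos($title, $login) !== false;
}

// Both titles below are made-up examples of what the pages might contain.
var_dump(titleMentionsLogin('<title>(3) Inbox - me@mail.ru - Mail.Ru</title>', 'me@mail.ru'));
var_dump(titleMentionsLogin('<title>Sign in</title>', 'me@mail.ru'));
```

The trade-off is that a looser check could accept a page that merely echoes the login back, so it is worth verifying against the real login-failure page before relying on it.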

Then I tried to request a csrf token, but was surprised to get a familiar error:

    {"status":403,"body":"user"}

I debugged the requests and saw that the authorization was successful, yet the token was still not given to me. It looked very much like a problem with cookies, and indeed it turns out that they are turned off by default in Guzzle and need to be turned on by hand.

The easiest way to do this is when the client is initialized:

    $client = new \GuzzleHttp\Client(['cookies' => true]);

Another useful initialization parameter is 'debug' => true; with it, debugging requests is almost painless.

After setting up the cookies, I tried again to get the token and received in response an error that I had not encountered before:

    {"email":"me@mail.ru","body":"nosdc","time":1457097187300,"status":403}

After reading the source code and watching the authorization process, I saw that sdc is another cookie, obtained by a separate request when the application starts: https://auth.mail.ru/sdc?from=https://cloud.mail.ru/home. I added this request after the authorization request and was finally able to get the token.

From there it is a matter of technique: request the contents of the root folder, then recursively the contents of its subfolders, and the tree is ready.
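Looking back, each 403 response named in its body field the step that had been skipped. A small sketch that turns a raw response into a hint about what to do next; the function name and the hint texts are hypothetical, only the body markers and status codes come from the responses shown in this article:

```php
<?php
/**
 * Map a raw API response to the step that is probably missing.
 * Hypothetical helper; markers "user", "token" and "nosdc" are the ones
 * that appeared in the error responses quoted in the article.
 */
function missingStep(string $json): string
{
    $response = json_decode($json, true);
    if (!is_array($response)) {
        return 'unexpected response';
    }

    $status = $response['status'] ?? null;
    $body = $response['body'] ?? null;

    if ($status === 200) {
        return 'none';
    }

    if ($status === 403 && is_string($body)) {
        $steps = [
            'user'  => 'log in and keep cookies between requests',
            'nosdc' => 'request the sdc cookie',
            'token' => 'request a csrf token',
        ];

        return $steps[$body] ?? 'unknown 403: ' . $body;
    }

    if ($status === 400) {
        return 'check the method arguments';
    }

    return 'unexpected response';
}

echo missingStep('{"email":"me@mail.ru","body":"nosdc","time":1457097187300,"status":403}'), PHP_EOL;
```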

As it turned out, the tree was not even needed in the end: each file stores its full path from the root, so a flat list is enough for downloading.
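The recursive walk that produces such a flat list can be sketched as follows. This is an illustration rather than the author's code: $fetchFolder is a hypothetical callable that returns the decoded body of the folder method for a given path, and the in-memory "cloud" with its file names is made up so that the example runs without network access:

```php
<?php
/**
 * Walk the cloud recursively and return a flat list of file paths.
 * $fetchFolder is a hypothetical callable: given a folder path, it returns
 * the decoded "body" of the folder method for that path.
 */
function collectFiles(callable $fetchFolder, string $home = '/'): array
{
    $files = [];
    $body = $fetchFolder($home);

    foreach ($body['list'] ?? [] as $item) {
        // Skip an entry that points back at the folder being listed,
        // otherwise the walk would never end.
        if ($item['home'] === $home) {
            continue;
        }

        if ($item['type'] === 'folder') {
            // Descend: every item carries its full path in "home".
            $files = array_merge($files, collectFiles($fetchFolder, $item['home']));
        } else {
            $files[] = $item['home'];
        }
    }

    return $files;
}

// Stand-in for the real API: a tiny in-memory cloud with invented names.
$fakeCloud = [
    '/' => ['list' => [
        ['type' => 'folder', 'home' => '/docs'],
        ['type' => 'file', 'home' => '/a.mp4'],
    ]],
    '/docs' => ['list' => [
        ['type' => 'file', 'home' => '/docs/b.txt'],
    ]],
];

$fetch = function (string $home) use ($fakeCloud): array {
    return $fakeCloud[$home];
};

print_r(collectFiles($fetch));
```

Passing the fetcher in as a callable keeps the walk independent of the HTTP client, which also makes it easy to test against fixtures.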

The download mechanism is a bit tricky: you must first request the recommended shard (something like https://cloclo28.datacloudmail.ru/get/) and then download the file. Since the shard addresses differ only in their numbers, I think it would be possible not to bother and hardcode the address, but if you are going to do it, do it properly!

To get the array of shards you need to call the dispatcher method (https://cloud.mail.ru/api/v2/dispatcher?token=X9ccJNwYeowQTakZC1yGHsWzb7q6bTpP):

    {
        "email": "me@mail.ru",
        "body": {
            "video": [{"count": "3", "url": "https://cloclo22.datacloudmail.ru/video/"}],
            "view_direct": [{"count": "250", "url": "http://cloclo18.cloud.mail.ru/docdl/"}],
            "weblink_view": [{"count": "50", "url": "https://cloclo18.datacloudmail.ru/weblink/view/"}],
            "weblink_video": [{"count": "3", "url": "https://cloclo18.datacloudmail.ru/videowl/"}],
            "weblink_get": [{"count": 1, "url": "https://cloclo27.cldmail.ru/2yoHNmAc9HVQzZU1hcyM/G"}],
            "weblink_thumbnails": [{"count": "50", "url": "https://cloclo3.datacloudmail.ru/weblink/thumb/"}],
            "auth": [{"count": "500", "url": "https://swa.mail.ru/cgi-bin/auth"}],
            "view": [{"count": "250", "url": "https://cloclo2.datacloudmail.ru/view/"}],
            "get": [{"count": "100", "url": "https://cloclo27.datacloudmail.ru/get/"}],
            "upload": [{"count": "25", "url": "https://cloclo22-upload.cloud.mail.ru/upload/"}],
            "thumbnails": [{"count": "250", "url": "https://cloclo3.cloud.mail.ru/thumb/"}]
        },
        "time": 1457101607726,
        "status": 200
    }

We are interested in the array stored in get.

Pick a random item from the shard array, append the file path to it, and the download link is ready!
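A sketch of that step, working on the decoded body of the dispatcher response. The function name is hypothetical, and percent-encoding each path segment separately is an assumption about what the shard expects, not something the article confirms:

```php
<?php
/**
 * Build a download URL from the dispatcher "body" and a file path.
 * Hypothetical helper, for illustration only.
 */
function downloadUrl(array $dispatcherBody, string $filePath): string
{
    $shards = $dispatcherBody['get'] ?? [];
    if ($shards === []) {
        throw new RuntimeException('The dispatcher returned no "get" shards');
    }

    // Pick one of the offered shards at random.
    $shard = $shards[array_rand($shards)];

    // Assumption: encode each segment on its own so that the slashes
    // separating folders stay intact.
    $segments = array_map('rawurlencode', explode('/', ltrim($filePath, '/')));

    return rtrim($shard['url'], '/') . '/' . implode('/', $segments);
}

// The shard entry is copied from the response above; the file name is invented.
$body = ['get' => [['count' => '100', 'url' => 'https://cloclo27.datacloudmail.ru/get/']]];

echo downloadUrl($body, '/docs/my file.txt'), PHP_EOL;
// prints https://cloclo27.datacloudmail.ru/get/docs/my%20file.txt
```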

To save memory, you can tell Guzzle where to write the response right when creating the request, using the sink parameter.
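A minimal sketch of preparing such a request. The helper sinkOptions and the /backup path are hypothetical; the only Guzzle-specific part is the sink request option, which accepts a file path. The helper also recreates the cloud folder structure locally, so it can be run and checked without Guzzle installed:

```php
<?php
/**
 * Prepare the local target for one cloud file and return Guzzle request
 * options that stream the response body straight into that file.
 * Hypothetical helper, for illustration only.
 */
function sinkOptions(string $targetRoot, string $cloudPath): array
{
    $target = rtrim($targetRoot, '/') . '/' . ltrim($cloudPath, '/');
    $dir = dirname($target);

    // Recreate the cloud folder structure on the local disk.
    if (!is_dir($dir) && !mkdir($dir, 0777, true) && !is_dir($dir)) {
        throw new RuntimeException('Cannot create directory ' . $dir);
    }

    // With "sink" set, the body is written to the file instead of memory.
    return ['sink' => $target];
}

// With Guzzle installed, this would be used roughly as:
//   $client->request('GET', $downloadUrl, sinkOptions('/backup', '/docs/b.txt'));
$options = sinkOptions(sys_get_temp_dir() . '/cloud-demo', '/docs/b.txt');

echo $options['sink'], PHP_EOL;
```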

The final code is posted on GitHub under the MIT license; I will be glad if it is useful to someone.

The application is far from ideal: its functionality is limited, it certainly has bugs, and the test coverage leaves much to be desired. But it solved my task 100%, and that is all an MVP requires.

P.S. I want to express special thanks to Mail.ru: firstly, because Amigo did not get installed along with the cloud client, and secondly, because they saved me from losing my entire home archive (I am not even sure which of these matters more). Still, to stay out of harm's way, I decided to move to another company's cloud: 200 rubles a month is a small price for never having to repeat this ride.

* Everything that it did not manage to delete first.

UPD: Correspondence with tech support.

[I have problems with synchronization ..., another problem, Feedback Form]

Good day.

I replaced the hard drive on which the cloud folder was located. The old disk broke, so there is no possibility to transfer data from it. In the web interface, all my data is in place.

When I created an empty folder on the new disk and set it up in the application, files in the cloud began to be deleted during synchronization.

How do I set up the application on my computer so that it treats the web copy as the primary one rather than the empty folder, that is, so that it starts downloading files to the computer instead of deleting them in the cloud?

I tried to download the files through the browser, but that is unrealistic: there are too many of them.

support@cloud.mail.ru 12/29/15

Hello.

Unfortunately, it is not possible to recover files that have been deleted both in the Cloud and on the PC.

By default, full two-way synchronization is performed between the web interface and the application on the computer: if you delete a file from the Cloud in the web interface, the file is deleted in the application, and vice versa: by deleting a file in the application, you delete it in the Cloud.

You can set up selective synchronization in the Cloud PC client. To do this, right-click the Cloud icon (in the system tray) and go to the "Select folders" section.

In the window that opens, clear the checkboxes next to the folders for which you want to cancel synchronization and click "Select".

If the folder was previously synchronized, it will be deleted from your computer, but in the Cloud web interface the folder and all the files it contains will be kept.

To re-enable synchronization for a previously deleted folder, right-click the Cloud application icon, click "Select folders" and check the box next to the name of the desired folder.

You can also temporarily disable synchronization. To do this, right-click the Cloud application icon and select "Suspend synchronization".

You can read more about synchronization in the Help system.

help.mail.ru/cloud_web/synch

Alexey Ukolov 12/29/15

Perhaps I did not describe my problem clearly enough; I will try to rephrase.

All my files are currently in the cloud. I bought a new HDD and I want to download these files to it. But when I created an empty folder on it and pointed the application at it, instead of downloading files from the cloud to the computer, the application began deleting the files from the cloud.

How do I start the process in the opposite direction, that is, download everything from the cloud to the computer without using the web interface?

If this cannot be done through the application, are there any alternative tools? WebDav, as I understand it, is not yet implemented?

support@cloud.mail.ru 12/29/15

Hello.

Currently this functionality is missing.

Your comment has been submitted to the developers.

UPD2: The problem still reproduces. Representatives of Cloud@mail.ru say that this is atypical behavior and that the problem is local; there are also comments saying that synchronization with an empty folder works as it should.

Added a video example: youtu.be/dTF9UCdN2S8

I apologize for the watermarks and the overall quality; it is just a proof of concept.

UPD3: On a laptop where the cloud client had never been installed, I downloaded the latest version from the official site, installed it and launched it. When an existing folder is selected, history repeats itself: instead of being downloaded, files start being deleted. I also tried not creating a folder beforehand, with the same result.

UPD4: Bulldozavr wrote that what gets deleted seems to be the system files Thumbs.db and desktop.ini. I started the synchronization and did not stop it, and indeed such files showed up in the status from time to time (but because the context menu is limited in width and no log file is available, the user cannot make sure that only these files are being deleted).

After a couple of minutes all the system files had apparently been removed, and the download from the cloud to the computer started, as it should.

The verdict is as follows: synchronization works fine, although at first the user is frightened by the deletion of files from the cloud; tech support works badly.

And my experience of writing console applications in PHP is not going anywhere :)

Source: https://habr.com/ru/post/278849/
