[ScanDoc] preprocessing scans

It is believed that the electronic document management system completely eliminates the work with the papers, but it is not. To digitize paper copies of documents they are usually passed through a scanner. When the flow of documents and the quality requirements for scans exceed a certain threshold, a number of issues arise that need to be addressed programmatically.

What problems have to be solved:

- Adjust the angle of the image, because the scanner feeder inevitably tilts the document while drawing. Sloppiness in important documents is unacceptable.

- Highlight the useful part on the scan, the rest is to delete, since it is not informative and takes up disk space for nothing.

- Find and delete blank pages, which will necessarily be in duplex scanning.

Algorithms that solve the tasks are developed and probably even posted on the Internet, but it was not possible to find a clear description of them. Of course, these problems are solved by expensive professional scanners, but it is not always possible to use firmware.

The idea of the article was born just in the process of developing a tool to solve these problems. I hope it will complement the available information on digitizing documents and will be useful to developers who are engaged in a similar task.

')

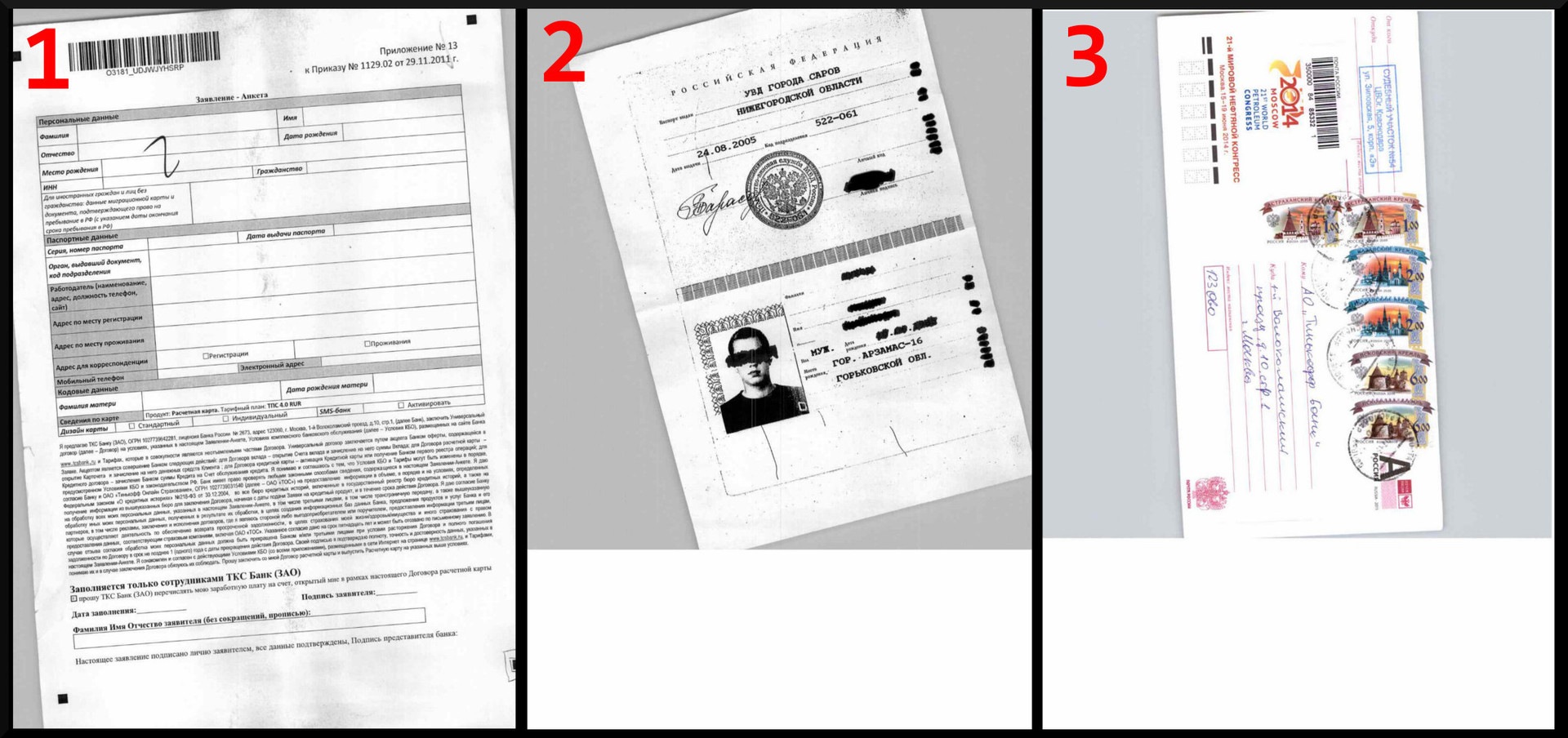

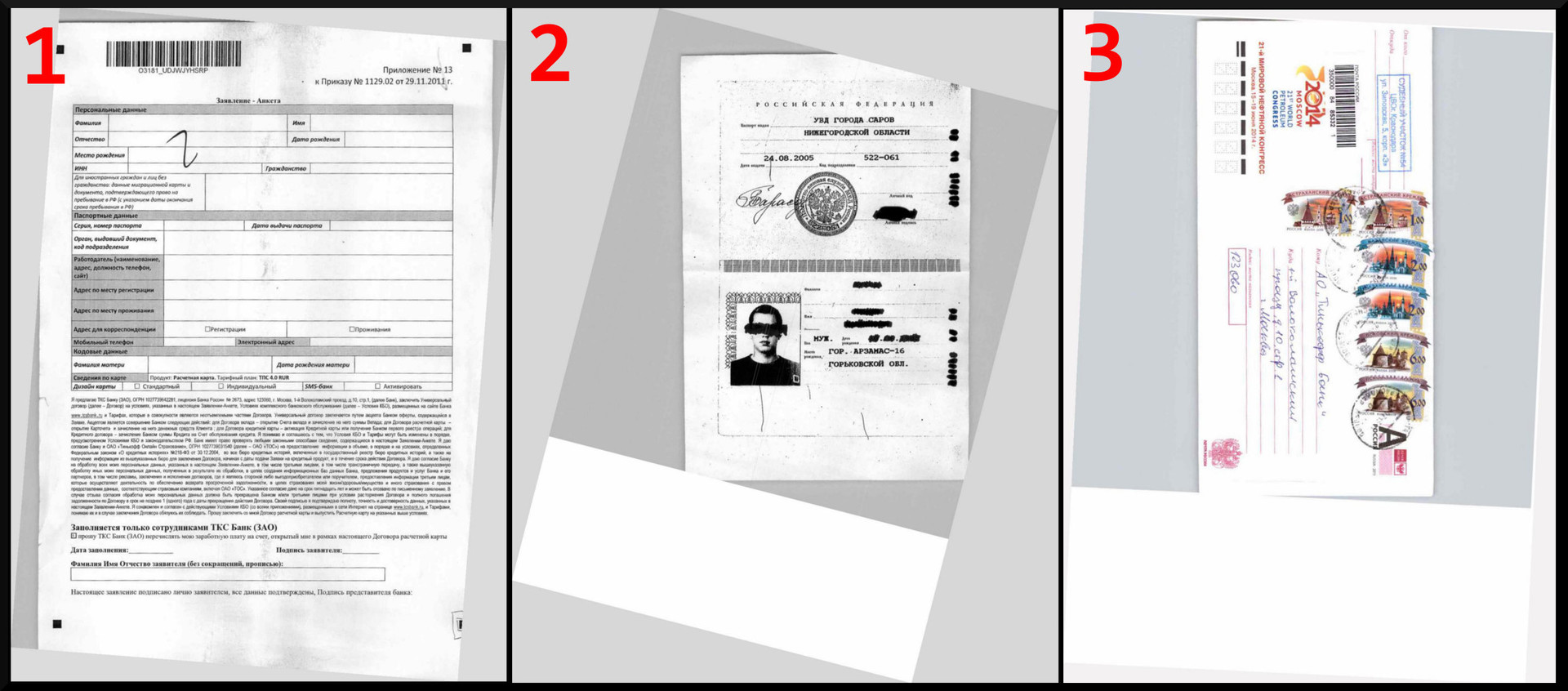

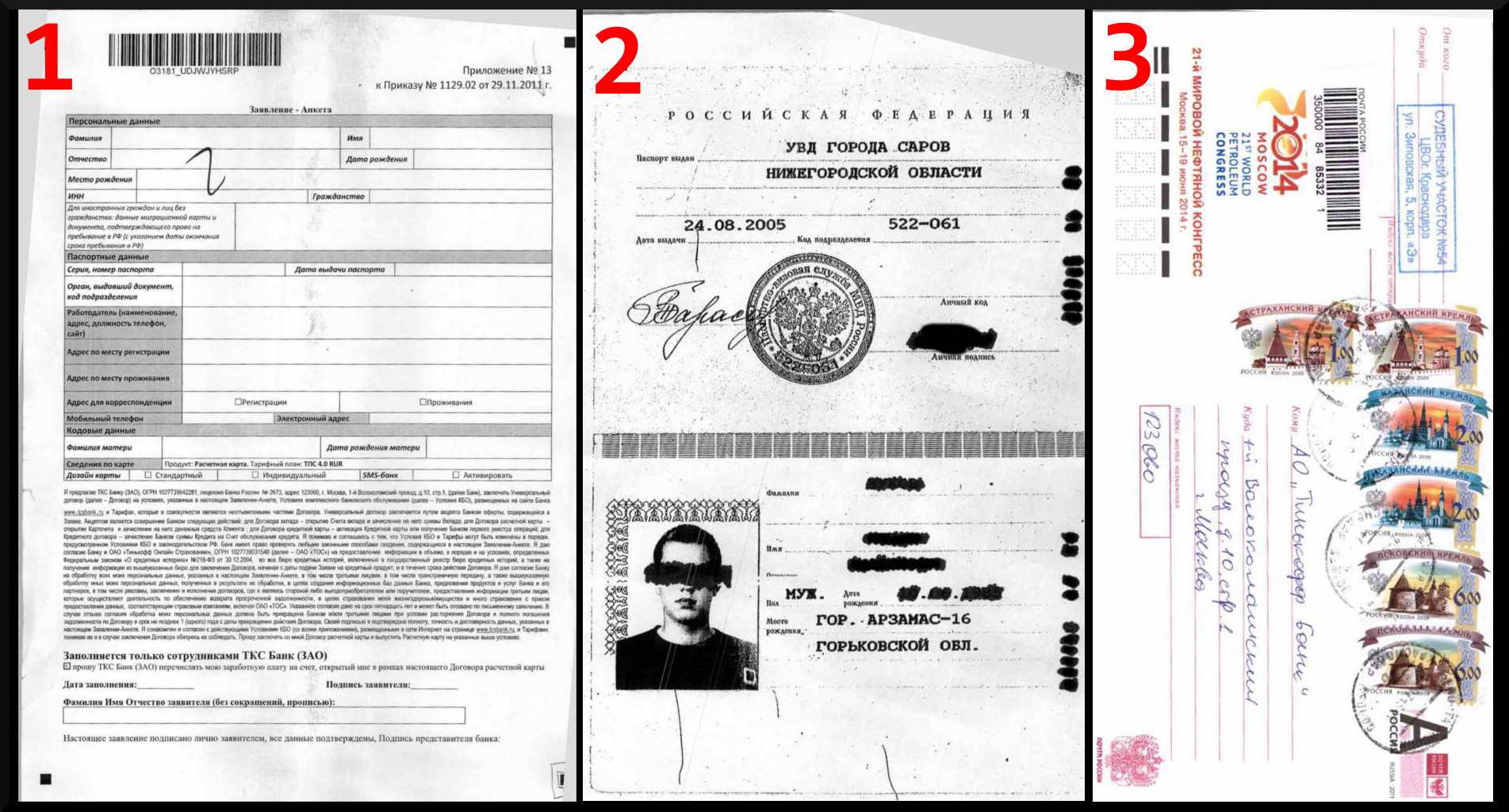

Consider three scans of documents that we received with the help of the good old scanner Futjitsu fi-6140.

- Scan of the form for obtaining a Tinkoff Bank card;

- Scan a photocopy of your passport;

- Scan mail envelope.

Tilt correction

After receiving a scan of the document, you need to bring it to a strictly vertical or horizontal view. It is understood that arbitrary documents may be submitted to the input without any marks by which the slope can be adjusted. Therefore, we will bind to the horizontal and vertical components of the document: lines, lines of tables, barcodes, and even fold points.

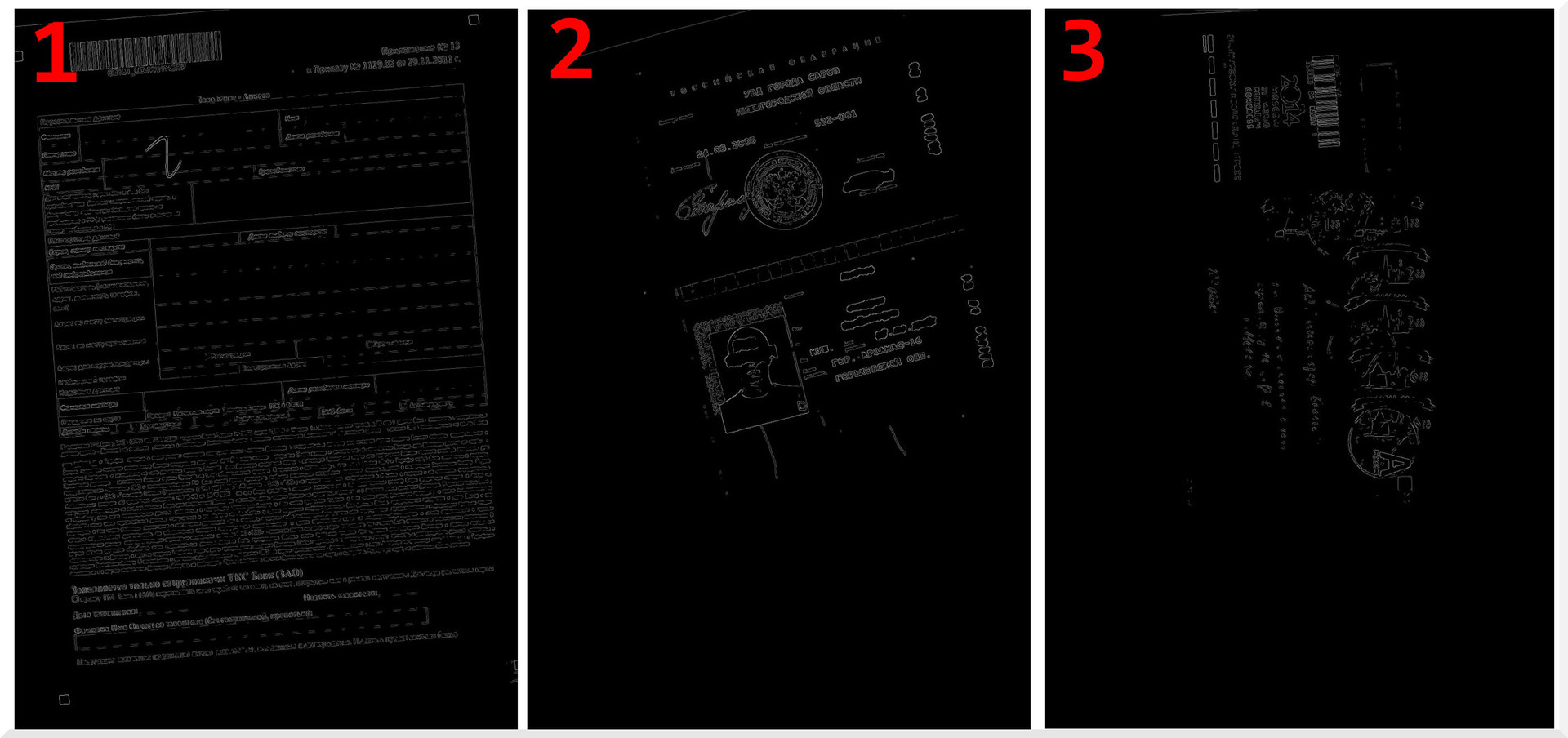

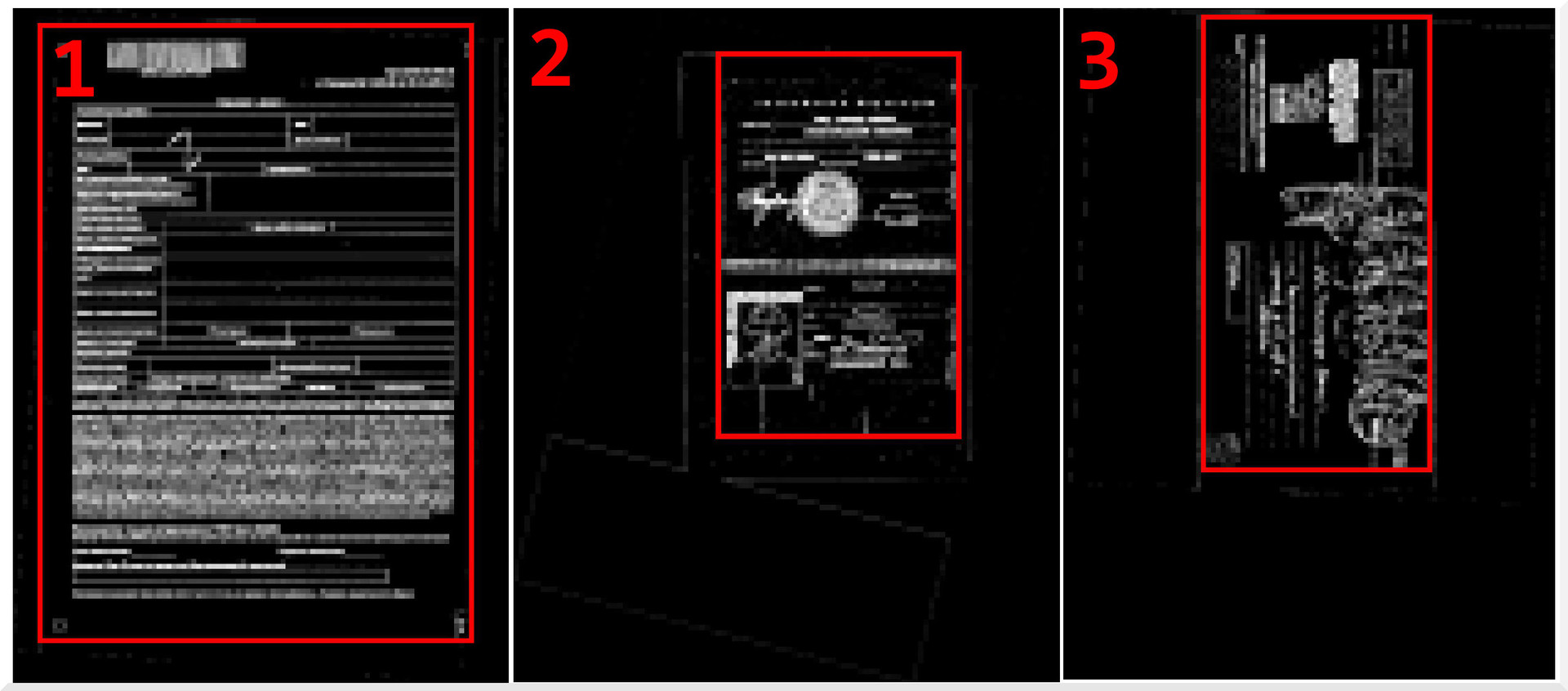

First of all, we eliminate the redundancy of the image, i.e. select the contour. To do this, use the detector borders.

We chose the Kanni Boundary Detector — because it gives the highest-quality result.

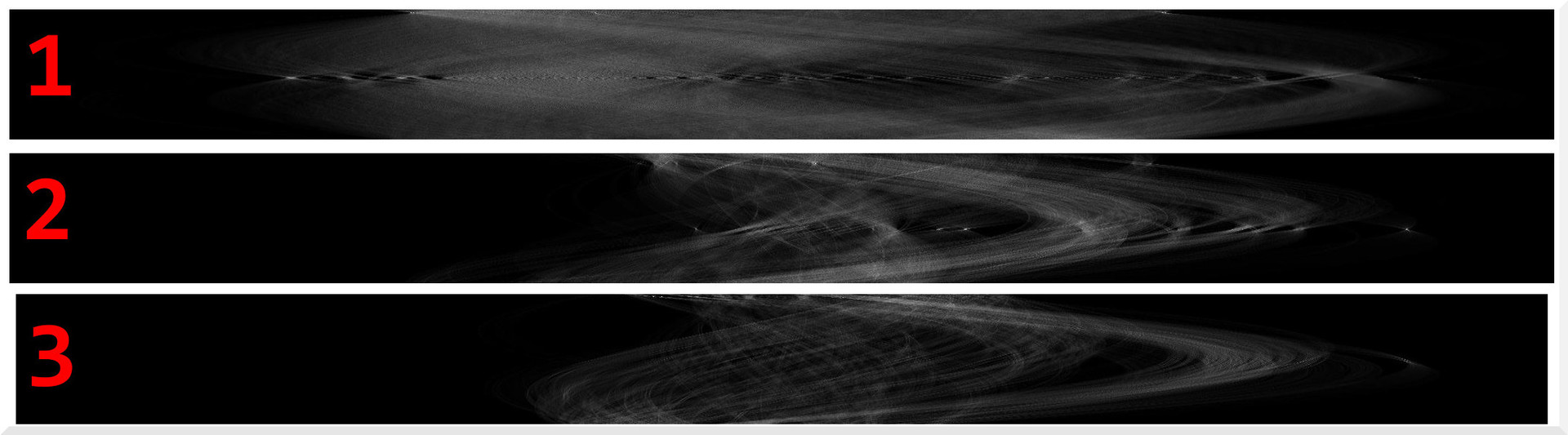

Now on the image you should find straight lines. For this we use the popular solution used in computer vision - Hough transformation. I will not describe his principle in detail, it can be found on the Internet. The essence of the transformation lies in the selection of all possible options for lines in the image and calculating the response for them. The greater the response, the more pronounced the line. As a result of the transformation, a phase plane will be constructed, where Y is the angle of inclination, X is the distance to the line.

On visualization of the phase plane, each pixel corresponds to a unique line. The coordinates will be calculated by the most pronounced lines.

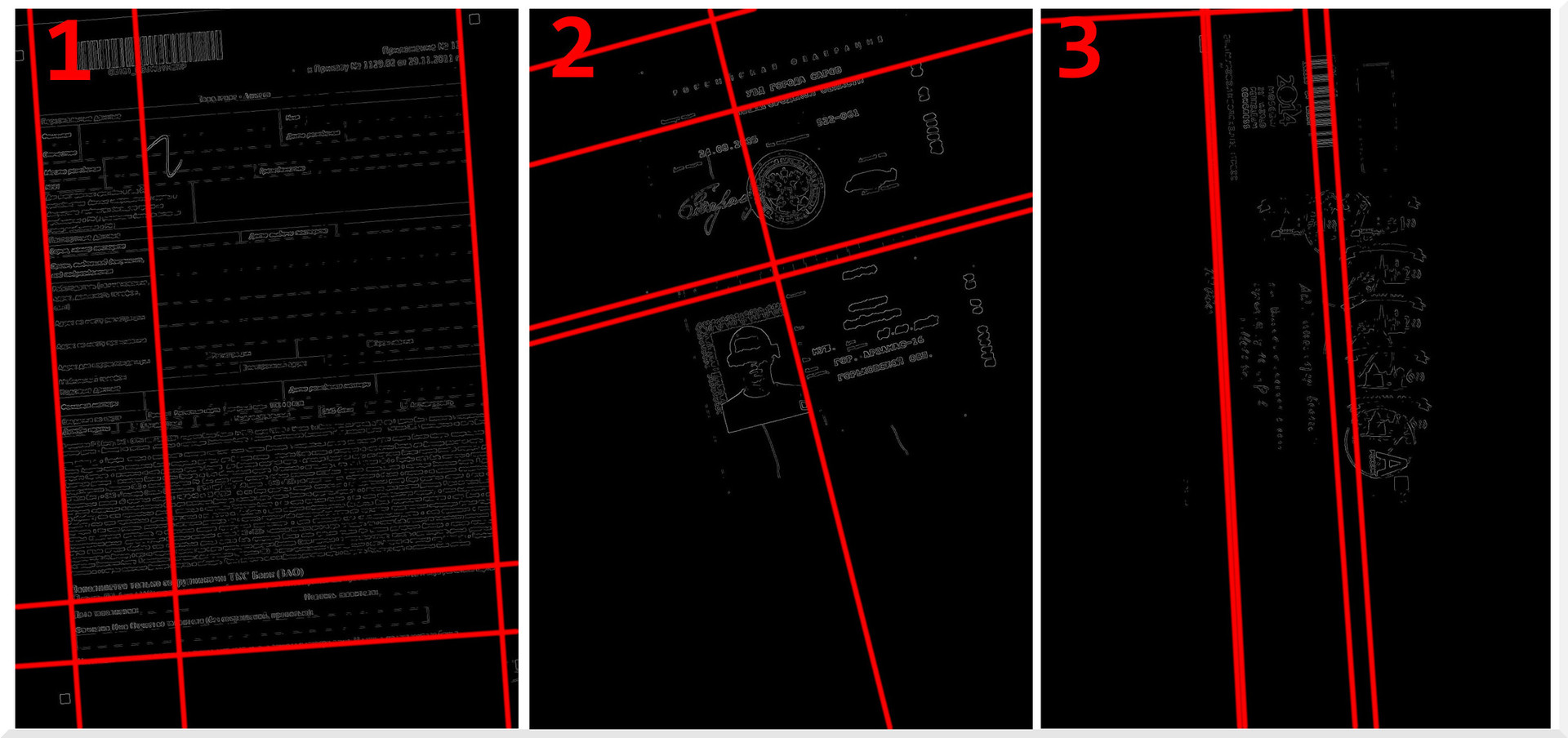

Take the 5 most intense lines, for clarity, we show them on the contour of the original image:

It is seen that the angles of inclination of the lines correspond to the inclination of the coordinate axes of the document.

To get the angle to which you want to rotate the image, we calculate the angle of deviation of the lines from the global coordinate axes (from 0 and 90 degrees), average the value and get the angle of the image. Rotate the image on the resulting angle with a minus sign. Now you can continue working with this image.

! The slope of some lines will be very different from others, i.e. they are far from parallel. Such lines are best excluded from the calculations so that they do not spoil the result.

To work with graphics, we used a wonderful library aforgenet . It already has an implementation of the document tilt angle search algorithm described above. As a result, only 15 lines of code and nalon correction is ready.

! The GetAverageBorderColor function returns the average color of the perimeter of the source image. It can be replaced by a constant or another more advanced function.

public static Bitmap DocumentAngleCorrection(Bitmap image) { var grayImage = Grayscale.CommonAlgorithms.RMY.Apply(image); var skewChecker = new DocumentSkewChecker(); var angle = skewChecker.GetSkewAngle(grayImage); while (angle >= 90) { angle -= 90; } while (angle <= -90) { angle += 90; } var rotator = new RotateBilinear(-angle, false); rotator.FillColor = GetAverageBorderColor(image); image = rotator.Apply(image); return image; } Cropping

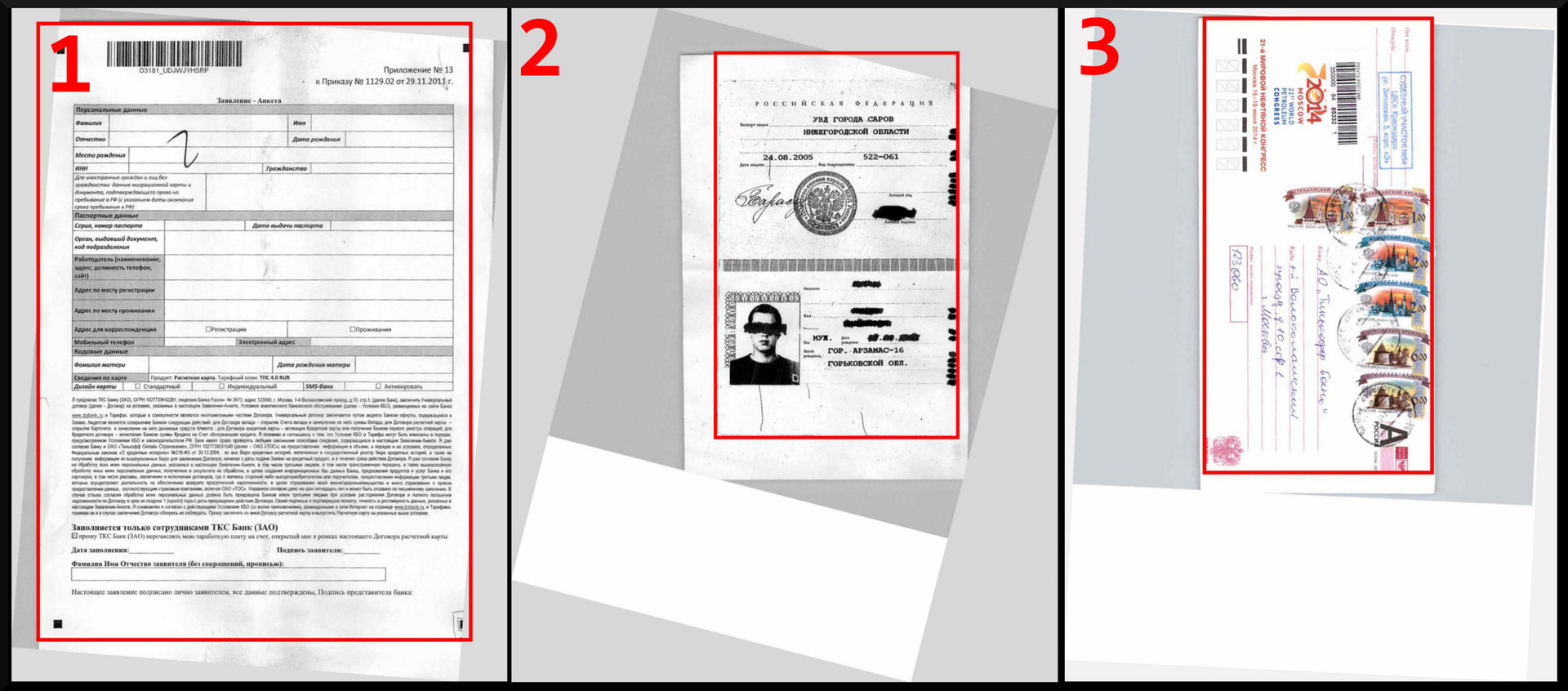

We have aligned the image. Now you need to frame his informative part. At this stage it is important to consider some features.

The algorithm should work properly:

- with any text and background color;

- with scans of any quality;

- with documents of any type.

We took the assumption as a basis for the algorithm: there will be many differences in brightness in the informative area of the image, but not enough in an empty one. Therefore, the solution of the problem is reduced to three actions:

- We divide the images into fragments, count the number of brightness differences vertically and horizontally for each fragment.

- We are looking for fragments with a large number of brightness differences.

- Cut out the informative area.

For quick access to image pixels, it is best to work with an array of bytes. It can be obtained as follows:

var bitmapData = sourceBitmap.LockBits(new Rectangle(0, 0, sourceBitmap.Width, sourceBitmap.Height), ImageLockMode.ReadWrite, PixelFormat.Format24bppRgb); var bytes = bitmapData.Stride * sourceBitmap.Height; var sourceBytes = new byte[bytes]; System.Runtime.InteropServices.Marshal.Copy(bitmapData.Scan0, sourceBytes, 0, bytes); The algorithm looks like this:

const int sensitivity = 25; const int widthQuantum = 100; var regionSize = bitmapData.Width / widthQuantum; for (var y = 0; y < bitmapData.Height + regionSize; y += regionSize) { // x processing for (var x = 0; x < bitmapData.Width + regionSize; x += regionSize) { // y processing var value = 0; for (var yy = y; (yy < y + regionSize) && (yy < bitmapData.Height); yy++) { // Horosontal counting var pixel = GetGrayPixel(sourceBytes, bitmapData.Width, x, yy); for (var xx = x; (xx < x + regionSize) && (xx < bitmapData.Width); xx++) { var nextPixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, yy); if (Math.Abs(pixel - nextPixel) > sensitivity) { value++; } pixel = nextPixel; } } for (var xx = x; (xx < x + regionSize) && (xx < bitmapData.Width); xx++) { // Vertical counting var pixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, y); for (var yy = y; (yy < y + regionSize) && (yy < bitmapData.Height); yy++) { var nextPixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, yy); if (Math.Abs(pixel - nextPixel) > sensitivity) { value++; } pixel = nextPixel; } } // value TODO } } The variable value in the designated place will contain the number of vertical and horizontal brightness variations in the fragment being processed. This value and fragment coordinates can, for example, be saved to the list.

! The GetGrayPixel function returns the average pixel intensity value.

private static byte GetGrayPixel(byte[] src, int w, int x, int y) { var s = GetShift(w, x, y); if ((s + 3 > src.Length) || (s < 0)) { return 127; } int b = src[s++]; b += src[s++]; b += src[s]; b = (int)(b / 3.0); return (byte)b; } After applying the algorithm, we got a map of the image brightness differences. On which we select the area including the greatest differences.

! To save resources, it is better to work with a reduced copy of the image. Then scale the result and apply to the original image.

See the result. The algorithm worked correctly - did not leave and did not cut anything extra.

We got this result in most cases.

Remove blank pages

It turned out that the task of removing blank pages of documents appeared after we developed an algorithm for extracting the informative part of the document. Therefore, to delete empty pages, we used the same algorithm, only slightly modified it. Instead of building a map of image brightness, we counted the number of fragments with large and small brightness differences. If there are many high-frequency blocks, the image contains valuable information and is not empty.

It would seem that in order to reduce processing time, you can remove unnecessary pages and frame the image in one approach, one loop only once. But it is obvious that you can frame the image only after alignment. In this case, one would also have to rotate the differential map. Therefore, in order not to complicate our lives, we have defined the following procedure:

delete blank pages -> tilt correction -> cropping

We got one extra pass through the pixels to calculate the frequency. But this is not a problem in our time thanks to the law of Gordon Moore.

! Full cheese

public static Bitmap DocumentAngleCorrection(Bitmap image) { var grayImage = Grayscale.CommonAlgorithms.RMY.Apply(image); var skewChecker = new DocumentSkewChecker(); var angle = skewChecker.GetSkewAngle(grayImage); while (angle >= 90) { angle -= 90; } while (angle <= -90) { angle += 90; } var rotator = new RotateBilinear(-angle, false); rotator.FillColor = GetAverageBorderColor(image); image = rotator.Apply(image); return image; } private static Color GetAverageBorderColor(Bitmap bitmap) { var widthProcImage = (double)200; var sourceImage = bitmap; var sizeFactor = widthProcImage / sourceImage.Width; var procBtmp = new Bitmap(sourceImage, (int)Math.Round(sourceImage.Width * sizeFactor), (int)Math.Round(sourceImage.Height * sizeFactor)); var bitmapData = procBtmp.LockBits(new Rectangle(0, 0, procBtmp.Width, procBtmp.Height), ImageLockMode.ReadWrite, PixelFormat.Format24bppRgb); var bytes = Math.Abs(bitmapData.Stride) * procBtmp.Height; var sourceBytes = new byte[bytes]; System.Runtime.InteropServices.Marshal.Copy(bitmapData.Scan0, sourceBytes, 0, bytes); var channels = new Dictionary<char, int>(); channels.Add('r', 0); channels.Add('g', 0); channels.Add('b', 0); var cnt = 0; for (var y = 0; y < bitmapData.Height; y++) { // vertical var c = GetColorPixel(sourceBytes, bitmapData.Width, 0, y); channels['r'] += cR; channels['g'] += cG; channels['b'] += cB; cnt++; c = GetColorPixel(sourceBytes, bitmapData.Width, bitmapData.Width - 1, y); channels['r'] += cR; channels['g'] += cG; channels['b'] += cB; cnt++; } for (var x = 0; x < bitmapData.Width; x++) { // horisontal var c = GetColorPixel(sourceBytes, bitmapData.Width, x, 0); channels['r'] += cR; channels['g'] += cG; channels['b'] += cB; cnt++; c = GetColorPixel(sourceBytes, bitmapData.Width, x, bitmapData.Height - 1); channels['r'] += cR; channels['g'] += cG; channels['b'] += cB; cnt++; } procBtmp.UnlockBits(bitmapData); var r = (int)Math.Round(((double)channels['r']) / cnt); var g = (int)Math.Round(((double)channels['g']) / cnt); var b = (int)Math.Round(((double)channels['b']) / cnt); var color = Color.FromArgb(r > 255 ? 255 : r, g > 255 ? 255 : g, b > 255 ? 255 : b); return color; } private static byte GetGrayPixel(byte[] src, int w, int x, int y) { var s = GetShift(w, x, y); if ((s + 3 > src.Length) || (s < 0)) { return 127; } int b = src[s++]; b += src[s++]; b += src[s]; b = (int)(b / 3.0); return (byte)b; } private static Color GetColorPixel(byte[] src, int w, int x, int y) { var s = GetShift(w, x, y); if ((s + 3 > src.Length) || (s < 0)) { return Color.Gray; } byte r = src[s++]; byte b = src[s++]; byte g = src[s]; var c = Color.FromArgb(r, g, b); return c; } private static int GetShift(int width, int x, int y) { return y * width * 3 + x * 3; } public static bool DocumentDetectInfo(Bitmap image) { const double widthProcImage = 200; const int sens = 15; const int treshold = 25; const int widthQuantum = 10; var sourceImage = image; var sizeFactor = widthProcImage / sourceImage.Width; var procBtmp = new Bitmap(sourceImage, (int)Math.Round(sourceImage.Width * sizeFactor), (int)Math.Round(sourceImage.Height * sizeFactor)); var bd = procBtmp.LockBits(new Rectangle(0, 0, procBtmp.Width, procBtmp.Height), ImageLockMode.ReadWrite, PixelFormat.Format24bppRgb); var bytes = Math.Abs(bd.Stride) * procBtmp.Height; var source = new byte[bytes]; System.Runtime.InteropServices.Marshal.Copy(bd.Scan0, source, 0, bytes); var maxV = 0; var size = bd.Width / widthQuantum; var hight = 0; var low = 0; for (var y = 0; y < bd.Height + size; y += size) { // x processing for (var x = 0; x < bd.Width + size; x += size) { // y processing var value = 0; for (var yy = y; (yy < y + size) && (yy < bd.Height); yy++) { // Horosontal counting var pixel = GetGrayPixel(source, bd.Width, x, yy); for (var xx = x; (xx < x + size) && (xx < bd.Width); xx++) { var point = GetGrayPixel(source, bd.Width, xx, yy); if (Math.Abs(pixel - point) > sens) { value++; } pixel = point; } } for (var xx = x; (xx < x + size) && (xx < bd.Width); xx++) { // Vertical counting var pixel = GetGrayPixel(source, bd.Width, xx, y); for (var yy = y; (yy < y + size) && (yy < bd.Height); yy++) { var point = GetGrayPixel(source, bd.Width, xx, yy); if (Math.Abs(pixel - point) > sens) { value++; } pixel = point; } } maxV = Math.Max(maxV, value); if (value > treshold) { hight++; } else { low++; } } } double cnt = hight + low; hight = (int)Math.Round(hight / cnt * 100); procBtmp.UnlockBits(bd); return (hight > treshold); } public static Bitmap DocumentCropInfo(Bitmap image) { const double widthProcImage = 1000; const int sensitivity = 25; const int treshold = 50; const int widthQuantum = 100; var sourceImage = image; var sizeFactor = widthProcImage / sourceImage.Width; var procBtmp = new Bitmap(sourceImage, (int)Math.Round(sourceImage.Width * sizeFactor), (int)Math.Round(sourceImage.Height * sizeFactor)); var bitmapData = procBtmp.LockBits(new Rectangle(0, 0, procBtmp.Width, procBtmp.Height), ImageLockMode.ReadWrite, PixelFormat.Format24bppRgb); var bytes = Math.Abs(bitmapData.Stride) * procBtmp.Height; var sourceBytes = new byte[bytes]; System.Runtime.InteropServices.Marshal.Copy(bitmapData.Scan0, sourceBytes, 0, bytes); var x1 = procBtmp.Width; var y1 = procBtmp.Height; var x2 = 0; var y2 = 0; var maxV = 0; var pointList = new List<Point>(); var regionSize = bitmapData.Width / widthQuantum; for (var y = 0; y < bitmapData.Height + regionSize; y += regionSize) { // x processing for (var x = 0; x < bitmapData.Width + regionSize; x += regionSize) { // y processing var value = 0; for (var yy = y; (yy < y + regionSize) && (yy < bitmapData.Height); yy++) { // Horosontal counting var pixel = GetGrayPixel(sourceBytes, bitmapData.Width, x, yy); for (var xx = x; (xx < x + regionSize) && (xx < bitmapData.Width); xx++) { var nextPixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, yy); if (Math.Abs(pixel - nextPixel) > sensitivity) { value++; } pixel = nextPixel; } } for (var xx = x; (xx < x + regionSize) && (xx < bitmapData.Width); xx++) { // Vertical counting var pixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, y); for (var yy = y; (yy < y + regionSize) && (yy < bitmapData.Height); yy++) { var nextPixel = GetGrayPixel(sourceBytes, bitmapData.Width, xx, yy); if (Math.Abs(pixel - nextPixel) > sensitivity) { value++; } pixel = nextPixel; } } pointList.Add(new Point() { V = value, X = x, Y = y }); maxV = Math.Max(maxV, value); } } var vFactor = 255.0 / maxV; foreach (var point in pointList) { var v = (byte)(point.V * vFactor); if (v > treshold) { x1 = Math.Min(x1, point.X); y1 = Math.Min(y1, point.Y); x2 = Math.Max(x2, point.X + regionSize); y2 = Math.Max(y2, point.Y + regionSize); } } procBtmp.UnlockBits(bitmapData); x1 = (int)Math.Round((x1 - regionSize) / sizeFactor); x2 = (int)Math.Round((x2 + regionSize) / sizeFactor); y1 = (int)Math.Round((y1 - regionSize) / sizeFactor); y2 = (int)Math.Round((y2 + regionSize) / sizeFactor); var bigRect = new Rectangle(x1, y1, x2 - x1, y2 - y1); var clippedImg = CropImage(sourceImage, bigRect); return clippedImg; } public static Bitmap CropImage(Bitmap source, Rectangle section) { section.X = Math.Max(0, section.X); section.Y = Math.Max(0, section.Y); section.Width = Math.Min(source.Width, section.Width); section.Height = Math.Min(source.Height, section.Height); var bmp = new Bitmap(section.Width, section.Height); var g = Graphics.FromImage(bmp); g.DrawImage(source, 0, 0, section, GraphicsUnit.Pixel); return bmp; } private class Point { public int X; public int Y; public int V; } Conclusion

Algorithms do an excellent job with their task to this day. It is likely that this is not the most effective solution. Therefore, we invite everyone in the comments to discuss other possible algorithms and approaches to solve a specific problem.

See you soon!

Source: https://habr.com/ru/post/278073/

All Articles