Video transmission from the deep-sea robot

I'd like to share with the community our experience of developing software for viewing and recording video transmitted from the deep-sea robot Moby Dick. The work was commissioned by The Whale underwater robotics laboratory. The software had to provide:

- support for any IP camera that speaks the RTSP protocol;

- viewing and recording video from multiple IP cameras;

- viewing and recording stereo video from two dedicated IP cameras;

- recording video from the screen;

- smooth playback despite short drops in the data transfer rate.

The deep-sea robot Moby Dick (Project 1-0-1), launched from the Russian lunar transport Rainbow, explores the ocean of Europa (artist's impression, collage)

If megatons of code don't scare you, read on.

Moby Dick

Moby Dick is a remotely operated underwater vehicle (Underwater Remotely Operated Vehicle, Underwater ROV or UROV) developed at The Whale underwater robotics laboratory. The main goal in designing and building Moby Dick was a small robot that would eventually be able to dive to the greatest depths of the world ocean (as part of a lifting system), deliver high-resolution (Full HD) video, take samples and specimens, and carry out rescue operations.

Deep-sea robot Moby Dick, front view (the two front cameras are visible at the top, under the cylinders)

Deep-sea robot Moby Dick, rear view (the rear camera is visible at the bottom, under the cable mount)

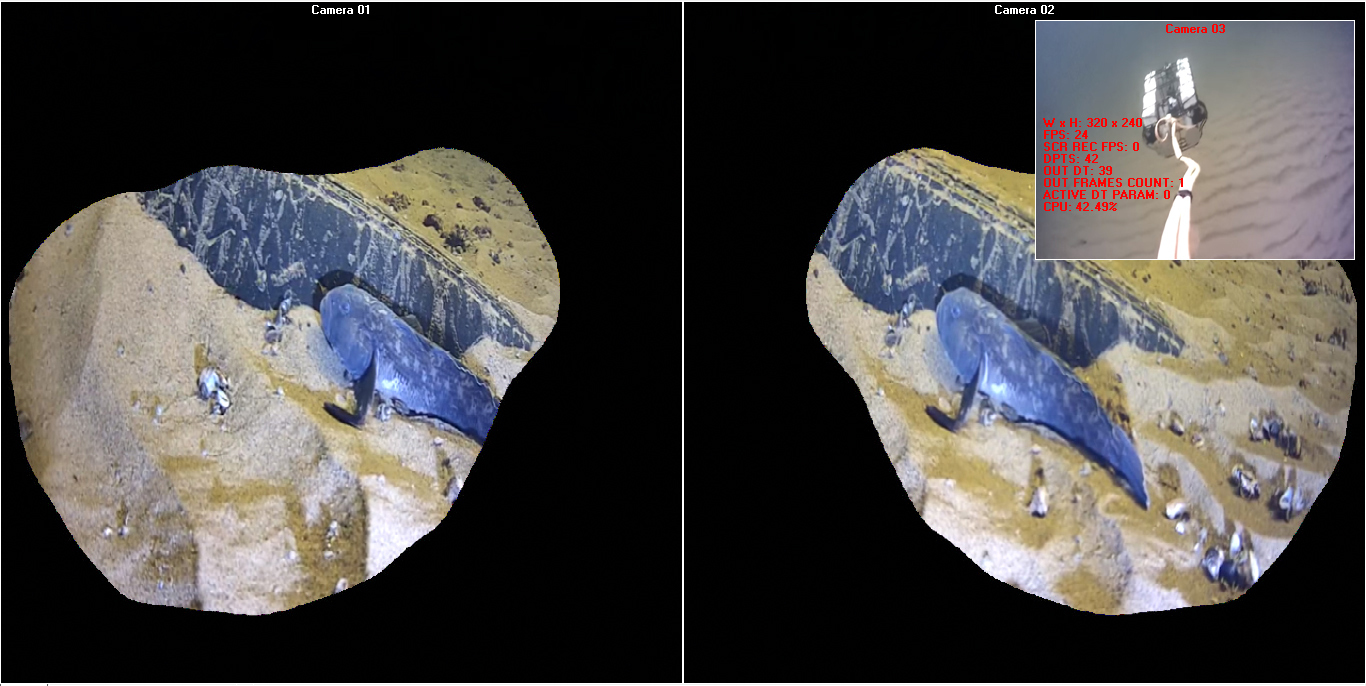

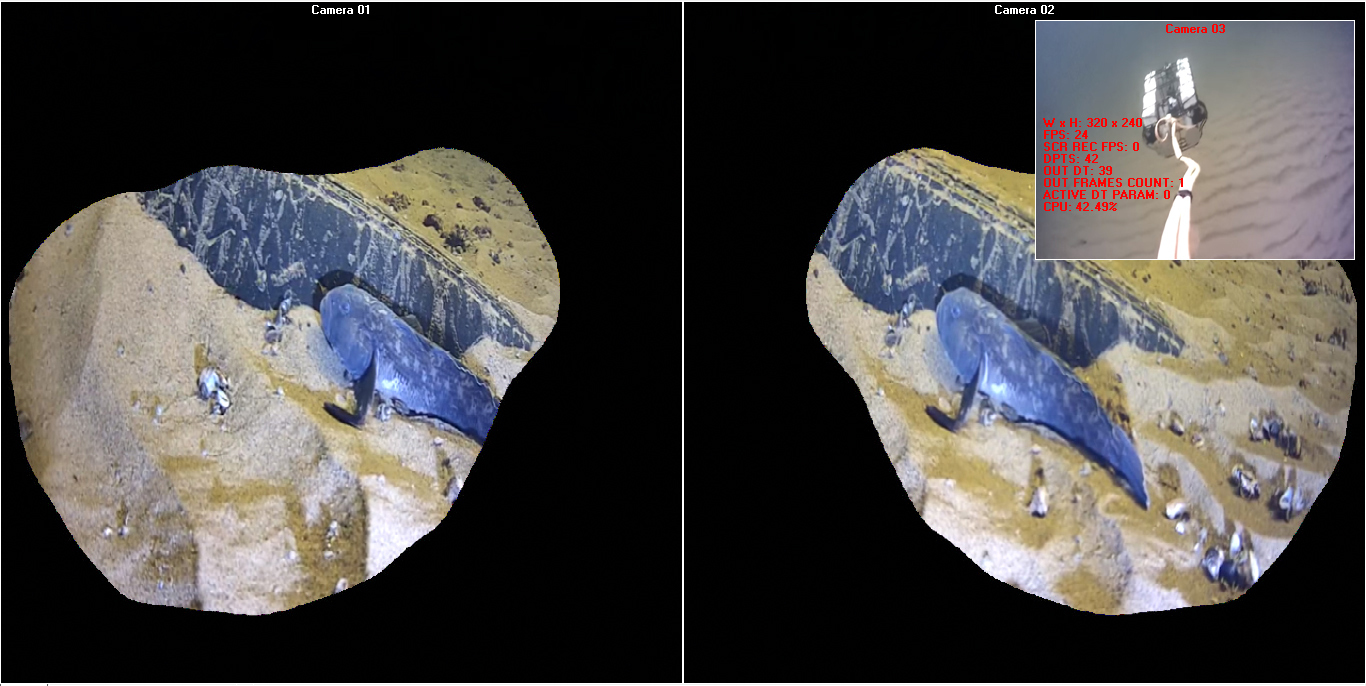

Moby Dick typically carries three digital video cameras. Two of them are mounted on a gimbal that rotates 360° about all three axes. This lets the operator inspect the vehicle's thrusters and the surroundings both outside and inside the frame. It also makes it possible to survey long objects (pipelines, ships, etc.) quickly, with the camera pointed perpendicular to the robot's direction of travel. The same arrangement produces a stereoscopic image of the seabed, from which distances to objects and their sizes can be estimated directly on the monitor.

In total, Moby Dick can carry up to 9 digital cameras, covering the entire surrounding sphere. In the maximum configuration, however, only one camera can run at Full HD. The rest have to be connected at 720p because of the limited data rate of the cable.

Notably, this is the only underwater robot in our country capable of transmitting stereo video. Robots with similar capabilities exist abroad, but they are much larger than Moby Dick.


Receiving and recording video

FFMPEG was chosen for receiving and recording video. To shield the application developer from the FFMPEG API, a proxy DLL is used (built with good old Microsoft Visual C++ 2008 Express Edition). Let's look at the most interesting parts of its code.

Getting high-precision timestamps:

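```cpp
// High-resolution timestamps: QueryPerformanceCounter if available,
// otherwise GetTickCount (millisecond resolution).
static bool timer_supported;
static LARGE_INTEGER timer_f; // counter frequency, ticks per second

static bool timer_init(void)
{
    return timer_supported = QueryPerformanceFrequency(&timer_f) != 0;
}

static LARGE_INTEGER timer_t; // last counter value

static bool timer_get(void)
{
    return QueryPerformanceCounter(&timer_t) != 0;
}

static LONGLONG get_microseconds_hi(void)
{
    if (!timer_get()) return 0;
    // Whole seconds plus remainder: multiplying the raw counter by 1000000
    // would overflow 64 bits once the counter gets large.
    LONGLONG sec = timer_t.QuadPart / timer_f.QuadPart;
    LONGLONG rem = timer_t.QuadPart % timer_f.QuadPart;
    return sec * 1000000 + rem * 1000000 / timer_f.QuadPart;
}

static LONGLONG get_microseconds_lo(void)
{
    return GetTickCount() * 1000i64;
}

// Best available implementation, selected when the DLL is loaded
static LONGLONG (*get_microseconds)(void) = get_microseconds_lo;
```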
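The original expression `timer_t.QuadPart * 1000000 / timer_f.QuadPart` multiplies before it divides. The 64-bit product can therefore overflow once the counter grows large: with a 10 MHz counter this happens after roughly 10.7 days of uptime, and sooner for faster counters. The portable sketch below shows the split used in the listing above. The counter value and frequency are passed in as plain integers. The name `ticks_to_us` is hypothetical and not part of the original DLL.

```cpp
#include <cstdint>

// Converts a raw performance-counter value to microseconds without forming
// counter * 1000000 (which overflows int64_t for large counters).
// counter/frequency correspond to QueryPerformanceCounter/QueryPerformanceFrequency;
// frequency must be > 0.
inline std::int64_t ticks_to_us(std::int64_t counter, std::int64_t frequency)
{
    const std::int64_t whole_seconds = counter / frequency;
    const std::int64_t remainder = counter % frequency; // always < frequency
    // remainder * 1000000 stays in range for any realistic frequency
    return whole_seconds * 1000000 + remainder * 1000000 / frequency;
}
```

For example, 30 days of a 10 MHz counter (25,920,000,000,000 ticks) converts to 2,592,000,000,000 µs without overflow. The naive product, about 2.6e19, exceeds the int64 range.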

Making Sleep more precise. This code runs when the DLL is loaded/unloaded:

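```cpp
// Requires <mmsystem.h> and winmm.lib (timeGetDevCaps / timeBeginPeriod)
static TIMECAPS tc;

...

// When the DLL is loaded:
memset(&tc, 0, sizeof(tc));

...

// Load (flag != 0) or unload (flag == 0)
if (flag)
{
    // Use QueryPerformanceCounter if the hardware supports it
    get_microseconds = timer_init() ? get_microseconds_hi : get_microseconds_lo;
    // If tc has not been filled in yet, query the timer capabilities...
    if (!tc.wPeriodMin && timeGetDevCaps(&tc, sizeof(tc)) == MMSYSERR_NOERROR)
    {
        timeBeginPeriod(tc.wPeriodMin); // ...and request the finest timer resolution
        // From here on Sleep() wakes up with (close to) that granularity
    }
}
else
{
    if (tc.wPeriodMin) // the resolution was changed on load
    {
        timeEndPeriod(tc.wPeriodMin); // restore it (the calls must be paired)
        memset(&tc, 0, sizeof(tc));
    }
}
```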
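The load and unload branches must stay paired: each `timeBeginPeriod` call needs a matching `timeEndPeriod` with the same value. In C++ such pairing is often expressed as an RAII guard.

The sketch below is hypothetical: `ScopedPeriod` is not part of the original code. It takes the begin/end functions as parameters so that it compiles on any platform. On Windows they would be thin lambdas around `timeBeginPeriod` and `timeEndPeriod` from winmm.

```cpp
#include <functional>
#include <utility>

// Hypothetical RAII guard: calls begin(period) on construction and
// end(period) on destruction, but only when period != 0
// (0 meaning "no timer resolution was obtained").
class ScopedPeriod
{
public:
    ScopedPeriod(unsigned period,
                 const std::function<void(unsigned)>& begin,
                 std::function<void(unsigned)> end)
        : period_(period), end_(std::move(end))
    {
        if (period_ != 0) begin(period_);
    }

    ~ScopedPeriod()
    {
        if (period_ != 0) end_(period_);
    }

    ScopedPeriod(const ScopedPeriod&) = delete;
    ScopedPeriod& operator=(const ScopedPeriod&) = delete;

private:
    unsigned period_;
    std::function<void(unsigned)> end_;
};
```

On Windows the guard might be used as `ScopedPeriod guard(tc.wPeriodMin, [](unsigned p) { timeBeginPeriod(p); }, [](unsigned p) { timeEndPeriod(p); });`. The original DLL instead ties the two calls to its load and unload notifications.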

The stream context class ctx_t:

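```cpp
class ctx_t:
    public mt_obj // lockable object (lock()/unlock())
{
public:
    AVFormatContext *format_ctx; // input (RTSP) context
    int stream;                  // index of the video stream
    AVCodecContext *codec_ctx;   // decoder of the video stream
    AVFrame *frame[2];           // two decoded frames (double buffering)
    int frame_idx;               // frame being decoded; frame[!frame_idx] is the last
                                 // complete one (valid when frames_count > 0)

    // BGR frame for display...
    // ...its pixels live in a bitmap supplied by the caller of the DLL
    AVFrame *x_bgr_frame;
    SwsContext *x_bgr_ctx;

    AVFormatContext *ofcx;       // output (recording) context
    AVStream *ost;               // output stream
    int rec_started;             // recording has started (after the first keyframe)
    mt_obj rec_cs;               // protects the recording state
    int64_t pts[2];              // pts of frame[0] / frame[1]
    int continue_on_error;       // keep reading after a read error
    int disable_decode;          // record only, do not decode
    void (*cb_fn)(int cb_param); // called when a new frame has been decoded
    int cb_param;                // argument for cb_fn
    ms_rec_ctx_t *owner;         // shared recording context (several cameras in one file)
    int out_stream;              // index of this stream in the output file
    int frames_count;            // number of decoded frames
    DWORD base_ms;               // start time of the current blocking FFMPEG call
    DWORD timeout_ms;            // timeout for blocking FFMPEG calls
    HANDLE thread_h;             // reading thread
    int terminated;              // request to stop the reading thread
};
```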
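The pair `frame[2]` / `frame_idx` is a two-slot exchange. The decoder always writes into `frame[frame_idx]`. Readers copy `frame[!frame_idx]` under the context lock, and flipping the index under that same lock publishes a finished frame.

The portable sketch below models that idea with `std::mutex`, using a `std::vector<int>` as a stand-in for a decoded picture. The class name `FrameSlots` is hypothetical.

```cpp
#include <array>
#include <mutex>
#include <vector>

// Hypothetical two-slot frame exchange modelled on ctx_t::frame[2]/frame_idx.
// Only the decoder thread writes write_idx_, and only under the mutex.
class FrameSlots
{
public:
    // Decoder side: the slot to decode into (readers never touch it).
    std::vector<int>& write_slot() { return slots_[write_idx_]; }

    // Decoder side: publish the slot once the frame is complete.
    void publish()
    {
        std::lock_guard<std::mutex> lock(mutex_);
        write_idx_ = !write_idx_;
        ++frames_;
    }

    // Reader side: copy the last complete frame; false if there is none yet.
    bool read(std::vector<int>& out)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (frames_ == 0) return false;
        out = slots_[!write_idx_];
        return true;
    }

private:
    std::array<std::vector<int>, 2> slots_;
    int write_idx_ = 0;
    long frames_ = 0;
    std::mutex mutex_;
};
```

A frame being decoded is never visible to readers until `publish()` is called, so a reader always sees a complete picture.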

The interrupt callback for blocking FFMPEG calls:

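```cpp
static int interrupt_cb(void *_ctx)
{
    ctx_t *ctx = (ctx_t *)_ctx;
    // A non-zero result makes FFMPEG abort the blocking call. The unsigned
    // subtraction stays correct when GetTickCount wraps around.
    if (GetTickCount() - ctx->base_ms > ctx->timeout_ms) return 1;
    return 0;
}
```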
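`GetTickCount()` returns a 32-bit millisecond counter that wraps around after about 49.7 days. Because both operands are unsigned 32-bit values, `now - base` still gives the true elapsed time across the wrap.

The portable sketch below isolates that check. `Watchdog` is a hypothetical name, and the tick values are passed in explicitly instead of calling `GetTickCount`.

```cpp
#include <cstdint>

// Hypothetical watchdog modelled on interrupt_cb: arm() is called before each
// blocking call, expired() is what the interrupt callback would return.
struct Watchdog
{
    std::uint32_t base_ms = 0;
    std::uint32_t timeout_ms = 0;

    void arm(std::uint32_t now_ms) { base_ms = now_ms; }

    // 1 = abort (the value an FFmpeg interrupt callback returns to stop), 0 = continue.
    int expired(std::uint32_t now_ms) const
    {
        // Unsigned subtraction: correct even if the counter wrapped after arm()
        return static_cast<std::uint32_t>(now_ms - base_ms) > timeout_ms ? 1 : 0;
    }
};
```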

Installing the callback before the blocking FFMPEG calls (avformat_open_input / avformat_find_stream_info / av_read_frame / avformat_close_input):

```cpp
format_ctx = avformat_alloc_context();
if (!format_ctx)
{...}
format_ctx->interrupt_callback.callback = interrupt_cb;
format_ctx->interrupt_callback.opaque = this;

AVDictionary *opts = 0;
if (tcp_flag) av_dict_set(&opts, "rtsp_transport", "tcp", 0); // RTSP over TCP

base_ms = GetTickCount(); // start of the blocking call
int ret = avformat_open_input(&format_ctx, src, 0, &opts); // open the stream (may block)
av_dict_free(&opts); // options the demuxer did not use are left here
if (ret != 0)
{...}
```

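Before every blocking call base_ms is reset. As a result, interrupt_cb aborts any call, avformat_open_input included, that takes longer than timeout_ms, for example when an IP camera stops responding. The RTSP transport can be forced to TCP. Otherwise FFMPEG's default transport selection (UDP first) applies.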

```cpp
#include <climits> // INT_MAX

static const AVRational ms_time_base = {1, 1000};

// Writes a packet of the input video stream to the recording, if one is open.
// Used both in record-only mode and after successful decoding.
static void record_packet(ctx_t *ctx, AVPacket *packet)
{
    ctx->rec_cs.lock();               // protects the recording state
    if (ctx->owner) lock(ctx->owner); // shared multi-camera recording
    if (ctx->ofcx)
    {
        // Start writing from a keyframe so that the file is decodable from the start
        if (!ctx->rec_started && (packet->flags & AV_PKT_FLAG_KEY))
            ctx->rec_started = 1;
        if (ctx->rec_started)
        {
            const AVRational in_tb = ctx->format_ctx->streams[ctx->stream]->time_base;
            const AVRational out_tb = ctx->ost->time_base;
            int64_t pts = packet->pts;
            if (ctx->owner) // several cameras in one file: use the common clock
            {
                LONGLONG start;
                get_start(ctx->owner, &start);                     // common start time, us
                LONGLONG dt = (get_microseconds() - start) / 1000; // ms since the start
                pts = av_rescale_q(dt, ms_time_base, in_tb);       // ms -> input time base
            }
            // Keep the original offset between dts and pts
            int64_t dts = packet->dts;
            if (dts != AV_NOPTS_VALUE && packet->pts != AV_NOPTS_VALUE)
                dts = pts + (packet->dts - packet->pts);
            packet->stream_index = ctx->out_stream; // stream index in the output file
            // Input -> output time base (AV_NOPTS_VALUE must not be rescaled)
            packet->pts = pts == AV_NOPTS_VALUE ? AV_NOPTS_VALUE : av_rescale_q(pts, in_tb, out_tb);
            packet->dts = dts == AV_NOPTS_VALUE ? AV_NOPTS_VALUE : av_rescale_q(dts, in_tb, out_tb);
            packet->duration = (int)av_rescale_q(packet->duration, in_tb, out_tb);
            packet->pos = -1; // position in the output file is unknown
            av_interleaved_write_frame(ctx->ofcx, packet);
            packet->stream_index = ctx->stream; // restore the input stream index
        }
    }
    if (ctx->owner) unlock(ctx->owner);
    ctx->rec_cs.unlock();
}

...

// Body of the reading thread
AVPacket packet;
int finished;
while (!ctx->terminated)
{
    // A blank packet keeps av_free_packet safe even if av_read_frame fails
    av_init_packet(&packet);
    packet.data = 0;
    packet.size = 0;

    ctx->base_ms = GetTickCount(); // start of the blocking call
    if (av_read_frame(ctx->format_ctx, &packet) != 0)
    {
        // Read error or timeout
        if (!ctx->continue_on_error)
        {
            ctx->terminated = 1;
            break; // instead of ExitThread, so that code after the loop still runs
        }
    }
    else if (packet.stream_index == ctx->stream)
    {
        int64_t original_pts = packet.pts; // before any rescaling for recording
        if (ctx->disable_decode) // record only, no decoding
        {
            record_packet(ctx, &packet);
        }
        // Otherwise decode, and record only the packets that decoded successfully
        else if (avcodec_decode_video2(ctx->codec_ctx, ctx->frame[ctx->frame_idx], &finished, &packet) > 0)
        {
            record_packet(ctx, &packet);
            if (finished) // a complete frame has been decoded
            {
                ctx->lock(); // readers access frame[!frame_idx] under this lock
                ctx->pts[ctx->frame_idx] = original_pts;
                ctx->frame_idx = !ctx->frame_idx; // publish the new frame
                // Tell the application that a new frame is available
                if (ctx->cb_fn) ctx->cb_fn(ctx->cb_param);
                ctx->unlock();
                // Wrap the counter to 1, not 0 (0 means "no frames yet")
                if (ctx->frames_count == INT_MAX) ctx->frames_count = 1;
                else ctx->frames_count++;
            }
        }
    }
    av_free_packet(&packet);
}
```
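Every timestamp in the loop moves between time bases. A value `a` in units of `from` becomes `a * from.num * to.den / (from.den * to.num)` in units of `to`, rounded to the nearest integer, which is what `av_rescale_q` computes. The original expression `dt * time_base.den / 1000` gives the same result only when `time_base.num == 1`.

The portable sketch below uses hypothetical names (`Rational`, `rescale`, `wallclock_pts`). It handles non-negative values only and uses plain int64 arithmetic, so unlike the real `av_rescale_q` it can overflow for very large inputs.

```cpp
#include <cstdint>

// A time base such as {1, 90000} (the 90 kHz clock common for RTSP video).
struct Rational { std::int64_t num; std::int64_t den; };

// Hypothetical stand-in for av_rescale_q for non-negative values:
// converts `a` from units of `from` to units of `to`, rounding to nearest.
inline std::int64_t rescale(std::int64_t a, Rational from, Rational to)
{
    const std::int64_t b = from.num * to.den;
    const std::int64_t c = from.den * to.num;
    return (a * b + c / 2) / c;
}

// Milliseconds since a shared start time, expressed in the stream time base,
// as needed when several cameras are recorded into one file.
inline std::int64_t wallclock_pts(std::int64_t elapsed_ms, Rational stream_tb)
{
    return rescale(elapsed_ms, Rational{1, 1000}, stream_tb);
}
```

With a 90 kHz clock, 40 ms corresponds to 3600 ticks and back. With a time base of 1001/30000, 1001 ms correctly maps to 30 ticks. The `den`-only formula would give 30030.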

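The reading loop works in one of two modes:

- record only, without decoding (disable_decode);

- decode for display, recording at the same time if a recording is open.

Decoding a 1280 x 720, 24 fps, 2727 kbps stream loads an Intel Core2 Duo E8300 (2.83 GHz) to about 17%.

In both modes the IP camera stream is recorded without re-encoding: packets are copied into the output file, only their timestamps are rescaled.

Recordings are written in the VOB container. An MP4 file whose recording was cut short cannot be opened at all ("moov atom not found").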

```cpp
AVOutputFormat *ofmt = av_guess_format("VOB", NULL, NULL); // VOB (MPEG-PS) container
if (!ofmt)
{...}
ofcx = avformat_alloc_context();
if (!ofcx)
{...}
ofcx->oformat = ofmt;
```
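Both recording paths in the reading loop apply the same rule: nothing is written until the first keyframe arrives, so the file starts with a frame that can be decoded on its own. The rule is small enough to isolate.

In the hypothetical sketch below, `Packet`, `RecordGate` and `record` are illustration names, and a packet is reduced to a keyframe flag and an id.

```cpp
#include <vector>

// Reduced packet: just what the gate needs.
struct Packet { bool key; int id; };

// Hypothetical gate mirroring ctx_t::rec_started: drops packets until the
// first keyframe, then lets everything through.
class RecordGate
{
public:
    bool accept(const Packet& p)
    {
        if (!started_ && p.key) started_ = true;
        return started_;
    }
    void reset() { started_ = false; } // e.g. when a new file is opened

private:
    bool started_ = false;
};

// Returns the ids of the packets that would end up in the file.
inline std::vector<int> record(const std::vector<Packet>& in)
{
    RecordGate gate;
    std::vector<int> written;
    for (const Packet& p : in)
        if (gate.accept(p)) written.push_back(p.id);
    return written;
}
```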

```cpp
// Frees the BGR conversion state (frame and scaler)
static void free_bgr(ctx_t *ctx)
{
    sws_freeContext(ctx->x_bgr_ctx);
    av_frame_free(&ctx->x_bgr_frame); // also resets the pointer to 0
    ctx->x_bgr_ctx = 0;
}

// Copies the last decoded frame into a caller-supplied 32-bit bottom-up bitmap
// of w x h pixels, applying brightness/contrast/saturation (br, co, sa, percent).
// Takes no lock (the _NB_ variant); see ffmpeg_get_bmp.
int ffmpeg_get_bmp_NB_(int _ctx, void *bmp_bits, int w, int h, int br, int co, int sa, void *pts)
{
    ctx_t *ctx = (ctx_t *)_ctx;
    if (!ctx || !ctx->frames_count) return 0; // no context or no frame yet

    // (Re)create the BGR frame and the scaler if the output size has changed
    if (!ctx->x_bgr_frame || ctx->x_bgr_frame->width != w || ctx->x_bgr_frame->height != h)
    {
        free_bgr(ctx);
        ctx->x_bgr_frame = av_frame_alloc();
        if (!ctx->x_bgr_frame) return 0;
        ctx->x_bgr_frame->width = w;
        ctx->x_bgr_frame->height = h;
        // Only the line sizes are needed: the pixels will point into bmp_bits
        if (avpicture_fill((AVPicture *)ctx->x_bgr_frame, 0, PIX_FMT_RGB32, w, h) < 0)
        {
            free_bgr(ctx);
            return 0;
        }
        // Scaler from the decoder's format and size to RGB32, w x h
        ctx->x_bgr_ctx = sws_getContext(ctx->codec_ctx->width, ctx->codec_ctx->height, ctx->codec_ctx->pix_fmt,
                                        w, h, PIX_FMT_RGB32, SWS_BICUBIC, 0, 0, 0);
        if (!ctx->x_bgr_ctx)
        {
            free_bgr(ctx);
            return 0;
        }
    }

    // Point the BGR frame at the bitmap. DIBs are stored bottom-up, so start at
    // the last row and use a negative stride to flip the image vertically.
    ctx->x_bgr_frame->data[0] = (uint8_t *)bmp_bits;
    ctx->x_bgr_frame->data[0] += ctx->x_bgr_frame->linesize[0] * (h - 1);
    ctx->x_bgr_frame->linesize[0] = -ctx->x_bgr_frame->linesize[0];

    // Brightness, contrast and saturation, 16.16 fixed point
    int *table;
    int *inv_table;
    int brightness, contrast, saturation, srcRange, dstRange;
    sws_getColorspaceDetails(ctx->x_bgr_ctx, &inv_table, &srcRange, &table, &dstRange, &brightness, &contrast, &saturation);
    brightness = (br * 65536 + 50) / 100;         // br = 0: unchanged
    contrast = ((co + 100) * 65536 + 50) / 100;   // co = 0: factor 1.0
    saturation = ((sa + 100) * 65536 + 50) / 100; // sa = 0: factor 1.0
    sws_setColorspaceDetails(ctx->x_bgr_ctx, inv_table, srcRange, table, dstRange, brightness, contrast, saturation);

    // Convert the last complete frame
    sws_scale(ctx->x_bgr_ctx, ctx->frame[!ctx->frame_idx]->data, ctx->frame[!ctx->frame_idx]->linesize, 0,
              ctx->codec_ctx->height, ctx->x_bgr_frame->data, ctx->x_bgr_frame->linesize);

    // Restore the positive stride for the next call
    ctx->x_bgr_frame->linesize[0] = -ctx->x_bgr_frame->linesize[0];

    // Frame timestamp in milliseconds (correct for any stream time base)
    const AVRational ms = {1, 1000};
    *(int64_t *)pts = av_rescale_q(ctx->pts[!ctx->frame_idx], ctx->format_ctx->streams[ctx->stream]->time_base, ms);
    return 1;
}

// Same as ffmpeg_get_bmp_NB_, but holds the context lock during the copy
int ffmpeg_get_bmp(int _ctx, void *bmp_bits, int w, int h, int br, int co, int sa, void *pts)
{
    ctx_t *ctx = (ctx_t *)_ctx;
    if (!ctx || !ctx->frames_count) return 0;
    ctx->lock();
    int res = ffmpeg_get_bmp_NB_(_ctx, bmp_bits, w, h, br, co, sa, pts);
    ctx->unlock();
    return res;
}
```
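A bottom-up DIB stores the bottom image row first in memory. Code that writes rows top-to-bottom, such as `sws_scale`, can fill it without a separate flip: point at the last row and use a negative stride.

The portable sketch below shows the address arithmetic. For brevity it uses one byte per pixel, whereas the real bitmaps use 4-byte BGRA pixels. `write_top_down` is a hypothetical helper.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Writes `rows` (top image row first) into a bottom-up buffer of width x height
// bytes, the same way ffmpeg_get_bmp_NB_ lets sws_scale fill a DIB.
inline void write_top_down(std::vector<std::uint8_t>& dib, int width, int height,
                           const std::vector<std::vector<std::uint8_t>>& rows)
{
    const std::ptrdiff_t stride = width;                        // bytes per row
    std::uint8_t* const last_row = dib.data() + stride * (height - 1);
    const std::ptrdiff_t step = -stride;                        // down the image = up in memory
    for (int y = 0; y < height; ++y)
    {
        std::uint8_t* row = last_row + step * y;                // stays inside the buffer
        for (int x = 0; x < width; ++x)
            row[x] = rows[y][x];
    }
}
```

After writing rows 1, 2, 3 (top to bottom) into a 2 x 3 buffer, the memory holds 3, 3, 2, 2, 1, 1. This is exactly the bottom-up order a DIB expects.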

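The viewing program itself was written in Borland C++ Builder 6.0 Enterprise Suite.

The program can show service information on top of the video, such as the FPS.

Frames are drawn in the WM_PAINT handler, and a separate repaint thread triggers WM_PAINT at the pace of the incoming frames. To set that pace, the average interval between incoming frames is measured with a ring buffer of timestamps: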

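```cpp
// Ring buffer of the last n frame arrival times, used to estimate
// the average interval between incoming frames
class t_buf_t
{
public:
    int n;      // buffer size
    __int64 *t; // arrival times, ms
    int idx;    // next slot to write (the oldest entry once the buffer is full)
    int ttl;    // number of entries written, up to n

    t_buf_t(void): n(0), t(0), idx(0), ttl(0) {}
    virtual ~t_buf_t(void) {delete [] t;}

    void reset_size(int n)
    {
        delete [] t;
        this->n = n;
        t = new __int64[n];
        idx = 0;
        ttl = 0;
    }

    void set_t(__int64 t) // add an arrival time
    {
        this->t[idx] = t;
        idx++;
        if (idx == n) idx = 0;
        if (ttl < n) ttl++;
    }

    __int64 get_dt(void) // average interval; 0 until the buffer is full
    {
        if (ttl != n || n < 2) return 0;
        int idx_2 = idx - 1; // newest entry
        if (idx_2 < 0) idx_2 = n - 1;
        // n timestamps span n - 1 intervals
        return (t[idx_2] - t[idx]) / (n - 1);
    }

private:
    // Owns a raw array: copying would delete it twice
    t_buf_t(const t_buf_t &);
    t_buf_t &operator=(const t_buf_t &);
};
```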
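With n stored timestamps there are n - 1 intervals between them, so the mean interval is (newest - oldest) / (n - 1). Dividing by n, as the original `get_dt` did, underestimates it slightly.

The portable sketch below uses the hypothetical name `ArrivalTimes` and `std::vector` instead of a raw array. It keeps the convention of returning 0 until the buffer has been filled once.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical ring buffer of arrival times (ms) in the spirit of t_buf_t.
class ArrivalTimes
{
public:
    explicit ArrivalTimes(int n) : t_(n > 1 ? n : 2) {}

    void add(std::int64_t t_ms)
    {
        t_[idx_] = t_ms;
        idx_ = (idx_ + 1) % static_cast<int>(t_.size());
        if (count_ < static_cast<int>(t_.size())) ++count_;
    }

    // Mean interval between arrivals; 0 until the buffer is full.
    std::int64_t mean_interval() const
    {
        const int n = static_cast<int>(t_.size());
        if (count_ != n) return 0;
        const std::int64_t newest = t_[(idx_ + n - 1) % n];
        const std::int64_t oldest = t_[idx_]; // next slot to be overwritten
        return (newest - oldest) / (n - 1);
    }

private:
    std::vector<std::int64_t> t_;
    int idx_ = 0;
    int count_ = 0;
};
```

For frames arriving every 40 ms with n = 5, the result is 40 ms. Dividing by n would give 32 ms.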

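The frame buffer. Incoming frames are written into one half of the buffer while the other half is displayed. When the displayed half runs out, the halves are swapped. A buffer size of 1 turns buffering off, and each frame is shown as soon as it arrives. The buffer fields and functions of the main form: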

```cpp
char **form_bmp_bits;    // pixel data of the buffer bitmaps
HBITMAP *form_bmp_h;     // bitmap handles
bool *frame_good;        // true if the bitmap holds a valid frame
int frame_buffer_size;   // buffer size in frames (1 = no buffering)
int frame_buffer_size_2; // size of one half of the buffer (2 * frame_buffer_size)
int in_frames_count_ttl; // frames received so far, up to frame_buffer_size
int in_frame_idx;        // slot for the next incoming frame (in half)
int out_frame_idx;       // frame being displayed (out half)
int in_frames_count;     // frames in the in half
int out_frames_count;    // frames in the out half
int in_base_idx;         // first bitmap of the in half
int out_base_idx;        // first bitmap of the out half
int buffer_w, buffer_h;  // bitmap size, pixels
t_buf_t in_t_buf;        // arrival times of incoming frames
__int64 out_dt;          // display interval, ms
__int64 pts;             // pts of the last received frame, ms
__int64 dpts;            // pts difference between the last two frames, ms
// Events for the unbuffered mode
HANDLE h_a;
HANDLE h_b;
// Number of arrival times averaged to obtain out_dt
int n_param;
// Speed correction added to out_dt, ms
int active_dt_param;

void reset_frame_buffer(void)
{
    in_frames_count_ttl = frame_buffer_size == 1 ? 1 : 0;
    in_frame_idx = 0;
    out_frame_idx = 0;
    in_frames_count = frame_buffer_size == 1 ? 1 : 0;
    out_frames_count = frame_buffer_size == 1 ? 1 : 0;
    in_base_idx = 0;
    out_base_idx = frame_buffer_size == 1 ? 0 : frame_buffer_size;
    // Restart the frame interval measurement
    in_t_buf.reset_size(n_param);
    out_dt = 20; // initial display interval, ms
}

void create_frame_buffer(void)
{
    reset_frame_buffer();
    frame_buffer_size_2 = frame_buffer_size == 1 ? 1 : frame_buffer_size * 2;
    buffer_w = ClientWidth;
    buffer_h = ClientHeight;
    // Two halves of frame_buffer_size_2 bitmaps (a single bitmap without buffering)
    int n = frame_buffer_size == 1 ? 1 : frame_buffer_size * 4;
    form_bmp_bits = new char *[n];
    form_bmp_h = new HBITMAP[n];
    frame_good = new bool[n];
    for (int i = 0; i < n; i++)
    {
        // 32-bit DIB; a positive height means the rows are stored bottom-up
        BITMAPINFOHEADER form_bmi_hdr;
        form_bmi_hdr.biSize = sizeof(BITMAPINFOHEADER);
        form_bmi_hdr.biWidth = buffer_w;
        form_bmi_hdr.biHeight = buffer_h;
        form_bmi_hdr.biPlanes = 1;
        form_bmi_hdr.biBitCount = 32;
        form_bmi_hdr.biCompression = BI_RGB;
        form_bmi_hdr.biSizeImage = form_bmi_hdr.biWidth * form_bmi_hdr.biHeight * 4;
        form_bmi_hdr.biXPelsPerMeter = 0;
        form_bmi_hdr.biYPelsPerMeter = 0;
        form_bmi_hdr.biClrUsed = 0;
        form_bmi_hdr.biClrImportant = 0;
        form_bmp_h[i] = CreateDIBSection(0, (BITMAPINFO *)&form_bmi_hdr, DIB_RGB_COLORS, (void **)&form_bmp_bits[i], 0, 0);
        frame_good[i] = false;
    }
}

void destroy_frame_buffer(void)
{
    int n = frame_buffer_size == 1 ? 1 : frame_buffer_size * 4;
    for (int i = 0; i < n; i++)
        DeleteObject(form_bmp_h[i]);
    delete [] form_bmp_bits;
    delete [] form_bmp_h;
    delete [] frame_good;
}
```
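The buffered mode addresses each bitmap as `base + index`. The two bases alternate between the halves, so swapping the "in" and "out" halves only swaps two integers and no pixels are copied.

The portable sketch below models that indexing. It is hypothetical (`HalfBuffers` is not in the original) and simplified: it caps the incoming count at the half size, whereas the form keeps counting and reuses the last slot.

```cpp
#include <utility>

// Hypothetical model of the frame-buffer indexing: 2 * half bitmaps split into
// an "in" half (being filled) and an "out" half (being displayed).
struct HalfBuffers
{
    int half;          // bitmaps per half (frame_buffer_size_2)
    int in_base = 0;   // first slot of the in half
    int out_base;      // first slot of the out half
    int in_count = 0;  // frames written into the in half
    int out_count = 0; // frames available in the out half
    int out_idx = 0;   // frame currently displayed

    explicit HalfBuffers(int half_size) : half(half_size), out_base(half_size) {}

    // Slot for the next incoming frame; once the half is full its last slot is reused.
    int in_slot() const { return in_base + (in_count < half ? in_count : half - 1); }
    int out_slot() const { return out_base + out_idx; }

    void frame_arrived() { if (in_count < half) ++in_count; }

    // Hand the filled half to the display and start filling the other one.
    void swap_halves()
    {
        std::swap(in_base, out_base);
        out_count = in_count;
        out_idx = 0;
        in_count = 0;
    }
};
```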

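Frames get into the buffer through a callback that the DLL calls from its reading thread whenever a new frame has been decoded: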

```cpp
// Copies the last decoded frame from the DLL into the next free bitmap
bool u_obj_t::get_frame(const bool _NB_, void *pts)
{
    char *bits = form->form_bmp_bits[form->in_frame_idx + form->in_base_idx];
    if (_NB_) // called from cb_fn: the DLL already holds the context lock
        return ffmpeg_get_bmp_NB_(form->ctx, bits, form->buffer_w, form->buffer_h, form->br, form->co, form->sa, pts) != 0;
    return ffmpeg_get_bmp(form->ctx, bits, form->buffer_w, form->buffer_h, form->br, form->co, form->sa, pts) != 0;
}

// Fetches a new frame into the buffer
void u_obj_t::exec(const bool _NB_)
{
    __int64 pts;
    int slot = form->in_frame_idx + form->in_base_idx;
    // If the bitmap exists and a frame was obtained...
    if (form->form_bmp_h[slot] && get_frame(_NB_, &pts))
    {
        if (pts != form->pts) // ...and it is a new frame (the pts changed)
        {
            form->frame_good[slot] = true;
            // pts difference between consecutive frames
            form->dpts = pts - form->pts;
            form->pts = pts;
            // Record the arrival time
            form->in_t_buf.set_t(ffmpeg_get_microseconds() / 1000);
            // Display interval = average arrival interval...
            form->out_dt = form->in_t_buf.get_dt();
            // ...but not longer than 250 ms
            if (form->out_dt > 250) form->out_dt = 250;
            // Next slot of the in half
            form->in_frame_idx++;
            // If the in half is full, keep overwriting its last slot
            if (form->in_frame_idx == form->frame_buffer_size_2) form->in_frame_idx--;
            form->in_frames_count++;
            // Frames received so far, up to the buffer size
            if (form->in_frames_count_ttl != form->frame_buffer_size) form->in_frames_count_ttl++;
        }
    }
    else // no frame: the slot is shown as NO SIGNAL
    {
        form->frame_good[slot] = false;
    }
}

// Called by the DLL from its reading thread when a new frame has been decoded
void cb_fn(int cb_param)
{
    Tmain_form *form = (Tmain_form *)cb_param;
    form->u_obj->lock();
    form->u_obj->exec(true); // the DLL holds its lock: use the _NB_ variant
    form->u_obj->unlock();
    if (form->frame_buffer_size == 1) // no buffering: wake the repaint thread
    {
        ResetEvent(form->h_b); // reset event B
        SetEvent(form->h_a);   // signal event A
        ResetEvent(form->h_a); // reset event A
        SetEvent(form->h_b);   // signal event B
    }
}
```
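In the unbuffered mode the callback wakes the repaint thread with pairs of `SetEvent`/`ResetEvent` calls, and the repaint thread waits with a 250 ms timeout. A standard C++ way to express the same "a new frame is ready" signal is a flag guarded by a mutex plus a condition variable. This is a different technique from the original Win32 events.

The sketch below is hypothetical (`FrameReady` is not in the original). The sticky flag ensures that a notification sent before the repaint thread starts waiting is not lost.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical "frame ready" signal, an alternative to the event pairs in cb_fn.
class FrameReady
{
public:
    // Decoder callback side
    void notify()
    {
        {
            std::lock_guard<std::mutex> lock(m_);
            ready_ = true;
        }
        cv_.notify_one();
    }

    // Repaint thread side: waits up to `timeout` (250 ms in the original);
    // returns true if a frame was signalled and consumes the signal.
    bool wait(std::chrono::milliseconds timeout)
    {
        std::unique_lock<std::mutex> lock(m_);
        const bool got = cv_.wait_for(lock, timeout, [this] { return ready_; });
        ready_ = false;
        return got;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    bool ready_ = false;
};
```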

The repaint thread, which triggers WM_PAINT:

```cpp
// Runs in the main (VCL) thread via Synchronize
void __fastcall repaint_thread_t::repaint(void)
{
    form->Repaint(); // triggers WM_PAINT
    if (form->frame_buffer_size == 1) // no buffering: nothing else to do
    {}
    else // buffered mode...
    {
        // Next frame of the out half
        form->out_frame_idx++;
        // Out half exhausted, or in half full: swap the halves
        if (form->out_frame_idx >= form->out_frames_count || form->in_frames_count >= form->frame_buffer_size_2)
        {
            form->u_obj->lock();
            if (form->in_frames_count) // new frames have arrived...
            {
                // ...so the in half becomes the out half
                int n = form->in_frames_count >= form->frame_buffer_size_2 ? form->frame_buffer_size_2 : form->in_frames_count;
                form->out_frame_idx = 0;
                form->out_frames_count = n;
                form->out_base_idx = form->out_base_idx ? 0 : form->frame_buffer_size_2;
                form->in_frame_idx = 0;
                form->in_frames_count = 0;
                form->in_base_idx = form->in_base_idx ? 0 : form->frame_buffer_size_2;
            }
            else // nothing new: keep showing the last frame
            {
                form->out_frame_idx--;
            }
            form->u_obj->unlock();
        }
    }
}

// Repaint thread
void __fastcall repaint_thread_t::Execute(void)
{
    while (!Terminated)
    {
        if (form->frame_buffer_size == 1) // no buffering: repaint on every new frame
        {
            WaitForSingleObject(form->h_a, 250); // wait for event A, at most 250 ms
            Synchronize(repaint);                // WM_PAINT
            WaitForSingleObject(form->h_b, 250); // wait for event B, at most 250 ms
        }
        else // buffered: repaint at the measured frame rate
        {
            // Speed correction, ms per frame. The target is frame_buffer_size
            // buffered frames: with fewer, playback slows down; with more, it speeds up.
            form->active_dt_param = (int)(
                (
                    form->frame_buffer_size -
                    // frames not yet shown: rest of the out half plus the in half
                    (form->out_frames_count - form->out_frame_idx + form->in_frames_count)
                ) * form->out_dt / form->frame_buffer_size);

            LONGLONG t = ffmpeg_get_microseconds() / 1000;
            Synchronize(repaint); // WM_PAINT
            LONGLONG dt = ffmpeg_get_microseconds() / 1000 - t;
            // Wait for the rest of the corrected frame interval,
            // minus the time spent painting
            int delay = (int)(form->out_dt + form->active_dt_param - dt);
            if (delay > 0) Sleep(delay);
        }
    }
}
```
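The buffered branch is a simple proportional controller. The next delay is the measured frame interval, corrected by the shortfall or excess of buffered frames scaled by out_dt / frame_buffer_size, minus the time spent painting.

The portable restatement below uses the hypothetical name `next_delay_ms`. It returns 0 where the original simply skips `Sleep`.

```cpp
// Hypothetical restatement of the pacing rule in repaint_thread_t::Execute.
//   target   - desired number of buffered frames (frame_buffer_size)
//   buffered - frames not yet shown (out_frames_count - out_frame_idx + in_frames_count)
//   out_dt   - mean interval between incoming frames, ms
//   paint_ms - time the repaint itself took, ms
inline int next_delay_ms(int target, int buffered, int out_dt, int paint_ms)
{
    const int correction = (target - buffered) * out_dt / target; // > 0 when the buffer runs low
    const int delay = out_dt + correction - paint_ms;
    return delay > 0 ? delay : 0;
}
```

With a 10-frame target, 40 ms frames and a 5 ms repaint, a full buffer gives 35 ms. A half-empty buffer stretches this to 55 ms so the buffer can refill, and 15 buffered frames shorten it to 15 ms to drain the excess.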

Drawing in the WM_PAINT handler:

```cpp
mem_c = new TCanvas; // canvas for drawing into the off-screen bitmap

...

// Fills the background with black
void Tmain_form::draw_bg_img(TCanvas *c, int w, int h)
{
    c->Brush->Style = bsSolid;
    c->Brush->Color = clBlack;
    c->FillRect(TRect(0, 0, w, h));
}

// Draws "NO SIGNAL" in the middle of the image
void Tmain_form::draw_no_signal_img(TCanvas *c, int w, int h)
{
    AnsiString s = "NO SIGNAL";
    c->Brush->Style = bsClear;
    c->Font->Color = clWhite;
    c->Font->Size = 24;
    c->Font->Style = TFontStyles() << fsBold;
    c->TextOutA(w / 2 - c->TextWidth(s) / 2, h / 2 - c->TextHeight(s) / 2, s);
}

...

// WM_PAINT handler
void __fastcall Tmain_form::WMPaint(TWMPaint& Message)
{
    HBITMAP frame_h = form_bmp_h[out_frame_idx + out_base_idx];
    if (frame_h) // the bitmap exists
    {
        PAINTSTRUCT ps;
        HDC paint_hdc = BeginPaint(Handle, &ps);
        HDC hdc_1 = CreateCompatibleDC(paint_hdc); // context 1: off-screen composition
        HDC hdc_2 = CreateCompatibleDC(paint_hdc); // context 2: the frame bitmap
        mem_c->Handle = hdc_1; // the canvas draws into context 1
        HBITMAP h_1 = CreateCompatibleBitmap(paint_hdc, buffer_w, buffer_h); // off-screen BMP
        HBITMAP old_h_1 = (HBITMAP)SelectObject(hdc_1, h_1); // select it into context 1
        // Copy the current frame (selected into context 2) into the off-screen BMP
        HBITMAP old_h_2 = (HBITMAP)SelectObject(hdc_2, frame_h);
        BitBlt(hdc_1, 0, 0, buffer_w, buffer_h, hdc_2, 0, 0, SRCCOPY);
        // ...
        // Stereo test mode for the left/right window: draw the test image instead
        if (start_form->stereo_test && (start_form->left_form == this || start_form->right_form == this))
        {
            mem_c->CopyMode = cmSrcCopy;
            mem_c->StretchDraw(TRect(0, 0, buffer_w, buffer_h), start_form->left_form == this ? start_form->left_img : start_form->right_img);
        }
        else
        {
            // Not connected: NO SIGNAL
            if (!ffmpeg_get_status(ctx))
            {
                draw_bg_img(mem_c, buffer_w, buffer_h);
                draw_no_signal_img(mem_c, buffer_w, buffer_h);
            }
            // Buffer still filling: BUFFERING
            else if (!out_frames_count)
            {
                draw_bg_img(mem_c, buffer_w, buffer_h);
                draw_buffering_img(mem_c, buffer_w, buffer_h);
            }
            // No valid frame in this slot: NO SIGNAL
            else if (!frame_good[out_frame_idx + out_base_idx])
            {
                draw_bg_img(mem_c, buffer_w, buffer_h);
                draw_no_signal_img(mem_c, buffer_w, buffer_h);
            }
        }
        // Area marking (if enabled)
        if (area_type) draw_area_img(mem_c, buffer_w, buffer_h);
        // Service information (FPS etc.)...
        if (show_ui) draw_info_img(mem_c, buffer_w, buffer_h);
        // Copy the composed image (BMP) to the window in a single operation
        BitBlt(paint_hdc, 0, 0, buffer_w, buffer_h, hdc_1, 0, 0, SRCCOPY);
        // Release resources
        mem_c->Handle = 0; // detach the canvas before its DC is deleted
        SelectObject(hdc_1, old_h_1);
        SelectObject(hdc_2, old_h_2);
        DeleteObject(h_1);
        DeleteDC(hdc_1);
        DeleteDC(hdc_2);
        EndPaint(Handle, &ps);
    }
    else
    {
        // Nothing to draw: validate the window anyway,
        // otherwise Windows keeps generating WM_PAINT
        ValidateRect(Handle, NULL);
    }
}
```

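Everything is first composed in an off-screen bitmap (BMP), and only the finished image is copied to the window with a single BitBlt. Drawing the overlays straight onto the window would make the picture flicker.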

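Stereo video

Stereo video is received from two dedicated IP cameras, each shown in its own window (left and right). A stereo test mode replaces the picture in both windows with test images.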

Viewing stereo video on a smartphone involves:

- VR … for Android;

- iDisplay (…);

- iDisplay (…);

- a USB connection (with ADB …) or Wi-Fi «…» (…);

- a «…» VR ….

Advantages:

- low cost (VR: 0 USD :), iDisplay: 5 USD).

Disadvantages:

- high CPU load: on an Intel Core2 Duo E8300 (2.83 GHz) iDisplay takes about 50% of the CPU (about 25% on an Intel Core2 Quad Q9400 at 2.66 GHz).

Screen recording is split between the main program and the DLL. The capture thread in the main program:

```cpp
void scr_rec_thread_t::main(void) // one iteration of the screen-capture thread
{
    LONGLONG t = ffmpeg_get_microseconds() / 1000;
    HBITMAP old_h = (HBITMAP)SelectObject(scr_bmp_hdc, scr_bmp_h);
    // Copy the whole virtual screen into the DIB
    // (CAPTUREBLT also captures layered windows)
    if (!BitBlt(
            scr_bmp_hdc,         // HDC hdcDest
            0,                   // int nXDest
            0,                   // int nYDest
            scr_w,               // int nWidth
            scr_h,               // int nHeight
            scr_hdc,             // HDC hdcSrc
            scr_x,               // int nXSrc
            scr_y,               // int nYSrc
            CAPTUREBLT | SRCCOPY // DWORD dwRop
        ))
    {} // on failure the previous bitmap contents are recorded
    SelectObject(scr_bmp_hdc, old_h);
    ffmpeg_rec_bmp(scr_rec_ctx, scr_bmp_bits); // encode and write the frame

    /* Limit the FPS: sleep for the rest of the frame period,
       but never less than scr_rec_min_delay ms */
    LONGLONG dt = ffmpeg_get_microseconds() / 1000 - t;
    int ms_per_frame = 1000 / scr_rec_max_fps;
    if (dt < ms_per_frame)
    {
        dt = ms_per_frame - dt;
        if (dt < scr_rec_min_delay) dt = scr_rec_min_delay;
    }
    else
    {
        dt = scr_rec_min_delay;
    }
    Sleep((DWORD)dt);

    // Measure the actual FPS
    frames_count++;
    if (ffmpeg_get_microseconds() / 1000 - start > 1000)
    {
        start = ffmpeg_get_microseconds() / 1000;
        fps = frames_count;
        frames_count = 0;
    }
}

scr_rec_thread_t *scr_rec_thread; // capture thread
int scr_rec_ctx;                  // recording context handle from the DLL

// Virtual screen rectangle (all monitors)
int scr_x;
int scr_y;
int scr_w;
int scr_h;

HDC scr_hdc;        // screen DC
HDC scr_bmp_hdc;    // memory DC for the BMP
char *scr_bmp_bits; // BMP pixel data
HBITMAP scr_bmp_h;  // BMP handle

// Initialisation
void scr_rec_init(void)
{
    // Recording context in the DLL
    scr_rec_ctx = ffmpeg_alloc_rec_ctx();
    // Bounds of the virtual screen
    scr_x = GetSystemMetrics(SM_XVIRTUALSCREEN);
    scr_y = GetSystemMetrics(SM_YVIRTUALSCREEN);
    scr_w = GetSystemMetrics(SM_CXVIRTUALSCREEN);
    scr_h = GetSystemMetrics(SM_CYVIRTUALSCREEN);
    // Screen DC and a compatible memory DC for the BMP
    scr_hdc = GetDC(0);
    scr_bmp_hdc = CreateCompatibleDC(scr_hdc);
    // 32-bit bottom-up DIB the size of the virtual screen
    BITMAPINFOHEADER scr_bmi_hdr;
    scr_bmi_hdr.biSize = sizeof(BITMAPINFOHEADER);
    scr_bmi_hdr.biWidth = scr_w;
    scr_bmi_hdr.biHeight = scr_h;
    scr_bmi_hdr.biPlanes = 1;
    scr_bmi_hdr.biBitCount = 32;
    scr_bmi_hdr.biCompression = BI_RGB;
    scr_bmi_hdr.biSizeImage = scr_bmi_hdr.biWidth * scr_bmi_hdr.biHeight * 4;
    scr_bmi_hdr.biXPelsPerMeter = 0;
    scr_bmi_hdr.biYPelsPerMeter = 0;
    scr_bmi_hdr.biClrUsed = 0;
    scr_bmi_hdr.biClrImportant = 0;
    scr_bmp_h = CreateDIBSection(0, (BITMAPINFO *)&scr_bmi_hdr, DIB_RGB_COLORS, (void **)&scr_bmp_bits, 0, 0);
    scr_rec_thread = new scr_rec_thread_t(); // start the capture thread
}

// Clean-up
void scr_rec_uninit(void)
{
    delete scr_rec_thread;
    DeleteObject(scr_bmp_h);
    DeleteDC(scr_bmp_hdc);
    ReleaseDC(0, scr_hdc); // obtained with GetDC, so ReleaseDC (not DeleteDC)
    ffmpeg_free_ctx(scr_rec_ctx);
}
```
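The capture loop paces itself in two ways. It sleeps for whatever is left of the frame period at `scr_rec_max_fps`, and it always sleeps at least `scr_rec_min_delay` milliseconds so the thread never spins.

The portable restatement below uses the hypothetical name `capture_sleep_ms`.

```cpp
#include <cstdint>

// Hypothetical restatement of the sleep calculation in scr_rec_thread_t::main.
//   work_ms      - time spent capturing and encoding this frame
//   max_fps      - scr_rec_max_fps
//   min_delay_ms - scr_rec_min_delay
inline std::int64_t capture_sleep_ms(std::int64_t work_ms, int max_fps, int min_delay_ms)
{
    const std::int64_t ms_per_frame = 1000 / max_fps;
    std::int64_t dt = work_ms < ms_per_frame ? ms_per_frame - work_ms : min_delay_ms;
    if (dt < min_delay_ms) dt = min_delay_ms;
    return dt;
}
```

At 25 fps the frame period is 40 ms. If a frame took 10 ms to capture and encode, the thread sleeps 30 ms. If it took 38 ms or longer, it still sleeps the minimum delay, so the real frame rate drops below the target instead of starving the rest of the program.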

The recording side in the DLL:

```cpp
// Recording context: encodes RGB32 bitmaps and writes them to a file
class rec_ctx_t:
    public mt_obj
{
public:
    AVFormatContext *ofcx; // output file
    AVStream *ost;         // output video stream
    LONGLONG start;        // recording start time, us
    AVPacket pkt;          // encoded packet
    AVFrame *frame;        // RGB32 source frame
    AVFrame *_frame;       // YUV420P frame passed to the encoder
    /* frame has no buffer of its own: for every call its data pointer
       is set to the pixels of the caller's BMP (see ffmpeg_rec_bmp) */
    uint8_t *_buffer;      // pixel buffer of _frame
    // RGB32 -> YUV420P converter
    SwsContext *ctx;
    // Encoder ID (H.264 by default)
    int codec_id;
    // Encoder preset (speed vs. compression trade-off)
    char *preset;
    // CRF: constant quality factor; lower means better quality and larger files
    // (see the FFMPEG / x264 documentation)
    int crf;

    rec_ctx_t(void):
        ofcx(0),
        ost(0),
        start(0),
        frame(0),
        _frame(0),
        _buffer(0),
        ctx(0),
        codec_id(CODEC_ID_H264),
        preset(strdup("ultrafast")),
        crf(23)
    {
        av_init_packet(&pkt);
    }

    virtual ~rec_ctx_t()
    {
        ffmpeg_stop_rec2((int)this);
        free(preset);
    }

    void clean(void) // release everything and return to the initial state
    {
        sws_freeContext(ctx);
        av_free(_buffer);
        av_frame_free(&frame); // also resets the pointers to 0
        av_frame_free(&_frame);
        if (ost) avcodec_close(ost->codec);
        avformat_free_context(ofcx);
        ofcx = 0;
        ost = 0;
        start = 0;
        av_init_packet(&pkt);
        _buffer = 0;
        ctx = 0;
    }

    int prepare(char *dst, int w, int h, int den, int gop_size) // start recording to dst
    {
        // Output container: VOB
        AVOutputFormat *ofmt = av_guess_format("VOB", NULL, NULL);
        if (!ofmt) { clean(); return 0; }
        ofcx = avformat_alloc_context();
        if (!ofcx) { clean(); return 0; }
        ofcx->oformat = ofmt;

        // Encoder and output stream
        AVCodec *ocodec = avcodec_find_encoder((AVCodecID)codec_id);
        if (!ocodec || !ocodec->pix_fmts || ocodec->pix_fmts[0] == -1) { clean(); return 0; }
        ost = avformat_new_stream(ofcx, ocodec);
        if (!ost) { clean(); return 0; }
        ost->codec->width = w;
        ost->codec->height = h;
        ost->codec->pix_fmt = ocodec->pix_fmts[0]; // the conversion below assumes YUV420P
        ost->codec->time_base.num = 1;
        ost->codec->time_base.den = den;
        ost->time_base.num = 1;
        ost->time_base.den = den;
        ost->codec->gop_size = gop_size;
        if (ofcx->oformat->flags & AVFMT_GLOBALHEADER) ost->codec->flags |= CODEC_FLAG_GLOBAL_HEADER;

        AVDictionary *opts = 0;
        av_dict_set(&opts, "preset", preset, 0); // encoder preset
        char crf_str[16];                        // room for any int
        sprintf(crf_str, "%i", crf);             // CRF as a string
        av_dict_set(&opts, "crf", crf_str, 0);
        int ret = avcodec_open2(ost->codec, ocodec, &opts);
        av_dict_free(&opts); // options the encoder did not use are left here
        if (ret != 0) { clean(); return 0; }

        // Source (RGB32) and destination (YUV420P) frames
        frame = av_frame_alloc();
        _frame = av_frame_alloc();
        if (!frame || !_frame) { clean(); return 0; }
        frame->format = PIX_FMT_RGB32;
        frame->width = w;
        frame->height = h;
        _frame->format = PIX_FMT_YUV420P;
        _frame->width = w;
        _frame->height = h;
        int _buffer_size = avpicture_get_size(PIX_FMT_YUV420P, w, h);
        if (_buffer_size < 0) { clean(); return 0; }
        // Assign the member: a local variable here would leak the buffer
        _buffer = (uint8_t *)av_malloc(_buffer_size * sizeof(uint8_t));
        if (!_buffer) { clean(); return 0; }
        if
        (
            avpicture_fill((AVPicture *)frame, 0, PIX_FMT_RGB32, w, h) < 0 || // line sizes only
            avpicture_fill((AVPicture *)_frame, _buffer, PIX_FMT_YUV420P, w, h) < 0
        )
        { clean(); return 0; }
        ctx = sws_getContext(w, h, PIX_FMT_RGB32, w, h, PIX_FMT_YUV420P, SWS_BICUBIC, 0, 0, 0);
        if (!ctx) { clean(); return 0; }

        // Open the output file
        if (avio_open2(&ofcx->pb, dst, AVIO_FLAG_WRITE, NULL, NULL) < 0) { clean(); return 0; }
        // Write the file header
        if (avformat_write_header(ofcx, NULL) != 0)
        {
            avio_close(ofcx->pb);
            clean();
            return 0;
        }
        // Remember the start time for the frame timestamps
        start = get_microseconds();
        return 1;
    }
};
```

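```cpp
// Starts recording to dst: w x h frames, time base 1/den, a keyframe every gop_size frames
int ffmpeg_start_rec2
(
    int rec_ctx,
    char *dst, int w, int h, int den, int gop_size
)
{
    rec_ctx_t *ctx = (rec_ctx_t *)rec_ctx;
    if (!ctx) return 0;
    ffmpeg_stop_rec2(rec_ctx); // stop a recording that may still be running
    return ctx->prepare(dst, w, h, den, gop_size);
}

// Rescales an encoded packet from the encoder to the stream time base and writes it.
// (The muxer may change the stream time base in avformat_write_header.)
static int write_encoded(rec_ctx_t *ctx)
{
    AVCodecContext *c = ctx->ost->codec;
    if (ctx->pkt.pts != AV_NOPTS_VALUE) ctx->pkt.pts = av_rescale_q(ctx->pkt.pts, c->time_base, ctx->ost->time_base);
    if (ctx->pkt.dts != AV_NOPTS_VALUE) ctx->pkt.dts = av_rescale_q(ctx->pkt.dts, c->time_base, ctx->ost->time_base);
    ctx->pkt.stream_index = ctx->ost->index;
    int res = av_interleaved_write_frame(ctx->ofcx, &ctx->pkt) == 0 ? 1 : 0;
    av_free_packet(&ctx->pkt);
    return res;
}

// Stops recording: drains the encoder, writes the trailer and closes the file
void ffmpeg_stop_rec2(int rec_ctx)
{
    rec_ctx_t *ctx = (rec_ctx_t *)rec_ctx;
    if (!ctx || !ctx->ofcx) return;
    // Flush frames still held inside the encoder (encoders with delayed output)
    if (ctx->ost->codec->codec->capabilities & CODEC_CAP_DELAY)
    {
        for (;;)
        {
            int got_packet = 0;
            av_init_packet(&ctx->pkt);
            ctx->pkt.data = 0;
            ctx->pkt.size = 0;
            if (avcodec_encode_video2(ctx->ost->codec, &ctx->pkt, NULL, &got_packet) < 0 || !got_packet) break;
            write_encoded(ctx);
        }
    }
    av_write_trailer(ctx->ofcx);
    avio_close(ctx->ofcx->pb);
    ctx->clean();
}

// Converts a bottom-up RGB32 bitmap to YUV420P, encodes it and writes it to the file
int ffmpeg_rec_bmp(int rec_ctx, void *bmp_bits)
{
    rec_ctx_t *ctx = (rec_ctx_t *)rec_ctx;
    if (!ctx || !ctx->ofcx) return 0; // not recording
    // Point the RGB32 frame at the bitmap, last row first (negative stride)
    ctx->frame->data[0] = (uint8_t *)bmp_bits;
    ctx->frame->data[0] += ctx->frame->linesize[0] * (ctx->frame->height - 1);
    ctx->frame->linesize[0] = -ctx->frame->linesize[0];
    // RGB32 -> YUV420P
    sws_scale(ctx->ctx, ctx->frame->data, ctx->frame->linesize, 0, ctx->frame->height, ctx->_frame->data, ctx->_frame->linesize);
    // Restore the positive stride
    ctx->frame->linesize[0] = -ctx->frame->linesize[0];
    // Timestamp: wall-clock time since the start, in the encoder time base
    const AVRational ms = {1, 1000};
    LONGLONG dt = (get_microseconds() - ctx->start) / 1000;
    ctx->_frame->pts = av_rescale_q(dt, ms, ctx->ost->codec->time_base);
    // Encode; an empty packet lets the encoder allocate the output buffer
    int got_packet = 0;
    av_init_packet(&ctx->pkt);
    ctx->pkt.data = 0;
    ctx->pkt.size = 0;
    if (avcodec_encode_video2(ctx->ost->codec, &ctx->pkt, ctx->_frame, &got_packet) != 0) return 0;
    // Write the packet if the encoder produced one
    return got_packet ? write_encoded(ctx) : 1;
}
```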
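`prepare()` sizes the YUV420P buffer with `avpicture_get_size`, while the screen arrives as a 32-bit bitmap. The arithmetic behind the two sizes is simple. A tightly packed YUV420P picture has a full-size luma plane plus two chroma planes subsampled 2x in each direction, rounded up for odd sizes. An RGB32 bitmap uses 4 bytes per pixel.

The helpers below (`yuv420p_size`, `rgb32_size`) are hypothetical. For tightly packed planes, the first should agree with what `avpicture_get_size(PIX_FMT_YUV420P, w, h)` reports.

```cpp
#include <cstdint>

// Bytes in one tightly packed YUV420P picture: Y plane + U and V planes at
// half resolution in both directions (rounded up for odd sizes).
inline std::int64_t yuv420p_size(int w, int h)
{
    const std::int64_t cw = (w + 1) / 2;
    const std::int64_t ch = (h + 1) / 2;
    return static_cast<std::int64_t>(w) * h + 2 * cw * ch;
}

// Bytes in a 32-bit (BGRA) bitmap of the same size.
inline std::int64_t rgb32_size(int w, int h)
{
    return static_cast<std::int64_t>(w) * h * 4;
}
```

For a 1280 x 1024 screen, each captured RGB32 frame is 5,242,880 bytes and each converted YUV420P frame is 1,966,080 bytes. The color conversion alone shrinks every frame by a factor of about 2.7 before it reaches the encoder.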

Recording a 1280 x 1024 screen at 25 fps loads an Intel Core2 Duo E8300 (2.83 GHz) to about 30%.

Source: https://habr.com/ru/post/277955/
