Using convolutional networks for search, segmentation, and classification

Recently, ZlodeiBaal published the article “Neuro-revolution in heads and villages,” which gave an overview of what modern neural networks can do. The most interesting part, in my opinion, is the use of convolutional networks for image segmentation, and that approach is the subject of this article.

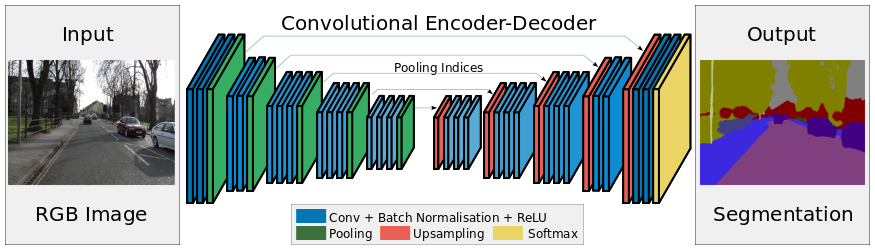

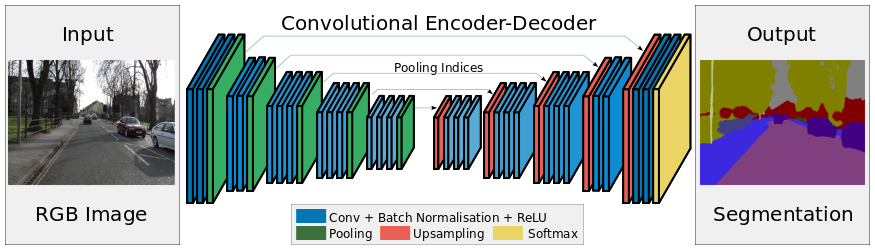

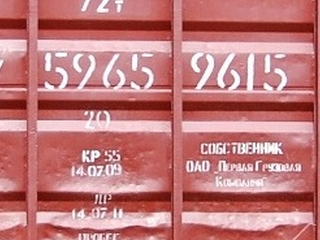

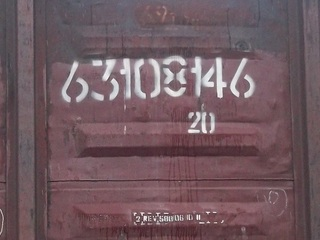

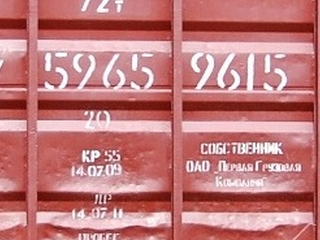

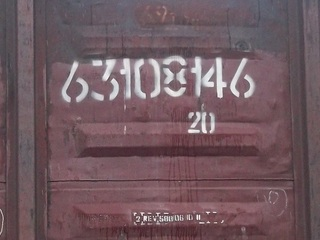

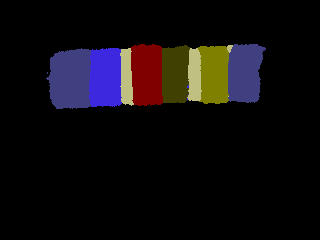

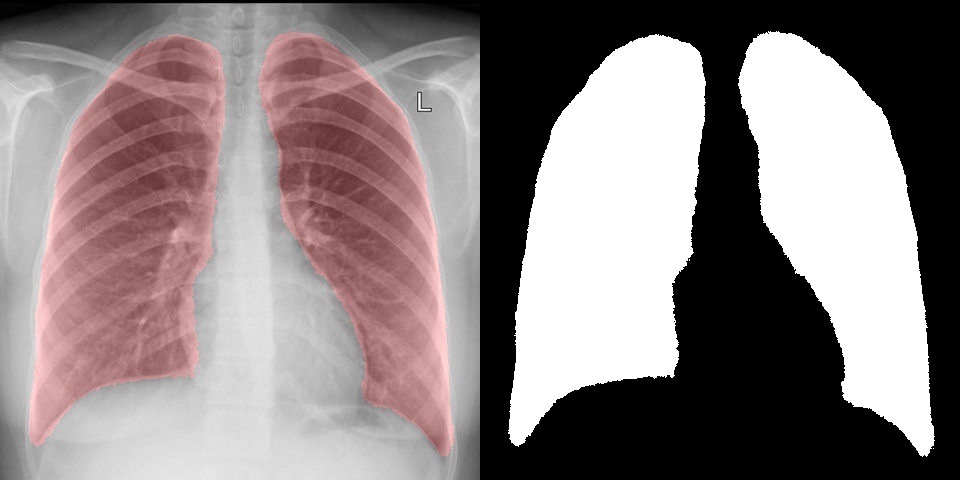

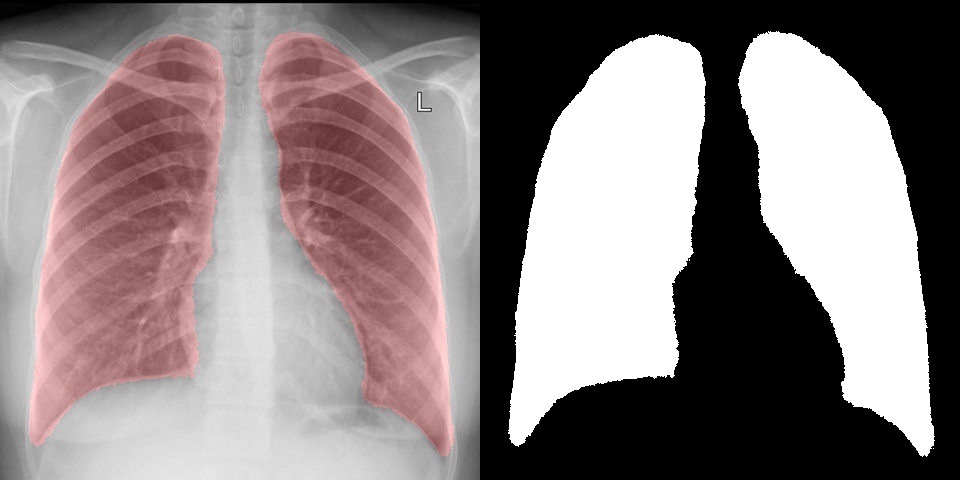

I had long wanted to explore convolutional networks and learn something new. I also had the hardware on hand: several recent Tesla K40 cards with 12 GB of memory, a Tesla C2050, ordinary video cards, a Jetson TK1, and a laptop with a mobile GT525M. The TK1 is the most interesting one to try, because it can be used almost anywhere, even mounted on a lamp post. I started with digit recognition. There is nothing surprising here, since networks have recognized digits for a long time, but new applications that need to read something keep appearing: house numbers, car license plates, wagon numbers, and so on. All would be well, except that digit recognition is only one part of more general tasks.


Convolutional networks come in different kinds. Some can only recognize objects in an image. Some can pick out a rectangle containing an object (RCNN, for example). And some can filter the image and turn it into a kind of logical picture. I liked the last kind most: they are the fastest and produce the most attractive results. For testing I chose one of the most recent networks of this kind, SegNet [1]; details are in the paper [2]. The main idea of the method is that the label fed to the network is not a number but an image, and a new “Upsample” layer is added to increase the spatial size of the layer.
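The “Upsample” step can be illustrated with a small sketch. In the SegNet paper the decoder reuses the positions of the maxima remembered during max pooling; the pure-Python code below shows that idea on a single 4x4 feature map. The function names are hypothetical, plain lists stand in for tensors, and even height and width are assumed. It is not the Caffe layer itself.

```python
def max_pool_2x2(x):
    """2x2 max pooling that also records where each maximum came from."""
    h, w = len(x), len(x[0])
    pooled, indices = [], []
    for i in range(0, h, 2):
        pooled_row, index_row = [], []
        for j in range(0, w, 2):
            # Values and positions inside the current 2x2 window.
            window = [(x[r][c], (r, c)) for r in (i, i + 1) for c in (j, j + 1)]
            value, pos = max(window, key=lambda t: t[0])
            pooled_row.append(value)
            index_row.append(pos)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def upsample_with_indices(pooled, indices, h, w):
    """Put each pooled value back at its recorded position; zeros elsewhere."""
    out = [[0] * w for _ in range(h)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (r, c) in zip(pooled_row, index_row):
            out[r][c] = value
    return out


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 1],
]
pooled, indices = max_pool_2x2(feature_map)
restored = upsample_with_indices(pooled, indices, 4, 4)
```

The restored map is sparse: it has the original size, but only the maxima survive. In the real network the convolutions that follow fill in the rest.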

```protobuf
layer {
  name: "data"
  type: "DenseImageData"
  top: "data"
  top: "label"
  dense_image_data_param {
    source: "/path/train.txt"  # line format: image1.png label1.png
    batch_size: 4
    shuffle: true
  }
}
```

At the end, the upsampled output and the label mask are fed to the loss layer, where each class is assigned its own weight in the loss function.
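To show what per-class weighting does to the loss, here is a minimal pure-Python sketch of a weighted softmax cross-entropy over pixels. The weights 1 and 80 mirror the `class_weighting` values in the article's loss layer. The function name and the simple averaging are my assumptions, and the Caffe layer may normalize differently.

```python
import math


def weighted_softmax_loss(scores, labels, class_weights):
    """Mean per-pixel cross-entropy, each pixel scaled by its class weight.

    scores: one list of raw class scores per pixel
    labels: the true class index of each pixel
    """
    total = 0.0
    for pixel_scores, label in zip(scores, labels):
        # Numerically stable log-softmax of the true class.
        m = max(pixel_scores)
        log_sum = m + math.log(sum(math.exp(s - m) for s in pixel_scores))
        log_prob = pixel_scores[label] - log_sum
        total += -class_weights[label] * log_prob
    return total / len(labels)


# Class 0 = background (weight 1), class 1 = object (weight 80).
weights = [1.0, 80.0]
# Equal scores for both classes, so the predicted probability is 0.5.
scores = [[0.0, 0.0], [0.0, 0.0]]
loss_background = weighted_softmax_loss(scores, [0, 0], weights)
loss_object = weighted_softmax_loss(scores, [1, 1], weights)
```

With the same uncertain prediction, a mistake on an object pixel costs 80 times more than one on a background pixel. This keeps a small object from being swamped by a large background.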

```protobuf
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "conv_1D"
  bottom: "label"
  top: "loss"
  softmax_param { engine: CAFFE }
  loss_param {
    weight_by_label_freqs: true
    class_weighting: 1
    class_weighting: 80
  }
}
```

Recognizing the digits correctly is only part of the number recognition task, and far from the hardest part. First you need to find the number, then locate the digits within it, and only then recognize them. Large errors often appear in the first stages, which makes high recognition accuracy hard to achieve. Dirty and worn numbers are detected poorly and with large errors, the number template fits badly, and many inaccuracies and difficulties result. The number may also be non-standard, with arbitrary spacing, and so on.

For example, wagon numbers are written in many different ways. If you identify the digit boundaries correctly, you can get at least 99.9% accuracy per digit. But what if the digits run together? What if segmentation gives different digits in different parts of the wagon?

Or take the task of finding a car license plate. It can of course be solved with Haar cascades [3] and HOG [4]. But why not try another method and compare, especially when a labeled dataset for training is already available?

The input to the convolutional network is an image with a license plate and a mask in which the rectangle containing the plate is filled with ones and everything else with zeros. After training, we check the network on a test sample: for each input image it outputs a mask of the same size, marking the pixels where it believes a plate is present. The results are shown in the pictures.
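Preparing such a training mask from a labeled rectangle takes only a few lines. A minimal sketch in pure Python follows; the function name and the box convention (left, top, right, bottom, with right and bottom exclusive) are assumptions made for the example.

```python
def rectangle_mask(height, width, box):
    """Return a height x width mask with ones inside box and zeros elsewhere.

    box is (left, top, right, bottom) in pixels; right and bottom are exclusive.
    """
    left, top, right, bottom = box
    mask = [[0] * width for _ in range(height)]
    # Clip the box to the image so partially visible plates still work.
    for y in range(max(top, 0), min(bottom, height)):
        for x in range(max(left, 0), min(right, width)):
            mask[y][x] = 1
    return mask


# Hypothetical 6x8 image with a plate in columns 2..5 of rows 3..4.
plate_mask = rectangle_mask(6, 8, (2, 3, 6, 5))
```

In practice the mask would be saved as a single-channel image next to the photo and listed with it in the training file.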

A look through the test sample shows that the method works quite well and almost never fails; everything depends on the quality of the training and the settings. Since Vasyutka and ZlodeiBaal had a labeled dataset of license plates, the network was trained on it and then checked. The result was no worse than the Haar cascade, and in many situations better. Some shortcomings are worth noting:

- it does not detect tilted plates (there were none in the training set);

- it does not detect plates shot at close range (these were not in the sample either);

- it sometimes misses white plates on pure white cars (most likely also because the training sample was incomplete, although, interestingly, the Haar cascade had the same glitch).

These shortcomings are to be expected: the network does not find what was not in the training set. If the dataset is prepared carefully, the result will be of high quality.
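The article does not say which metric was used to compare the network with the Haar cascade. One common way to put a number on “works quite well” is intersection over union (IoU) between the predicted mask and the labeled mask. The sketch below is illustrative only and is not the author's evaluation procedure.

```python
def mask_iou(predicted, truth):
    """Intersection over union of two binary masks of the same size."""
    intersection = 0
    union = 0
    for predicted_row, truth_row in zip(predicted, truth):
        for p, t in zip(predicted_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly.
    return intersection / union if union else 1.0


truth = [[0, 1, 1, 0],
         [0, 1, 1, 0]]
predicted = [[0, 0, 1, 1],
             [0, 0, 1, 1]]
iou = mask_iou(predicted, truth)  # 2 shared pixels out of 6 covered
```

A missed plate, such as a tilted one that was absent from the training set, would show up as an IoU close to zero for that image.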

The resulting solution applies to a large class of object search problems, not just license plates. So the plate is found; now the digits on it must be located and recognized. This is harder than it seems at first glance: many hypotheses about the digit positions have to be checked, and if the number is non-standard and does not fit the template, things go badly. Car license plates are made to GOST and have a fixed format, but other numbers can be written any way at all, by hand, with varying spacing. Wagon numbers, for example, are written with gaps, and ones take up much less space than other digits.

Convolutional networks come to the rescue again. What if the same network is used for both search and recognition? We will search for and recognize wagon numbers. The network receives an image containing a number and a mask in which the squares with digits are filled with values from 1 to 10 and the background with zero.
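A mask of this kind can be built from labeled digit boxes as sketched below. The article does not say how the digits 0..9 map to the values 1..10. The sketch assumes digit d is stored as d + 1, so that zero stays free for the background. The function name and box convention are also hypothetical.

```python
def digit_mask(height, width, digit_boxes):
    """Build a label mask: 0 for background, digit + 1 inside each digit box.

    digit_boxes: list of (digit, (left, top, right, bottom)),
    with right and bottom exclusive.
    """
    mask = [[0] * width for _ in range(height)]
    for digit, (left, top, right, bottom) in digit_boxes:
        for y in range(top, bottom):
            for x in range(left, right):
                mask[y][x] = digit + 1  # assumed mapping: 0..9 -> 1..10
    return mask


# Hypothetical 4x9 crop containing the digits 5, 0, 9 with gaps between them.
boxes = [(5, (0, 1, 2, 3)), (0, (3, 1, 5, 3)), (9, (6, 1, 9, 3))]
labels = digit_mask(4, 9, boxes)
```

With eleven classes instead of two, the network's output layer and the list of class weights in the loss layer grow accordingly.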

After a fairly short training run on a Tesla K40, the result is ready. To make it easier to read, different digits are painted in different colors. Reading the number off the colors is then not difficult.
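Reading the number off the colors can also be automated. The sketch below scans the predicted mask column by column, treats every run of non-background columns as one digit, and takes the most frequent label in the run. It assumes the label d + 1 stands for the digit d and that neighboring digits are separated by at least one background column. Neither assumption comes from the article.

```python
from collections import Counter


def read_number(mask):
    """Read digits left to right from a label mask (0 = background)."""
    digits = []
    current = []  # labels collected for the digit region being scanned
    width = len(mask[0])
    for x in range(width + 1):
        if x < width:
            column = [row[x] for row in mask if row[x] != 0]
        else:
            column = []  # sentinel column closes a region touching the edge
        if column:
            current.extend(column)
        elif current:
            # A background column ends the region: take the majority label.
            label = Counter(current).most_common(1)[0][0]
            digits.append(str(label - 1))
            current = []
    return "".join(digits)


predicted = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [6, 6, 0, 1, 1, 0, 10, 10, 10],
    [6, 6, 0, 1, 7, 0, 10, 10, 10],  # one mislabeled pixel (7)
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
number = read_number(predicted)
```

The majority vote makes the reading tolerant of a few stray pixels. Digits that touch each other would need a smarter split.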

The result turned out to be very good: even the worst numbers, which had been recognized poorly before, were found, split into digits, and recognized in full. The method proved universal: it lets you not only recognize digits but, more generally, find an object in an image, segment it out, and classify it, even when there are several such objects.

What about something more unusual, interesting, and difficult, such as segmenting medical images? For the test, X-ray images were taken from an open database of CT and X-ray images and used to train lung segmentation; as a result, the region of interest could be identified accurately. As before, the network received the original image and a mask of zeros and ones. On the right is the result the convolutional network produces, and on the left is the same region in the image.

For example, the article [5] uses a lung model for segmentation. The result obtained with convolutional networks is in no way inferior, and in some cases better. Moreover, training a network is much faster than creating and debugging an algorithm.

Overall, this approach showed high performance and flexibility across a wide range of tasks. It can solve all kinds of object search, segmentation, and recognition problems, not just classification:

- locating car license plates;

- recognizing license plates;

- recognizing numbers on wagons, platforms, containers, etc.;

- segmenting and extracting objects: lungs, cats, pedestrians, etc.

The method is fairly fast: processing one image takes 10-15 ms on a Tesla video card and 1.4 seconds on a Jetson TK1. How to run Caffe on the Jetson TK1, and what processing speed it can reach on these tasks, probably deserves a separate article.
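To put these timings in perspective, the short calculation below converts them to throughput. It uses only the figures quoted above and assumes images are processed one at a time, with no batching.

```python
def images_per_second(seconds_per_image):
    """Throughput when images are processed strictly one after another."""
    return 1.0 / seconds_per_image


tesla_fast = images_per_second(0.010)  # 10 ms per image
tesla_slow = images_per_second(0.015)  # 15 ms per image
jetson = images_per_second(1.4)        # 1.4 s per image

# How many times slower the Jetson TK1 is than the Tesla card.
slowdown_low = 1.4 / 0.015
slowdown_high = 1.4 / 0.010
```

That is roughly 67 to 100 images per second on the Tesla against about 0.7 on the Jetson TK1, a gap of about 93 to 140 times.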

P.S.

Training took no more than 12 hours.

License plate dataset: 1200 images.

Wagon dataset: 6000 images.

Lung dataset: 480 images.

1. SegNet

2. A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation (pdf)

3. Haar

4. Hog

5. Lung segmentation in images (pdf)

Source: https://habr.com/ru/post/277781/
