In-depth training in the garage - Return smiles

This is the third article in the series about the definition of a smile on the face.

In-depth training in the garage - Data Brotherhood

In-depth training in the garage - Two networks

In-depth training in the garage - Return smiles

So what about the smiles?

Fuh, well, finally, face detection works, you can learn the smile recognition network. But what to learn? There are no open data sets. And from how long in the previous part I got to, in fact, the training of models, you should have already understood that in in-depth training the data is everything. And they need a lot.

There are open data sets with marked up emotions. But this does not suit me, because I want to understand not facial expressions, but caricature, specially constructed facial expressions, and this is not at all like real emotions.

')

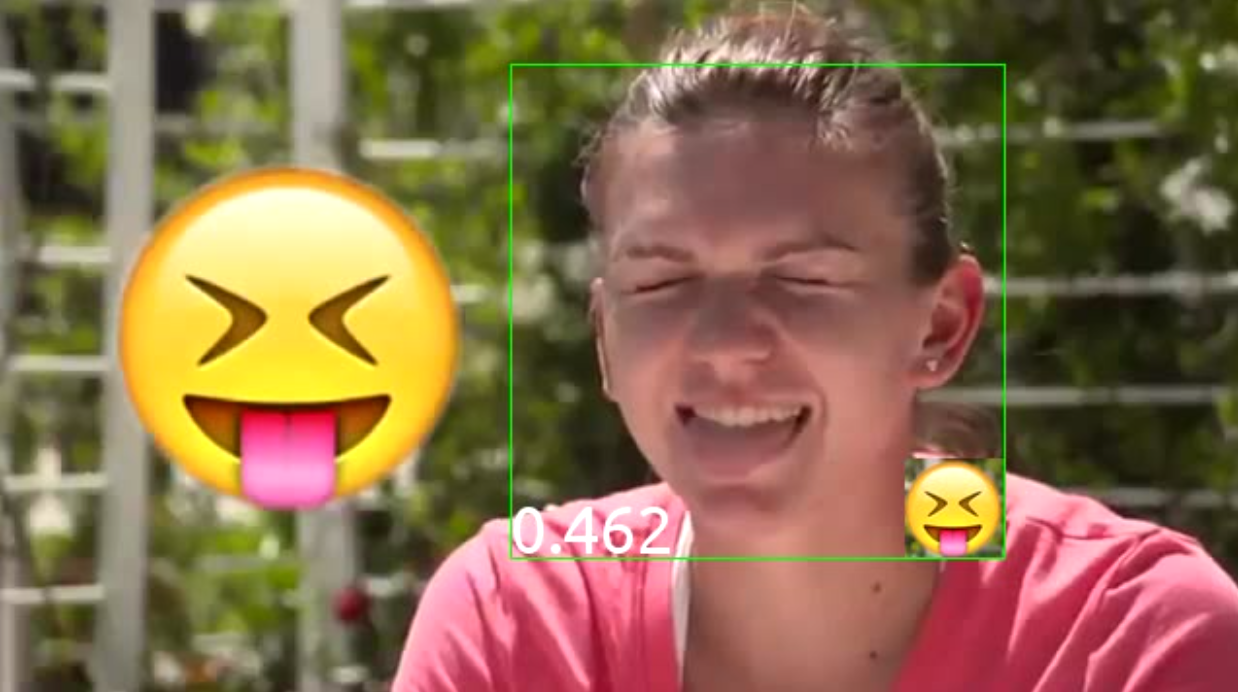

Well, it is necessary to collect your training sample! Photograph yourself? But one person is definitely not enough. After wandering through the pictures of Google and Yandex, I went to YouTube. And here, among the hordes of cats and latspleyev I came across this video:

Hooray! What you need! As a result of a brief search, I dug out a couple of similar videos and started on the markup, which is also quite fun, and gave reason to deal with OpenCV for python, but yes the article is not about that.

The result was such a dataset that I absolutely did not believe in the success of the operation:

- It is very small (about three thousand pictures).

- Only about twenty people.

- Only girls (this was a plus during the markup, but the network does not understand the result of the men at all)

- I marked up the face on almost every frame, so it turned out a dozen or a half of very similar pictures of each pair (girl, smile).

- Quite a lot of classes for such a number of photos: 17.

- There are similar smilies. In fact, as a result, the network periodically confuses them.

But since the work is done, why not try? I took the architecture of a large network from the detection module, replaced the classifier at the top from two classes to seventeen and went to teach.

def build_net_smiles(input): network = lasagne.layers.InputLayer(shape=(None, 3, 48, 48), input_var=input) network = lasagne.layers.dropout(network, p=.1) network = conv(network, num_filters=64, filter_size=(5, 5), nolin=relu) network = batchnorm.batch_norm(network) network = max_pool(network) network = conv(network, num_filters=64, filter_size=(5, 5), nolin=relu) network = batchnorm.batch_norm(network) network = max_pool(network) network = conv(network, num_filters=64, filter_size=(3, 3), nolin=relu) network = batchnorm.batch_norm(network) network = max_pool(network) network = conv(network, num_filters=64, filter_size=(3, 3), nolin=relu) network = batchnorm.batch_norm(network) network = max_pool(network) network = DenseLayer(lasagne.layers.dropout(network, p=.3), num_units=256, nolin=relu) network = batchnorm.batch_norm(network) network = DenseLayer(lasagne.layers.dropout(network, p=.3), num_units=17, nolin=linear) return network And I was right! I will not even show the picture, it turned out terribly. However, having learned from experience, I didn’t despair right away, but with a python I was ready to encode the most varied augmentation of data, trying to at least get closer to the “effectively infinite” data set paradigm, similar to augmentation for detection. I will not give the algorithm, it is exactly the same as it was for detection. I’ll say right away that the efficiency of the supplement is much lower here than for detection, because in fact there are very few unique photos, and all those three thousand are neighboring frames that differ little and, in fact, are similar to this very augmentation.

And again, the failure: on validation girls (i.e., girls allocated entirely to the validation set) is very poorly defined. As I expected, 20 girls are too few for everything to work well, so I decided to slightly weaken the requirements and not allocate whole girls to a validation set, as a result of which the model is expected to retrain for specific people from the training sample and it will work poorly on other people what happened; but do not retrain for specific images (there is a lot of data with augmentation!).

In such conditions it turned out even well:

It can be seen that the split saver converges, and my previous experiments suggest that increasing the amount of data is the most kosher way to fix it, but, alas, I don’t have any more data: they are rather difficult to find and even harder to mark.

System

Well, finally, the whole pipeline. One picture instead of a thousand words:

Here I will note that at the end of the detection of faces, two squares turned out, and not one, because the areas of their intersection are not enough for the algorithm to understand that they belong to the same person. I am still working on this and there is no definite solution. It is necessary to come up with an algorithm for the task, which I did for the demo: I chose one best square to classify the smiley on it.

Also, if you just take the square found by the detector and get a smile on it, it turns out bad: after all, this grid was trained on too little data, and it is very sensitive to changes in inputs. And the detector can very well produce very different results: covering the face, and the center of the face, and sometimes even just a piece of the face. This classifier of smiles reacts very badly, so instead of taking one square, I take 45 people standing next to each other and hold a vote. The number at the bottom left is just what percentage of the maximum this smiley scored. For each of the 45 windows, the classifier gives the distribution by smiles, which I simply vectorially summarize over all windows and divide by the sum over all smiles (which should be equal to 45, since the sum of smiles from each window, being the probability distribution, is equal to 1 ).

smile_probs_sum = T.sum(lasagne.layers.get_output(net_smiles, deterministic=True), axis=0) # sum probabilities over all windows classify_smiles = theano.function([T.tensor4("input")], [T.argmax(smile_probs_sum), T.max(smile_probs_sum)]) # get best class and its score def score(frames): smile_cls, smile_val = classify_smiles(*frames) smile_val = float(smile_val) return smile_cls, smile_val / 17 Show the result already!

As a test of the system, I drove it to the original video, one of those I studied (yes, this is not full of wines, but it was conceived, for a complete wine, you need to collect a lot of data from a large number of people). At the same time, the video was slowed down several times to keep up with the work of the network:

Bonus

As I said above, I have not collected enough data for training to train a full-fledged model that would work on arbitrary people. Therefore, it was decided to make a crowdfunding platform for collecting this data (ha ha, what a great name) !

So, a small service was made on Go called Smielfy v0.1 , which invites you to portray a smiley, shows an example of how it was done before you in a video on YouTube and gives you the opportunity to take a photo and send your favorite photos to the server, where it will be carefully put into a folder that I, if I have enough data to accumulate, can be used to train a cooler model that will already honestly be able to cope with the definition of a smiley on any person and which can be inserted into a (non-commercial) application!

The service uses a self-signed SSL certificate, which is needed only for the browser to allow using the webcam API.

Thanks

Thanks to Zoberg , who made the service Smielfy literally in one day yesterday and who helped me to mark out smiles.

Thanks to GeniyX , which is currently redesigning Smielfy and who was the main developer of DeepEvent v2.0 - a new version of the system for monitoring and visualizing learning of neural networks.

Source: https://habr.com/ru/post/277403/

All Articles