A backdoor in OS X that extends battery life for selected applications

Why 2 GPUs?

Laptops with two GPUs appeared a long time ago. The first MacBook Pro with this technology was released in 2008.

The advantage of two GPUs is flexibility. When you don't need the full power of the graphics system, you use the GPU built into the processor and enjoy long battery life. When you want to have fun, the powerful discrete GPU is at your service. Yes, it drains the battery and makes the fans buzz, but it delivers good FPS in games. So how does switching from one GPU to the other work?

In theory, switching should happen automatically as the load on the graphics card changes. In practice, things are not so simple.

In Windows, the graphics drivers are responsible for switching. They ship with profiles containing preferred settings for many games and applications, and the user can choose when to use the powerful discrete GPU and when to work in silence.


In OS X, switching is more complicated. To begin with, the graphics drivers for OS X are written by Apple itself.

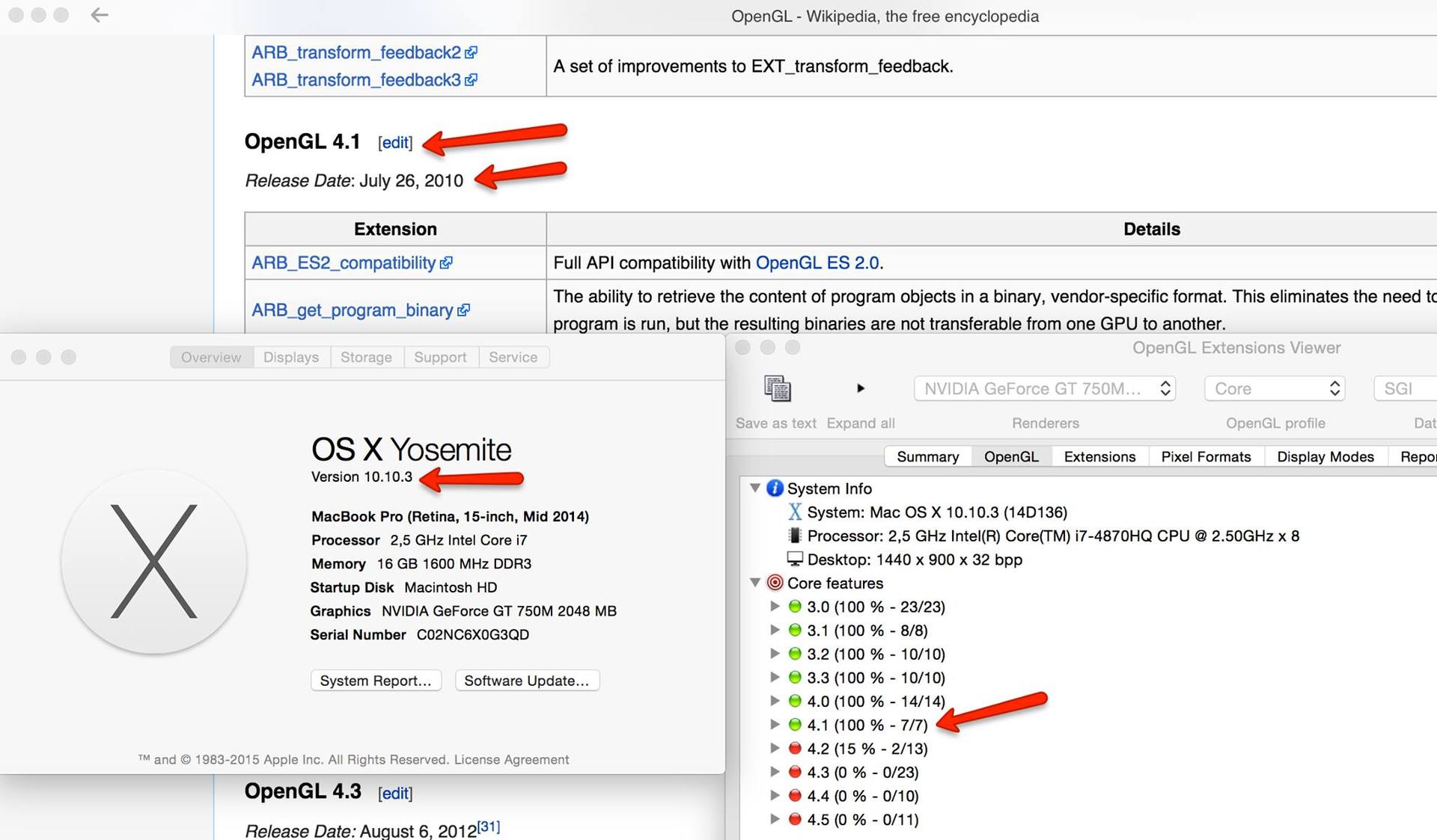

Incidentally, this leads to oddities such as a badly outdated version of OpenGL, and no petitions have managed to change that.

gfxCardStatus

OS X does not even tell you which GPU is currently active, unless you count the hum of the fans.

Fortunately, there is an excellent utility, gfxCardStatus, which shows which GPU is active and even lets you switch between them.

When the discrete GPU turns on, the utility shows which application caused the switch.

Switching problem and official documentation

This shows that OS X does switch GPUs automatically.

For example, Safari normally uses the integrated GPU, but if you open a site that uses WebGL, it switches to the discrete GPU.

However, if you want to write an application that chooses its GPU mode by itself, you are in for an unpleasant surprise.

To begin with, the official documentation does not answer the question of how to switch correctly, and what it does say on the subject is rather strange. It does, however, provide a set of keywords you can use to search for more information.

Chromium solution

Luckily, there is the excellent open-source project Chromium, which has solved this problem. When initializing the OpenGL context, you create a CGLPixelFormatObj with the kCGLPFAAllowOfflineRenderers flag. In this case, the currently active (integrated) GPU is used.

To switch to the discrete GPU, you simply create a CGLPixelFormatObj without that flag; you do not even need an OpenGL context. To return to the integrated GPU, you just release this CGLPixelFormatObj:

```cpp
#include <OpenGL/OpenGL.h>
#include <vector>

CGLContextObj ctx;
CGLPixelFormatObj pix;
GLint npix;
std::vector<CGLPixelFormatAttribute> attribs;

// Use the current GPU; comment out this line to switch to the discrete GPU
attribs.push_back(kCGLPFAAllowOfflineRenderers);
attribs.push_back((CGLPixelFormatAttribute) 0); // zero terminates the attribute list

CGLError err = CGLChoosePixelFormat(&attribs.front(), &pix, &npix);
CGLCreateContext(pix, NULL, &ctx);
CGLSetCurrentContext(ctx);
// ....
```

The backdoor

I wrote a test application, and it almost worked. Why almost? Because releasing the CGLPixelFormatObj did not switch the system back to the integrated GPU, even though Chromium managed to do exactly that!
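The create-and-release cycle from the Chromium section maps naturally onto a small RAII guard. This is a hypothetical sketch (the class name `DiscreteGpuGuard` is made up; it is not the author's test application): the constructor creates a CGLPixelFormatObj from an empty, zero-terminated attribute list, i.e. without kCGLPFAAllowOfflineRenderers, and the destructor releases it with `CGLReleasePixelFormat`. Outside macOS the CGL calls are compiled out, so only the bookkeeping remains and the control flow can be exercised on any platform.

```cpp
#include <cassert>

#ifdef __APPLE__
#include <OpenGL/OpenGL.h>
#endif

// Hypothetical guard: while an instance holds its pixel format, the process
// has a pixel format created WITHOUT kCGLPFAAllowOfflineRenderers, which is
// the request that makes OS X turn on the discrete GPU.
// On non-Apple platforms it only tracks the "held" flag.
class DiscreteGpuGuard {
public:
    DiscreteGpuGuard() {
#ifdef __APPLE__
        // Empty attribute list: just the zero terminator, no offline-renderer flag.
        CGLPixelFormatAttribute attribs[] = {(CGLPixelFormatAttribute)0};
        GLint npix = 0;
        if (CGLChoosePixelFormat(attribs, &pix_, &npix) != kCGLNoError)
            pix_ = nullptr;
        held_ = (pix_ != nullptr);
#else
        held_ = true;
#endif
    }

    ~DiscreteGpuGuard() { release(); }

    DiscreteGpuGuard(const DiscreteGpuGuard&) = delete;
    DiscreteGpuGuard& operator=(const DiscreteGpuGuard&) = delete;

    // Releases the pixel format early; safe to call more than once.
    void release() {
#ifdef __APPLE__
        if (pix_ != nullptr) {
            CGLReleasePixelFormat(pix_);
            pix_ = nullptr;
        }
#endif
        held_ = false;
    }

    bool held() const { return held_; }

private:
#ifdef __APPLE__
    CGLPixelFormatObj pix_ = nullptr;
#endif
    bool held_ = false;
};
```

Keep in mind that `release()` is precisely the step described above that fails to bring back the integrated GPU unless the application's Bundle Id is one OS X treats specially.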

Knowing that the Nvidia drivers for Windows have special settings for Chromium and identify it by the name of its .exe file, I suspected something similar here.

OS X has a more reliable way to identify an application: the Bundle Id. And sure enough, when I changed the Bundle Id of my application to org.chromium.Chromium, lo and behold, everything worked as it should.

The most annoying part is that without the right Bundle Id, you cannot switch back to the integrated GPU. This is not a mere preference; it is a serious obstacle to writing a well-behaved program.

The Bundle Id com.apple.Safari works too. Apple took care of its own.

But com.operasoftware.Opera does not work. As a result, Opera shows the same behavior: once it has grabbed the discrete GPU, it never releases it, even after you close the power-hungry tab. The only way to get back to the integrated GPU is to quit the application.
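To make the observed behavior concrete, here is a toy model of it. It encodes only what the experiments above showed (org.chromium.Chromium and com.apple.Safari are switched back after release, com.operasoftware.Opera is not); it is not Apple's driver logic, and the names `kObservedWhitelist` and `SimulatedApp` are hypothetical. Any Bundle Ids on the real list beyond the two tested ones are unknown.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>

// Toy model of the OBSERVED behavior only; not how OS X implements it.
// Only the Bundle Ids tested in the article are known to be on the list.
const std::set<std::string> kObservedWhitelist = {
    "org.chromium.Chromium",
    "com.apple.Safari",
};

class SimulatedApp {
public:
    explicit SimulatedApp(std::string bundleId) : bundleId_(std::move(bundleId)) {}

    // Models creating a pixel format without kCGLPFAAllowOfflineRenderers.
    void createDiscreteFormat() {
        ++liveFormats_;
        // Observed: an app that is not on the list keeps the discrete GPU
        // until it quits, regardless of later releases.
        if (kObservedWhitelist.count(bundleId_) == 0) pinned_ = true;
    }

    // Models releasing that pixel format.
    void releaseDiscreteFormat() {
        if (liveFormats_ > 0) --liveFormats_;
    }

    bool usesDiscreteGpu() const { return liveFormats_ > 0 || pinned_; }

private:
    std::string bundleId_;
    int liveFormats_ = 0;
    bool pinned_ = false;
};
```

In this model, a Chromium-like app drops back to the integrated GPU as soon as its last discrete pixel format is released, while an Opera-like app stays pinned to the discrete GPU for the rest of its lifetime.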

Test application and video

A test application that demonstrates how the program behaves with different Bundle Ids can be downloaded here.

I also recorded a video reproducing the problem.

Solution

How can the problem be solved? Since there is only one active GPU for the whole system, you can spawn a separate process whenever you need the discrete GPU and terminate that process when you want to return to the integrated GPU.
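A minimal sketch of this approach, assuming POSIX `posix_spawn`. The helper executable is hypothetical (it is not part of the article): it would create a CGLPixelFormatObj without kCGLPFAAllowOfflineRenderers and then block reading its stdin. The parent keeps the write end of a pipe connected to that stdin; destroying the (equally hypothetical) `DiscreteGpuHelper` object closes the pipe, the helper reads EOF and exits, and with the process that requested the discrete GPU gone, OS X is free to switch back. `/bin/cat` reacts to stdin the same way, so it can stand in for the helper when testing the process plumbing.

```cpp
#include <cassert>
#include <cerrno>
#include <signal.h>
#include <spawn.h>
#include <string>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

extern "C" char** environ;

// Hypothetical sketch: a helper process stays alive exactly as long as this
// object does. The helper (an assumed executable) would hold a pixel format
// created WITHOUT kCGLPFAAllowOfflineRenderers and block reading stdin.
class DiscreteGpuHelper {
public:
    explicit DiscreteGpuHelper(const std::string& helperPath) {
        int fds[2];
        if (pipe(fds) != 0) return;

        posix_spawn_file_actions_t actions;
        posix_spawn_file_actions_init(&actions);
        // The helper's stdin becomes the read end of the pipe.
        posix_spawn_file_actions_adddup2(&actions, fds[0], STDIN_FILENO);
        if (fds[0] != STDIN_FILENO)
            posix_spawn_file_actions_addclose(&actions, fds[0]);
        // The helper must not inherit the write end, or it would never see EOF.
        posix_spawn_file_actions_addclose(&actions, fds[1]);

        char* argv[] = {const_cast<char*>(helperPath.c_str()), nullptr};
        int rc = posix_spawn(&pid_, helperPath.c_str(), &actions, nullptr,
                             argv, environ);
        posix_spawn_file_actions_destroy(&actions);
        close(fds[0]);  // the parent keeps only the write end
        if (rc != 0) {
            pid_ = -1;
            close(fds[1]);
            return;
        }
        writeFd_ = fds[1];
    }

    // Closing the pipe makes the helper exit; then reap it.
    ~DiscreteGpuHelper() {
        if (pid_ <= 0) return;
        close(writeFd_);
        int status = 0;
        while (waitpid(pid_, &status, 0) < 0 && errno == EINTR) {
        }
    }

    DiscreteGpuHelper(const DiscreteGpuHelper&) = delete;
    DiscreteGpuHelper& operator=(const DiscreteGpuHelper&) = delete;

    bool running() const { return pid_ > 0; }
    bool alive() const { return pid_ > 0 && kill(pid_, 0) == 0; }
    pid_t pid() const { return pid_; }

private:
    pid_t pid_ = -1;
    int writeFd_ = -1;
};
```

Using a pipe rather than sending a signal means the helper also exits if the main application crashes, because the kernel closes the write end when the parent dies, so the discrete GPU is not left switched on by an orphaned process.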

Source: https://habr.com/ru/post/276787/
