Quadcopter navigation using monocular vision

For many people, computer vision is no longer a mystery. Even so, new algorithms and approaches keep impressing. One such area is monocular vision, and monocular SLAM in particular. This article describes how we solved the task of navigating a quadcopter equipped with a single camera.

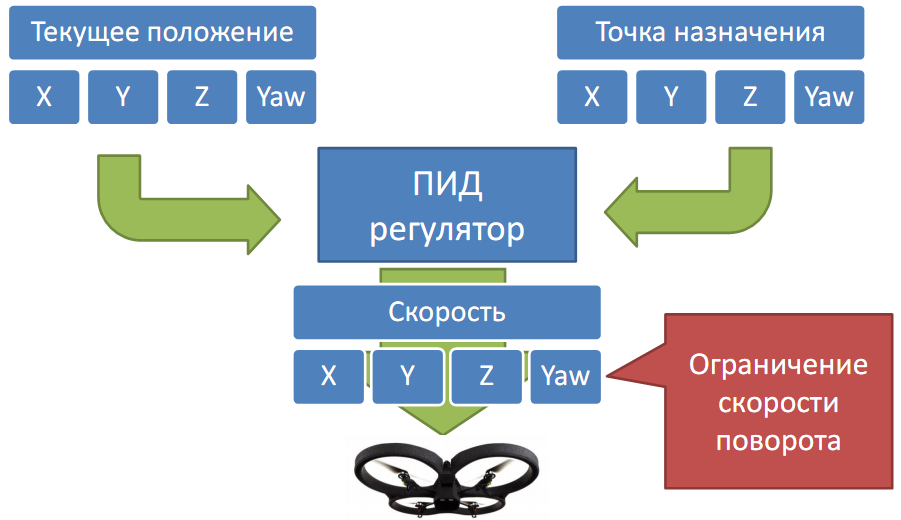

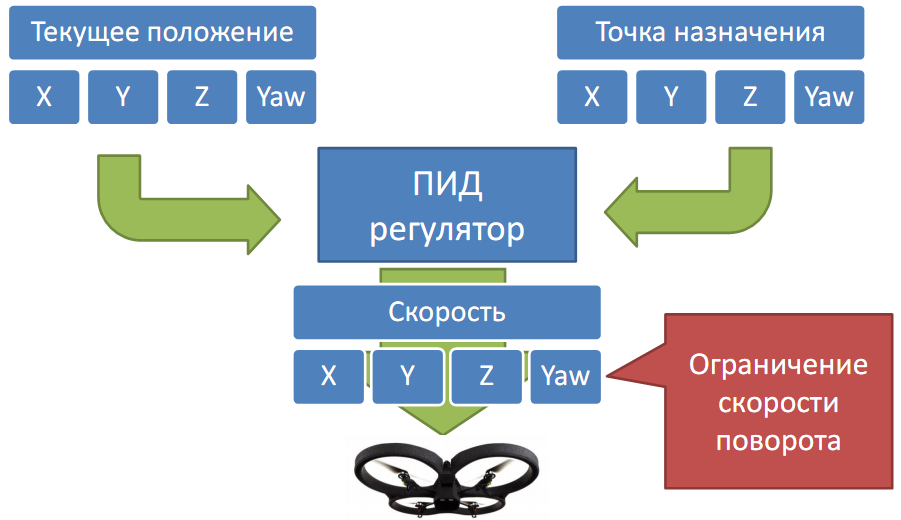

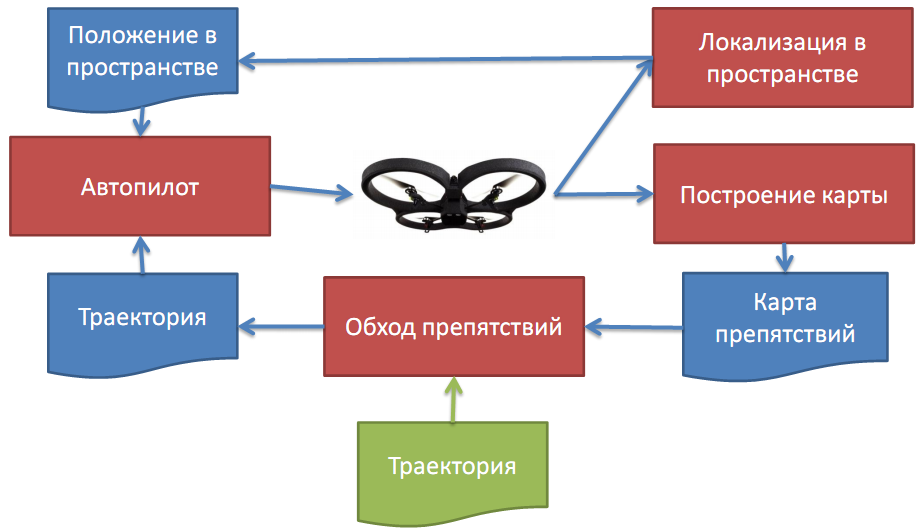

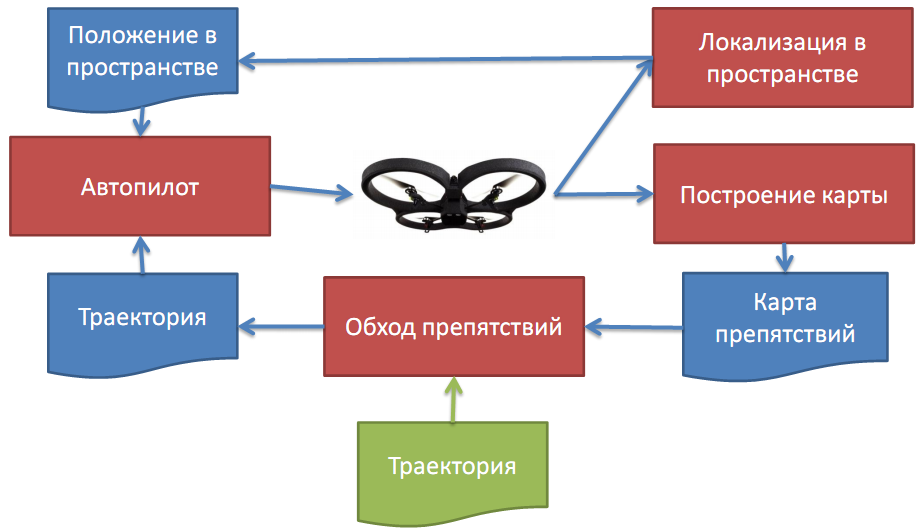

Task

The task is to fly along a trajectory, given as a sequence of positions, in an initially unknown environment that may contain obstacles. To solve it, you need to be able to:

- Build an obstacle map

- Determine the position of the quadcopter relative to the trajectory and obstacles

- Adjust the trajectory so that it flies around obstacles

- Calculate control signals, that is, implement the controller

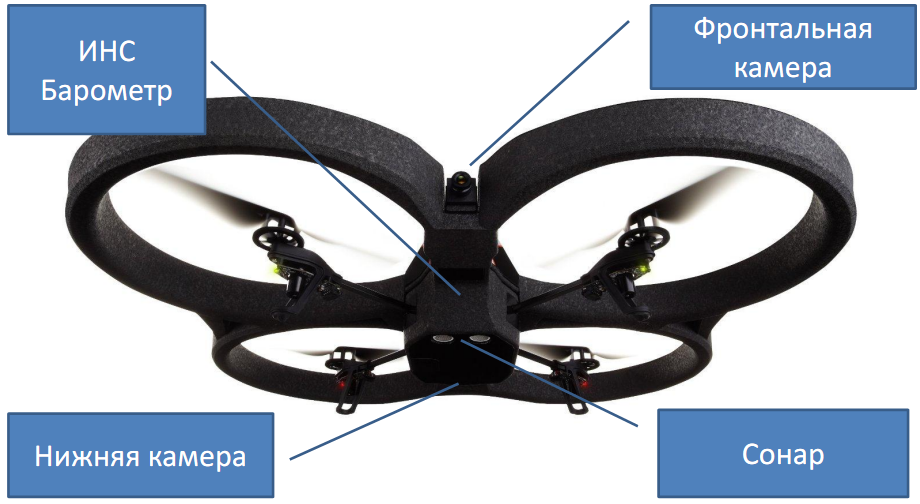

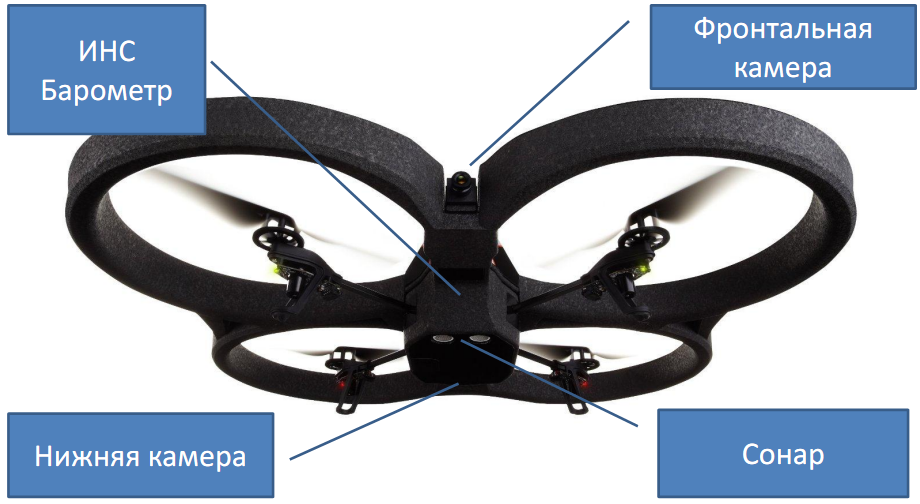

The hardware platform is the Parrot AR.Drone. It is equipped with the following devices of interest:

- Front camera: 640x360, 30 fps, diagonal viewing angle 92 degrees

- Bottom camera: used by the built-in autopilot to compensate for wind and drift in general

- Ultrasonic height sensor: operates within 0.25 - 3 m

- INS (accelerometer + gyroscope + magnetometer) + barometer: all sensors are integrated into a single system using (apparently) sensor fusion

In addition, a unified odometry estimate is produced from the readings of the INS and the bottom camera.

So, to build a map of the environment with the standard AR.Drone hardware, we can by and large use only the front camera. This leads us directly to the problem of monocular vision, namely monocular SLAM.

Large Scale Direct SLAM

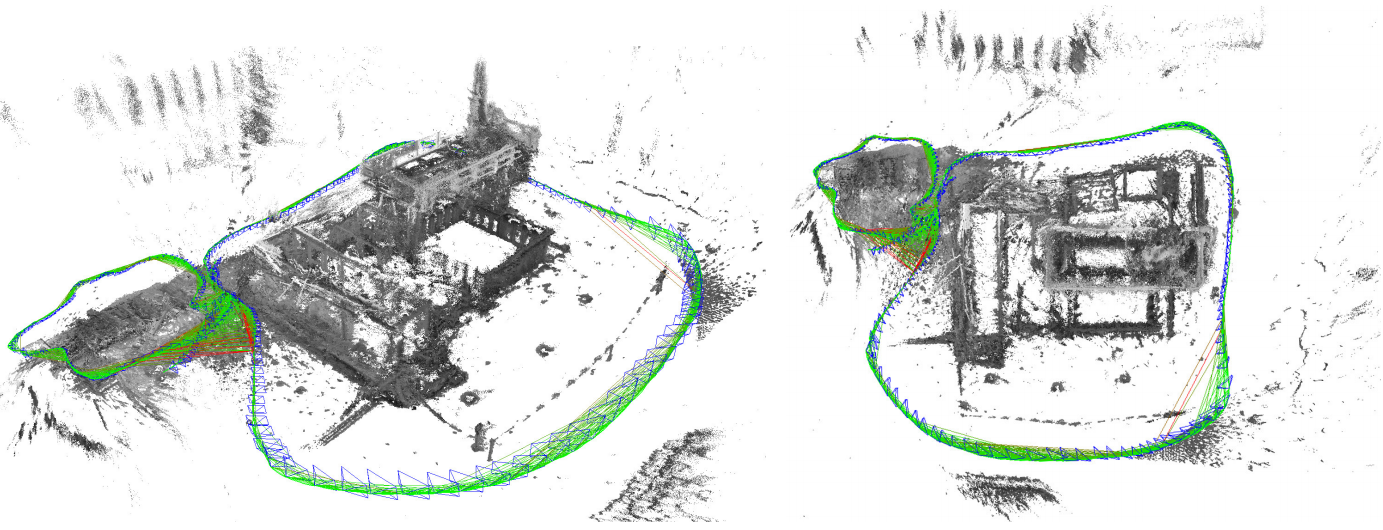

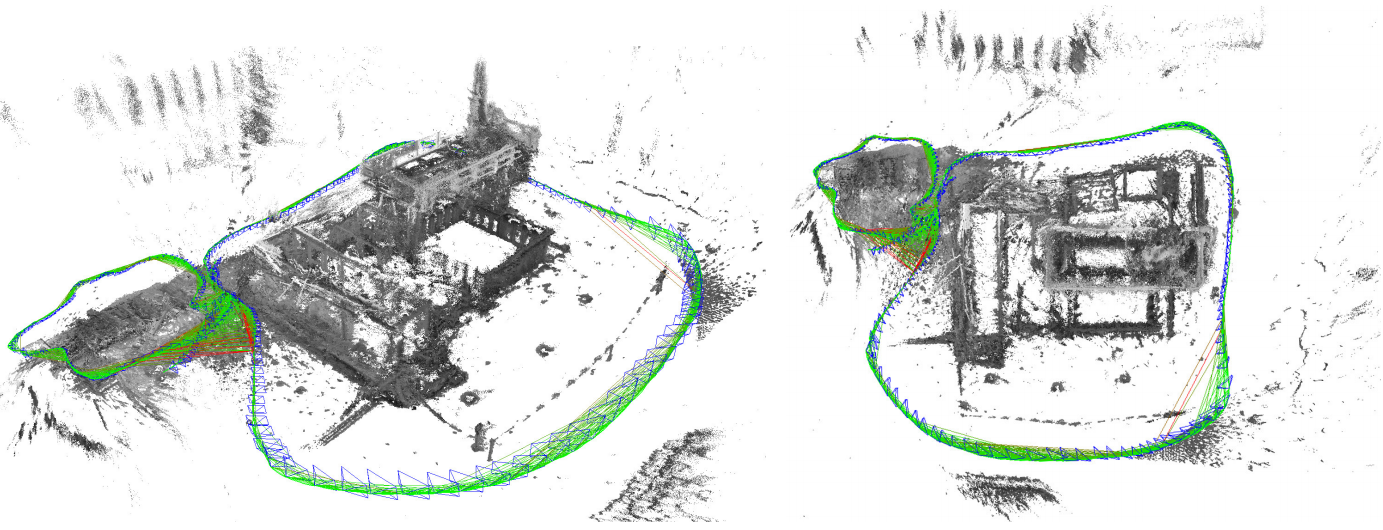

We can safely say that SLAM with a single camera is at the cutting edge of modern technology. The algorithms of this kind that have appeared in the last few years can be counted on the fingers of a careless milling-machine operator: ORB SLAM, LSD (Large Scale Direct) SLAM, SVO (Semi-direct Visual Odometry) and PTAM (Parallel Tracking And Mapping). Even fewer algorithms build more or less dense (semi-dense) maps of the environment. Among the most advanced algorithms, only LSD SLAM produces such maps.

In a nutshell, LSD SLAM works as follows. Three procedures run in parallel: tracking, mapping and map optimization. The tracking component estimates the pose of each new frame relative to the current keyframe. The mapping component processes frames with a known pose, either refining the depth map of the current keyframe or creating a new keyframe. The map optimization component searches for loops in the keyframe graph and corrects scale drift. More details about the algorithm can be found in the developers' paper.

For the algorithm to run stably and efficiently, the following is necessary (these requirements apply to any monocular SLAM algorithm):

- The most accurate camera calibration possible, followed by image rectification. The accuracy of calibration and rectification, as well as the distortion model used, directly affects the quality of the resulting maps.

- A wide camera field of view. For more or less reliable operation, cameras with an FOV of more than 80-90 degrees are needed.

- A sufficient frame rate. With an FOV of 90 degrees, the frame rate should be at least 30 fps (more is better).

- Camera motion must not include pure rotation without translation. Such motion breaks the algorithm.

Points 2 and 3 are linked by a simple consideration: to compute the motion between two adjacent frames, the images in those frames must overlap sufficiently. Accordingly, the faster the camera moves, the larger the field of view or the frame rate must be so that the connection between frames is not lost.
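The relationship between field of view, frame rate and camera speed can be illustrated with a rough back-of-the-envelope sketch. It assumes the camera only yaws and that the shared part of the view shrinks linearly with the angle turned between frames; the function name and the 180 deg/s turn rate are illustrative assumptions, not values from our setup.

```python
def frame_overlap(fov_deg: float, fps: float, yaw_rate_deg_s: float) -> float:
    """Approximate fraction of the view shared by two consecutive frames.

    Simplified model: the camera turns by yaw_rate / fps degrees between
    frames, and that angle is "lost" from a field of view of fov_deg degrees.
    """
    rotation_per_frame = abs(yaw_rate_deg_s) / fps
    return max(0.0, 1.0 - rotation_per_frame / fov_deg)


if __name__ == "__main__":
    # Same turn rate, different frame rates: a higher fps keeps more overlap.
    for fps in (15, 30, 60):
        print(fps, "fps ->", round(frame_overlap(90.0, fps, 180.0), 3))
```

In this model, halving the frame rate has the same effect on overlap as halving the field of view, which is why the two requirements cannot be considered separately.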

If these requirements are met, you can get maps of very good quality, as can be seen in the video from the creators of LSD SLAM:

Impressive, isn't it? However, even with maps of this quality, another problem awaits: no monocular SLAM algorithm can, in principle, estimate the absolute scale of the resulting map, and therefore of the localization. So you have to resort to tricks and find an external data source that either helps determine the size of objects in the map or measures the absolute magnitude of the camera's movements. The first approach is limited only by your imagination: you can place an object of known size in the camera's field of view and compare it with the corresponding part of the map, you can initialize the algorithm in a previously known environment, and so on. The second approach is fairly easy to apply using, for example, altimeter data, which is what we did.

To estimate the scale, we used vertical displacements obtained from two sources: the LSD SLAM algorithm and the AR.Drone altimeter. The ratio of these displacements gives the scale of the map and of the monocular localization. To suppress random disturbances, we passed the resulting scale value through a low-pass filter.
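A minimal sketch of such a scale estimator is shown below. It is an illustration under assumptions rather than our actual code: the class name, the exponential smoothing used as the low-pass filter, and the `alpha` and `min_step_m` values are all hypothetical. The scale is defined here as metres per SLAM unit.

```python
class ScaleEstimator:
    """Estimates metric scale from SLAM and altimeter vertical displacements."""

    def __init__(self, alpha: float = 0.05, min_step_m: float = 0.05):
        self.alpha = alpha            # smoothing factor of the low-pass filter
        self.min_step_m = min_step_m  # ignore altitude changes smaller than this
        self.scale = None             # filtered scale, metres per SLAM unit
        self._ref = None              # (z_slam, z_alt) of the last used sample

    def update(self, z_slam: float, z_alt: float):
        if self._ref is None:
            self._ref = (z_slam, z_alt)
            return self.scale
        d_slam = z_slam - self._ref[0]
        d_alt = z_alt - self._ref[1]
        # Wait until the altimeter reports a displacement large enough to
        # stand out from sensor noise; the reference sample is kept meanwhile.
        if abs(d_alt) < self.min_step_m or d_slam == 0.0:
            return self.scale
        self._ref = (z_slam, z_alt)
        raw = d_alt / d_slam
        if raw <= 0.0:
            return self.scale  # displacements disagree in sign: treat as noise
        if self.scale is None:
            self.scale = raw
        else:
            # First-order low-pass filter (exponential smoothing).
            self.scale += self.alpha * (raw - self.scale)
        return self.scale


if __name__ == "__main__":
    est = ScaleEstimator(alpha=0.5)
    for z_slam, z_alt in [(0.0, 0.0), (0.1, 0.25), (0.2, 0.5), (0.3, 0.75)]:
        est.update(z_slam, z_alt)
    print(est.scale)
```

Multiplying SLAM coordinates by the filtered scale then gives positions in metres.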

Obstacle avoidance and trajectory correction

LSD SLAM stores the environment map as a graph of keyframes with partial depth maps attached to them. By combining all the nodes of the graph, we obtain a map of the known part of the environment in the form of a point cloud. However, this is not yet an obstacle map! To get a dense obstacle map, we used the OctoMap library, which builds an obstacle map in the form of an octree from a point cloud.
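The step from a point cloud to an obstacle map can be illustrated with a much simpler structure than an octree: a flat set of occupied voxels. The sketch below is only a stand-in for the idea and does not use the OctoMap API; the function name, the `min_hits` noise threshold and the sample points are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def occupied_voxels(points: Iterable[Tuple[float, float, float]],
                    resolution: float,
                    min_hits: int = 1) -> Set[Voxel]:
    """Return the voxels that contain at least min_hits points of the cloud."""
    hits = Counter(
        tuple(math.floor(c / resolution) for c in point)
        for point in points
    )
    return {voxel for voxel, count in hits.items() if count >= min_hits}


if __name__ == "__main__":
    cloud = [(0.05, 0.05, 0.05), (0.06, 0.04, 0.01), (1.0, 0.0, 0.0)]
    # With min_hits=2 the single stray point near x = 1.0 is ignored.
    print(occupied_voxels(cloud, resolution=0.1, min_hits=2))
```

An octree stores the same occupancy information hierarchically, which keeps memory use reasonable for large, mostly empty volumes.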

We used the FCL (Flexible Collision Library) + OMPL (Open Motion Planning Library) stack to check for collisions and adjust trajectories. After each map update, the trajectory is checked for collisions with obstacles; if a collision is found, the affected trajectory segment is recomputed by the planner (we used BIT*, but other planners would work too).
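The check that runs after each map update can be sketched as follows. This is a simplified illustration and not the FCL or OMPL API: obstacles are assumed to be a set of integer voxel indices, the vehicle is approximated by a cube of voxels around each sampled point, and all names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Set, Tuple

Voxel = Tuple[int, int, int]
Point = Sequence[float]


def segment_collides(a: Point, b: Point, occupied: Set[Voxel],
                     resolution: float, radius: float) -> bool:
    """Sample the segment a-b and test each sample against occupied voxels."""
    steps = max(1, math.ceil(math.dist(a, b) / (resolution / 2.0)))
    reach = math.ceil(radius / resolution)  # safety margin in voxels
    offsets = range(-reach, reach + 1)
    for i in range(steps + 1):
        t = i / steps
        cx, cy, cz = (math.floor((a[k] + t * (b[k] - a[k])) / resolution)
                      for k in range(3))
        # Conservative check: any occupied voxel in the surrounding cube.
        for dx in offsets:
            for dy in offsets:
                for dz in offsets:
                    if (cx + dx, cy + dy, cz + dz) in occupied:
                        return True
    return False


def colliding_segments(waypoints: Sequence[Point], occupied: Set[Voxel],
                       resolution: float, radius: float) -> List[int]:
    """Indices i of segments waypoints[i] -> waypoints[i + 1] that collide."""
    return [i for i in range(len(waypoints) - 1)
            if segment_collides(waypoints[i], waypoints[i + 1],
                                occupied, resolution, radius)]


if __name__ == "__main__":
    obstacles = {(10, 0, 0)}  # one occupied voxel near x = 1.0 m
    path = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.5, 0.0, 0.0), (1.5, 1.0, 0.0)]
    print(colliding_segments(path, obstacles, resolution=0.1, radius=0.1))
```

Only the segments returned by such a check need to be handed to the planner for recomputation; the rest of the trajectory stays as it was.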

Controller

The controller turned out to be quite simple: it is based on a PID controller, which was enough to follow the trajectory. The only thing we had to add was a limit on the camera's rotation rate to keep SLAM stable.
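A minimal sketch of such a controller for the yaw channel is given below. The gains, the 0.5 rad/s limit and all names are hypothetical placeholders rather than the values we flew with; the point is only to show a PID output being clamped before it is sent to the vehicle.

```python
class PID:
    """Textbook PID controller operating on an error signal."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error: float, dt: float) -> float:
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no derivative term on the very first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def clamp(value: float, limit: float) -> float:
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def yaw_rate_command(pid: PID, yaw_error: float, dt: float,
                     max_yaw_rate: float) -> float:
    """PID output limited so that the camera never turns too fast for SLAM."""
    return clamp(pid.step(yaw_error, dt), max_yaw_rate)


if __name__ == "__main__":
    yaw_pid = PID(kp=1.5, ki=0.0, kd=0.1)  # hypothetical gains
    # A large heading error would request a fast turn; the limit caps it.
    print(yaw_rate_command(yaw_pid, yaw_error=2.0, dt=0.02, max_yaw_rate=0.5))
```

The same PID class can be instantiated once per controlled axis, with the clamp applied only where SLAM stability requires it.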

Platform and general solution scheme

As the platform for the entire solution, we used ROS. It offers all the infrastructure needed for rapid development of parallel components (nodes, in ROS terminology), communication between them, monitoring, dynamic reconfiguration, the excellent Gazebo simulator, and much more that makes it easier to develop serious robotic solutions. However, the stability of some individual components still leaves much to be desired, so it is not yet advisable to use it in production for mission-critical projects.

The overall architecture of the solution looked like this:

Conclusions

Barrel of honey:

- monocular SLAM is entirely feasible and worth trying;

- ROS is a very convenient platform, at least for development and testing; implementing cool robotics projects keeps getting simpler.

A spoonful of tar:

- getting monocular SLAM to work is a very, very tricky business, especially when it comes to calibrating the camera.

References:

The LSD SLAM page on the developer's site: vision.in.tum.de/research/vslam/lsdslam

Open Motion Planning Library: ompl.kavrakilab.org

Flexible Collision Library: github.com/flexible-collision-library/fcl

OctoMap: octomap.github.io

Source: https://habr.com/ru/post/276595/
