Creating a program architecture, or how to design a stool

While writing a small but real and growing project, we learned firsthand how important it is that a program not only works well but is also well organized. Do not assume that a carefully thought-out architecture is needed only for large projects (it is just that in large projects the fatal consequences of having no architecture are obvious). Complexity usually grows much faster than the size of the program, and if you do not plan for it in advance, you soon reach the point where you lose control of it. A proper architecture saves a lot of effort, time and money, and it often determines whether a project survives at all. So even if all you are doing is "building a stool", it still pays to design it first.

To my surprise, the seemingly obvious question "How do you build a good, elegant software architecture?" turned out to have no easy answer. There are many books and articles on design patterns and design principles, such as the SOLID principles (described briefly here, and in detail with examples here, here and here), and on how to write clean code, yet I still felt that something important was missing. It was as if you had been handed many wonderful and useful tools but nobody had explained the main thing: how to "design a stool".

I wanted to understand what the process of creating a program architecture actually involves, which problems it solves and which criteria it relies on, so that the rules and principles stop being mere dogma and their logic and purpose become clear. Then it also becomes clearer which tools are best suited to which situations.


This article is an attempt to answer these questions, at least as a first approximation. I collected the material for myself, but perhaps it will be useful to someone else. The work taught me a lot of new things and also let me look at the seemingly banal basic principles of OOP in a different context and truly appreciate their importance.

There was a lot of information, so only an overview and brief descriptions are given here: enough for an initial idea of the topic and an understanding of where to look further.

Strictly speaking, there is no generally accepted definition of "software architecture". In practice, however, most developers can tell good code from bad. A good architecture is above all a profitable one: it makes developing and maintaining a program simpler and more efficient. A program with a good architecture is easier to extend and change, and also to test, debug and understand. So it is possible to formulate a list of quite reasonable and universal criteria:

System efficiency. First of all, the program must, of course, solve its tasks and perform its functions well under various conditions. This includes characteristics such as reliability, security, performance and the ability to cope with increasing load (scalability).

System flexibility. Any application has to change over time: requirements change and new ones are added. The faster and more conveniently existing functionality can be changed, and the fewer problems and errors a change causes, the more flexible and competitive the system is. So during development, evaluate what you are building with an eye to how you may need to change it later. Ask yourself: "What happens if the current architectural decision turns out to be wrong?" and "How much code will have to change?" Changing one fragment of the system should not affect its other fragments. Where possible, architectural decisions should not be "set in stone", and the consequences of architectural mistakes should be reasonably contained. "A good architecture allows you to DEFER key decisions" (Bob Martin) and minimizes the "cost" of mistakes.

System extensibility. The ability to add new entities and functions to the system without breaking its core structure. At the initial stage it makes sense to build in only the basic, essential functionality (the YAGNI principle: "You ain't gonna need it"), but the architecture should make it easy to add more functionality as needed, so that the most likely changes require the least effort.

The requirement that an architecture be flexible and extensible (that is, capable of change and evolution) is so important that it is formulated as a separate principle, the Open-Closed Principle, the second of the five SOLID principles: software entities (classes, modules, functions, etc.) should be open for extension but closed for modification.

In other words, it should be possible to extend or change the behavior of the system without changing or rewriting its existing parts.

This means the application should be designed so that changing its behavior and adding new functionality is achieved by writing new code (extension), without having to change existing code. New requirements then do not entail modifying the existing logic and can be implemented mainly by extending it. This principle underlies the plugin architecture. The techniques for achieving it are discussed below.
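As an illustration, here is a minimal Python sketch of the Open-Closed Principle in a plugin style. It assumes a hypothetical export feature: the core function `export()` never changes, and each new format is added as a new class that registers itself. The names `Exporter`, `EXPORTERS`, `register` and the formats are invented for this example and do not come from any library.

```python
from abc import ABC, abstractmethod


class Exporter(ABC):
    """Extension point (hypothetical): every export format implements this interface."""

    @abstractmethod
    def export(self, rows: list[dict]) -> str:
        ...


# Registry of plugins, keyed by format name.
EXPORTERS: dict[str, Exporter] = {}


def register(name: str):
    """Class decorator: registers a new format without editing existing code."""
    def wrap(cls):
        EXPORTERS[name] = cls()
        return cls
    return wrap


def export(rows: list[dict], fmt: str) -> str:
    """Core logic, closed for modification: it only looks up a plugin."""
    return EXPORTERS[fmt].export(rows)


@register("csv")
class CsvExporter(Exporter):
    def export(self, rows: list[dict]) -> str:
        lines = [",".join(rows[0])]  # header from the first row's keys
        lines += [",".join(str(v) for v in row.values()) for row in rows]
        return "\n".join(lines)


# Added later as a new class; export() and CsvExporter stay untouched.
@register("tsv")
class TsvExporter(Exporter):
    def export(self, rows: list[dict]) -> str:
        lines = ["\t".join(rows[0])]
        lines += ["\t".join(str(v) for v in row.values()) for row in rows]
        return "\n".join(lines)


rows = [{"id": 1, "name": "stool"}]
print(export(rows, "tsv"))
```

Adding a JSON format, for instance, would mean writing one more registered class rather than editing `export()`.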

Scalable development process. The ability to shorten development time by adding new people to the project. The architecture should allow the development process to be parallelized, so that many people can work on the program at the same time.

Testability. Code that is easier to test contains fewer errors and works more reliably. But tests do more than improve code quality. Many developers conclude that the requirement of "good testability" is also a guiding force that leads naturally to good design, and at the same time one of the most important criteria for judging it: "Use the testability of a class as a 'litmus test' of good design. Even if you do not write a single line of test code, answering this question will in 90% of cases help you understand how 'good' or 'bad' its design is" (Ideal architecture).

There is a whole development methodology built around tests, called Test-Driven Development (TDD).
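A small sketch of what "testable" can mean in practice, assuming a hypothetical `Greeter` module. If the class called `datetime.now()` directly, a test could not control the result; passing the clock in as a parameter lets a test substitute a fixed time. This is one common technique, not the only one.

```python
from datetime import datetime
from typing import Callable


class Greeter:
    """Hypothetical module whose only external dependency, the clock, is injected."""

    def __init__(self, clock: Callable[[], datetime] = datetime.now) -> None:
        self._clock = clock

    def greeting(self) -> str:
        hour = self._clock().hour
        if hour < 12:
            return "Good morning"
        if hour < 18:
            return "Good afternoon"
        return "Good evening"


# Production code uses the real clock; a test passes a fixed one instead,
# so the result no longer depends on when the test happens to run.
print(Greeter(clock=lambda: datetime(2024, 1, 1, 9, 0)).greeting())
```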

Reusability. The system should preferably be designed so that its fragments can be reused in other systems.

Well-structured, readable and understandable code. Maintainability. As a rule, many people work on a program over its lifetime: some leave, new ones arrive. Once written, a program is usually maintained by people who did not take part in its development. So a good architecture should let newcomers understand the system relatively quickly and easily. The project should be well structured, free of duplication, with well-written code and preferably documentation. Wherever possible, it is better to use standard, generally accepted solutions that programmers are familiar with. The more exotic the system, the harder it is for others to understand (the Principle of least astonishment; it is usually applied to user interfaces, but it applies equally to writing code).

Finally, to complete the picture, here are the criteria of bad design:

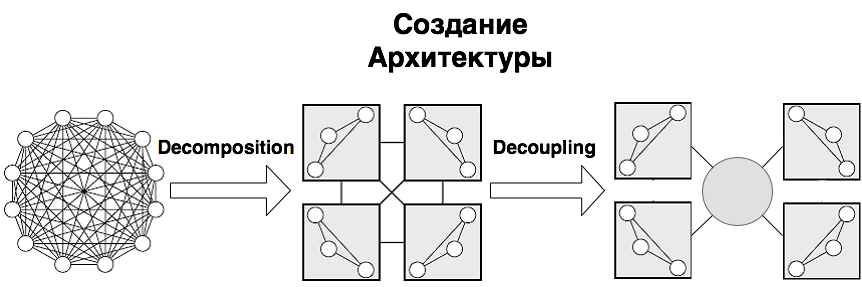

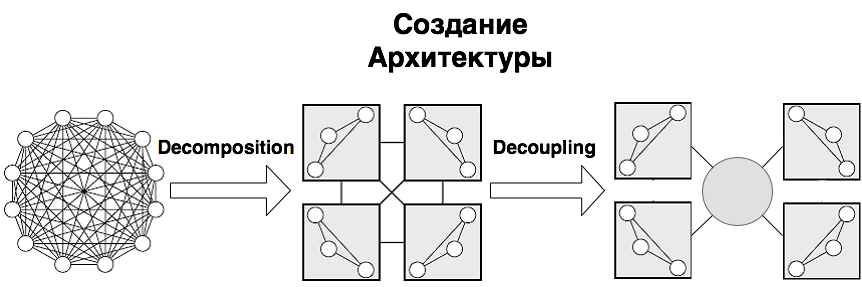

Despite the variety of criteria, the main task in developing large systems is considered to be reducing complexity. And nothing better than dividing the system into parts has yet been invented for that. This is sometimes called the "divide and conquer" principle (divide et impera), but in essence it is hierarchical decomposition. A complex system should be built from a small number of simpler subsystems, each of which is in turn built from smaller parts, and so on, until the smallest parts are simple enough to be understood and built directly.

Fortunately, this solution is not only the only known one but also a universal one. Besides reducing complexity, it provides flexibility and good scalability, and it also makes it possible to increase reliability by duplicating critical parts.

Accordingly, building a program architecture, that is, creating its structure, mainly means decomposing the program into subsystems (functional modules, services, layers, subroutines) and organizing their interaction with each other and with the outside world. The more independent the subsystems are, the safer it is to concentrate on developing one of them at a time without worrying about all the other parts.

The program then turns from "spaghetti code" into a construction kit made of modules/subroutines that interact according to well-defined and simple rules. This is what actually lets you keep its complexity under control, and it also brings all the advantages usually associated with good architecture.

It can be said that breaking a complex problem into simple fragments is the goal of all design techniques. In most cases the term "architecture" simply denotes the result of such a division, plus "certain design decisions that are hard to change once they are made" (Martin Fowler, "Patterns of Enterprise Application Architecture"). Therefore most definitions boil down, in one form or another, to the following:

"Architecture identifies the main components of a system and the ways they interact. It is also the choice of decisions that are considered fundamental and not subject to change in the future."

"Architecture is the organization of a system, embodied in its components, their relationships to each other and to the environment.

A system is a set of components combined to perform a specific function."

Thus, a good architecture is above all a modular (block) architecture. To get a good architecture you need to know how to decompose the system properly. So we need to understand what decomposition is considered "correct" and how best to carry it out.

1. Hierarchical

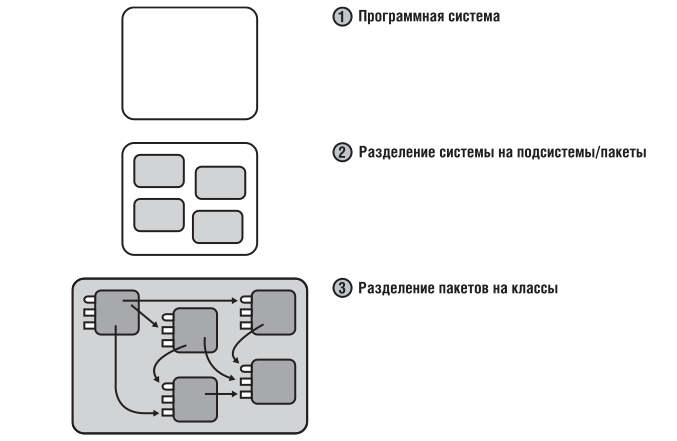

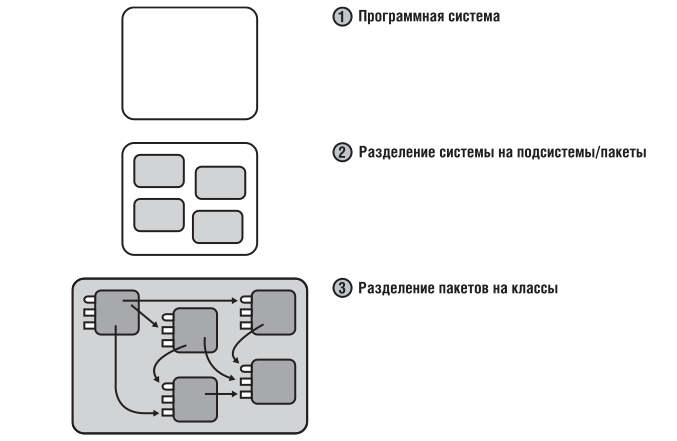

There is no need to chop the application into hundreds of classes right away. As already mentioned, decomposition should be hierarchical: first the system is divided into large functional modules/subsystems that describe its work in the most general terms. Then these modules are analyzed in more detail and in turn divided into submodules or objects.

Before identifying objects, divide the system into its basic semantic blocks, at least mentally. For small applications two levels of hierarchy are often enough: the system is first divided into subsystems/packages, and the packages into classes.

This idea, for all its obviousness, is not as banal as it seems. For example, what is the essence of such a common "architectural pattern" as Model-View-Controller (MVC)? It is simply the separation of presentation from business logic: any user application is first divided into two modules, one responsible for the business logic itself (Model) and the other for interaction with the user (User Interface, or View). Then, so that these modules can be developed independently, the link between them is weakened with the Observer pattern (how to weaken links is discussed later), and the result is one of the most powerful and popular "patterns" in use today.
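A minimal sketch of this split in Python, with hypothetical class names: the model holds the business logic and notifies subscribers through plain callbacks (the Observer pattern), so it never refers to a concrete view. A real MVC framework adds much more; this only shows the direction of the dependencies.

```python
from typing import Callable


class CounterModel:
    """Model (hypothetical): data and business logic; knows nothing about views."""

    def __init__(self) -> None:
        self._value = 0
        self._observers: list[Callable[[int], None]] = []

    def subscribe(self, callback: Callable[[int], None]) -> None:
        self._observers.append(callback)

    def increment(self) -> None:
        self._value += 1
        for notify in self._observers:  # Observer: broadcast the new state
            notify(self._value)


class ConsoleView:
    """View: displays whatever value the model reports."""

    def __init__(self) -> None:
        self.shown: list[str] = []

    def render(self, value: int) -> None:
        self.shown.append(f"Counter: {value}")
        print(self.shown[-1])


class Controller:
    """Controller: translates user input into calls on the model."""

    def __init__(self, model: CounterModel) -> None:
        self._model = model

    def handle(self, command: str) -> None:
        if command == "+":
            self._model.increment()


model = CounterModel()
view = ConsoleView()
model.subscribe(view.render)
Controller(model).handle("+")  # prints "Counter: 1"
```

Any number of views can subscribe to the same model, and the model code does not change when one is added.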

Typical first-level modules (obtained from the first division of the system into its largest components) are exactly "business logic", "user interface", "database access" and "communication with specific hardware or the OS".

For clarity, it is recommended to have between 2 and 7 modules at each level of the hierarchy.

2. Functional

The division into modules/subsystems is best based on the tasks the system solves. The main task is split into subtasks that can be solved independently of each other. Each module should be responsible for one subtask and perform the corresponding function. Besides its functional purpose, a module is also characterized by the set of data it needs to perform its function, that is:

Module = Function + Data required for its execution.

Ideally, a module should be able to perform its function on its own, without help from other modules, based only on its input data.
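For example, a hypothetical invoice module can be written so that everything it needs arrives as input and nothing is read from global state, files or other modules. The `LineItem` type and the `invoice_total()` function are illustrative assumptions.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class LineItem:
    """Input data for the hypothetical invoice module."""
    price: Decimal
    quantity: int


def invoice_total(items: list[LineItem], tax_rate: Decimal) -> Decimal:
    """Module = function + the data it needs: everything arrives as arguments,
    nothing is read from globals, files or other modules."""
    subtotal = sum((item.price * item.quantity for item in items), Decimal("0"))
    return (subtotal * (1 + tax_rate)).quantize(Decimal("0.01"))


items = [LineItem(Decimal("19.99"), 2), LineItem(Decimal("5.00"), 1)]
print(invoice_total(items, Decimal("0.20")))
```

Because the function depends only on its arguments, it can be tested, reused or moved to another program without dragging anything else along.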

A module is not an arbitrary piece of code but a separate, functionally meaningful and complete software unit (subroutine) that solves a specific task and, ideally, can work independently or in another environment and be reused. A module should be a kind of "whole, capable of relative independence in behavior and development" (Christopher Alexander).

Thus, competent decomposition is based primarily on analyzing the functions of the system and the data required to perform them.

3. High Cohesion + Low Coupling

But the main criterion for the quality of a decomposition is how focused the modules are on their own tasks and how independent they are. This is usually formulated as follows: "The modules obtained by decomposition should be maximally connected internally (high internal cohesion) and minimally connected to each other (low external coupling)."

It is believed that well-designed modules should have the following properties:

Competent decomposition is something of an art and a major problem for many programmers. The simplicity here is very deceptive, and mistakes are very expensive. If the modules you have identified are tightly coupled, if they cannot be developed independently, or if it is unclear which function each of them is responsible for, it is worth reconsidering whether the division is right. The role of each module should be clear. The most reliable sign that a decomposition is correct is that the modules turn out to be independent and valuable subroutines in their own right, usable in isolation from the rest of the application (and therefore reusable).

When decomposing a system, it is worth checking its quality by asking yourself: "What function does each module perform?", "Are the modules easy to test?", "Can the modules be used on their own or in another environment?", "How much will a change in one module affect the others?"

First of all, of course, we should strive to make the modules as autonomous as possible. As mentioned above, this is the key parameter of a proper decomposition, so it should be done so that the modules depend on each other only weakly from the start. In addition, there are a number of special techniques and patterns that then let you further minimize and weaken the links between subsystems. In MVC, for example, the Observer pattern is used for this, but other solutions are possible. One could say that techniques for reducing coupling are precisely the architect's main "tools". It is only necessary to understand that this applies to all subsystems, and coupling must be weakened at every level of the hierarchy: not only between classes but also between modules at each level.
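One such technique, sketched in Python under illustrative names: the high-level `ReportService` depends only on an abstract `Storage` interface (here a `typing.Protocol`), so any object with a matching `load()` method can be plugged in. Swapping the in-memory store for, say, a database-backed one would not touch `ReportService`.

```python
from typing import Protocol


class Storage(Protocol):
    """Abstraction the report module depends on (hypothetical interface)."""

    def load(self, key: str) -> list[int]:
        ...


class ReportService:
    """High-level module: knows only the Storage interface, not a concrete class."""

    def __init__(self, storage: Storage) -> None:
        self._storage = storage

    def total(self, key: str) -> int:
        return sum(self._storage.load(key))


class InMemoryStorage:
    """One interchangeable implementation; it satisfies Storage structurally."""

    def __init__(self, data: dict[str, list[int]]) -> None:
        self._data = data

    def load(self, key: str) -> list[int]:
        return self._data.get(key, [])


service = ReportService(InMemoryStorage({"sales": [3, 4, 5]}))
print(service.total("sales"))  # 12
```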

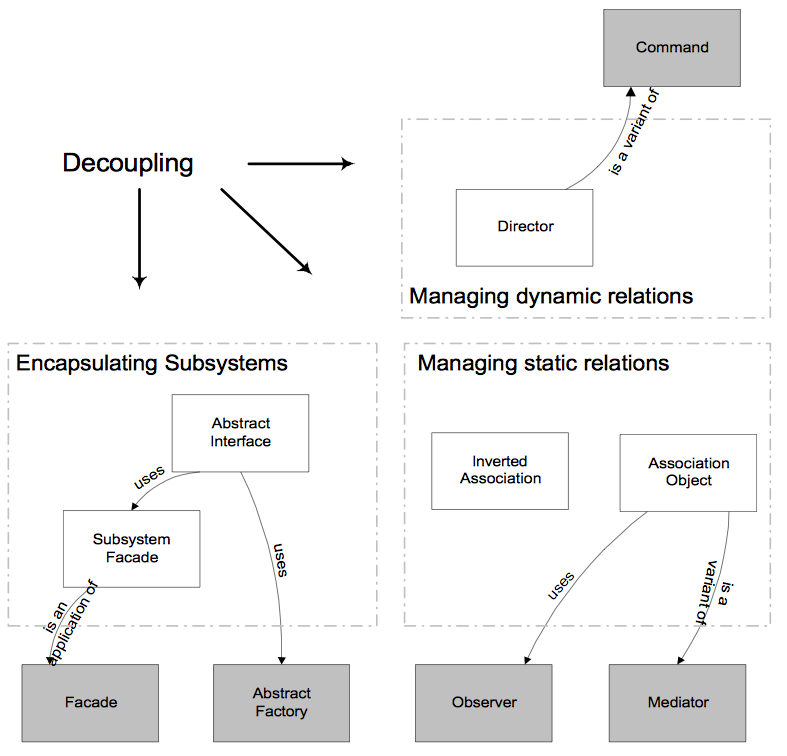

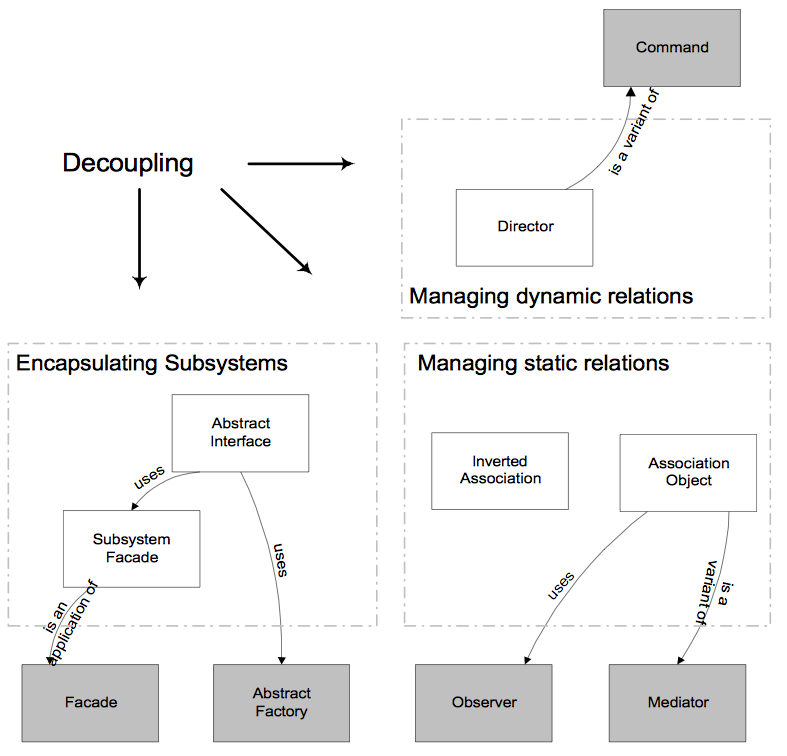

For clarity, here is a picture from a good article, "Decoupling of Object-Oriented Systems", that illustrates the main points discussed below.

The main thing that reduces coupling in a system is, of course, Interfaces (and the principle behind them: Encapsulation + Abstraction + Polymorphism):

« » ( ) . , , . ( ). ( ) — . , . ( ) . , , — / , « , » ( ). . , , , .

, , , (Open-Closed Principle). , , , «» , , . « » (plugin architecture) — , . , /«» , ( ), ( ). Open-Closed Principle , + .

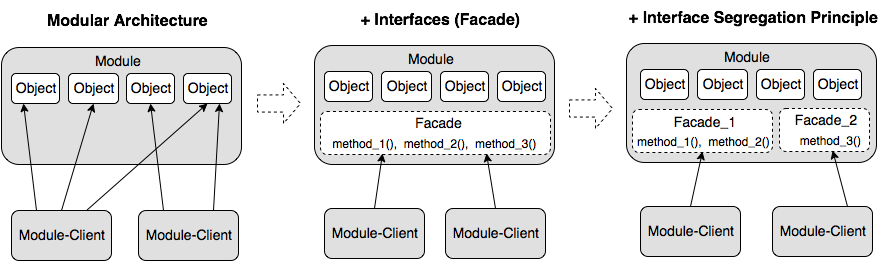

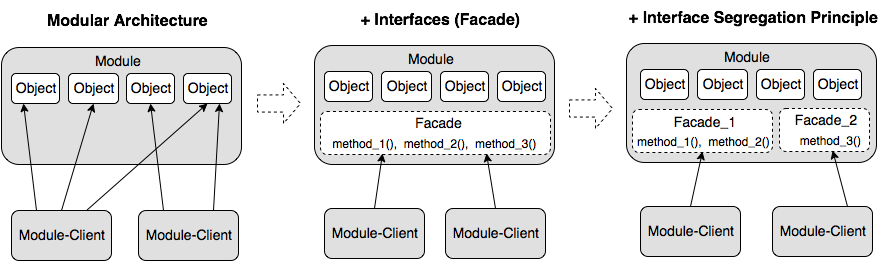

, . , , . , / , . SOLID — (Interface Segregation Principle) , « » , , , ( ) , . : " ( ), " “ , ”.

, , , , , / , . , — , , . . , , , ? : , , — .

— -, , . . — " , , , " .

, , , — « » . «» , .

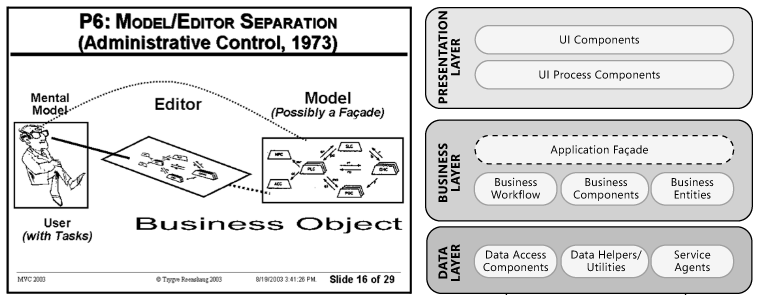

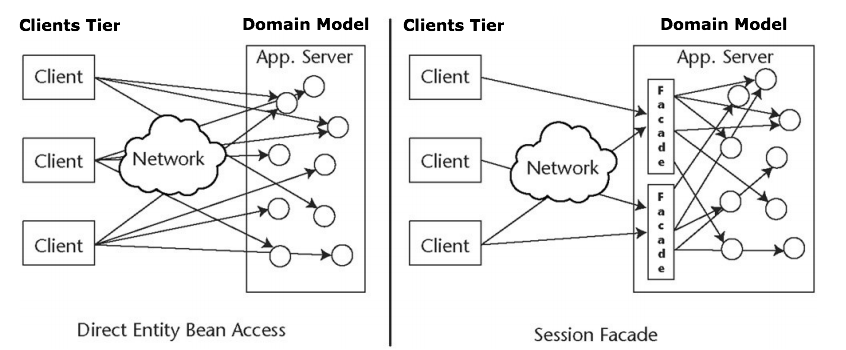

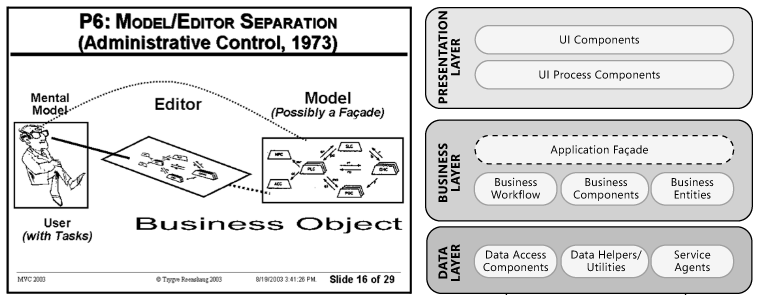

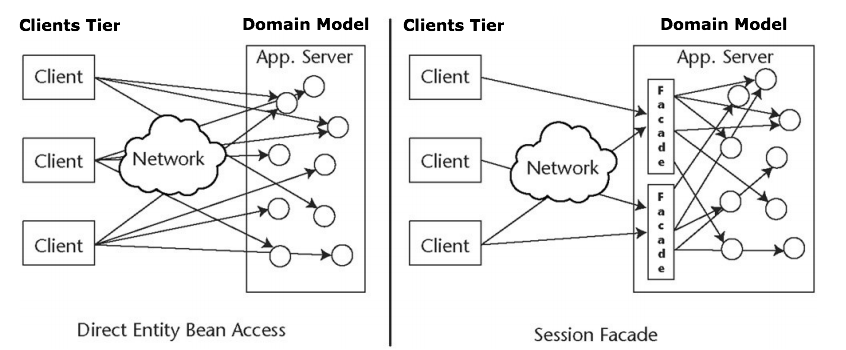

: (), , . , / «». , , «» « » --, , MVC ( Observer Composite)? , , , . MVC , , ( « The Model-View-Controller (MVC ). Its Past and Present », , 1973 , — Presentaition/UI Editior). «» , , MVC Microsoft (« Microsoft Application Architecture Guide »). :

, , «» , , - , , «», , , :

, , , , , ( Dependency Inversion — SOLID):

, , , : « ». « DIP, SRP, IoC, DI ..» . must-read, , .

, , . , , / new , .

, - . , , , , , . , — , , IoC-.

- (Single Choice Principle), : " , , ". , ( , ), , , .

, «» , Dependency Inversion.

, :

, , . :

, , ( , Dependency Inversion , Inversion of Control ; , ). Inversion of Control ( Dependency Injection Service Locator ) : " Inversion of Control Containers and the Dependency Injection pattern " “ Inversion of Control ”.

, ( Dependency Inversion ) + ( Dependency Injection ) / . , , , , , . , , . .

, - / , . « », , (messages) (events).

, :

: « , -. ( Command ). ( execute()), , - , . , , .

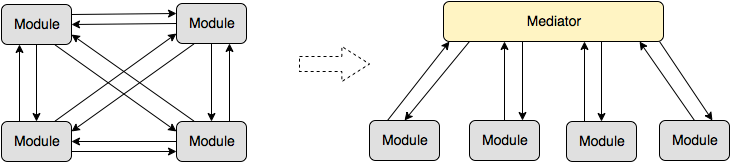

This approach generalizes and develops the idea behind the Mediator pattern. When a system has many modules, direct interaction between them becomes too complicated, so it makes sense to replace "all-to-all" interaction with "one-to-all" interaction. To do this, a generalized intermediary is introduced: it can be a common application core, a storage or a data bus, and all other modules become independent of each other, acting as clients that use the services of this core or process the information it holds. This lets client modules communicate with each other through the intermediary while knowing nothing about each other.

The core (intermediary) may either know about the client modules and control them (as, for example, in the Apache architecture), or it may be completely or almost completely independent and know nothing about its clients. In essence, this is the approach implemented in the Model-View-Controller (MVC) "pattern": many user interfaces that work synchronously and know nothing about each other can interact with a single Model (the core of the application and its common data store), and the Model knows nothing about them either. Nothing prevents you from connecting other auxiliary modules to the shared model as well, and thus synchronizing not only the interfaces.
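A minimal sketch of such an intermediary, assuming a simple in-process publish/subscribe bus that knows nothing about its clients. `EventBus`, `OrderModule`, `MailModule` and the topic name are hypothetical; the point is that the two modules share only the bus and a topic name, never a reference to each other.

```python
from collections import defaultdict
from typing import Any, Callable


class EventBus:
    """Generalized intermediary: modules talk to the bus, never to each other."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Any], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> None:
        for handler in self._handlers[topic]:
            handler(payload)


class OrderModule:
    def __init__(self, bus: EventBus) -> None:
        self._bus = bus

    def place(self, order_id: int) -> None:
        # Announces the fact; does not know who (if anyone) reacts to it.
        self._bus.publish("order_placed", order_id)


class MailModule:
    def __init__(self, bus: EventBus) -> None:
        self.sent: list[str] = []
        bus.subscribe("order_placed", self.on_order)

    def on_order(self, order_id: int) -> None:
        self.sent.append(f"confirmation for order {order_id}")


bus = EventBus()
mail = MailModule(bus)
OrderModule(bus).place(42)
print(mail.sent)  # ['confirmation for order 42']
```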

This idea is also used very actively in game development, where independent modules responsible for graphics, sound, physics and control are synchronized with each other through the game core (model), which stores all data on the state of the game and its characters. Unlike MVC, in games the modules are synchronized with the core (model) not through the Observer pattern but on a timer, which is an interesting architectural solution in itself and very useful for programs with animation and constantly changing graphics.
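The timer-driven variant can be sketched as a fixed-step loop in which every module reads and updates the shared game state on each tick. To keep the example deterministic, the timer is simulated by a fixed number of ticks; a real game would pace the loop with a clock. All class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    """Core/model (hypothetical): the single place where game state lives."""
    x: float = 0.0
    velocity: float = 2.0


class Physics:
    def update(self, state: GameState, dt: float) -> None:
        state.x += state.velocity * dt


class Renderer:
    def __init__(self) -> None:
        self.frames: list[str] = []

    def update(self, state: GameState, dt: float) -> None:
        # Reads the shared state; never talks to Physics directly.
        self.frames.append(f"x={state.x:.1f}")


def run(state: GameState, systems: list, ticks: int, dt: float) -> None:
    """Fixed-step loop: on every tick each module polls the shared state."""
    for _ in range(ticks):
        for system in systems:
            system.update(state, dt)


state = GameState()
renderer = Renderer()
run(state, [Physics(), renderer], ticks=3, dt=0.5)
print(renderer.frames)  # ['x=1.0', 'x=2.0', 'x=3.0']
```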

The Law of Demeter prohibits implicit dependencies: "Object A should not be able to access object C directly if object A has access to object B and object B has access to object C." (Java example.)

This means that all dependencies in the code must be explicit: classes/modules may use only "their own" dependencies and must not reach through them to others. In short: "Talk only to your immediate friends, not to friends of friends." This achieves lower coupling as well as a clearer and more transparent design.

The Law of Demeter implements the already mentioned "principle of least knowledge", which underlies loose coupling: an object/module should know as little as possible about the structure and properties of other objects/modules and anything else, including its own subcomponents. An analogy from life: if you want a dog to run, it is silly to give commands to its paws; it is better to command the dog, and it will take care of its paws itself.
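The dog analogy translates almost literally into code. In this hypothetical sketch the caller talks only to `Dog`, its direct friend; reaching into `dog._paws` would be exactly the "friend of a friend" access the law forbids.

```python
class Paw:
    def __init__(self) -> None:
        self.steps = 0

    def step(self) -> None:
        self.steps += 1


class Dog:
    def __init__(self) -> None:
        self._paws = [Paw() for _ in range(4)]  # internal detail, not for callers

    def run(self, strides: int = 1) -> None:
        """The dog coordinates its own paws; callers never touch them."""
        for _ in range(strides):
            for paw in self._paws:
                paw.step()

    def total_steps(self) -> int:
        return sum(paw.steps for paw in self._paws)


# Violates Demeter (owner -> dog -> paws):  for paw in dog._paws: paw.step()
# Follows Demeter: give the command to the direct friend only.
dog = Dog()
dog.run(strides=2)
print(dog.total_steps())  # 8
```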

One of the strongest links between objects is inheritance, so where possible it should be avoided and replaced with composition. This topic is well covered in Herb Sutter's article "Prefer Composition to Inheritance".
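A classic illustration, sketched in Python: a stack built by inheriting from `list` exposes every list method (including `insert(0, x)`, which breaks last-in-first-out order), whereas a stack that contains a list exposes only what it chooses. The class names are illustrative.

```python
class InheritedStack(list):
    """Inheritance: every list method leaks out, e.g. insert(0, x) breaks LIFO."""

    def push(self, item) -> None:
        self.append(item)


class Stack:
    """Composition: the list is a private part; only stack operations are exposed."""

    def __init__(self) -> None:
        self._items: list = []

    def push(self, item) -> None:
        self._items.append(item)

    def pop(self):
        return self._items.pop()

    def __len__(self) -> int:
        return len(self._items)


s = Stack()
s.push(1)
s.push(2)
print(s.pop(), len(s))  # 2 1
```

With composition, the internal list could later be replaced by another container without affecting any caller.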

In this context I can only recommend paying attention to the Delegation pattern (Delegation/Delegate) and to the Component pattern from game development, which is described in detail in the book "Game Programming Patterns" (the corresponding chapter is available in English and in translation).
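A much simplified sketch of the Component idea, with hypothetical names: a `GameObject` owns a list of components and delegates its update to them, so behavior is assembled by composition rather than by a class hierarchy. The book's version is considerably richer; this only shows the shape.

```python
class InputComponent:
    def update(self, obj: "GameObject") -> None:
        obj.velocity = 1  # hypothetical input: "always move right"


class PhysicsComponent:
    def update(self, obj: "GameObject") -> None:
        obj.x += obj.velocity


class GameObject:
    """Container that delegates behavior to interchangeable components."""

    def __init__(self, *components) -> None:
        self.x = 0
        self.velocity = 0
        self._components = list(components)

    def update(self) -> None:
        for component in self._components:
            component.update(self)


hero = GameObject(InputComponent(), PhysicsComponent())  # controllable object
rock = GameObject(PhysicsComponent())                    # same physics, no input
for _ in range(2):
    hero.update()
    rock.update()
print(hero.x, rock.x)  # 2 0
```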

Articles on the Internet:

A wonderful resource is The Architecture of Open Source Applications, in which "the authors of four dozen open source applications describe how the programs they created are structured and how they were built. What are their main components? How do they interact? And what did their creators discover during development? In answering these questions, the authors of the articles collected in these books give you a unique insight into how they create software." One of the articles was published in full on Habr: "Scalable Web Architecture and Distributed Systems".

Interesting solutions and ideas can be found in game development materials. Game Programming Patterns is a large site with detailed descriptions of many patterns and examples of applying them to game development (it turns out there is already a translation, "Game Programming Templates"; thanks to strannik_k for the link). The article "Flexible and scalable architecture for computer games" (and its original) may also be useful; just keep in mind that, for some reason, its author calls composition the "Observer" pattern.

Regarding design patterns:

There are also the GRASP principles/patterns described by Craig Larman in the book "Applying UML and Patterns", but they confuse more than they clarify. There is a short overview and discussion on Habr (the most valuable part is in the comments).

And of course the book:


Criteria for good architecture


Finally, to complete the picture, here are the criteria of bad design:

- It is hard to change, because any change affects too many other parts of the system (Rigidity).
- When changes are made, other parts of the system unexpectedly break (Fragility).
- The code is hard to reuse in another application, because it is too hard to untangle it from the current one (Immobility).

Modular architecture. Decomposition as a basis


A program decomposed this way turns from "spaghetti code" into a construction kit of modules/subroutines that interact according to well-defined and simple rules. This is what lets you keep its complexity under control, and it also brings all the advantages usually associated with good architecture:

- Scalability: the system can be expanded and its performance increased by adding new modules.
- Maintainability: changing one module does not require changing other modules.
- Swappability: a module is easy to replace.
- Testability (unit testing): a module can be detached from all the others and tested or repaired.
- Reusability: a module can be reused in other programs and other environments.
- Maintenance: a program broken into modules is easier to understand and maintain.

It can be said that breaking a complex problem into simple fragments is the goal of all design techniques. And in most cases the term “architecture” simply denotes the result of such a division, plus “some constructive decisions that are difficult to change once they have been made” (Martin Fowler, “Patterns of Enterprise Application Architecture”). Therefore, most definitions look, in one form or another, like this:

"Architecture identifies the main components of a system and the ways they interact. It is also the choice of decisions that are considered fundamental and not subject to change in the future."

"Architecture is the organization of a system, embodied in its components, their relationships to each other and to the environment.

A system is a set of components combined to perform a specific function."

Thus, good architecture is, above all, a modular (block) architecture. To get a good architecture, you need to know how to decompose the system properly. So we need to understand which decomposition is considered “correct” and how best to carry it out.

"Correct" decomposition

1. Hierarchical

There is no need to chop the application into hundreds of classes right away. As already mentioned, decomposition should be hierarchical: first, the system is divided into large functional modules/subsystems that describe its work in the most general terms. Then these modules are analyzed in more detail and, in turn, divided into sub-modules or objects.

Before you identify objects, divide the system into its basic semantic blocks, at least mentally. For small applications, two levels of hierarchy are often quite enough: the system is first divided into subsystems/packages, and packages are divided into classes.

This idea, obvious as it is, is not as trivial as it seems. For example, what is the essence of such a common “architectural pattern” as Model-View-Controller (MVC)? Simply the separation of the presentation from the business logic: any user application is first divided into two modules, one responsible for the business logic itself (the Model) and the other for interaction with the user (the User Interface, or View). Then, so that these modules can be developed independently, the link between them is weakened with the “Observer” pattern (how to weaken links is discussed later), and we get one of the most powerful and widely used “patterns” of today.
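To make the Model/View split concrete, here is a minimal Python sketch, assuming a toy counter model; the names `CounterModel`, `subscribe`, and `TextView` are hypothetical. The model only knows that its observers are callables, so any number of views can be attached without the model knowing their types.

```python
from typing import Callable, List


class CounterModel:
    """Business logic: holds state and knows nothing about concrete views."""

    def __init__(self) -> None:
        self._value = 0
        self._observers: List[Callable[[int], None]] = []

    def subscribe(self, observer: Callable[[int], None]) -> None:
        # Observers are plain callables; the model does not depend on their type.
        self._observers.append(observer)

    def increment(self) -> None:
        self._value += 1
        for notify in self._observers:
            notify(self._value)


class TextView:
    """Presentation: renders whatever the model reports."""

    def __init__(self) -> None:
        self.rendered: List[str] = []

    def on_change(self, value: int) -> None:
        self.rendered.append(f"Counter: {value}")


model = CounterModel()
view = TextView()
model.subscribe(view.on_change)
model.increment()
model.increment()
print(view.rendered)  # ['Counter: 1', 'Counter: 2']
```

A controller would normally call `model.increment()` in response to user input; here the calls are made directly for brevity.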

Typical first-level modules (obtained from the first division of the system into its largest components) are precisely “business logic”, “user interface”, “database access”, and “communication with specific hardware or the OS”.

For clarity, it is recommended to have between 2 and 7 modules at each level of the hierarchy.

2. Functional

The division into modules/subsystems is best based on the tasks the system solves. The main task is divided into subtasks that can be solved/executed independently of each other. Each module should be responsible for one subtask and perform the corresponding function. In addition to its functional purpose, a module is also characterized by the set of data it needs to perform its function, that is:

Module = Function + Data required for its execution.

It is also desirable that a module be able to perform its function on its own, without the help of other modules, based only on its input data.

A module is not an arbitrary piece of code but a separate, functionally meaningful and complete software unit (subroutine) that solves a particular task and, ideally, can work independently or in another environment and be reused. A module should be a kind of “whole capable of relative independence in behavior and development” (Christopher Alexander).

Thus, sound decomposition is based primarily on analyzing the functions of the system and the data needed to perform them.

3. High Cohesion + Low Coupling

But the main criterion of decomposition quality is how focused the modules are on their own tasks and how independent they are. This is usually formulated as follows: "The modules obtained by decomposition should be maximally connected internally (high internal cohesion) and minimally connected to each other (low external coupling)."

- High Cohesion (strong connectedness within a module) means that the module focuses on solving one narrow problem and does not perform heterogeneous functions or unrelated duties. (Cohesion describes the degree to which the tasks performed by a module are related to each other.)

A consequence of High Cohesion is the Single Responsibility Principle (the first of the five SOLID principles), according to which any object/module should have only one responsibility and, accordingly, no more than one reason to change.

- Low Coupling (weak connectedness) means that the modules into which the system is divided should be, as far as possible, independent or loosely connected to each other. They should be able to interact, but know as little about each other as possible (the principle of least knowledge).

This means that, with proper design, changing one module does not require editing others, or the required changes are minimal. The looser the coupling, the easier it is to write, understand, extend, and repair the program.
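As an illustration of high cohesion and low coupling, the sketch below splits a hypothetical “report” task into two focused units: one only formats the report, the other only stores text. The names `build_report` and `MemoryStore` are invented for this example.

```python
from typing import Dict, List


def build_report(sales: List[float]) -> str:
    """Only formats data: one reason to change (the report layout)."""
    total = sum(sales)
    return f"Items: {len(sales)}, total: {total:.2f}"


class MemoryStore:
    """Only stores text: one reason to change (the storage mechanism)."""

    def __init__(self) -> None:
        self.saved: Dict[str, str] = {}

    def save(self, name: str, text: str) -> None:
        self.saved[name] = text


store = MemoryStore()
store.save("q1", build_report([10.0, 2.5]))
print(store.saved["q1"])  # Items: 2, total: 12.50
```

Changing the report format touches only `build_report`; switching to a file or a database touches only the storage class.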

It is believed that well-designed modules should have the following properties:

- functional integrity and completeness: each module implements one function, but implements it well and completely; the module itself (without outside help) performs the full set of operations needed to implement its function;

- one input and one output: the module receives a specific set of input data, performs meaningful processing, and returns one set of output data, i.e. the standard IPO principle (input, process, output) is implemented;

- logical independence: the result of the module's work depends only on its source data and does not depend on the work of other modules;

- weak information links with other modules: the exchange of information between modules should be minimized wherever possible.
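A module that follows the input–process–output idea and is logically independent can be as simple as a pure function whose result depends only on its arguments. A minimal sketch with a hypothetical `normalize_scores` function:

```python
from typing import List


def normalize_scores(scores: List[float]) -> List[float]:
    """Input: raw scores. Process: scale to [0, 1]. Output: a new list.

    The function reads no global state and calls no other modules,
    so its result depends only on its input.
    """
    if not scores:
        return []
    low, high = min(scores), max(scores)
    if high == low:
        return [0.0 for _ in scores]
    return [(s - low) / (high - low) for s in scores]


print(normalize_scores([2.0, 4.0, 6.0]))  # [0.0, 0.5, 1.0]
```

Because it has one input and one output and no hidden links, such a module is trivial to unit-test and to reuse elsewhere.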

Sound decomposition is something of an art and a real challenge for many programmers. Simplicity is very deceptive here, and mistakes are very expensive. If the modules you have identified are tightly linked with each other, if they cannot be developed independently, or if it is unclear what function each of them is responsible for, it is worth reconsidering whether the division is correct. It should be clear what role each module plays. The most reliable sign of a correct decomposition is that the modules turn out to be independent and valuable subroutines in their own right, which can be used in isolation from the rest of the application (and can therefore be reused).

When decomposing a system, it is useful to check its quality by asking yourself: "What function does each module perform?", "Is it easy to test the modules?", "Can the modules be used on their own or in another environment?", "How much will a change in one module affect the others?"

First of all, of course, we should strive to make the modules as autonomous as possible. As mentioned above, this is the key parameter of proper decomposition, so it should be carried out in such a way that the modules depend on each other only weakly from the start. In addition, there are a number of special techniques and patterns that make it possible to further minimize and weaken the links between subsystems. For example, in MVC the “Observer” pattern is used for this purpose, but other solutions are possible. One could say that techniques for reducing coupling are the main “tools of the architect”. It is important to understand that this applies to all subsystems: coupling needs to be weakened at all levels of the hierarchy, that is, not only between classes but also between modules at each level.

How to weaken coupling between modules

For clarity, here is a picture from the good article "Decoupling of Object-Oriented Systems" that illustrates the main points discussed below.

1. Interfaces. Facade

The main thing that makes it possible to reduce coupling in a system is, of course, interfaces (and the principle behind them: Encapsulation + Abstraction + Polymorphism):

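- Modules should be “black boxes” to each other (encapsulation). This means that one module should not “reach into” another module or know anything about its internal structure.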

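- Modules/subsystems should interact with each other only through interfaces (that is, through abstractions that do not depend on implementation details).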

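The “black box” principle (encapsulation) makes it possible to consider the structure of each subsystem independently of the others. A module that is a black box can be changed relatively freely. Problems can arise only at the junction of different modules (or of a module and its environment). This interaction should therefore be described in the most general (abstract) form, that is, as an interface. Then the code works the same way with any implementation that honors the interface contract. It is precisely this ability to work with different implementations (modules or objects) through a unified interface that is called polymorphism. Polymorphism is not method overriding, as is sometimes mistakenly believed, but above all the interchangeability of modules/objects with the same interface, or “one interface, many implementations”. Inheritance is not needed at all to implement polymorphism. This is important to understand, since inheritance in general should be avoided where possible.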

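Thanks to interfaces and polymorphism, code can be modified and extended without changing what has already been written (Open-Closed Principle). As long as the interaction of modules is described only in terms of interfaces and is not tied to specific implementations, we can “painlessly” replace one module with any other that implements the same interface, or add a new one and thereby extend the functionality. It is like a construction kit or a “plugin architecture” (plugin architecture): the interface serves as a kind of connector into which any module with a matching plug can be inserted. The flexibility of such a kit comes from the fact that we can simply replace some modules/“parts” with others that have the same connectors (the same interface), and add as many new parts as we like, while the existing parts are not changed or reworked.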
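The “one interface, many implementations” idea and the Open-Closed Principle can be sketched in Python with `typing.Protocol`, which describes an interface structurally, without inheritance. The `Exporter` interface and its two implementations are hypothetical; the point is that `export_all` does not change when a new exporter is plugged in.

```python
import json
from typing import Dict, List, Protocol


class Exporter(Protocol):
    """The 'connector': any object with this method can be plugged in."""

    def export(self, data: Dict[str, int]) -> str: ...


class JsonExporter:
    def export(self, data: Dict[str, int]) -> str:
        return json.dumps(data, sort_keys=True)


class CsvExporter:
    def export(self, data: Dict[str, int]) -> str:
        return "\n".join(f"{k},{v}" for k, v in sorted(data.items()))


def export_all(data: Dict[str, int], exporters: List[Exporter]) -> List[str]:
    # Closed for modification: this code depends only on the interface.
    return [e.export(data) for e in exporters]


# Open for extension: a new format is a new class, not an edit to export_all.
print(export_all({"b": 2, "a": 1}, [JsonExporter(), CsvExporter()]))
```

Neither exporter inherits from `Exporter`; they are interchangeable simply because they provide the same method.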

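One of the SOLID principles, the Interface Segregation Principle, says that “fat” interfaces should be split into smaller and more specific ones, so that the clients of these small interfaces know only about the methods they actually need. As a result, changing an interface method does not force changes in clients that do not use that method. In short: "Clients should not depend on methods (know about methods) that they do not use", or “Many specialized interfaces are better than one universal one”.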
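Interface segregation, sketched with hypothetical `Printer` and `Scanner` interfaces: a client that only prints depends only on `Printer`, so a simple printer class does not have to implement scanning methods it cannot support.

```python
from typing import Protocol


class Printer(Protocol):
    def print_doc(self, text: str) -> str: ...


class Scanner(Protocol):
    def scan(self) -> str: ...


class SimplePrinter:
    """Implements only what it can do; no dummy scan() method is needed."""

    def print_doc(self, text: str) -> str:
        return f"printed: {text}"


class OfficeDevice:
    """A multifunction device implements both small interfaces."""

    def print_doc(self, text: str) -> str:
        return f"office printed: {text}"

    def scan(self) -> str:
        return "scanned page"


def print_invoice(printer: Printer) -> str:
    # This client knows nothing about scanning.
    return printer.print_doc("invoice #1")


print(print_invoice(SimplePrinter()))  # printed: invoice #1
print(print_invoice(OfficeDevice()))   # office printed: invoice #1
```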

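However, a subsystem/module usually consists of many objects. If external clients work with these objects directly, they again become dependent on the internal structure of the subsystem. How can a whole subsystem be presented to the rest of the system through one simple interface? The answer is the Facade pattern.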

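A facade is an interface object that gathers a high-level set of operations for working with a subsystem and hides the subsystem's internal structure and real complexity behind it. It protects clients from changes in the subsystem's implementation. In the words of the Gang of Four, a facade “provides a unified interface to a set of interfaces in a subsystem” and “defines a higher-level interface that makes the subsystem easier to use”.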
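A minimal Facade sketch, assuming a hypothetical order subsystem made of three internal classes (`Inventory`, `Payments`, `Shipping`). Clients call only `OrderFacade.place_order` and never touch the internal objects, so those can be reorganized freely. Failure handling, such as releasing a reservation after a failed payment, is deliberately omitted.

```python
from typing import Dict, List


class Inventory:
    def __init__(self, stock: Dict[str, int]) -> None:
        self._stock = dict(stock)

    def reserve(self, item: str) -> bool:
        if self._stock.get(item, 0) > 0:
            self._stock[item] -= 1
            return True
        return False


class Payments:
    def charge(self, amount: float) -> bool:
        return amount > 0  # stand-in for a real payment call


class Shipping:
    def __init__(self) -> None:
        self.queue: List[str] = []

    def schedule(self, item: str) -> None:
        self.queue.append(item)


class OrderFacade:
    """Single entry point that hides how the subsystem is put together."""

    def __init__(self) -> None:
        self._inventory = Inventory({"stool": 1})
        self._payments = Payments()
        self._shipping = Shipping()

    def place_order(self, item: str, price: float) -> str:
        if not self._inventory.reserve(item):
            return "out of stock"
        if not self._payments.charge(price):
            return "payment failed"
        self._shipping.schedule(item)
        return "ok"


shop = OrderFacade()
print(shop.place_order("stool", 25.0))  # ok
print(shop.place_order("stool", 25.0))  # out of stock
```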

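In this way, the “black box” principle is applied not only to individual classes but to whole subsystems: for the rest of the system, each subsystem is represented by its facade, a single “entry point” behind which everything else is hidden.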

: (), , . , / «». , , «» « » --, , MVC ( Observer Composite)? , , , . MVC , , ( « The Model-View-Controller (MVC ). Its Past and Present », , 1973 , — Presentaition/UI Editior). «» , , MVC Microsoft (« Microsoft Application Architecture Guide »). :

, , «» , , - , , «», , , :

2. Dependency Inversion.

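Describing interaction through interfaces is not enough on its own; the direction of the dependencies also matters. It is governed by the Dependency Inversion Principle (Dependency Inversion, the last of the five SOLID principles):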

- High-level modules should not depend on low-level modules. Both should depend on abstractions.

- Abstractions should not depend on details. Details should depend on abstractions.
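A minimal Dependency Inversion sketch with hypothetical names: the high-level `Notifier` depends on the `MessageSender` abstraction, and the low-level `EmailSender` detail implements that abstraction. Neither concrete class refers to the other.

```python
from abc import ABC, abstractmethod
from typing import List


class MessageSender(ABC):
    """Abstraction that the high-level policy is written against."""

    @abstractmethod
    def send(self, to: str, text: str) -> None: ...


class Notifier:
    """High-level module: decides *what* to send, not *how*."""

    def __init__(self, sender: MessageSender) -> None:
        self._sender = sender

    def alert_all(self, users: List[str], text: str) -> int:
        for user in users:
            self._sender.send(user, text)
        return len(users)


class EmailSender(MessageSender):
    """Low-level detail: depends on the abstraction above."""

    def __init__(self) -> None:
        self.outbox: List[str] = []

    def send(self, to: str, text: str) -> None:
        self.outbox.append(f"mail to {to}: {text}")


email = EmailSender()
count = Notifier(email).alert_all(["ann", "bob"], "server is down")
print(count, email.outbox)
```

Here an explicit `ABC` is used so that the arrow from the detail to the abstraction is visible in the code; a structural `Protocol` would work as well.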

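In other words: “Depend on abstractions, not on concrete implementations.” The ideas behind DIP, SRP, IoC, DI and related principles are explained in detail in a separate article, which is a must-read on this topic.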

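At first glance this is simple, but there is a catch: someone still has to create the concrete objects. As soon as a module/class creates its dependencies itself with new, it becomes tied to their concrete implementations.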

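Therefore, a module should not create the objects it depends on; it should only use them through their interfaces. The responsibility for creating dependencies and choosing concrete implementations is moved out of the module to a dedicated place, such as a factory or an IoC container.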

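This is related to Bertrand Meyer's Single Choice Principle (Single Choice Principle): "Whenever a software system must support a set of alternatives, one and only one module in the system should know their exhaustive list." Accordingly, the choice of concrete implementations should be concentrated in one place rather than scattered throughout the code.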

There are several ways for a module to “obtain” its dependencies without violating Dependency Inversion.

, :

- ,

- , /

, , . :

- Factories.

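The simplest way is to hand object creation over to a special object or method, a factory, which knows which concrete implementation to create and returns it through an interface (Factory Method).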

" , new, - . , ".

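When whole families of related objects need to be created, an abstract factory is used (Abstract factory): clients work with the factory only through its interface, and the concrete factory determines which family of implementations is created.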
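Two factory variants sketched in Python with invented names. `make_storage` is a simple factory function (a simplified stand-in for the class-based Factory Method): it is the single place that knows the full list of storage classes, in the spirit of the Single Choice Principle. `UiFactory` is an abstract factory that produces a matching family of widgets.

```python
from abc import ABC, abstractmethod
from typing import Dict, Type


class Storage(ABC):
    @abstractmethod
    def kind(self) -> str: ...


class MemoryStorage(Storage):
    def kind(self) -> str:
        return "memory"


class FileStorage(Storage):
    def kind(self) -> str:
        return "file"


def make_storage(name: str) -> Storage:
    """Simple factory: the only place that knows all concrete storages."""
    registry: Dict[str, Type[Storage]] = {"memory": MemoryStorage, "file": FileStorage}
    return registry[name]()


class UiFactory(ABC):
    """Abstract factory: creates a family of matching widgets."""

    @abstractmethod
    def button(self) -> str: ...

    @abstractmethod
    def checkbox(self) -> str: ...


class DarkTheme(UiFactory):
    def button(self) -> str:
        return "dark button"

    def checkbox(self) -> str:
        return "dark checkbox"


class LightTheme(UiFactory):
    def button(self) -> str:
        return "light button"

    def checkbox(self) -> str:
        return "light checkbox"


def render_form(ui: UiFactory) -> str:
    # Client code depends only on the abstract factory.
    return f"{ui.button()} + {ui.checkbox()}"


print(make_storage("file").kind())  # file
print(render_form(DarkTheme()))     # dark button + dark checkbox
```

Switching the whole UI family means passing `LightTheme()` instead of `DarkTheme()`; `render_form` stays unchanged.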

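Another approach is the service locator (Service Locator): a registry object from which modules request, by name or by interface, the dependencies (services) they need.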

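At first glance, Service Locator looks convenient, but it has serious drawbacks: the dependencies of a class become hidden (they are not visible from its interface or constructor), every class that uses the locator depends on the locator itself, and a missing registration shows up only at run time.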

Service Locator , , . Service Locator , .

Service Locator is the subject of ongoing debate; see:

Service Locator is an Anti-Pattern

Abstract Factory or Service Locator? - «» . , , («») - .
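A minimal Service Locator sketch (a hypothetical `ServiceLocator` class, not any specific library). Note how `ReportService` pulls its dependency from a global registry: nothing in its constructor reveals that dependency, which is exactly the hidden-dependency criticism of this pattern.

```python
from typing import Any, Callable, Dict


class ServiceLocator:
    """Global registry mapping a service name to a provider function."""

    _providers: Dict[str, Callable[[], Any]] = {}

    @classmethod
    def register(cls, name: str, provider: Callable[[], Any]) -> None:
        cls._providers[name] = provider

    @classmethod
    def resolve(cls, name: str) -> Any:
        if name not in cls._providers:
            raise LookupError(f"service not registered: {name}")
        return cls._providers[name]()


class Clock:
    def now(self) -> str:
        return "12:00"


class ReportService:
    def header(self) -> str:
        # Hidden dependency: fetched at call time from the global locator.
        clock = ServiceLocator.resolve("clock")
        return f"Report at {clock.now()}"


ServiceLocator.register("clock", Clock)
print(ReportService().header())  # Report at 12:00
```

If the registration line is forgotten, the error appears only when `header()` is actually called.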

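Finally, there is dependency injection (Dependency Injection): dependencies are passed to the module from outside, either through the constructor (Constructor Injection) or through a setter method (Setter injection).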

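In this case the module neither creates nor looks up anything itself; it simply receives ready-made dependencies. Control over creating and connecting objects is taken away from the module and handed to external code, which is why this approach is called inversion of control (Inversion of Control), summed up by the Hollywood principle: “Don't call us, we'll call you.”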
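A minimal dependency injection sketch with hypothetical names (`Clock`, `SystemClock`, `FixedClock`, `ReportService`): the clock is passed in through the constructor (Constructor Injection) and can be replaced later through a setter (Setter Injection). The dependency is visible in the signature, and a test can pass a fake clock without any global registry.

```python
from datetime import datetime
from typing import Protocol


class Clock(Protocol):
    def now(self) -> str: ...


class SystemClock:
    """Production implementation: reads the real time."""

    def now(self) -> str:
        return datetime.now().strftime("%H:%M")


class FixedClock:
    """Test double: always returns the same time."""

    def __init__(self, time: str) -> None:
        self._time = time

    def now(self) -> str:
        return self._time


class ReportService:
    def __init__(self, clock: Clock) -> None:
        # Constructor Injection: the dependency is explicit in the signature.
        self._clock = clock

    def set_clock(self, clock: Clock) -> None:
        # Setter Injection: the dependency can be replaced after creation.
        self._clock = clock

    def header(self) -> str:
        return f"Report at {self._clock.now()}"


# The caller (the "outside") decides which implementation to use.
service = ReportService(FixedClock("09:30"))
print(service.header())  # Report at 09:30
service.set_clock(FixedClock("18:00"))
print(service.header())  # Report at 18:00
```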

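Usually, all objects are created and connected in one place at application startup, so the rest of the code knows nothing about concrete classes. In large applications this wiring can be automated with special “containers” (IoC containers).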

, : , , . , — , - , .

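The terminology here is easy to confuse (for example, Dependency Inversion is often mixed up with Inversion of Control; the ideas are related but not the same). The forms of Inversion of Control (Dependency Injection and Service Locator) are discussed in Martin Fowler's articles "Inversion of Control Containers and the Dependency Injection pattern" and “Inversion of Control”.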

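To sum up, dependency inversion (Dependency Inversion) combined with dependency injection (Dependency Injection) makes modules/classes independent of the concrete implementations of their dependencies. Such modules are easier to replace, test, and reuse.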

3. Replacing direct dependencies with messaging

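Sometimes modules/objects need to interact without referring to each other directly. In such cases, direct calls can be replaced with the exchange of notifications: messages (messages) or events (events).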

The main patterns used for this are:

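- Observer (Observer). It defines a “one-to-many” dependency between objects: when the state of one object (the subject) changes, all its dependents (observers) are notified and updated automatically. The subject knows its observers only through a common notification interface, so observers can be added and removed without changing the subject.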

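The Observer pattern separates “publishers” from “subscribers”. When many objects have to interact with each other, another pattern is useful: Mediator (Mediator). Instead of referring to each other directly, the objects communicate through a mediator object that encapsulates their interaction, so they know only the mediator and not each other, and the rules of their interaction are gathered in one place.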
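A minimal Mediator sketch, assuming a hypothetical chat room: participants never reference each other; they only know the `ChatRoom` mediator, which decides who receives each message.

```python
from typing import List, Optional


class ChatRoom:
    """Mediator: encapsulates how participants interact."""

    def __init__(self) -> None:
        self._members: List["Member"] = []

    def join(self, member: "Member") -> None:
        self._members.append(member)
        member.room = self

    def broadcast(self, sender: "Member", text: str) -> None:
        # The routing rule lives here, not in the participants.
        for member in self._members:
            if member is not sender:
                member.inbox.append(f"{sender.name}: {text}")


class Member:
    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: List[str] = []
        self.room: Optional[ChatRoom] = None

    def say(self, text: str) -> None:
        if self.room is not None:
            self.room.broadcast(self, text)


room = ChatRoom()
ann, bob, eve = Member("ann"), Member("bob"), Member("eve")
for m in (ann, bob, eve):
    room.join(m)
ann.say("hello")
print(bob.inbox, eve.inbox, ann.inbox)  # ['ann: hello'] ['ann: hello'] []
```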

— . , , , , . , , . , , .

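A related technique is the Command pattern (Command): a request is encapsulated in an object. Such an object has a single method that performs the action (for example, execute()), so the code that triggers the action does not need to know who performs it or how.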
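A minimal Command sketch with hypothetical names: each action is an object with an `execute()` method, so the invoker (here a toolbar `Button`) triggers it without knowing what it does. The `undo()` method and the history list are an extra commonly paired with this pattern, shown as an assumption rather than as part of the original description.

```python
from typing import List, Protocol


class Command(Protocol):
    def execute(self) -> None: ...
    def undo(self) -> None: ...


class Document:
    def __init__(self) -> None:
        self.text = ""


class AppendText:
    """Concrete command: knows the receiver (Document) and the action."""

    def __init__(self, doc: Document, piece: str) -> None:
        self._doc = doc
        self._piece = piece

    def execute(self) -> None:
        self._doc.text += self._piece

    def undo(self) -> None:
        self._doc.text = self._doc.text[: len(self._doc.text) - len(self._piece)]


class Button:
    """Invoker: knows only the Command interface."""

    def __init__(self, command: Command, history: List[Command]) -> None:
        self._command = command
        self._history = history

    def click(self) -> None:
        self._command.execute()
        self._history.append(self._command)


doc = Document()
history: List[Command] = []
Button(AppendText(doc, "Hello"), history).click()
Button(AppendText(doc, ", world"), history).click()
print(doc.text)  # Hello, world
history.pop().undo()
print(doc.text)  # Hello
```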

4. Synchronization through a common core

This approach generalizes and develops the idea behind the “Mediator” pattern. When a system has a large number of modules, their direct interaction with each other becomes too complicated. Therefore, it makes sense to replace “all with all” interaction with “one with all” interaction. To do this, a generalized intermediary is introduced: it can be a common application core, a storage, or a data bus, and all other modules become independent of each other, acting as clients that use the services of this core or process the information it contains. This allows client modules to communicate with each other through the intermediary while knowing nothing about each other.

The intermediary core may either know about the client modules and manage them (as, for example, in the Apache architecture), or it may be completely, or almost completely, independent and know nothing about the clients. In essence, this is the approach implemented in the Model-View-Controller (MVC) “pattern”, where many user interfaces that work synchronously and know nothing about each other interact with one Model (which is the core of the application and the common data store), while the Model knows nothing about them. Nothing prevents connecting other auxiliary modules to the common model as well and synchronizing them in the same way, not just interfaces.
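A sketch of the “one with all” idea, assuming a hypothetical `Core` that stores shared state and notifies subscribers by topic. Client modules publish and subscribe only through the core, so they never reference each other, and the core knows them only as callbacks.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Any], None]


class Core:
    """Shared data store plus a minimal publish/subscribe bus."""

    def __init__(self) -> None:
        self.state: Dict[str, Any] = {}
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, value: Any) -> None:
        # Store the new value, then notify everyone interested in the topic.
        self.state[topic] = value
        for handler in self._subscribers[topic]:
            handler(value)


core = Core()
log: List[str] = []
# Two independent "modules" that react to the same data.
core.subscribe("score", lambda v: log.append(f"ui shows {v}"))
core.subscribe("score", lambda v: log.append(f"sound plays for {v}"))
# A third module changes the data without knowing who listens.
core.publish("score", 10)
print(core.state, log)
```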

This idea is also widely used in game development, where independent modules responsible for graphics, sound, physics, and controls are synchronized with each other through the game core (model), which stores all the data about the state of the game and its characters. Unlike MVC, in games the modules are synchronized with the core (model) not through the “Observer” pattern but by a timer, which is an interesting architectural solution in itself and very useful for programs with animation and moving graphics.
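The timer-driven alternative can be sketched as a fixed-step loop, assuming hypothetical `physics` and `render` modules: instead of being notified, each module reads or updates the shared world state on every tick. A real game would drive this from its frame timer; here the ticks are simulated.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class World:
    """Shared game state (the 'model' held by the core)."""
    x: float = 0.0
    speed: float = 2.0
    frames: List[str] = field(default_factory=list)


def physics(world: World, dt: float) -> None:
    # Updates state; knows nothing about rendering.
    world.x += world.speed * dt


def render(world: World) -> None:
    # Reads state; knows nothing about physics.
    world.frames.append(f"x={world.x:.1f}")


def run(world: World, ticks: int, dt: float = 0.5) -> None:
    """Fixed-step loop: on every tick, each module syncs with the shared state."""
    for _ in range(ticks):
        physics(world, dt)
        render(world)


world = World()
run(world, ticks=3)
print(world.frames)  # ['x=1.0', 'x=2.0', 'x=3.0']
```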

5. Law of Demeter

The Law of Demeter prohibits implicit dependencies: "Object A should not be able to directly access object C if object A has access to object B and object B has access to object C." (See the Java example.)

This means that all dependencies in the code must be “explicit”: classes/modules may use only “their own” dependencies and should not reach through them to others. In short, the principle is formulated as: "Talk only to your immediate friends, not to friends of friends." This achieves lower coupling, as well as greater clarity and transparency of the design.

The Law of Demeter implements the already mentioned “principle of least knowledge”, which underlies loose coupling: an object/module should know as few details as possible about the structure and properties of other objects/modules and anything else, including its own subcomponents. An analogy from life: if you want a dog to run, it is foolish to command its paws; it is better to give the command to the dog, and it will take care of its paws itself.
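The dog analogy translated into code (class names are illustrative): the caller talks only to `Dog`, its direct “friend”, and the dog coordinates its own paws. The commented-out line shows the kind of access the Law of Demeter forbids.

```python
from typing import List


class Paw:
    def __init__(self) -> None:
        self.steps = 0

    def step(self) -> None:
        self.steps += 1


class Dog:
    def __init__(self) -> None:
        self._paws: List[Paw] = [Paw() for _ in range(4)]

    def run(self, strides: int) -> None:
        # The dog manages its own subcomponents.
        for _ in range(strides):
            for paw in self._paws:
                paw.step()

    def total_steps(self) -> int:
        return sum(p.steps for p in self._paws)


dog = Dog()
# Violation: for paw in dog._paws: paw.step()   (talking to a friend's friend)
dog.run(strides=3)  # OK: talk only to the direct friend
print(dog.total_steps())  # 12
```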

6. Composition instead of inheritance

One of the strongest links between objects is inheritance, so, where possible, it should be avoided and replaced with composition. This topic is well covered in Herb Sutter's article “Prefer Composition to Inheritance”.

In this context, I can only advise paying attention to the Delegation pattern (Delegation/Delegate) and to the Component pattern, which came from game development and is described in detail in the book “Game Programming Patterns” (the corresponding chapter of this book is available in English and in translation).
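Composition and delegation in a short sketch with hypothetical game-style components: instead of subclassing into `Walker` and `Flyer` entity types, an entity holds a movement component and delegates to it, so behavior can be swapped at run time.

```python
from typing import Protocol


class Movement(Protocol):
    def move(self, position: int) -> int: ...


class Walk:
    def move(self, position: int) -> int:
        return position + 1


class Fly:
    def move(self, position: int) -> int:
        return position + 3


class Entity:
    """Has a movement component instead of being a walker/flyer subclass."""

    def __init__(self, name: str, movement: Movement) -> None:
        self.name = name
        self.position = 0
        self.movement = movement

    def update(self) -> None:
        # Delegation: the entity forwards the work to its component.
        self.position = self.movement.move(self.position)


bird = Entity("bird", Walk())
bird.update()          # position 1
bird.movement = Fly()  # behavior swapped without a new subclass
bird.update()          # position 4
print(bird.position)   # 4
```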

What to read

Articles on the Internet:

- Design patterns for decoupling (a small, useful chapter from the UML Tutorial);

- A little bit about architecture;

- Patterns For Large-Scale JavaScript Application Architecture;

- Loose coupling of components in JavaScript;

- How to write testable code: an article that clearly shows that the criteria for testable code and for good design are the same;

- Martin Fowler's website.

A wonderful resource is The Architecture of Open Source Applications, where "the authors of four dozen open source applications explain how the programs they created are structured and how they were built. What are their main components? How do they interact? And what did their creators learn during development? In answering these questions, the authors of the articles collected in these books give you a unique insight into how they create their programs." One of the articles was published in full on Habr: "Scalable web architecture and distributed systems".

Interesting solutions and ideas can be found in game development materials. Game Programming Patterns is a large site with detailed descriptions of many patterns and examples of applying them to game development (it turns out there is already a translation of it, “Game Programming Templates”; thanks to strannik_k for the link). The article "Flexible and scalable architecture for computer games" (and its original) may also be useful. Just keep in mind that the author, for some reason, calls composition the “Observer” pattern.

Regarding design patterns:

- An interesting piece: "Thoughts on design patterns";

- A convenient site with brief descriptions and diagrams of all design patterns: “Review of design patterns”;

- A site based on the Gang of Four book, where all the patterns are described in great detail: “Design Patterns [Patterns]”.

There are also the GRASP principles/patterns described by Craig Larman in the book "Applying UML and Patterns", but they confuse more than they clarify. There is a short overview and discussion on Habr (the most valuable part is in the comments).

And, of course, the books:

- Martin Fowler, “Patterns of Enterprise Application Architecture”;

- Steve McConnell, “Code Complete”;

- The Gang of Four (GoF), “Design Patterns: Elements of Reusable Object-Oriented Software”.

Source: https://habr.com/ru/post/276593/
