New leader in price-performance among storage systems?

If you are interested in data storage systems, the Storage Performance Council (SPC) site is probably familiar to you. Many manufacturers test their systems according to its accepted methodology and publish the results. As with any synthetic benchmark, one can criticize both the methodology and the accuracy of the published prices, but at the moment this is perhaps the most objective open source of data on storage performance.

The recently published test results for DataCore SANsymphony-V 10 demonstrate a serious breakthrough for software-defined storage (SDS).

The point is not the absolute performance: with a score of about 450 thousand IOPS, the tested system fell outside the top ten, although that is far from a poor result. The breakthrough came in an equally important indicator, the cost of a single I/O operation ($/IOPS): the manufacturer reached $0.08/IOPS, that is, 8 cents (!) per IOPS. This is an excellent result, considering that the closest competitor (Infortrend EonStor DS 3024B) is three times worse at $0.24/IOPS. For most classic storage systems, the figure is several times higher still.


In addition, at 100% load the response time was 0.32 ms, which is also remarkable: for many all-flash storage systems, anything under 1 ms is considered quite acceptable.

The low cost per IOPS is, of course, a consequence of the system's very affordable price: the whole set of hardware and licenses costs $38,400 (after a fairly modest discount). The cost of the solution is itemized in the report itself, and anyone can read it.
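To make the metric concrete, here is a minimal sketch of the $/IOPS arithmetic. It uses only the rounded figures quoted in this article (not the exact values from the report), so it only approximates the published $0.08; the function name `cost_per_iops` is a hypothetical one chosen for illustration.

```python
def cost_per_iops(total_price_usd: float, iops: float) -> float:
    """Return the price-performance metric in dollars per IOPS."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return total_price_usd / iops


# Rounded figures from the article: $38,400 total price, "about 450 thousand" IOPS.
datacore_usd_per_iops = cost_per_iops(38_400, 450_000)
print(f"DataCore: ~${datacore_usd_per_iops:.3f} per IOPS")

# Ratio between the two published $/IOPS values quoted above.
print(f"Infortrend vs DataCore: {0.24 / 0.08:.1f}x")
```

With the rounded inputs the result is about $0.085 per IOPS, which is in line with the published $0.08 given that the IOPS figure here is only approximate.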

So, has the time come for new technologies, and should all the old storage systems be retired and replaced with SDS?

At first glance, yes: it will be very difficult for competitors to match this result (if it is possible at all with existing technologies). SANsymphony-V is a software solution that runs inside the server, so it does not require any switching (FC/Ethernet) and avoids all the associated delays. In addition, version 10 implements multi-threaded parallel access to data (parallel I/O), where modern multi-core processors offer a noticeable advantage. (Why multithreaded access becomes relevant can be read here.)

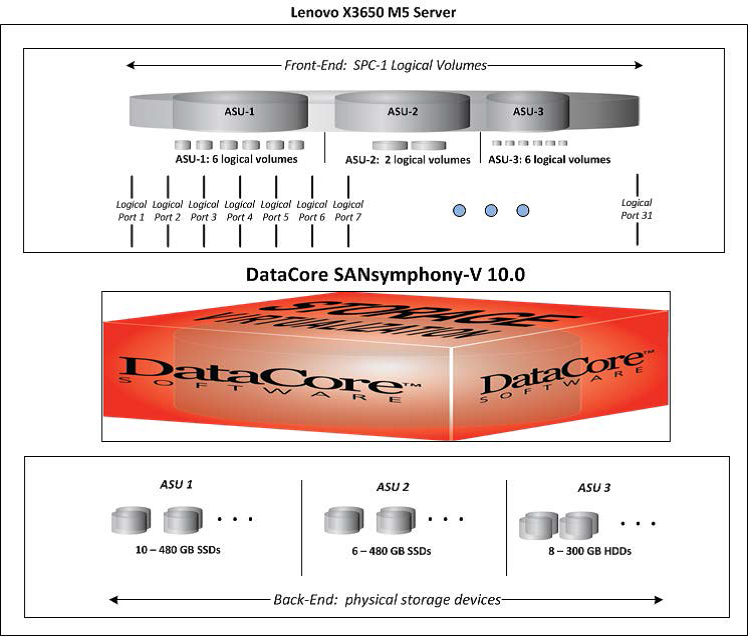

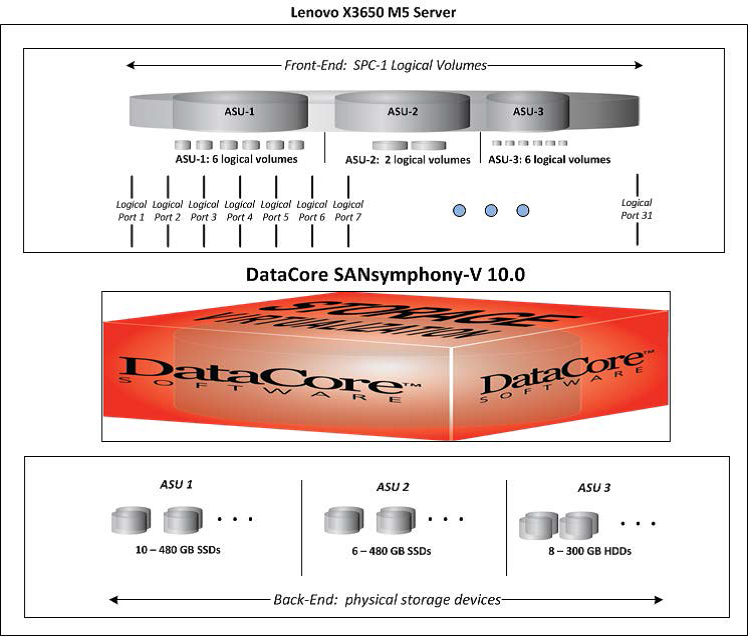

But let's take a closer look at the configuration that was tested. It is a single Lenovo x3650M5 server with two Intel Xeon E5-2695v3 processors and 544 GB of RAM (of which just under 409 GB was allocated to SANsymphony-V). Data was placed on 16 SSDs and 8 HDDs with a total capacity of 10 TB. The actual usable capacity was 2.9 TB (29% of the total “raw” capacity).

The performance obtained for the ASU-3 partition (placed on conventional HDDs) is quite interesting: more than 120 thousand IOPS, which unequivocally indicates that the data was actually served from the cache (of which the system had plenty). I therefore do not really understand why these disks were put in the system at all, unless it was to demonstrate that a hybrid solution is possible.
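The cache argument can be checked with a quick back-of-the-envelope calculation. The sketch below divides the ASU-3 figure by the number of HDDs and compares it with a rough per-disk ceiling. The value of 200 random IOPS per HDD is an assumed rule of thumb, not a number from the report, and all names are hypothetical.

```python
ASU3_IOPS = 120_000      # "more than 120 thousand IOPS" quoted in the article
HDD_COUNT = 8            # HDDs in the tested configuration
ASSUMED_HDD_IOPS = 200   # ASSUMPTION: rough ceiling for one HDD under random I/O


def implied_iops_per_disk(total_iops: float, disks: int) -> float:
    """IOPS each disk would have to deliver if nothing came from cache."""
    return total_iops / disks


per_disk = implied_iops_per_disk(ASU3_IOPS, HDD_COUNT)
print(f"Implied load per HDD: {per_disk:.0f} IOPS")
print(f"That is {per_disk / ASSUMED_HDD_IOPS:.0f}x the assumed per-disk ceiling")
```

Each HDD would have to sustain about 15,000 IOPS, far beyond what a spinning disk can deliver under random access, so the requests must have been served from RAM.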

As for the architecture, the absence of any fault tolerance at the level of the “storage system” as a whole is striking. The data is mirrored inside a single server, but the server itself is not protected by anything. How much will performance change once we want proper fault tolerance? This is where the network protocols we so successfully got rid of earlier come back into play, and the question of delays returns to the agenda.

Storage optimization features such as compression and deduplication were not used. Of course, no one in their right mind would enable them during a benchmark, but it is worth remembering that in real life they can be useful. Then again, the already low cost of both a single IOPS and the system as a whole may make it possible to do without them.

What do competitors offer? Take the recently published result for the IBM FlashSystem 900: 440 thousand IOPS, a 0.49 ms response time at 100% load (about 50% worse than DataCore) and as much as $1.61/IOPS (20 times more expensive than DataCore). But what does the customer get? A full-fledged storage system connected via FC (one FC switch is even included in the quoted price), with basic fault tolerance covering not only the disk modules but also the controllers. With such a classic storage system, the customer does not need to configure and monitor the health of an operating system inside the storage system (in the DataCore case, Windows Server 2008R2 was used).

Another important factor for the user is capacity: the FlashSystem 900 test used 34 TB (50% of the total capacity), which is more than 10 times the capacity in the SANsymphony-V test. Now that hundreds of thousands of IOPS are no longer an unattainable limit, usable capacity is again coming to the fore for a number of solutions, and the difference in the price per gigabyte is not that large. One could argue that SANsymphony-V can also be scaled up in capacity, but there are doubts: the system cache cannot be increased proportionally, and running several DataCore servers in parallel will reduce performance because of the same delays in network protocols and interfaces.
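The price-per-gigabyte remark can be illustrated with a short sketch built only on the rounded figures quoted in this article. Note the assumptions: the IBM total price is not given here, so it is estimated as IOPS multiplied by $/IOPS; decimal units (1 TB = 1000 GB) are assumed; and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageResult:
    name: str
    total_price_usd: float
    usable_tb: float

    @property
    def usd_per_gb(self) -> float:
        # ASSUMPTION: decimal units, 1 TB = 1000 GB.
        return self.total_price_usd / (self.usable_tb * 1000)


# DataCore: total price and usable capacity as quoted in the article.
datacore = StorageResult("DataCore SANsymphony-V 10", 38_400, 2.9)

# IBM: total price is an ESTIMATE derived from the rounded figures
# (440 thousand IOPS at $1.61/IOPS), not a value taken from the report.
ibm_price_estimate = 440_000 * 1.61
ibm = StorageResult("IBM FlashSystem 900", ibm_price_estimate, 34)

for result in (datacore, ibm):
    print(f"{result.name}: ~${result.usd_per_gb:.2f} per usable GB")

print(f"$/GB ratio (IBM / DataCore):   {ibm.usd_per_gb / datacore.usd_per_gb:.1f}x")
print(f"$/IOPS ratio (IBM / DataCore): {1.61 / 0.08:.1f}x")
```

Under these assumptions the gap per usable gigabyte comes out well under 2x, compared with roughly 20x per IOPS, which is why the comparison looks so different once capacity is taken into account.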

The DataCore solution is certainly interesting and can be used successfully to build software-defined storage for tasks that require maximum performance. The cost of the solution, especially in these difficult times, can be a deciding factor for many customers. But when designing a solution, you should not forget about fault tolerance, and you should compare the prices of the various options correctly, taking into account all the features of the system as a whole and not just abstract performance or cost per IOPS.

Trinity experts are, of course, always happy to size the system, select and compare implementation options, and prepare the project. Our goal is to help customers choose the most appropriate solution for their tasks.

Other Trinity articles can be found on the Trinity blog and hub. Subscribe!


Source: https://habr.com/ru/post/276583/
