Connectivity components in a dynamic graph in one pass

People meet, people quarrel, add and remove friends in social networks. This post is about mathematics and algorithms, beautiful theory, love and hate in this unstable world. This post is about searching for connected components in dynamic graphs.

The big world generates big data. So a big graph fell on our head. So big that we can keep in memory its vertices, but not edges. In addition, there are updates regarding the graph - which edge to add, which one to delete. We can say that every such update we see for the first and last time. In such conditions it is necessary to find the components of the connectivity

Search in depth / width here will not pass simply because the entire graph in the memory does not hold. A system of disjoint sets could greatly help if the edges in the graph were added. What to do in the general case?

')

Task . Dan undirected graph

The solution of the problem consists of three ingredients.

- Incidence matrix as a representation.

- The method as an algorithm.

Sampling as optimization.

You can see the implementation on the githaba: link .

Incidence Matrix as Representation

The first data structure for the storage of the graph will be extremely non-optimal. We will take the incidence matrix

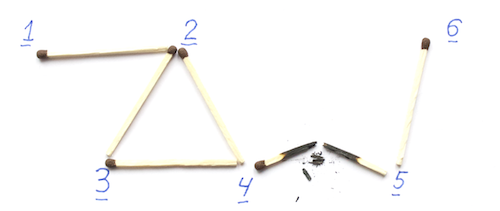

As an example, look at the graph in the picture below.

For him, the incidence matrix will look like this.

The naked eye can see that this view has a serious drawback - the size

There is also an implicit advantage. If you take a lot of vertices

For example, if you take a lot

Non-zero values are at the edges

Shrinking the graph as an algorithm

Understand what a rib is. Here we have two peaks

An interesting feature: in terms of the matrix of incidence, to tighten the edge

Now the algorithm. Take a graph and for each non-isolated vertex we choose a neighbor.

We pull the corresponding edges.

Repeat iteration

Note that after each iteration of the connection of the connected components of the new graph are one-to-one mapped to the components of the old. We can even mark which vertices have been merged into the resulting vertices, in order to restore the answer later.

Note also that after each iteration, any connected component of at least two vertices is reduced at least two times. This is natural, since at least two vertices of the old one were merged into each vertex of the new component. So after

We search through all isolated vertices and restore the answer in the history of mergers.

-sampling as optimization

-sampling as optimization

Everything would be great, but the above algorithm works in terms of time and memory.

From the sketch you need three properties.

First, compactness. If we build a sketch for a vector

Secondly, sampling. At each iteration of the algorithm, we need to choose a neighbor. We want a way to get the index of at least one non-zero element, if there is one.

Third, linearity. If we built for two vectors

We will use the solution of the problem.

Task. Dan vector zero vector

1-sparse vector

To begin, we will solve a simpler problem. Suppose we have a guarantee that the final vector contains exactly one nonzero position. We say that such a vector is 1-sparse. We will support two variables

Denote the desired position by

You can probabilistically check whether the vector is 1-sparse. For this we take a prime number

Obviously, if the vector is really 1-sparse, then

and he will pass the test. Otherwise, the probability of passing the test is not more than

Why?

In terms of a polynomial, a vector passes the test if the values of a polynomial

%20%3D%20%5Csum_i%20a_i%20%5Ccdot%20z%5Ei%20-%20S_0%20%5Ccdot%20z%5E%7BS_1%20%2F%20S_0%7D)

randomly selected point equals zero. If the vector is not 1-sparse, then

equals zero. If the vector is not 1-sparse, then ) is not identically zero. If we passed the test, we guessed the root. The maximum degree of a polynomial is

is not identically zero. If we passed the test, we guessed the root. The maximum degree of a polynomial is  means no more roots

means no more roots  so the probability of guessing them is no more

so the probability of guessing them is no more  .

.

randomly selected point

If we want to increase the accuracy of verification to an arbitrary probability

-frame vector

-frame vector

Now we will try to solve the problem for

Take a random 2-independent hash function

More about hash functions

The algorithms have two fundamentally different approaches to hash functions. The first is based on the fact that the data is less random, and our favorite hash function will mix them correctly. The second is that an evil adversary can pick up data, and the only chance to save the algorithm from unnecessary computation and other problems is to choose a hash function randomly. Yes, sometimes it can work poorly, but we can measure how bad it is. Here we are talking about the second approach.

The hash function is selected from the family of functions. For example, if we want to stir all the numbers from before

before  you can take random

you can take random  and

and  and determine the hash function

and determine the hash function

%20%3D%20a%20%5Ccdot%20x%20%2B%20b%20%5Ctext%7B%20mod%20%7D%20p.)

Functions for all possible![a, b \ in [p]](http://tex.s2cms.ru/svg/a%2C%20b%20%5Cin%20%5Bp%5D) set the family of hash functions. Randomly select the hash function - it is essentially random to select those

set the family of hash functions. Randomly select the hash function - it is essentially random to select those  and

and  . They are usually chosen equiprobably from the set

. They are usually chosen equiprobably from the set ![[p]](http://tex.s2cms.ru/svg/%5Bp%5D) .

.

The remarkable property of the example above is that any two different keys are randomly distributed independently. Formally, for any different![x_1, x_2 \ in [p]](http://tex.s2cms.ru/svg/x_1%2C%20x_2%20%5Cin%20%5Bp%5D) and any, possibly the same,

and any, possibly the same, ![y_1, y_2 \ in [p]](http://tex.s2cms.ru/svg/y_1%2C%20y_2%20%5Cin%20%5Bp%5D) probability

probability

![\ textbf {Pr} \ left [h (x_1) = y_1 \ text {and} h (x_2) = y_2 \ right] = p ^ {- 2}.](http://tex.s2cms.ru/svg/%5Ctextbf%7BPr%7D%5Cleft%5B%20h(x_1)%20%3D%20y_1%20%5Ctext%7B%20and%20%7D%20h(x_2)%20%3D%20y_2%20%5Cright%5D%20%3D%20p%5E%7B-2%7D.)

This property is called 2-independence. Sometimes, the probability may not be , but

, but  where

where  some kind of reasonably small value.

some kind of reasonably small value.

There is a 2-independence generalization - -independence. This is when the function distributes not 2,

-independence. This is when the function distributes not 2,  arbitrary keys regardless. Polynomial hash functions with

arbitrary keys regardless. Polynomial hash functions with  random coefficients have this property.

random coefficients have this property.

%20%3D%20%5Csum_%7Bi%20%3D%200%7D%5E%7Bk%20-%201%7D%20a_i%20%5Ccdot%20x%5Ei%20%5Ctext%7B%20mod%20%7D%20p)

Have Independence is one unpleasant property. With growth

Independence is one unpleasant property. With growth  function takes up more memory. This property does not depend on any particular implementation, but simply a general information property. Therefore, the less independence we can do, the easier it is to live.

function takes up more memory. This property does not depend on any particular implementation, but simply a general information property. Therefore, the less independence we can do, the easier it is to live.

The hash function is selected from the family of functions. For example, if we want to stir all the numbers from

Functions for all possible

The remarkable property of the example above is that any two different keys are randomly distributed independently. Formally, for any different

This property is called 2-independence. Sometimes, the probability may not be

There is a 2-independence generalization -

Have

Take a hash table size

It can be calculated that for a single cell, the probability that there will be a collision at least along two non-zero coordinates will be no more than

Why?

The probability that another element does not fall into the cell to us ) . The probability that everyone will not get to us:

. The probability that everyone will not get to us: %5E%7Bs-%201%7D) . The probability that at least someone will fall

. The probability that at least someone will fall %5E%7Bs%20-%201%7D) . You may notice that this function is monotonically increasing. I'll just bring a picture here:

. You may notice that this function is monotonically increasing. I'll just bring a picture here:

In the limit, the function gives the value .

.

In the limit, the function gives the value

Suppose we want to restore all coordinates with probability of success.

The final algorithm is as follows. Take

Every update

Upon completion, we extract from all successfully completed 1-decoders by coordinate and merge them into one list.

The maximum in the general table will be

Another hash for general case

Last step in

Let's say that the update

Run the algorithm for

Find the first level with the highest

There are several points that I want to briefly pay attention to.

First, how to choose

This determines the choice

Secondly, from

So we learned how to choose a random non-zero position according to a uniform distribution.

Mix, but do not shake

It remains to understand how to combine everything. We know that in the graph

When adding an edge

When the ribs are over and we are asked about the components, we will run the tightening algorithm. On

Everything. In the end, just go through the isolated peaks, the history of mergers restore the answer.

Who is to blame and what to do again

In fact, the topic of this post has arisen for a reason. In January, Ilya Razenshtein (@ilyaraz), a graduate student at MIT, came to us at Peter's CS Club and talked about algorithms for big data. There was a lot of interesting (see course description ). In particular, Ilya managed to tell the first half of this algorithm. I decided to finish the job and tell the whole result on Habré.

In general, if you are interested in mathematics related to computational processes aka Theoretical Computer Science, come to our Academic University in the direction of Computer Science . Inside they will teach complexity, algorithms and discrete mathematics. The real science will start from the first semester. You can get out and listen to courses in the CS Club and CS Center . If you are not from St. Petersburg, there is a hostel. A great chance to move to the Northern Capital.

Apply , get ready and come to us.

Sources

Source: https://habr.com/ru/post/276563/

All Articles