Migrating data from various types of storage using EMC VPLEX and EMC RecoverPoint technologies

Migrating a data center is a non-trivial and time-consuming task, although with well-established, tested processes it is quite manageable. Last summer I had the opportunity to work on the migration of two data centers, and since I deal mainly with SAN, I'll describe how we migrated the storage.

In the source data center, the customer had IBM and NetApp storage systems; the destination had EMC VNX. The task was to migrate all hosts, both physical and virtual, to the new location quickly and with minimal downtime: the customer was a very large company, and every minute of downtime was critical. The best fit turned out to be two EMC technologies, VPLEX and RecoverPoint.

After much deliberation, we arrived at the following migration plan:


Encapsulation

1. The host is shut down;

2. The switch zoning is changed from the XIVs to the VPLEX;

3. The required Storage group is created on the VPLEX;

4. The same is done on the destination side;

5. A replication pair is created in RecoverPoint;

6. Asynchronous replication is started;

7. The host is powered back on.

Migration

1. The host is shut down;

2. Replication is stopped, and direct access to the destination copy is enabled;

3. The host is physically transported to the new data center;

4. The host is powered on.

If everything is done correctly, the host will not even notice that it has been moved; all that remains is for the OS to bring the required LUNs back online.

As you can see, the plan is not particularly complicated. While carrying it out, however, we ran into plenty of pitfalls, such as inconsistent data in the destination data center. In this article I will try to give detailed instructions for the migration itself, together with a working procedure that will help you avoid our mistakes. So let's go!

Note that I describe this procedure using XIV and VNX as examples, but it applies to any storage systems.

Encapsulation

Presenting volumes from XIV to VPLEX. Connect to the XIV, find all the volumes used by the host being migrated, and map them to the VPLEX. I don't think this will cause any difficulties. Needless to say, zoning must already be configured so that the XIV can see the VPLEX.

Next, we claim the volumes on the VPLEX. The CLI is the most convenient tool for this:

cd /clusters/cluster-1/storage-elements/storage-arrays/<IBM-XIV-SerialNumber1>

cd /clusters/cluster-1/storage-elements/storage-arrays/<IBM-XIV-SerialNumber2>

cd /clusters/cluster-1/storage-elements/storage-volumes/

claim --storage-volumes VPD83T2:<WWN_Volume> --name <Name_Volume>

Next, create the virtual volumes on the source VPLEX.
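The claim step above takes one command per volume, which gets tedious for a host with many LUNs. Below is a minimal Python sketch that generates those commands from a list of (WWN, name) pairs, reusing the `claim --storage-volumes ... --name ...` form shown above. The function `claim_commands`, the example WWNs and the volume names are hypothetical. The sketch also assumes the VPLEX lists identifiers as lowercase hex without colons, and it keeps the article's `VPD83T2` prefix as a parameter. Compare the output against the identifiers your VPLEX actually shows before pasting anything into the CLI.

```python
# Minimal sketch: generate VPLEX CLI claim commands for encapsulated volumes.
# Command syntax mirrors the article; names and WWNs below are hypothetical.
from typing import Iterable


def claim_commands(volumes: Iterable[tuple[str, str]],
                   prefix: str = "VPD83T2") -> list[str]:
    """Return a cd command plus one claim command per (wwn, name) pair."""
    commands = ["cd /clusters/cluster-1/storage-elements/storage-volumes/"]
    for wwn, name in volumes:
        # Assumption: VPLEX lists the identifier as lowercase hex, no colons.
        ident = wwn.replace(":", "").lower()
        commands.append(f"claim --storage-volumes {prefix}:{ident} --name {name}")
    return commands


if __name__ == "__main__":
    # Hypothetical volumes of the host being migrated.
    volumes = [
        ("00:17:38:00:00:01:00:2A", "srv01_xiv_data01"),
        ("00:17:38:00:00:01:00:2B", "srv01_xiv_data02"),
    ]
    print("\n".join(claim_commands(volumes)))
```

Generating the commands also gives you a text file you can review (or diff against the XIV volume list) before anything touches the VPLEX.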

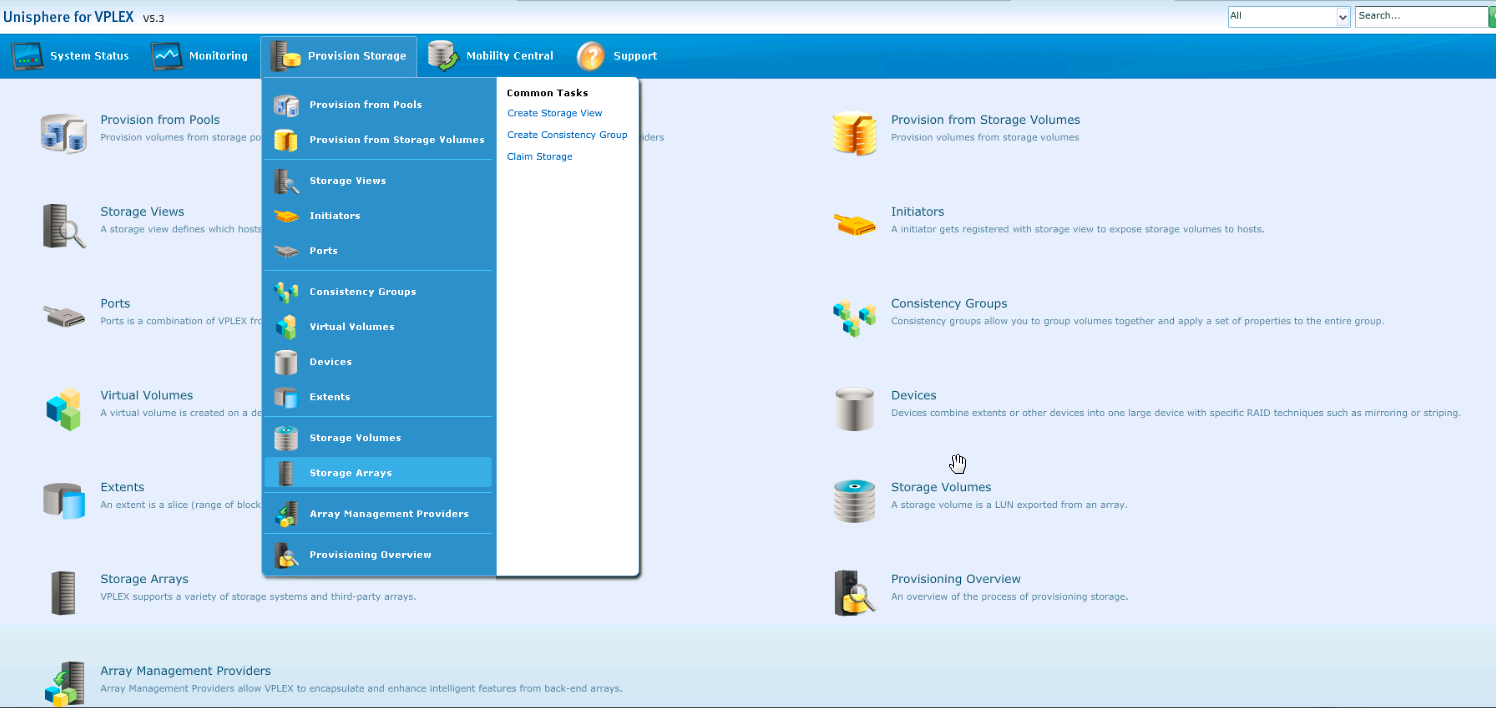

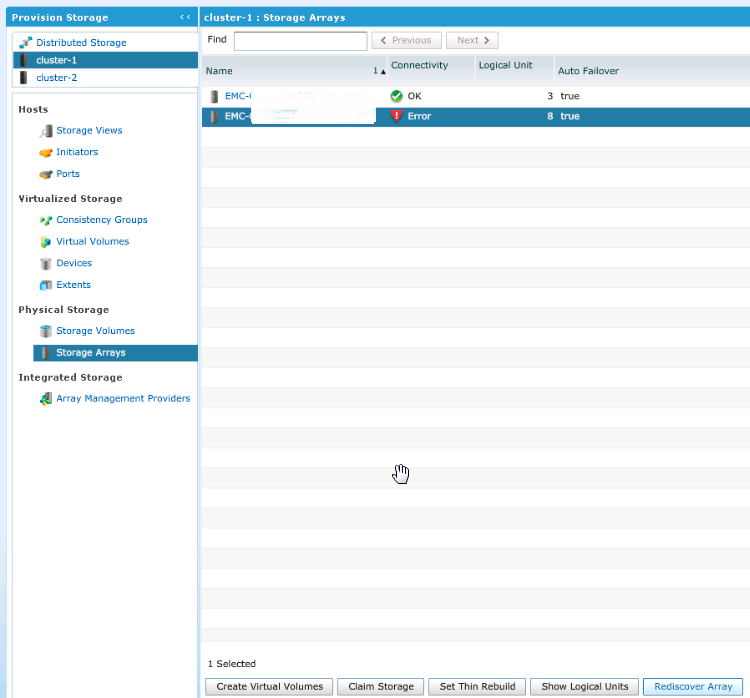

Go to Provision Storage -> Storage Array:

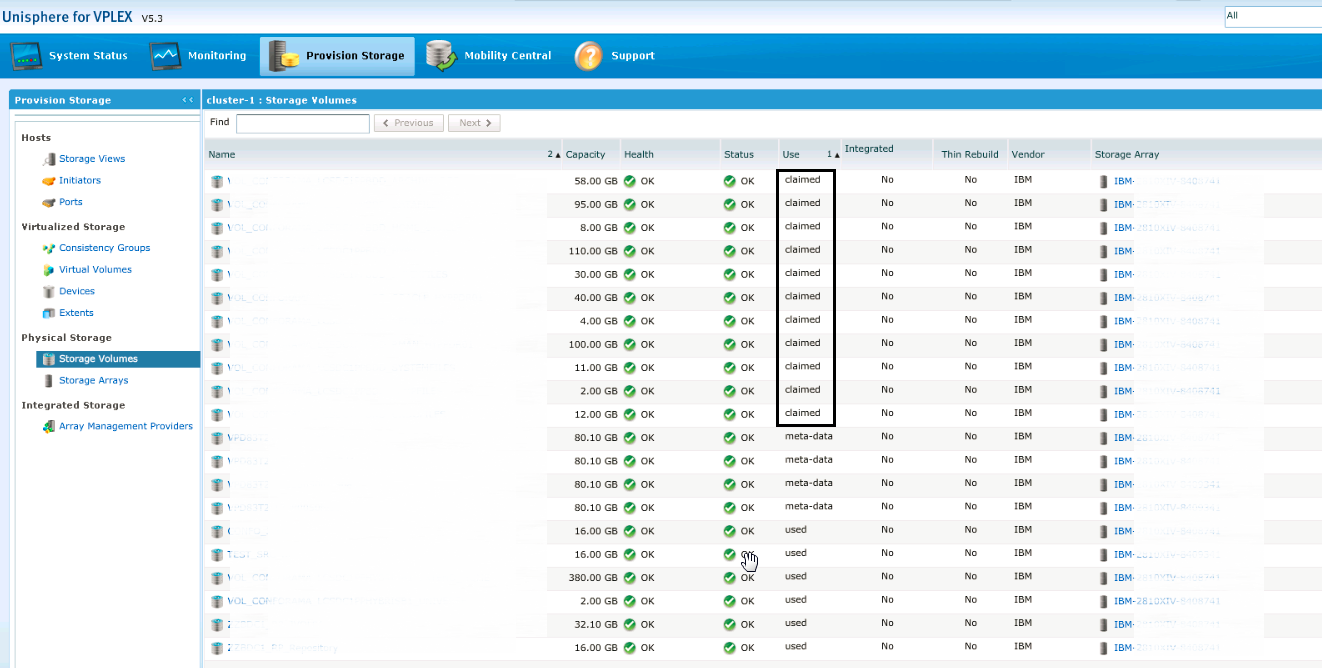

Then, on the “Storage Volumes” tab, check that all our LUNs are presented (they should be in the Claimed state).

Go to “Extents”, click “Create”, then select all of the host's LUNs and add them.

Go to “Devices”. Here we create devices backed by our extents. Click “Create” and specify the device type; in our case we need 1:1 Mapping:

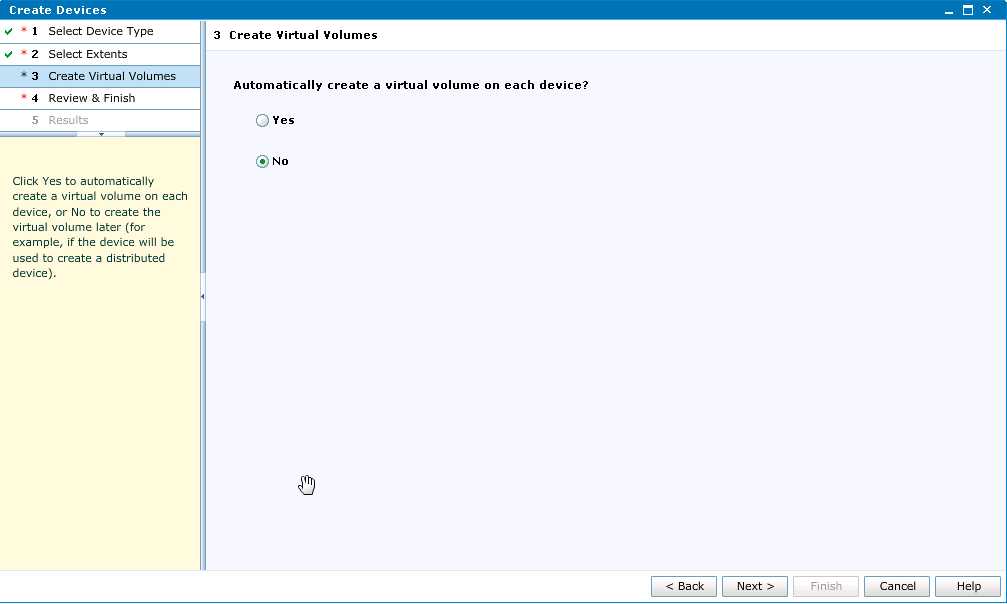

Add all the extents created in the previous step and disable automatic creation of virtual volumes.

Now create the virtual volumes: go to the corresponding menu item, click Create from Devices, and select all the devices created in the previous step.

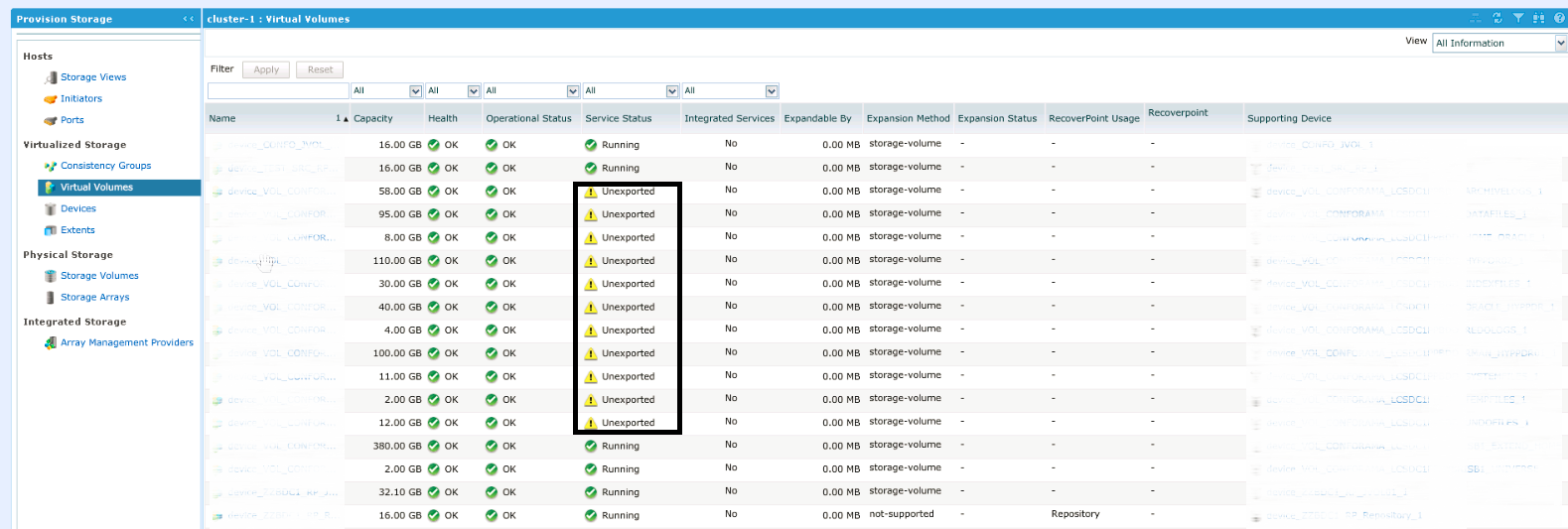

Note that after they are added, the new virtual volumes are still in the Unexported state.

Now for zoning. At the moment all our LUNs are presented to the VPLEX rather than to the host, so we need to reconfigure the zoning so that the host can see the VPLEX and, through it, these LUNs. This must be done in both fabrics:

SAN01

alishow | grep <ServerName>

zonecreate "<ServerName>_VPLEX_1E1_A0_FC00","<AliasServerName>;VPLEX_1E1_A0_FC00"

cfgadd "CFG_20160128_07h05FR_SCH","<ServerName>_VPLEX_1E1_A0_FC00"

zonecreate "<ServerName>_VPLEX_1E1_B0_FC00","<AliasServerName>;VPLEX_1E1_B0_FC00"

cfgadd "CFG_20160128_07h05FR_SCH","<ServerName>_VPLEX_1E1_B0_FC00"

cfgsave

cfgenable CFG_20160128_07h05FR_SCH

cfgactvshow | grep <ServerName>

Do the same in the second fabric, SAN02.
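The zone name passed to `zonecreate` must be exactly the one later passed to `cfgadd`, and the configuration name must not pick up stray spaces. For that reason it can help to generate these commands rather than type them. The sketch below is hypothetical: the function `zoning_commands` and the host, alias and configuration names are illustrations. It also assumes that the FC00 ports are zoned in SAN01 and the FC01 ports in SAN02, an inference from the four-port list used later in the storage view. Adapt all of this to your fabrics.

```python
# Minimal sketch: generate the Brocade FOS zoning commands for one fabric so
# that zonecreate and cfgadd always use the same zone name.
# All host, alias and configuration names below are hypothetical.


def zoning_commands(server: str, alias: str, cfg: str,
                    vplex_ports: list[str]) -> list[str]:
    """Return the command sequence for one fabric.

    server      -- host name used as the zone-name prefix
    alias       -- existing alias of the host HBA in this fabric
    cfg         -- name of the zone configuration to extend
    vplex_ports -- aliases of the VPLEX front-end ports in this fabric
    """
    cmds = [f"alishow | grep {server}"]
    for port in vplex_ports:
        zone = f"{server}_{port}"
        cmds.append(f'zonecreate "{zone}","{alias};{port}"')
        cmds.append(f'cfgadd "{cfg}","{zone}"')
    cmds += ["cfgsave", f"cfgenable {cfg}", f"cfgactvshow | grep {server}"]
    return cmds


if __name__ == "__main__":
    # Assumption: FC00 ports are zoned in SAN01 and FC01 ports in SAN02;
    # each fabric has its own HBA alias and, possibly, its own config name.
    fabrics = {
        "SAN01": ("srv01_hba0", "CFG_20160128_07h05FR_SCH",
                  ["VPLEX_1E1_A0_FC00", "VPLEX_1E1_B0_FC00"]),
        "SAN02": ("srv01_hba1", "CFG_20160128_07h05FR_SCH",
                  ["VPLEX_1E1_A0_FC01", "VPLEX_1E1_B0_FC01"]),
    }
    for fabric, (alias, cfg, ports) in fabrics.items():
        print(f"--- {fabric} ---")  # label for the reader, not a FOS command
        print("\n".join(zoning_commands("srv01", alias, cfg, ports)))
```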

Now we need to create the host on the VPLEX and present the LUNs to it. Return to the VPLEX management console and go to Initiators. If zoning is configured correctly, we should see two unregistered initiators:

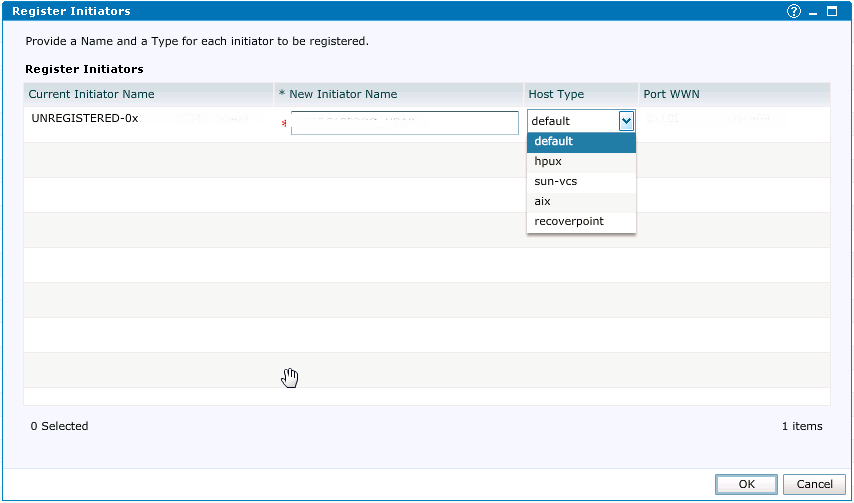

Double-check the WWNs, then click Register. In the next step, set the name of the new initiator (I usually name it <host name>_<HBA number>); the host type in our case is default.
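Checking WWNs by eye is error-prone because the same WWN can appear in different notations, for example colon-separated on the switch and 0x-prefixed on the VPLEX. The sketch below assumes those two notations. It normalizes both sides, confirms that each host HBA appears among the unregistered initiators, and derives names following the <host name>_<HBA number> convention. `normalize_wwn`, `initiator_names`, the `_HBA<n>` suffix format and all WWNs are hypothetical.

```python
# Minimal sketch: compare host HBA WWNs with the unregistered initiators the
# VPLEX shows, and derive initiator names as <host>_HBA<n>.
# Assumption: WWNs arrive either colon-separated or 0x-prefixed.
import re


def normalize_wwn(wwn: str) -> str:
    """Reduce a WWN to 16 lowercase hex digits."""
    digits = re.sub(r"[^0-9a-f]", "", wwn.lower().removeprefix("0x"))
    if len(digits) != 16:
        raise ValueError(f"not a 64-bit WWN: {wwn!r}")
    return digits


def initiator_names(host: str, host_wwns: list[str],
                    unregistered: list[str]) -> dict[str, str]:
    """Map each host HBA WWN to a name, failing if the VPLEX cannot see it."""
    visible = {normalize_wwn(w) for w in unregistered}
    names = {}
    for index, wwn in enumerate(host_wwns):
        key = normalize_wwn(wwn)
        if key not in visible:
            raise LookupError(f"{wwn} not visible on VPLEX; check the zoning")
        names[key] = f"{host}_HBA{index}"
    return names


if __name__ == "__main__":
    # Hypothetical WWNs: as reported by the host, and as listed by the VPLEX.
    host_hbas = ["10:00:00:00:c9:12:34:56", "10:00:00:00:c9:12:34:57"]
    on_vplex = ["0x10000000c9123457", "0x10000000c9123456"]
    for wwn, name in initiator_names("srv01", host_hbas, on_vplex).items():
        print(wwn, name)
```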

Repeat this for the second initiator. That's it: the host now sees the VPLEX, and all that remains is to present the LUNs to it.

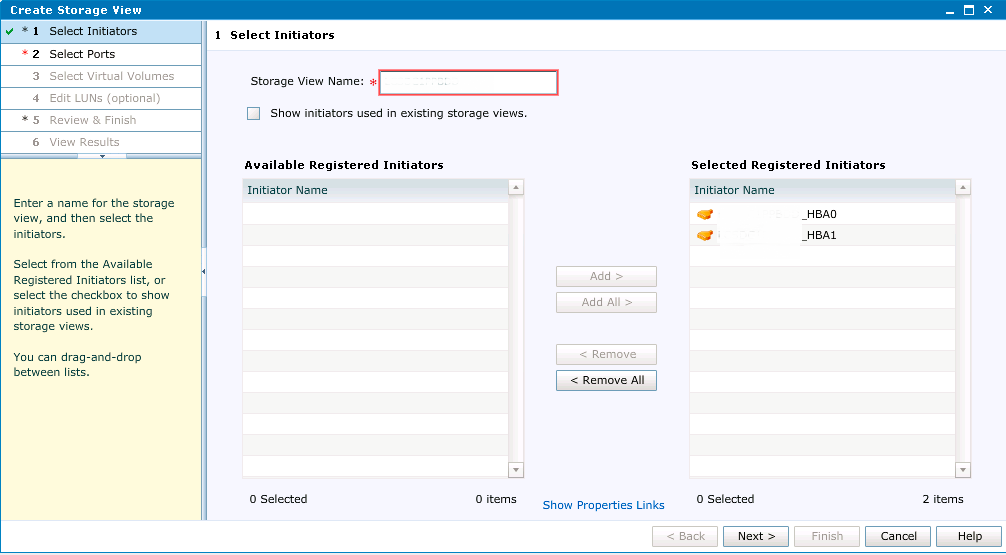

Go to the Storage View and click Create, set the name of the Storage View (usually this is the host name), add initiators:

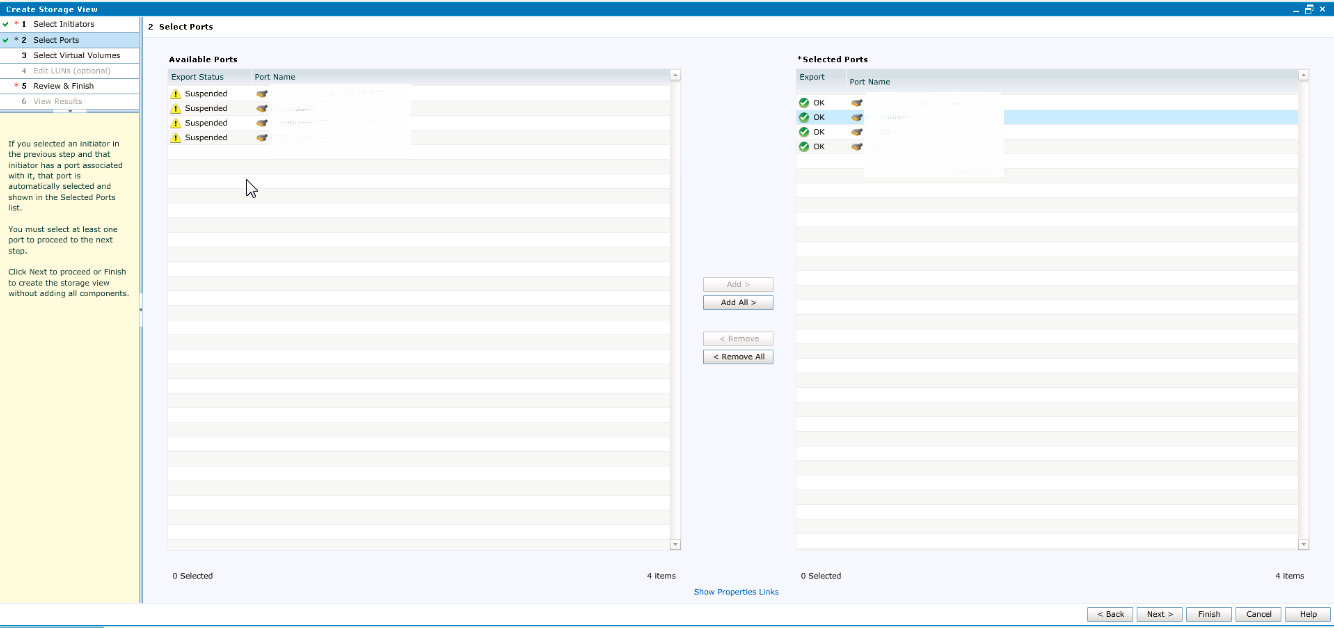

In the next step, we associate the initiators with free ports on the VPLEX side. First, make sure all four ports are listed and selected: A0-FC00, A0-FC01, B0-FC00, and B0-FC01. If any of them is missing, recheck the zoning and perform an FC rescan on the host.
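With two initiators spread across two fabrics, it is easy to miss that one port is visible to neither of them. Below is a tiny check, offered as a hypothetical sketch. Given the VPLEX front-end ports each initiator can see (for example, noted down from the wizard), it reports which of the four required ports are not visible at all. `missing_ports` and its input format are assumptions.

```python
# Minimal sketch: report which of the four VPLEX front-end ports used in this
# storage view are visible to none of the host's initiators.
# The input format (initiator name -> set of visible ports) is an assumption.
REQUIRED_PORTS = {"A0-FC00", "A0-FC01", "B0-FC00", "B0-FC01"}


def missing_ports(seen_by_initiator: dict[str, set[str]]) -> set[str]:
    """Return required ports that no initiator can see."""
    visible = set().union(*seen_by_initiator.values())
    return REQUIRED_PORTS - visible


if __name__ == "__main__":
    # Hypothetical observation: the second HBA only reaches one port.
    seen = {"srv01_HBA0": {"A0-FC00", "B0-FC00"}, "srv01_HBA1": {"A0-FC01"}}
    gaps = missing_ports(seen)
    if gaps:
        print("Not visible:", ", ".join(sorted(gaps)),
              "-> recheck zoning and rescan FC on the host")
```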

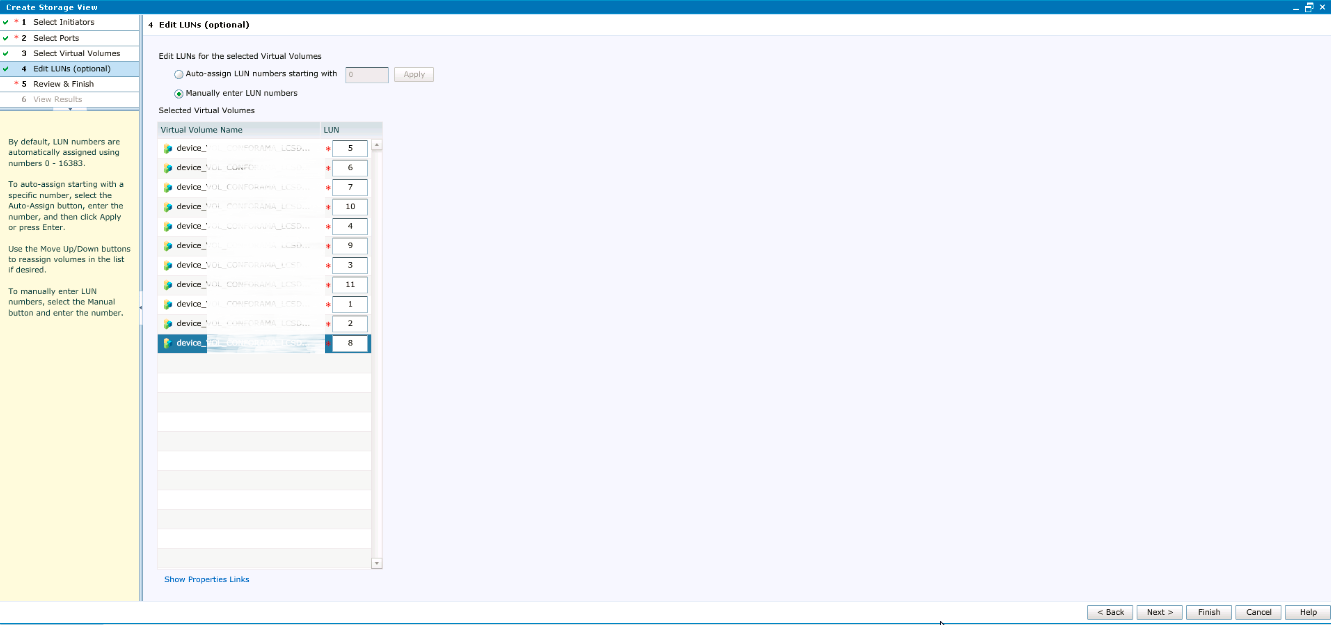

In the next step, select all volumes that we have created for our server, then specify the LUN numbers. Be especially careful with this step: the LUN numbers should match the numbers we had on XIV. Select Manually enter LUN numbers and assign the numbers accordingly.
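A LUN number mismatch is easy to make and hard to spot later, so it is worth comparing the two mappings programmatically. Below is a minimal sketch. It assumes you have exported volume name -> LUN number pairs from the XIV host mapping and from the VPLEX storage view, and that the virtual volumes carry the same names as their source volumes. `lun_mismatches` and the sample data are hypothetical.

```python
# Minimal sketch: verify that every volume keeps its XIV LUN number in the
# VPLEX storage view. Both inputs map volume name -> LUN number and are
# assumed to use the same volume names; the data below is hypothetical.


def lun_mismatches(xiv: dict[str, int], vplex: dict[str, int]) -> list[str]:
    """Return human-readable problems; an empty list means the maps agree."""
    problems = []
    for vol, lun in sorted(xiv.items()):
        if vol not in vplex:
            problems.append(f"{vol}: missing from the storage view")
        elif vplex[vol] != lun:
            problems.append(f"{vol}: XIV LUN {lun}, VPLEX LUN {vplex[vol]}")
    for vol in sorted(vplex.keys() - xiv.keys()):
        problems.append(f"{vol}: in the storage view but not on the XIV")
    return problems


if __name__ == "__main__":
    xiv_map = {"srv01_data01": 1, "srv01_data02": 2}
    view_map = {"srv01_data01": 1, "srv01_data02": 3}
    for line in lun_mismatches(xiv_map, view_map):
        print(line)
```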

Check everything again and click Close. After this step, all the previously used disks can be brought back online on the host; ideally, the host should not notice that it now accesses storage through the VPLEX. Although we have added a new link to the chain, there should be no performance problems: latency may increase slightly, but only slightly. That is a topic for a separate article, though. I have done some research in this area and, if there is interest, I will be happy to share the results.

The source side is almost done; now for the destination. First, go back to the XIV once more and write down the exact size of each LUN. I recommend taking the size in blocks.
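Recording sizes in blocks avoids any ambiguity from rounded GB values. The sketch below compares source and destination block counts volume by volume and converts blocks to GiB for readability. It assumes 512-byte blocks and volumes keyed by the same name on both sides. `size_mismatches`, `blocks_to_gib` and the sample sizes are hypothetical.

```python
# Minimal sketch: compare source and destination LUN sizes in blocks.
# Assumptions: 512-byte blocks, same volume names on both sides,
# hypothetical data in the example.
BLOCK_SIZE = 512  # bytes per block (assumed)


def blocks_to_gib(blocks: int) -> float:
    """Convert a block count to GiB, for readability only."""
    return blocks * BLOCK_SIZE / 2**30


def size_mismatches(source: dict[str, int], dest: dict[str, int]) -> list[str]:
    """List volumes whose destination block count differs from the source."""
    problems = []
    for vol, blocks in sorted(source.items()):
        got = dest.get(vol)
        if got != blocks:
            problems.append(f"{vol}: source {blocks} blocks, destination {got}")
    return problems


if __name__ == "__main__":
    xiv_sizes = {"srv01_data01": 209715200}   # 100 GiB at 512-byte blocks
    vnx_sizes = {"srv01_data01": 209715200}
    print(f"{blocks_to_gib(xiv_sizes['srv01_data01']):.1f} GiB")
    print(size_mismatches(xiv_sizes, vnx_sizes) or "all sizes match")
```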

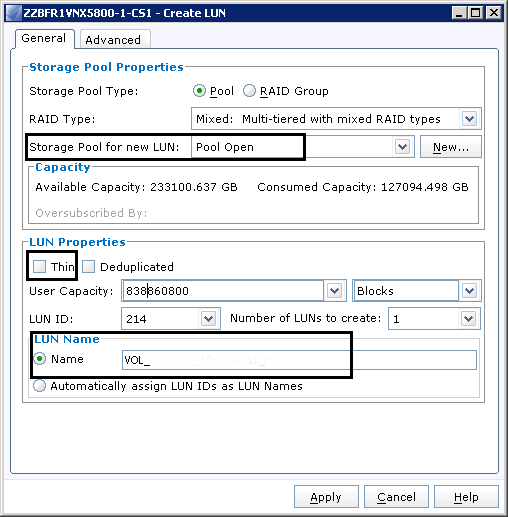

Go to VNX, then Storage -> LUNs -> Create LUN.

Check the LUN settings: in our case the storage pool is Pool Open and the LUN is Thin. Paste in the size and set the name. There are no requirements for the name, so follow your organization's naming conventions.

I assume your VPLEX is already connected to the VNX, zoning is configured, and the Storage Group for the VPLEX has been created on the VNX. So after creating the LUNs, we simply add them to the corresponding VPLEX Storage Group.

The next step is to present the LUNs from the VNX to the VPLEX. You can do this manually, of course, but I use a small script built on an EMC utility. Download Navisphere CLI from EMC and create a simple BAT file:

REM replace FR1 with the name of the first VNX.

REM replace FR2 with the name of the second VNX.

REM replace 0.0.0.0 with the IP address of the first VNX.

REM replace 0.0.0.0 with the IP address of the second VNX.

REM user/user: service/password

rem naviseccli -AddUserSecurity -user sysadmin -password sysadmin -scope 0

del c:\R1.txt

del c:\R2.txt

"C:\EMC\NavisphereCLI\NaviSECCLI.exe" -h 0.0.0.0 getlun -uid -name > c:\R1.txt

"C:\EMC\NavisphereCLI\NaviSECCLI.exe" -h 0.0.0.0 getlun -uid -name > c:\R2.txt

Of course, you will need to adjust the paths in the script. The script produces two files, R1 and R2 (one per site, since we have two locations).
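Before feeding R1.txt and R2.txt to the VPLEX, it can be worth a quick sanity check that every LUN in them has both a UID and a name. The sketch below assumes the usual `getlun -uid -name` layout: a `LOGICAL UNIT NUMBER` line followed by `UID:` and `Name:` lines. It prints each UID without colons next to its name. It only reads the file; the VPLEX wizard still consumes the original. `parse_getlun` and the script name in the usage comment are hypothetical.

```python
# Minimal sketch: read naviseccli "getlun -uid -name" output (R1.txt / R2.txt)
# and list UID/name pairs, as a sanity check before using the file on VPLEX.
# Assumption: each LUN block contains "LOGICAL UNIT NUMBER", "UID:" and
# "Name:" lines in that order.
import sys


def parse_getlun(text: str) -> dict[str, str]:
    """Map each LUN UID (lowercase hex, colons removed) to its name."""
    luns: dict[str, str] = {}
    uid = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("LOGICAL UNIT NUMBER"):
            uid = None  # a new LUN block starts
        elif line.startswith("UID:"):
            uid = line.split(":", 1)[1].strip().replace(":", "").lower()
        elif line.startswith("Name:") and uid:
            luns[uid] = line.split(":", 1)[1].strip()
    return luns


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python check_getlun.py c:\R1.txt
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        luns = parse_getlun(f.read())
    for uid, name in sorted(luns.items(), key=lambda kv: kv[1]):
        print(f"{uid}  {name}")
    print(f"{len(luns)} LUNs found")
```

A LUN that is missing from the printed list, or a count that differs from what you created on the VNX, is a sign to rerun the script before moving on.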

Go back to the VPLEX and map our LUNs. Go to Provision Storage -> Array Management, select our VNX, and click Rediscover Array.

After that, select the VNX again and click Claim Storage. Then choose Use a Name Mapping File.

As you have probably guessed, we now specify the file generated by the script. We should then see all the LUNs created on the VNX. Give them new names (I recommend naming them by analogy with the source); once this is done, the LUNs appear as presented.

Now create the extents, devices, virtual volumes, and Storage Group. All of this is done just as on the source side and is not difficult; zoning and initiators are also set up the same way. After this, the destination side is ready to receive our host, and all that remains is to set up replication.

RecoverPoint needs a journal volume, the so-called JVOL. Create one on each side and add it to the Storage Group created in the previous steps.

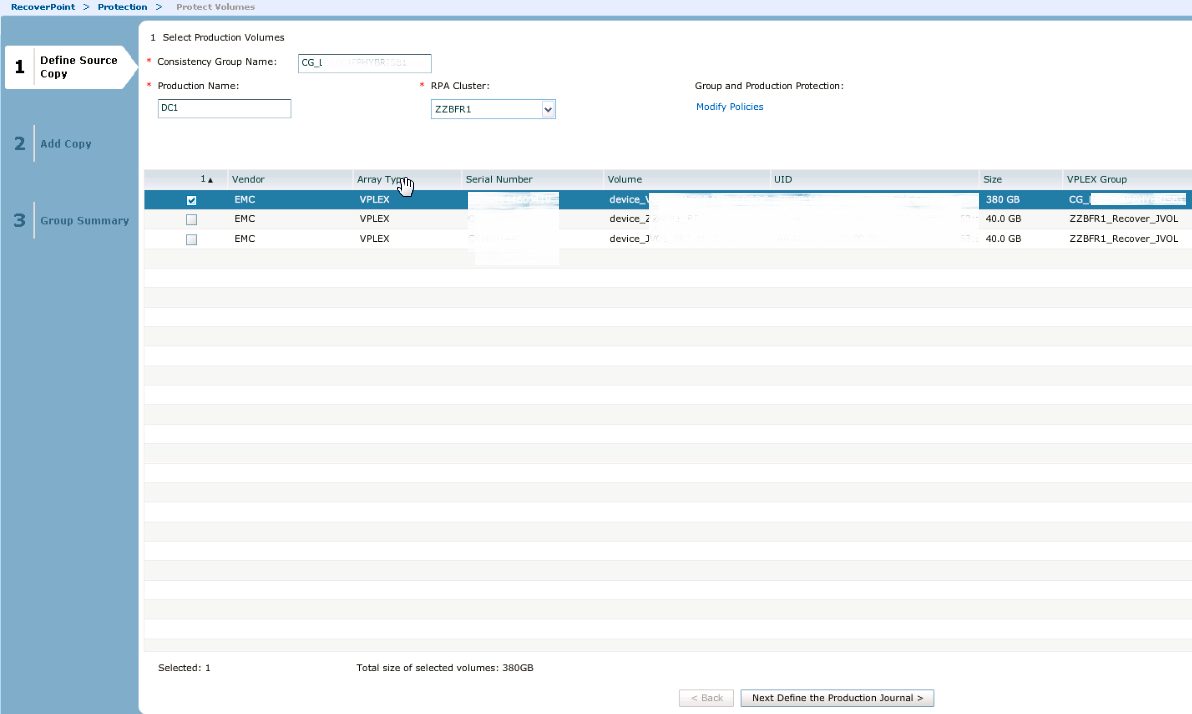

Now for replication. Open RecoverPoint and go to Protection -> Protect Volumes.

Find the source VPLEX consistency group, select the source site in the RPA cluster field, and select all the LUNs to be replicated:

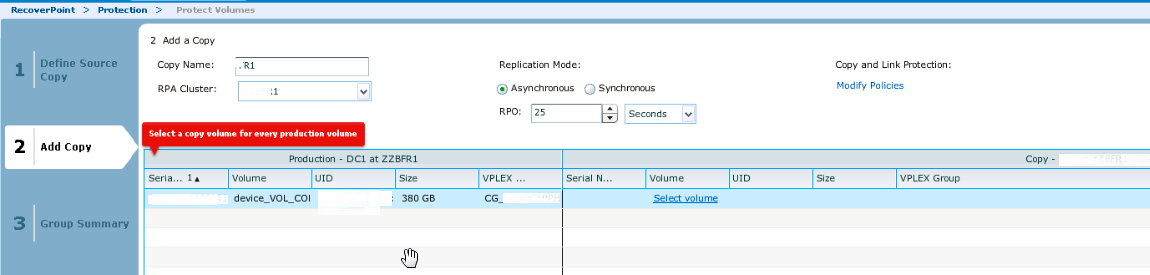

In the next step, choose our JVOL. Then specify the name of the pair (anything that follows your naming rules), select the destination in the RPA cluster field this time, and select the destination volumes:

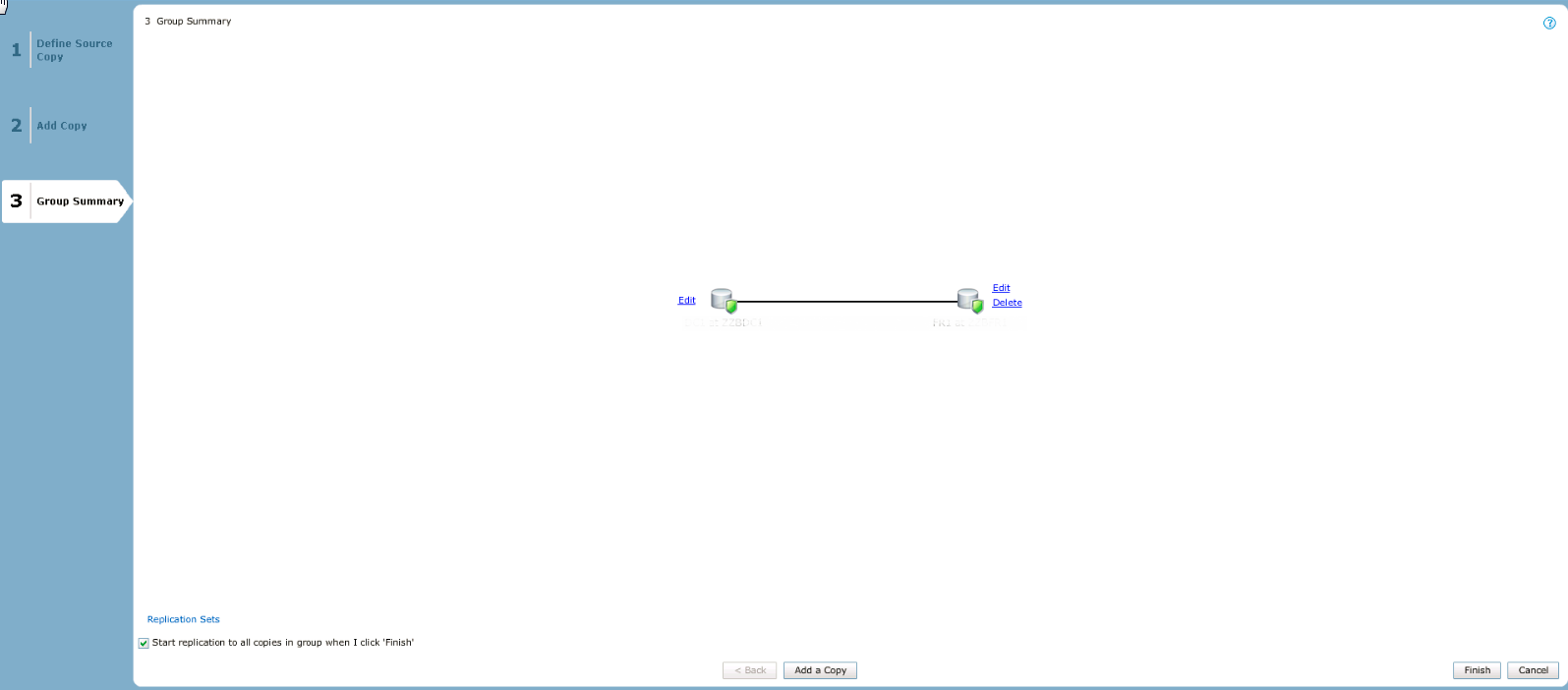

Next, we need to specify the destination JVOL. We will be shown a simple picture with our replication, after the appearance of which we press Add a copy.

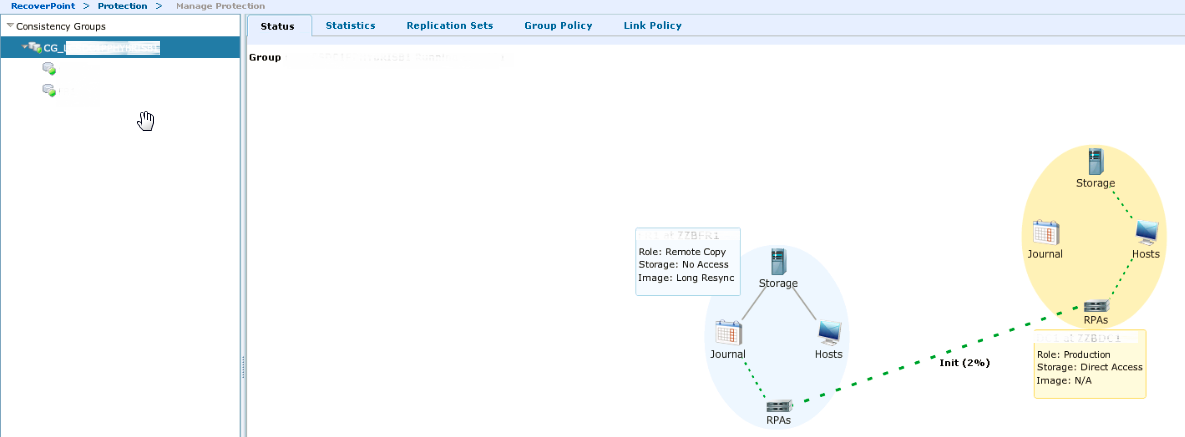

Click the name of our consistency group (CG) to see a nice interactive synchronization status:

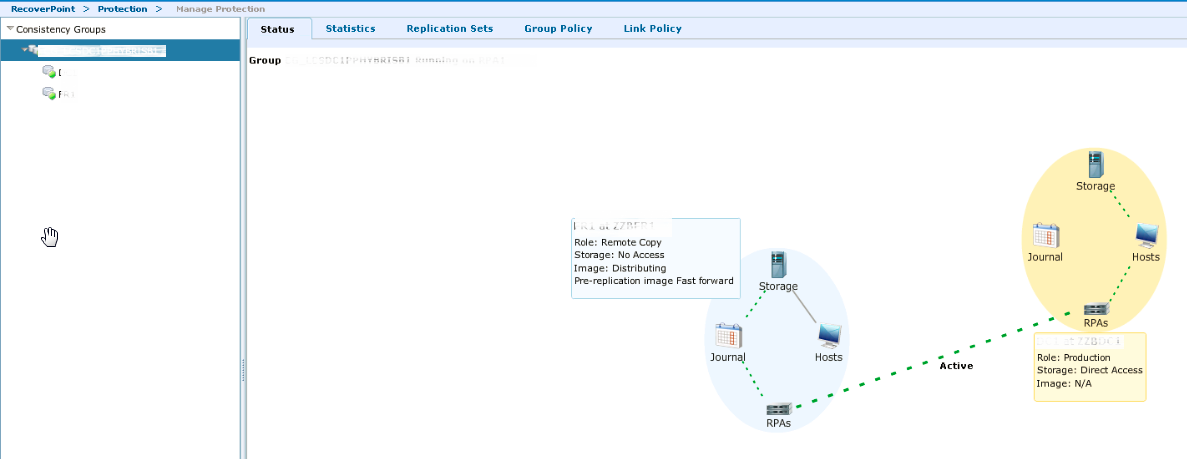

We wait for the initial synchronization to complete, then check the status again.

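At this stage, everything is ready to physically move the host to the new data center. There is one caveat, though: be sure to shut down the host first and only then stop replication. Otherwise, any writes the host makes after the transfer is paused (including those made during shutdown) never reach the destination copy, and the data there will be inconsistent.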
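The reason for this order can be shown with a toy model. The model is a simplification: writes are replicated instantly, whereas real asynchronous replication lags behind, so in practice it is prudent to make sure the lag has drained before pausing. In this model, a clean shutdown itself writes to disk, and pausing the transfer first means that last write never reaches the replica. `ReplicatedVolume` and `cutover` are purely illustrative and are not a RecoverPoint API.

```python
# Toy model (not a RecoverPoint API): writes reach the replica only while the
# transfer is active. Replication is modeled as instantaneous; real
# asynchronous replication lags behind, which only makes the ordering matter more.


class ReplicatedVolume:
    def __init__(self) -> None:
        self.source: dict[int, bytes] = {}
        self.replica: dict[int, bytes] = {}
        self.transferring = True
        self.host_running = True

    def host_write(self, block: int, data: bytes) -> None:
        if not self.host_running:
            raise RuntimeError("the host is shut down")
        self.source[block] = data
        if self.transferring:
            self.replica[block] = data

    def shut_down_host(self) -> None:
        # A clean shutdown still writes (flushed caches, file-system metadata).
        self.host_write(0, b"clean-unmount")
        self.host_running = False

    def pause_transfer(self) -> None:
        self.transferring = False

    def consistent(self) -> bool:
        return self.source == self.replica


def cutover(host_first: bool) -> bool:
    """Simulate one cutover order and report whether the replica matches."""
    vol = ReplicatedVolume()
    vol.host_write(1, b"application data")
    if host_first:
        vol.shut_down_host()
        vol.pause_transfer()
    else:
        vol.pause_transfer()
        vol.shut_down_host()
    return vol.consistent()


if __name__ == "__main__":
    print("shut down host, then pause:", cutover(host_first=True))   # True
    print("pause, then shut down host:", cutover(host_first=False))  # False
```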

Let's say the host is already on its way. Now we need to break the pair and enable direct access for the host. If the zoning is correct, the host will not even suspect that it has moved once it is powered on.

Return to RecoverPoint, go to Protection -> Manage Protection, find our CG, and click the Pause Transfer button:

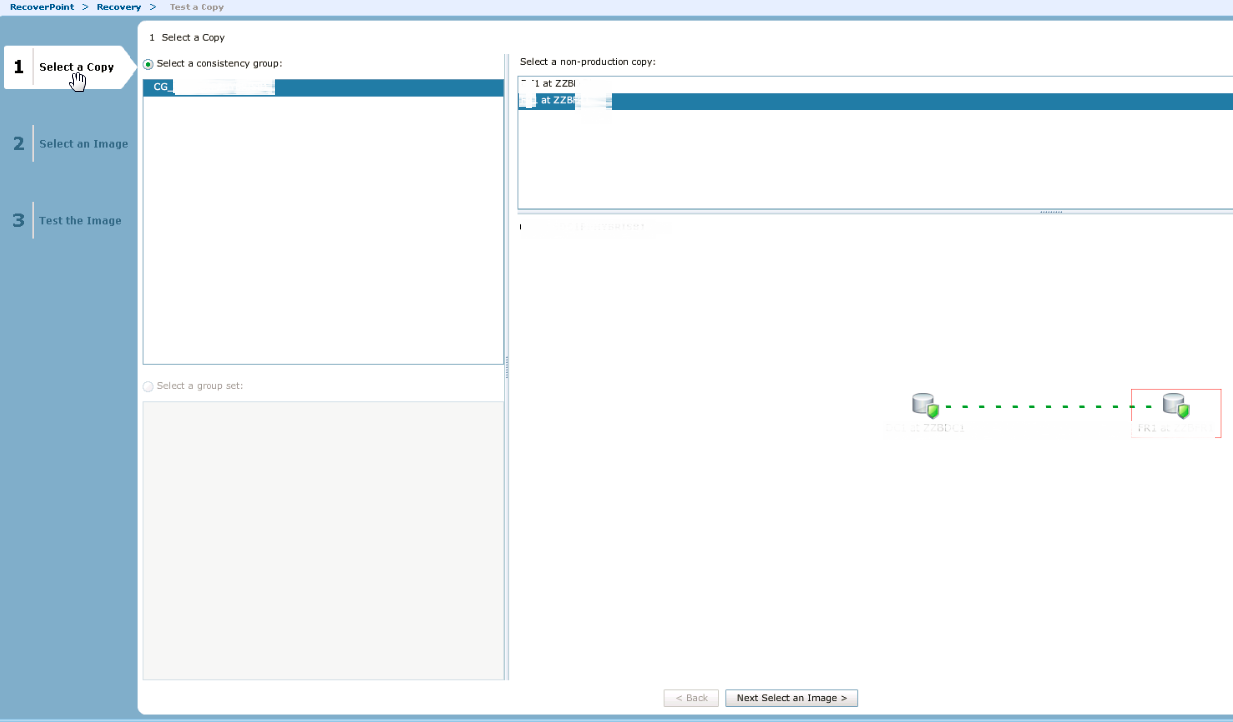

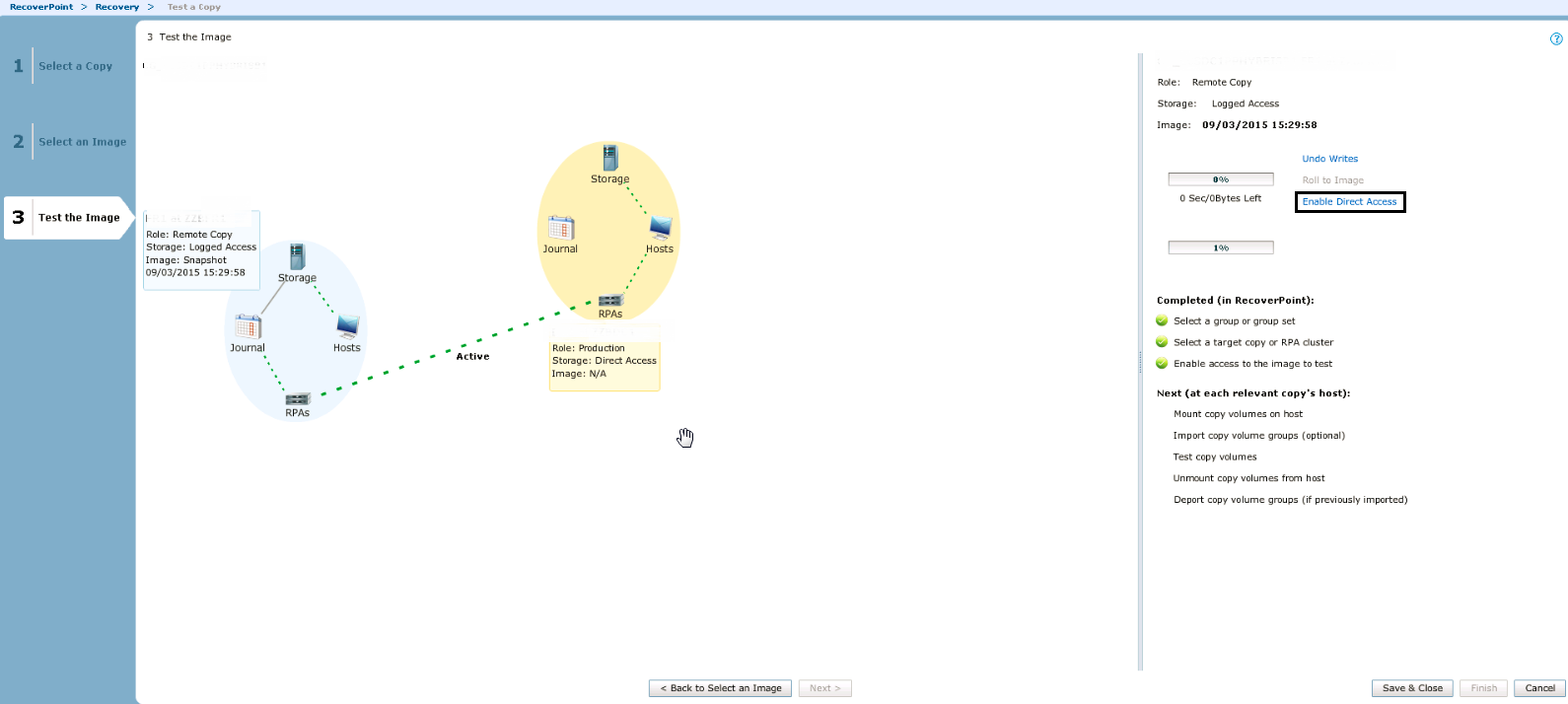

Next, start a test with the Test Copy button, select the destination copy, and click Next to select an image.

In the next window, leave the defaults and answer YES to the warning. Wait for the copy to be tested, then click Enable Direct Access.

That's really all there is to it. Again, if you haven't made mistakes in the zoning and initiator settings, the host should see all its LUNs with consistent data. If you have any questions or additions to the article, I will be happy to answer them. Happy (and easy) migrating!

Source: https://habr.com/ru/post/276163/
