CNTK - Microsoft Research's neural network toolkit

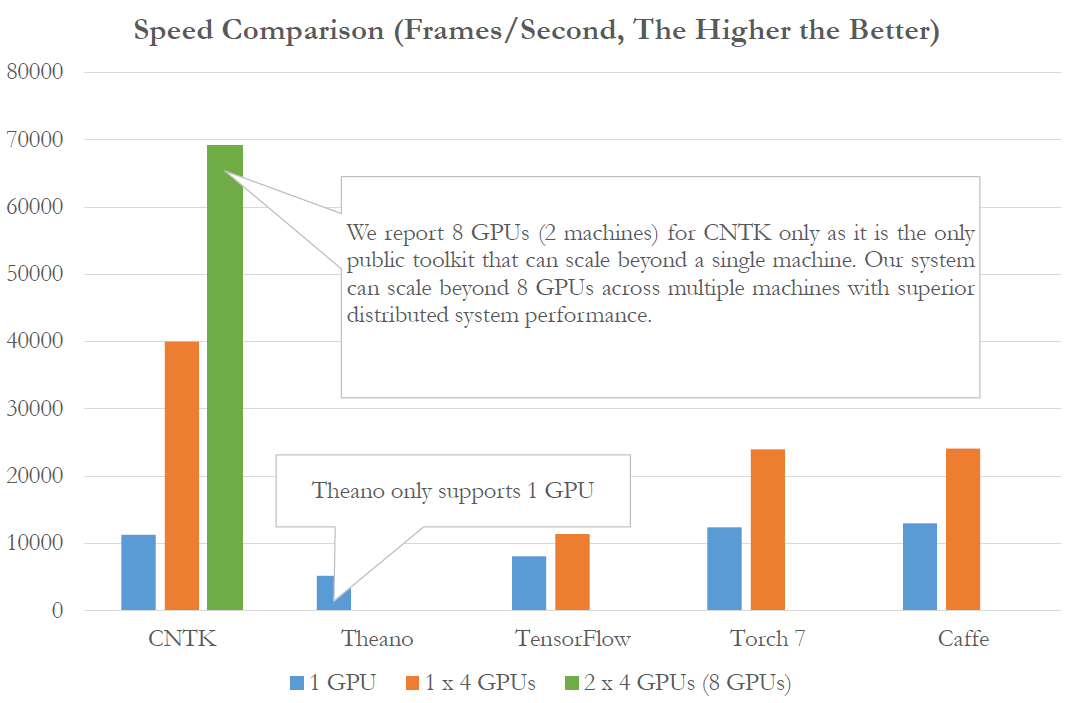

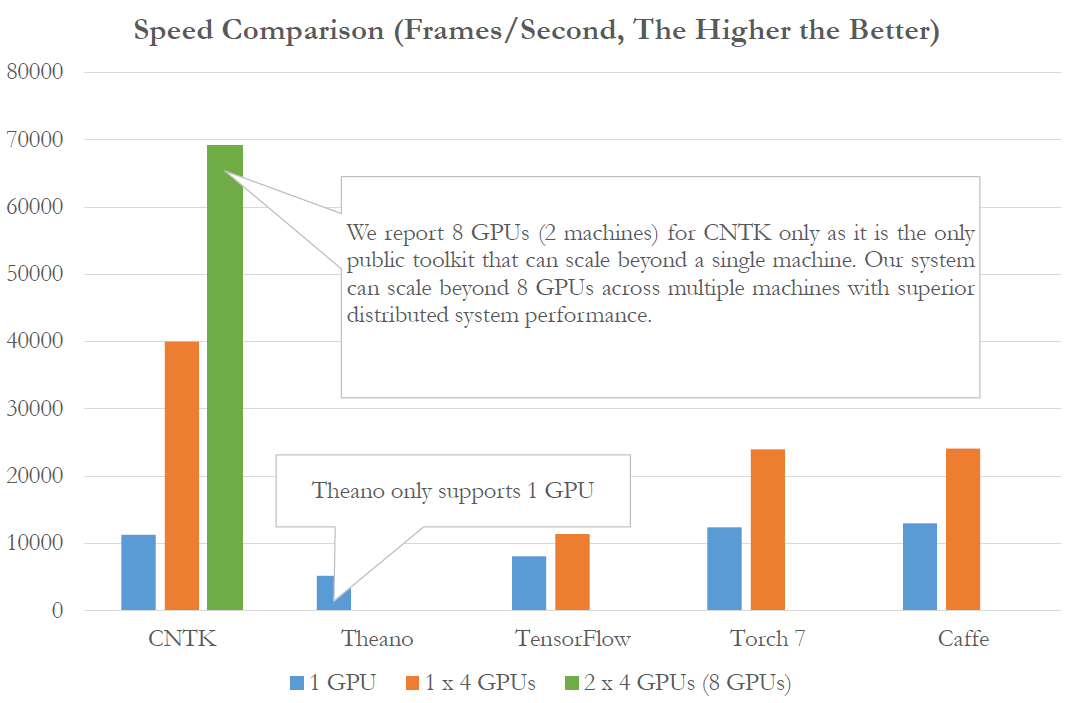

2015 was rich in events related to neural network technologies and machine learning. Convolutional and recurrent networks, which are well suited to problems in computer vision and speech recognition, made notable progress. Many large companies published their work on GitHub: Google released TensorFlow, and Baidu released warp-ctc. A group of scientists from Microsoft Research also joined this initiative by releasing the Computational Network Toolkit, a set of tools for designing and training networks of various types that can be used for pattern recognition, speech understanding, text analysis, and more. Intriguingly, MSR's network won the ImageNet ILSVRC 2015 competition, and the toolkit is reported to be the fastest among its existing competitors.

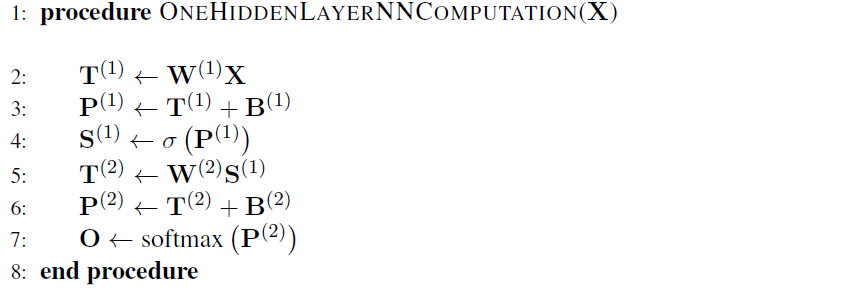

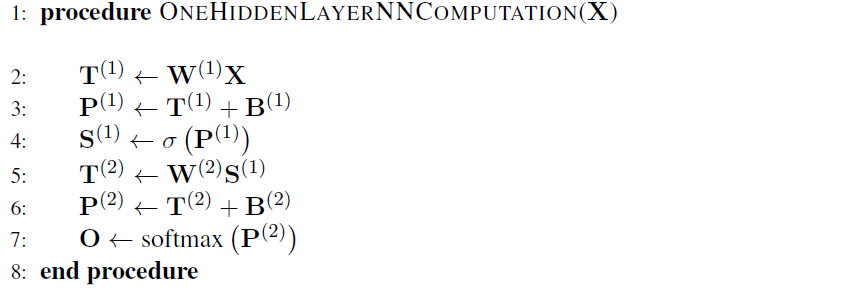

Computational Network Toolkit is an attempt to systematize and summarize the main approaches to the construction of various neural networks, provide scientists and engineers with a wide range of functions and simplify many routine operations. CNTK allows you to create deep learning networks ( DNN ), convolutional networks ( CNN ), recurrent networks and memory networks ( RNN , LSTM ). The basis for CNTK is a concise way of describing a neural network through a series of computational steps adopted in many other solutions, for example, Theano. The simplest network with one hidden layer can be represented as follows:

')

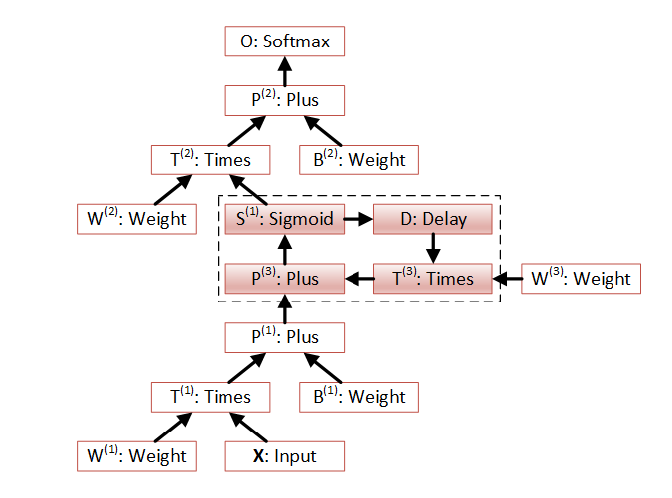

In step 2, the input vector of X values (in other words, a set of numbers) is multiplied by the weight vector W1, then added to the displacement vector B1, the resulting weights are transferred to the sigma of the excitation function. Then, the computed vector S1 is multiplied by the weights of the hidden layer W2, added by the offset B2, and the softmax function is calculated, which is the network response.

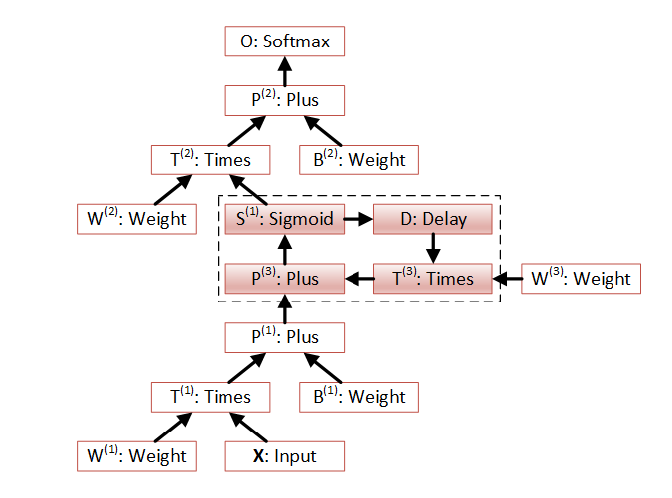

The same algorithm can be represented graphically as follows (the calculation goes from bottom to top):

Networks of this type can be described using the CNTK configuration syntax, which in the simple case has the following form:

Network training, or in other words, the selection of W [N] coefficients, is carried out using a stochastic gradient descent ( SGD ), and is also described in the configuration file, setting the parameters for learning speed, number of epochs and moment:

CNTK helps to solve problems of an infrastructural nature, in particular, it already has subsystems for reading data from various sources. It can be text and binary files, images, sounds.

For more information about the features and functions of CNTK, see the document on Computational Networks and the Computational Network Toolkit .

For quick mastering of CNTK capabilities, there are a number of examples in the Examples folder that demonstrate the most common approaches to solving problems applicable to neural networks.

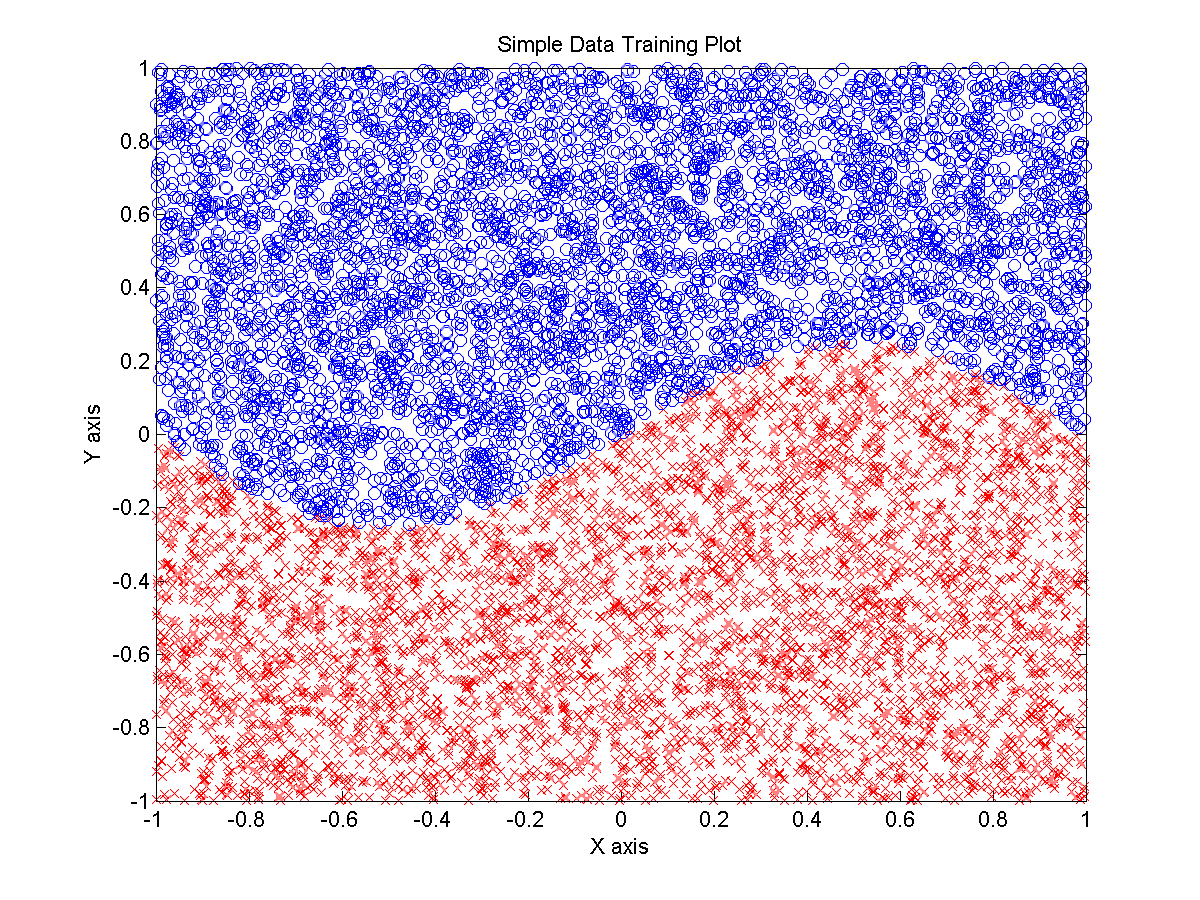

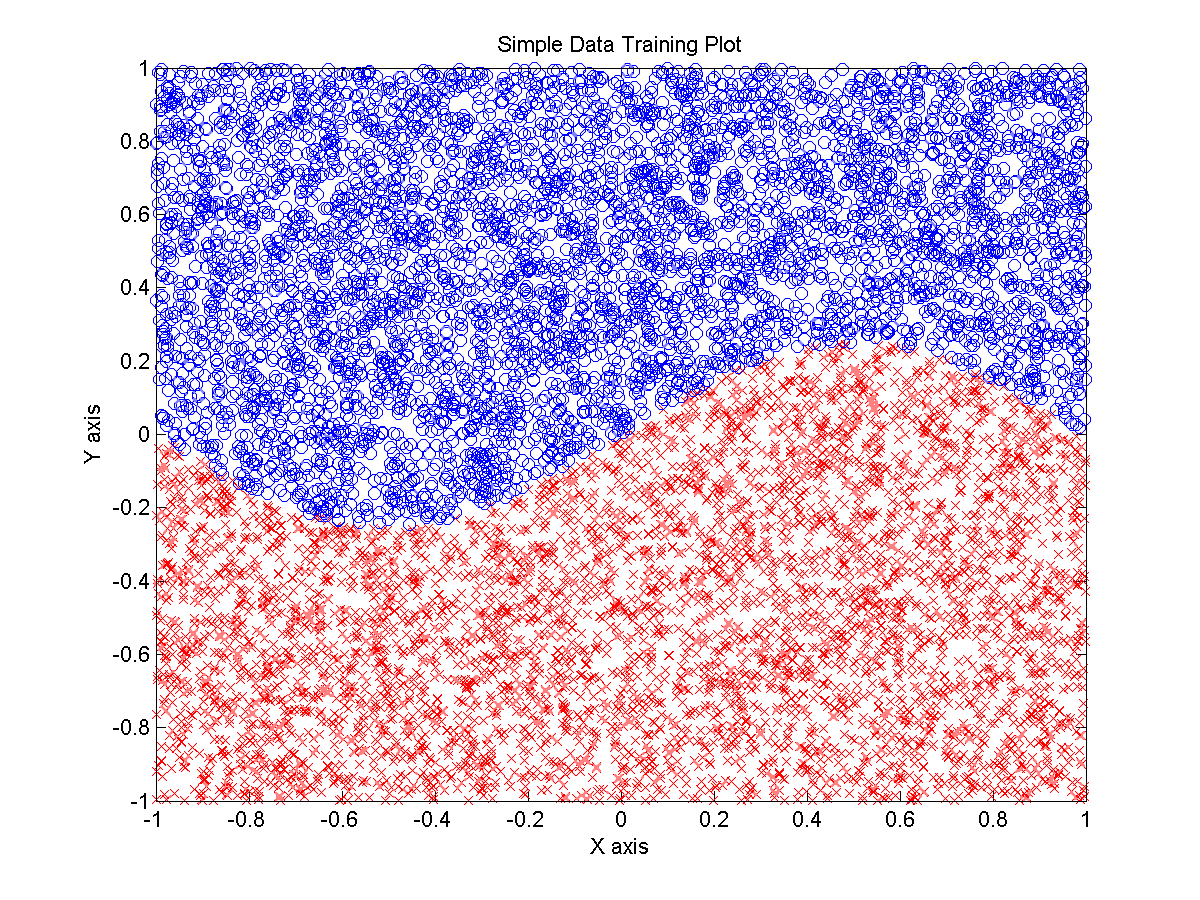

Based on the artificial data generated by the Matlab script, a network is created that solves the binary classification problem - does the dot belong to the blue or red cluster:

In fact, the neural network is trying to restore the function label = 0.25 * sin (2 * pi * 0.5 * x )> y with which this data was generated. This example demonstrates all the strength and power of neural networks, since it has been mathematically proven that, thanks to the universal approximation theorem, any function can be chosen .

The technology of how to classify images using convolutional networks is usually demonstrated using the MNIST dataset.

From this example, you will understand how the image data falls into a convolutional network (CNN). Let's not make secrets, everything is really simple. All the pixel values of the image, and in our case, this 28x28 square falls on the network input, and the network response is the probability that the submitted image belongs to a particular class. Accordingly, the network has 784 inputs and 10 outputs.

In addition, CNTK includes examples of working with classic image sets, which are most popular with scientists involved in Deep Convolution Neural Networks, these are CIFAR-10 and ImageNet (AlexNet and VGG formats).

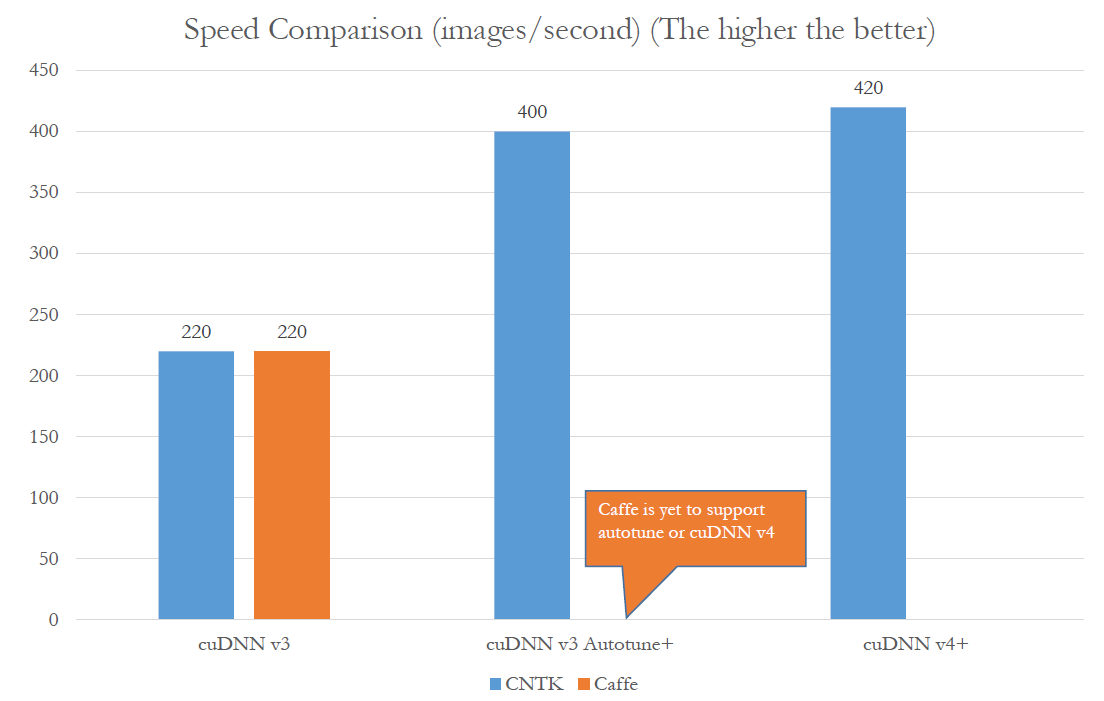

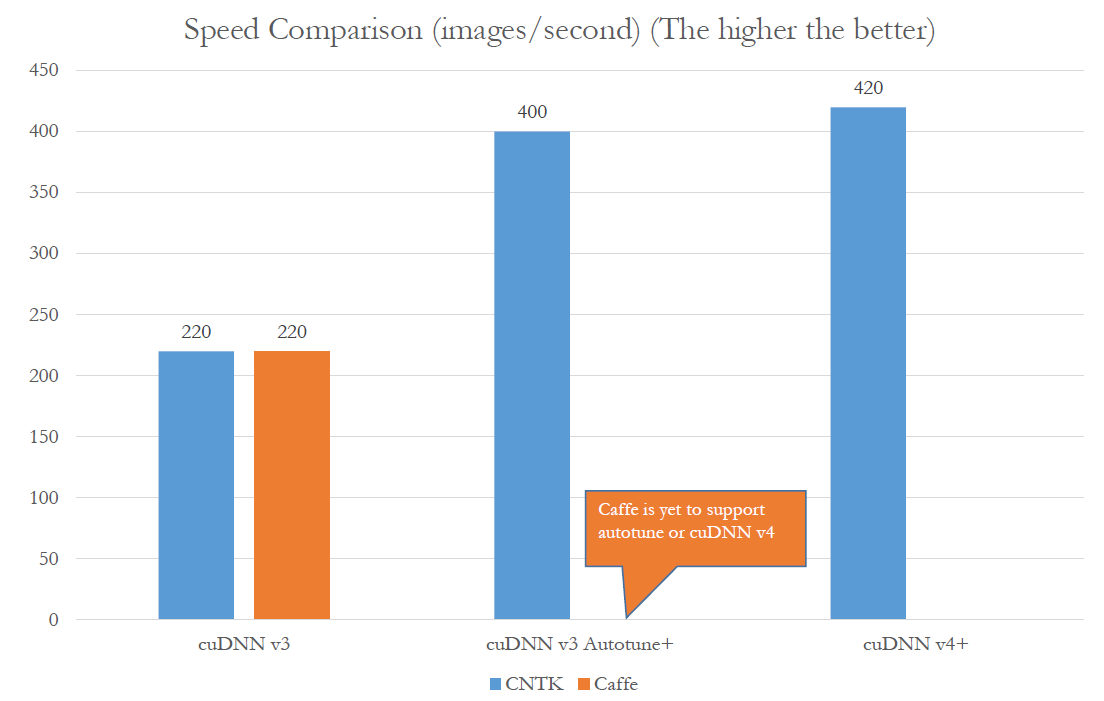

The efforts that MSR scientists put into creating the CNTK can give hope for the creation of very fast classifiers. According to some tests (as the author understands, on the “classic” in the CNN world of 224x224 pixels), the recognition rate can be over 400 frames per second, which is a very high result. Even more interesting is the fact that modern CNN networks in some cases already exceed the ability of a person to recognize patterns. You can learn more about this in the Delving Deep into Rectifiers Human Survival Report on ImageNet Classification .

The recognition of speech and text in natural languages is one of the complex areas. With the emergence of the idea of recurrent networks, there was hope that this scientific barrier would be overcome. Recurrent networks (RNN) allow you to remember the previous state, thereby reducing the amount of data supplied to the input of the network. The need for "looping" and "possessing memory" of neural networks is due to the fact that voice or text data cannot be fed to the input of the network in full, they arise in several portions separated in time.

Close in this context are the LSTM networks for which a special type of neuron has been developed, which translates itself into an excited state based on some values and does not change it until the deactivation vector arrives at the input. LSTM networks also help solve the problem of transforming a sequence into a sequence that occurs when translating one language into another. The capabilities of RNN and LSTM networks are so high that, in some cases, they are already superior to the person in recognizing short phrases and handwriting.

CNTK includes several examples of speech analysis, based on the AN4 Dataset , Kaldi Project , TIMIT , and a broadcast project based on the scientific work Sequence-to-sequence neural . The analysis of the text is presented by several projects on the analysis of comments, news, and spoken phrases (spoken language understanding, SLU), with details of the latest technology you can find out from the scientific work of SPOKEN LANGUAGE UNDERSTANDING USING LONG SHORT-TERM MEMORY NEURAL NETWORKS .

The CNTK kernel was created in C ++, but the developers promise to create Python bindings and C # interfaces in the future.

You can build CNTK for Windows or Linux yourself, in the first case you will need Visual Studio 2013 , and a set of CUDA , CUB , CuDNN , Boost , ACML (or MKL ) libraries and MS-MPI . Optionally, CNTK can be compiled with OpenCV support. Details can be found in the CNTK Wiki .

You can use ready-made binary builds with and without GPU support, which are published at https://github.com/Microsoft/CNTK/wiki/CNTK-Binary-Download-and-Configuration

A brief introduction to CNTK, which was conducted by scientists from MSR at the NIPS 2015 conference, is published at http://research.microsoft.com/pubs/226641/CNTK-Tutorial-NIPS2015.pdf

A very brief introduction to CNTK

Computational Network Toolkit is an attempt to systematize and generalize the main approaches to building various kinds of neural networks, to give scientists and engineers a wide range of functions, and to simplify many routine operations. CNTK lets you create deep neural networks (DNN), convolutional networks (CNN), and recurrent networks, including long short-term memory networks (RNN, LSTM). CNTK is based on a concise way of describing a neural network as a series of computational steps, an approach also used in other frameworks such as Theano. The simplest network with one hidden layer can be represented as follows:

$$S_1 = \sigma(W_1 X + B_1)$$

$$P = \mathrm{softmax}(W_2 S_1 + B_2)$$

Here, the input vector X (in other words, a set of numbers) is multiplied by the weight matrix W1, the bias vector B1 is added, and the result is passed through the sigmoid activation function σ. The resulting vector S1 is then multiplied by the weights W2 that connect the hidden layer to the output, the bias B2 is added, and the softmax function is applied; its output is the network's response.
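As a rough illustration, the forward pass just described fits in a few lines of plain Python. This is a minimal sketch, not CNTK code: the helper names (`matvec`, `add`, `forward`) and the hand-picked weights for a hypothetical network with 2 inputs, 3 hidden units, and 2 classes are assumptions made for the example.

```python
import math

def sigmoid(v):
    # Element-wise logistic sigmoid
    return [1.0 / (1.0 + math.exp(-t)) for t in v]

def softmax(v):
    # Subtract the maximum for numerical stability, then normalize the exponentials
    m = max(v)
    exps = [math.exp(t - m) for t in v]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(a, b):
    # Element-wise vector addition
    return [ai + bi for ai, bi in zip(a, b)]

def forward(X, W1, B1, W2, B2):
    # Hidden layer: S1 = sigmoid(W1 X + B1)
    S1 = sigmoid(add(matvec(W1, X), B1))
    # Output layer: P = softmax(W2 S1 + B2)
    return softmax(add(matvec(W2, S1), B2))

# Hypothetical sizes: 2 inputs, 3 hidden units, 2 classes; weights chosen by hand
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
B1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -1.0, 0.5], [-0.5, 0.7, 0.2]]
B2 = [0.0, 0.0]

P = forward([1.0, 2.0], W1, B1, W2, B2)
print(P)  # two class probabilities that sum to 1
```

In a real toolkit the weights are learned rather than written by hand; only the order of operations matters here.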

The same algorithm can also be drawn as a computational graph, in which the calculation proceeds from bottom to top.
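To make the bottom-to-top order concrete, here is a sketch of evaluating such a computational network: each node is computed only after the nodes it depends on. It is a hypothetical scalar version of the one-hidden-layer network with a single hidden unit. The node names mirror the formulas, and a sigmoid stands in for softmax at the output so that every value stays a single number. None of this is CNTK's own API.

```python
import math

def mul(a, b):
    return a * b

def add(a, b):
    return a + b

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

# Hypothetical scalar graph: node name -> (operation, names of input nodes).
# Listed top-down on purpose; evaluation still proceeds bottom-up.
graph = {
    "P":  (sigmoid, ["z2"]),   # output (sigmoid stands in for softmax in this scalar sketch)
    "z2": (add, ["t2", "B2"]),
    "t2": (mul, ["W2", "S1"]),
    "S1": (sigmoid, ["z1"]),
    "z1": (add, ["t1", "B1"]),
    "t1": (mul, ["W1", "X"]),
}

def evaluate(graph, leaves):
    values = dict(leaves)  # inputs and parameters form the bottom of the graph
    order = []             # records the order in which nodes are computed

    def value_of(name):
        if name not in values:
            op, inputs = graph[name]
            args = [value_of(i) for i in inputs]  # compute the children first
            values[name] = op(*args)
            order.append(name)
        return values[name]

    for name in graph:
        value_of(name)
    return values, order

values, order = evaluate(graph, {"X": 1.0, "W1": 0.5, "B1": -0.5, "W2": 2.0, "B2": 0.0})
print(order)
print(values["P"])
```

Even though the dictionary lists the output node first, the recursion computes `t1`, `z1`, `S1`, `t2`, `z2` and only then `P`, which is the bottom-to-top order of the graph.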

Networks of this type can be described using the CNTK configuration syntax, which in the simple case has the following form:

Network training, that is, fitting the W[N] coefficients, is done with stochastic gradient descent (SGD) and is also described in the configuration file, which sets the learning rate, the number of epochs, and the momentum:
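The update rule behind these settings can be sketched as follows. This toy example, an assumption for illustration only, fits a straight line y = w * x + b rather than a neural network, but it uses the same three knobs: a learning rate, a number of epochs, and a momentum term that carries part of the previous update into the next one. The function and parameter names are hypothetical and are not CNTK configuration keys.

```python
import random

def sgd_momentum(data, learning_rate=0.05, momentum=0.9, epochs=100, seed=0):
    # Fit y ~ w * x + b by minimizing squared error with per-sample SGD plus momentum
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    vw, vb = 0.0, 0.0  # velocity terms carried between updates
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a new random order each epoch
        for x, y in data:
            err = w * x + b - y
            gw, gb = 2 * err * x, 2 * err  # gradient of err**2 with respect to w and b
            vw = momentum * vw - learning_rate * gw
            vb = momentum * vb - learning_rate * gb
            w += vw
            b += vb
    return w, b

samples = [(i / 10, 2 * (i / 10) + 1) for i in range(11)]  # points on y = 2x + 1
w, b = sgd_momentum(samples)
print(round(w, 3), round(b, 3))
```

Because the samples lie exactly on y = 2x + 1, the returned `w` and `b` should end up close to 2 and 1.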

CNTK also takes care of infrastructure tasks; in particular, it already includes readers for various data sources, such as text and binary files, images, and audio.
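Purely to illustrate what a data reader does, here is a sketch that turns a text file into minibatches of (features, label) pairs. The file format (whitespace-separated feature values followed by an integer label, with `#` comment lines) and the function name are hypothetical; CNTK's real readers define their own formats and options.

```python
import io

def read_minibatches(stream, minibatch_size):
    # Hypothetical format: feature values followed by an integer label on each line
    batch = []
    for line in stream:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        *features, label = line.split()
        batch.append(([float(f) for f in features], int(label)))
        if len(batch) == minibatch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, minibatch

text = """# x y label
0.1 0.5 1
0.7 -0.2 0
0.3 0.9 1
"""
batches = list(read_minibatches(io.StringIO(text), minibatch_size=2))
print(len(batches))  # 2
```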

For more information about the features and functions of CNTK, see the document on Computational Networks and the Computational Network Toolkit.

CNTK usage examples

To help you master CNTK quickly, the Examples folder contains a number of examples that demonstrate the most common approaches to problems that neural networks are applied to.

Simple classification example

Using artificial data generated by a Matlab script, this example builds a network that solves a binary classification problem: does a given point belong to the blue or the red cluster?

In fact, the neural network is trying to recover the function label = 0.25 * sin(2 * pi * 0.5 * x) > y that was used to generate this data. The example demonstrates the strength of neural networks: by the universal approximation theorem, a network with a single hidden layer and enough hidden units can approximate any continuous function on a bounded domain to any desired accuracy.
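Data of this kind can be approximated with a short generator. The labeling rule is the one quoted above; the sampling ranges for x and y and the function names are assumptions, since the original Matlab script is not reproduced here.

```python
import math
import random

def boundary(x):
    # The curve that separates the two clusters: 0.25 * sin(2 * pi * 0.5 * x)
    return 0.25 * math.sin(2 * math.pi * 0.5 * x)

def make_points(n, seed=0):
    # Assumed sampling ranges; the original Matlab script may use different ones
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-0.5, 0.5)
        label = 1 if boundary(x) > y else 0  # label = 0.25 * sin(2 * pi * 0.5 * x) > y
        points.append((x, y, label))
    return points

pts = make_points(1000)
print(sum(label for _, _, label in pts), "of", len(pts), "points have label 1")
```

A classifier trained on `pts` has to learn the sine-shaped boundary, which a linear model cannot represent but a network with a hidden layer can approximate.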

Image analysis

Image classification with convolutional networks is usually demonstrated on the MNIST dataset of handwritten digits.

This example shows how image data is fed into a convolutional network (CNN). There is no secret here; it really is simple. All the pixel values of the image (in our case, a 28x28 square) are fed to the network input, and the network's response is the probability that the image belongs to each class. Accordingly, the network has 784 (28 x 28) inputs and 10 outputs, one per digit.
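The input and output shapes described above can be checked with a small sketch: a 28x28 grid of pixels is flattened row by row into 784 inputs, and the predicted digit is the index of the largest of the 10 output probabilities. The image and the probability values are invented for illustration; a real CNN keeps the 2-D layout for its convolution layers, so this only shows how the numbers line up.

```python
def flatten(image):
    # Row-major flattening of a 28x28 grid of pixel values into 784 network inputs
    return [pixel for row in image for pixel in row]

def predicted_class(probabilities):
    # The 10 outputs are class probabilities; pick the most likely digit
    return max(range(len(probabilities)), key=lambda k: probabilities[k])

image = [[0.0] * 28 for _ in range(28)]
image[14][10:18] = [1.0] * 8  # a crude horizontal stroke, for illustration only
inputs = flatten(image)
print(len(inputs))  # 784

probs = [0.01, 0.02, 0.05, 0.02, 0.03, 0.01, 0.04, 0.70, 0.07, 0.05]  # invented network output
print(predicted_class(probs))  # 7
```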

In addition, CNTK includes examples that work with the classic image datasets most popular among researchers in deep convolutional neural networks: CIFAR-10 and ImageNet (with AlexNet and VGG models).

The effort that MSR researchers put into CNTK gives hope for very fast classifiers. According to some tests (as far as the author understands, on 224x224-pixel images, the "classic" size in the CNN world), recognition speed can exceed 400 frames per second, which is a very high result. Even more interesting, modern CNNs in some cases already exceed human ability to recognize patterns. You can learn more about this in the paper Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification.

Analysis of speech and natural languages

Recognizing speech and natural-language text is one of the most difficult areas. The emergence of recurrent networks raised hopes that this scientific barrier could be overcome. Recurrent networks (RNNs) can remember their previous state, which reduces the amount of data that has to be fed to the network input at once. Networks need this "looping" and "memory" because speech or text cannot be fed to the network all at once: it arrives in several portions spread over time.
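The "memory" idea can be sketched as a simple recurrence: at each time step the network combines the current portion of input with its previous state. This scalar sketch uses a tanh activation and hypothetical weights; it is not the recurrent layer of any particular toolkit.

```python
import math

def rnn_step(x_t, h_prev, w_x=0.8, w_h=0.5, b=0.0):
    # Hypothetical scalar weights: the new state mixes the current input with the remembered state
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(chunks):
    # Feed a sequence one portion at a time; the hidden state carries information forward
    h = 0.0
    states = []
    for x_t in chunks:
        h = rnn_step(x_t, h)
        states.append(h)
    return states

states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

Feeding a single non-zero input followed by zeros shows the state fading gradually instead of dropping to zero immediately; that residual value is the context the network remembers.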

Closely related are LSTM networks, which use a special type of unit that switches into an excited state in response to certain input values and keeps that state until a deactivating signal arrives at its input. LSTM networks also help solve the sequence-to-sequence problem that arises when translating from one language to another. RNN and LSTM networks are so capable that in some cases they already outperform humans at recognizing short phrases and handwriting.
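A single step of a common LSTM formulation (with input, forget, and output gates) is sketched below for scalar values. The weights are hand-picked and hypothetical, chosen so that an input of 1 writes to the memory cell, an input of 0 leaves it essentially unchanged, and an input of -1 closes the forget gate and clears the cell, mirroring the "excited until deactivated" behavior described above.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    # p maps each gate name to (input weight, recurrent weight, bias); scalar LSTM for clarity
    def pre(gate):
        w, u, b = p[gate]
        return w * x + u * h_prev + b

    f = sigmoid(pre("forget"))  # how much of the old cell state to keep
    i = sigmoid(pre("input"))   # how much of the new candidate to write
    g = math.tanh(pre("cell"))  # candidate value
    o = sigmoid(pre("output"))  # how much of the cell state to expose
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

# Hand-picked, hypothetical weights: x = 1 writes to the cell, x = 0 leaves it alone,
# x = -1 closes the forget gate and clears it.
params = {
    "forget": (20.0, 0.0, 10.0),
    "input": (10.0, 0.0, -5.0),
    "cell": (10.0, 0.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

h, c = 0.0, 0.0
cells = []
for x in [1.0, 0.0, 0.0, -1.0]:
    h, c = lstm_step(x, h, c, params)
    cells.append(round(c, 4))
print(cells)
```

In a trained network these weights are learned, and the recurrent weights are usually non-zero, so the gates also depend on the previous output `h`.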

CNTK includes several speech analysis examples based on the AN4 Dataset, the Kaldi Project, and TIMIT, as well as a translation project based on the scientific work Sequence-to-sequence neural. Text analysis is represented by several projects that analyze comments, news, and spoken phrases (spoken language understanding, SLU); details of the latest technology can be found in the paper Spoken Language Understanding Using Long Short-Term Memory Neural Networks.

If you want to try CNTK

The CNTK core is written in C++, but the developers promise Python bindings and a C# interface in the future.

You can build CNTK for Windows or Linux yourself. On Windows you will need Visual Studio 2013, the CUDA, CUB, cuDNN, Boost, and ACML (or MKL) libraries, and MS-MPI. Optionally, CNTK can be compiled with OpenCV support. Details can be found in the CNTK Wiki.

Alternatively, you can use ready-made binary builds, with or without GPU support, published at https://github.com/Microsoft/CNTK/wiki/CNTK-Binary-Download-and-Configuration

A brief introduction to CNTK, presented by MSR researchers at the NIPS 2015 conference, is available at http://research.microsoft.com/pubs/226641/CNTK-Tutorial-NIPS2015.pdf

Source: https://habr.com/ru/post/275959/
