Fuzzy search in the dictionary with the universal Levenshtein automaton. Part 1

Fuzzy string searching is a very costly task in terms of computing resources, especially if you need high accuracy of the results. The article describes the fuzzy search algorithm in the dictionary, which provides high search speed while maintaining 100% accuracy and relatively low memory consumption. It is the Levenshtein machine gun that allowed Lucene developers to increase the speed of fuzzy search by two orders of magnitude.

Introduction

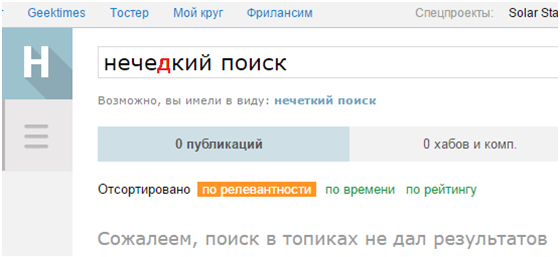

Fuzzy search of lines in the dictionary is the basis for the construction of modern spell checking systems that are used in text editors, optical character recognition systems and search engines. In addition, fuzzy search is used in solving a number of computational problems of bioinformatics.

')

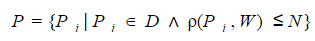

The formal definition of the fuzzy search task in the dictionary can be formulated as follows. For a given search query W, it is necessary to select from the dictionary D a subset P of all words, the measure of difference between which p and search query does not exceed a certain threshold value N :

The degree of difference between two words can be measured, for example, using the Levenshtein or Damerau-Levenshtein distance .

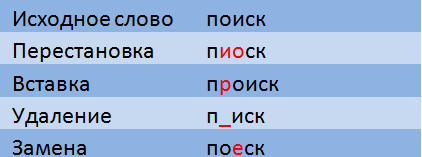

The Levenshtein distance is a measure of the difference between two lines, defined as the minimum number of inserts, deletes, and replacements of characters required to translate one line to another.

When calculating the Damerau-Levenshtein distance, transpositions (permutations of two adjacent symbols) are also allowed.

A few years ago on Habré there was already a post from ntz devoted to fuzzy searching in the dictionary and text - in more detail about the distances of Levenshteyn and Damerau-Levenshteyn can be read there. I only remind you that the time complexity of checking the conditions

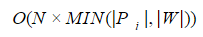

p (Pi, W) <= N using dynamic programming methods is estimated as

,

,where | P i |, | W | - the length of the string and the request, respectively. Therefore, in solving practical problems, a complete enumeration of the dictionary values with the verification of each word is, as a rule, unacceptable.

It should be noted that not every fuzzy search algorithm ensures that all the words from the dictionary D that satisfy the condition p (P i , W) <= N are found at the request of W. Therefore, it makes sense to talk about the accuracy of the search as the ratio of the number of results found to the actual number of words in the dictionary that satisfy the given condition. For example, the author of the post already mentioned by me estimated the accuracy of the search by the n-gram method at 65%.

Nondeterministic Lowenstein automaton

The author assumes that the reader is familiar with the basics of the theory of automata and formal languages and will refrain from presenting the terminology of this subject area. Instead, I'll get right to the point.

To solve practical problems, a deterministic Levenshtein finite automaton is used (if to be completely accurate, then its imitation). However, in order to understand the principles of operation of the deterministic Levenshtein finite state machine, it is better to first consider the operation of the non-deterministic version.

For a Levenshtein automaton for a word W, we call the finite automaton A N (W) , which accepts the word S if and only if the Levenshtein (Damerau-Levenshtein) distance between the words W and S does not exceed the specified value N.

The Levenshtein finite-state machine for the word W and the admissible number of modifications N can be given in the form of an ordered five of elements A N (W) = <E, Q, q 0 , F, V> , where:

E is the automaton alphabet;

Q is the set of internal states;

q 0 is the initial state, belongs to the set Q;

F - a set of final, or final states

V is a transition function that determines which (which) states it is possible to switch from the current state when the automaton arrives at the input of the next character.

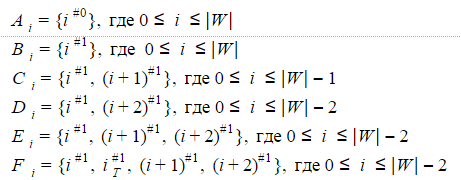

The states of the non-deterministic Levenshtein automaton A N (W) are usually denoted as i #e , where i = 0 .. | W | , e = 0 ..N . If the automaton is in the i #e state , it means that i “correct” characters have been entered into the automaton and e modifications have been detected. Since we consider an automaton that supports transpositions (that is , the Damerau-Levenshtein distance is used as p (S, W) ), the set of states must be supplemented with the states {i T #e } , where i = 0 .. | W | -1 , e = 1..N .

The initial state of the automaton is the state 0 # 0 .

The set of final states includes such states i #e for which the condition | W | - i <= N - e .

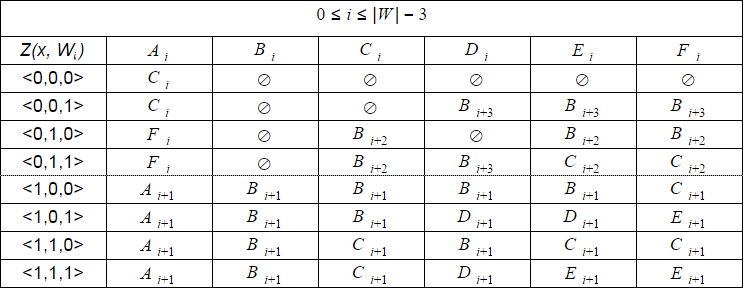

Based on the physical meaning of the states of the automaton, it is not difficult to determine the composition of admissible transitions. The transition function of the Levenshtein automaton is specified as a table.

If you are interested in a rigorous mathematical substantiation of the composition of the states of the automaton and other theses presented above, you can find it in the article by Schultz and Mihov (2002) .

The characteristic vector Z (x, W i ) for the symbol x is the bit vector of length min (N + 1, | W | - i) , the kth element of which is equal to 1 if (i + k) ith character of the string W is equal to x , and 0 otherwise. For example, for W = ”PISK”

Z ('P', W 0 ) = <1, 0>, and Z ('P', W 1 ) = <0, 0>.

A graphic representation of the Levenshtein automaton for W = ”PISK” and N = 1 is shown in the figure. Transitions of the automaton are signed by their corresponding characteristic vectors.

The end states of the automaton are highlighted in green. Light blue - the current (active) state of the machine.

The transition along horizontal arrows is carried out when a “correct” symbol is entered into the machine.

The transition along the vertical arrows corresponds to the assumption that the next symbol entered into the automaton is inserted into the original word W before the (i + 1) -th symbol. When moving along vertical arrows, the automaton “detects” a modification of the word W - the value of e increases by 1 .

The transition from the state i #e to the state (i + 1) # e + 1 corresponds to the assumption that the next character replaces the (i +1) -th symbol in the word W.

The transition from the state i #e to the state (i + 2) # e + 1 corresponds to the assumption that the next character corresponds to the (i + 2) th character in the word W , and the (i + 1) th character of the word W is omitted in the word s .

Probably, you have already guessed that the transition to the state i T #e implies that the transposition of the (i + 1) -th and (i + 2) -th characters of the word W is detected.

Now let's see how it works. Entering a new symbol into the automaton will be indicated by a curved red arrow, and to the right of the arrow you will indicate the value of the characteristic vector. This is how the automaton A 1 (“PISK”) will work when you type the word “SEARCH” into it.

Note, in fact, the sequence of changes in the states of the automaton is not determined by the specific word supplied to the input of the automaton, but only by the sequence of characteristic vectors. This sequence may be the same for two different words. For example, if the automaton is set to the word “mother”, then the sequence of characteristic vectors will be the same for the words “Lama”, “frame”, “lady”, etc.

Another interesting fact is that the structure of admissible transitions of the automaton does not change for i = 0 .. | W | - (N + 1) .

These two circumstances make it possible, when using a software implementation of fuzzy search algorithms, to use a machine that is not calculated for each specific word, but its universal imitation. That is why the title of the post deals with the “universal” Lowenstein automaton.

The calculation of the automaton for a particular word S is reduced in this case to a simple calculation of the characteristic vectors for each character x of the word S. The change of states is programmatically implemented as a simple increase in the two variables e , i by a value determined from universal tables. Schulz and Mihov (2002) showed that the calculation of all characteristic vectors for the word S can be made in time O (| S |) . This is the temporary complexity of the machine.

Symbols of the word S are sequentially fed to the input of the machine. If, after submitting a symbol, it becomes clear that the distance between lines S and W exceeds the threshold value N , then the machine is in the “empty” state — there are no active states in the machine. In this case, the need to calculate the characteristic vectors for the remaining characters of the word S is eliminated.

This is how the automaton “fulfills” the word S = ”IKS” with W = ”PISK”:

After entering the “AND” symbol in the machine, four active states appeared at once, however, after entering the “K” symbol, there were no active states left - the machine turned out to be in an error state and did not accept the word “X”. In this case, the calculation of the characteristic vectors for the symbol “C” was not carried out.

Deterministic Lowenstein automaton

In accordance with the theorem on determinism, for any non-deterministic finite-state machine, an equivalent deterministic finite-state machine can be constructed. Why do we need a deterministic automaton? Just when the software implementation, it will work faster. Mainly due to the fact that it can have only one current state and, as a result, when entering the next character, it will be necessary to calculate only one characteristic vector and define only one transition using the transition table.

If, for the nondeterministic Lowenstein automaton 1 (W) considered above, iterate through all possible values of the characteristic vectors, then it can be verified that the states constituting one of the following sets can be active at the same time:

The six sets listed above will be the states of the deterministic Lowenstein automaton for N = 1. More precisely, the automaton will have six states for i = 0 .. | W | -2 , three states for i = | W | -1 and two more states for i = | W | .

The dimension of the characteristic vector for a deterministic automaton can be calculated as 2N + 1 . Then the automaton transition table for a word from | W | letters with N = 1 should have 2 2x1 + 1 lines and 6x (| W | -1) + 3 + 2 columns (for example, 8x35 for a word of 6 letters). In addition, such a table will have to be calculated for each value of | W | separately. This is not very convenient and requires additional time to calculate or additional memory for storage.

However, as I already wrote above, the composition of admissible transitions of the automaton does not change for i = 0 .. | W | - (2N + 1) . Therefore, with a software implementation, it is much more convenient to simulate a deterministic automaton instead of calculating the real one. To do this, it is enough to store the offset value i and use a universal jump table with eight rows and six columns. Such a table can be calculated in advance.

As i increases, some states of the automaton become unreachable, therefore for i = | W | -2 .. | W | separate smaller tables should be provided.

Further, speaking of the Levenshtein deterministic automaton, I will imply the universal imitation described above.

With increasing N, the number of states increases exponentially. So, for N = 2, a deterministic automaton can have 42 states, for N = 3, already several hundred. This means that the memory consumption will be proportional to O (N 2 ) .

The initial state of the Levenshtein deterministic automaton will be the state A 0 .

Which states will be final? Those that correspond to the final states of a non-deterministic automaton. For N = 1, these will be the states A | W | , B | W | , A | W | -1 , C | W | -1 , D | W | -2 , E | W | -2 , F | W | -2 .

Since the number of transitions between the six states is very large, and the physical meaning of the states of the deterministic Lowenstein automaton is not obvious, I will not give here a graphical representation. I think that the picture is not quite clear. If you still want to see her, you can find in the article by Mihov and Schultz (2004) . I will give one more example of the work of a non-deterministic automaton, but this time I will indicate in what state its deterministic equivalent is at each moment.

Software implementation of the Levenshtein deterministic automaton

I wrote the software implementation of the Lowenstein automaton in C # - this language is most familiar to me. Sources can be found here . Universal conversion tables are implemented as fields of the static class ParametricDescription. The class contains universal conversion tables for N = 1.2.

In addition to transition tables, the ParametricDescription class also contains offset increment tables. The offset increment is the amount by which the value of i must be increased when entering the next state.

The Levenshtein automaton itself is implemented in the LevTAutomataImitation class. All class methods are quite simple and I will not describe them in detail. When performing a fuzzy search in the dictionary, it is enough to create one instance of the class per request.

Please note - the creation of an instance of the LevTAutomataImitation class is performed in a small constant time for any values of W , S , N. An instance of the class stores only the value of W and auxiliary variables of small size.

To select only those lines from the array of lines that are not more than 2 from the Damerau-Levenshtein distance, you can use the following code:

//Misspelled word string wordToSearch = "fulzy"; //Your dictionary string[] dictionaryAsArray = new string[] { "fuzzy", "fully", "funny", "fast"}; //Maximum Damerau-Levenstein distance const int editDistance = 2; //Constructing automaton LevTAutomataImitation automaton = new LevTAutomataImitation (wordToSearch, editDistance); //List of possible corrections IEnumerable<string> corrections = dictionaryAsArray.Where(str => automaton.AcceptWord(str)); The code above implements the simplest fuzzy search algorithm in the dictionary using the Levenshtein automaton — an exhaustive search algorithm. Of course, this is not the most efficient algorithm. I will talk about more efficient search algorithms using the Levenshtein automaton in the second part of the article .

Links

- Source codes for an article in C #

- Levenshtein distance

- Distance Damerau-Levenshteyn

- State machine

- Good post about a fuzzy search in the dictionary and text

- Brief description of the Levenshtein automaton

- A detailed mathematical description of the Levenshtein automaton in the article by Schultz and Mikhov (2002)

- Another article by Mihov and Schulz (2004) on the same topic.

- The history of the introduction of Levenshtein's automaton into the fuzzy search for Lucene

- Implementations in java: one and two

- The second part of my publication

Source: https://habr.com/ru/post/275937/

All Articles