Fast and Furious Symfony + HHVM + MongoDB + CouchDB + Varnish

Today we want to talk about how we built a system that serves more than 1 million unique visitors per day (not counting API requests), about the finer points of its architecture, and about the rakes we stepped on and the pitfalls we ran into along the way. Let's go...

Initial data

The system is built on Symfony 2.3 and runs on DigitalOcean droplets. It runs briskly, and we have no complaints.


Symfony

Symfony has a great kernel.terminate event. All the heavy work (writing to files, saving data to the cache, writing to the database) is done here in the background, after the client has received the response from the server.
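As a rough illustration of this pattern, here is a minimal sketch in plain PHP. The `DeferredTaskQueue` class and its method names are hypothetical and are not taken from the project or from Symfony. The assumption is that `onKernelTerminate()` would be registered as a listener for the `kernel.terminate` event, so the queued callbacks run only after the response has been sent.

```php
<?php

/**
 * Hypothetical helper: collects heavy tasks during the request
 * and runs them later, e.g. from a kernel.terminate listener.
 */
class DeferredTaskQueue
{
    /** @var callable[] */
    private $tasks = array();

    /** Queue a task instead of running it during the request. */
    public function defer($task)
    {
        $this->tasks[] = $task;
    }

    /**
     * Assumed to be wired to kernel.terminate; the event argument
     * is ignored here so the sketch runs without Symfony.
     * Returns the number of tasks that were executed.
     */
    public function onKernelTerminate($event = null)
    {
        $tasks = $this->tasks;
        $this->tasks = array();
        foreach ($tasks as $task) {
            call_user_func($task);
        }
        return count($tasks);
    }
}

// Usage: nothing is written until the terminate handler fires.
$log = array();
$queue = new DeferredTaskQueue();
$queue->defer(function () use (&$log) { $log[] = 'cache saved'; });
$queue->defer(function () use (&$log) { $log[] = 'db written'; });
$ran = $queue->onKernelTerminate();
```

Controllers would only call `defer()`, so the time spent on the queued work is not added to the response time seen by the client.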

As you know, every loaded Symfony bundle increases memory consumption to some degree. So for each component of the system we load only the set of bundles it needs (for example, the frontend does not need the admin bundles, and the API needs neither the admin nor the frontend bundles, etc.). The bundle list in the example is shortened for simplicity; in reality there are, of course, more of them:

Class /app/BaseAppKernel.php

```php
<?php

use Symfony\Component\HttpKernel\Kernel;
use Symfony\Component\Config\Loader\LoaderInterface;

class BaseAppKernel extends Kernel
{
    protected $bundle_list = array();

    public function registerBundles()
    {
        // Bundles that are required everywhere
        $this->bundle_list = array(
            new Symfony\Bundle\FrameworkBundle\FrameworkBundle(),
            new Symfony\Bundle\SecurityBundle\SecurityBundle(),
            new Symfony\Bundle\TwigBundle\TwigBundle(),
            new Symfony\Bundle\MonologBundle\MonologBundle(),
            new Symfony\Bundle\AsseticBundle\AsseticBundle(),
            new Doctrine\Bundle\DoctrineBundle\DoctrineBundle(),
            new Sensio\Bundle\FrameworkExtraBundle\SensioFrameworkExtraBundle(),
            new Doctrine\Bundle\MongoDBBundle\DoctrineMongoDBBundle()
        );

        // Load all remaining bundles only when they are really needed
        if ($this->needLoadAllBundles()) {
            // Admin
            $this->addBundle(new Sonata\BlockBundle\SonataBlockBundle());
            $this->addBundle(new Sonata\CacheBundle\SonataCacheBundle());
            $this->addBundle(new Sonata\jQueryBundle\SonatajQueryBundle());
            $this->addBundle(new Sonata\AdminBundle\SonataAdminBundle());
            $this->addBundle(new Knp\Bundle\MenuBundle\KnpMenuBundle());
            $this->addBundle(new Sonata\DoctrineMongoDBAdminBundle\SonataDoctrineMongoDBAdminBundle());

            // Frontend
            $this->addBundle(new Likebtn\FrontendBundle\LikebtnFrontendBundle());

            // API
            $this->addBundle(new Likebtn\ApiBundle\LikebtnApiBundle());
        }

        return $this->bundle_list;
    }

    /**
     * Checks whether all bundles must be loaded.
     * This is the case in the dev and test environments and when
     * the prod cache is cleared (console commands, PHPUnit runs).
     */
    public function needLoadAllBundles()
    {
        return in_array($this->getEnvironment(), array('dev', 'test'))
            || $_SERVER['SCRIPT_NAME'] == 'app/console'
            || strstr($_SERVER['SCRIPT_NAME'], 'phpunit');
    }

    /**
     * Adds a bundle to the list unless it is already there.
     */
    public function addBundle($bundle)
    {
        if (in_array($bundle, $this->bundle_list)) {
            return false;
        }

        $this->bundle_list[] = $bundle;

        return true;
    }

    public function registerContainerConfiguration(LoaderInterface $loader)
    {
        $loader->load(__DIR__.'/config/config_'.$this->getEnvironment().'.yml');
    }
}
```

Class /app/AppKernel.api.php

```php
<?php

require_once __DIR__.'/BaseAppKernel.php';

class AppKernel extends BaseAppKernel
{
    public function registerBundles()
    {
        parent::registerBundles();

        $this->addBundle(new Likebtn\ApiBundle\LikebtnApiBundle());

        return $this->bundle_list;
    }
}
```

Fragment /web/app.php

```php
// Pick the kernel to load by host name
// (checking $_SERVER['REQUEST_URI'] is an alternative)
if (strstr($_SERVER['HTTP_HOST'], 'admin.')) {
    // Admin area
    require_once __DIR__.'/../app/AppKernel.admin.php';
} elseif (strstr($_SERVER['HTTP_HOST'], 'api.')) {
    // API
    require_once __DIR__.'/../app/AppKernel.api.php';
} else {
    // Frontend
    require_once __DIR__.'/../app/AppKernel.php';
}

$kernel = new AppKernel('prod', false);
```

The trick is that all bundles need to be loaded only in the dev environment and while the cache is being cleared in the prod environment.

MongoDB

MongoDB on Compose.io is used as the main database. The database lives in the same datacenter as the main server: fortunately, Compose lets you host the database on DigitalOcean.

At some point we ran into slow queries that began to drag down overall system performance. The issue was resolved with well-designed indexes. Practically every manual on creating MongoDB indexes states that if a query uses range operators ($in, $gt or $lt), the index will not be used for it under any circumstances. For example:

```
{"_id":{"$gt":ObjectId('52d89f120000000000000000')},"ip":"140.101.78.244"}
```

So, this is not entirely true. Here is a universal algorithm for building indexes that lets queries with range selection use an index (you can read why this algorithm works here):

- First, the index includes the fields that are matched against specific values.

- Then, the fields used for sorting.

- Finally, the fields involved in range selection.

And voila:
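Applied to the example query above, the ordering rule can be sketched as follows. The `buildIndexKeys()` function is a hypothetical helper written for illustration, not part of any MongoDB driver; it only arranges field names in the order equality, sort, range.

```php
<?php

/**
 * Hypothetical helper: orders index fields as equality, then sort, then range.
 * Each argument maps a field name to its direction (1 or -1).
 */
function buildIndexKeys(array $equality, array $sort, array $range)
{
    $keys = array();
    foreach (array($equality, $sort, $range) as $group) {
        foreach ($group as $field => $direction) {
            // A field already placed by an earlier group keeps its position.
            if (!isset($keys[$field])) {
                $keys[$field] = $direction;
            }
        }
    }
    return $keys;
}

// The example query matches "ip" exactly and selects a range on "_id".
$keys = buildIndexKeys(
    array('ip' => 1),   // exact-value fields
    array(),            // sort fields (none in the example)
    array('_id' => 1)   // range fields
);
```

The resulting array puts `ip` before `_id`. It could then be passed to the driver's index-creation call, for example `MongoCollection::ensureIndex()` in the legacy PHP driver.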

CouchDB

We decided to store statistics in CouchDB and serve them directly to clients via JavaScript, without authorization and without hitting our server yet again. We had not worked with this database before; what won us over was the phrase “CouchDB is designed specifically for the web”.

When everything was set up and it was time for load testing, it turned out that CouchDB simply choked on our stream of write requests. Almost all CouchDB manuals explicitly advise against using it for frequently updated data, but we, of course, did not believe them and hoped for the best. As a quick fix, we started accumulating data in Memcached and flushing it to CouchDB at short intervals.
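The buffering idea can be sketched roughly as follows. This is not the project's code: the `StatsBuffer` class is hypothetical, a plain PHP array stands in for Memcached, and a callback stands in for the write to CouchDB, so that the sketch runs on its own. Flushing from a periodic job is likewise an assumption.

```php
<?php

/**
 * Hypothetical write buffer. A plain array stands in for Memcached and a
 * callback stands in for the CouchDB write.
 */
class StatsBuffer
{
    private $counters = array();
    private $flushCallback;

    public function __construct($flushCallback)
    {
        $this->flushCallback = $flushCallback;
    }

    /** Called on every hit: cheap, touches only the buffer. */
    public function increment($key, $by = 1)
    {
        if (!isset($this->counters[$key])) {
            $this->counters[$key] = 0;
        }
        $this->counters[$key] += $by;
    }

    /**
     * Called at short intervals (assumed: a periodic job).
     * Hands the accumulated counters to the callback in one batch
     * and returns the number of keys that were flushed.
     */
    public function flush()
    {
        $batch = $this->counters;
        $this->counters = array();
        if ($batch) {
            call_user_func($this->flushCallback, $batch);
        }
        return count($batch);
    }
}

$written = array();
$buffer = new StatsBuffer(function (array $batch) use (&$written) {
    $written[] = $batch; // a real callback would send the batch to CouchDB
});
$buffer->increment('page:1');
$buffer->increment('page:1');
$buffer->increment('page:2');
$flushed = $buffer->flush();
```

Three increments turn into a single batch with two keys, which is the point of the approach: the database sees far fewer writes than the application receives.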

Also, CouchDB keeps revisions of documents, and this feature cannot be disabled with standard tools. We learned this when it was too late to turn back. The compaction procedure, which starts when certain conditions are met, removes old revisions, but revisions still eat up space.

Futon is the CouchDB web admin area, accessible at /_utils/ to everyone, including anonymous users. The only way we found to keep anyone who wishes from browsing the databases was to simply delete the following CouchDB configuration entries in the [httpd_db_handlers] section (the admin also loses the ability to view document lists):

```
_all_docs = {couch_mrview_http, handle_all_docs_req}
_changes = {couch_httpd_db, handle_changes_req}
```

All in all, CouchDB kept us on our toes.

HHVM

The backends that prepare the main content run on HHVM, which in our case is much faster and more stable than the PHP-FPM + APC combination we used before. Fortunately, Symfony 2.3 is 100% compatible with HHVM. Installing HHVM on Debian 8 went without any trouble.

To let HHVM talk to MongoDB, we use the Mongofill for HHVM extension, implemented half in C++ and half in PHP. Due to a small bug, when a database query fails, it crashes with:

```
Fatal error: Class undefined: MongoCursorException
```

However, this does not prevent the extension from working successfully in production.

Varnish

Varnish, a real beast, is used for caching and for serving content directly. We ran into a problem: for some reason varnishd periodically killed its child processes. It looked like this:

varnishd[23437]: Child (23438) not responding to CLI, killing it. varnishd[23437]: Child (23438) died signal=3 varnishd[23437]: Child cleanup complete varnishd[23437]: child (3786) Started varnishd[23437]: Child (3786) said Child starts This led to clearing the cache and a sharp increase in the load on the system as a whole. The reasons for this behavior, as it turned out, a great many, as well as tips and recipes for treatment. First, we sinned on the

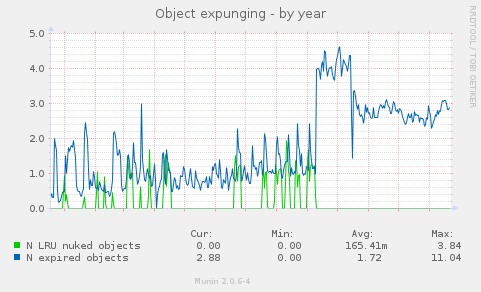

-p cli_timeout=30s parameter in / etc / default / varnish, but it turned out not to be in it. In general, after rather lengthy experiments and searching the parameters, it was established that this happened at those moments when Varnish began to actively delete items from the cache in order to place new ones. Experimentally, for our system, the beresp.ttl parameter in default.vcl was selected, which is responsible for the item's storage time in the cache, and the situation returned to normal: sub vcl_fetch { /* Set how long Varnish will keep it*/ set beresp.ttl = 7d; } The beresp.ttl parameter had to be set such that the old elements were deleted (expired objects) from the cache before the new elements started to run out of space (nuked objects) in the cache:

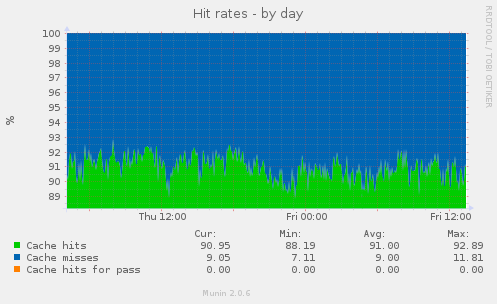

The cache hit rate has so far held steady at around 91%.

For new settings to take effect, Varnish needs to be restarted. A restart clears the cache, with all the consequences that entails. Here is a trick that loads new configuration settings without restarting Varnish and without losing the cache:

```
varnishadm -T 0.0.0.0:6087 -S /etc/varnish/secret
vcl.load config01 /etc/varnish/default.vcl
vcl.use config01
quit
```

config01 is the name of the new configuration; you can choose it arbitrarily, for example: newconfig, reload, etc.

Cloudflare

CloudFlare sits in front of the whole setup, caches static content, and also provides SSL certificates.

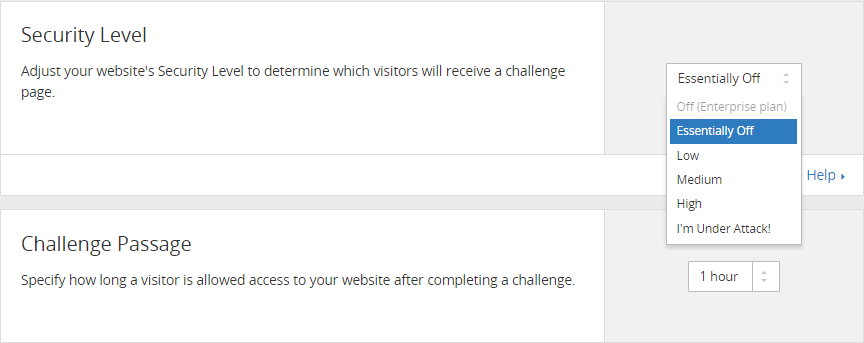

Some clients had trouble accessing our API: they were asked to pass a CAPTCHA (“Challenge Passage”). As it turned out, CloudFlare uses Project Honey Pot and other similar services to keep track of servers that are potential spammers, and those were the ones receiving the challenge. For a long time CloudFlare tech support could not offer a sensible solution. In the end, simply switching the Security Level to Essentially Off in the CloudFlare panel helped.

Conclusion

That's all for now. The load on the project grew rapidly and there was very little time for analysis and for searching for solutions, so we have what we have. We will be grateful if someone suggests more elegant ways to solve the problems described above.

Source: https://habr.com/ru/post/275661/
