Parallel algorithms for processing BigData: pitfalls and difficult decisions

This publication is based on the AlexSerbul speech at the BigData Conference in the fall.

Big data is a fashionable and popular topic. But many are still scared away by an excess of theoretical reasoning and some lack of practical recommendations. In this post, I want to partly fill this gap and talk about using parallel algorithms for processing big data on the example of clustering a product catalog of 10 million items.

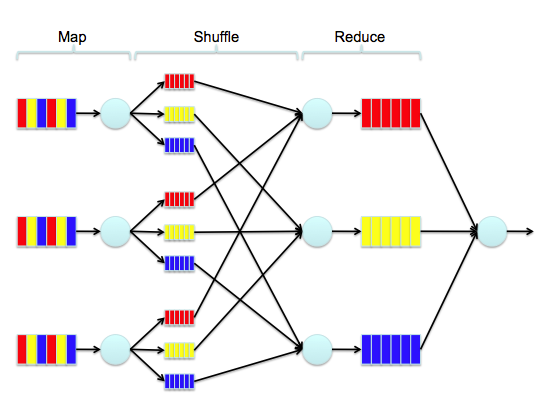

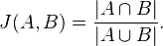

Unfortunately, when you have a lot of data, you will have to “reinvent” the classical algorithm anew, so that it works in parallel using MapReduce. And this is a big problem.

')

What is the way out? In order to save time and money, you can certainly try to find a library that allows you to implement a parallel clustering algorithm. There is of course something on the Java platform: the dying Apache Mahout and the evolving Apache Spark MLlib. Unfortunately, Mahout supports few algorithms under MapReduce - most of them are consistent.

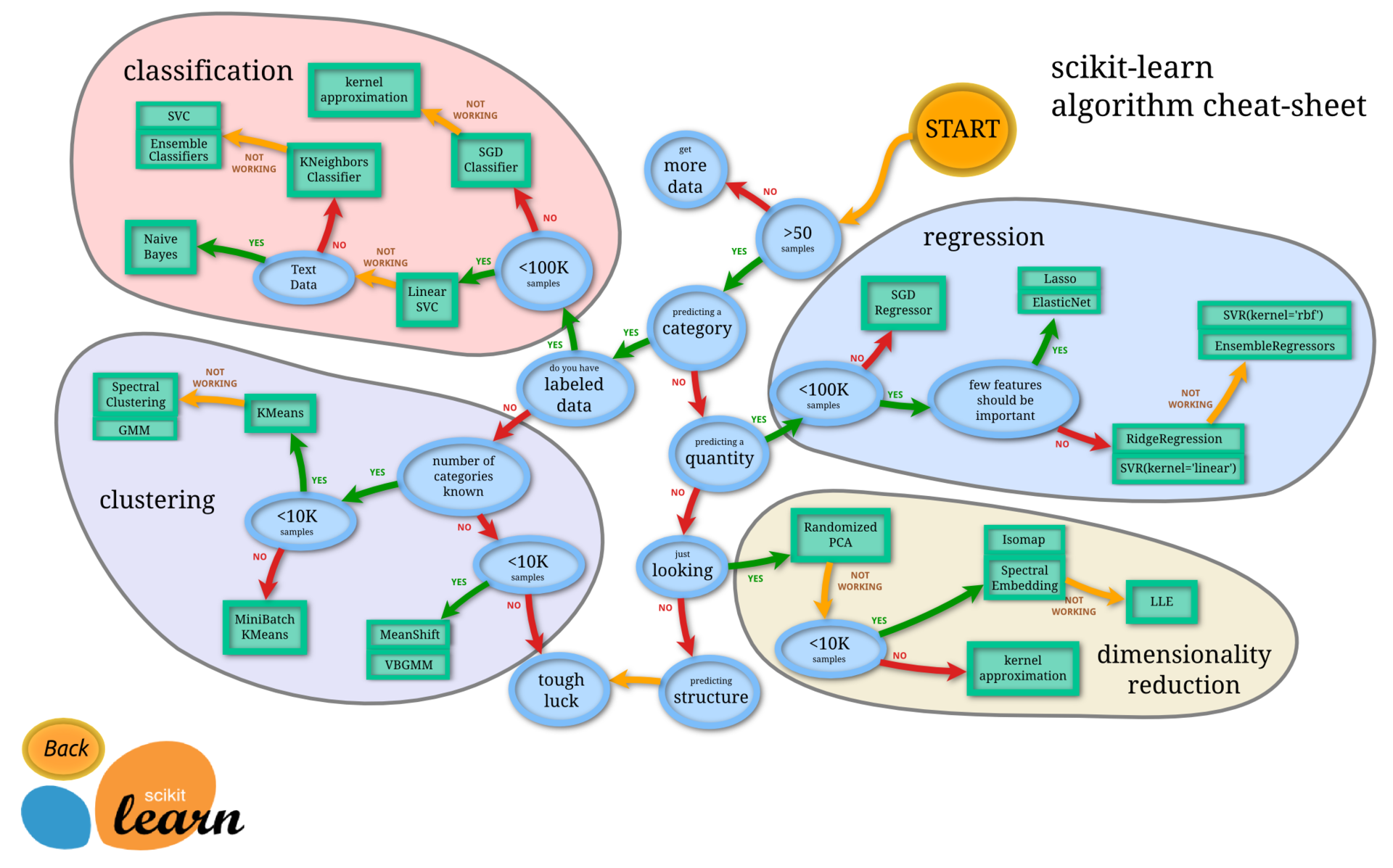

The promising mountain Spark MLlib is also not rich in clustering algorithms. And with our volumes the matter is even worse - this is what is proposed:

When you have 10-20 million entities for clustering, the above solutions will no longer help, you need hardcore. But first things first.

So, we need to cluster a catalog of 10 million items. Why do you need it? The fact is that our users can use a recommendation system in their online stores. And her work is based on an analysis of the aggregated catalog of products of all sites operating on our platform. For example, in one of the shops the buyer was recommended to choose an ax to kill his mother-in-law (yes, this is a corporate blog, but without jokes about bigdates and mathematics, it’s impossible to say that everyone will fall asleep). The system will know about it, analyze it and in another store will recommend the button accordion to the same buyer to play and rejoice. That is, the first problem solved by clustering is the transfer of interest.

The second task: to ensure the creation of correct logical connections of goods to increase related sales. For example, if a user bought a camera, the system will recommend a battery and a memory card to it. That is, we need to cluster similar products into clusters, and work in different stores at the cluster level.

As noted above, our product database consists of catalogs of several tens of thousands of online stores operating on the 1C-Bitrix platform. Users enter there textual descriptions of different lengths, someone adds brands of goods, models, manufacturers. It would be expensive, time consuming and difficult to create a consistent, accurate, unified system for collecting and classifying all the goods from tens of thousands of stores. And we wanted to find a way to quickly glue similar products together and significantly improve the quality of collaborative filtering. After all, if the system knows the buyer well, then it does not matter what specific goods and their brands he is interested in, the system will always find what to offer him.

First of all, it was necessary to choose a storage system suitable for working with “big” data. I will repeat a little, but this is useful for securing the material. For ourselves, we have identified four "camps" of databases:

Having taken up the issue of clustering closely, we found out that there are not so many ready-made offers on the market.

As a result, we stopped at SparkMLlib, because there, as it seemed, there are reliable parallel clustering algorithms.

We had to first figure out how to combine text descriptions of goods (names and short descriptions) into clusters. Natural language processing is a separate, huge area of Computer Science, which includes machine learning and Information retrieval.

and linguistics and even (tadam!) Deep Learning.

When there are tens, hundreds, thousands of data for clustering, almost any classical algorithm will suit, even hierarchical clustering. The problem is that the algorithmic complexity of hierarchical clustering is approximately O (N 3 ). That is, you have to wait for “billions of years” until it runs on our data volume, on a huge cluster. And it was impossible to confine ourselves to processing just a sample. Therefore, hierarchical clustering in the forehead did not suit us.

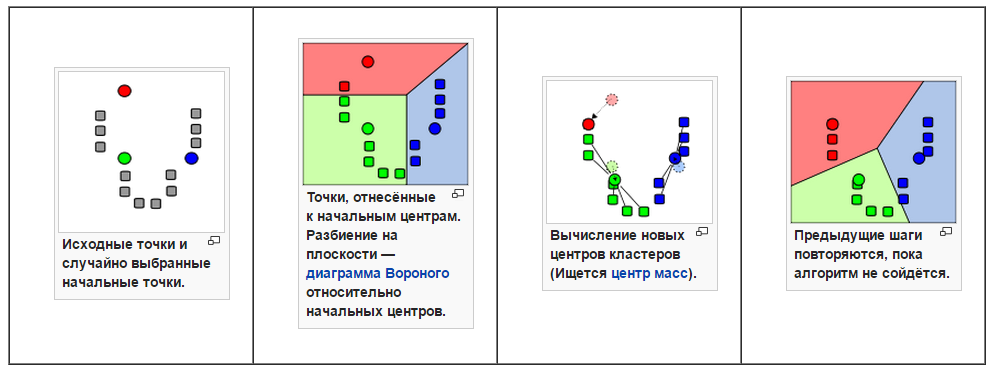

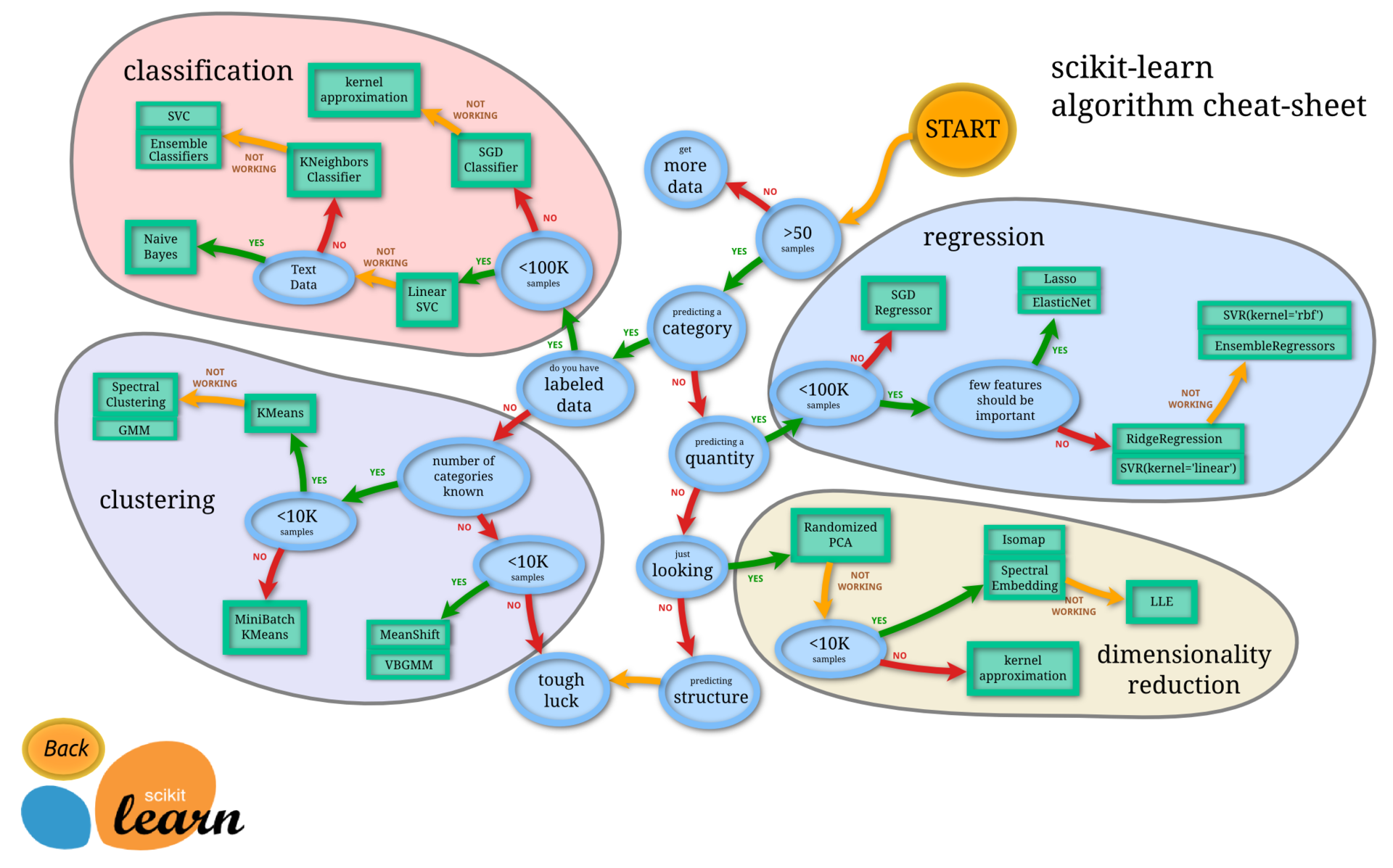

Then we turned to the "bearded" algorithm K-means:

This is a very simple, well-studied and common algorithm. However, it also works very slowly on big data: the algorithmic complexity is approximately O (nkdi). With n = 10,000,000 (number of goods), k = 1,000,000 (expected number of clusters), d = <1,000,000 (word types, vector dimension), i = 100 (approximate number of iterations), O = 10 21 operations . For comparison, the age of the Earth is 1.4 * 10 17 seconds.

The C-means algorithm, although it allows you to do fuzzy clustering, but also works on our volumes slowly, just like spectral factorization. For the same reason, DBSCAN and probabilistic models did not suit us.

To implement clustering, we decided at the first stage to turn the text into vectors. A vector is a certain point in multidimensional space, the clusters of which will be the desired clusters.

We needed to cluster descriptions of products consisting of 2-10 words. The simplest, classic solution to the forehead or to the eye is bag of words . Once we have a directory, then we can define a dictionary. As a result, we have a body of about a million words. After stemming, there are about 500 thousand of them left. They discarded high-frequency and low-frequency words. Of course, you could use tf / idf, but decided not to complicate things.

What are the disadvantages of this approach? The resulting huge vector is expensive then cheated, comparing its similarity with others. After all, what is clustering? This is the process of finding similar vectors. And when they are 500 thousand in size, the search takes a lot of time, so you need to learn how to compress them. To do this, you can use Kernel hack, hashing words not in 500 thousand attributes, but in 100 thousand. A good working tool, but collisions may occur. We did not use it.

And finally, I will talk about one more technology that we have rejected, but now we are seriously considering starting to use it. This is Word2Vec, a method for statistical processing of text by compressing the dimension of text vectors of a two-layer neural network, developed by Google. In fact, this is the development of the good old eternal statistical N-gram text models, only skip-gram variation is used.

The first task, which is beautifully solved using Word2Vec: reducing the dimension due to the "matrix factorization" (in quotes specifically, because there are no matrices there, but the effect is very similar). That is, it turns out, for example, not 500 thousand attributes, but only 100. When there are similar words “in context”, the system considers them “synonymous” (with a stretch, of course, coffee and tea can be combined). It turns out that the points of these similar words in a multidimensional space begin to coincide, that is, words that are close in meaning are clustered into a common cloud. Say, “coffee” and “tea” will be words that are close in meaning, because they are often found in context together. Thanks to the Word2Wec library, you can reduce the size of the vectors, and they themselves are more meaningful.

This topic has been around for many years: Latent semantic indexing and its variations through PCA / SVD have been studied well, and a solution to the forehead through clustering columns or rows of the term2document matrix will, in fact, give a similar result - only it will take a very long time.

It’s very likely that we’ll start using Word2Vec. By the way, its use also allows you to find typos and play with the vector algebra of sentences and words.

In the end, after all the long search for scientific publications, we wrote our own version of k-Means - Clustering by Bootstrap Averaging for Spark.

In essence, this is a hierarchical k-Means, which makes preliminary layer-by-layer data sampling. It took quite a reasonable time to process 10 million items, hours, although it took a bunch of servers to work. But the result did not suit, because it was not possible to cluster part of the text data - the socks were glued to the aircraft. The method worked, but very roughly and inaccurately.

There was hope for the old, but now forgotten, probabilistic methods of searching for duplicates or "almost duplicates" - locality-sensitive hashing .

The variant of the method described here required the use of vectors of the same size transformed from text, for further “scattering” of them by hash functions. And we took MinHash.

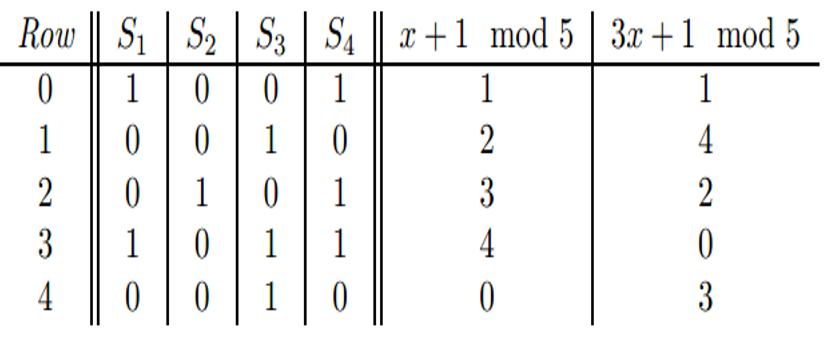

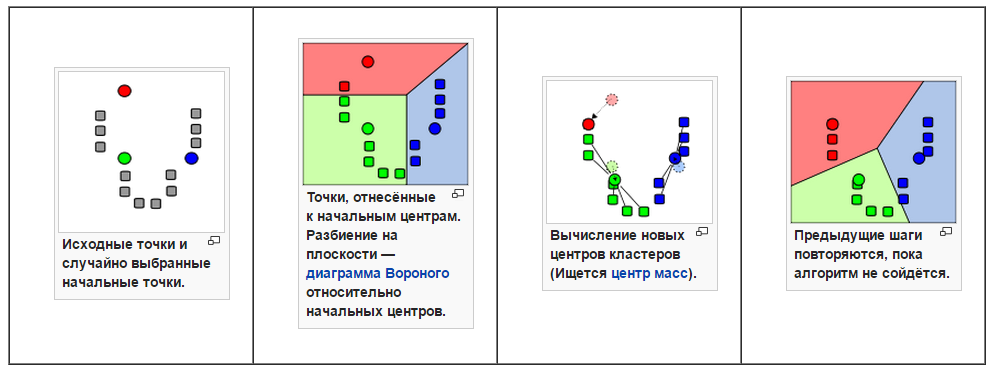

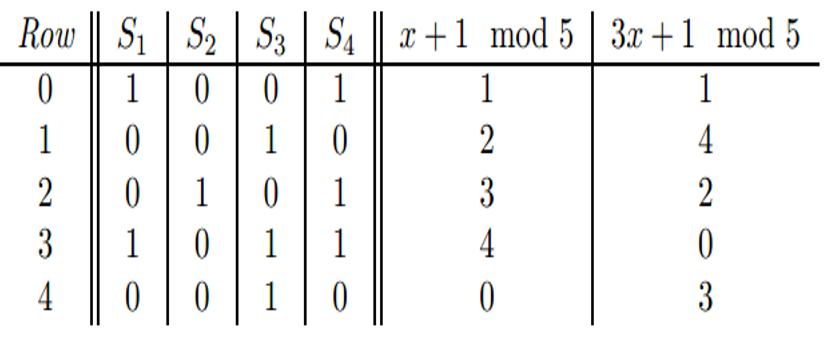

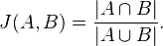

MinHash is a technology for compressing large-dimension vectors into a small vector while preserving mutual Jaccard-likeness. How does she work? We have a number of vectors or sets of sets, and we define a set of hash functions through which we run each vector / set.

We define, for example, 50 hash functions. Then we run each hash function by vector / set, determine the minimum value of the hash function, and get a number that we write to the N-position of the new squeezed vector. We do it 50 times.

Pr [h min (A) = h min (B)] = J (A, B)

Thus, we solved the problem of compressing dimensions and reducing vectors to a single number of dimensions.

I completely forgot to tell that we refused to vectorize the text in the forehead, because the names and brief descriptions of the goods created extremely discharged vectors suffering from the "curse of dimension".

Product names were usually of approximately the same type and size:

"Pants red terry striped"

"Red Striped Pants"

These two phrases differ in a set of words, in their number and location. In addition, people make typos when typing. Therefore, it is impossible to compare words even after a stemming, because all the texts will be mathematically dissimilar, although they are close in meaning.

In such a situation, the algorithm of shingles (shingle, scales, tiles) is often used. We present the text in the form of shingles, pieces.

{"Pants", "tans", "any", "us to", "s kra", "red", ...}

And when comparing the many pieces, it turns out that two texts of different texts may suddenly find similarity with each other. We have experimented with text processing for a long time, and in our experience, this is the only way to compare short text descriptions in our product catalog. This method is also used to identify similar articles, scientific papers, with the aim of detecting plagiarism.

I repeat once again: we abandoned highly sparse text vectors, replaced each text with many (set) shingles, and then brought them to the same size using MinHash.

As a result, we solved the problem of catalog vectorization as follows. Using MinHash-signatures, we obtained small-sized vectors from 100 to 500 (the size is chosen the same for all vectors). Now they need to compare each with each to form clusters. In the forehead, as we already know, it is very long. And thanks to the LSH ( Locality-Sensitive Hashing ) algorithm, the task was solved in one pass.

The idea is that similar objects, texts, vectors collide into one set of hash functions, into one bucket. And then it remains to go through them and collect similar elements. After clustering, you get a million buckets, each of which will be a cluster.

Traditionally use several bands - sets of hash functions. But we simplified the task even more - we left only one band. Suppose that the first 40 elements of a vector are taken and entered into a hash table. And then there are elements that have the same piece first. That's all! For starters, it's great. If greater accuracy is needed, then you can work with the bands group, but then in the final part of the algorithm you will have to collect mutually similar objects from them for a longer time.

After the first iteration, we obtained quite good results: almost all duplicates and almost all similar goods stuck together. Evaluated visually. And to further reduce the number of microclusters, we previously removed frequently encountered and rare words.

Now we have just two or three hours on 8 spot-servers clustered 10 million products in approximately one million clusters. In fact, in one pass, because there is only one band. Having experimented with the settings, we got quite adequate clusters: yachts, cars, sausage, etc., without nonsense like “ax + plane”. And now this compressed cluster model is used to improve the accuracy of the personal recommendation system.

In collaborative algorithms, we began to work not with specific products, but with clusters. A new product appeared, we found a cluster, put it there. And the reverse process - we recommend the cluster, then select the most popular product from it and return it to the user. The use of a cluster catalog improved the accuracy of recommendations several times (we measure the recall of the current model a month earlier). And this is only due to the compression of data (names) and combining them in meaning. Therefore, I want to advise you - look for simple solutions to your problems associated with big data. Do not try to complicate things. I believe that you can always find a simple, effective solution that in 10% of efforts will solve 90% of problems! Good luck and success in working with big data!

Big data is a fashionable and popular topic. But many are still scared away by an excess of theoretical reasoning and some lack of practical recommendations. In this post, I want to partly fill this gap and talk about using parallel algorithms for processing big data on the example of clustering a product catalog of 10 million items.

Unfortunately, when you have a lot of data, you will have to “reinvent” the classical algorithm anew, so that it works in parallel using MapReduce. And this is a big problem.

')

What is the way out? In order to save time and money, you can certainly try to find a library that allows you to implement a parallel clustering algorithm. There is of course something on the Java platform: the dying Apache Mahout and the evolving Apache Spark MLlib. Unfortunately, Mahout supports few algorithms under MapReduce - most of them are consistent.

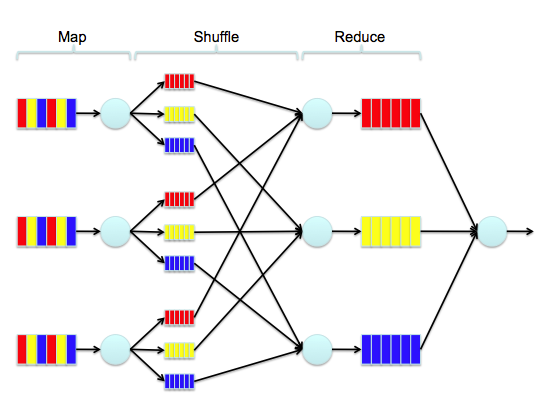

The promising mountain Spark MLlib is also not rich in clustering algorithms. And with our volumes the matter is even worse - this is what is proposed:

- K-means

- Gaussian mixture

- Power iteration clustering (PIC)

- Latent Dirichlet allocation (LDA)

- Streaming k-means

When you have 10-20 million entities for clustering, the above solutions will no longer help, you need hardcore. But first things first.

So, we need to cluster a catalog of 10 million items. Why do you need it? The fact is that our users can use a recommendation system in their online stores. And her work is based on an analysis of the aggregated catalog of products of all sites operating on our platform. For example, in one of the shops the buyer was recommended to choose an ax to kill his mother-in-law (yes, this is a corporate blog, but without jokes about bigdates and mathematics, it’s impossible to say that everyone will fall asleep). The system will know about it, analyze it and in another store will recommend the button accordion to the same buyer to play and rejoice. That is, the first problem solved by clustering is the transfer of interest.

The second task: to ensure the creation of correct logical connections of goods to increase related sales. For example, if a user bought a camera, the system will recommend a battery and a memory card to it. That is, we need to cluster similar products into clusters, and work in different stores at the cluster level.

As noted above, our product database consists of catalogs of several tens of thousands of online stores operating on the 1C-Bitrix platform. Users enter there textual descriptions of different lengths, someone adds brands of goods, models, manufacturers. It would be expensive, time consuming and difficult to create a consistent, accurate, unified system for collecting and classifying all the goods from tens of thousands of stores. And we wanted to find a way to quickly glue similar products together and significantly improve the quality of collaborative filtering. After all, if the system knows the buyer well, then it does not matter what specific goods and their brands he is interested in, the system will always find what to offer him.

Tool selection

First of all, it was necessary to choose a storage system suitable for working with “big” data. I will repeat a little, but this is useful for securing the material. For ourselves, we have identified four "camps" of databases:

- SQL on MapReduce: Hive, Pig, Spark SQL . Load one billion rows into an RDBMS and aggregate the data — it will become clear that this class of storage systems is “not very” suitable for this task. Therefore, intuitively, you can try using SQL via MapReduce (which Facebook did once). The obvious disadvantages here are the speed of short queries.

- SQL on MPP (massive parallel processing): Impala, Presto, Amazon RedShift, Vertica . Representatives of this camp say that SQL through MapReduce is a dead end, so you need, in fact, to raise a driver on each node where data is stored that will quickly access this data. But many of these systems suffer from instability.

- NoSQL: Cassandra, HBase, Amazon DynamoDB . The third camp says: BigData is NoSQL. But we understand that NoSQL is, in fact, a set of “memcached-servers”, combined into a ring structure, which can quickly execute a query like “take the key by value and return the answer”. They can also return a set of data sorted in RAM by key. This is almost everything - forget about JOINS.

- Classics: MySQL, MS SQL, Oracle, etc. Monsters do not give up and claim that all of the above - garbage, grief of mind, and relational databases work fine with BigData. Someone suggests fractal trees ( https://en.wikipedia.org/wiki/TokuDB ), someone - clusters. Monsters, too, want to live.

Having taken up the issue of clustering closely, we found out that there are not so many ready-made offers on the market.

- Spark MLlib (Scala / Java / Python / R) - when a lot of data.

- scikit-learn.org (Python) - when there is little data.

- R - causes conflicting feelings due to the terrible curvature of the implementation (trust programming to mathematicians and extras), but there are many ready-made solutions, and the recent integration with Spark is very pleasing.

As a result, we stopped at SparkMLlib, because there, as it seemed, there are reliable parallel clustering algorithms.

Search for clustering algorithm

We had to first figure out how to combine text descriptions of goods (names and short descriptions) into clusters. Natural language processing is a separate, huge area of Computer Science, which includes machine learning and Information retrieval.

and linguistics and even (tadam!) Deep Learning.

When there are tens, hundreds, thousands of data for clustering, almost any classical algorithm will suit, even hierarchical clustering. The problem is that the algorithmic complexity of hierarchical clustering is approximately O (N 3 ). That is, you have to wait for “billions of years” until it runs on our data volume, on a huge cluster. And it was impossible to confine ourselves to processing just a sample. Therefore, hierarchical clustering in the forehead did not suit us.

Then we turned to the "bearded" algorithm K-means:

This is a very simple, well-studied and common algorithm. However, it also works very slowly on big data: the algorithmic complexity is approximately O (nkdi). With n = 10,000,000 (number of goods), k = 1,000,000 (expected number of clusters), d = <1,000,000 (word types, vector dimension), i = 100 (approximate number of iterations), O = 10 21 operations . For comparison, the age of the Earth is 1.4 * 10 17 seconds.

The C-means algorithm, although it allows you to do fuzzy clustering, but also works on our volumes slowly, just like spectral factorization. For the same reason, DBSCAN and probabilistic models did not suit us.

To implement clustering, we decided at the first stage to turn the text into vectors. A vector is a certain point in multidimensional space, the clusters of which will be the desired clusters.

We needed to cluster descriptions of products consisting of 2-10 words. The simplest, classic solution to the forehead or to the eye is bag of words . Once we have a directory, then we can define a dictionary. As a result, we have a body of about a million words. After stemming, there are about 500 thousand of them left. They discarded high-frequency and low-frequency words. Of course, you could use tf / idf, but decided not to complicate things.

What are the disadvantages of this approach? The resulting huge vector is expensive then cheated, comparing its similarity with others. After all, what is clustering? This is the process of finding similar vectors. And when they are 500 thousand in size, the search takes a lot of time, so you need to learn how to compress them. To do this, you can use Kernel hack, hashing words not in 500 thousand attributes, but in 100 thousand. A good working tool, but collisions may occur. We did not use it.

And finally, I will talk about one more technology that we have rejected, but now we are seriously considering starting to use it. This is Word2Vec, a method for statistical processing of text by compressing the dimension of text vectors of a two-layer neural network, developed by Google. In fact, this is the development of the good old eternal statistical N-gram text models, only skip-gram variation is used.

The first task, which is beautifully solved using Word2Vec: reducing the dimension due to the "matrix factorization" (in quotes specifically, because there are no matrices there, but the effect is very similar). That is, it turns out, for example, not 500 thousand attributes, but only 100. When there are similar words “in context”, the system considers them “synonymous” (with a stretch, of course, coffee and tea can be combined). It turns out that the points of these similar words in a multidimensional space begin to coincide, that is, words that are close in meaning are clustered into a common cloud. Say, “coffee” and “tea” will be words that are close in meaning, because they are often found in context together. Thanks to the Word2Wec library, you can reduce the size of the vectors, and they themselves are more meaningful.

This topic has been around for many years: Latent semantic indexing and its variations through PCA / SVD have been studied well, and a solution to the forehead through clustering columns or rows of the term2document matrix will, in fact, give a similar result - only it will take a very long time.

It’s very likely that we’ll start using Word2Vec. By the way, its use also allows you to find typos and play with the vector algebra of sentences and words.

"I will build my amusement park! .."

In the end, after all the long search for scientific publications, we wrote our own version of k-Means - Clustering by Bootstrap Averaging for Spark.

In essence, this is a hierarchical k-Means, which makes preliminary layer-by-layer data sampling. It took quite a reasonable time to process 10 million items, hours, although it took a bunch of servers to work. But the result did not suit, because it was not possible to cluster part of the text data - the socks were glued to the aircraft. The method worked, but very roughly and inaccurately.

There was hope for the old, but now forgotten, probabilistic methods of searching for duplicates or "almost duplicates" - locality-sensitive hashing .

The variant of the method described here required the use of vectors of the same size transformed from text, for further “scattering” of them by hash functions. And we took MinHash.

MinHash is a technology for compressing large-dimension vectors into a small vector while preserving mutual Jaccard-likeness. How does she work? We have a number of vectors or sets of sets, and we define a set of hash functions through which we run each vector / set.

We define, for example, 50 hash functions. Then we run each hash function by vector / set, determine the minimum value of the hash function, and get a number that we write to the N-position of the new squeezed vector. We do it 50 times.

Pr [h min (A) = h min (B)] = J (A, B)

Thus, we solved the problem of compressing dimensions and reducing vectors to a single number of dimensions.

Text shingling

I completely forgot to tell that we refused to vectorize the text in the forehead, because the names and brief descriptions of the goods created extremely discharged vectors suffering from the "curse of dimension".

Product names were usually of approximately the same type and size:

"Pants red terry striped"

"Red Striped Pants"

These two phrases differ in a set of words, in their number and location. In addition, people make typos when typing. Therefore, it is impossible to compare words even after a stemming, because all the texts will be mathematically dissimilar, although they are close in meaning.

In such a situation, the algorithm of shingles (shingle, scales, tiles) is often used. We present the text in the form of shingles, pieces.

{"Pants", "tans", "any", "us to", "s kra", "red", ...}

And when comparing the many pieces, it turns out that two texts of different texts may suddenly find similarity with each other. We have experimented with text processing for a long time, and in our experience, this is the only way to compare short text descriptions in our product catalog. This method is also used to identify similar articles, scientific papers, with the aim of detecting plagiarism.

I repeat once again: we abandoned highly sparse text vectors, replaced each text with many (set) shingles, and then brought them to the same size using MinHash.

Vectorization

As a result, we solved the problem of catalog vectorization as follows. Using MinHash-signatures, we obtained small-sized vectors from 100 to 500 (the size is chosen the same for all vectors). Now they need to compare each with each to form clusters. In the forehead, as we already know, it is very long. And thanks to the LSH ( Locality-Sensitive Hashing ) algorithm, the task was solved in one pass.

The idea is that similar objects, texts, vectors collide into one set of hash functions, into one bucket. And then it remains to go through them and collect similar elements. After clustering, you get a million buckets, each of which will be a cluster.

Clustering

Traditionally use several bands - sets of hash functions. But we simplified the task even more - we left only one band. Suppose that the first 40 elements of a vector are taken and entered into a hash table. And then there are elements that have the same piece first. That's all! For starters, it's great. If greater accuracy is needed, then you can work with the bands group, but then in the final part of the algorithm you will have to collect mutually similar objects from them for a longer time.

After the first iteration, we obtained quite good results: almost all duplicates and almost all similar goods stuck together. Evaluated visually. And to further reduce the number of microclusters, we previously removed frequently encountered and rare words.

Now we have just two or three hours on 8 spot-servers clustered 10 million products in approximately one million clusters. In fact, in one pass, because there is only one band. Having experimented with the settings, we got quite adequate clusters: yachts, cars, sausage, etc., without nonsense like “ax + plane”. And now this compressed cluster model is used to improve the accuracy of the personal recommendation system.

Results

In collaborative algorithms, we began to work not with specific products, but with clusters. A new product appeared, we found a cluster, put it there. And the reverse process - we recommend the cluster, then select the most popular product from it and return it to the user. The use of a cluster catalog improved the accuracy of recommendations several times (we measure the recall of the current model a month earlier). And this is only due to the compression of data (names) and combining them in meaning. Therefore, I want to advise you - look for simple solutions to your problems associated with big data. Do not try to complicate things. I believe that you can always find a simple, effective solution that in 10% of efforts will solve 90% of problems! Good luck and success in working with big data!

Source: https://habr.com/ru/post/275455/

All Articles