Custom gestures, Kinect + Unity. Part 1

Hello! Today we will learn how to use custom gestures in Unity applications with the help of Kinect v2. Gestures can be used for a wide range of tasks: moving around a scene, manipulating objects, working with the user interface, and so on. In the first part we will look at the process of training gestures; in the second we will use the resulting trained model in Unity. We will also cover problems you may run into and how to solve them.

What we need:

1. Unity 5.0 Pro

2. Kinect v2 SDK

3. Kinect v2 Unity plugin

Prelude. Kinect Check

After downloading and installing the Kinect SDK, make sure that everything works. Open the Kinect SDK Browser and run the Body Basics-WPF sample: you should see the skeleton of the person standing in front of the Kinect. If everything is fine, move on. If not, run the first sample in the same SDK Browser, the Kinect Configuration Verifier, and check what is not working.

Training set

Our example is built around training a discrete gesture: waving a hand from top to bottom. “Discrete” here means that the trained model detects whether the gesture is present, returning a confidence value (how likely it is that the gesture was performed at a given moment). Besides discrete gestures, you can also train long (continuous) ones. The main difference is that for a continuous gesture we can find out where the user currently is between the initial and final phases of the gesture. Continuous gestures are beyond the scope of this tutorial.
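The difference between the two kinds of gestures is easiest to see in what a trained model reports for each frame. Below is a minimal Python sketch with hypothetical stand-in types (they are not the Kinect SDK classes). A discrete gesture yields a confidence, while a continuous one yields a progress value between the initial and final phases.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class DiscreteResult:
    # Hypothetical stand-in: how confident the model is (0.0..1.0)
    # that the gesture is being performed in this frame.
    confidence: float


@dataclass
class ContinuousResult:
    # Hypothetical stand-in: where the user is inside the gesture,
    # from 0.0 (initial phase) to 1.0 (final phase).
    progress: float


def describe(result: Union[DiscreteResult, ContinuousResult]) -> str:
    """Summarize one frame's result from either kind of model."""
    if isinstance(result, DiscreteResult):
        # A discrete model only says how likely it is that the gesture is happening now.
        return f"gesture confidence {result.confidence:.2f}"
    # A continuous model also says how far along the gesture the user is.
    return f"gesture {result.progress:.0%} complete"


if __name__ == "__main__":
    print(describe(DiscreteResult(confidence=0.87)))  # gesture confidence 0.87
    print(describe(ContinuousResult(progress=0.4)))   # gesture 40% complete
```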


To record training clips, we need Kinect Studio v2.0. Let's look at its interface:

If you do not see the output from the Kinect, click the connect button in the upper left corner. On the left is the list of streams to be recorded. By default, the audio and color camera streams are turned off. That suits us, since we do not need them for training. To start recording a clip, click the record button.

Recommendations for recording: the clips should include both “positives” and “negatives”. It therefore makes sense to first record several correctly performed gestures and then a few incorrect ones, which makes tagging the clips easier later. It is also better to record clips with different people. Otherwise the model may learn one particular person's way of performing the gesture.

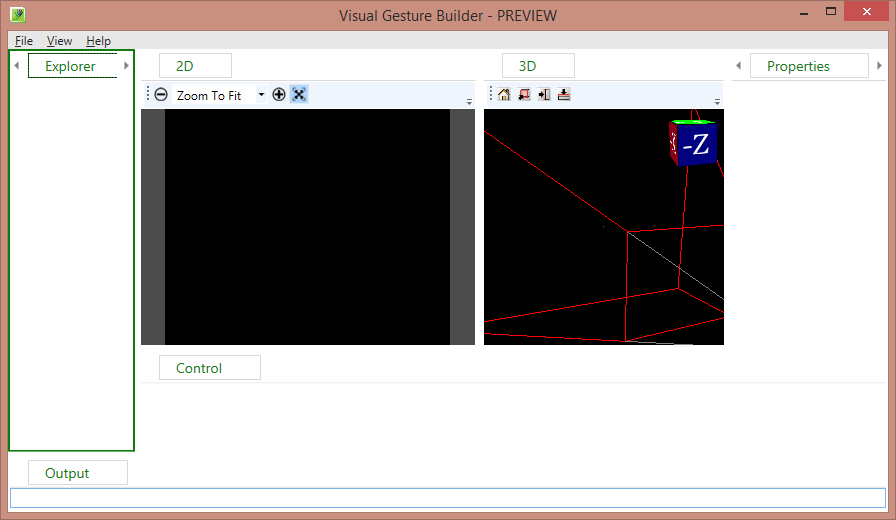

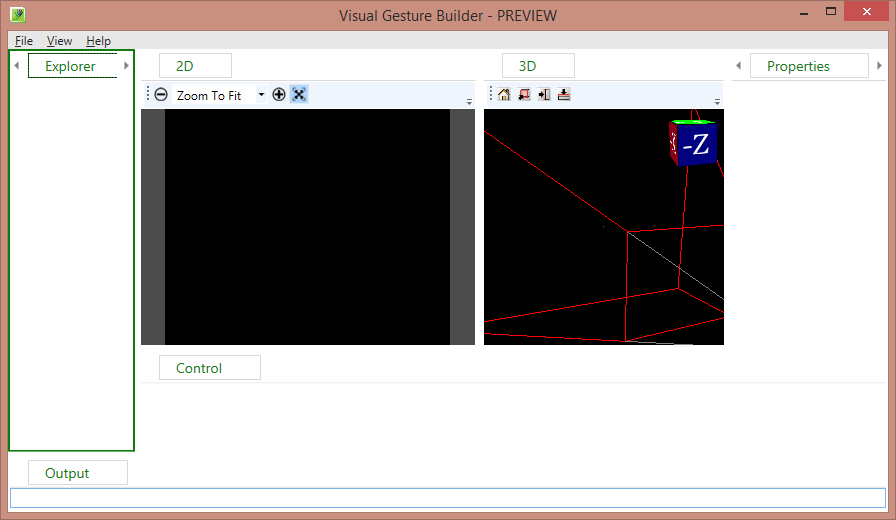

Training

We have now recorded several training clips; for our purposes 3-4 such clips are enough. Next we move on to training, using the Visual Gesture Builder utility. It looks like this:

Let's start: create a new solution via File -> New Solution, select a folder and specify a name.

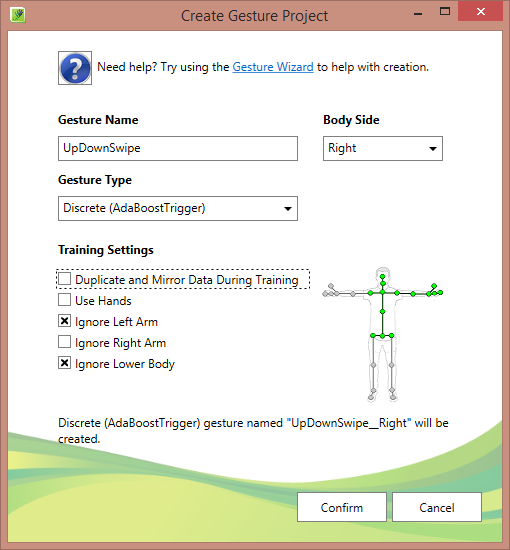

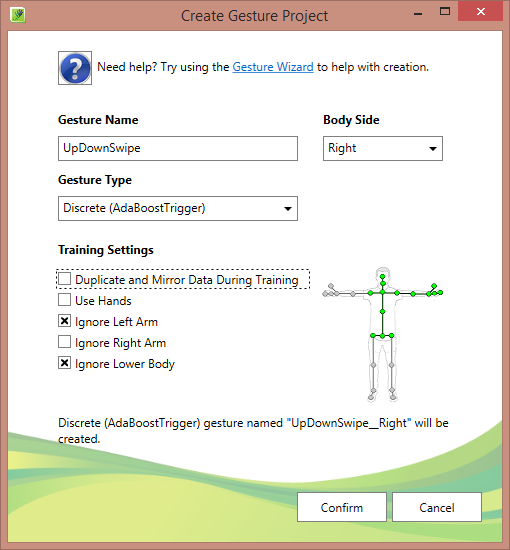

Now right-click the created solution and choose “Create New Project”. The logic is similar to Visual Studio: one solution may contain several projects, and each project is one gesture. The project settings are fairly self-explanatory. We are interested in a discrete gesture of the right hand, so we turn off mirroring (Duplicate and Mirror Data During Training) and ignore the lower body (Ignore Lower Body) and the left arm (Ignore Left Arm). The Use Hands setting is for gestures where the hands themselves matter. “Ignore” here means that these body parts are not taken into account during training.

Now add our clips to the project (I recommend copying the clips into the project folder first):

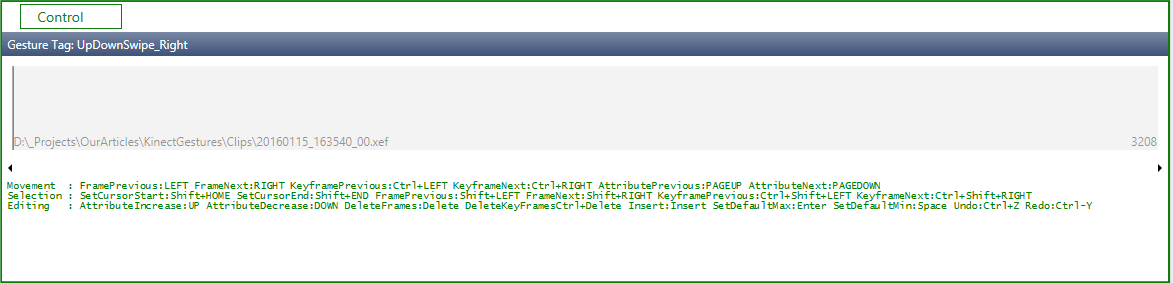

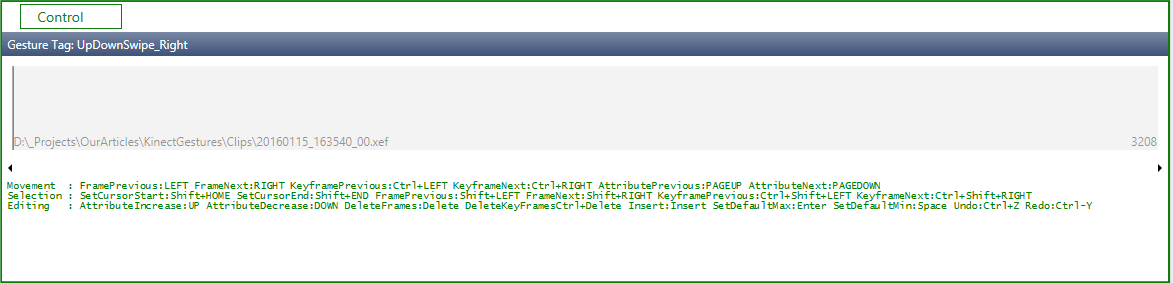

Select a clip. We are interested in this panel:

This is the panel for tagging the clip into positive and negative sections. The controls work as follows:

- Left and right arrow keys move along the timeline.
- Holding Shift while moving extends the selection.
- Space marks the selection as negative.
- Enter marks the selection as positive.

To begin, select everything and mark it as negative. Place the cursor at the beginning of the timeline, press Shift+End (the entire timeline will be selected) and press Space. Next, move through the clip with the arrow keys and look for the moments where the gesture is performed. Select each correct gesture (remember to hold Shift) and press Enter.

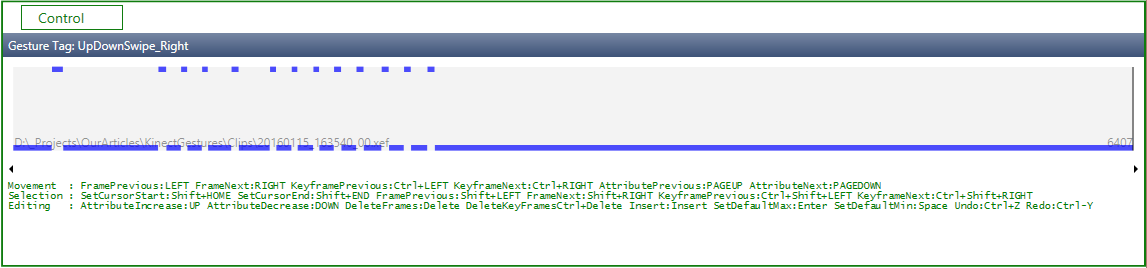

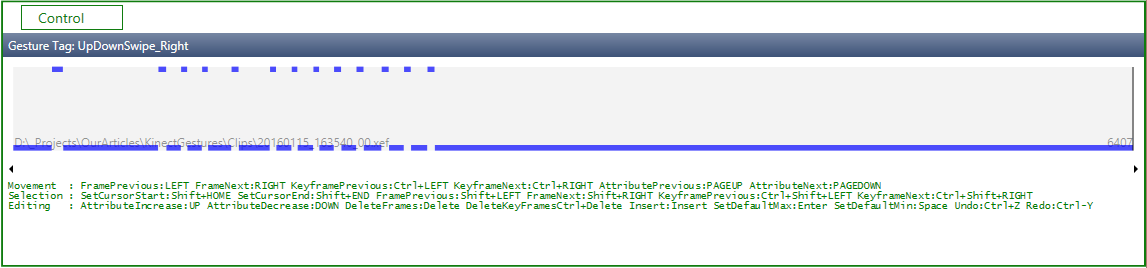

After all these steps you should get something like this:

The blue bars at the top mark positive sections, where the correct gesture is performed. Everything at the bottom is negative, meaning our gesture is absent there.
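The tagged timeline described above amounts to one label per frame. Everything starts as negative (Shift+End, Space), and each selected gesture is then switched to positive (Enter). Here is a minimal Python sketch of that bookkeeping. It assumes tags are stored as inclusive (start, end) frame ranges. The function name and this representation are hypothetical; they illustrate the idea and are not Visual Gesture Builder's file format.

```python
def frame_labels(total_frames: int, positive_ranges: list[tuple[int, int]]) -> list[bool]:
    """Build per-frame tags: False = negative, True = positive.

    positive_ranges holds inclusive (start, end) frame indices, one per
    correctly performed gesture found while stepping through the clip.
    """
    # Step 1: select the whole timeline and mark it negative.
    labels = [False] * total_frames
    # Step 2: mark every selected gesture range as positive.
    for start, end in positive_ranges:
        if not (0 <= start <= end < total_frames):
            raise ValueError(f"range {start}-{end} is outside the clip")
        for frame in range(start, end + 1):
            labels[frame] = True
    return labels


if __name__ == "__main__":
    # A 12-frame clip with two hand waves tagged as positive.
    labels = frame_labels(12, [(2, 4), (8, 9)])
    print("".join("+" if tag else "-" for tag in labels))  # --+++---++--
```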

Finally, build the solution (right-click the solution -> Build). You can go make some tea, because the build may take a while depending on the number of clips. The result is a file with the .gbd extension in the folder with our clips. This is why we copied the clips into the project folder: it keeps everything together. This file is the trained model that we will use later.

Check

Let's see what the model has learned. Run Visual Gesture Builder, click the “O” (Open) button and select our .gbd file. On the left is the output from the depth camera; on the right there is one window per gesture (we have only one). When we perform the gesture in front of the Kinect, we should get something like “Christmas trees”:

This graph shows the confidence that the gesture is being performed at a given moment. The horizontal axis is time and the vertical axis is confidence.
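In an application we usually want the moments when a gesture happened rather than the raw curve. One common approach is to threshold the confidence with hysteresis, so that each “Christmas tree” peak yields a single event. This is our own assumption, not something Visual Gesture Builder does for you. In the minimal Python sketch below, the function name and both thresholds (`on`, `off`) are illustrative and not values recommended by the SDK.

```python
def detect_events(confidences: list[float], on: float = 0.6, off: float = 0.3) -> list[int]:
    """Return the frame indices where a gesture starts.

    A gesture "starts" when confidence rises to `on` or above. We then wait
    until confidence drops below `off` before accepting the next one, so a
    single noisy peak is not counted several times.
    """
    if off > on:
        raise ValueError("off threshold must not exceed on threshold")
    events = []
    active = False
    for frame, confidence in enumerate(confidences):
        if not active and confidence >= on:
            events.append(frame)
            active = True
        elif active and confidence < off:
            active = False
    return events


if __name__ == "__main__":
    # Two peaks; the dip to 0.5 inside the first one does not split it.
    curve = [0.0, 0.2, 0.7, 0.9, 0.5, 0.8, 0.1, 0.0, 0.65, 0.2]
    print(detect_events(curve))  # [2, 8]
```

The gap between the two thresholds is a design choice. With a single threshold, a curve hovering around it would fire repeatedly within one wave.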

That is all for the first part. In the second part, we will look at how to use our trained model in Unity.

Remarks

In practice, we (Singularis lab) used custom gestures in a real project and ran into a number of problems during development:

- Kinect is quite picky about hardware: it does not work with all USB 3.0 controllers. On one machine we also had to struggle with the controller drivers. Despite the usual advice to “update drivers to the latest version”, it did not work with the latest drivers but did work with older ones.

- When recording the training set, do not go to extremes. Trying to perform the gesture identically every time is just as harmful as making it as diverse as possible. At one stage we had many clips of a large number of people performing the same gesture (a hand swipe) in very different ways, and after training there were many false positives. In addition, Kinect is sensitive to its relative position and tilt. If you recorded the clips with the sensor at a height of half a meter and then use the trained model with the sensor at two meters, the results may surprise you unpleasantly.
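One way to put a number on “many false positives” when comparing models trained on different recording sets is to record a tagged test clip, run the model over it and count detections that fall outside every positive range. This is our assumption, not a Visual Gesture Builder feature. The minimal Python sketch below treats events as detected frame indices and ranges as inclusive (start, end) tags; all names are hypothetical.

```python
def _inside(frame: int, ranges: list[tuple[int, int]]) -> bool:
    # True if the frame falls within any inclusive (start, end) range.
    return any(start <= frame <= end for start, end in ranges)


def count_false_positives(events: list[int], positive_ranges: list[tuple[int, int]]) -> int:
    """Count detected events that do not fall inside any tagged gesture."""
    return sum(1 for frame in events if not _inside(frame, positive_ranges))


def count_missed(events: list[int], positive_ranges: list[tuple[int, int]]) -> int:
    """Count tagged gestures with no detected event inside them."""
    return sum(1 for rng in positive_ranges if not any(_inside(f, [rng]) for f in events))


if __name__ == "__main__":
    tagged = [(10, 25), (60, 75)]
    detected = [12, 40, 61, 90]
    print(count_false_positives(detected, tagged))  # 2 (frames 40 and 90)
    print(count_missed(detected, tagged))           # 0
```

Tracking both counts shows the trade-off described above. An overly diverse set may raise false positives, while a set recorded with a single way of performing the gesture may miss other people's versions of it.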

Useful links

- Microsoft videos on training gestures with Kinect v2

- The blog of Microsoft evangelist Peter Daukintis, with many articles about Kinect in general, as well as about training gestures and Unity integration

Source: https://habr.com/ru/post/275451/
