Are you sure all All-Flash storage systems are ready for the enterprise?

A free translation of a blog post by Jorge Maestre, the world's strongest (in every sense) technology guru.

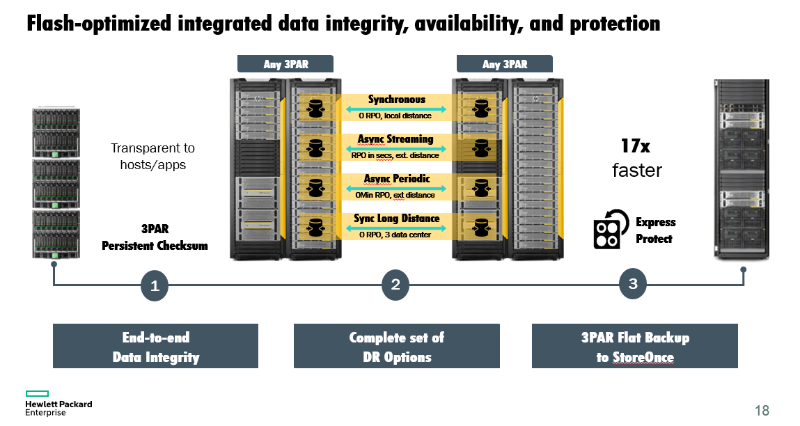

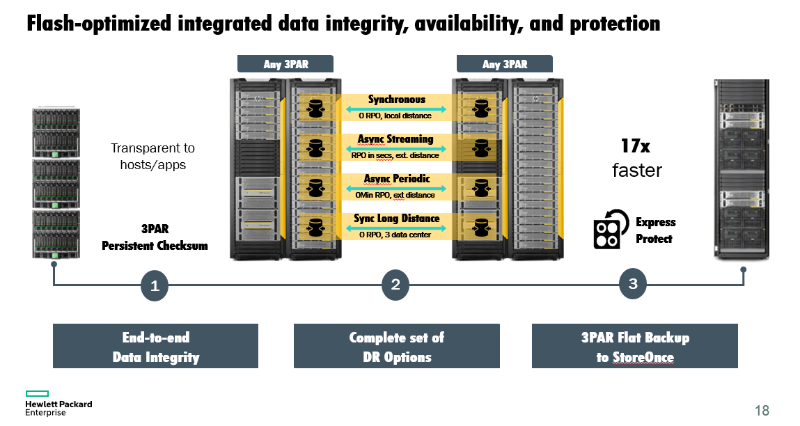

When flash drives first appeared, everyone marveled at their incredible speed. That is hardly surprising, given that such media can be up to 10,000 times faster than their predecessors. At the same time, they had only two potential drawbacks:

- Cell wear during writes

- Cost

Every vendor set out to eliminate these shortcomings. Each one worked to improve DWPD (drive writes per day: how many times per day the drive's full capacity can be rewritten throughout its warranty period) using its own techniques. The problem of high cost has so far been addressed through aggressive discounts and heavy promotion of deduplication. Ironically, the bulk of the savings in the industry still comes from thin provisioning (dynamic capacity allocation), which has nothing to do with deduplication. Meanwhile, customers hear promises every day that deduplication will cut the cost of flash: “Need 100 TB of space? We'll sell you 20 TB and guarantee 5:1 data compression!”
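
The paragraph credits thin provisioning, not deduplication, with most of the savings. A minimal sketch of the idea, assuming a hypothetical `ThinVolume` class and a 4 KiB block size (neither is taken from any array's implementation): the host sees the full provisioned size, but backing space is consumed only when a block is first written.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes (illustrative, not a vendor value)


class ThinVolume:
    """Toy thin-provisioned volume: backing space is consumed only on first write."""

    def __init__(self, provisioned_blocks: int) -> None:
        self.provisioned_blocks = provisioned_blocks  # capacity promised to the host
        self._blocks: dict[int, bytes] = {}  # logical block -> data, filled lazily

    def _check(self, lba: int) -> None:
        if not 0 <= lba < self.provisioned_blocks:
            raise IndexError(f"LBA {lba} is outside the provisioned range")

    def write(self, lba: int, data: bytes) -> None:
        self._check(lba)
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        # Backing space is allocated here, the first time this LBA is written.
        self._blocks[lba] = data

    def read(self, lba: int) -> bytes:
        self._check(lba)
        # Never-written blocks read back as zeros and occupy no backing space.
        return self._blocks.get(lba, bytes(BLOCK_SIZE))

    def consumed_bytes(self) -> int:
        return len(self._blocks) * BLOCK_SIZE

    def provisioned_bytes(self) -> int:
        return self.provisioned_blocks * BLOCK_SIZE


vol = ThinVolume(provisioned_blocks=250_000)  # about 1 GB promised to the host
for lba in range(1_000):
    vol.write(lba, b"\x01" * BLOCK_SIZE)
print(vol.provisioned_bytes(), vol.consumed_bytes())  # 1024000000 4096000
```

The host believes it owns about 1 GB, yet only the 1,000 written blocks (about 4 MB) occupy real media. That gap between promised and consumed capacity is where thin provisioning saves money, whether or not the data deduplicates at all.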


It is fair to say that by 2016 the cell wear problem has essentially been solved. And since the cost per gigabyte of flash keeps falling as drive capacities grow, is there still any point in focusing customers' attention on these characteristics?
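
Endurance claims are usually quoted in DWPD, which only makes sense together with drive capacity and warranty length. A small sketch of the conversion, assuming a 365-day year; the 3.84 TB, 1 DWPD, 5-year rating below is a hypothetical example, not a figure from any datasheet.

```python
DAYS_PER_YEAR = 365  # simplification: leap days ignored


def total_writes_tb(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Rated write endurance (TB written) implied by a DWPD figure."""
    return capacity_tb * dwpd * DAYS_PER_YEAR * warranty_years


def equivalent_dwpd(total_tb: float, capacity_tb: float, warranty_years: float) -> float:
    """DWPD that the same total endurance works out to over a given warranty."""
    return total_tb / (capacity_tb * DAYS_PER_YEAR * warranty_years)


# Hypothetical rating, not taken from any vendor's datasheet.
endurance = total_writes_tb(capacity_tb=3.84, dwpd=1.0, warranty_years=5)
print(f"{endurance:.0f} TB")  # 7008 TB
print(f"{equivalent_dwpd(endurance, 3.84, 7):.2f} DWPD over 7 years")  # 0.71
```

The second line shows why DWPD figures are only comparable at the same warranty length: spreading the same endurance over the 7-year warranties some flash vendors now offer lowers the headline DWPD even though the drive itself has not changed.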

More and more vendors offer high-capacity solid-state drives priced comparably to traditional hard drives, even without deduplication. The cost and reliability of the drives themselves are no longer differentiators in the storage market.

Now it is time to consider the needs of enterprise customers and ask whether all All-Flash storage systems are truly ready for large enterprise data centers.

Tier-1 class arrays should have the following characteristics:

- Performance

- Fault tolerance

- Data replication

Enterprise customers care about minimal data access latency, because their infrastructure usually runs business-critical applications. And since a few seconds of downtime can cost millions of dollars, fault tolerance must be ABSOLUTE. And of course, data must be replicated to a backup site to build disaster-tolerant configurations.

It has always been this way, but how well do All-Flash arrays meet these requirements? High performance? Of course! Fault tolerance? Are solid-state arrays more fault tolerant than their predecessors? If we compare the drives alone by MTBF, the answer is YES. But are the arrays themselves more resilient? The answer is NO. Dual-controller arrays are simply not suitable for the enterprise, and never will be. In this respect, most AFAs (All-Flash Arrays) are no different from arrays built on traditional disks. Still, there are a few truly enterprise-class array models on the market with many controllers, able to deliver 99.9999% data availability.
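
The 99.9999% figure is easier to weigh against "a few seconds of downtime" once it is turned into a yearly downtime budget. A small helper, assuming a 365-day year:

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s; leap years ignored


def max_downtime_seconds(availability_percent: float) -> float:
    """Yearly downtime budget implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return (1 - availability_percent / 100) * SECONDS_PER_YEAR


for nines in ("99.9", "99.99", "99.999", "99.9999"):
    budget = max_downtime_seconds(float(nines))
    print(f"{nines}% -> {budget / 60:8.2f} min/year ({budget:,.1f} s)")
```

At 99.9999% the budget is roughly 31.5 seconds per year, compared with about 8.8 hours at 99.9%, so at this level a single multi-second outage consumes a large share of the entire year's allowance.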

Recall the replication requirements, too. The picture is clear: solid-state media as such are fit for the enterprise, which CANNOT be said of most All-Flash arrays. There is a HUGE difference between an AFA and an enterprise-class AFA (which, generally speaking, is no surprise).

Deduplication ratios are not everything

Ever since solid-state arrays appeared, everyone has been talking about deduplication, but high ratios alone are not enough (and, to be honest, never were). How do those ratios help with data replication? Or with federating storage across multiple arrays? Do they improve VMware integration? Do they provide guaranteed service levels that keep latency low when business users need it? What will you tell your management when the array's scaling limits become apparent? And after an outage? “Sorry, we weren't protected against failures, but we have a 3:1 deduplication ratio!”
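
Since the argument turns on deduplication ratios, it may help to see what a ratio actually measures. A minimal sketch, assuming fixed-size 4 KiB blocks and SHA-256 fingerprints (production arrays use their own chunking, hashing, and compression pipelines, so this is not any vendor's method): the ratio is logical bytes written divided by physical bytes stored, and effective capacity is raw capacity multiplied by that ratio.

```python
import hashlib


def dedup_ratio(data: bytes, block_size: int = 4096) -> float:
    """Logical/physical ratio under fixed-size block deduplication (illustrative)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    if not data:
        return 1.0
    # Map each block's SHA-256 fingerprint to a single stored copy of that block.
    stored: dict[bytes, bytes] = {}
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        stored.setdefault(hashlib.sha256(block).digest(), block)
    physical = sum(len(block) for block in stored.values())
    return len(data) / physical


def effective_capacity_tb(raw_tb: float, ratio: float) -> float:
    """Capacity the host can use if data reduces by `ratio`:1 on average."""
    return raw_tb * ratio


# Six 4 KiB blocks, only three distinct: four copies of `zeros` dedupe to one.
zeros, ones, twos = bytes(4096), b"\x01" * 4096, b"\x02" * 4096
print(dedup_ratio(zeros * 4 + ones + twos))  # 2.0

print(effective_capacity_tb(20.0, 5.0))  # 100.0 (the promised 5:1)
print(effective_capacity_tb(20.0, 3.0))  # 60.0 (if data only reduces 3:1)
```

Under this model, the "sell 20 TB, guarantee 5:1" pitch means 100 TB of effective capacity; if the data only reduces 3:1, the same 20 TB holds 60 TB, a 40 TB shortfall. Note that the ratio depends entirely on the data, which is why it says nothing about replication, scaling, or fault tolerance.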

Solid-state drives with a capacity of 3.84 TB are already available, and capacities continue to grow. Rising density is driving the rapid adoption of SSDs, and as a result, talk of deduplication ratios is a thing of the past.
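
To make the density point concrete, here is a rough count of the drives needed for 100 TB of raw capacity at each drive size mentioned in this post. It is a back-of-the-envelope sketch that deliberately ignores RAID overhead, spare capacity, and data reduction, so the counts are lower bounds, not array configurations.

```python
import math


def drives_needed(target_tb: float, drive_tb: float) -> int:
    """Whole drives needed to reach target_tb of raw capacity.

    Ignores RAID overhead, spares, and data reduction, so real counts would be higher.
    """
    if drive_tb <= 0:
        raise ValueError("drive_tb must be positive")
    return math.ceil(target_tb / drive_tb)


for drive_tb in (0.48, 0.92, 1.92, 3.84):
    print(f"{drive_tb:.2f} TB drives: {drives_needed(100, drive_tb)} for 100 TB raw")
```

Going from 480 GB to 3.84 TB drives cuts the count from 209 to 27 (with 109 and 53 for the 920 GB and 1.92 TB sizes), which is the density effect the paragraph describes.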

Not every vendor's architecture supports such large capacities or the latest technologies. Some vendors cling to the past, relying on outdated arguments such as eMLC versus MLC comparisons, which are essentially no longer relevant. Such arguments only highlight the flaws of their architecture, though the vendors themselves do not seem to realize it. Consider the following points:

- All OEM flash vendors can offer IT vendors warranties of up to 7 years on new high-capacity drives. So what is wrong with an array architecture that cannot adopt these drives and sticks to eMLC? How much longer will we let such vendors sell inefficient solutions while passing off their flaws as features?

- What is stopping storage vendors that use MLC and 3D NAND from supporting larger-capacity drives? Better still: why can a vendor add a 1.92 TB SSD to its supported hardware list while the usable and effective capacity of the array stays the same? What is wrong with that architecture?

More importantly: if such architectures cannot keep up with the constant change and high densities typical of SSD technology, how can an array built on them deliver Tier-1 functionality?

The Hewlett Packard Enterprise advantage

This brings us to the main point. When it comes to adopting new technologies for cost-effective, high-density SSDs, we are well ahead of our competitors.

- We are 12–18 months ahead of other storage vendors, offering 20% more capacity from the same drives (480 GB and 920 GB).

- With 1.92 TB SSDs, we are 6–18 months ahead of other vendors.

- With 3.84 TB SSDs, we were 6–12 months ahead of everyone else.

I officially declare: HPE 3PAR StoreServ is the only true Tier-1 enterprise array built entirely on flash technology.

Architecture matters, and ours consistently keeps us ahead of the competition. We do not stop at offering the most effective set of replication tools. We do not stop at maximum performance or the undoubtedly highest level of fault tolerance. Unlike arrays from other vendors, ours treat fault tolerance as a priority at every level without exception. We offer an optimized backup model that may take other companies decades to match.

But we do not stop there. Our architecture is designed to consolidate multiple workloads efficiently, and we are actively fighting the outdated “one application, one storage system” approach. Moreover, we offer the best support available today for guaranteed service levels (QoS) across diverse business applications.

Note that access time (latency) is one of the threshold metrics for QoS in HPE 3PAR.
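
The text names access time as a QoS threshold but does not describe the mechanism. As a purely hypothetical sketch (the `Workload` and `rebalance` names, the priority convention, and the 0.8 throttle factor are all assumptions, not how HPE 3PAR actually implements QoS), a latency-threshold loop can be modeled like this: when a high-priority workload exceeds its latency goal, lower-priority workloads have their IOPS caps reduced until the goal is met again.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Workload:
    name: str
    priority: int                            # higher = more important (assumed convention)
    max_iops: int                            # configured ceiling for this workload
    latency_goal_ms: Optional[float] = None  # threshold that triggers throttling of others
    iops_cap: int = 0                        # currently enforced cap (0 = start at max)

    def __post_init__(self) -> None:
        if self.iops_cap == 0:
            self.iops_cap = self.max_iops


def rebalance(workloads: list[Workload], measured_ms: dict[str, float],
              throttle: float = 0.8, min_iops: int = 100) -> list[Workload]:
    """One control step of a hypothetical latency-goal QoS loop.

    If any workload misses its latency goal, every lower-priority workload's
    IOPS cap is cut by `throttle`; once all goals are met, caps recover
    step by step toward their configured maximum. Returns the workloads
    that missed their goal in this step.
    """
    missed = [w for w in workloads
              if w.latency_goal_ms is not None
              and measured_ms.get(w.name, 0.0) > w.latency_goal_ms]
    if missed:
        top = max(w.priority for w in missed)
        for w in workloads:
            if w.priority < top:
                w.iops_cap = max(min_iops, round(w.iops_cap * throttle))
    else:
        for w in workloads:
            w.iops_cap = min(w.max_iops, round(w.iops_cap / throttle))
    return missed


oltp = Workload("oltp", priority=3, max_iops=50_000, latency_goal_ms=1.0)
backup = Workload("backup", priority=1, max_iops=20_000)

rebalance([oltp, backup], {"oltp": 1.8, "backup": 6.0})  # OLTP misses its 1 ms goal
print(backup.iops_cap)  # 16000: backup throttled to protect OLTP latency
rebalance([oltp, backup], {"oltp": 0.7, "backup": 3.0})  # goal met again
print(backup.iops_cap)  # 20000: cap restored
```

Cutting caps multiplicatively and restoring them one step at a time is just one simple way to avoid abrupt swings; a real controller would also smooth latency measurements and handle many more workloads and metrics.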

Thank you for your attention, and take care! When you evaluate your enterprise's storage options, remember what Tier-1 really means.

Source: https://habr.com/ru/post/275033/
