Autotests, nightly builds, extreme Agile: how we test our products

For us, testing means a large product, tight deadlines and a huge responsibility.

Every company has its own views on how to organize its workflows, and those views can differ a lot.

Today we want to talk about how we test our products. You may disagree with some of it and adopt some of it.

Our products consist of dozens of modules. We update them regularly, and each update is a complete mini-product in itself.

Developers assemble a batch of code into a separate version and write a description: “New online store in the cloud. Such-and-such changes. A bunch of new pages added.” They always attach a huge changelog with several hundred commits.

All of this goes to the testers. I call this process “extreme Agile”: we work in strict iterations, and deviating from the testing rules is not allowed.

This is a necessary measure: the product is huge, and so are the changes to it. To avoid delaying testing, we sacrifice some freedom.

After receiving the task to check an update, we consult the test plan. A separate plan is drawn up for each module, and it lists all the important usage scenarios.

We used to do this differently. We wrote detailed descriptions of use cases: “Click here. Circles should appear in the password entry field. If they do not appear, something is wrong.”

We abandoned this practice when the number of use cases exceeded all reasonable limits.

In the end we concluded that our test plan should be a list of important business scenarios.

An example is the list of checks for working with tasks in Bitrix24.

- Does saving a task work? Great, move on.

- Can comments be added to a task? Fine, next item.

We start with the top-level, main scenarios, for example creating and saving a store order. Then we move on to lower-level scenarios, for example whether deadlines in tasks work correctly.

Then we check in several stages how the system performs these actions.

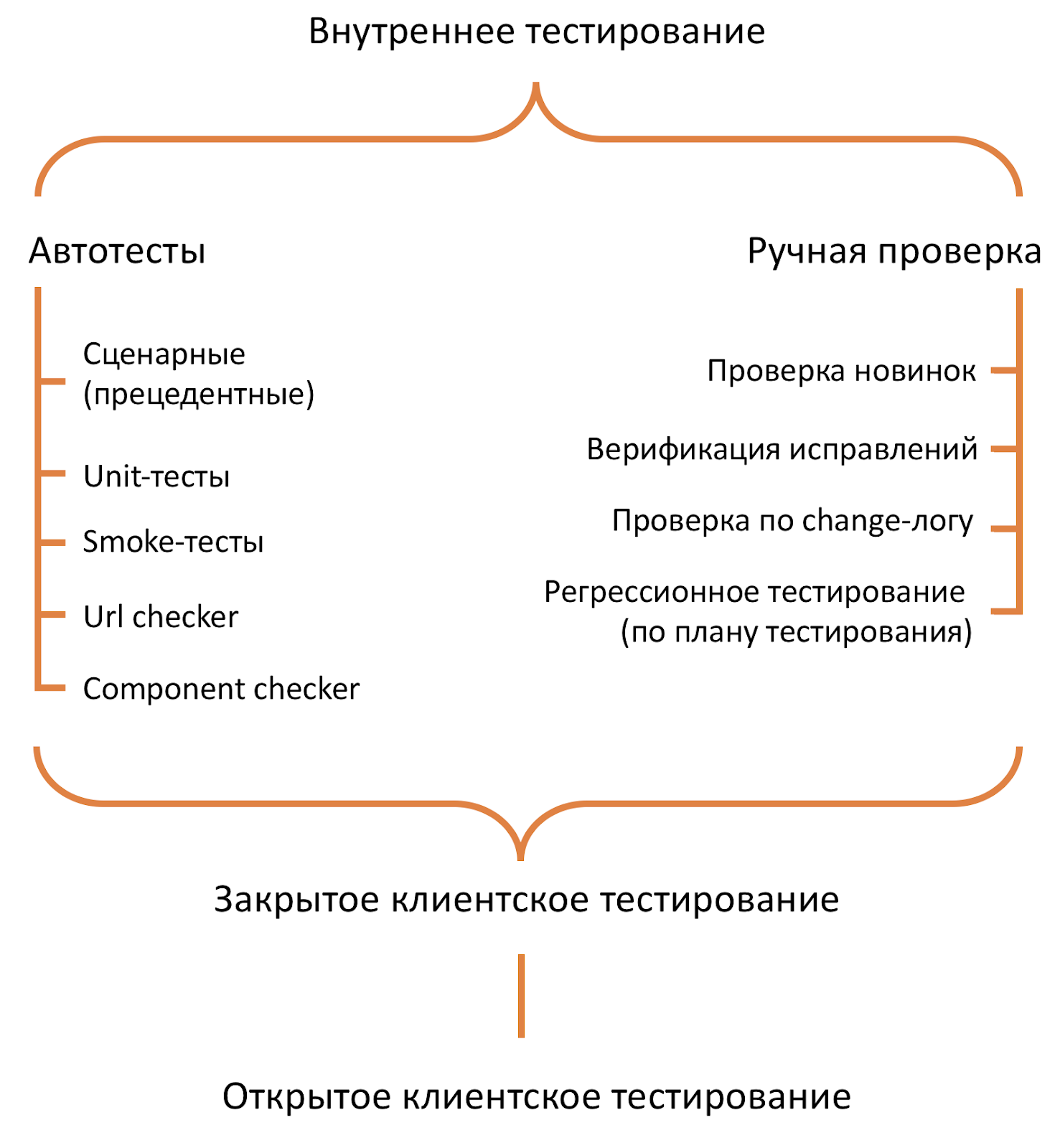

Testing stages

At the very beginning, we run the autotests for the specific modules. Every module included in the update goes through them.

While the autotests are running, we do the following:

Stage 1

- We study the description of the changes from the developers.

We look at how things should work according to the technical specification and compare that with how the module actually works. But the main thing is to learn to look at the product and the changes from the standpoint of common sense and the average user.

Is the workflow convenient? Is everything done in a human-friendly way? In parallel, we also do usability testing.

That is not always possible because the volume of changes is huge. But we still tell developers when a scenario could be made more convenient.

We have automated a huge part of the routine, and we are constantly thinking about what else could be automated.

We discuss questions for developers in a separate chat for each update. There is no game of “broken telephone”: everyone involved in the release is in the chat.

Stage 2

So, we have studied the description of the update. Next we read through the changelog; this is the second stage.

- At this stage we catch most of the errors. The developer may miss something in the plain description, but the changelog shows everything that was changed.

Recently something funny happened. A developer changed a text string in a localized version of the product, the French one.

It seemed to be just text. But this particular string contained an apostrophe. As a result, at a certain stage of testing, JavaScript simply started to crash.

It turned out that the system could not handle this combination. The author of the change did not mention it in the description, so we found the error only through a careful study of the changelog.
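The article does not say exactly how the French string reached the script. One common way for this kind of bug to appear is a localized string pasted into a single-quoted JavaScript literal without escaping. The Python sketch below illustrates that assumed mechanism only; the function names and the sample phrase are hypothetical.

```python
import json


def naive_js_string(text: str) -> str:
    """Unsafe: wraps the text in single quotes without escaping anything."""
    return "var message = '" + text + "';"


def safe_js_string(text: str) -> str:
    """Safer: json.dumps emits a double-quoted, escaped string literal."""
    return "var message = " + json.dumps(text, ensure_ascii=False) + ";"


phrase = "l'ajout"  # hypothetical French fragment containing an apostrophe

# The apostrophe closes the literal early, so a browser would report a syntax error.
print(naive_js_string(phrase))

# The apostrophe sits harmlessly inside a double-quoted literal.
print(safe_js_string(phrase))
```

A check like this can be run over every localized string before it is embedded in a page, which catches the problem without waiting for the script to fail in a browser.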

Stage 3

Then the third stage begins: analysis of customer reports about errors in the product.

Reports are recorded in Mantis, our bug tracking system. Why do we use it? It is a historical legacy: Mantis has been working for us for 15 years.

Several times I suggested to colleagues that we find something else. We would start analyzing, and every time it turned out that Mantis has everything we need. We have integrated it well into our work: we linked it with “open lines” and technical support.

We keep the entire log of customer error reports in Mantis.

In each mini-update we include both features and fixes for bugs that customers and testers have found.

The developer says the error is fixed, and we check. If it is not fixed, we return the ticket for rework.

If the developer fails to fix the same error several times, we remove the non-working part from the update and send it back to the developer for revision.

Stage 4

After this verification we proceed to the fourth stage: re-running the test plan.

The market situation can change. Conditions that held yesterday may be different tomorrow. We are not rigidly tied to a technical specification written six months ago. That is why we run the test plan again.

Stage 5

The fifth stage is assembling the update package and uploading it to the reference test environment. It is hosted in the Amazon cloud and is a separate Bitrix24 portal.

This is where the final check of business scenarios takes place. At night the full set of autotests is run, and changes in infrastructure metrics are tracked.

Stage 6

At the sixth stage, we organize a closed testing group.

The group consists not of testers but of real customers and our partners. We do this to get feedback from real users of our product, the people who will work with it directly.

By this stage almost all errors have been caught. Users tell us how comfortable the various scenarios are to work with. You could call this additional usability testing.

Stage 7

Then comes the seventh stage: semi-closed testing.

As a rule, I invite customers on my Facebook page to be the first to test our new products. Anyone can participate, it's free, just email me.

For semi-closed testing we have a stage environment. The portals of customers who want to participate are added to it. Usually we recruit several thousand portals, but it depends on the scale of the update.

Here, too, we evaluate how convenient the product and its scenarios are to work with.

Some things turn out to be non-obvious, and at the previous stage partners sometimes write that something must not be done that way. This stage lets us run the solution on a much larger sample of customers and test our hypotheses.

This stage can take 2-3 weeks. We constantly make changes based on customer feedback.

After testing on the stage environment, the update rolls out to production. And we happily write tutorial articles and shoot videos.

You can share your impressions on Facebook, with me personally or on the public page. Ask questions, voice opinions, criticize: constructive feedback is always welcome.

Cloud first

Many customers complain that we release updates for the cloud version of Bitrix24 first. So far we have not managed to organize testing and release so that updates come out simultaneously for the cloud and the boxed version.

Releasing an update for the boxed version is a more complicated process. If you have ever broken in an unfinished product, you know what a test environment is. In the cloud we already have a ready-made infrastructure with fixed versions of PHP and MySQL and a fixed encoding. Everything there is set up and working: you can install the product and test it calmly.

With the boxed version it is different. The variety of customer environments is huge. We try to encourage users to move to newer versions of PHP, but many do so reluctantly.

In fact, a large share of customers change their PHP version only when their hosting providers force them to. Add to this the variety of MySQL versions and encodings.

That is why testing the boxed version is much harder and takes longer.

Autotesting

Above I described the manual testing process in detail, but that is only part of the whole. Automated testing plays an equally important role.

Autotests are written by a dedicated department.

Previously we wrote large scenario-based projects, and the scenarios were interlinked. But they are hard to maintain when a huge number of changes roll out to the product in a short time.

So we broke the large scenario autotests into smaller independent scenarios, or cases.

To work with autotests, we have an in-house framework on .NET and a separate framework for working with the database.

Why is the product written in PHP while the autotest framework is on .NET?

In my many years of experience, any framework that works is suitable for UI autotesting. We use .NET because it hooks into the UI well and works great with Selenium. And, well, I just like it.

How does a typical autotest work?

- The test installation is prepared for the scenario through the API, bypassing the interface.

- The robot checks the given case, for example tasks.

The same goes for data cleanup. Once the scenario has been checked and everything is fine, the task is deleted not through the interface but through the API: we call the task deletion method. The robot quickly cleans up after itself.
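The shape of such an autotest can be sketched as follows. This is an illustration in Python rather than our .NET framework, and every class and method name in it is hypothetical: `FakeTaskApi` stands in for the product API and `FakeTaskPage` for the part a UI driver such as Selenium would play.

```python
class FakeTaskApi:
    """Hypothetical stand-in for the product API; keeps tasks in memory."""

    def __init__(self):
        self._tasks = {}
        self._next_id = 1

    def create_task(self, title):
        task_id = self._next_id
        self._next_id += 1
        self._tasks[task_id] = {"title": title, "comments": []}
        return task_id

    def get_task(self, task_id):
        return self._tasks.get(task_id)

    def delete_task(self, task_id):
        self._tasks.pop(task_id, None)


class FakeTaskPage:
    """Hypothetical stand-in for the UI layer the robot clicks through."""

    def __init__(self, api):
        self._api = api

    def add_comment(self, task_id, text):
        self._api.get_task(task_id)["comments"].append(text)

    def visible_comments(self, task_id):
        return list(self._api.get_task(task_id)["comments"])


def run_comment_case(api, page):
    # 1. Setup goes through the API, bypassing the interface.
    task_id = api.create_task("Autotest task")
    try:
        # 2. Only the case under test goes through the interface.
        page.add_comment(task_id, "hello")
        return page.visible_comments(task_id) == ["hello"]
    finally:
        # 3. Cleanup also goes through the API, even if the check failed.
        api.delete_task(task_id)
```

Keeping setup and cleanup out of the interface means a UI change in an unrelated screen cannot break this case, and each case leaves no data behind for the next one.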

Previously, we tested only through the interface.

It used to be believed that the more we click through the interface, the better. We moved away from that, because today it is more important to test by use cases.

Smoke tests and nightly autotests

We also run smoke tests. They are very fast and check the top-level, most popular business scenarios.

We usually use them for final verification.

Returning to the big testing process, with its large iteration and large update package: we install the package on the reference environment, and the autotests are scheduled to run at night. Everyone sleeps, and the robot clicks through the specified scenarios.

Our full cycle of nightly autotests has finally started to fit into a single night. It used to take about 32 hours. We sped up the autotests with virtual machines and constant optimization of the framework and the tests themselves.

But there is no continuous integration in our testing process. It does not suit us because of the accumulated “historical legacy”.

We cannot abandon any part of the infrastructure, which is very large and complex. So for now we are cautiously trying to introduce small elements of classic continuous integration.

Nightly builds

Users of the boxed version know that we release a new distribution every three months. Throughout those three months, a new build is automatically assembled and deployed every night.

How does this happen?

The in-house system calls the update builder and automatically installs all available updates. It records what failed during the installation phase.

Then the machine builds all available distributions (we have 8 editions) and deploys local installations of them overnight.

We do not publish nightly builds. The system again records whether everything installed correctly. If everything is in order, all autotests are started.

In the morning, a tester checks the logs and sees what failed and where: where a developer made a mistake and where an autotest needs fixing.
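The nightly sequence above can be sketched as a small orchestration function. This is a simplified Python illustration, not our actual system: the four callables are hypothetical hooks, and skipping the autotests after any failed update or installation is one reading of "if everything is in order".

```python
def nightly_run(editions, install_updates, build_distribution,
                deploy_locally, run_autotests):
    """Return the log lines a tester would read in the morning."""
    log = []

    # Step 1: install all available updates and record what failed.
    failed_updates = install_updates()
    log.extend(f"update failed: {name}" for name in failed_updates)

    # Step 2: build every edition and deploy a local installation of it.
    failed_installs = []
    for edition in editions:
        package = build_distribution(edition)
        if not deploy_locally(package):
            failed_installs.append(edition)
            log.append(f"install failed: {edition}")

    # Step 3: start the autotests only if everything installed cleanly.
    if not failed_updates and not failed_installs:
        log.extend(run_autotests())
    else:
        log.append("autotests skipped")
    return log
```

In practice each hook would wrap a real tool, and the returned log would be written somewhere the morning shift can find it.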

Unit Tests

Most of our tests are UI tests, not unit tests. We use unit tests only for important components: the main module, CRM, the online store.

In some companies, there is disagreement about who should write unit tests.

In my opinion, both testers and developers can write them. In our case it has worked out that developers write them.

To run unit tests we use the standard PHPUnit tool. We simply call a method, see how it behaves with the given input parameters, and check the result.

URL checker

This is an old idea of mine. Perhaps someone will find it useful.

The robot:

- Gets the root URL of the site

- Collects the URLs of all pages

- Goes through them and checks for errors.

We have a ready-made list of possible errors: error 404, fatal error, database errors, JS errors, invalid characters, broken image, error 500.

Everything that can be found and extracted from the page code the robot adds to the log, with screenshots.

Writing such a robot is extremely simple, but it can save a lot of time.
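As an illustration of how little is needed, here is a minimal Python sketch of such a crawler. It is not our robot: the marker strings in `ERROR_MARKERS` are assumptions, `fetch` is a hypothetical function returning a status code and the page HTML, and JS errors, broken images and screenshots are left out because they require a real browser.

```python
import re
from collections import deque
from urllib.parse import urljoin, urlparse

# Assumed text markers; a real list would match the product's own messages.
ERROR_MARKERS = ("Fatal error", "DB query error", "\ufffd")

HREF_RE = re.compile(r'href="([^"#]+)"')


def crawl(root_url, fetch):
    """Walk same-host pages breadth-first; return a list of (url, problem)."""
    root_host = urlparse(root_url).netloc
    seen = {root_url}
    queue = deque([root_url])
    problems = []
    while queue:
        url = queue.popleft()
        status, html = fetch(url)
        if status >= 400:
            # Covers error 404 and error 500 from the list above.
            problems.append((url, f"HTTP {status}"))
            continue
        for marker in ERROR_MARKERS:
            if marker in html:
                problems.append((url, marker))
        # Collect links to pages on the same host that were not visited yet.
        for href in HREF_RE.findall(html):
            link = urljoin(url, href)
            if urlparse(link).netloc == root_host and link not in seen:
                seen.add(link)
                queue.append(link)
    return problems
```

Passing `fetch` in as a parameter keeps the crawler testable without a network: in a test it can be a dictionary lookup, and in production a thin wrapper around an HTTP client.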

Component checker

Our component checker works like this:

- The component is placed on a page.

- The page is saved and refreshed.

- We look at what is output, both as text and in the code.

An effective and simple test for web development.

If you are our partner and have ever submitted a module to our Marketplace for moderation, know that we run every one of your solutions through this checker.

Visual experiment

We are now running a small experiment: we are converting test plans from text into visual diagrams. They resemble communication diagrams.

They show at a glance which elements of the product are connected to which. What does saving an order affect, and what affects the saving itself? What can affect the inventory accounting scheme, and how?

This lets the tester quickly assess which elements will be affected by changes in a particular component.
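The same question can be asked of a diagram stored as data. The Python sketch below assumes the links are kept as a simple "affects" mapping; the component names and the links between them are made up for illustration and do not describe the real product.

```python
from collections import deque

# Hypothetical links: each key affects the components listed for it.
AFFECTS = {
    "catalog": ["order saving", "inventory accounting"],
    "inventory accounting": ["order saving"],
    "order saving": ["payment", "delivery"],
    "payment": [],
    "delivery": [],
}


def affected_by(component, affects=AFFECTS):
    """Return every element reachable from the changed component, sorted."""
    seen = set()
    queue = deque([component])
    while queue:
        current = queue.popleft()
        for nxt in affects.get(current, []):
            if nxt not in seen and nxt != component:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen)


# Elements a tester should re-check after a change to inventory accounting.
print(affected_by("inventory accounting"))
```

The traversal follows indirect links too, so a change in one component also surfaces the elements that depend on it only through an intermediate one.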

Quality testing

We have given up over-testing. I am a supporter of the principle of sufficiency.

We now do very little “foolproof” testing. For example, we will most likely not type letters into an input field for a monetary amount.

Some companies do practice this. Some people think a good tester should not be limited to the prescribed test cases and can simply invent new ones.

But for us this approach has stopped working: in our circumstances, “sufficient” testing is enough.

Sufficiency is determined by the needs of the market and by release deadlines. We could test a new feature for a year, but then the release would be late.

So we stick to the principle of sufficiency. We do not release a raw product, because that would get us thrown out of the market. And we do not delay a release to polish a feature that would be outdated by the time it ships.

* * *

I am interested in your opinion on what you have read. How is testing organized at your company?

Source: https://habr.com/ru/post/274955/
