Servo browser engine architecture

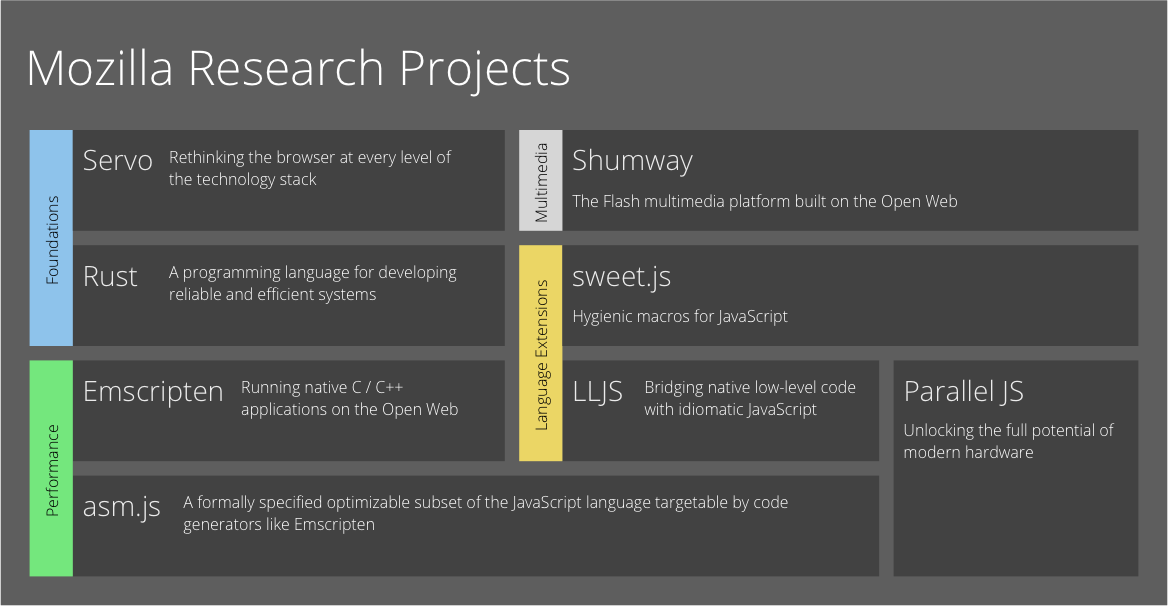

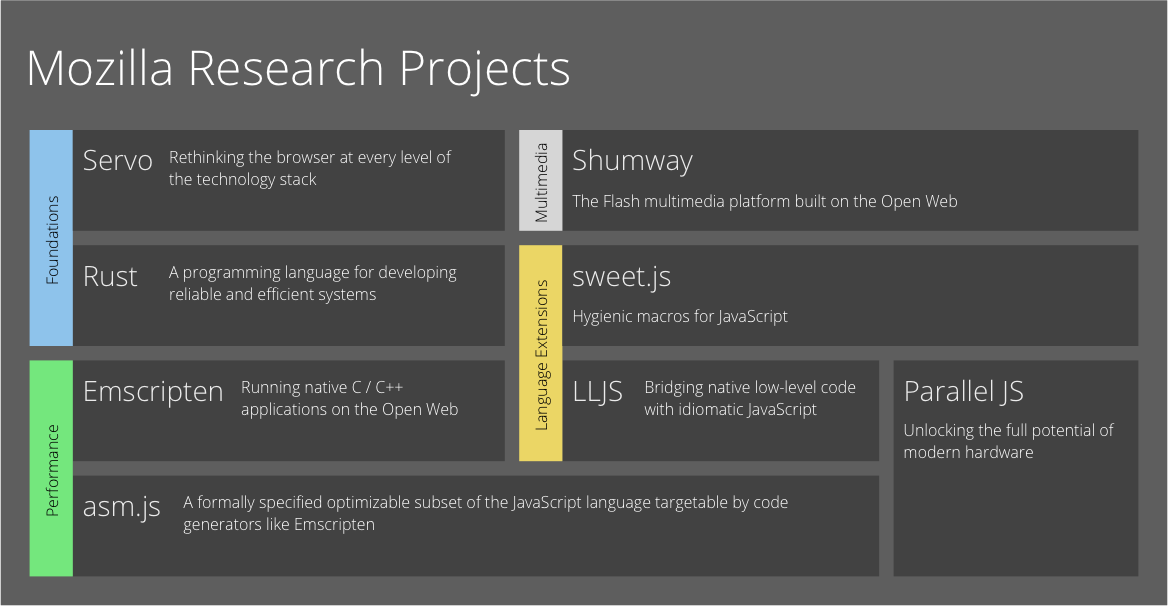

From the translator. I present to the Habr community a translation of the documentation for the Servo browser engine. The engine is being developed by the Mozilla community in Rust and is perhaps the largest active project written in that language. This document describes the engine's architecture, how the developers use Rust alongside C++, and what difficulties they have encountered during development. The original is available in the project wiki on GitHub.

This is a very high-level overview of the Servo architecture. Servo remains a prototype, and some parts of the architecture are not yet implemented in code. Some important aspects of the system have not yet been worked out in detail.

Overview and Goals

Servo is a research project to develop a new browser engine. Our goal is to create an architecture that takes advantage of parallelism while eliminating common sources of bugs and security vulnerabilities associated with incorrect memory management and data races.


Because C++ is poorly suited to preventing these problems, Servo is written in Rust, a new language designed specifically with Servo's requirements in mind. Rust provides a task-parallel infrastructure and a strong type system that enforces memory safety and freedom from data races.

When making design decisions, we prioritize the features of the modern web platform that lend themselves to high-performance, dynamic, media-rich applications, possibly at the expense of features that cannot be optimized. We want to find out what a fast and responsive web platform looks like, and to implement it.

Servo explicitly does not aim to create a full-fledged browser (except for demonstration or experimentation purposes). Rather, it aims to produce a solid, embeddable engine. Although Servo is a research project, it is intended to be suitable for production use: the code we write must be of high enough quality to eventually reach users.

Parallelism and Concurrency Strategies

Concurrency is the separation of work into parts so that their execution can be interleaved. Parallelism is the simultaneous execution of several pieces of work in order to increase speed. Here are some ideas in this direction that we are exploring or plan to consider:

- Task-based architecture. The major components of the system should be represented as actors with isolated memory and clear boundaries for failure and recovery. This also encourages loose coupling between system components, allowing us to swap them out for experimentation and research. Implemented.

- Concurrent rendering. Rendering and compositing run in separate threads, decoupled from layout to maintain responsiveness. The compositor thread manages its own memory to avoid garbage collections. Implemented.

- Tile-based rendering. We divide the screen into a grid of tiles and render each of them in parallel. Apart from the gains from parallelism, tiles are needed for performance on mobile devices. Partially implemented.

- Layered rendering. We divide the display list into subtrees whose contents can be retained on the GPU and rendered in parallel. Partially implemented.

- Selector matching. This problem is surprisingly easy to parallelize. Unlike Gecko, Servo performs selector matching in a separate pass from flow tree construction, which makes it much easier to parallelize. Implemented.

- Parallel layout. We build the flow tree using a parallel traversal of the DOM that respects the sequential dependencies introduced by elements such as floats. Implemented.

- Text shaping. A crucial part of inline layout, text shaping (converting a sequence of characters into positioned glyphs) is fairly expensive and can potentially be parallelized. Not implemented.

- Parsing. We are currently writing a new HTML parser in Rust, focused equally on safety and specification compliance. In progress.

- Image decoding. Decoding multiple images in parallel is straightforward. Implemented.

- Decoding of other resources. This is probably less important than image decoding, but anything a page loads can be processed in parallel, for example parsing style sheets or decoding video. Partially implemented.

- JS garbage collection concurrent with layout. Under almost any architecture involving JS and layout, JS will be waiting for layout calls to finish, perhaps frequently. That would be the most opportune time for garbage collection.
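Image decoding and tile rendering are embarrassingly parallel: each unit of work is independent of the others. The following is a minimal sketch of that pattern using Rust's scoped threads, one thread per image. `decode_image` is a hypothetical stand-in for a real codec (it just expands bytes into gray RGBA pixels), and this is not Servo's actual decoding code.

```rust
use std::thread;

/// Hypothetical stand-in for an image decoder: "decodes" a byte buffer by
/// expanding each byte into an opaque gray RGBA pixel.
fn decode_image(encoded: &[u8]) -> Vec<[u8; 4]> {
    encoded.iter().map(|&b| [b, b, b, 255]).collect()
}

/// Decodes every image on its own scoped thread. The images are independent,
/// so the only synchronization needed is joining the threads at the end.
fn decode_all(images: &[Vec<u8>]) -> Vec<Vec<[u8; 4]>> {
    thread::scope(|s| {
        // Spawn one worker per image; each borrows its input immutably.
        let handles: Vec<_> = images
            .iter()
            .map(|img| s.spawn(move || decode_image(img)))
            .collect();
        // Join in spawn order so the output order matches the input order.
        handles
            .into_iter()
            .map(|h| h.join().expect("decoder thread panicked"))
            .collect()
    })
}
```

A real engine would use a bounded worker pool rather than one thread per image, but the data flow is the same.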

Difficulties

- Performance. Parallel algorithms tend to involve tough trade-offs. It is important to actually be fast. We need to make sure that Rust itself performs comparably to C++.

- Data structures. Rust has a fairly novel type system, designed in particular to make parallel types and algorithms safe, and we need to figure out how to use it effectively.

- Language immaturity. The Rust compiler and language have only recently stabilized. Rust also has a smaller selection of libraries than C++; we can use C++ libraries, but this takes more effort than simply including header files.

- Non-parallel libraries. Some third-party libraries we need behave badly in a multithreaded environment; fonts in particular have been difficult. Even when libraries are technically thread-safe, that safety is often achieved through a single library-wide mutex, which limits our opportunities for parallelism.
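To see why a single library-wide mutex hurts, consider a minimal sketch in which several threads share one hypothetical, non-thread-safe font library wrapped in a `Mutex`. The `FontLibrary` type and its `rasterize_glyph` method are invented for illustration. The result is correct, but every call takes the same lock, so the threads effectively run one at a time inside the library.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical handle to a third-party font library that may only be
/// called from one thread at a time.
struct FontLibrary {
    glyphs_rasterized: u64,
}

impl FontLibrary {
    fn rasterize_glyph(&mut self, _codepoint: char) {
        // A real library would do expensive rasterization work here.
        self.glyphs_rasterized += 1;
    }
}

/// Rasterizes each text on its own thread. Every call must acquire the same
/// library-wide lock, so the work is thread-safe but serialized.
fn rasterize_in_parallel(texts: Vec<String>) -> u64 {
    let lib = Arc::new(Mutex::new(FontLibrary { glyphs_rasterized: 0 }));
    let handles: Vec<_> = texts
        .into_iter()
        .map(|text| {
            let lib = Arc::clone(&lib);
            thread::spawn(move || {
                for c in text.chars() {
                    // The global lock is the bottleneck: only one thread is
                    // inside the library at any moment.
                    lib.lock().unwrap().rasterize_glyph(c);
                }
            })
        })
        .collect();
    for h in handles {
        h.join().unwrap();
    }
    let count = lib.lock().unwrap().glyphs_rasterized;
    count
}
```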

Task Architecture

Charts

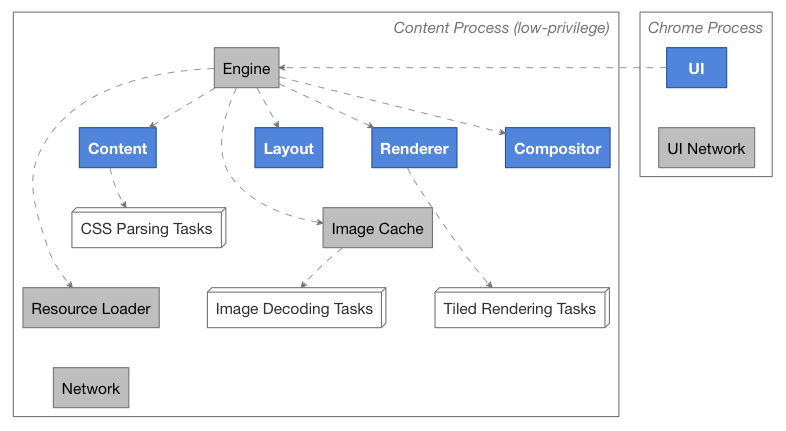

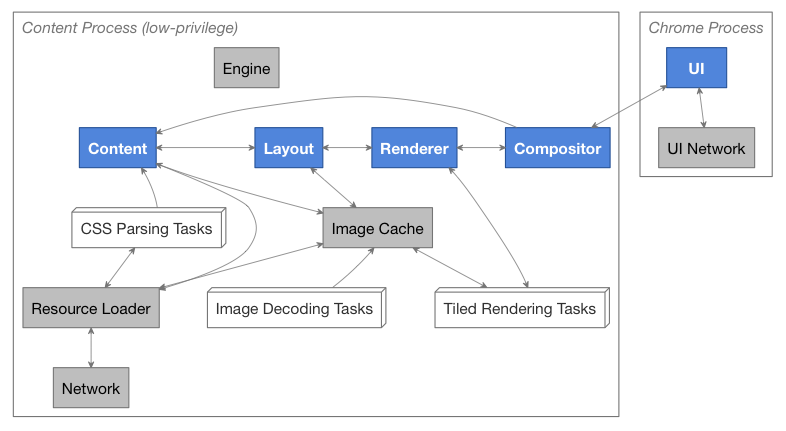

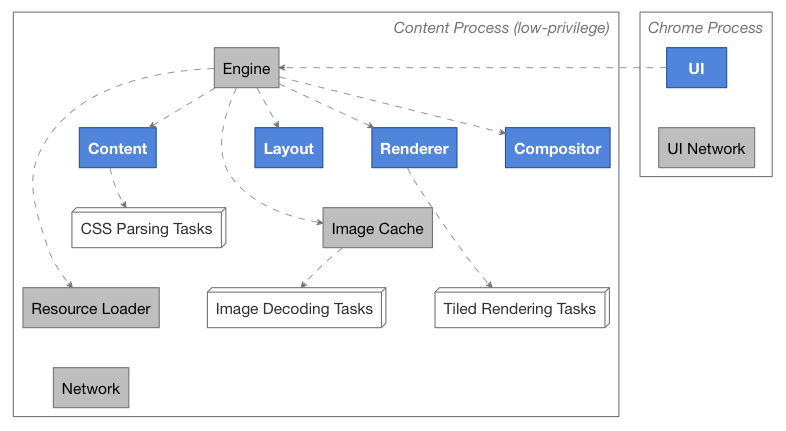

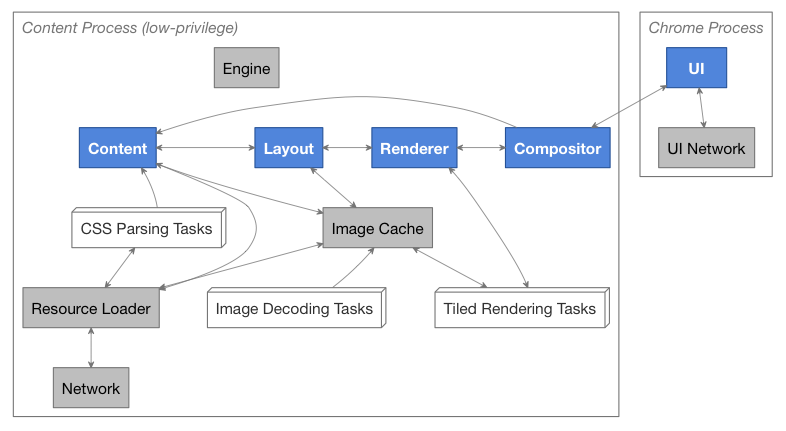

Task Supervision Chart

Task Interaction Chart

- Each rectangle represents a Rust task.

- Blue rectangles represent the primary tasks of the browser pipeline.

- Gray rectangles represent auxiliary tasks for the pipeline.

- White rectangles represent worker tasks. Each such rectangle stands for several tasks, the exact number of which depends on the workload.

- Dashed lines indicate supervisor relationships.

- Solid lines indicate communication channels.
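A supervisor relationship means that a parent task observes its children's failures and can decide how to recover. In today's Rust, tasks are OS threads (green tasks were removed before Rust 1.0), and a panic in a spawned thread surfaces as an `Err` from `JoinHandle::join` without taking down the parent. The following is a minimal sketch; the `Outcome` type and the restart policy are hypothetical, not Servo's actual supervision code.

```rust
use std::thread;

/// Result of running one supervised task.
#[derive(Debug, PartialEq)]
enum Outcome {
    Finished(u32),
    Failed,
}

/// Runs `work` on its own thread and reports whether it finished or panicked.
/// A panic unwinds only the child thread; the supervisor keeps running.
fn supervise<F>(work: F) -> Outcome
where
    F: FnOnce() -> u32 + Send + 'static,
{
    match thread::spawn(work).join() {
        Ok(value) => Outcome::Finished(value),
        Err(_) => Outcome::Failed,
    }
}

/// Hypothetical restart policy: rebuild and rerun a failed task up to
/// `max_restarts` extra times. `make_work` receives the attempt number.
fn supervise_with_restarts<F>(make_work: F, max_restarts: u32) -> Outcome
where
    F: Fn(u32) -> Box<dyn FnOnce() -> u32 + Send>,
{
    for attempt in 0..=max_restarts {
        if let Outcome::Finished(v) = supervise(make_work(attempt)) {
            return Outcome::Finished(v);
        }
    }
    Outcome::Failed
}
```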

Description

Each constellation instance can for now be thought of as a single tab or window. It manages a pipeline of tasks that accepts input, runs JavaScript against the DOM, performs layout, builds display lists, renders display lists to tiles, and finally composites the resulting image onto a surface.

The pipeline consists of four main tasks:

- Script - The script task's primary mission is to create and own the DOM and to execute JavaScript. It receives events from multiple sources, including navigation events, and routes them as needed. When the content task needs information about layout, it must send a request to the layout task.

- Layout - The layout task takes a snapshot of the DOM, computes styles, and builds the main layout data structure, the flow tree. The flow tree is used to compute the positions of nodes, and from it a display list is built and sent to the renderer.

- Renderer - The renderer receives the display list and renders the visible parts into one or more tiles, in parallel where possible.

- Compositor - The compositor combines the tiles from the renderer and sends them to the screen. As the UI thread, the compositor is the first to receive UI events, which are usually forwarded immediately to content for processing (although some events, such as scroll events, can be handled first by the compositor for responsiveness).
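The four-stage pipeline can be pictured as threads connected by channels, each stage consuming messages from the previous one. The following is a minimal sketch assuming plain strings stand in for the DOM, display list, and tiles; the message types and `run_pipeline` are hypothetical and far simpler than Servo's real ones.

```rust
use std::sync::mpsc;
use std::thread;

// Hypothetical, heavily simplified messages passed between stages.
struct DomSnapshot(Vec<String>); // script -> layout
struct DisplayList(Vec<String>); // layout -> renderer
struct Tile(String);             // renderer -> compositor

/// Wires script -> layout -> renderer -> compositor together with channels
/// and returns what the compositor "puts on screen".
fn run_pipeline(nodes: Vec<String>) -> Vec<String> {
    let (to_layout, layout_rx) = mpsc::channel::<DomSnapshot>();
    let (to_renderer, renderer_rx) = mpsc::channel::<DisplayList>();
    let (to_compositor, compositor_rx) = mpsc::channel::<Tile>();

    // Script: owns the DOM and sends a snapshot to layout.
    let script = thread::spawn(move || {
        to_layout.send(DomSnapshot(nodes)).unwrap();
    });

    // Layout: turns each snapshot into a display list.
    let layout = thread::spawn(move || {
        for DomSnapshot(nodes) in layout_rx {
            let items = nodes.into_iter().map(|n| format!("draw {n}")).collect();
            to_renderer.send(DisplayList(items)).unwrap();
        }
    });

    // Renderer: renders each display item into a tile.
    let renderer = thread::spawn(move || {
        for DisplayList(items) in renderer_rx {
            for item in items {
                to_compositor.send(Tile(format!("tile[{item}]"))).unwrap();
            }
        }
    });

    // Compositor: runs on the calling (UI) thread and collects tiles until
    // every upstream sender has been dropped.
    let screen: Vec<String> = compositor_rx.into_iter().map(|Tile(t)| t).collect();

    for stage in [script, layout, renderer] {
        stage.join().unwrap();
    }
    screen
}
```

Shutdown falls out of channel semantics: when script finishes, its sender is dropped, which ends layout's loop, and so on down the pipeline.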

In this pipeline, two complex data structures are shared between tasks: the DOM and the display list. The DOM is passed from the content task to the layout task, and the display list from the layout task to the renderer. Finding an efficient and type-safe way to represent, share, and/or transfer these two structures is one of the main challenges of this project.

Copy-on-write DOM

The DOM in Servo is a versioned tree of nodes that can be shared between a single writer and multiple readers. The DOM uses a copy-on-write strategy so that the writer can modify the DOM in parallel with the readers. The content task is always the writer, and the layout task or its subtasks are always the readers.
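The single-writer, many-readers copy-on-write idea can be illustrated with `Arc::make_mut`, which mutates in place when the data is unshared and clones it when a reader still holds the old version. This is a minimal sketch only: Servo's real DOM nodes are managed by the JavaScript garbage collector, not `Arc`, and the `Node`, `append_child`, and `count_nodes` names are hypothetical. Cloning a `Node` copies only its `Vec` of `Arc` children, so untouched subtrees stay shared between versions.

```rust
use std::sync::Arc;
use std::thread;

/// Hypothetical, minimal DOM node: a tag plus children shared via `Arc`.
#[derive(Clone, Debug)]
struct Node {
    tag: String,
    children: Vec<Arc<Node>>,
}

/// Writer side: appends a child under the root. `Arc::make_mut` clones the
/// root only if a reader still holds the old version; otherwise it mutates
/// in place.
fn append_child(root: &mut Arc<Node>, tag: &str) {
    Arc::make_mut(root).children.push(Arc::new(Node {
        tag: tag.to_string(),
        children: Vec::new(),
    }));
}

/// Reader side: counts nodes in whichever version the reader was handed.
fn count_nodes(node: &Node) -> usize {
    1 + node.children.iter().map(|c| count_nodes(c)).sum::<usize>()
}
```

For example, the writer can hand a layout reader `Arc::clone(&dom)`, keep calling `append_child(&mut dom, ...)`, and the reader keeps seeing its own consistent snapshot.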

DOM nodes are Rust values whose lifetimes are managed by the JavaScript garbage collector. JavaScript accesses DOM nodes directly; there is no XPCOM or similar infrastructure.

The DOM interface is currently not type-safe: nodes can be manipulated incorrectly, which leads to dereferencing invalid pointers. Eliminating this unsafety is a high-priority and necessary goal of the project; because DOM nodes have a complex life cycle, it will pose some challenges.

Display list

Servo's rendering is driven entirely by the display list, a sequence of high-level commands produced by the layout task. The Servo display list is immutable, so it can be shared between concurrent renderers, and it contains everything needed for rendering. This differs from WebKit's renderer, which does not use a display list, and from Gecko's renderer, which uses a display list but also consults additional information, such as the DOM, directly during rendering.
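Because the display list is immutable, concurrent renderers can share it without locks. The following is a minimal sketch in which each tile of a one-pixel-high row is rendered on its own thread from a cheap `Arc` clone of the same list. The `SolidRect` item type, the character-per-pixel "framebuffer", and the function names are hypothetical simplifications, not Servo's display-list format.

```rust
use std::sync::Arc;
use std::thread;

/// Hypothetical display item: a solid span covering columns [x0, x1).
struct SolidRect {
    x0: u32,
    x1: u32,
    color: char,
}

/// Never mutated after construction, so it can be shared freely.
type DisplayList = Arc<Vec<SolidRect>>;

/// Renders columns [start, start + width) of a 1-pixel-high row. Later items
/// paint over earlier ones (painter's algorithm); '.' means background.
fn render_tile(list: &DisplayList, start: u32, width: u32) -> String {
    (start..start + width)
        .map(|x| {
            list.iter()
                .rev()
                .find(|r| r.x0 <= x && x < r.x1)
                .map_or('.', |r| r.color)
        })
        .collect()
}

/// Renders all tiles in parallel; each worker gets its own `Arc` handle to
/// the same immutable list, and the tiles are joined back in order.
fn render(list: DisplayList, tiles: u32, tile_width: u32) -> String {
    let handles: Vec<_> = (0..tiles)
        .map(|i| {
            let list = Arc::clone(&list);
            thread::spawn(move || render_tile(&list, i * tile_width, tile_width))
        })
        .collect();
    handles.into_iter().map(|h| h.join().unwrap()).collect()
}
```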

JavaScript and DOM bindings

We currently use SpiderMonkey, although pluggable engines are a long-term, low-priority goal. Each content task gets its own JavaScript runtime. DOM bindings use the JavaScript engine's native API instead of XPCOM. Automatically generating bindings from WebIDL is a priority.

Multi-process architecture

Like Chromium and WebKit2, we intend to have a trusted application process and multiple less-trusted engine processes. The high-level API will in fact be IPC-based, most likely with non-IPC implementations for testing and for a single-process configuration, although we expect most serious users to run multiple processes. Engine processes will use the operating system's sandboxing facilities to restrict access to system resources.

At the moment, we do not intend to go to the same sandboxing extremes as Chromium, mainly because locking down a sandbox takes a great deal of developer effort (particularly on low-priority platforms such as Windows XP or older Linux) and other aspects of the project take priority. Rust's type system also adds a significant layer of defense against memory-safety vulnerabilities. This alone does not make a sandbox any less important for defending against unsafe code, bugs in the type system, and third-party or host libraries, but it does significantly reduce Servo's attack surface compared with other browser engines. In addition, we have performance concerns about some sandboxing techniques (for example, proxying all OpenGL calls to a separate process).

I/O and resource management

Web pages depend on a wide variety of external resources, with many mechanisms for fetching and decoding them. These resources are cached at several levels: on disk, in memory, and/or in decoded form. In a parallel browser, these resources must be shared among concurrent tasks.

Traditionally, browsers have been single-threaded, performing I/O on the “main thread”, where most of the computation also happens. This leads to latency problems. In Servo there is no “main thread”, and all external resources are loaded by a single resource manager task.
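A single resource manager task can be sketched as a thread that owns all loading and answers requests sent over a channel, each request carrying its own reply channel. In this minimal sketch the `ResourceMsg` enum, `start_resource_manager`, and the injected `fetch` function are all hypothetical; `fetch` stands in for real network or disk access, and each load is handed to a worker thread so a slow fetch does not block other requests.

```rust
use std::sync::mpsc::{self, Sender};
use std::thread;

/// Hypothetical request type: a URL plus a channel for the response.
enum ResourceMsg {
    Load(String, Sender<Result<Vec<u8>, String>>),
    Exit,
}

/// Starts the resource manager thread. Requesters never perform I/O
/// themselves; they send a message and wait on their reply channel.
fn start_resource_manager(
    fetch: fn(&str) -> Result<Vec<u8>, String>,
) -> (Sender<ResourceMsg>, thread::JoinHandle<()>) {
    let (tx, rx) = mpsc::channel::<ResourceMsg>();
    let handle = thread::spawn(move || {
        for msg in rx {
            match msg {
                ResourceMsg::Load(url, reply) => {
                    // Each load runs on its own worker so a slow fetch does
                    // not block the manager. Send errors are ignored: the
                    // requester may have gone away.
                    thread::spawn(move || {
                        let _ = reply.send(fetch(&url));
                    });
                }
                ResourceMsg::Exit => break,
            }
        }
    });
    (tx, handle)
}
```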

Browsers use many caches, and Servo's task-based architecture means that it will probably have more caches than existing engines (for example, we may have both a global task-based cache and a task-local cache that stores results from the global cache to avoid the round trip through the scheduler). Servo should have a unified caching mechanism, with tunable caches that work well in low-memory environments.
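The two-level arrangement described above (a global cache owned by its own task, fronted by task-local caches) can be sketched as follows. All names are hypothetical, `compute` stands in for the expensive work done on a miss, and eviction or memory limits are deliberately left out. A local hit is answered without any channel traffic; only a local miss pays for the round trip to the global cache thread.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{self, Sender};
use std::thread;

/// Hypothetical request to the global cache task.
struct Lookup {
    key: String,
    reply: Sender<String>,
}

/// Global cache living on its own thread; `compute` fills misses.
fn start_global_cache(compute: fn(&str) -> String) -> Sender<Lookup> {
    let (tx, rx) = mpsc::channel::<Lookup>();
    thread::spawn(move || {
        let mut entries: HashMap<String, String> = HashMap::new();
        // The loop ends when every Sender (every local cache) is dropped.
        for Lookup { key, reply } in rx {
            let value = entries
                .entry(key)
                .or_insert_with_key(|k| compute(k))
                .clone();
            let _ = reply.send(value);
        }
    });
    tx
}

/// Task-local cache in front of the global one.
struct LocalCache {
    global: Sender<Lookup>,
    entries: HashMap<String, String>,
    round_trips: usize, // how many lookups had to go to the global task
}

impl LocalCache {
    fn new(global: Sender<Lookup>) -> Self {
        LocalCache { global, entries: HashMap::new(), round_trips: 0 }
    }

    fn get(&mut self, key: &str) -> String {
        if let Some(v) = self.entries.get(key) {
            return v.clone(); // local hit: no message passing at all
        }
        let (reply, rx) = mpsc::channel();
        self.global
            .send(Lookup { key: key.to_string(), reply })
            .expect("global cache thread exited");
        let value = rx.recv().expect("global cache dropped the request");
        self.round_trips += 1;
        self.entries.insert(key.to_string(), value.clone());
        value
    }
}
```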

Source: https://habr.com/ru/post/274815/
