High Speed File Transfer Protocol - Aspera FASP

Today, with the Internet ubiquitous and content increasingly diverse, including media files that can occupy several gigabytes in HD quality, high-speed file transfer over the network has become a pressing problem. Consider a television news studio: a reporter on another continent must quickly send a report shot in high quality to the central studio for editing and broadcast. Transmission speed is critical here, because news that arrives a couple of days late is no longer news.

Or consider a floating drilling rig whose only link is a satellite channel and which must send a cube of geophysical well data to a high-performance computing center for interpretation. Each day of delay can mean losses.

The first thing that comes to mind is FTP. Indeed, FTP, which runs on top of the Transmission Control Protocol (TCP), does an excellent job when files travel over short distances or over a “good” network. In the examples above, however, FTP will be very slow because TCP operates inefficiently on networks with high delay and packet loss. Buying a wider channel does not solve the problem; the expensive channel simply goes unused.


The Aspera FASP protocol does not share these TCP shortcomings: it makes the fullest possible use of any available channel and can transfer data hundreds of times faster than FTP. Before Aspera appeared, such data was written to hard disks or tapes and delivered by courier, which was slow and carried a risk of data loss.

FASP is a high-speed file transfer protocol with guaranteed delivery, developed and patented by Aspera (www.asperasoft.com). In 2014 IBM acquired Aspera, and the product became part of IBM's cloud division.

The abbreviation FASP stands for “fast, adaptive, secure protocol”. It is fast because it effectively uses all available bandwidth; adaptive because it adjusts to the state of the network while remaining “friendly” to other traffic; and secure because it encrypts data both in transit and at rest.

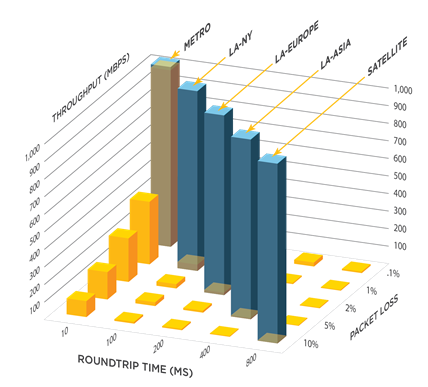

To understand the benefits of FASP, recall how TCP works. In the 1970s, researchers were given the task of developing a protocol that could withstand a nuclear strike, that is, one that could deliver data reliably. The main effort in designing TCP therefore went into reliable rather than high-speed transmission. In those years there were no mobile or satellite networks, and the only transatlantic channel from the US to Europe ran at 64 Kbps, which shows the state of the technology at the time.

As a consequence of this design, TCP's transmission rate is inversely proportional to the delay, and therefore the distance, between the endpoints. In addition, when a packet is lost TCP assumes that the channel is congested and reduces its transmission rate on its own. TCP performance thus falls as distance grows and as network quality degrades: the greater the distance, the greater the delay, and the lower the transmission rate.

Delay is usually measured as round-trip time (RTT): the time it takes to send a packet and receive an acknowledgment from the recipient. Delay is a consequence of physics, since no electromagnetic signal travels faster than light. Over satellite links, for example, the delay can reach 800 ms. Moreover, a packet crossing the global Internet (WAN) passes through many routers before it reaches the recipient. Each router takes time to process the packet, and a misconfigured or overloaded router may drop packets. The more packets are lost, the longer the transmission takes.

Figure 1 shows that TCP performs well relative to the available bandwidth in local area networks (LAN), but the greater the RTT and packet loss, the lower its throughput.

TCP performance also does not grow with channel capacity. If a transfer is slow on a 10 Mbit/s channel, there is no guarantee that upgrading to 1 Gbit/s will make it faster. If the file only has to travel to a neighboring street, the improvement will be noticeable; over long distances, upgrading the channel to 1 Gbit/s will most likely not help much.

Figure 1 TCP performance

TCP is a connection-oriented protocol: it establishes a connection before transmitting, as shown in Figure 2, and every packet sent is confirmed by an acknowledgment (ACK) packet. The delay (RTT) is the time between sending a packet and receiving its ACK.

Figure 2 TCP packet exchange

The number of packets that TCP can send at a time is determined by a mechanism called the TCP window (TCP Sliding Window). The window size is governed by the Additive Increase Multiplicative Decrease (AIMD) congestion control algorithm and by flow control, which together control the rate at which packets are sent. This lets TCP “be sure” that packets are not sent faster than the receiver can accept them. As shown in Figure 3, the window allows the sender to have several packets in flight at once, but until they are acknowledged TCP will not send any packets beyond the window.

Figure 3 TCP Sliding Window

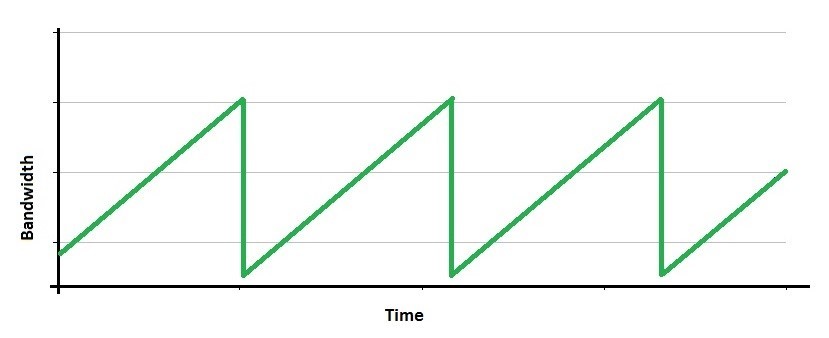
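The effect of the window on long-distance links can be put into numbers. Because at most one window of data can be unacknowledged at any moment, throughput cannot exceed the window size divided by the RTT. The sketch below is only an illustration: the 65,535-byte window (the largest possible without TCP window scaling) and the function names are assumptions, not values taken from the figures.

```python
def window_limited_mbps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput when one window is sent per round trip."""
    return window_bytes * 8 / rtt_s / 1e6


def achievable_mbps(link_mbps: float, window_bytes: int, rtt_s: float) -> float:
    """Throughput is capped by the link or by the window, whichever is lower."""
    return min(link_mbps, window_limited_mbps(window_bytes, rtt_s))


WINDOW = 65_535  # bytes; assumed window size, no window scaling

for rtt_ms in (1, 50, 150, 800):
    for link_mbps in (100, 1000):
        rate = achievable_mbps(link_mbps, WINDOW, rtt_ms / 1000)
        print(f"RTT {rtt_ms:>3} ms, link {link_mbps:>4} Mbps -> {rate:7.2f} Mbps")
```

Under these assumptions a 150 ms RTT caps the transfer at about 3.5 Mbps on either link, which is why a wider channel alone does not help.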

If packet loss (a missing acknowledgment) is detected, the sender, following the AIMD algorithm, cuts the TCP window in half or resets it to its minimum. A sender that gives up waiting for a delivery confirmation and shrinks its window is most common on long-distance links, that is, in networks with a large RTT. If packets are also being lost, the transmission rate falls to almost zero. Plotted over time, the TCP transmission rate forms the so-called “sawtooth” pattern.

Figure 4 TCP AIMD “Sawtooth” Pattern
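The sawtooth can be reproduced with a few lines of simulation. This is a deliberately simplified sketch, not a model of a real TCP stack: the window grows by one unit per round trip (additive increase) and is halved whenever it exceeds an assumed capacity, which stands in for a loss event. All names and parameter values are illustrative.

```python
def simulate_aimd(capacity: float, rounds: int,
                  increase: float = 1.0, decrease: float = 0.5,
                  start: float = 1.0) -> list:
    """Return the window size after each round trip."""
    window = start
    history = []
    for _ in range(rounds):
        if window > capacity:
            # "Loss" detected: multiplicative decrease.
            window = max(1.0, window * decrease)
        else:
            # No loss: additive increase.
            window += increase
        history.append(window)
    return history


history = simulate_aimd(capacity=100, rounds=1000)
utilization = sum(history) / len(history) / 100
print(f"average utilization: {utilization:.0%}")

# Crude text plot of the first rounds to show the sawtooth shape.
for w in history[90:160:5]:
    print("#" * int(w / 2))
```

In this toy model the window keeps oscillating between roughly half and the full capacity, so on average a sizeable part of the channel stays unused.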

As Figure 4 shows, TCP's AIMD congestion avoidance algorithm raises the transfer rate until congestion occurs, at which point the rate drops sharply. This sawtooth cycle repeats continuously and never lets TCP make full use of the available channel. There are tools on the Internet that estimate the effective TCP transmission rate (http://asperasoft.com/performance_calculator/). For example, on a network with 100 Mbps of bandwidth, an RTT of 150 ms and 1.5% packet loss, the transmission rate will be below 1 Mbps whether the channel is 100 Mbps or 1 Gbps, because the TCP transfer rate depends on RTT and packet loss.
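The number in this example can be approximated with the well-known Mathis formula, rate ≈ (MSS / RTT) · C / √p, where p is the loss rate and C = √(3/2). The sketch below is a rough estimate under stated assumptions and is not necessarily the method the Aspera calculator uses; the 1460-byte MSS is an assumed typical value and the function names are illustrative.

```python
import math


def mathis_mbps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state TCP throughput (Mathis et al. model)."""
    c = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate)) / 1e6


def tcp_estimate_mbps(link_mbps: float, mss_bytes: int,
                      rtt_s: float, loss_rate: float) -> float:
    """The estimate can never exceed the link capacity itself."""
    return min(link_mbps, mathis_mbps(mss_bytes, rtt_s, loss_rate))


MSS = 1460  # bytes; assumed typical Ethernet MSS

for link_mbps in (100, 1000):
    rate = tcp_estimate_mbps(link_mbps, MSS, rtt_s=0.150, loss_rate=0.015)
    print(f"link {link_mbps:>4} Mbps -> about {rate:.2f} Mbps")
```

With these inputs the estimate is roughly 0.78 Mbps for both link speeds: the channel capacity does not appear in the formula at all, only RTT and loss do.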

FASP protocol

Unlike TCP, the Aspera FASP transport protocol performs well on any network while guaranteeing delivery. It efficiently transfers data of unlimited size over the global Internet, satellite and mobile channels, and it does not lose efficiency as RTT and packet loss grow. The protocol combines maximum speed with a congestion avoidance mechanism, transfer policy control, security and reliability.

Figure 5 FASP performance

On a local network the difference between TCP and FASP is insignificant, but as soon as RTT and packet loss between the two endpoints increase, FASP performs significantly better (Figure 5). FASP transfers data faster by making maximum use of the available channel. The protocol imposes no theoretical maximum transfer rate; the limit is more likely to come from hardware resources such as the performance of the disk subsystem. A TCP transfer produces a “ragged” graph, something you will not see with FASP, where the transmission rate reaches the specified level (Target Rate) and stays there regardless of lost packets. Lost data is of course retransmitted, but this does not affect transfer performance. With Aspera FASP the transfer time is predictable regardless of network quality.

FASP runs on top of UDP (User Datagram Protocol) at the transport layer of the OSI model. Since UDP does not guarantee delivery, the congestion avoidance and transmission control algorithms are implemented at the application level.

FASP does not use the TCP window mechanism, and its throughput does not depend on the transmission distance. A transfer starts at a certain rate and then sends data at a rate calculated by a mathematical algorithm. FASP uses negative acknowledgments (NACK): if a packet is lost, the recipient reports that it has not received that packet, while the sender keeps transmitting. Unlike TCP, FASP does not wait for confirmation before sending further data. Because the order in which packets arrive does not matter for a file transfer, the transfer does not stall on packet loss as it does in TCP; data keeps flowing at high speed. The sender retransmits only the lost packet, not the entire packet window as in TCP, and does so without interrupting the current transmission. This keeps transfer overhead minimal: below 0.1% even at 30% packet loss.
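The NACK idea described above can be illustrated with a small simulation. This is a hypothetical sketch, not FASP's actual wire protocol: blocks are numbered, the channel drops each packet with a fixed probability, and NACK reports are assumed to arrive instantly and without loss. The function name and return values are invented for the example.

```python
import random


def transfer_with_nacks(num_blocks: int, loss_rate: float, seed: int = 0):
    """Send blocks over a lossy channel, resending only what the receiver NACKs.

    Returns (packets_sent, duplicates_received, passes).
    loss_rate must be below 1.0, otherwise the transfer never completes.
    """
    rng = random.Random(seed)
    received = set()
    pending = list(range(num_blocks))   # blocks the sender still has to deliver
    packets_sent = duplicates = passes = 0

    while pending:
        passes += 1
        for block in pending:
            packets_sent += 1
            if rng.random() < loss_rate:
                continue                # packet lost in the network
            if block in received:
                duplicates += 1         # never happens here: only gaps are resent
            received.add(block)
        # The receiver reports only the gaps (NACKs); nothing else is resent.
        pending = [b for b in range(num_blocks) if b not in received]

    return packets_sent, duplicates, passes


sent, dupes, passes = transfer_with_nacks(num_blocks=1000, loss_rate=0.3, seed=1)
print(f"packets sent: {sent}, duplicates received: {dupes}, passes: {passes}")
```

Every retransmission in this model replaces a packet that was really lost, so the receiver never gets the same block twice; the extra packets sent reflect the loss rate itself rather than protocol overhead.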

Figure 6 Aspera and TCP performance comparison utility

Figure 6 compares transfer rates for a 100 GB file on a network with 100 Mbit/s of bandwidth, an RTT of 150 ms and 2% packet loss. The effective transfer rate is 98 Mbps with Aspera FASP and 0.7 Mbps with TCP.
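To see what these rates mean in practice, the transfer time for the 100 GB file can be worked out directly. The short calculation below assumes decimal units (1 GB = 8,000 Mbit) and a constant effective rate; the function name is illustrative.

```python
def transfer_time_seconds(size_gb: float, rate_mbps: float) -> float:
    """Time to move size_gb gigabytes at a constant rate (decimal units)."""
    megabits = size_gb * 8_000  # 1 GB = 1000 MB = 8000 Mbit
    return megabits / rate_mbps


fasp_s = transfer_time_seconds(100, 98)
tcp_s = transfer_time_seconds(100, 0.7)

print(f"FASP at 98 Mbps:  {fasp_s / 3600:.1f} hours")
print(f"TCP at 0.7 Mbps:  {tcp_s / 86400:.1f} days")
```

Under these assumptions the same file takes about 2.3 hours with FASP and about 13 days with TCP.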

By abandoning TCP's redundant algorithms for controlling rate and reliability, FASP avoids reducing the transmission rate when packets are lost. Lost data is retransmitted at the rate available on the channel or at the configured rate, and no duplicate data is sent.

The available channel bandwidth is determined by the FASP rate control mechanism. This adaptive mechanism continuously sends probe packets that are used to measure the so-called queuing delay. When a packet reaches a router, it has to be processed and forwarded. Since a router can process only one packet at a time, a packet that arrives while the router is busy is placed in a queue, and this is how queuing delay arises. FASP uses the measured queuing delay as its main indicator of congestion in the network (or in the disk subsystem).

The network is polled constantly. The transfer rate is raised when the queuing delay falls below the target value (a sign that the channel is not fully utilized and the rate should go up) and lowered when the queuing delay exceeds the target (a sign that the channel is fully utilized and congestion is possible). By continuously sending probe packets, FASP obtains accurate queuing delay values for the entire route. When the queuing delay grows, the FASP session reduces its rate in proportion to the difference between the target and the current queuing delay, which avoids overloading the network. When the load on the network falls, the FASP session quickly raises its rate in proportion to the target delay, utilizing almost 100% of the available bandwidth.
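The control rule described above can be sketched as a single update step. This is a toy illustration of delay-based rate control in general, not Aspera's actual algorithm: the multiplicative form of the update, the gain, the rate limits and the sample delay values are all assumptions made for the example.

```python
def next_rate(rate_mbps: float, queuing_delay_ms: float, target_delay_ms: float,
              gain: float = 0.5, min_rate_mbps: float = 1.0,
              max_rate_mbps: float = 1000.0) -> float:
    """Adjust the rate in proportion to (target delay - measured delay)."""
    # Positive error: queue shorter than target, channel underused, speed up.
    # Negative error: queue longer than target, congestion building, slow down.
    error = (target_delay_ms - queuing_delay_ms) / target_delay_ms
    proposed = rate_mbps * (1.0 + gain * error)
    return max(min_rate_mbps, min(max_rate_mbps, proposed))


rate = 50.0    # Mbps, assumed starting rate
TARGET = 20.0  # ms, assumed target queuing delay

# Hypothetical sequence of queuing delays reported by probe packets.
for measured in (0.0, 5.0, 20.0, 40.0, 30.0, 20.0):
    rate = next_rate(rate, measured, TARGET)
    print(f"measured {measured:5.1f} ms -> rate {rate:7.2f} Mbps")
```

The rate rises while the measured delay is below the target, stays put when it matches the target, and falls more steeply the further the delay overshoots, which is the behavior the text describes.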

In addition to using the channel efficiently, FASP's delay-based rate control lets it share bandwidth fairly with TCP traffic and prioritize individual file transfers.

Alternative technologies and FASP

Today there are various technologies for accelerating data transfer over the WAN. One is compression (e.g. Riverbed, Silverpeak): files are compressed on the sender's side and decompressed on the recipient's side. In effect, the transfer itself is not accelerated; less data is simply sent over the WAN. When files cannot be compressed, or are already archived or encrypted, there is no performance gain.

Another technology is traffic shaping, which assigns different priorities to different types of traffic. For example, it makes sense to give high priority to the applications the sales team works with, since that traffic is business-critical. Traffic from SAP or the e-mail system can be given medium priority, and web browsing low priority. This lets you tune the quality of service for particular tasks, but it does nothing to speed up data transfer itself.

Another way to speed up transfers is caching: data that is frequently sent over the network is kept in a cache. A hardware and software appliance on the sender's side asks its counterpart on the recipient's side whether it already holds the file to be transferred, or part of it; if so, the data is not transferred but retrieved from the cache. The drawbacks of this method are the expensive equipment, since an appliance has to be installed at every location, and its all-or-nothing nature (the method does not help if the object is not in the cache or has changed).

FASP is positioned differently from the methods listed above: the protocol itself makes efficient use of the available bandwidth, ensuring that data is transferred at an acceptable rate.

To summarize, here are the main technological advantages of Aspera:

• Ability to transfer very large files (from 500 GB to several TB). Aspera clients regularly send files larger than 1 TB to both cloud and local storage, transferring more than 10 TB in a single session.

• Transmission over long distances on networks with high delay (round-trip time) and packet loss. For example, BGI transferred genomic data between the United States and China at 10 Gbit/s (http://phys.org/news/2012-06-bgi-genomic-gigabits-china.html).

• Guaranteed and predictable delivery of files of unlimited size over long distances and at maximum speed.

Source: https://habr.com/ru/post/274807/
