About excitement in the minds

A couple of months ago I decided to experiment with a neural interface. I had never dealt with the topic before, but I suddenly became curious. A boom in neural devices was promised 5-10 years ago, yet all we have on the market is a device for wiggling ears, a device for making a pebble glow, and a device for levitating a ball. Somewhere on the way is a device for waking up on time. Here is a good article about the whole thing. Meanwhile, studies appear regularly in which people learn to control robotic arms and legs or to write text (1, 2, 3, here is a selection). But all of this is experimental, one of a kind, with equipment that costs as much as a good car.

And where is the middle ground? Something useful to the average user? Not necessarily everywhere, but at least in some specific applications. A few ideas come to mind right away: a drowsiness detector for drivers, or a productivity booster (for example, by choosing music or managing breaks). You can pick something more specialized, such as tracking and analyzing your state in eSports; eye trackers are already manufactured and used for the same purpose. Why are there no such applications? This question tormented me. In the end I decided to read up on where the science is heading, buy a simple neuro headset, and test it. This article is an attempt to understand the topic, with some sources and a lot of analysis of what consumer electronics can currently do.

What I used and why

There are many different neuro headsets on sale. Here are a few collections on the topic: 1, 2.

Not all of them are available in Moscow. I decided to take the unremarkable NeuroSky MindWave. It is cheap and, most importantly, it has a good SDK with examples in several languages.


NeuroSky cons

First, the downsides. For some reason little is written about them, but they exist:

1) The MindWave itself connects to the computer as a COM port. An application can connect to it directly through the .NET wrapper functions, or through the NeuroSky web server, which listens on 127.0.0.1. The second option is needed if you develop in something like Flash that has no direct access to the COM port. But the COM example included in the SDK is flawed, and the device often fails to connect. In practice, the only stable way to work is through the server. At the end of the article there is a link to GitHub, where I give an example of how you can still work through the COM port (using the server for initialization).

2) Libraries. The test example did not start. The libraries in the SDK do not work with the latest drivers. You have to dig out the latest versions of the libraries installed with the drivers and use them with the SDK examples.

3) However much NeuroSky boasts about its noise filtering algorithms, the lowest frequencies (delta and theta) are barely usable. Realistically, only 1/2 to 1/5 of the data on them can be used. So you need to plug in either your own filtering or something ready-made (for example, OpenViBE, openvibe.inria.fr).

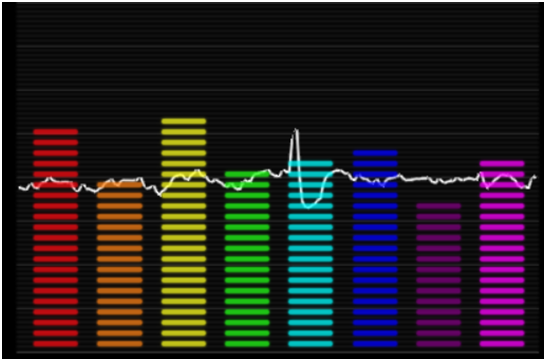

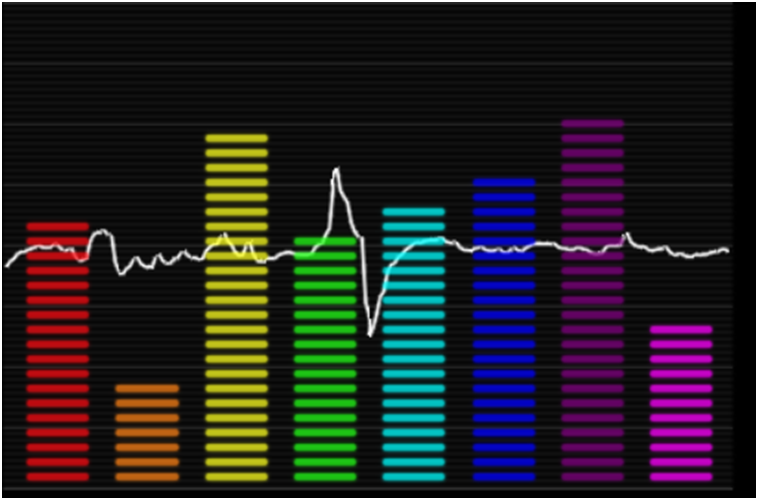

4) The blink detector that NeuroSky boasts about so much only half works. The manual says, “blink as naturally as possible.” But then the blink is not detected. If you blink hard, straining, it is not detected either. It has to be somewhere in between. Oh, and the COM version of the protocol also passes blinks to the program incorrectly. Here is how strong, medium, and weak blinks look on the chart. Below is an example of how they look in the Fourier spectrum. You cannot always tell them apart.

5) It is devilishly uncomfortable. I want to take it off my head. And apparently I am not the only one.
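For the first point above, reading from the local server could look roughly like the sketch below. The article only gives the address 127.0.0.1; the port number, the JSON handshake, the carriage-return packet separator, and the field names (`eSense`, `eegPower`, `poorSignalLevel`) are assumptions based on how the NeuroSky socket server is commonly described, so check them against your SDK version.

```python
import json
import socket

# Assumption: the local NeuroSky server listens on this TCP port and speaks JSON.
# The article itself only mentions the address 127.0.0.1.
HOST, PORT = "127.0.0.1", 13854


def parse_packet(line):
    """Flatten one JSON packet into a dict of the values discussed in this article.

    The field names used here are assumed, not taken from the article.
    """
    packet = json.loads(line)
    result = {}
    result.update(packet.get("eegPower", {}))      # band powers, if present
    esense = packet.get("eSense", {})
    for key in ("attention", "meditation"):
        if key in esense:
            result[key] = esense[key]
    if "poorSignalLevel" in packet:
        result["poorSignalLevel"] = packet["poorSignalLevel"]
    return result


def read_packets(host=HOST, port=PORT):
    """Yield parsed packets from the server until the connection closes."""
    with socket.create_connection((host, port)) as sock:
        # Assumed handshake: request JSON output without raw samples.
        request = {"enableRawOutput": False, "format": "Json"}
        sock.sendall(json.dumps(request).encode("utf-8"))
        buffer = b""
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            buffer += chunk
            # Assumed framing: packets are separated by carriage returns.
            *lines, buffer = buffer.split(b"\r")
            for raw in lines:
                if raw.strip():
                    yield parse_packet(raw.decode("utf-8"))
```

Keeping `parse_packet` separate from the socket loop means the parsing can be checked on recorded strings without a headset attached.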

What NeuroSky actually sees

But it is not all that bad:

1) Low and high alpha, beta, and gamma waves are clearly visible. They contain spikes, but those can be filtered out. A little averaging plus an outlier filter gives an objective picture:

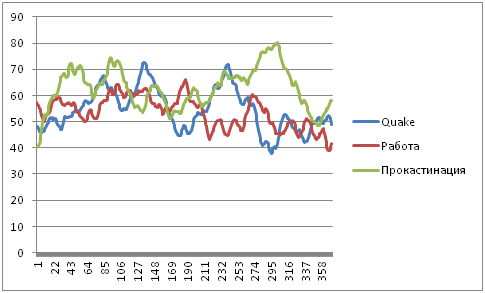

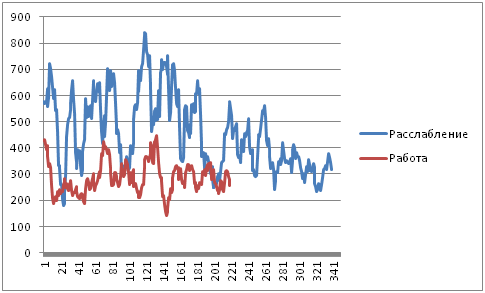

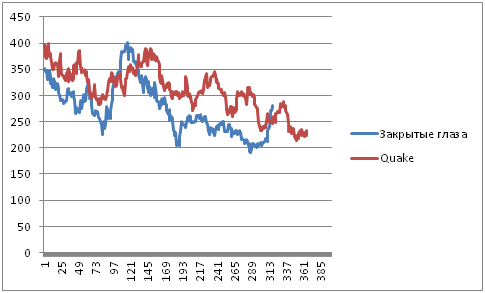

2) There are two parameters for which everyone loves NeuroSky: “Meditation” and “Attention”. They are weakly correlated with each other, and they can be controlled. I am not sure these parameters mean exactly what their names say. The first is maximized if you imagine something with your eyes closed, the second if you start recalling and associating various facts. But during Quake or work the readings are quite average, although both activities require maximum immersion and attention. During procrastination, the “attention” values are even higher:

On the other hand, you can learn to control these values more or less effectively. They depend to some extent on the “alpha”, “beta”, “delta”, “gamma”, and “theta” values. “Meditation” depends most strongly on alpha:

You can see that the peaks and derivatives often, though not always, move together.

3) The “alpha wave” readings themselves are filtered by the device. As a test, I computed the full spectrum of the input signal myself, calculated alpha from it, and compared the two (in the figure, the spectrum covers roughly 0-40 Hz):

After the eyes close, you can see the manually calculated alpha begin to grow. For the transform I used a window of about 1.5-2 seconds. If you narrow the window to about 1/3 of a second, the effect of a blink takes up much less area.

I ran a few more tests, and overall the alpha the device reports is filtered fairly well compared with the alpha computed by hand. But the hand-computed value is certainly more accurate. For example, if you take short samples without blinking, it is better to compute everything manually. If you take averages, the device readings are better, provided you do not want to bother with your own filter.
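To make the "alpha computed by hand" from point 3 concrete, here is a minimal sketch of band power over a sliding window. It uses a naive DFT from the standard library rather than whatever transform the author actually used, and the 512 Hz sampling rate and the 0.5-second step are assumptions; only the 8-15 Hz band and the roughly 2-second window come from the text.

```python
import cmath
import math

SAMPLE_RATE = 512  # Hz; assumed raw sampling rate, not stated in the article


def band_power(samples, low_hz, high_hz, sample_rate=SAMPLE_RATE):
    """Sum normalized squared DFT magnitudes for bins inside [low_hz, high_hz]."""
    n = len(samples)
    mean = sum(samples) / n  # remove the DC offset before transforming
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n
        if low_hz <= freq <= high_hz:
            coeff = sum((x - mean) * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(samples))
            power += abs(coeff) ** 2 / n ** 2
    return power


def sliding_alpha(signal, window_s=2.0, step_s=0.5, sample_rate=SAMPLE_RATE):
    """Alpha (8-15 Hz) power for each window position along the signal."""
    window = int(window_s * sample_rate)
    step = int(step_s * sample_rate)
    return [band_power(signal[i:i + window], 8, 15, sample_rate)
            for i in range(0, len(signal) - window + 1, step)]
```

Passing a shorter `window_s` trades frequency resolution for time resolution, which is one way to see why a narrow window confines a blink to a smaller part of the plot.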

A few words about theory

There is a fairly extensive theory about which electrical oscillations the brain generates in which situations. An introduction to it would take up this whole article. Besides, I am clearly not the person to explain it in enough detail to answer every question. So I will limit myself to a link to Wikipedia and a short summary:

Different modes of brain operation produce electrical oscillations at different characteristic frequencies. Several groups of such frequencies have been identified and matched to kinds of activity. For example, deep sleep produces oscillations below four hertz, while being relaxed with eyes closed produces them at about 8-12 hertz. NeuroSky reports the following parameters:

• Delta waves: < 4 Hz. Usually characteristic of deep sleep.

• Theta waves: 4-7 Hz. REM sleep, or when the brain is focused on one source of information.

• Alpha waves: 8-15 Hz. Relaxation, calm wakefulness.

• Beta waves: 16-31 Hz. Attention, stress, mental activity.

• Gamma waves: >= 32 Hz. Problem solving, maximum concentration.
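The list above is effectively a lookup table from frequency to band. A small sketch of that lookup follows; treating each band as running up to the lower edge of the next one is an assumption, since the list leaves gaps such as 7-8 Hz unassigned.

```python
import bisect

# Lower band edges in Hz, taken from the list above. Each band is assumed to
# extend up to the next edge, which closes the gaps the list leaves open.
BAND_EDGES = [4, 8, 16, 32]
BAND_NAMES = ["delta", "theta", "alpha", "beta", "gamma"]


def band_of(freq_hz):
    """Return the name of the band that contains the given frequency."""
    if freq_hz < 0:
        raise ValueError("frequency must be non-negative")
    return BAND_NAMES[bisect.bisect_right(BAND_EDGES, freq_hz)]
```

For instance, `band_of(10)` falls into the alpha band and `band_of(40)` into gamma.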

These ranges are an averaged metric. For each person they may differ or be shifted. But they are fairly stable and representative for most people. For example, here is my alpha-wave response in different states:

According to the theory, the higher the alpha, the more relaxed the person. That seems to match. For beta waves a dependence also seems visible, but it is less obvious:

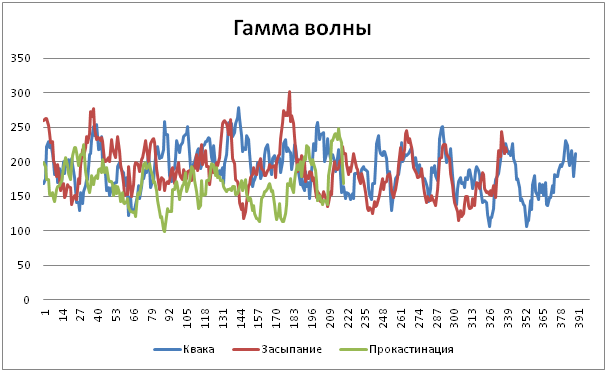

For gamma waves I could not find any difference depending on activity. Maybe my brain is the wrong kind, maybe the device is not sensitive enough, or maybe 50 Hz mains interference kills any attempt to detect something tangible:

In general, I have the feeling that above 30 Hz there is an inconceivable number of different oscillations. At first glance it is hard to extract anything sensible as a stable metric (the range shown is 0 to 80 Hz):

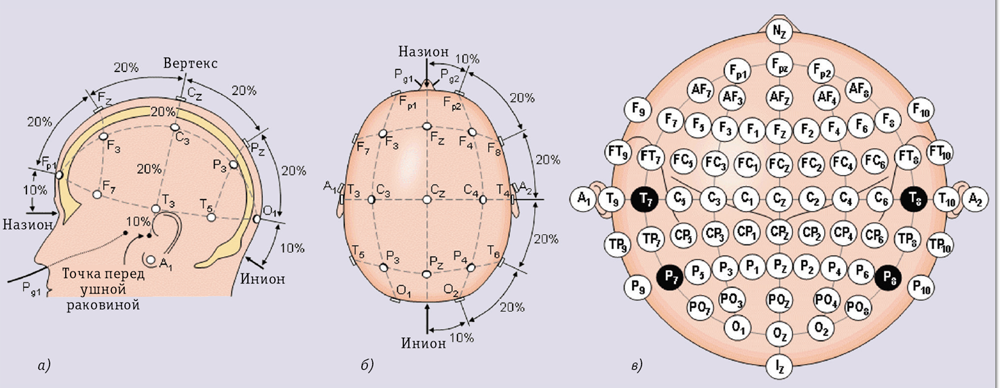

The device's sensor sits at point Fp1 on the head. This is said to be not the optimal point, but a reasonably representative one. Points at the back of the head are supposed to give clearer alpha.

Okay. The device has been poked at and tested. Shall we try to do something with it?

Puppy-like delight all around

I started by browsing the Internet for existing projects and ideas for the MindWave and other devices with 1-3 electrodes. And, frankly, I was horrified.

First, there is a monstrous amount of meaningless activity. Thousands of YouTube videos where a person shows their brain signals in the Brainwave Visualizer application or its analogues. There are even more applications that perform some trivial action based on the “meditation” and “attention” variables: they control a helicopter or a toy car. The Arduino community is a million times more useful and interesting. Here everything comes down to two values (

There is one more theme: articles about how awesome it all is and how neuroscience will save the world. Even here, on Habr. Such an article always ends with an offer either to buy a device from the relevant company or to benefit the author in some other way. The main aim of such articles is not “to make a useful device” but “to shake some money out of the user”.

For devices with more electrodes, such as the Emotiv EPOC, there are far more applications, mostly for control in games or for controlling devices. But Emotiv is good at detecting facial micro-expressions, and such systems are built on that. Control through brain activity itself almost never occurs.

There is serious work too, but it is deeply buried.

Digging deeper into the brain

The questions I raised in the title of the article are not new. In general, there is little new under the moon in science and technology. Can you determine a person's state with just a few electrodes? Is he tired, working, falling asleep, driving a car? And how would you implement it at all?

The most obvious approach is to analyze EEG data with machine learning algorithms: SVM, Random Forest, ANN, KNN, etc. And indeed, the corresponding Google query turns up many papers on the topic. Not all are perfect, and there are many places to find fault, but there are enough of them to understand the current progress and possibilities. Let's look in more detail.

First, there is coolworld.me/classifying-EEG-SVM. The study was done on a MindWave. The author recorded many short 10-second sets with the following mental actions: “imagine the rotation of the cube”, “imagine the motor”, “imagine the audience”. Apparently he also tried to combine them with muscle micro-movements. For each set a fast Fourier transform was computed with a 2-second window. The resulting 5 vectors were used for training. Three recordings were made for each action, 15 vectors in total.
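The bookkeeping in that study (10-second recordings, 2-second windows, 5 vectors per recording, 3 recordings per action) can be sketched as follows. The 512 Hz sampling rate and the function names are hypothetical, and the sketch only does the segmentation; each window would then go through the Fourier transform before training.

```python
def split_windows(samples, sample_rate, window_s=2.0):
    """Cut a recording into consecutive non-overlapping windows of window_s seconds."""
    size = int(sample_rate * window_s)
    return [samples[i:i + size]
            for i in range(0, len(samples) - size + 1, size)]


def build_dataset(recordings, sample_rate, window_s=2.0):
    """Turn (label, samples) recordings into a flat list of (label, window) pairs."""
    dataset = []
    for label, samples in recordings:
        for window in split_windows(samples, sample_rate, window_s):
            dataset.append((label, window))
    return dataset


# Three 10-second recordings of one action at an assumed 512 Hz.
recordings = [("cube", [0.0] * 5120) for _ in range(3)]
dataset = build_dataset(recordings, sample_rate=512)
print(len(dataset))  # 3 recordings x 5 windows each
```

With so few vectors per action, a single blink inside one window can noticeably change the training set, which is one reason to be cautious about the reported accuracy.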

He claims that classification succeeded in 97-100% of cases. Honestly, that is hard to believe. But the author described the experiment very poorly. Perhaps a very specific dataset was used, perhaps micro-movements were used in addition to mental activity, perhaps there was no blinking in the 10 seconds of recording (or it was recorded with eyes closed), perhaps the sets were separated pairwise, and so on. Here and here are a few more articles by the author.

In general, the author was investigating not so much the “difference between mental states” as ways to transmit certain labels through EEG. And in my opinion this method really works. Maybe not in 97% of cases, but fixed commands can still be given in 90% of them. At the same time, they are decoupled from “meditation” and “attention”, which cannot always be controlled. Hands busy? Turn on the music with your brain.

Very important is the author's refusal to use the built-in alpha, beta, delta, gamma, and theta values. The author worked with fast processes. The MindWave's own values go through filtering and accumulation, which reduces accuracy and adds latency. The author justifies this approach by saying that an honestly computed spectrum is more accurate. But I do not think so. As I showed above, during blinking the error is higher for spectra computed by hand. Plus they are much more exposed to noise.

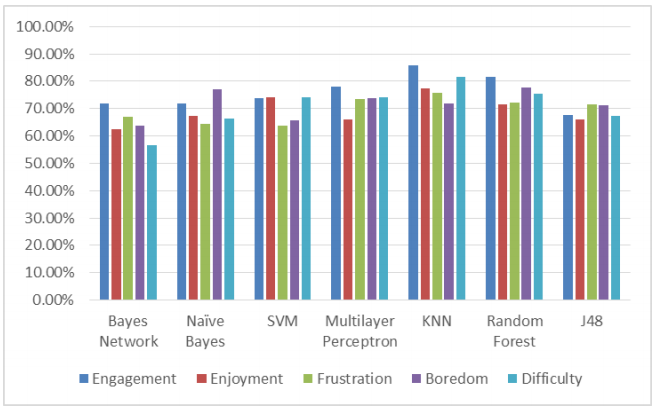

Second, I really liked this article. It is about Emotiv, not NeuroSky. But it is exactly what I think such systems should do. It analyzes the possibility of detecting various mental states. The authors recorded datasets while driving and labeled them in several ways, such as “work”, “pleasure”, “complexity”, etc. Then they trained and tested.

In their work they showed more than the fundamental possibility of classification. They analyzed various classifiers and calculated their accuracy. They also considered several applications, including analysis of the driver's mental state (which is what the paper itself is devoted to).

Almost everything that could be done was done. The only downside is that an Emotiv EPOC with 14 electrodes was used. And my MindWave has one and a half.

The next paper. In my opinion it is quite mediocre, although it uses NeuroSky. It detects only falling asleep. The one thing that in my opinion was done well is that they validated the dataset while collecting it.

There is also this paper. It is devoted to detecting movements from Emotiv readings. I would not say unequivocally that it is brain waves being used there (facial expressions may also be involved).

There is another paper where everything was done without training, simply with thresholds on various values plus some clever logic. But in my opinion it is weak.

Time to do something

To be honest, I started doing things before I had studied all the papers. I decided to take an SVM and try to recognize a person's activity over a long stretch of time. Is the person working, driving, reading, playing, etc.? Perhaps I will try other ideas from the papers above, but so far there has not been enough time.

First of all, I collected a dataset for several situations:

• I sit and work. (This is the easiest to collect: 90% of the time I write code.)

• I procrastinate. In reality this is hard to collect. I tried to record the moments when I browse VKontakte or read something simple like Heckayms. But sometimes I caught myself being too immersed.

• I read. Right now my e-reader has the new Gibson novel, and before that it was Miéville. So the immersion is decent.

• I drive a car.

• I sit with my eyes closed, trying to sleep. Usually recorded in the evening when I am tired. Because of the way the MindWave is mounted on the head, sleep itself is hard to record.

• I run on a treadmill. I recorded a couple of data sets, but in my opinion they cannot be used. Too much interference.

• I play the guitar. I play very mediocrely, but well enough for testing.

• I play computer games. For the purity of the experiment, I recorded Quake and a couple of StarCraft games separately.

I recorded each activity in small pieces of about 10-15 minutes. This is not a very clean dataset. While reading, I was distracted by checking the recording. While working, I could be distracted by Skype. While procrastinating, I could start thinking, and so on. I tried to weed out the obviously substandard data (the MindWave often has poor contact). For driving and running, the measurements are very often substandard.

The papers I mentioned above paid a lot of attention to the correctness of the dataset, and got quite good results for it. But it is clear that in real conditions such a dataset would not be representative. People blink. People get distracted. I wanted to add robustness. It is clear that accuracy drops as a result, so it will be impossible to work with a large number of situations and recognize them simultaneously.

Here I have described not all the examples, but the ones I find most interesting. All the collected datasets are in the repository (link at the end of the article). You can test them yourself.

What do we feed to the input? This may or may not be a mistake, but I decided to input not the DCT spectrum but the alpha, beta, ... waves calculated by the MindWave, plus the “Meditation” and “Concentration” parameters. Ideally you should do it differently: feed in the original spectrum, filtered over time, as is done in almost all the papers. But the holidays are over and I will not check this in the near future. When I do, I will either add a chapter to this article or write another one.

I did this for two reasons. First, when I started collecting the dataset I had found only one paper. Second, it seemed to me that the MindWave values are somewhat more stable, especially with respect to blinking. I did not want to write my own algorithm to suppress it.

Knowing that it is hard to distinguish more than two classes, I only distinguished between two classes at a time. I used an SVM as the classifier. Judging by all the papers, it performs well. It is the easiest to configure, and I am familiar with several implementations. The input vector was:

Theta, Beta, Alpha1, Alpha2, Beta1, Beta2, Gamma1, Gamma2, Meditation, Concentration

540 248 374 110 98 181 61 120 64 27

The vector was normalized so that all parameters lie in approximately the same range. For one class the target value was +1, for the other -1.
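The article does not say how the normalization was done. One common option, shown here purely as an assumption, is min-max scaling of each feature using the range seen in the training data. The first vector below is the sample from the text; the second is made up for illustration.

```python
def fit_scaler(vectors):
    """Record the per-feature minimum and maximum over the training vectors."""
    columns = list(zip(*vectors))
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]
    return lows, highs


def scale(vector, scaler):
    """Map each feature to roughly [0, 1] using the fitted training range."""
    lows, highs = scaler
    return [(x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(vector, lows, highs)]


# First vector: the sample from the article. Second vector: hypothetical.
train = [
    [540, 248, 374, 110, 98, 181, 61, 120, 64, 27],
    [270, 124, 187, 55, 49, 362, 122, 60, 32, 54],
]
labels = [+1, -1]  # +1 for one class, -1 for the other, as in the text

scaler = fit_scaler(train)
scaled = [scale(v, scaler) for v in train]
```

The scaler is fitted on training recordings only and then reused for test recordings, so test values can fall slightly outside [0, 1].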

Work examples

I will not give exact statistics. Because of how the dataset was collected, it is hard to say anything definite. Collecting 100 examples of 15 minutes each per class is practically impossible. So I build a two-class classifier from recordings X1 and Y1, and then look at how recordings X2, X3, ..., Xn, Y2, Y3, ..., Yn are recognized.
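The train-on-one-pair, test-on-the-rest scheme can be sketched with a tiny linear SVM. This is a plain hinge-loss classifier trained by sub-gradient descent, standing in for the Accord implementation the author used; the kernel, the hyperparameters, and the two-feature toy data are all assumptions made for illustration.

```python
def train_linear_svm(samples, labels, epochs=200, lr=0.1, reg=0.01):
    """Fit weights w and bias b by sub-gradient descent on the L2-regularized hinge loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            w = [wi * (1 - lr * reg) for wi in w]  # regularization shrinkage
            if margin < 1:                         # sample is inside the margin
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(model, x):
    """Return +1 or -1 for a single feature vector."""
    w, b = model
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


def recognized_fraction(model, recording, label):
    """Share of vectors in a held-out recording assigned to the expected class."""
    hits = sum(1 for x in recording if predict(model, x) == label)
    return hits / len(recording)


# Toy stand-ins for recordings X1 (+1) and Y1 (-1): already-normalized 2-D vectors.
x1 = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3)]
y1 = [(0.1, 0.9), (0.2, 0.8), (0.3, 0.7)]
model = train_linear_svm(x1 + y1, [1] * len(x1) + [-1] * len(y1))

# Held-out recordings X2 and Y2 are scored separately, as in the article.
x2 = [(0.85, 0.15), (0.75, 0.25)]
y2 = [(0.15, 0.85), (0.25, 0.75)]
print(recognized_fraction(model, x2, 1), recognized_fraction(model, y2, -1))
```

Scoring each held-out recording on its own is what makes statements like "four of five recordings were recognized" possible without a large labeled dataset.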

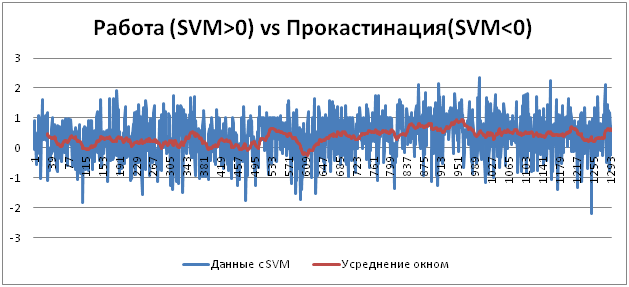

The vector of “alpha”, “beta”, “gamma”, ... changes about once per second. Because of noise from blinking and head turns, and despite the internal filtering, the vector is extremely unstable. It often needs 2-3 seconds to return to normal. So we introduce an averaging window 30 seconds long. It turns out something like this:

Work-Procrastination Test

An example of procrastination recognition, where more than 80% of the recording was recognized correctly. And here is an example of a 20-minute work recording, of which more than 90% was recognized correctly:

In total I had 5 work recordings of 15-25 minutes each. One of them was used for training. The other 4 were recognized correctly, each over more than 90% of its length. Of the 6 procrastination recordings, one was used for training and the rest for testing. Four were recognized as procrastination over more than 80% of their length, and one was recognized almost entirely as work. I probably did not procrastinate hard enough :(
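The percentages above come from smoothing the once-per-second outputs over a 30-second window and counting how much of a recording lands on the expected side. A minimal sketch, assuming the averaging is applied to the classifier output (the article does not say whether it averages the output or the input vector):

```python
from collections import deque


def smooth_decisions(decisions, window=30):
    """Running mean over the last `window` per-second classifier outputs."""
    recent = deque(maxlen=window)
    total = 0.0
    smoothed = []
    for value in decisions:
        if len(recent) == window:
            total -= recent[0]  # this value is evicted by the append below
        recent.append(value)
        total += value
        smoothed.append(total / len(recent))
    return smoothed


def recognized_share(decisions, label, window=30):
    """Fraction of the recording whose smoothed output has the sign of `label`."""
    smoothed = smooth_decisions(decisions, window)
    hits = sum(1 for value in smoothed if value * label > 0)
    return hits / len(smoothed)
```

A recording would count as correctly recognized when `recognized_share` exceeds a chosen level, such as the 80% and 90% figures quoted above.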

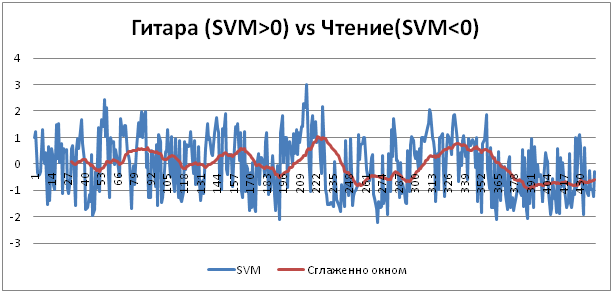

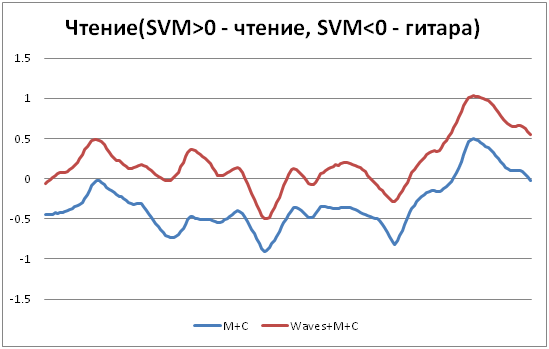

Test Guitar-Reading

Not a very useful test, but since I had accumulated many examples in these two classes, I tested a lot. The two are distinguished very well. There were 9 reading recordings and 6 guitar recordings, with one of each used for training. Only one guitar recording was recognized incorrectly (only about 60% of it was classified as guitar, the other 40% as reading). Here is an example:

Test Guitar - Quake

And here is the first test that works badly. For Quake there were 4 recordings: 1 was used for training and the other 3 were recognized. But of the 6 guitar recordings, 2 were not recognized correctly.

Test Guitar - procrastination

This almost does not work at all. The classifier overfitted toward guitar playing. Almost all the recordings are attributed to playing the guitar.

Sitting with eyes closed - driving

This is very disappointing, but it does not work for me either. Still, I am almost sure the reason is that sitting with eyes closed != falling asleep. I managed to actually doze off in only one of the four recordings (it had high alpha waves, example below). But I do not understand how to collect a dataset for falling asleep in a reasonable time. Especially with the MindWave, in which it is almost impossible to lie down.

What is encouraging: according to the papers cited above, a threshold for falling asleep while driving can be determined, even from the alpha level alone. Most likely everything will work on a properly collected dataset.

Final thoughts on where it does not work

I would say the criterion is simple: the more the brain states differ, the easier it is to tell what is going on. But in essence this approach boils down to distinguishing between two, at most three, brain states, and it can be fooled by similar activities.

Is it fair?

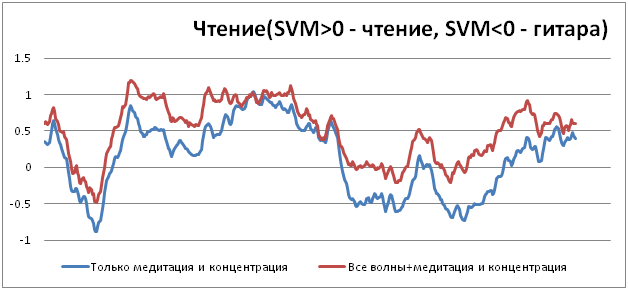

Why do I need the alpha, beta, and gamma waves at all? Maybe meditation and concentration are enough? After all, these two parameters are derived from the waves.

This thought occurred to me, and I checked it. It turns out that adding the waves noticeably improves recognition accuracy. I give examples for reading and playing the guitar. For the other classes it is about the same. You can see that the quality has improved, although the general trends are preserved:

As shown in the introductory sections, the Meditation and Concentration parameters are strongly tied to the waves and reflect quite well what the waves are doing. But they do not carry 100% of the information in the waves.

Conclusions

So that is the small article on EEG that came out of it. The New Year holidays were not very long :)

Let's return to the questions asked at the beginning. Why did the neurodevice boom not happen in the consumer segment? In my opinion, the main problem is the difficulty of creating something universal. All but the most basic algorithms require tuning for each person. And making that tuning automatic, without the user adjusting settings himself, is very hard.

The second problem is the difficulty of use. The sensors are unstable. Attaching them so that there is no noise is not trivial. Moreover, dry contact is possible only on the forehead. Even the Emotiv is best attached with gel.

As a result, we have a technology that is unstable in itself. It is not bad for some analytics. But as soon as a guaranteed result is needed, it immediately loses. The same problem of a driver falling asleep can be solved in much simpler and cheaper ways. Analytics based on ECG is much more interesting than EEG in the general case.

Yes, there are a number of applications worth trying to develop. After all, it is hard to build a detector of “the person is working” or “the person is procrastinating” in other ways (except by doing video analytics of the image on the monitor). But all such solutions require a great deal of development and are unlikely to work for 100% of people.

In my opinion, the only niche budget EEG devices can occupy is the market for toys and useless gadgets. Which is exactly what they are successfully doing.

Code

I posted the terrible, raw source code for all of this here.

The SVM is taken from the Accord Framework; for simplicity of display, an old EmguCV is hooked up. The NeuroSky libraries for interacting with the MindWave are also there.

Source: https://habr.com/ru/post/274665/
