Compare incomparable: json in PostgreSQL vs Mysql vs Mongodb

As such, there is no standard “standard” benchmark. Only your requirements, your data, and your infrastructure can.

First, a little philosophy. NoSql surrounds and not run away from it (although I didn’t really want to). Let us leave questions about the underlying causes beyond the scope of this text, we only note that this trend is reflected not only in the emergence of new NoSql solutions and the development of old ones. Another facet is the mixture of opposites, namely the support for storing schema-less data in traditional relational databases. In this gray area at the junction of the relational data storage model and the rest lies a dizzying number of possibilities. But, as always, you need to be able to find a balance that is right for your data. This is difficult, primarily due to the fact that it is necessary to compare little comparable things, for example, the performance of the NoSql solution with a traditional database. In this small note, such an attempt will be proposed and a comparison of the performance of working with jsonb in PostgreSQL with json in Mysql and with bson in Mongodb is given.

What the hell is going on?

Brief news from the fields:

- PostgreSQL 9.4 - a new jsonb data type, whose support will be slightly extended in the upcoming PostgreSQL 9.5

- Mysql 5.7.7 - a new json data type

and a number of other examples, which I will discuss next time. It is remarkable that these data types do not assume textual, but binary json storage, which makes working with it much faster. The basic functionality is identical everywhere; these are obvious requirements - create, select, update, delete. The most ancient, almost cave-like, desire of a person in this situation is to hold a series of benchmarks. PostgreSQL & Mysql are selected because the implementation of json support is very similar in both cases (besides, they are in the same weight category), and Mongodb is like the old-timer NoSql of the world. The work carried out by EnterpriseDB is a bit outdated in this regard, but it can be taken as a first step for a thousand li road. At the moment, the goal of this road is not to show who is faster / slower in artificial conditions, but to try to give a neutral rating and get feedback.

Initial data and some details

pg_nosql_benchmark from EnterpriseDB suggests a fairly obvious way - first, a predetermined amount of different types of data with slight fluctuations is generated, which is then recorded in the database under study and sampled.

There is no functionality for working with Mysql, so it was necessary to implement it on the basis of the same for PostgreSQL. At this stage, there is only one subtlety when we think about indexes - the fact is that Mysql is not implemented

json indexing on a straight line, so you have to create virtual columns and index them already. In addition, I was embarrassed by the fact that for the mongodb part of the generated data exceeds 4096 bytes in size and does not fit into the mongo shell buffer, i.e. just discarded. As a hack, it turned out to perform the insert'y from the js file (which also has to break several chunk, because one can not be more than 2GB). In addition, in order to avoid the costs associated with starting the shell, authentication, etc., a corresponding number of “no-op” requests are made, the time of which is then excluded (although they are, in fact, quite small).

')

With all the changes received, checks were performed for the following cases:

- PostgreSQL 9.5 beta1, gin

- PostgreSQL 9.5 beta1, jsonb_path_ops

- PostgreSQL 9.5 beta1, jsquery

- Mysql 5.7.9

- Mongodb 3.2.0 WiredTiger storage engine

- Mongodb 3.2.0 storage engine MMAPv1

Each of them was deployed on a separate m4.xlarge instance with ubuntu 14.04 x64 on board with the default settings, tests were performed on the number of records equal to 1,000,000. For tests with jsquery, you should read the readme and remember to install bison , flex , libpq-dev and even postgresql-server-dev-9.5 . The results will be saved to a json file, which can be visualized using matplotlib (see here ).

In addition, there were doubts about the settings related to durability. Therefore, I have conducted a couple of additional tests for the following cases (in my opinion, some of them are more likely a theory, as someone will use such settings live):

- Mongodb 3.2.0 journaled (writeConcern j: true)

- Mongodb 3.2.0 fsync (transaction_sync = (enabled = true, method = fsync))

- PostgreSQL 9.5 beta 1, no fsync (fsync = off)

- Mysql 5.7.9, no fsync (innodb_flush_method = nosync)

Pictures

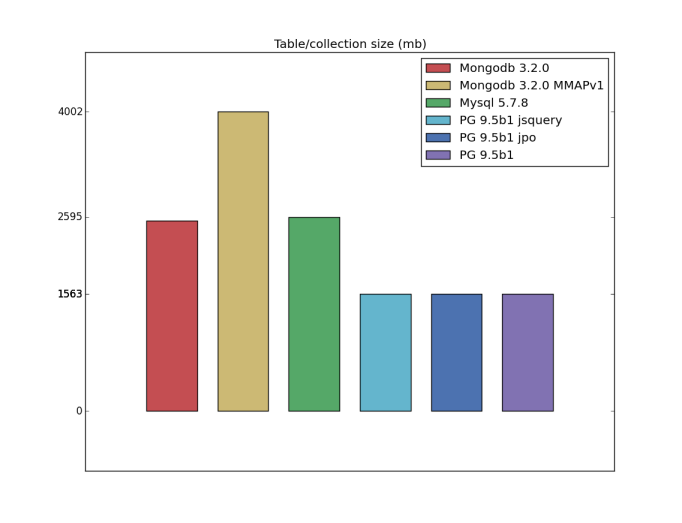

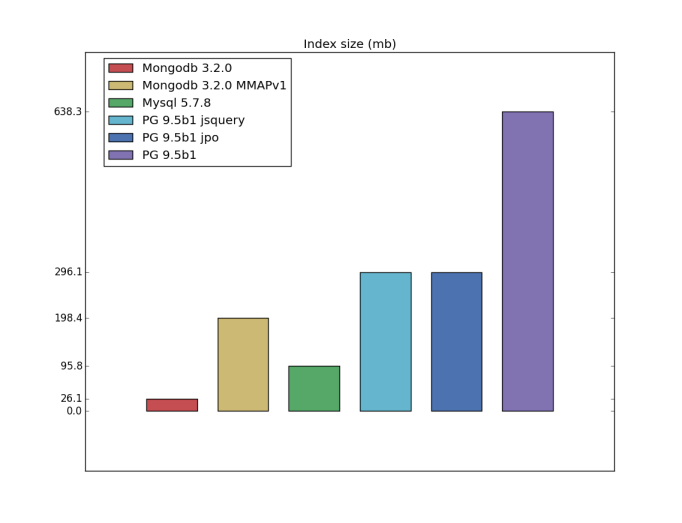

All graphs associated with the query execution time are presented in seconds, related to the size - in megabytes. Accordingly, for both cases, the smaller the value, the greater the performance.

Select

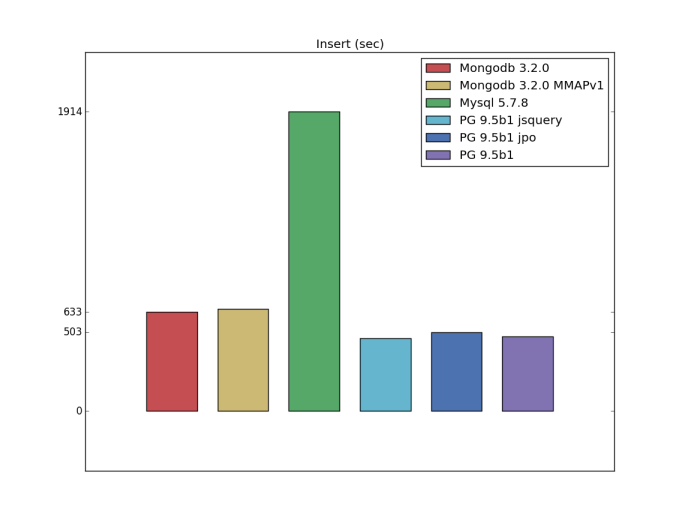

Insert

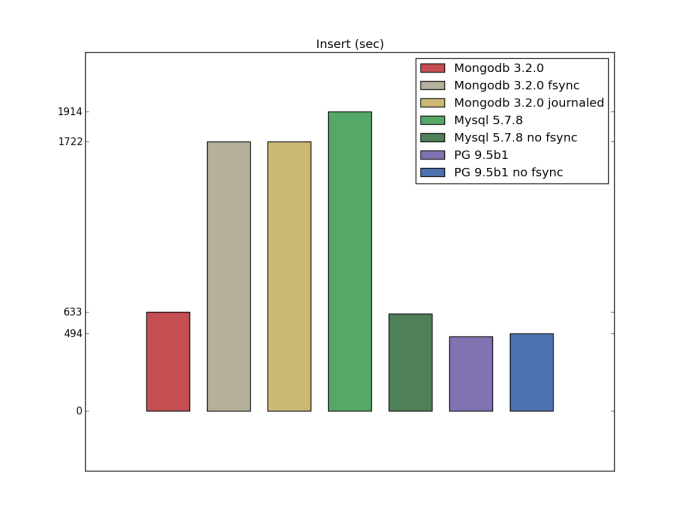

Insert (custom configuration)

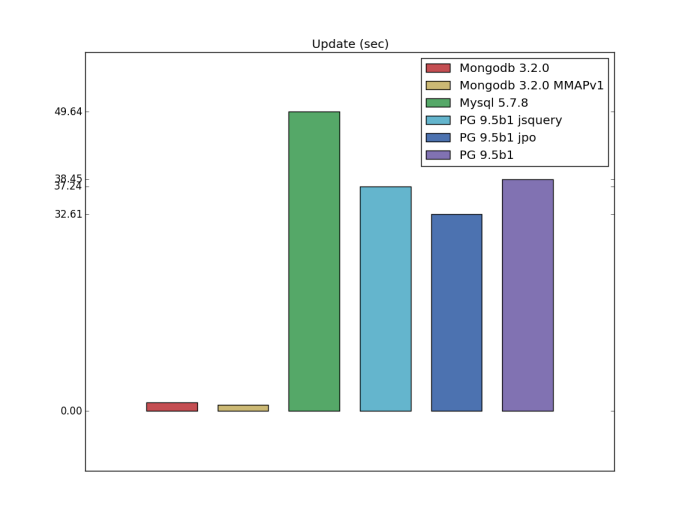

Update

Another change regarding the original pg_nosql_benchmark code was the addition of update tests. Here, the clear leader was Mongodb, most likely due to the fact that in PostgreSQL and Mysql updating even one value at the moment means overwriting the entire field.

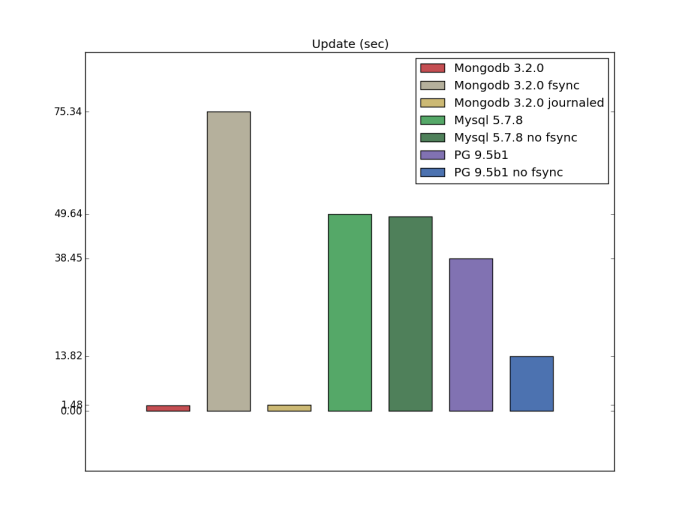

Update (custom configuration)

As you can guess from the documentation and peep in this answer , writeConcern j: true is the highest possible durability level for one mongodb server, and apparently it should be equivalent to configurations with fsync . I didn’t check durability, but it’s interesting that for mongodb, updating operations with fsync were much slower.

Table / index size

I have a bad feeling about this

Performance measurement is too slippery, especially in this case. Everything described above cannot be considered a complete and complete benchmark; this is only the first step to understanding the current situation - something like food for thought. At the moment, we are engaged in testing using ycsb , and, if lucky, we will compare the performance of cluster configurations. In addition, I will be glad to all constructive suggestions, ideas and corrections (since I could easily miss something).

Source: https://habr.com/ru/post/274313/

All Articles