Threads vs. processes: the example of a native Node.js add-on for load testing

A little less than a year ago, I wrote a note about trying to build a load-testing tool for Node.js using only built-in features (the cluster and net modules). The comments rightly pointed out that I needed to analyze RPS and compare the tool with other benchmarks. The comparison led me to a natural conclusion: a multi-process service cannot match a multi-threaded one in performance, because exchanging data between processes is very expensive (we will see this with an example later).

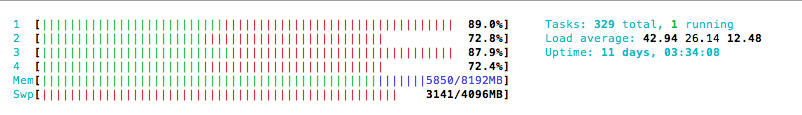

Now let's look at the state of the system when we create 100 processes:

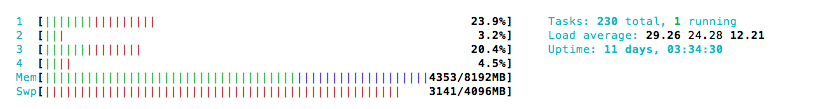

And 100 threads:

Obviously, now the CPU is busy only in order to maintain communication between these primitive processes (everyone must perform one request at a time, otherwise V8 will put them in a synchronous event loop). CPU utilization drops and every request takes longer (God forbid, even the memory will end and the swap file will go)

Make a native C ++ addon using multithreading. Nan, Node-gyp, POSIX threads and, as a result, the addon became similar to ab - concurrency arrives at the input, and test results at the output. Only unlike ab, we can use all the js capabilities to analyze the results:

Additional heders are supported, POST payload and since this is POSIX, unfortunately only Linux / Mac.

If desired, only heders can be read, usually this is enough, then you can save

a little more processing time.

As a result, in terms of performance, nnb caught up with ab, delivering up to 3000 RPS on different machines and networks.

There is JMeter, there is Tsung, there are a lot of other paid and free benchmarks, but the reason why many of them do not take root in the arsenal of developers is overloaded functionality and, as a result, there is still insufficient flexibility. Basically, nnb you can create your own tool for specific purposes or just a 10-line script that does just what you need in one of the most popular languages.

For example, stress , which can be run with the default config and watch live on Google's RPS with an increase in load (spoiler: nothing) right in the browser and on any unix machine.

Here on the x-axis the number of sent requests, on the y-axis - the server response time in milliseconds. On the second chart requests per second. In the end you can see the slowdown, it probably started to cut me hoster.

Unfortunately, with the machines available to me, it has not been possible to achieve more than 5000 RPS. Usually it comes down to the limitations of the network. At the same time, the CPU and memory are almost not loaded. Stress by the way supports both Node.js cluster and multi-threading via nnb. You can play with this and that by first setting ulimit -u (the maximum number of processes started by the user) and ulimit -n (the maximum number of descriptors).

Hope the article was helpful. I am still glad to cooperate with everyone who is interested in this topic and, of course, with the upcoming one!

Processes or threads?

At first glance, there is no significant difference: both give us parallel requests. The only differences are that threads share memory and that creating a process is a little more expensive. But we can create all the processes in advance and simply hand tasks to them. Then, however, we need a communication channel. Let's see which inter-process communication methods are available:

Signals

A fast and universal (supported by almost every OS) way for the system and processes to communicate. The problem is that signals are extremely inflexible: they were never designed for sending JSON.

Sockets

This is how cluster is implemented in Node. The process.send method sends data to another process over a socket-based channel (a Unix domain socket on Linux and macOS). What does using sockets mean? A new handle for each call, a lot of I/O, and an idling CPU.

There are several other ways, but they are either not cross-platform or also depend on I/O.
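To make the cost of the channel more concrete, here is a minimal sketch in Node.js. It assumes the default 'json' serialization that Node applies to messages between processes, and it only imitates the channel with a plain function (crossProcessBoundary is a hypothetical name, not a Node API, and no real IPC takes place). The second half uses two views over one SharedArrayBuffer as a stand-in for two threads that see the same memory.

```javascript
// Hypothetical helper: imitates what happens to a message sent between
// processes with the default 'json' serialization (no real IPC is done here).
function crossProcessBoundary(message) {
  const wire = JSON.stringify(message); // sender: object -> string
  return JSON.parse(wire);              // receiver: string -> brand-new object
}

// Made-up test result, in the spirit of what a load tester would report.
const result = { time: 80, headers: 'HTTP/1.1 200 OK\r\n\r\n' };
const received = crossProcessBoundary(result);

// The receiver gets a copy: every message costs serialization on top of I/O.
console.log(received === result); // false
console.log(received.time);       // 80

// Threads, by contrast, can read the same memory directly.
const shared = new SharedArrayBuffer(4 * Int32Array.BYTES_PER_ELEMENT);
const writerView = new Int32Array(shared); // view held by the "writer" thread
const readerView = new Int32Array(shared); // view another thread would hold

Atomics.store(writerView, 0, result.time); // write once...
console.log(Atomics.load(readerView, 0));  // ...and read it without copying: 80
```

With processes, the copy in the first half happens for every task and every result; with threads, only the synchronization remains.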

Now let's look at the state of the system when we create 100 processes:

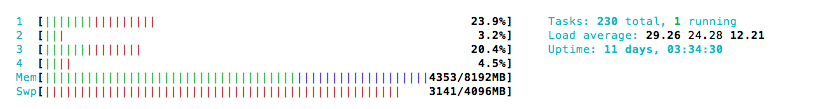

And 100 threads:

Clearly, with processes the CPU is busy mostly with maintaining communication between these primitive processes (each one has to perform a single request at a time, otherwise V8 will line the requests up in a synchronous event loop). Useful CPU utilization drops and every request takes longer (and, God forbid, memory may run out and the system may start swapping).

But Node is single-threaded, so what can we do?

Write a native C++ addon that uses multithreading. With Nan, node-gyp, and POSIX threads, the result is an addon similar to ab: concurrency goes in, and test results come out. Unlike ab, though, we can use all the capabilities of JS to analyze the results:

    [
      {
        time: 80,
        body: '<!doctype html><html itemscope=...',
        headers: 'HTTP/1.1 200 OK\r\nDate: Mon, 28 Dec 2015 10:37:35 GMT\r\nConnection: close\r\n\r\n'
      },
      ....
    ]

Additional headers and a POST payload are supported, and since this is POSIX, unfortunately only Linux and Mac.

If desired, only the headers can be read. This is usually enough, and it saves a little more processing time.
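As an illustration of analyzing such results in JS, here is a small sketch that summarizes an array in the shape shown above (time, body, headers). The sample values and the helper names statusOf, percentile, and summarize are made up for the example and are not part of nnb. The sketch assumes a non-empty array.

```javascript
// Sample data in the shape shown above; the values are invented for illustration.
const results = [
  { time: 80,  body: '', headers: 'HTTP/1.1 200 OK\r\nConnection: close\r\n\r\n' },
  { time: 120, body: '', headers: 'HTTP/1.1 200 OK\r\nConnection: close\r\n\r\n' },
  { time: 300, body: '', headers: 'HTTP/1.1 503 Service Unavailable\r\n\r\n' },
  { time: 100, body: '', headers: 'HTTP/1.1 200 OK\r\nConnection: close\r\n\r\n' },
];

// Pull the numeric status code out of the raw status line (null if absent).
function statusOf(headers) {
  const match = /^HTTP\/\d(?:\.\d)?\s+(\d{3})/.exec(headers);
  return match ? Number(match[1]) : null;
}

// Nearest-rank percentile over an ascending array, with p in (0, 100].
function percentile(sortedTimes, p) {
  const rank = Math.ceil((p / 100) * sortedTimes.length);
  return sortedTimes[Math.max(rank, 1) - 1];
}

// Response-time statistics plus a count of responses per status code.
function summarize(items) {
  const times = items.map((r) => r.time).sort((a, b) => a - b);
  const statuses = {};
  for (const r of items) {
    const code = statusOf(r.headers);
    statuses[code] = (statuses[code] || 0) + 1;
  }
  return {
    count: times.length,
    mean: times.reduce((sum, t) => sum + t, 0) / times.length,
    p50: percentile(times, 50),
    p95: percentile(times, 95),
    statuses,
  };
}

console.log(summarize(results));
// { count: 4, mean: 150, p50: 100, p95: 300, statuses: { '200': 3, '503': 1 } }
```

When only the headers are read, a summary like this still works, since it never touches body.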

As a result, nnb caught up with ab in performance, delivering up to 3000 RPS on different machines and networks.

Why do you need it?

There is JMeter, there is Tsung, and there are plenty of other paid and free benchmarking tools. The reason many of them never take root in developers' toolkits is that they are overloaded with functionality and yet still not flexible enough. With nnb as a base, you can build your own tool for a specific purpose, or just write a 10-line script that does exactly what you need in one of the most popular languages.

One example is stress, which you can run with the default config on any Unix machine and watch live, right in the browser, what happens to Google's RPS as the load increases (spoiler: nothing).

Here the x-axis shows the number of requests sent and the y-axis shows the server response time in milliseconds. The second chart shows requests per second. Toward the end you can see a slowdown; my hosting provider probably started throttling me.
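A requests-per-second series like the one on the second chart can be derived by counting completed requests in one-second windows. The sketch below is only an illustration of that arithmetic, not how stress computes it; the finishedAtMs input (completion times in milliseconds since the start of the test) and the function name are assumptions.

```javascript
// Count how many requests completed in each one-second window.
// finishedAtMs: completion timestamps in milliseconds since the test started
// (a hypothetical input, not a field of the result format described earlier).
function requestsPerSecond(finishedAtMs) {
  if (finishedAtMs.length === 0) return [];
  let lastSecond = 0;
  for (const t of finishedAtMs) {
    lastSecond = Math.max(lastSecond, Math.floor(t / 1000));
  }
  const buckets = new Array(lastSecond + 1).fill(0);
  for (const t of finishedAtMs) {
    buckets[Math.floor(t / 1000)] += 1; // bucket index = whole seconds elapsed
  }
  return buckets;
}

// Made-up timestamps: three requests in second 0, two in second 1, and so on.
const finishedAtMs = [120, 480, 910, 1005, 1500, 2999, 3100];
console.log(requestsPerSecond(finishedAtMs)); // [ 3, 2, 1, 1 ]
```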

Unfortunately, with the machines available to me, I have not been able to get more than 5000 RPS. Usually the network is the limit, while the CPU and memory are barely loaded. Stress, by the way, supports both Node.js cluster and multithreading via nnb. You can experiment with both after first setting ulimit -u (the maximum number of processes a user may start) and ulimit -n (the maximum number of open descriptors).
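Before raising concurrency, it can help to check the limits from the script itself. The following is a rough sketch under several assumptions: bash is available at /bin/bash, each concurrent connection costs one descriptor, and a headroom of 64 descriptors is enough for everything else. The helper names are hypothetical.

```javascript
const { execSync } = require('child_process');

// Turn the text printed by `ulimit` into a number ("unlimited" -> Infinity).
function parseLimit(text) {
  const value = text.trim();
  return value === 'unlimited' ? Infinity : Number.parseInt(value, 10);
}

// Ask the shell for a soft limit; flag is 'u' (processes) or 'n' (descriptors).
// POSIX-only, like the addon itself; assumes bash is installed at /bin/bash.
function readLimit(flag) {
  const output = execSync(`ulimit -${flag}`, { encoding: 'utf8', shell: '/bin/bash' });
  return parseLimit(output);
}

// Hypothetical pre-flight check: one descriptor per concurrent connection,
// plus an assumed headroom for stdio, logs, and the runtime itself.
function fitsDescriptorLimit(concurrency, limit, headroom = 64) {
  return concurrency + headroom <= limit;
}

// With a typical default of 1024 descriptors, 1000 connections do not fit.
console.log(fitsDescriptorLimit(1000, 1024)); // false
console.log(fitsDescriptorLimit(900, 1024));  // true

// Usage against the real limit (not run here):
//   fitsDescriptorLimit(1000, readLimit('n'))
```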

I hope the article was helpful. As before, I am glad to collaborate with anyone interested in this topic. And, of course, happy upcoming New Year!

Source: https://habr.com/ru/post/274287/
