How flash memory will change the structure of data centers

Today we look at a note by Ron Wilson of Altera, in which he shares his views on how advances in flash memory could change the structure of the data center.

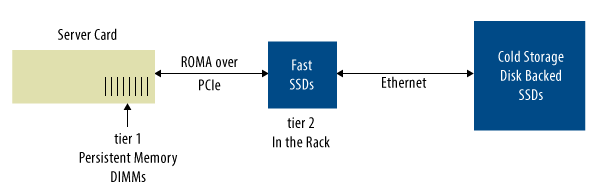

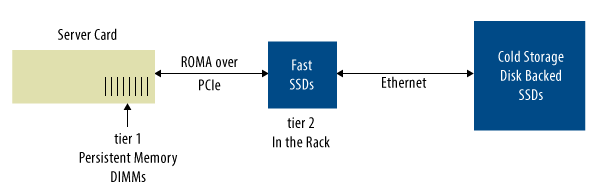

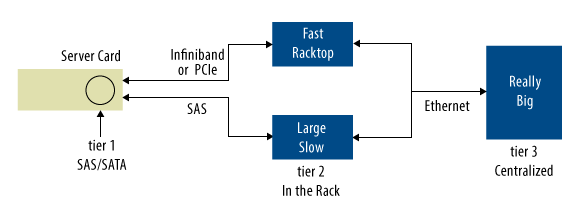

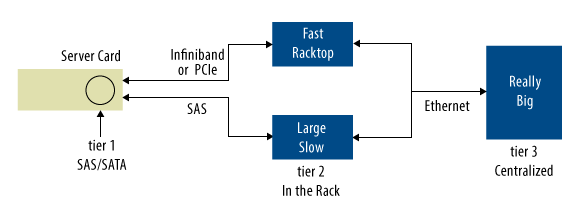

/ Photo Wonderlane / CC

We are approaching a turning point in the history of the data center. As flash memory becomes more capable, we get not only faster devices but also cloud services that operate at an entirely different level of efficiency.


Two developments are worth noting here: NAND memory with a new architecture and Intel-Micron 3D XPoint non-volatile memory. Both are expected to go into mass production within the year.

Technologies that make this possible

At the Flash Memory Summit, Samsung Vice President Jim Elliott announced the launch of a new 256-gigabit, 48-layer chip. According to him, such chips will deliver twice the sequential read speed while consuming 40% less power. Data is transferred in parallel from the memory arrays directly to the microcontroller over through-silicon vias, which increases throughput.

Against this background, 3D XPoint stands out: it could create a new category of non-volatile memory that is fast enough to sit on the DRAM bus and large enough to hold a substantial amount of data. The HDD world is not standing still either: shingled magnetic recording, two-dimensional recording, and heat-assisted recording are gaining momentum.

Where we are for now

In response to these technologies, completely different data center solutions are emerging. One example is a cluster of flash memory chips managed by a chip that emulates a disk controller. Such an SSD replaces hard drives in critical locations.

In servers, SSDs have begun to displace the built-in low-latency SAS drives, and flash memory pools have begun to replace even "cold" data storage. This approach is not always the most sensible one.

“Arrays of flash memory have a higher bandwidth than disk drives,” say the experts, “but the SAS interface and, in some cases, the PCI Express (PCIe) bus cancel out this advantage.”

Software evolution

The emergence of many start-ups and services in the IT market has prompted a significant rethinking of data center solutions. Familiar relational databases have given way to key-value stores or simply heaps of unstructured document data.

“To load a single page of the Amazon site, you need about 30 microservices,” said Val Bercovici, director of technical strategy at NetApp. “You might have Redis or Riak key-value stores, a Neo4j graph database for identifying related products, and a MongoDB document-oriented database. All of this is needed to build a single page.”

The main difference between new applications and older ones such as Hadoop is their approach to data storage. “The proliferation of Memcached APIs and the emergence of Spark and Redis have led to applications starting to devour memory,” warns Diablo Technologies president Riccardo Badalone. “We need to find an alternative to DRAM.”

New architectures for new code

Today it is quite reasonable to treat flash as memory rather than as storage. All-flash DIMMs let you put four or even ten times more working data on the server's memory bus. The result is a dramatic change in how data is stored: what used to be a small pool of DRAM becomes a large array of fast flash memory.

Looking deeper into the data center, we will see high-capacity, highly reliable SSDs holding dozens or hundreds of terabytes that work with server-side DIMMs in direct access mode. Bypassing the OS and the hypervisor in this way reduces latency. Some architects call this disaggregation: data storage is distributed across the entire data center, as close as possible to the servers.

As a result, DRAM, flash DIMMs, memory attached over RDMA, and cold storage form concentric cache layers, creating an architecture that transitions seamlessly from the first-level cache to persistent storage at the other end of the network. From the application's point of view, all data will appear to be in DRAM, and the data center operator will not notice fluctuations in latency.
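To make the idea of concentric cache layers more concrete, here is a minimal sketch of how per-layer latency and the share of accesses each layer serves combine into one average figure. The layer names follow the text, but the latencies and hit fractions are invented placeholders, not measurements, and `Tier` and `average_latency_ns` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    latency_ns: float    # assumed, illustrative value only
    hit_fraction: float  # share of all accesses served by this tier


def average_latency_ns(tiers):
    """Weighted average latency across all tiers."""
    total = sum(t.hit_fraction for t in tiers)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("hit fractions must sum to 1")
    return sum(t.latency_ns * t.hit_fraction for t in tiers)


# Placeholder numbers chosen for readability, not taken from any datasheet.
TIERS = [
    Tier("DRAM", 100, 0.90),
    Tier("flash DIMM", 1_000, 0.07),
    Tier("RDMA-attached memory", 10_000, 0.02),
    Tier("cold storage", 10_000_000, 0.01),
]

if __name__ == "__main__":
    print(f"average access latency: {average_latency_ns(TIERS):.0f} ns")
```

Changing the hit fractions shows how strongly the outer layers influence the average, which is why the layering only feels seamless when most accesses are served near the CPU.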

However, this system has a flaw. Low latency requires predictable timing for both reads and writes. "The temporal characteristics of DDR4 are strictly defined," one of the speakers warned. The DDR4 protocol cannot work with memory whose read behavior is deterministic but whose write behavior is completely unpredictable.

If writes are infrequent, a controller with a sufficiently fast memory buffer can solve this problem. It only needs to place the data in the buffer and write it out as the hardware becomes free. Fortunately, most modern applications satisfy this condition of infrequent writes. Programs such as Spark and Redis rarely write to memory.
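The buffering idea can be sketched as a toy simulation: writes land in a small, fast buffer and are drained to the slow medium at a fixed rate. This is only an illustration under assumed parameters; `BufferedWriter`, `simulate`, the buffer capacity, and the drain rate are hypothetical and do not describe any real controller.

```python
from collections import deque


class BufferedWriter:
    """Toy model: a bounded fast buffer in front of a slow write medium."""

    def __init__(self, capacity, drain_per_tick):
        self.capacity = capacity              # buffer slots (assumed)
        self.drain_per_tick = drain_per_tick  # writes flushed per tick (assumed)
        self.pending = deque()
        self.flushed = []
        self.stalls = 0

    def write(self, item):
        """Accept a write if there is room; otherwise count a stall."""
        if len(self.pending) >= self.capacity:
            self.stalls += 1
            return False
        self.pending.append(item)
        return True

    def tick(self):
        """Drain up to drain_per_tick buffered writes to the slow medium."""
        for _ in range(min(self.drain_per_tick, len(self.pending))):
            self.flushed.append(self.pending.popleft())


def simulate(writes_per_tick, ticks, capacity=4, drain_per_tick=1):
    """Return how many writes were refused because the buffer was full."""
    buf = BufferedWriter(capacity, drain_per_tick)
    for t in range(ticks):
        for i in range(writes_per_tick):
            buf.write((t, i))
        buf.tick()
    return buf.stalls


if __name__ == "__main__":
    print("infrequent writes, stalls:", simulate(writes_per_tick=1, ticks=100))
    print("frequent writes, stalls:", simulate(writes_per_tick=3, ticks=100))
```

As long as writes arrive no faster than the buffer drains, nothing stalls; once they arrive faster, the buffer fills and the unpredictable write latency becomes visible again.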

Even in older SQL applications, writes occur less often than data center managers tend to assume. Shacher Fienblit, the chief engineer of Kaminario, found that 97% of users write their entire data set less than once a day. With a good controller, he says, you can keep the write load at 15% of all data received in a day. Write buffers can handle this.

Conclusion

With the arrival of these technologies, data center architecture will most likely skip the SSD era altogether, along with its problems of disk driver software and new memory connection schemes, and enter a new, unknown world. In that world, software will treat all memory as main memory. There will be no disk drivers, storage APIs, or tier virtualization: all of these functions will move into software-defined controllers and switches.

Server CPU SRAM caches, DRAM, high-speed non-volatile memory, and networked SSD flash will be combined, controller to controller, into a topology that can change with the applications. Most of the data center's data will then be stored closer to the CPU.

Terabytes of data will begin to be transmitted directly through the DDR memory bus. Low-cost, high-capacity disk drives will take their place as storage of “cold” data. This architecture will be very different from the existing ones.

Source: https://habr.com/ru/post/273939/
