Comparison of free software for SQUID traffic accounting

Recently, our company needed to migrate its proxy server from MS ISA Server to free software. Choosing the proxy itself did not take long (squid). Following a few tutorials, we set up the proxy for our needs. Difficulties arose when choosing a program for traffic accounting.

The requirements were:

1) free software

2) the ability to process logs from several proxies on a single server

3) the ability to build standard reports and send them by e-mail or publish them as a link on a web server

4) building reports for individual departments and sending them to department heads, or giving access to them via a link on a web server


The information developers provide about their traffic accounting programs is rather scant: a short description of the program's purpose plus, optionally, a couple of screenshots. Of course, any such program will calculate traffic per day, week or month, but the additional features that set one program apart from the others are not described.

So I decided to write this post, in which I will try to describe the capabilities and shortcomings of these programs, as well as some of their key features, to help anyone who has to make the same choice.

Our candidates:

Sarg

free-sa

lightsquid

SquidAnalyzer

ScreenSquid

So let's get started.

SARG (Squid Analysis Report Generator) is the eldest of the still-maintained programs in this class (development started in 1998; its former name was sqmgrlog). The latest release (version 2.3.10) dates from April 2015. Several improvements and fixes have been made since then; they are available in the master branch, which can be downloaded with git from SourceForge.

The program is run manually or from cron. You can run it without parameters (all parameters are then taken from the sarg.conf configuration file), or pass parameters on the command line or from a script, for example the dates for which the report is built.
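As an illustration of running SARG for an explicit date range from a cron-driven script, the sketch below only builds the command line. It assumes sarg's `-f` (configuration file) and `-d` (date range written as dd/mm/yyyy-dd/mm/yyyy) options as described in its man page; the configuration path and the helper names are hypothetical, so check them against your installation.

```python
from __future__ import annotations

import datetime as dt

# Hypothetical default; adjust to where your distribution installs sarg.conf.
SARG_CONF = "/etc/sarg/sarg.conf"


def sarg_command(start: dt.date, end: dt.date, config: str = SARG_CONF) -> list[str]:
    """Build a sarg command line for the given date range (inclusive)."""
    fmt = "%d/%m/%Y"  # assumed -d format: dd/mm/yyyy-dd/mm/yyyy
    return ["sarg", "-f", config, "-d", f"{start.strftime(fmt)}-{end.strftime(fmt)}"]


def yesterday_command(today: dt.date, config: str = SARG_CONF) -> list[str]:
    """Command for a nightly cron job that reports on the previous day."""
    day = today - dt.timedelta(days=1)
    return sarg_command(day, day, config)


# Usage (this actually runs sarg, so it is left as a comment):
#   import subprocess
#   subprocess.run(yesterday_command(dt.date.today()), check=True)
```

Building the argument list separately from running it makes the date logic easy to check without sarg installed.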

Reports are generated as HTML pages and placed in the /var/www/html/squid-reports directory (by default). A parameter sets how many reports are kept in the directory, for example 10 daily and 20 weekly; older ones are deleted automatically.
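The retention rule (keep the newest N reports of a given kind, delete the rest) fits in a few lines. This is a hypothetical model of the behaviour described above, not SARG's code. The report names and dates are made up.

```python
from __future__ import annotations

import datetime as dt


def reports_to_delete(reports: dict[str, dt.date], keep: int) -> list[str]:
    """Names of reports beyond the newest `keep`, oldest first.

    Hypothetical model of the retention setting, not SARG's implementation.
    """
    if keep < 0:
        raise ValueError("keep must be non-negative")
    newest_first = sorted(reports, key=reports.__getitem__, reverse=True)
    return sorted(newest_first[keep:], key=reports.__getitem__)


# Example: 12 daily reports with a limit of 10, so the two oldest go.
daily = {f"daily-{d:02d}": dt.date(2015, 4, d) for d in range(1, 13)}
print(reports_to_delete(daily, keep=10))  # ['daily-01', 'daily-02']
```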

You can use several configuration files with different parameters for different kinds of reports. For example, for daily reports you can create a config that disables graphs and writes the output to a different directory.

free-sa is a Russian-developed program. There have been no new versions since November 2013. The author states that it generates reports faster than competing programs and that finished reports take up less space. Let's check that!

Of all the candidates, this program works most like SARG, and the author himself compares it with SARG (for example, here). So we will compare the two.

It is nice that several themes are available. A theme consists of 3 CSS files and 4 matching PNG icons.

Reports really are generated faster. The daily report took 4 minutes 30 seconds, versus 12 minutes for SARG. Disk usage, however, was not in free-sa's favour: the reports took 440 MB (free-sa) versus 336 MB (SARG).

Let's make the task harder: process a 3.2 GB log covering 10 days, with 26.3 million lines.

free-sa was again faster, finishing in 46 minutes; its report takes 3.7 GB on disk. SARG took 1 hour 10 minutes, and its report takes 2.5 GB.
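Putting the measurements above side by side makes the size of free-sa's advantage explicit. The numbers are exactly those given in the text. Sizes stay in the units reported (MB for the daily run, GB for the ten-day run). Only ratios are computed, so the units cancel out.

```python
from __future__ import annotations

# Run times in minutes and report sizes as measured above.
measurements = {
    "daily": {"sarg_min": 12.0, "freesa_min": 4.5, "sarg_size": 336, "freesa_size": 440},
    "10 days": {"sarg_min": 70.0, "freesa_min": 46.0, "sarg_size": 2.5, "freesa_size": 3.7},
}


def compare(m: dict) -> tuple[float, float]:
    speedup = m["sarg_min"] / m["freesa_min"]       # > 1: free-sa is faster
    size_ratio = m["freesa_size"] / m["sarg_size"]  # > 1: free-sa uses more disk
    return round(speedup, 2), round(size_ratio, 2)


for period, m in measurements.items():
    speedup, size_ratio = compare(m)
    print(f"{period}: free-sa {speedup}x faster, reports {size_ratio}x larger")
```

This prints `daily: free-sa 2.67x faster, reports 1.31x larger` and `10 days: free-sa 1.52x faster, reports 1.48x larger`. The speed advantage shrinks on the larger log, while the disk-space penalty grows.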

Both reports, however, are inconvenient to read. Who wants to work out by hand which domain is more popular, vk.com or googlevideo.com, and add up the traffic of all their subdomains? If SARG is configured to keep only 2nd-level domains, the report takes about the same time to build, but it now occupies 1.5 GB of disk space (the daily report shrank from 336 MB to 192 MB).

lightsquid is perhaps the most popular traffic counter. It is fast, and its reports take little disk space. Although it has not been updated for a long time, I still decided to cover its capabilities in this article.

lightsquid works differently. It reads the log and creates a set of data files, which it then uses to build web pages. In other words, there are no pre-built reports; pages are generated from the data on the fly. The advantages are obvious. To get a report you do not need to parse all the logs for the period; it is enough to feed lightsquid the log once a day. You can also run it from cron several times a day to add new data quickly.

It has drawbacks, though. Logs from different servers cannot be processed and collected in one place: processing a log for a given day from another server erases the existing statistics for that day.
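The overwrite problem can be modelled in a few lines. The class below is a toy, not lightsquid's storage format. Each day's data is a table of bytes per user. `load_day` rebuilds that table as described above. `merge_day` shows the additive behaviour that combining logs from several proxies would require.

```python
from collections import Counter


class DailyStore:
    """Toy model of per-day data files: one traffic table per date.

    Assumption for illustration: as described for lightsquid, loading a log
    for a day rebuilds that day's table and discards what was there before.
    """

    def __init__(self):
        self.days = {}  # date string -> Counter(user -> bytes)

    def load_day(self, day, records):
        """Rebuild the table for `day` from (user, bytes) records."""
        table = Counter()
        for user, nbytes in records:
            table[user] += nbytes
        self.days[day] = table  # overwrite: earlier data for this day is lost

    def merge_day(self, day, records):
        """What combining several proxies would need: add to the table instead."""
        table = self.days.setdefault(day, Counter())
        for user, nbytes in records:
            table[user] += nbytes


store = DailyStore()
store.load_day("2015-12-01", [("alice", 100), ("bob", 50)])  # proxy 1
store.load_day("2015-12-01", [("carol", 70)])                # proxy 2 replaces proxy 1
print(dict(store.days["2015-12-01"]))  # {'carol': 70}
```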

There is also a strange restriction. lightsquid accepts both uncompressed and compressed log files (gz specifically), but compressed files must be named access.log.X.gz; files named access.log-YYYYMMDD.gz are not accepted.

With a few simple manipulations we get around this restriction and see what happens.
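The exact manipulations are not described here. One possible approach, sketched under that assumption, is to expose the dated files under lightsquid-style names via symlinks in a separate directory. The helper names and the numbering scheme (newest file = `access.log.1.gz`, the way logrotate usually numbers rotated files) are hypothetical.

```python
import re
from pathlib import Path

DATED = re.compile(r"^access\.log-(\d{8})\.gz$")


def lightsquid_names(filenames):
    """Map access.log-YYYYMMDD.gz names to access.log.N.gz names (newest = 1).

    Hypothetical numbering that mimics logrotate; names that do not match
    the dated pattern are ignored.
    """
    dated = []
    for name in filenames:
        m = DATED.match(name)
        if m:
            dated.append((m.group(1), name))
    dated.sort(reverse=True)  # YYYYMMDD strings sort chronologically
    return {name: f"access.log.{i}.gz" for i, (_, name) in enumerate(dated, start=1)}


def link_for_lightsquid(src_dir, dst_dir):
    """Create symlinks with lightsquid-friendly names in dst_dir (POSIX)."""
    src, dst = Path(src_dir).resolve(), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for old, new in lightsquid_names(p.name for p in src.iterdir()).items():
        link = dst / new
        if link.is_symlink() or link.exists():
            link.unlink()  # refresh links left over from a previous run
        link.symlink_to(src / old)
```

Symlinks leave the original rotated files untouched, so squid's own log rotation keeps working.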

SquidAnalyzer is similar to lightsquid and is also written in Perl, with a nicer design. The latest version, 6.4, was released in mid-December of this year with many improvements. Program website: squidanalyzer.darold.net.

SquidAnalyzer can use multiple CPUs (the -j option) to build reports faster, but only for uncompressed files. Compressed files (gz is supported) are processed on a single core.
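Here is why only uncompressed files can be spread across cores. A plain log can be cut into byte ranges at line boundaries, and each range can be parsed independently. A gzip stream, by contrast, has to be decompressed from the start. The function below is a generic sketch of that splitting step, working on an in-memory buffer for simplicity. It is not SquidAnalyzer's code.

```python
from __future__ import annotations


def line_aligned_chunks(data: bytes, workers: int) -> list[tuple[int, int]]:
    """Split a plain log into roughly equal byte ranges ending on newlines.

    Each (start, end) range can be handed to a separate worker process.
    """
    if workers < 1:
        raise ValueError("workers must be >= 1")
    size = len(data)
    bounds = [0]
    for i in range(1, workers):
        pos = max(size * i // workers, bounds[-1])
        nl = data.find(b"\n", pos)
        # Move the cut just past the next newline, or to EOF if there is none.
        bounds.append(size if nl == -1 else nl + 1)
    bounds.append(size)
    return [(a, b) for a, b in zip(bounds, bounds[1:]) if a < b]


log = b"".join(f"line {i}\n".encode() for i in range(10))
print(line_aligned_chunks(log, 3))  # [(0, 28), (28, 49), (49, 70)]
```

With a real file, the same cut points would be found by seeking to each offset and reading to the next newline.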

Another comparison with lightsquid: the same report took about a day to build on the same server and occupies 3.7 GB on disk.

Like lightsquid, SquidAnalyzer cannot combine two or more log files from different servers for the same period.

ScreenSquid works differently again. The log is imported into a MySQL database, and the web interface queries the data from it. The database holding the ten-day log mentioned earlier takes up 1.5 GB.
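Here is a minimal sketch of the import-then-query approach, assuming squid's native access.log format. It uses Python's built-in `sqlite3` as a stand-in for MySQL. The table layout and function names are hypothetical; ScreenSquid's real schema is not described here.

```python
import sqlite3


def parse_native(line):
    """Parse one line of squid's native access.log format, or return None."""
    f = line.split()
    if len(f) < 10:
        return None  # skip blank or truncated lines
    result, _, status = f[3].partition("/")
    try:
        return {
            "ts": float(f[0]), "elapsed_ms": int(f[1]), "client": f[2],
            "result": result, "status": int(status) if status.isdigit() else None,
            "bytes": int(f[4]), "method": f[5], "url": f[6], "login": f[7],
        }
    except ValueError:
        return None  # non-numeric fields: treat the line as malformed


def import_log(conn, lines):
    """Load parsed lines into an `access` table; return the number of rows stored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS access (ts REAL, elapsed_ms INTEGER, client TEXT,"
        " result TEXT, status INTEGER, bytes INTEGER, method TEXT, url TEXT, login TEXT)"
    )
    rows = [r for r in map(parse_native, lines) if r is not None]
    conn.executemany(
        "INSERT INTO access VALUES (:ts, :elapsed_ms, :client, :result, :status,"
        " :bytes, :method, :url, :login)",
        rows,
    )
    conn.commit()
    return len(rows)


def top_clients(conn, limit=10):
    """The web-interface side: aggregate on demand instead of pre-building pages."""
    return conn.execute(
        "SELECT client, SUM(bytes) AS total FROM access"
        " GROUP BY client ORDER BY total DESC LIMIT ?",
        (limit,),
    ).fetchall()
```

Storing raw rows makes any report a query away, at the cost of database size (1.5 GB for the ten-day log above).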

That's all. I hope this material proves useful. Thank you for your attention.


Digression

A program's "age" and the date of its latest release are not comparison criteria; they are given for reference only. I will try to compare only functionality. I also deliberately left out very old programs that have not been updated for many years.

Logs are fed to the analyzer exactly as squid wrote them, without any preprocessing. Handling of malformed entries and any transformation of log fields must be done by the analyzer itself and show up only in the report. This article is not a setup guide; configuration and usage may be covered in separate articles.


SARG - Squid Analysis Report Generator


The main report page lists, for each report, the period it covers (set in the report parameters), its creation date, the number of unique users, the total traffic for the period and the average traffic per user.

Selecting one of the periods opens the topusers report for that period. Below are descriptions and examples of all the report types SARG can produce.

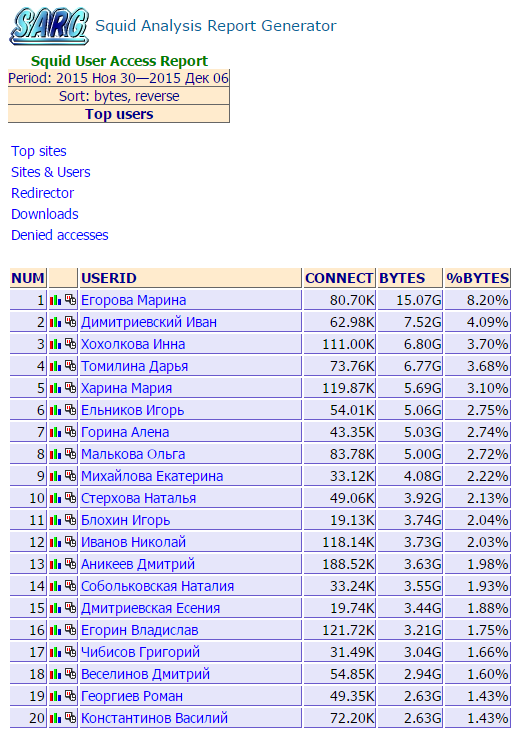

1) topusers: total traffic per user. A user is either the host name of a machine accessing the Internet or a user login. Example report:

IP addresses are shown here. If the corresponding option is enabled, IP addresses are resolved to domain names.

Using authentication? Account names are converted to real names:

The appearance can be customized in the CSS file. The output columns are configurable too, so unneeded ones can be removed. Column sorting is supported (sorttable.js).

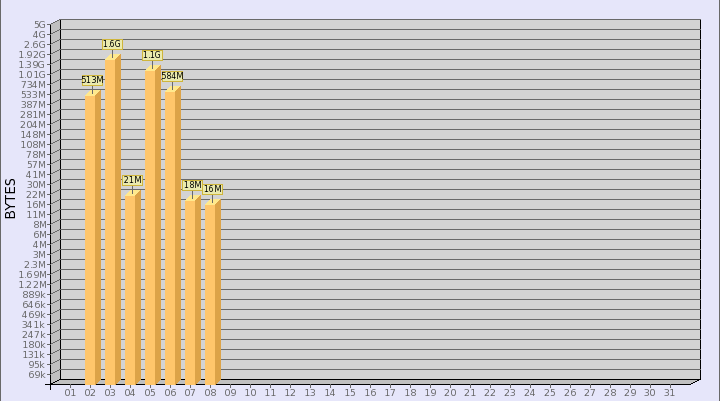

Clicking the graph icon on the left shows a graph like this:

Clicking the icon on the right opens report 5).

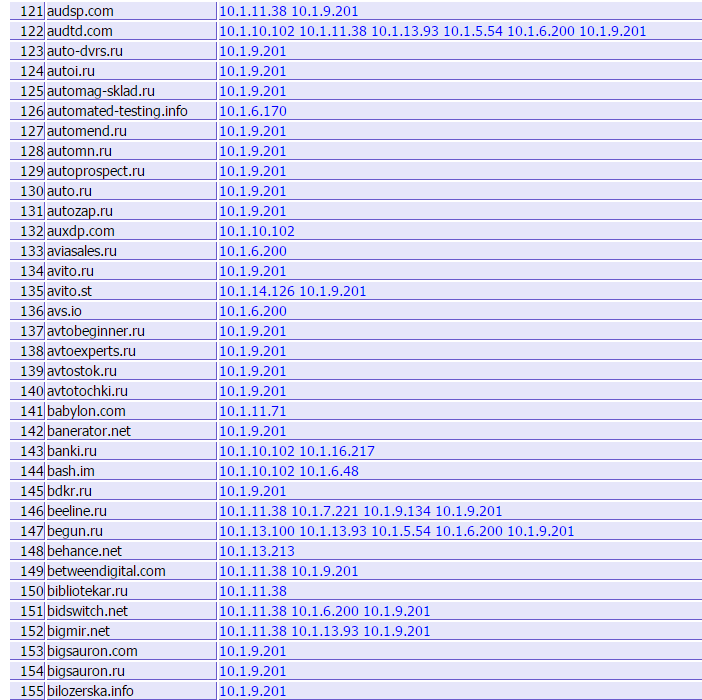

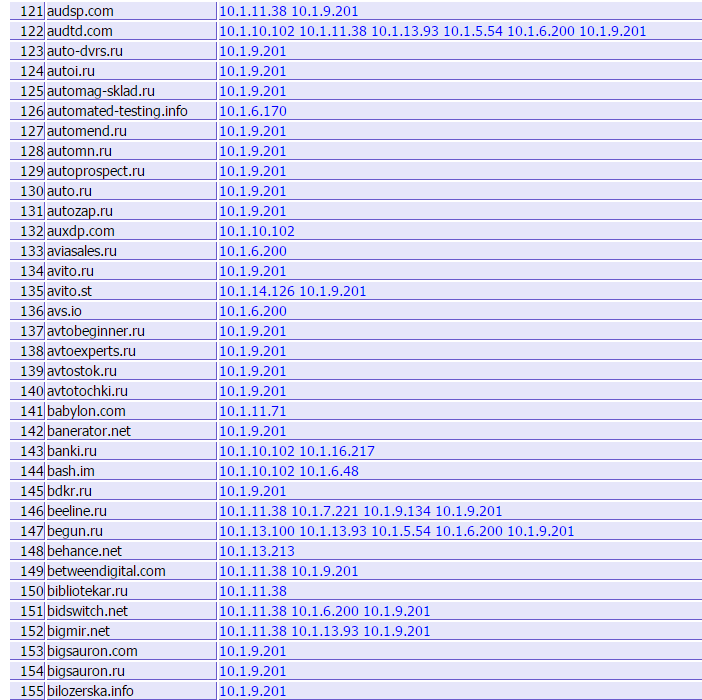

2) topsites: the most popular sites. By default the 100 most popular sites are listed (the number is configurable). Using regular expressions or aliases, you can merge the traffic of 3rd and higher-level domains into their 2nd-level domain (as in the screenshot) or define any other rule. Rules can be set per domain, for example merging yandex.ru and mail.ru only down to the 3rd level. The meaning of the fields is self-explanatory.

3) sites_users: who visited a particular site. It is simple: the domain name and who accessed it. Traffic is not shown here.

4) users_sites: the sites visited by each user.

Everything is clear here too. Clicking the icon in the first column opens report 8).

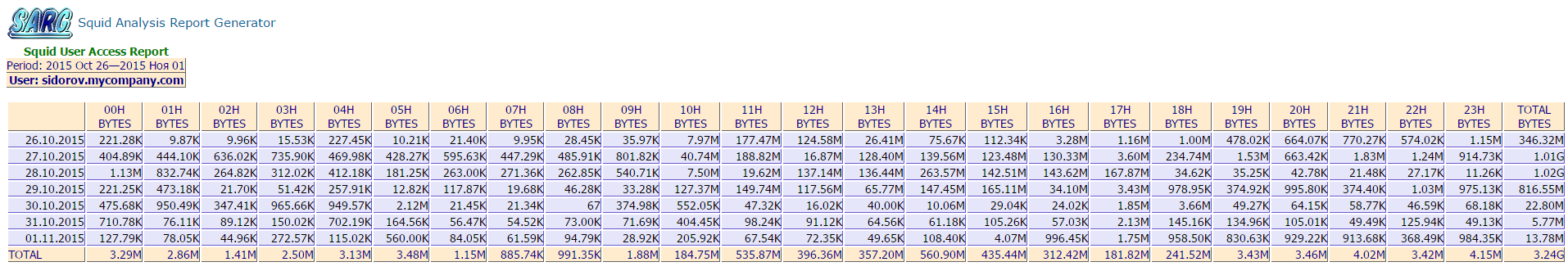

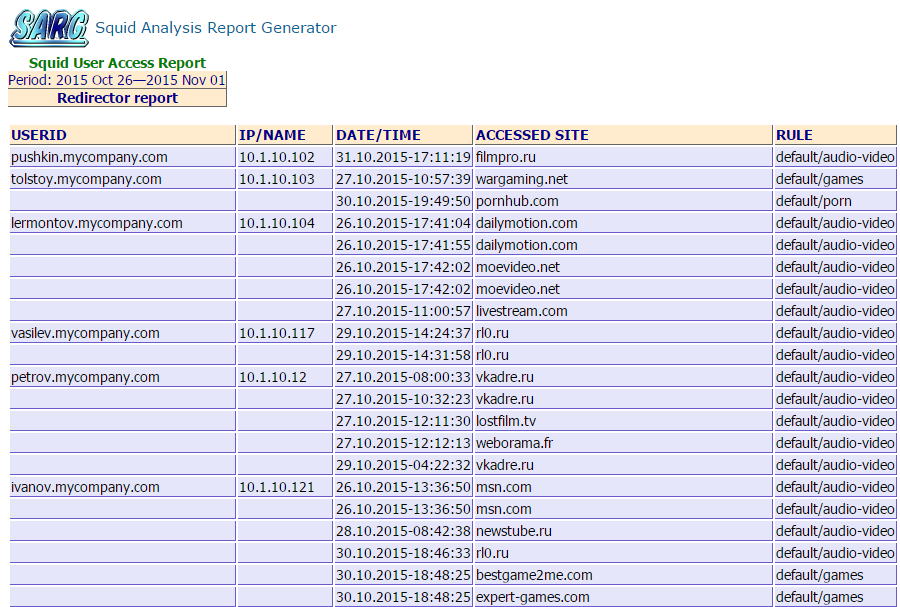

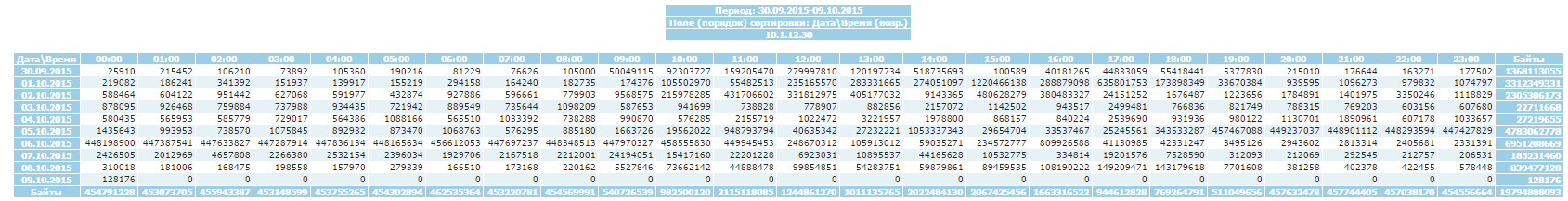

5) date_time: each user's traffic broken down by day and hour.

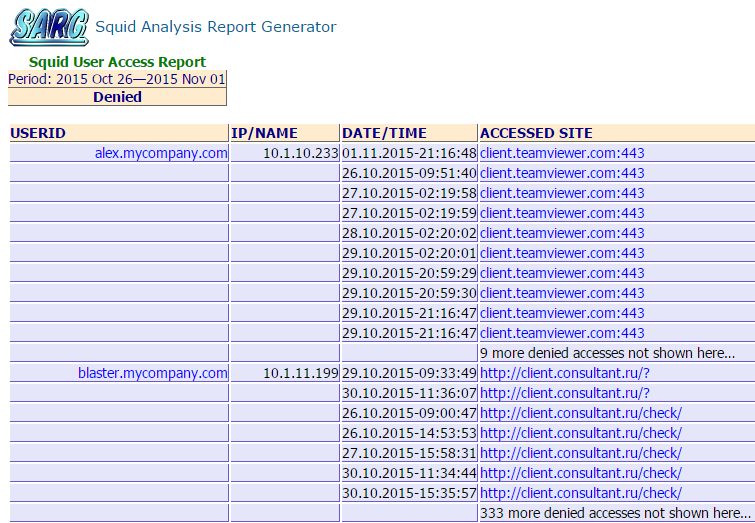

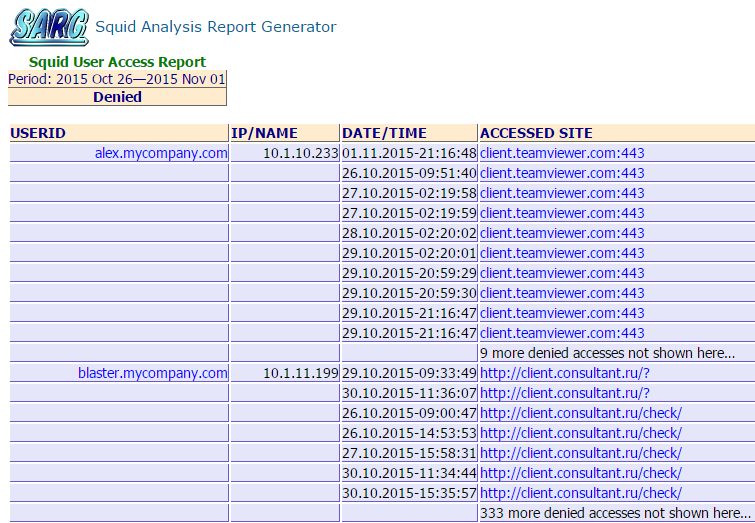

6) denied: requests blocked by squid. It shows who was denied access, when, and to what. The number of entries is configurable (10 by default).

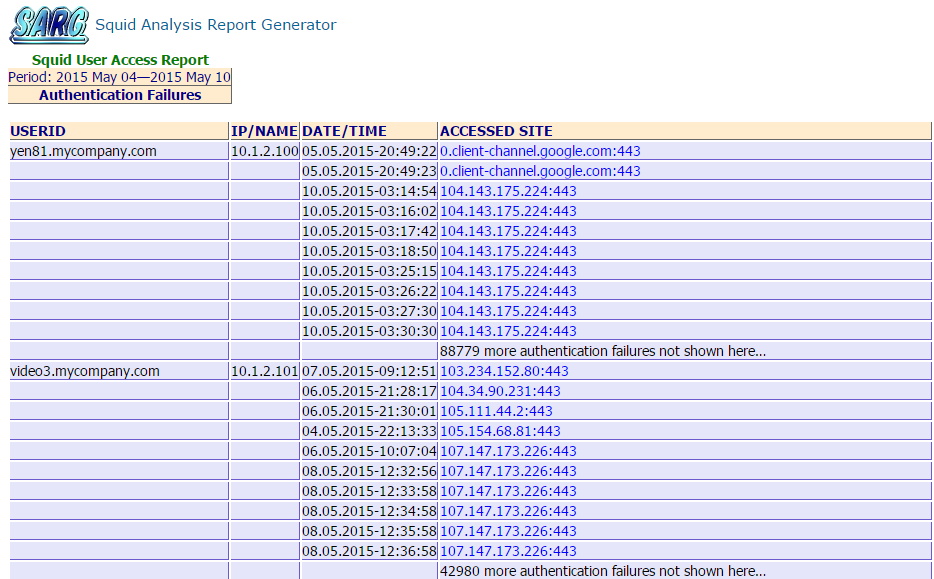

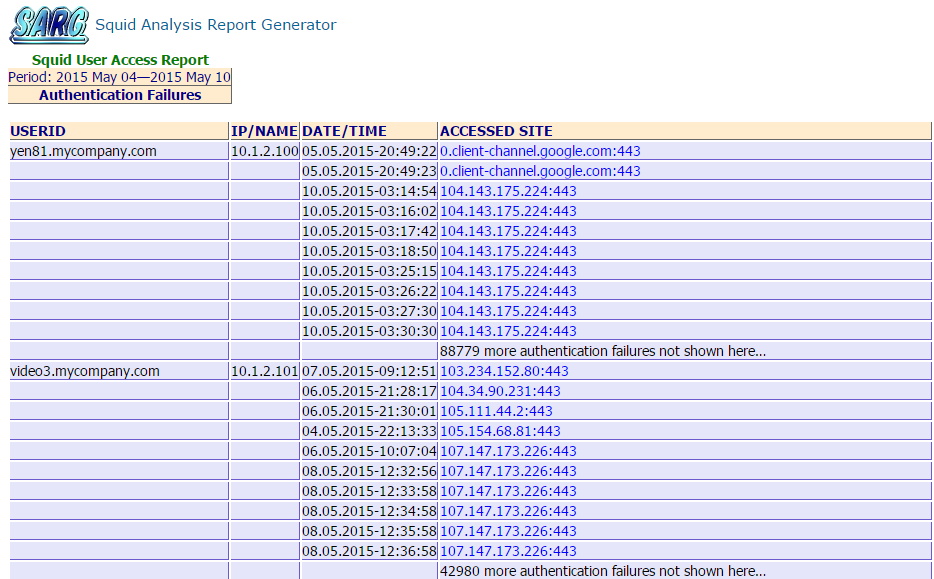

7) auth_failures: authentication failures (HTTP 407).

The number of entries is configured (10 by default).

8) site_user_time_date: shows when the user visited which site and from which machine.

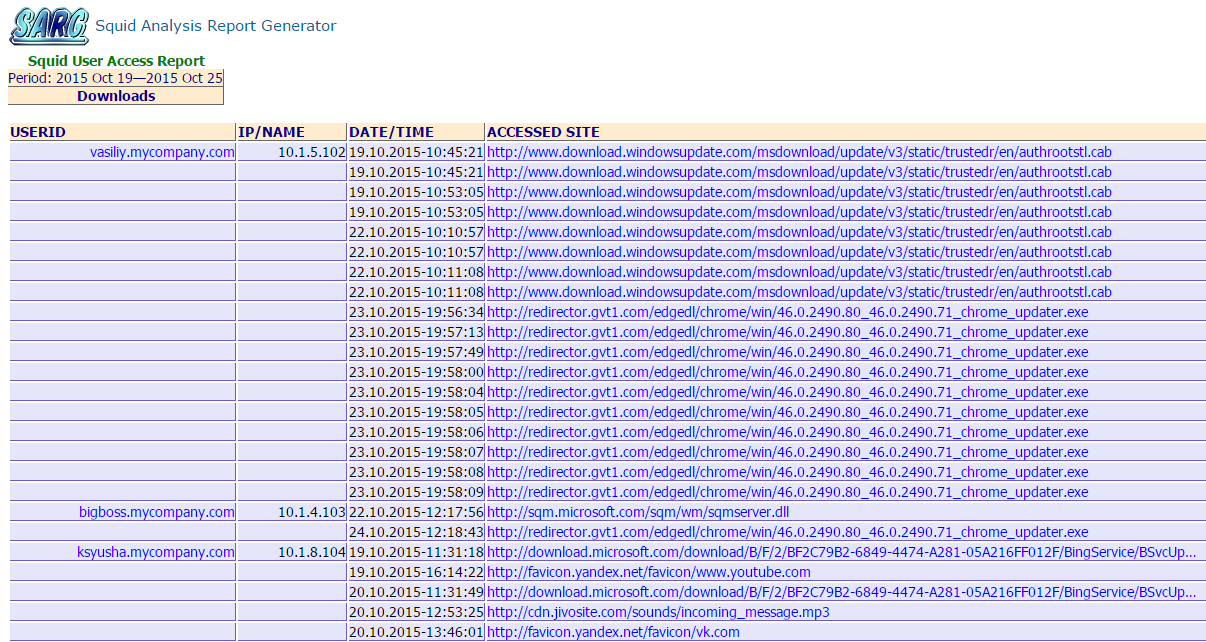

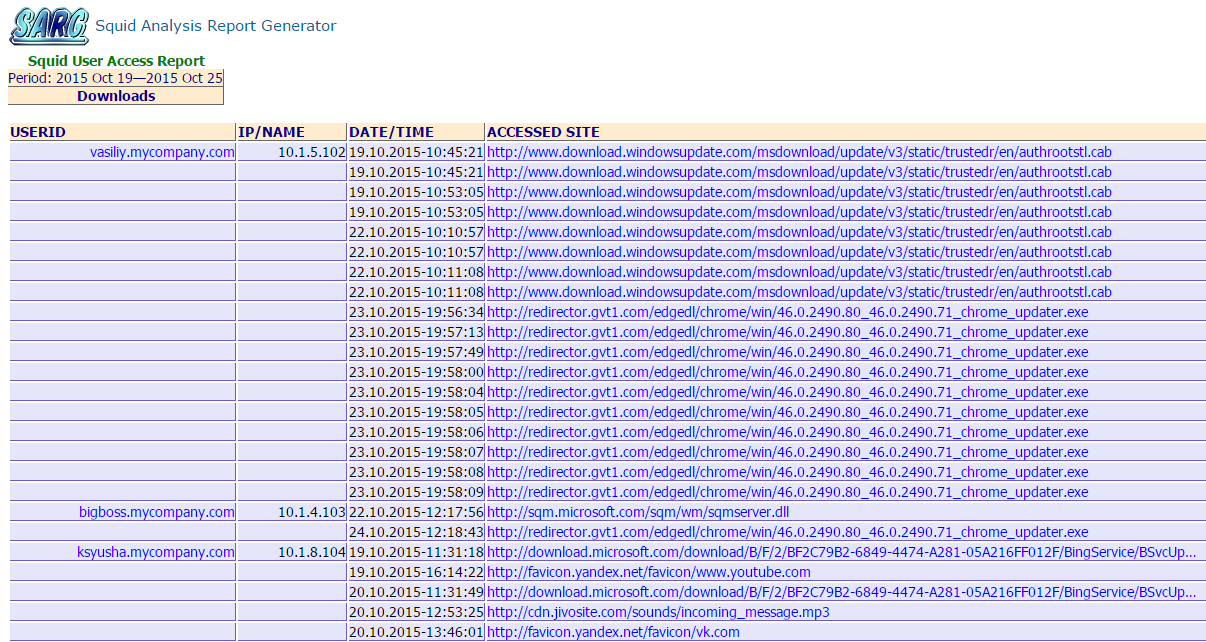

9) downloads: the list of downloads.

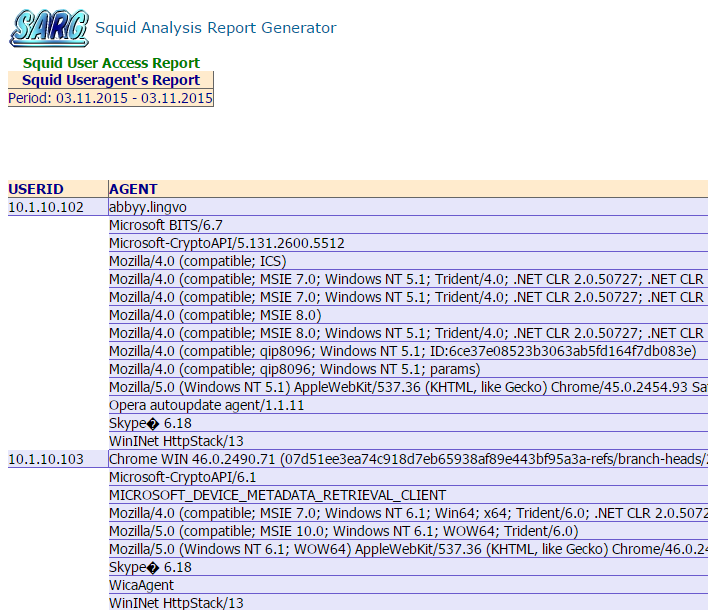

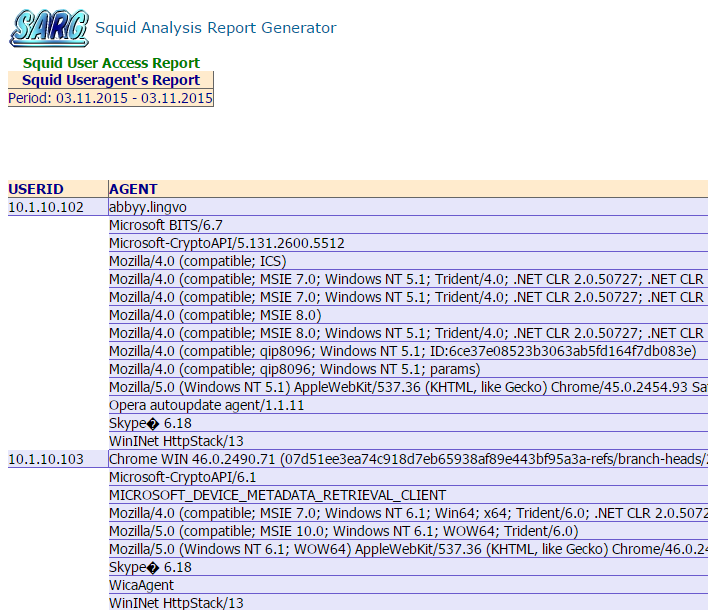

10) useragent: a report on the client programs used.

The first part of the report shows each IP address and the user agents it used.

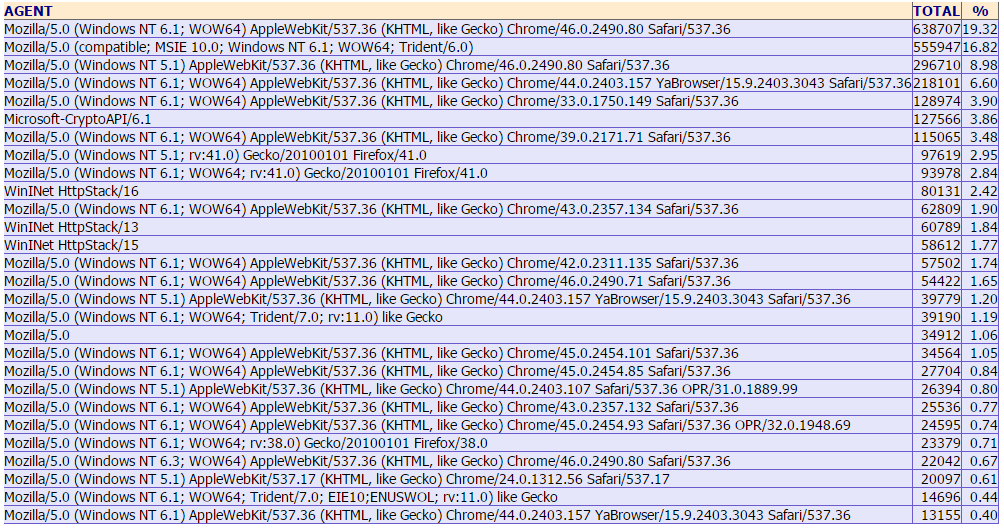

The second part is an overall list of user agents with a percentage breakdown by version.

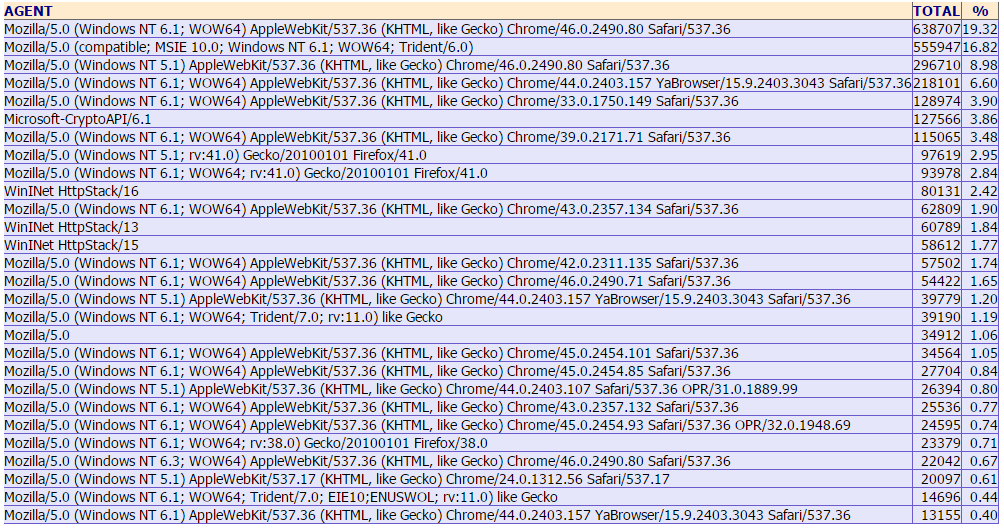

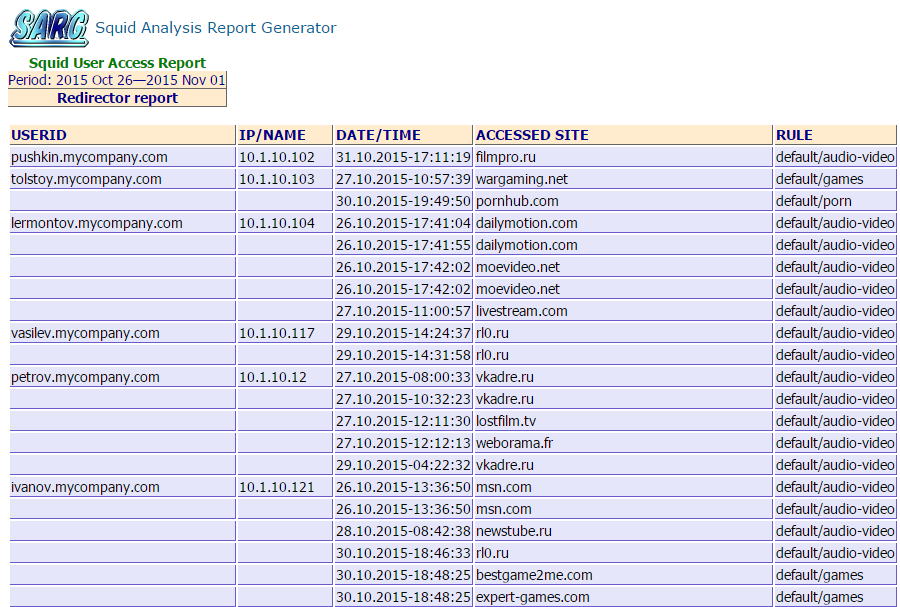

11) redirector: shows whose access was blocked by a URL blocker (redirector). squidGuard, DansGuardian and Rejik are supported, and the log format is configurable.
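To make the definitions of a few of these reports concrete, here is a sketch that computes topusers, denied and auth_failures from squid's native log format. It is an approximation, not SARG's code. Field positions follow squid's native format. squid logs authentication challenges as TCP_DENIED/407, and this sketch assumes they belong in auth_failures rather than in denied; whether SARG splits them the same way is not checked here.

```python
from collections import Counter


def sarg_like_summary(lines, top=10):
    """Approximate three SARG reports from squid native-format log lines.

    topusers: bytes per user (login if present, else client address);
    denied: first `top` TCP_DENIED requests other than 407 challenges;
    auth_failures: first `top` requests answered with HTTP 407.
    """
    traffic = Counter()
    denied, auth_failures = [], []
    for line in lines:
        f = line.split()
        if len(f) < 10:
            continue  # skip malformed lines
        client, code, size, url, login = f[2], f[3], f[4], f[6], f[7]
        result, _, status = code.partition("/")
        user = login if login != "-" else client
        if size.isdigit():
            traffic[user] += int(size)
        if status == "407":
            if len(auth_failures) < top:
                auth_failures.append((client, url))
        elif "DENIED" in result and len(denied) < top:
            denied.append((user, url))
    return traffic.most_common(), denied, auth_failures
```

The `top` argument mirrors the configurable entry count (10 by default) mentioned for the denied and auth_failures reports.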

SARG has more than 120 settings. It also offers:

- multilingual support (100% of messages are translated into Russian);
- regular expressions;
- LDAP integration;
- letting users see only their own reports on the web server (via .htaccess);
- converting logs into its own format to save space;
- exporting reports to a text file for loading into a database;
- tools for squid log files (splitting one or more log files by day).

Reports can be built for a specified set of groups, for example a separate report for one department. Access to the web page with that department's reports can then be given to its managers, for example through the web server's access controls.

Reports can be sent by e-mail, but currently only the topusers report is supported, and the message itself is plain text without HTML.

You can exclude specific users or hosts from processing. User aliases merge the traffic of several accounts into one, for example all outsourced staff. Site aliases work the same way, for example grouping several social networks under one alias; all parameters for those domains (number of connections, traffic volume, processing time) are then summed. Alternatively, a regular expression can strip domain levels above the 3rd.
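The alias rules described here can be sketched as follows. All names, aliases and rules are hypothetical examples, and this is not SARG's configuration syntax. Accounts are mapped to a shared user alias. Hosts are cut down to the 2nd level, or to the 3rd for selected domains as in the yandex.ru/mail.ru example. The resulting sites can then be merged under a site alias, with traffic summed.

```python
import re
from collections import Counter

# Hypothetical alias rules, in the spirit of the SARG options described above.
USER_ALIASES = {"ivanov": "sales", "petrov": "sales"}          # several accounts -> one
SITE_ALIASES = {"vk.com": "social", "facebook.com": "social"}  # several sites -> one
KEEP_LEVELS = {"yandex.ru": 3, "mail.ru": 3}                   # per-domain exceptions


def collapse(host, default_levels=2):
    """Cut a host name down to its N rightmost labels (2, or per-domain)."""
    host = host.split(":", 1)[0].lower().rstrip(".")  # drop :port from CONNECT targets
    if re.fullmatch(r"[\d.]+", host):
        return host  # leave IPv4 addresses untouched
    labels = host.split(".")
    levels = KEEP_LEVELS.get(".".join(labels[-2:]), default_levels)
    return ".".join(labels[-levels:])


def aggregate(records):
    """(user, host, bytes) records -> bytes per (user alias, site alias)."""
    totals = Counter()
    for user, host, nbytes in records:
        site = collapse(host)
        totals[(USER_ALIASES.get(user, user), SITE_ALIASES.get(site, site))] += nbytes
    return totals
```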

SARG can write to separate files the lists of users who exceeded given traffic volumes during the period. The output is several files, for example userlimit_1G.txt (over 1 GB), userlimit_5G.txt (over 5 GB) and so on, up to 16 limits in total.
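Producing the per-limit user lists is a simple threshold filter. The sketch below assumes per-user byte totals for the period are already known. File names follow the examples above; the 1024-based gigabyte and the function names are assumptions.

```python
from __future__ import annotations

from pathlib import Path

GIB = 1024 ** 3


def limit_files(totals: dict[str, int], limits_gb=(1, 5), max_limits=16) -> dict[str, list[str]]:
    """Users whose traffic for the period exceeds each limit, one list per file name.

    Hypothetical reconstruction: names follow the userlimit_<N>G.txt pattern
    mentioned above; 1G is taken as 1024**3 bytes.
    """
    if len(limits_gb) > max_limits:
        raise ValueError(f"at most {max_limits} limits are supported")
    return {
        f"userlimit_{gb}G.txt": sorted(u for u, b in totals.items() if b > gb * GIB)
        for gb in limits_gb
    }


def write_limit_files(files: dict[str, list[str]], directory=".") -> None:
    """Write one user name per line into each file."""
    for name, users in files.items():
        Path(directory, name).write_text("".join(f"{u}\n" for u in users))
```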

SARG also ships a couple of PHP pages: one shows current connections to squid, the other adds domain names to squidGuard block lists.

Overall, it is a very flexible and powerful tool that is easy to learn. All parameters are described in the default configuration file. The project's wiki on SourceForge describes every parameter in more detail, grouped by topic, with usage examples.


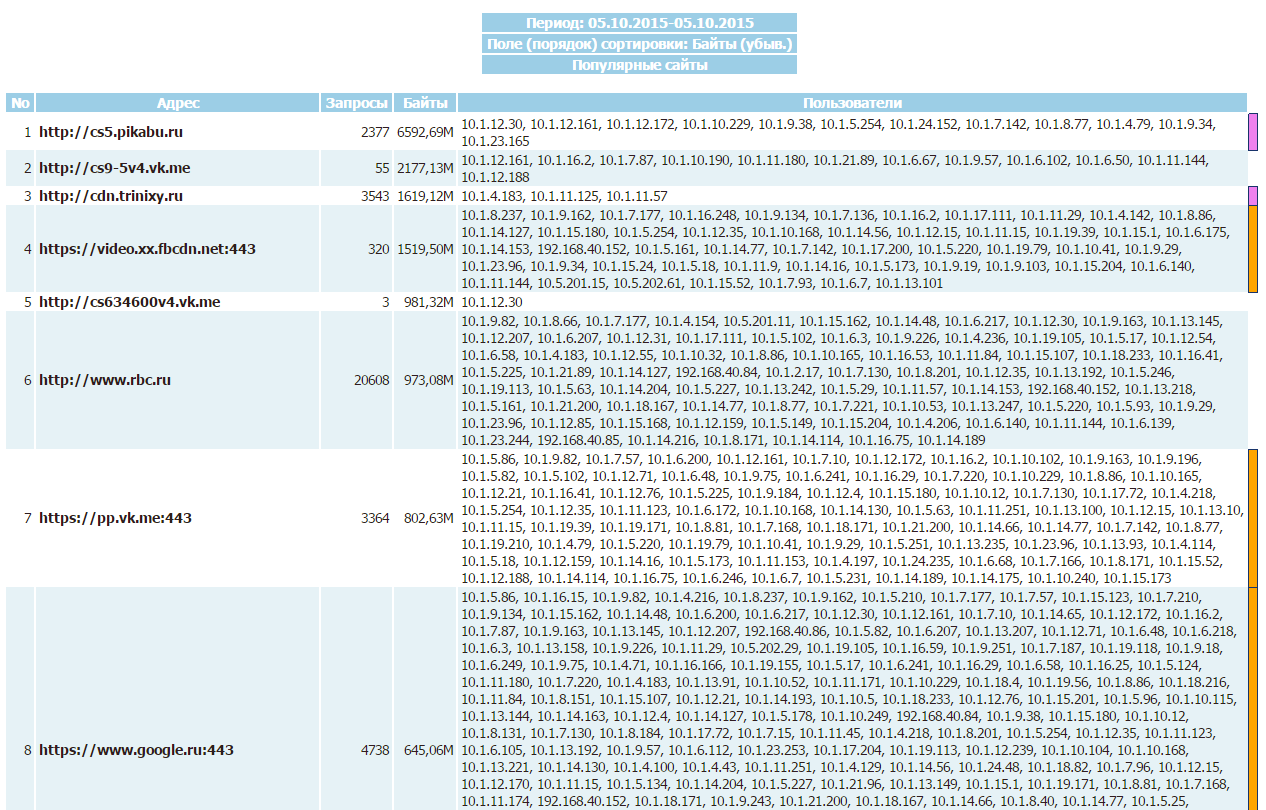


free-sa


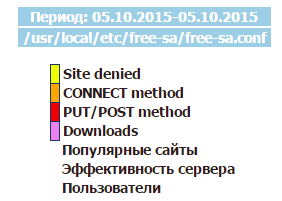

The main page looks something like this (the blues theme is selected):

Frankly, it is unclear why the years and months are listed: nothing happens when you click them. You can type something into the search field, but again nothing happens. You can, however, select the period of interest.

List of blocked URLs:

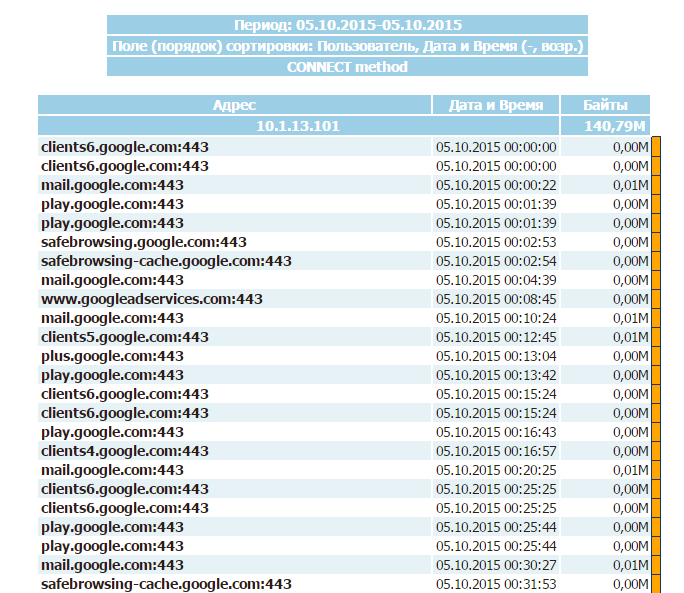

CONNECT method report:

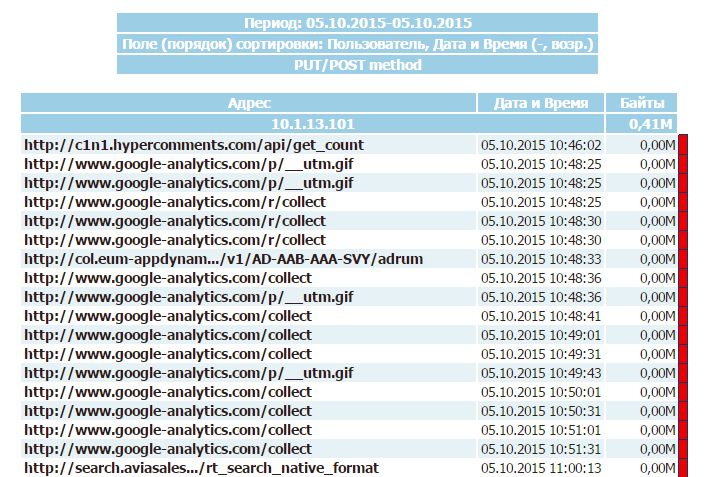

PUT/POST method report:

Load Report:

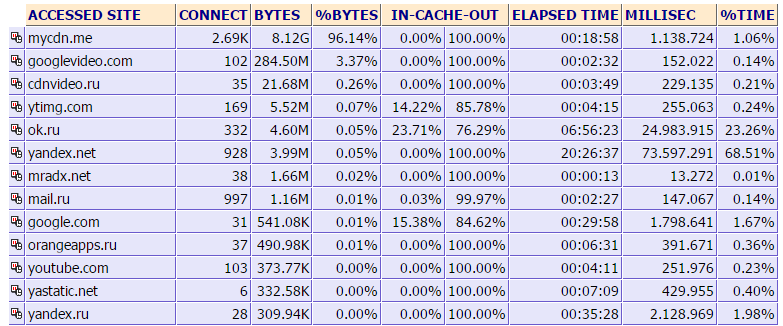

Popular sites:

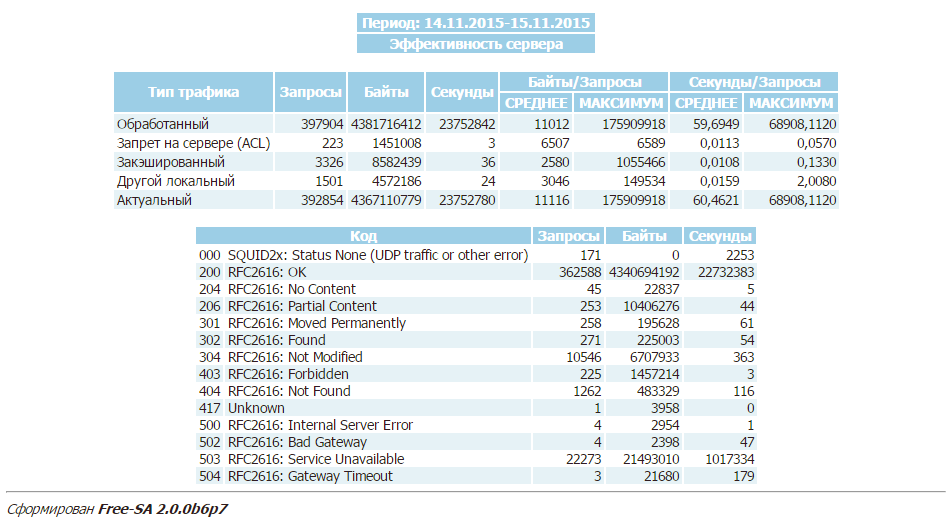

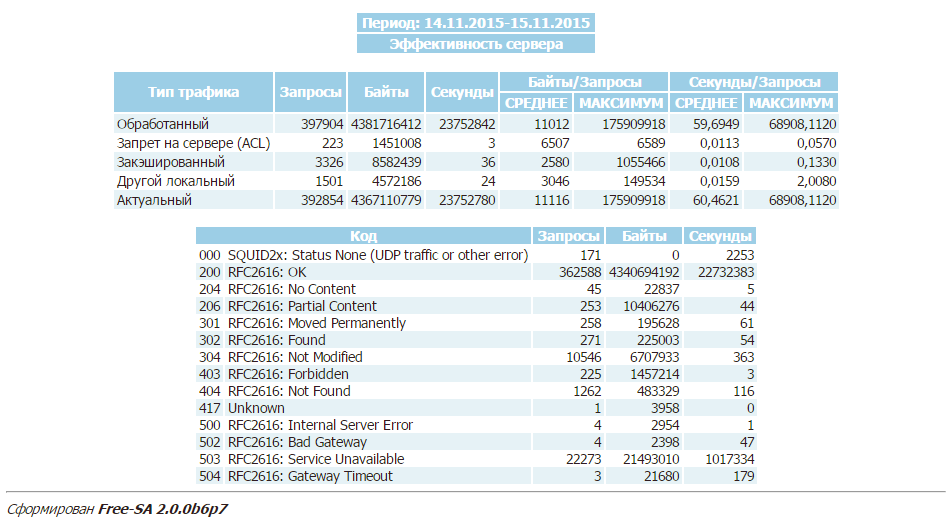

The proxy server efficiency report looked interesting:

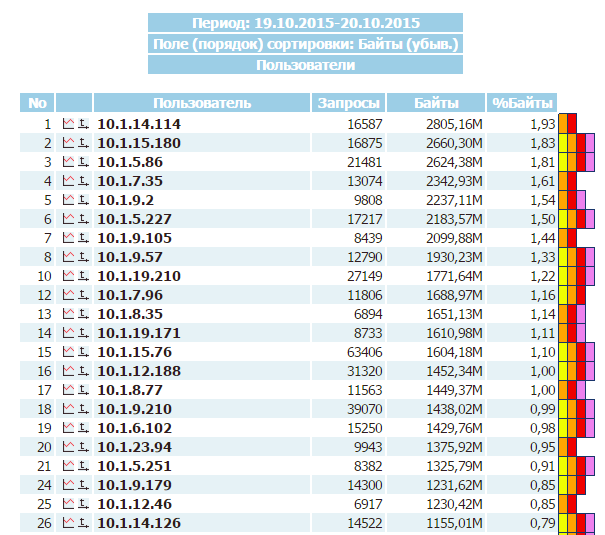

User report:

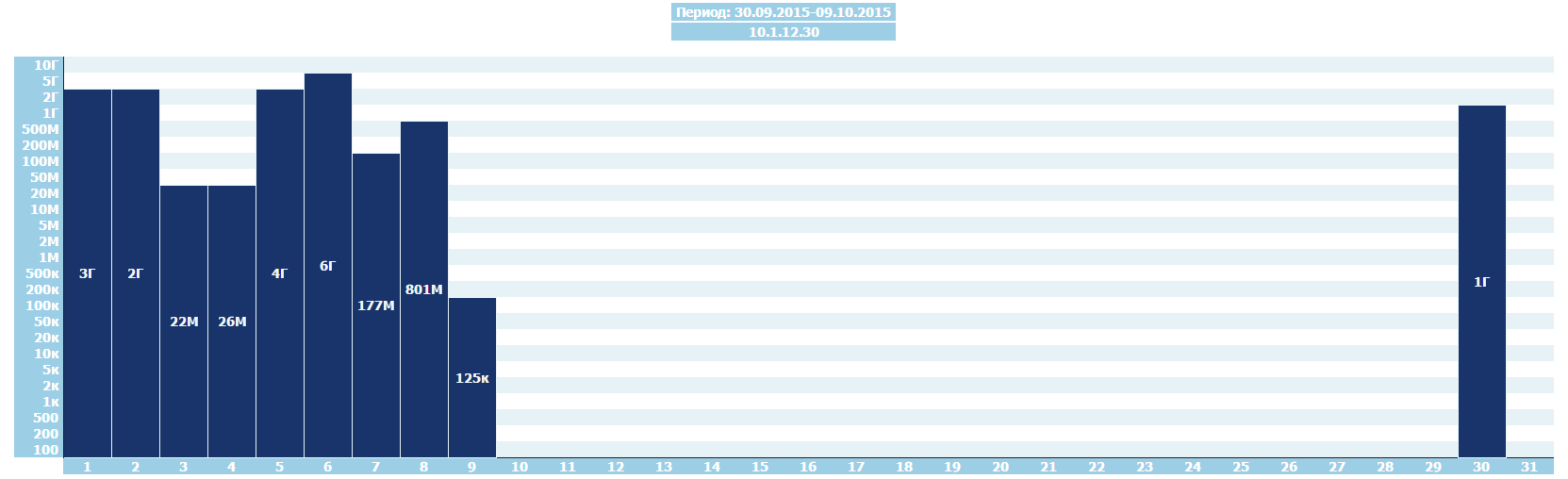

Clicking the graph icon in the second column shows a graph of Internet usage for that user:

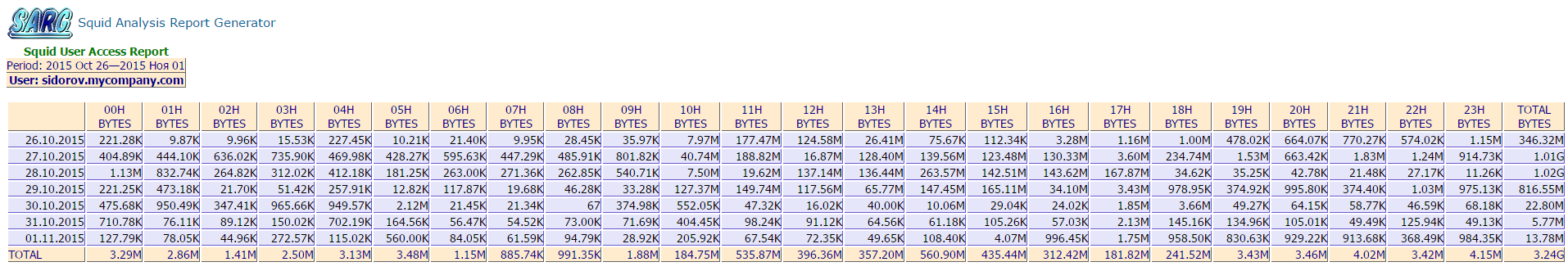

Clicking the second icon shows a table of Internet channel load by hour:

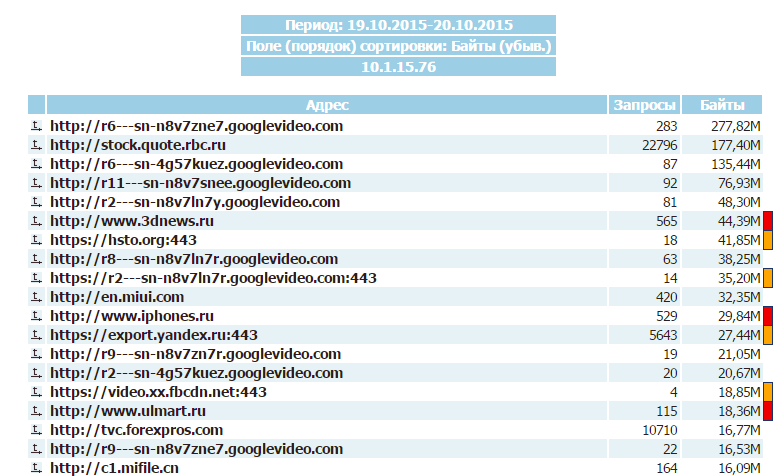

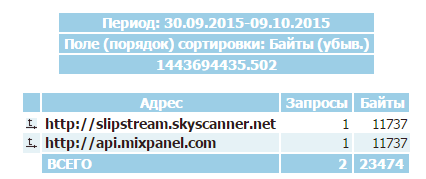

Selecting an IP address shows that user's sites in descending order of traffic:

All statistics are displayed in bytes. To switch to megabytes, you must set the parameter

The program does not accept compressed log files, does not accept more than one file with the -l parameter, does not support the selection of files by mask. The author of the program proposes to circumvent these restrictions by creating named pipes.

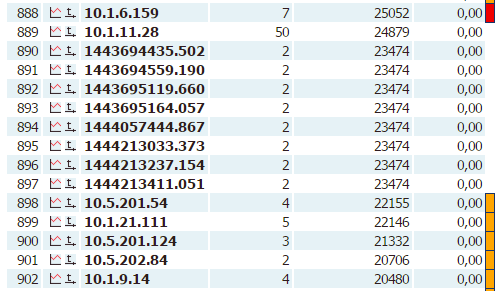

An annoying bug was detected - when the length of the log line is too large, instead of addresses, timestamps fit in:

When viewing the traffic of this “user”, you can see the domain with the source of the error:

Thus, the number of users has increased several times.

If you compare these two programs, free-sa creates a report a little faster. The 20-fold increase in speed, as stated by the author, I could not detect. Perhaps it can be seen under certain conditions. I think it is not important how much it takes to create a weekly report at night - 30 minutes or 50. There is no advantage for free-sa in terms of the amount of space occupied by reports.

Honestly, the purpose of the output of the year and months is not clear; nothing happens when you click on them. You can write something in the search field, but again nothing happens. You can select the period of interest.

List of blocked URLs:

CONNECT metdod report:

PUT / POST metdod report:

Load Report:

Popular sites:

The report on the effectiveness of the proxy server seemed interesting:

User report:

When you click on the graph icon in the second column, we get a graph of Internet usage by a specific user:

When you click on the second icon, we get the download table of the Internet channel by the hour:

When choosing an IP address, we will get a list of sites by user in descending order of traffic:

All statistics are displayed in bytes. To switch to megabytes, you must set the parameter

reports_bytes_divisor = "M"

The program does not accept compressed log files, does not accept more than one file with the -l parameter, does not support the selection of files by mask. The author of the program proposes to circumvent these restrictions by creating named pipes.

An annoying bug was detected - when the length of the log line is too large, instead of addresses, timestamps fit in:

When viewing the traffic of this “user”, you can see the domain with the source of the error:

Thus, the number of users has increased several times.

If you compare these two programs, free-sa creates a report a little faster. The 20-fold increase in speed, as stated by the author, I could not detect. Perhaps it can be seen under certain conditions. I think it is not important how much it takes to create a weekly report at night - 30 minutes or 50. There is no advantage for free-sa in terms of the amount of space occupied by reports.
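The named-pipe workaround suggested by the free-sa author can be sketched as follows. Several logs, compressed or not, are decompressed and concatenated into a FIFO, and free-sa's `-l` is pointed at the FIFO path. The helper names and paths are hypothetical, and `os.mkfifo` is POSIX-only.

```python
import gzip
import os
import shutil


def stream_logs(paths, out):
    """Write several squid logs, plain or .gz, one after another into `out` (binary)."""
    for path in paths:
        opener = gzip.open if str(path).endswith(".gz") else open
        with opener(path, "rb") as src:
            shutil.copyfileobj(src, out)


def feed_through_fifo(paths, fifo_path):
    """Create a named pipe (if needed) and stream the logs into it.

    The reader (free-sa) is started separately; opening a FIFO for writing
    blocks until a reader opens it.
    """
    if not os.path.exists(fifo_path):
        os.mkfifo(fifo_path)
    with open(fifo_path, "wb") as fifo:
        stream_logs(paths, fifo)
```

For example, call `feed_through_fifo([...], "/tmp/squid.fifo")` with the files in chronological order in one process, and start something like `free-sa -l /tmp/squid.fifo` in another. Choosing the file list in the script also stands in for the missing file-mask selection.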

lightsquid


A report for a month (3 TB of total traffic, 110 million lines) fit into 1 GB on disk.

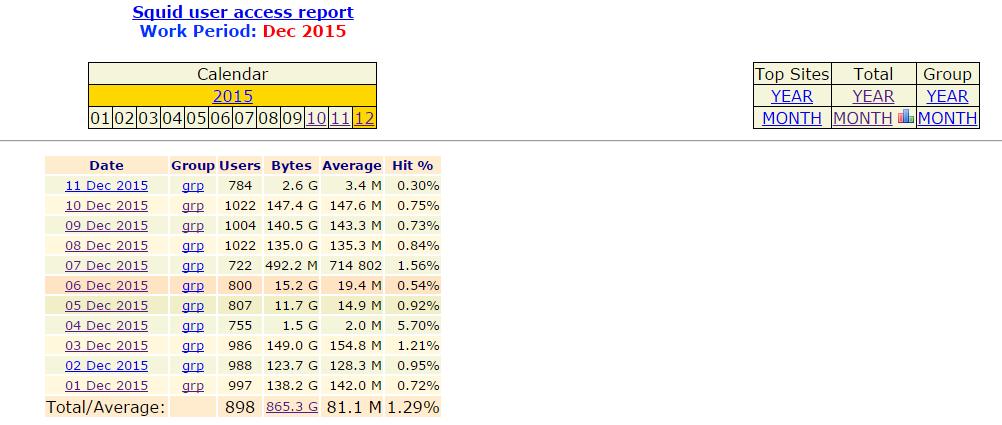

On the start page we see traffic by day for the current month.

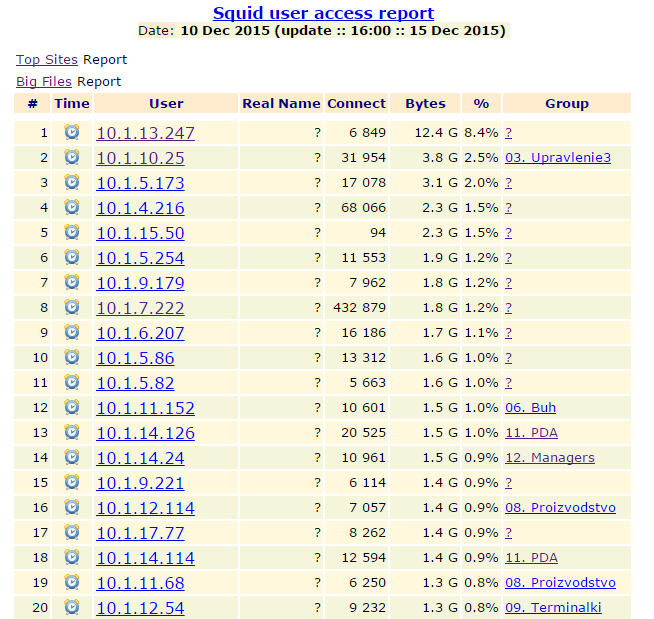

Selecting a day shows the day's report for all users:

If groups are defined, the right column shows the group each user belongs to. Users who are not in any group are collected into the group "00 no in group" (marked with a question mark in this report).

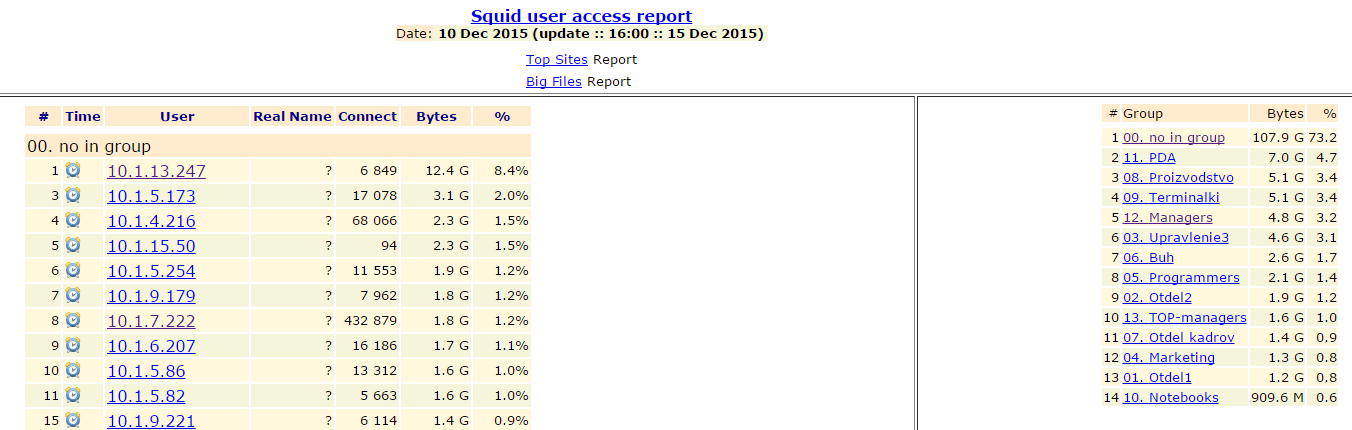

Selecting grp on the main page for a given date opens the report of users broken down by group: first the users not in any group, then the groups in order.

Clicking a group name in the table on the right jumps down to the place on the page where that group's report begins:

Clicking "Top sites report" shows the most popular sites for the day:

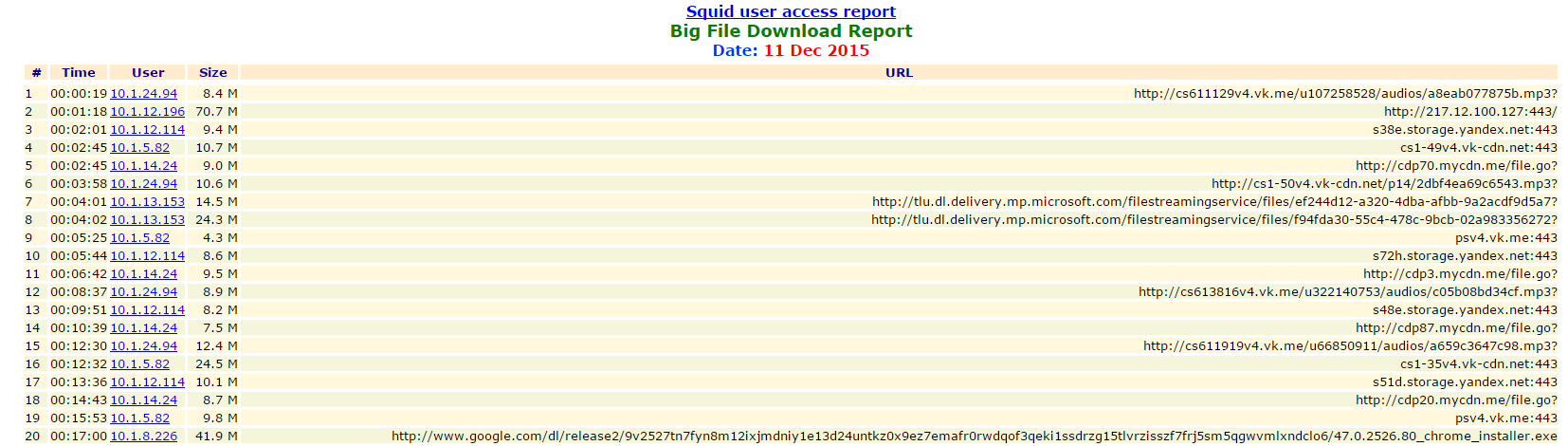

Big files report:

Now let's move to the table on the right.

Here you can get the top sites for the month and for the whole year (they look the same, hence no screenshot), overall statistics for the year and month, and statistics for the year and month by group.

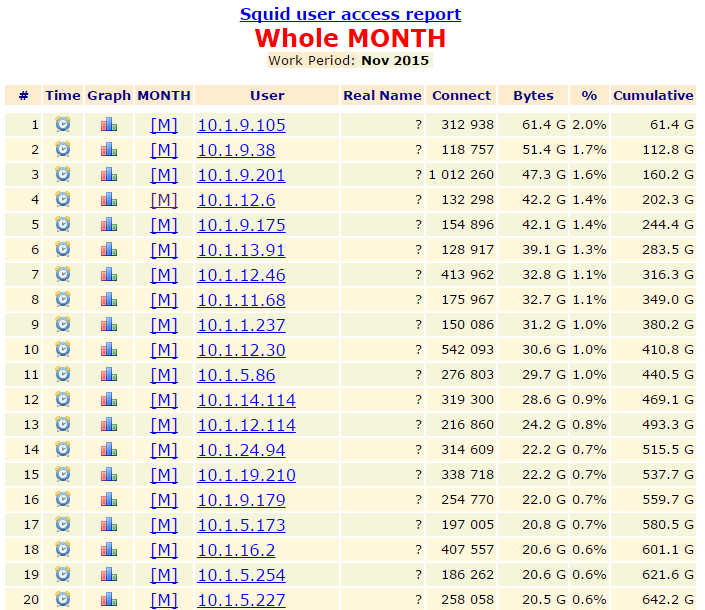

Monthly statistics:

Clicking the clock icon shows a table of sites, access times and traffic consumed per hour:

Daily statistics are shown here; for the month and the year it looks much the same, with hourly per-domain statistics summed up.

Clicking the graph icon shows the user's traffic consumption for the month:

The graph columns are clickable: clicking a column switches to the user's statistics for that day.

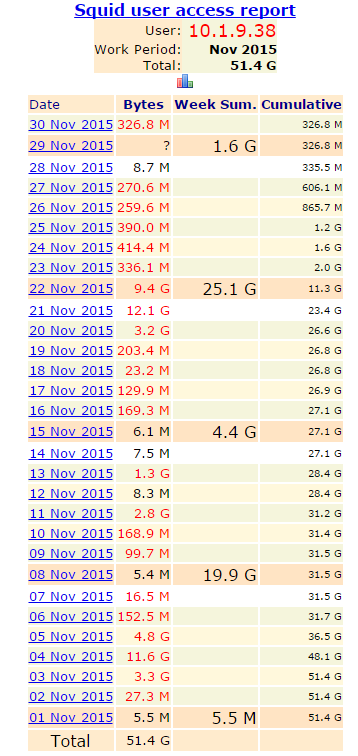

Clicking [M] shows the user's traffic consumption over the month, with the volume for each day and for each full week.

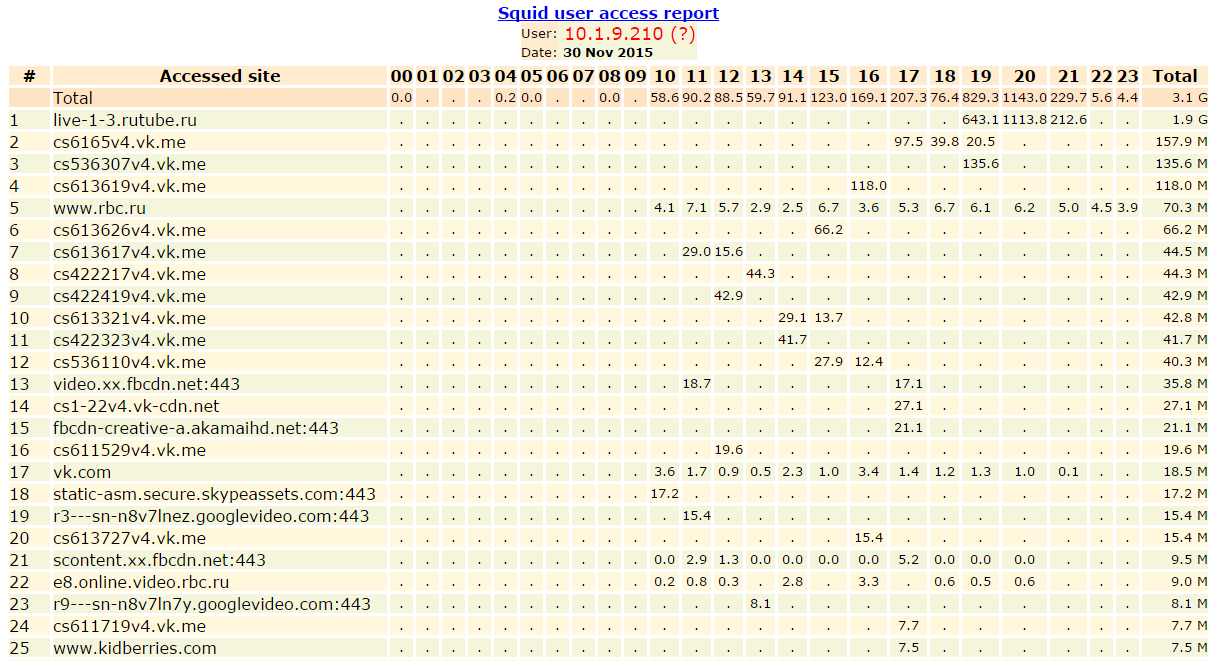

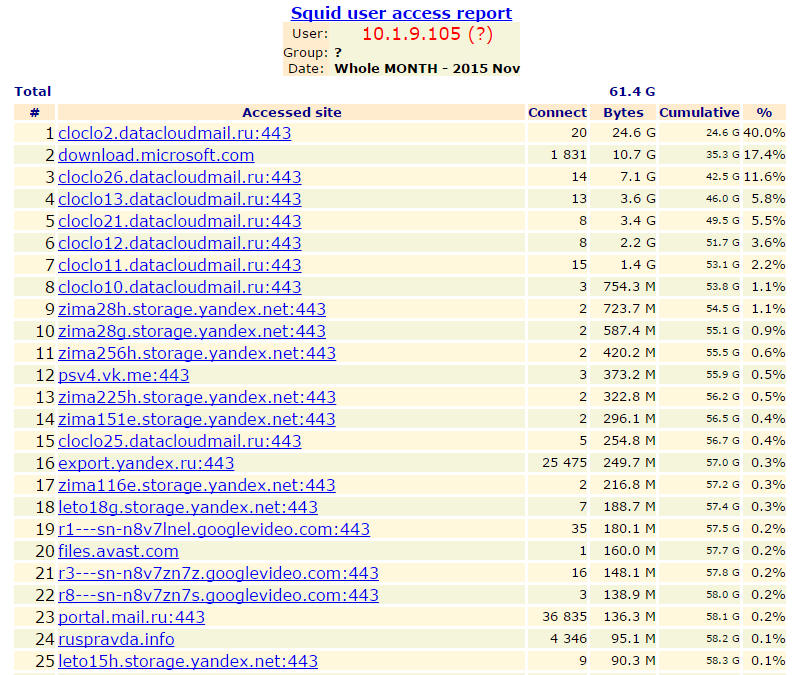

When you click on the username, we get a list of sites that the user visited in order of decreasing traffic:

Well, that seems to be all. Everything is simple and concise. IP addresses can be converted to domain names. Using regular expressions, domain names can be combined into domains of the 2nd level, just in case I quote a regular expression:

With perl skills you can customize it.

On the start page we see traffic by day for the current month.

When choosing a day, we see a report for the day for all users:

If groups are specified, the name of the group to which the user belongs is displayed in the right column. Users who are not in any group are combined into the group 00 no in group (in this report, they are marked with a question mark).

When you select grp on the main page for the corresponding date, we get to the report page of users divided into groups. First listed are not included in any group, then the group in order.

When you click on the name of the group in the table on the right, go below to the place in the page where the report on this group begins:

When you click on the "Top sites report" we get a report on popular sites for the day:

Big files report:

Go to the table on the right.

Here you can get a list of top sites for the month and for the whole year (they look the same, so without a screenshot), general statistics for the year and month, as well as statistics for the year and month by groups.

Monthly statistics:

When you click on the clock icon, we will be able to see the table by sites, access time and traffic consumed per hour:

Statistics for the day are displayed here, but for the month and for the year it will look about the same, hourly statistics on domains will be summed up.

When you click on the graph icon, you can see the user’s traffic consumption for the month:

Graph columns are clickable: when you click on a column, we switch to user statistics for another day.

When you click on [M], we get a report on the consumption of traffic by the user within a month, indicating the volume for each day and for the full week.

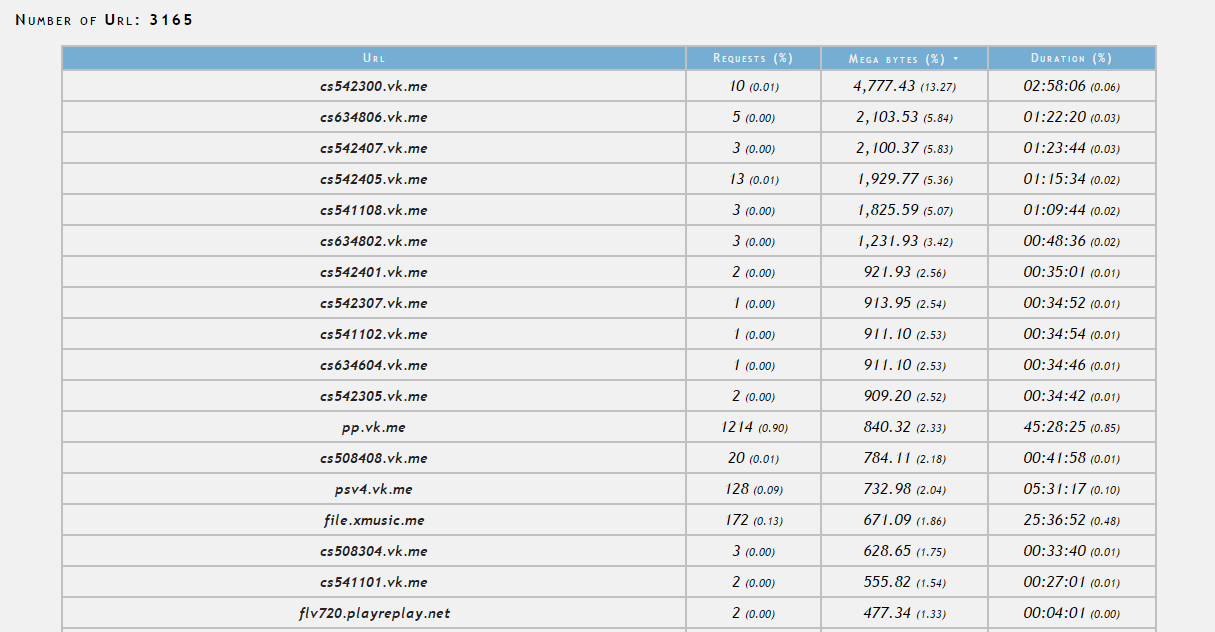

When you click on the username, we get a list of sites that the user visited in order of decreasing traffic:

Well, that seems to be all. Everything is simple and concise. IP addresses can be converted to domain names. Using regular expressions, domain names can be combined into domains of the 2nd level, just in case I quote a regular expression:

$url =~ s/([az]+:\/\/)??([a-z0-9\-]+\.){0,}([a-z0-9\-]+\.){1}([az]+)(.*)/$3$4/o;

With perl skills you can customize it.
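To see what that substitution does, here is a Python transcription, with the character classes written as `[a-z]`. It is meant only for experimenting with the pattern outside lightsquid. Perl's `s/.../$3$4/` rewrites the first match, hence `count=1`. The `/o` flag (compile once) corresponds to compiling the pattern once at module level.

```python
import re

# Transcription of the lightsquid substitution; group 3 is the 2nd-level label
# (with its trailing dot) and group 4 the top-level domain.
SECOND_LEVEL = re.compile(r"([a-z]+://)??([a-z0-9\-]+\.){0,}([a-z0-9\-]+\.){1}([a-z]+)(.*)")


def second_level(url: str) -> str:
    """Reduce a host or URL to its 2nd-level domain, as the Perl line does."""
    return SECOND_LEVEL.sub(r"\3\4", url, count=1)


for u in ("mail.google.com", "http://mail.google.com/mail/", "www.yandex.ru:443", "192.168.1.10"):
    print(u, "->", second_level(u))
```

Numeric addresses pass through unchanged because the last label must be alphabetic. Two-part public suffixes collapse too far, though: `news.bbc.co.uk` becomes `co.uk`. That is the kind of case where customizing the expression pays off.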

SquidAnalyzer


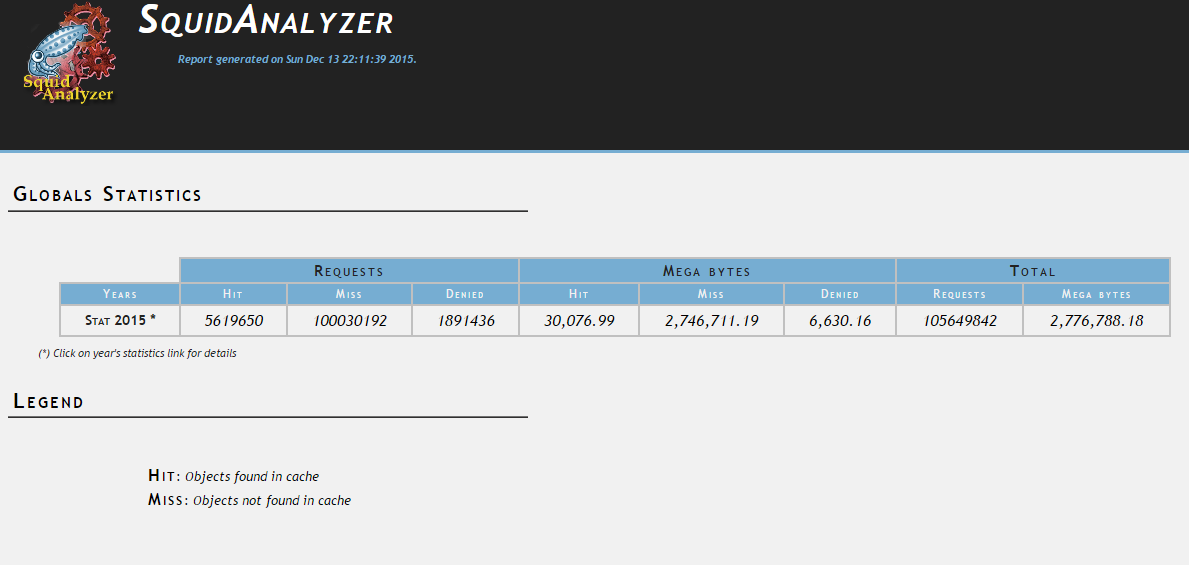

Home page: here you can select the year of the report.

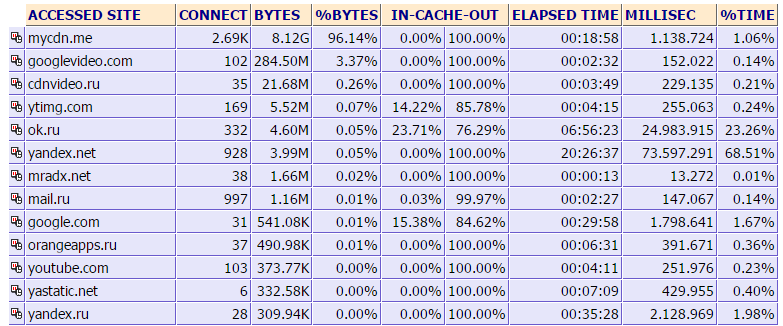

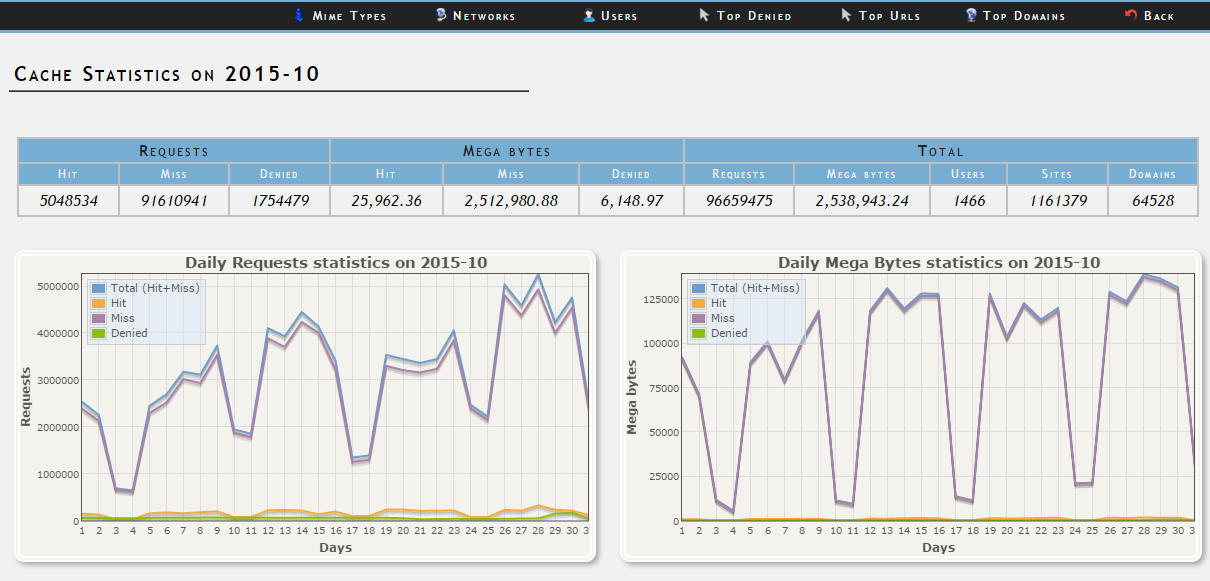

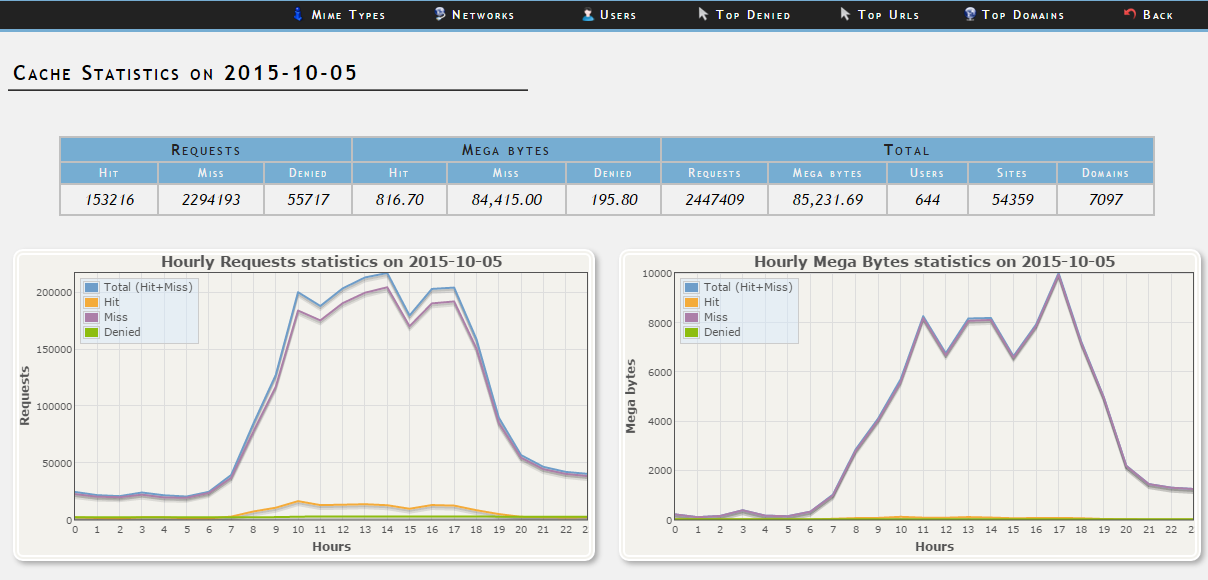

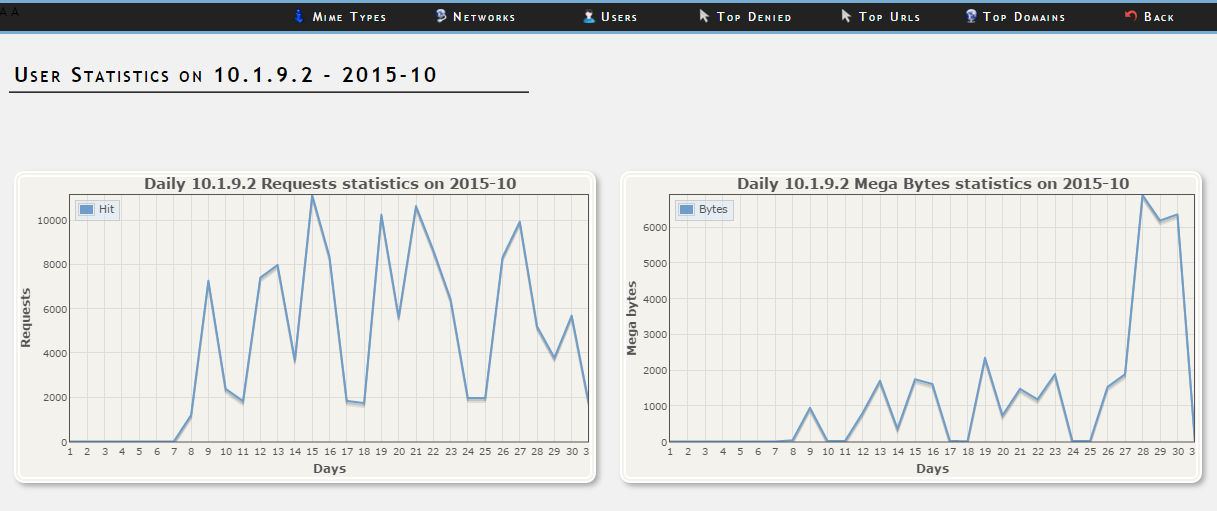

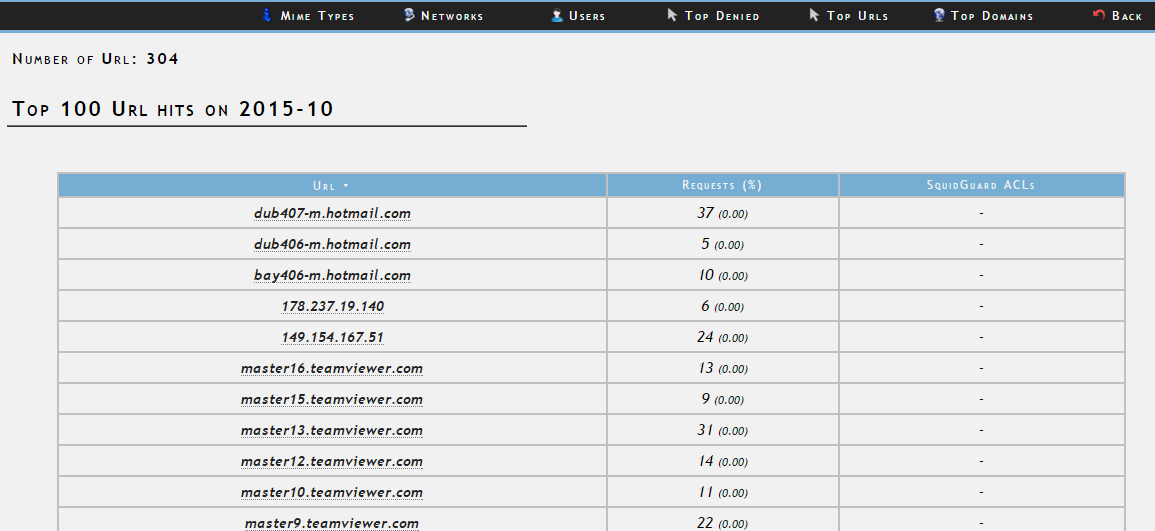

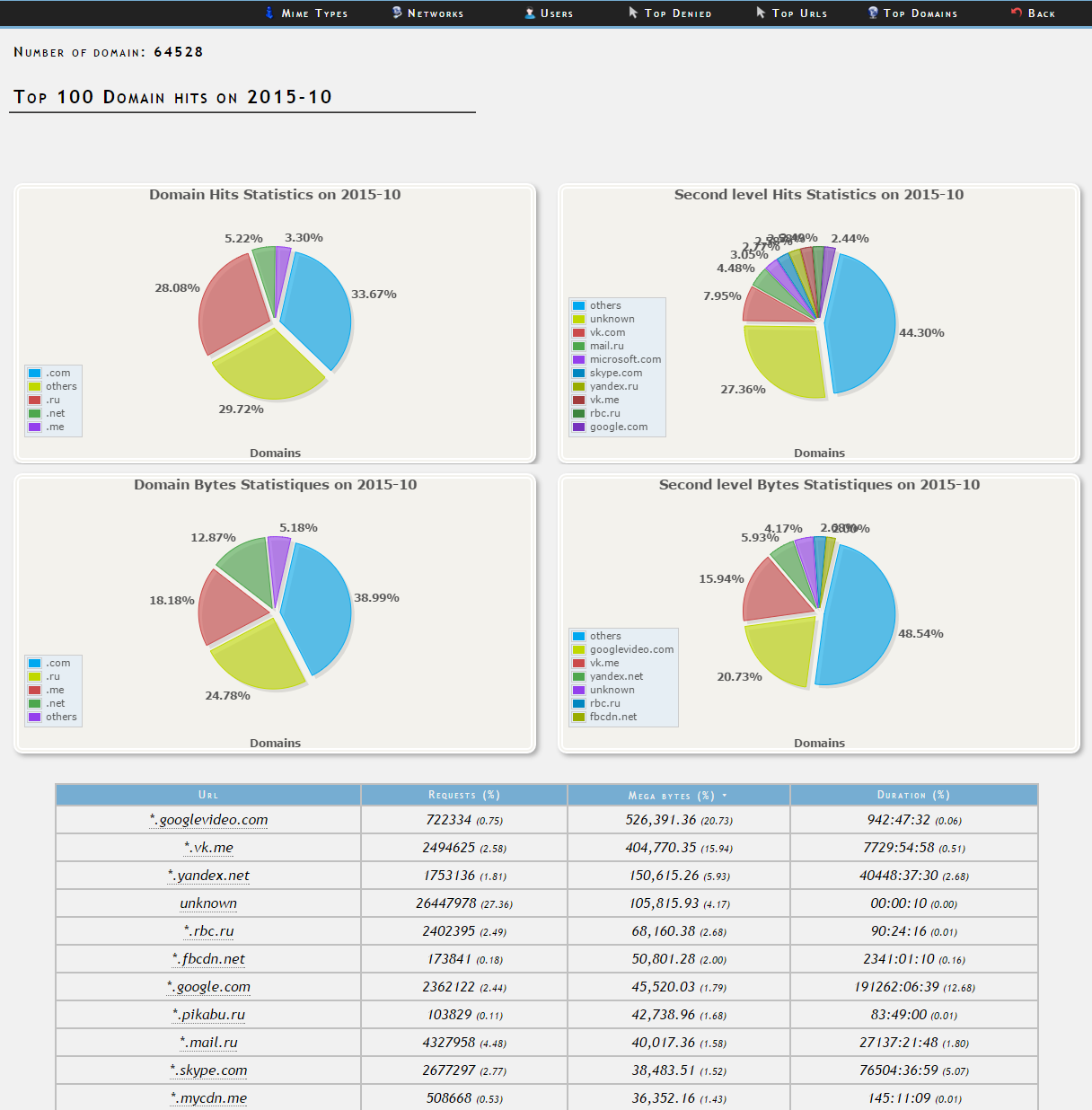

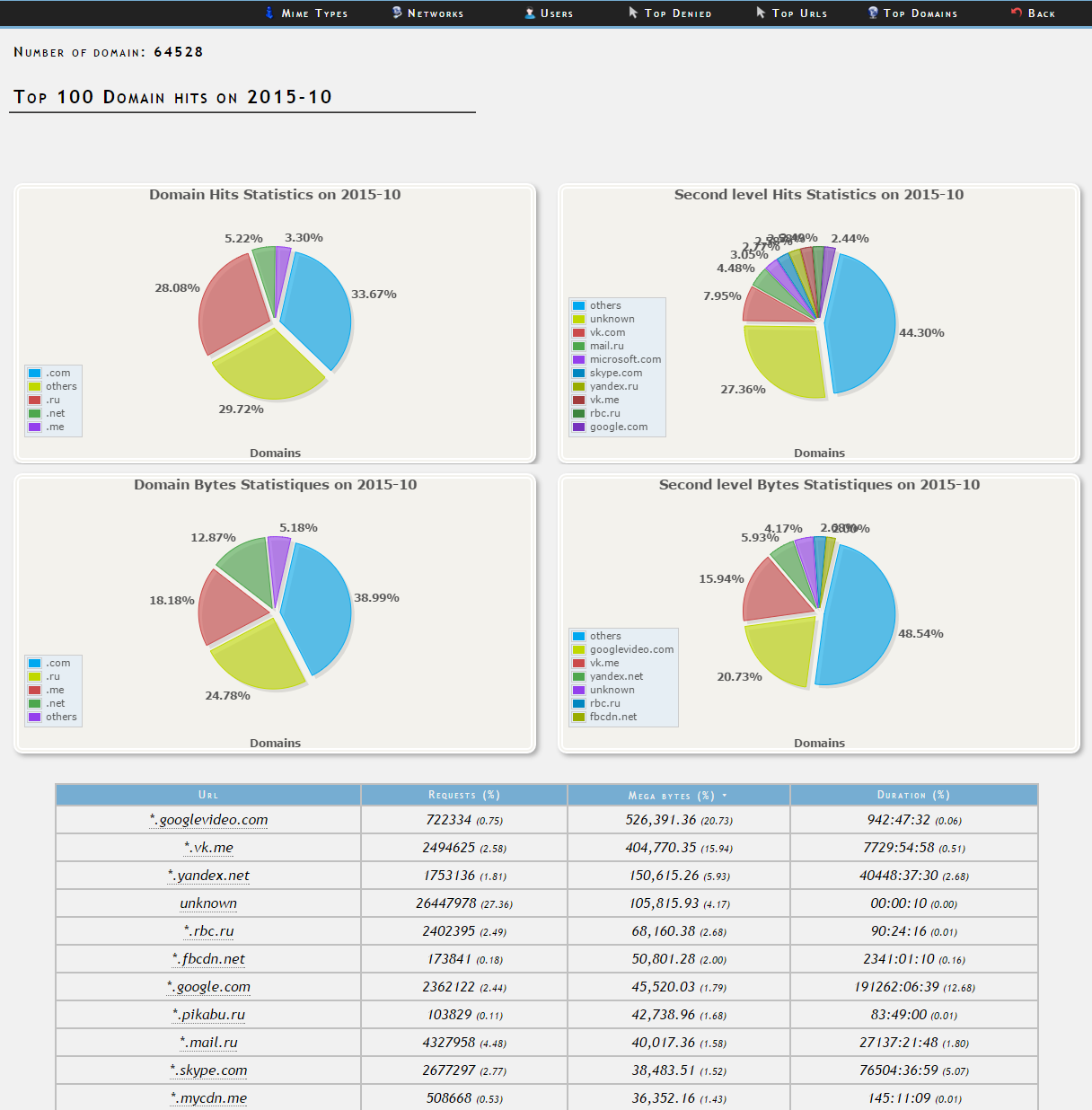

Whatever period you choose (year, month, week, day), the pages look similar. At the top is a menu with these reports: MIME types, Networks, Users, Top Denied, Top URLs, Top Domains. Below it are proxy statistics for the selected period: Requests (Hit/Miss/Denied), Megabytes (Hit/Miss/Denied) and Total (Requests/Megabytes/Users/Sites/Domains). Further down are graphs of requests and of traffic over the period.
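The Hit/Miss/Denied split can be illustrated with a simple classifier over squid result codes. The rule below is an assumption for illustration: DENIED first, then anything containing HIT, everything else a miss. SquidAnalyzer's actual rules may differ, for example for revalidated objects such as TCP_REFRESH_UNMODIFIED, which this rule counts as a miss.

```python
from collections import Counter


def classify(result_code: str) -> str:
    """Bucket a squid result code such as 'TCP_MEM_HIT/200' into hit, miss or denied."""
    result = result_code.split("/", 1)[0]
    if "DENIED" in result:
        return "denied"
    if "HIT" in result:
        return "hit"
    return "miss"


def period_stats(entries):
    """(result_code, bytes) pairs -> (requests per bucket, megabytes per bucket)."""
    requests, megabytes = Counter(), Counter()
    for code, nbytes in entries:
        bucket = classify(code)
        requests[bucket] += 1
        megabytes[bucket] += nbytes / 1024 / 1024
    return requests, megabytes
```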

There is a calendar in the upper right corner. Choosing a month shows brief statistics and a daily load chart:

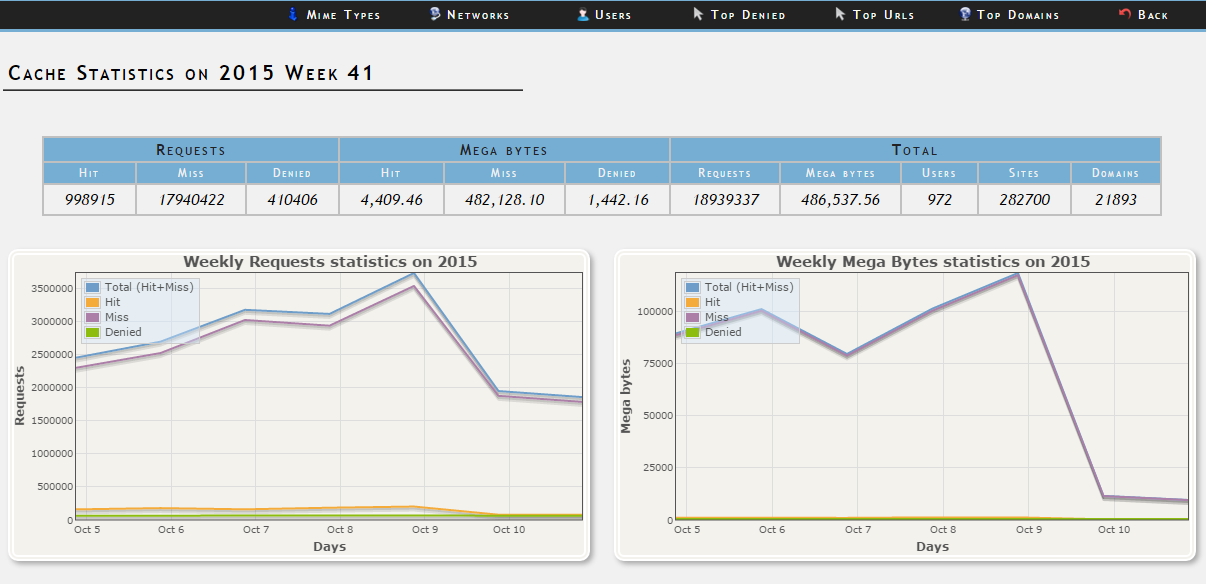

You can also choose a week in the calendar; it shows similar statistics.

Choosing a day shows hourly statistics:

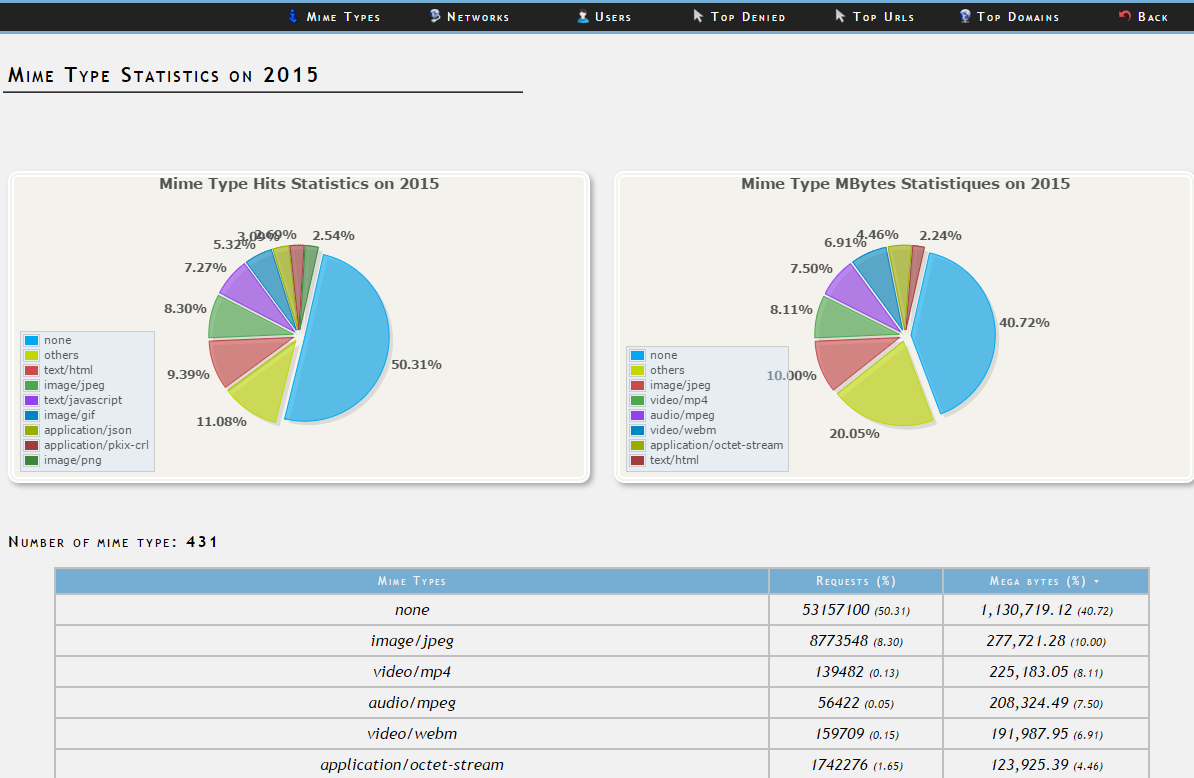

Content Type Report:

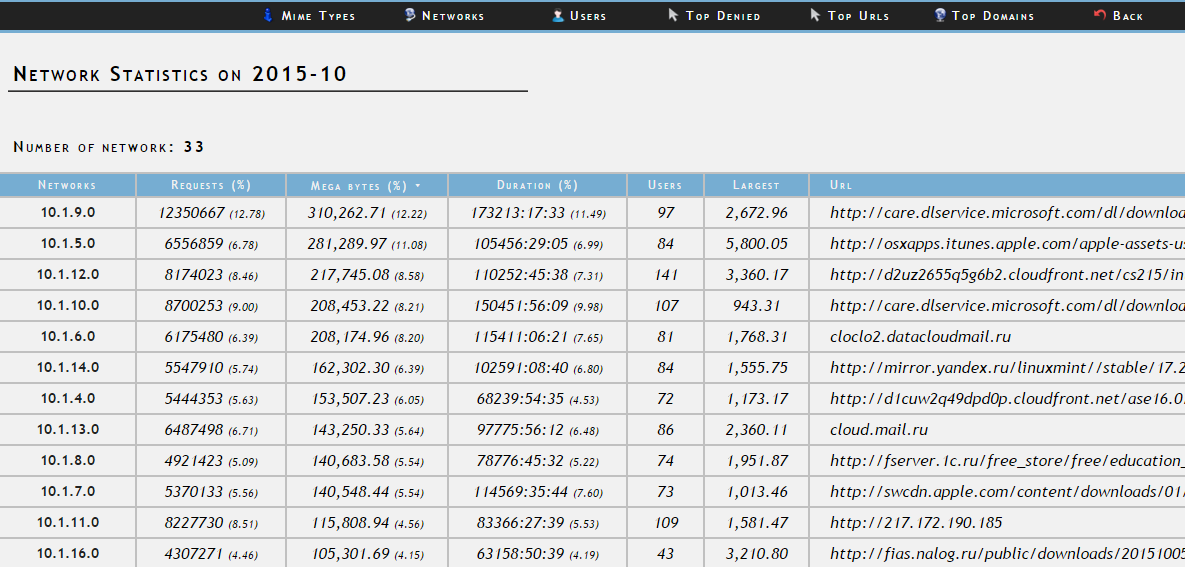

Network report.

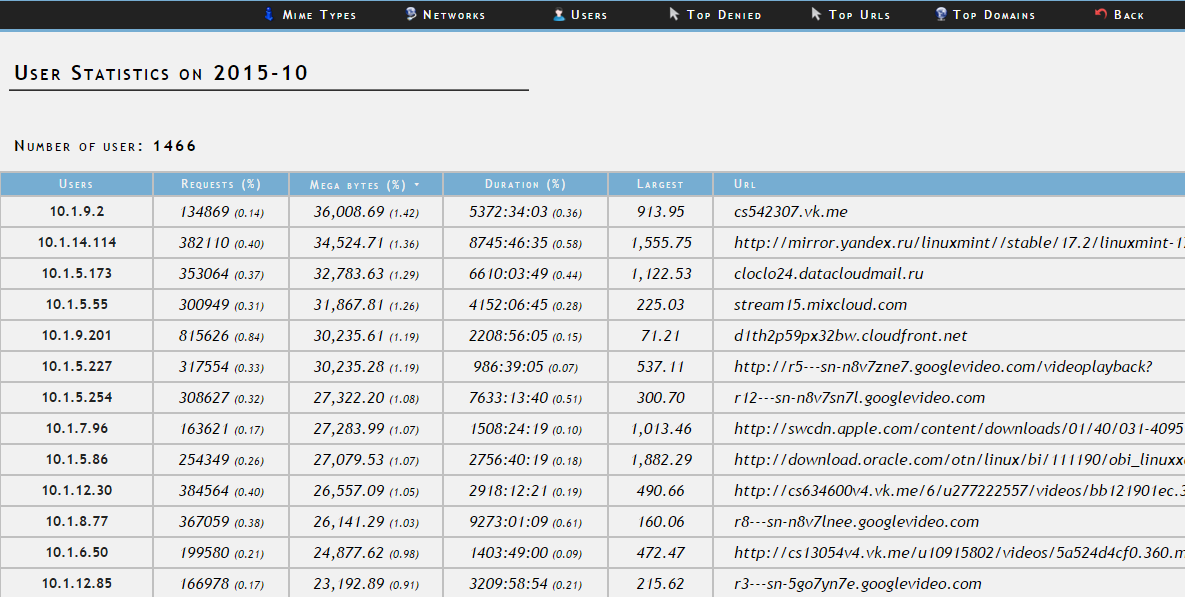

User report.

Choosing a user shows their statistics for the period.

Denied resources.

Second-level domain report.

Speaking for myself, I would note that the program becomes very slow as data accumulates: with each new log, the weekly, monthly, and yearly statistics are recalculated. For this reason, I would not recommend it for processing logs from a server with heavy traffic.

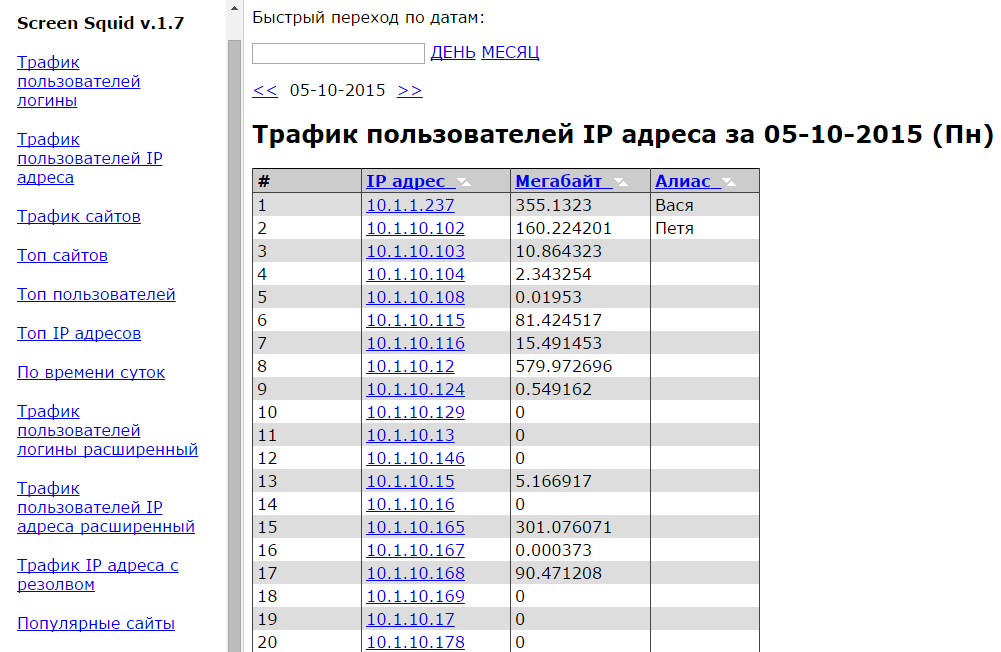

ScreenSquid

This program works differently: the log is imported into a MySQL database, and the web interface queries the data from it. The database holding the ten-day log mentioned earlier takes up 1.5 GB.
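To illustrate the import-then-query approach, here is a minimal sketch that loads Squid native-format lines into a table and then aggregates traffic per user with SQL. It uses SQLite from the Python standard library as a stand-in for MySQL, and the `access_log` table, its columns, and the helper names are hypothetical; ScreenSquid's real schema is not described in this article.

```python
import sqlite3

# Hypothetical table; ScreenSquid's real MySQL schema may look different.
SCHEMA = """
CREATE TABLE IF NOT EXISTS access_log (
    ts REAL, elapsed_ms INTEGER, client TEXT, result_code TEXT,
    http_status INTEGER, bytes INTEGER, method TEXT, url TEXT,
    username TEXT, content_type TEXT
)
"""


def parse_line(line: str):
    """Split one native-format line into a row tuple, or return None if malformed."""
    f = line.split()
    if len(f) != 10:
        return None
    try:
        code, _, status = f[3].partition("/")
        return (float(f[0]), int(f[1]), f[2], code,
                int(status) if status.isdigit() else 0,
                int(f[4]), f[5], f[6], f[7], f[9])
    except ValueError:
        return None


def import_log(conn: sqlite3.Connection, lines) -> int:
    """Create the table if needed and bulk-insert all parsable lines."""
    conn.execute(SCHEMA)
    rows = [row for row in map(parse_line, lines) if row is not None]
    conn.executemany("INSERT INTO access_log VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def traffic_by_user(conn: sqlite3.Connection):
    """Total bytes per user, largest first: the kind of query a web UI would run."""
    return conn.execute(
        "SELECT username, SUM(bytes) FROM access_log "
        "GROUP BY username ORDER BY SUM(bytes) DESC"
    ).fetchall()
```

The trade-off of this design is that parsing happens once at import time, while every report becomes a query over the whole table, so report speed depends on the database and its indexes rather than on pre-built HTML pages.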


The program cannot import log files with arbitrary names; it is tied to access.log.

Home Page:

Brief statistics:

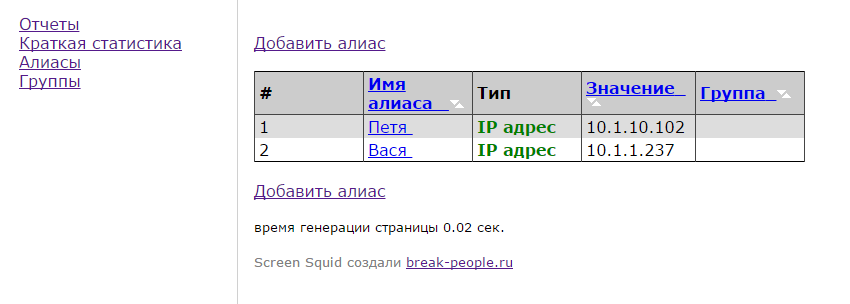

For IP addresses, you can create aliases:

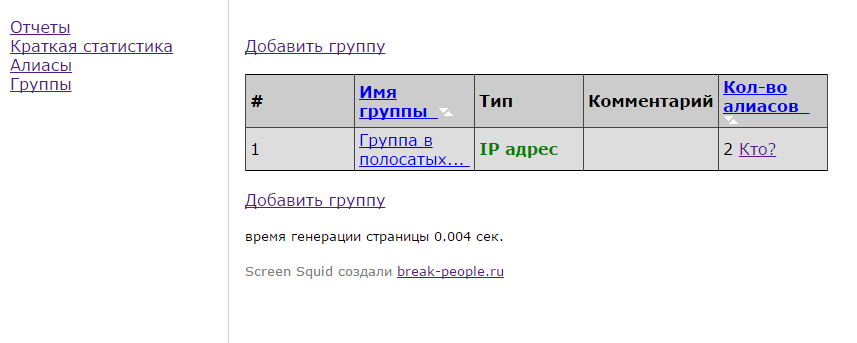

... and then they can be combined into groups:

Now on to the main part: the reports.

The menu on the left lists the report types:

User traffic by login

User traffic by IP address

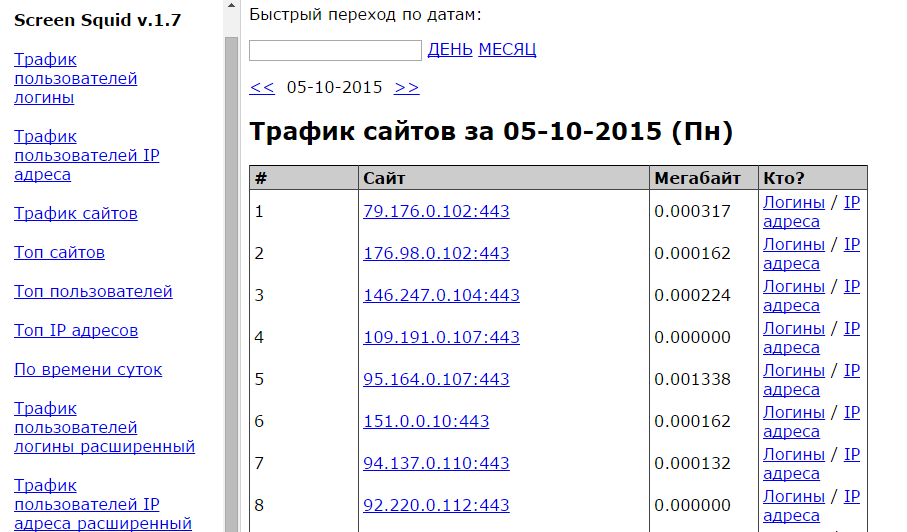

Site traffic

Top sites

Top Users

Top IP Addresses

By time of day

User traffic by login (extended)

User traffic by IP address (extended)

Traffic by IP address with name resolution

Popular sites

Who downloaded large files

Traffic by period (days)

Traffic by period (day of the week)

Traffic by period (months)

HTTP statuses

Login IPs

Logins from IP addresses
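Reports such as "By time of day" and the "Traffic by period" family boil down to grouping traffic by a time bucket derived from the Unix timestamp in the first field of each log line. A minimal sketch, assuming the Squid native log format; `traffic_by_bucket` is a hypothetical helper, and it uses UTC by default, whereas a real report would use the proxy's local time zone.

```python
from collections import defaultdict
from datetime import datetime, timezone

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def traffic_by_bucket(lines, bucket: str = "weekday", tz=timezone.utc) -> dict:
    """Sum bytes per hour of day, weekday name or month for native-format lines.

    The first field of each line is a Unix timestamp; the fifth is the reply size.
    """
    totals = defaultdict(int)
    for line in lines:
        fields = line.split()
        if len(fields) < 5 or not fields[4].isdigit():
            continue  # skip malformed lines
        try:
            moment = datetime.fromtimestamp(float(fields[0]), tz=tz)
        except ValueError:
            continue  # timestamp field is not a number
        if bucket == "hour":
            key = moment.hour
        elif bucket == "weekday":
            key = WEEKDAYS[moment.weekday()]  # locale-independent day names
        elif bucket == "month":
            key = f"{moment.year}-{moment.month:02d}"
        else:
            raise ValueError(f"unknown bucket: {bucket}")
        totals[key] += int(fields[4])
    return dict(totals)
```

For example, a line stamped `1450000000.123` falls into hour 9 (UTC) of Sunday, 2015-12-13.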

Examples of reports.

User traffic by IP address:

Site traffic:

Top sites:

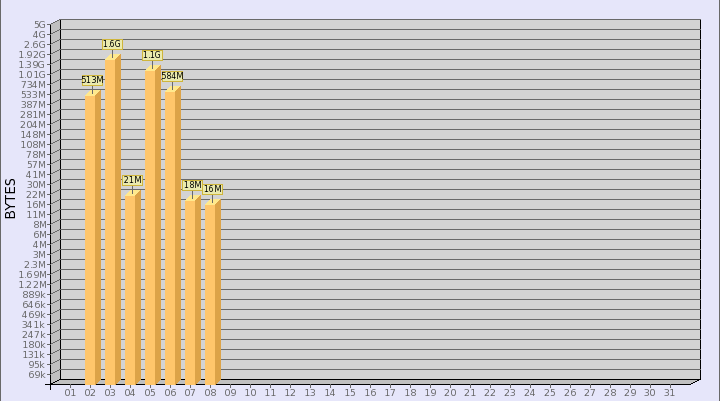

... beyond that, to be honest, I didn't have the patience to explore further, since each page took 3 to 5 minutes to generate. The "By time of day" report for a day whose log had not been imported at all took more than 30 seconds to generate; for a day with traffic, it took 4 minutes.

That's all. I hope this material proves useful. Thank you for your attention.

Source: https://habr.com/ru/post/273809/
