Parallel calculations on CPU and GPU

Introduction

This article briefly describes the parallelization of calculations on the computing power of CPU and GPU. Before proceeding to the description of the algorithms themselves, I will acquaint you with the task.

It is necessary to simulate a system for solving problems by the method of finite differences. From a mathematical point of view, it looks like this. Given some final mesh:

')

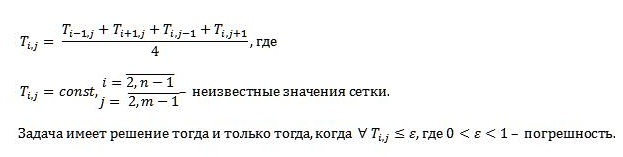

Unknown grid values are found using the following formula by the finite difference method:

Parallelization on CPU

For parallel computing on a CPU, Parallel technology is used, which is similar to OpenMP. Parallel is an internal technology used in the C # language that provides support for parallel loops and regions.

Parallel computing on Parallel:

/* n*m T eps */ /* , , . , .*/ private void Parallelization(int n, int m, float[,] T, float eps) { int time; // bool flag = false; // int interetion = 0; // float epsilint; // float[,] count_eps = new float[n,m]; // float[,] T_new = new float[n, m]; // - time = Environment.TickCount; // do { epsilint = eps; Parallel.For(1, n-1, i => { Parallel.For(1, m - 1, j => { // T_new[i, j] = (T[i - 1, j] + T[i + 1, j] + T[i, j - 1] + T[i, j + 1]) / 4; // count_eps[i, j] = Math.Abs(T_new[i, j] - T[i, j]); // if (count_eps[i,j] > epsilint) { epsilint = count_eps[i,j]; } T[i, j] = T_new[i, j]; }); }); interetion++; }while(epsilint > eps || epsilint != eps); // time = Environment.TickCount - time; // Output(n, m, time, interetion, "OpenMP Parallezetion"); // } Parallelization on the GPU

For parallel computing on a GPU, CUDA technology is used. CUDA is NVIDIA's parallel computing architecture, which significantly increases computational performance through the use of a GPU.

Parallel computing on CUDA:

/* CUDA, */ #define CUDA_DEBUG #ifdef CUDA_DEBUG #define CUDA_CHECK_ERROR(err) \ if (err != cudaSuccess) { \ printf("Cuda error: %s\n", cudaGetErrorString(err)); \ printf("Error in file: %s, line: %i\n", __FILE__, __LINE__); \ } \ #else #define CUDA_CHECK_ERROR(err) #endif /* GPU*/ __global__ void VectorAdd(float* inputMatrix, float* outputMatrix, int n, int m) { int i = threadIdx.x + blockIdx.x * blockDim.x; // int j = threadIdx.y + blockIdx.y * blockDim.y; // if(i < n -1 && i > 0) { if( j < m - 1 && j > 0) // outputMatrix[i * n + j] = (inputMatrix[(i - 1) * n + j ] + inputMatrix[(i + 1) * n + j] + inputMatrix[i * n + (j - 1)] + inputMatrix[i * n + (j + 1)])/4; } } /* n*m T eps */ /* GPU , T */ void OpenCL_Parallezetion(int n, int m, float *T, float eps) { int matrixsize = n * m; // CPU int byteSize = matrixsize * sizeof(float); // GPU time_t start, end; // float time; // float* T_new = new float[matrixsize]; // - float *cuda_T_in; // - GPU float *cuda_T_out; // - GPU CUDA_CHECK_ERROR(cudaMalloc((void**)&cuda_T_in, byteSize)); // GPU CUDA_CHECK_ERROR(cudaMalloc((void**)&cuda_T_out, byteSize)); float epsilint; // float count_eps; // int interetaion = 0; // start = clock(); // dim3 gridsize = dim3(n,m,1); // (x,y,z) GPU do{ epsilint = eps; CUDA_CHECK_ERROR(cudaMemcpy(cuda_T_in, T, byteSize, cudaMemcpyHostToDevice)); // GPU VectorAdd<<< gridsize, m >>>(cuda_T_in, cuda_T_out, n, m); CUDA_CHECK_ERROR(cudaMemcpy(T_new, cuda_T_out, byteSize, cudaMemcpyDeviceToHost)); // GPU for(int i = 1; i < n -1; i++) { for(int j = 1; j < m -1; j++) { // count_eps = T_new[i* n + j ] - T[i* n + j]; // if(count_eps > epsilint) { epsilint = count_eps; } T[i * n + j] = T_new[i * n + j]; } } interetaion++; }while(epsilint > eps || epsilint != eps);// end = clock(); // time = (end - start); // /* CPU GPU*/ free(T); free(T_new); cudaFree(cuda_T_in); cudaFree(cuda_T_out); Output(n, m, time, interetaion); // } Source: https://habr.com/ru/post/273771/

All Articles