History of the 5th place in the Russian AI Cup 2015

At first this text was written in the form of a commentary on the topic of the winner of this competition . But in the end, the volume of the text became so large that it was decided to allocate it into a separate topic. So it is assumed that the reader is aware of the contest and read the topic of the winner. You can also read the history of the 30th place .

Immediately I will give a link to the source code repository - in addition to the source code itself, there is also the whole history of commits. For example, the time at which a commit was made with the comment “it's time to sleep” may seem interesting.

')

In general, it seemed that most of the leaders had approximately the same basic ideas for the final strategy:

- Finding a path on a map between waypoints

- Simulation of motion, collisions, and other physics

- Enumerate different actions that lead to different paths from the current position to the future.

- Choosing the best action or trajectory based on some evaluation function

So in this topic I will tell you a little more about how these ideas were implemented in my case.

Finding the way

I looked at the task of finding a way a little more broadly than it might seem. I wanted to make such a data structure and algorithm so that I could quickly find out the answer to a question of the following form: “if I am at the point X, Y and my next waypoint N, then how much time is left for the next waypoint?”. Such a requirement came from the fact that such requests will come from the "pereborshchik" several thousand times during one decision-making in order to evaluate the trajectories obtained.

The first version worked simply by finding a path from an arbitrary cell, where the point XY fell to the next waypoint, and as a response gave the length of the path. At the same time, for the relative location of the XY point inside the cell, a certain bonus was also given to make the evaluation a little more like a continuous one.

Somewhere in the second round, maps began to appear, where such a simple search for a path through the cells had problems. For example:

- Which way to approach the waypoint?

- How would you make it so that when taking a point, the car did not try to go backwards to the next one, if you can just go straight ahead without any loss and make a small detour?

- How to make a search for the way, taking into account the turn for reversing?

As a result, an idea appeared to change the data structure and the query itself: “if I am at the point X, Y, my angle is A and the next waypoint N, how much time is left for me to finish ?”

In order to respond to such a request, it was necessary to come up with a graph device, which will be followed by a search path. The result is the following:

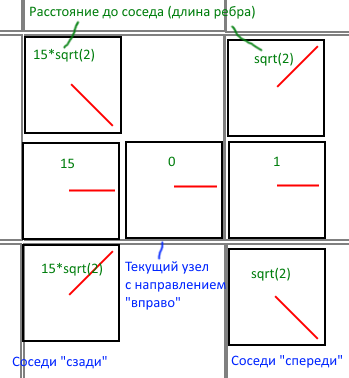

- The graph node is a “subcell with direction” —the cell is 400x400 in size, with 8 possible directions (in 45 degree steps).

- Neighbors at such a node had 6 units: 1 neighbor - go “straight”, 2 neighbors - go “straight with turns”, 1 neighbor - go “back”, 2 neighbors - go “back with turns”.

- The length of the edges, respectively, was 1 for direct movement, sqrt (2) for diagonal, and for reverse, multiplied by some more constant. In the final version, this constant was equal to 15. Such a value was picked up by hands so that the car did not go backwards for a long time and turned around at the first opportunity, but at the same time it allowed us to take all sorts of idiotic waypoints that are a couple of tiles behind the current one .

- Taking into account the walls of the "big" 800x800 cells, so as not to generate unnecessary neighbors at the level of the "subcell" 400x400.

There were so many bugs with such a search for the way. In particular, because the path itself was sought not from the point of the query, but from the finish. That is, if we were asked “if I am at the point 0, 0, my angle is 0 and the next waypoint 1”, then I had to first find the distance from the finish (0th waypoint) to the previous waypoint (N-1th waypoint) , then from the previous waypoint, recursively find the path to the 1st waypoint and only then find the path from the 1st waypoint to the query point. Due to the fact that such a search took place from the “end” to the “beginning”, there was a lot of confusion with the definition of neighbors for the node - it was especially difficult to determine the direction of the neighbors.

Above is an example of neighbors for a node with a multiple of 90 degrees. Neighbors for “diagonal” directions look a little different, but the essence is the same.

Closer to the finals, we managed to debug such a mechanism, and on unknown maps the car chose rather interesting paths so that we could go round the waypoints in the correct order and with minimal backing. At the same time, the penalty for the reverse was not so high at first, and in the absence of interference such a solution even drove faster. But in the final on the “problem” waypoints there was always a mess and chaos, so it was better to slip such a waypoint without slowing down and make a hook - this will avoid the hustle on the waypoint and take more bonuses along the way. It should also be understood that the trajectory itself is not obliged to follow the path obtained from the point with the car - the main thing is that the best trajectory leads to the point that will be “closest to the finish”.

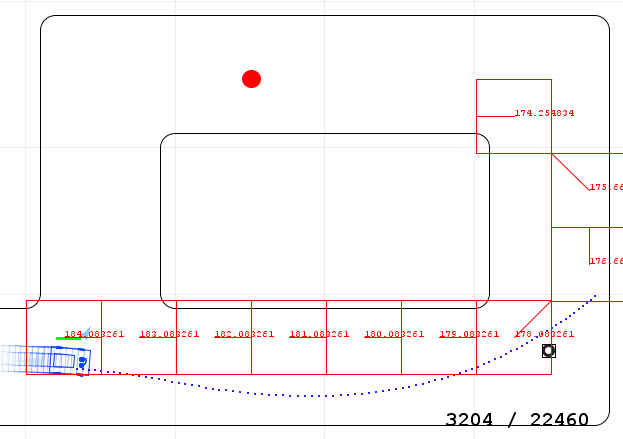

That somehow looked like the “best way” found from the current point of the car to the future. It can be noted that the car decided not to turn immediately “greedily” to the waypoint, but to call it “behind”.

The pathfinding algorithm itself was a lazy implementation of the Dijkstra algorithm, where all paths were considered to be on demand and cached. In the final, the cache was sometimes dropped if new unknown cells were opened.

Source code - see class CWaypointsDistanceMap

Simulation

Initially, physics worked only in the form of the following mechanism: the “machine” + “action” was fed to the input, the output was “the machine on the next tick”. The simulation code itself was honestly borrowed from Mr.Smile from his message on the forum and adapted further by adding the simplest checks for collisions with walls - in this case, the search simply stopped. Then the correct calculations for braking, nitro, and oil were slowly screwed to this code. In the end, it became necessary to correctly calculate collisions, since Some turns were more profitable to pass with collisions than without. Especially at the start with an unsuccessful position.

In general, somewhere before the end of the second round, we managed to understand the code of collisions from notreal2d, to achieve almost absolute accuracy, and then even simplify the code - after all, not real2d is too “common” engine, and in our world there are only 3 types of walls - straight vertical or horizontal line, a circle with a radius of 80 and a concave arch. On the concave arches, it was decided to score, because the code could not be debugged with them in order to work stably and accurately.

But also the thought did not leave my head at the same time simulating all the objects in the world - all cars, shells, etc. In the end, the simulator of one machine turned into a simulator of the “world”, i.e. he was given an “peace” + “actions of all cars” at the entrance, and the output was “the world on the next tick”. The actions of the enemy cars were supposed to be "sneaker on the floor."

The implementation and debugging of such a simulator probably took most of the time. The friction coefficients were selected manually, the search code and collision resolution were copied and copied from notreal2d, and in the end the collision search was written manually for optimization. There were a huge number of bugs related to collisions with the “ends” of the walls - in the end I just got rid of the ends of the walls. It was also necessary to carefully debug collisions of cars with each other and with tires. And collisions with bonuses were never written correctly. A lot of weaknesses in speed helped to find the profiler built into VS 2015 - a huge thanks to him.

Source code — see the CWorldSimulator class.

Chopper

Comparing to what santa324 did, it can be said that the retailer was the weakest point of my strategy. There were ideas to make something essentially new: a genetic algorithm, or "mutating" trees, or something else, but there was no longer enough strength.

In general, the main idea of the selector that was eventually used was that the trajectories would be searched using a search of some fixed set of actions. For example:

- Go straight / left / right 0/7/15/40/60 ticks with a brake / without brake

- Then go straight / left / right 0/40 ticks

- Then once again go straight / left / right 0/40 ticks

- And as a last action - go straight to the end of the search depth.

One of the first versions was able to go through only one turn and one braking and it was not enough to perform maneuvers such as “move away from the turn, then go into a turn with a large radius” or drift. In the end, the structure described above was selected with three turns / brakes and driving right before the end of the search depth, which was equal to 150 ticks. But even this structure had to be significantly curtailed when the physics simulation was changed from one car without collisions to the simulation of “the whole world” with collisions. In order to meet the time limit, some branches were switched on and off with a 50% chance. And also the length of the action was smeared with a random.

Significant improvement of the trajectories occurred when the best sequence of actions found on the current tick was preserved in order to recall it in the next tick and re-evaluate with correction of durations of all actions to minus 1. Such “memorizing the best trajectory” added an element of the evolutionary algorithm to the chooser and helped to steer more or less adequately, even when, due to the randomness, several ticks in a row did not find a new good trajectory.

As a function of evaluation, the sum of various components served as a chooser. The main component is the same “how much is left to the finish if I am at the point XY with angle A and my next waypoint N?”. Then the terms for bonuses, entry to puddles, loss of lives, etc. were added - this made it possible to choose perhaps not the most optimal trajectories in terms of the distance to the finish, but then pick up bonuses, dodge the projectiles and drive around the puddles.

Also, at the end, heuristics for nitro / shooting / puddles were moved to this selector as follows - for the best trajectory found, it was checked “what if I turn on the nitro / shot / pour puddle right now?” own assessment. If this assessment overcame the threshold, then the action was performed.

At the very last moment, the “long reverse” was added to the re-finisher before the final; it was launched if the best path from the current cell was “back”. Unfortunately, my tests did not show a significant slowdown from such a “long reverse”, because on all maps I tested locally, the reverse was practically not used. And the code that was responsible for reversing before this was a set of crutches in the style of smart and, accordingly, practically did not eat up time. The increased time consumption of the strategy I missed led to a fall by time-limit in the first wave of the final cards, but this will be discussed below.

A lot of time was spent on reversing and experimenting with the evaluation function. After all, such a "simulation of the whole world" gave great opportunities. For example, as a result of some experiments, a jeep could shoot a tire at an ally so that it would drive faster. Or the jeep could slow down on purpose, so that an adversary driving behind him with 1% of lives would crash into the jeep and die. Or when the cars at the start tried to push the extreme opponent to collide with the wall. But to my great regret, I could not debug such complex evaluation functions. Yes, and I could not afford a deep simulation of all the collisions - in the final version of “honest physics” only the first 40 ticks were calculated with an accuracy of 2 subticles. And then the collisions were not considered, and there was only one subtik.

Video with visualization of the best path found and the trajectory for the jeep (the rest draw a trajectory of 40 ticks).

Source code - see class CBestMoveFinder

Time control

In the first part of the final, my strategy lost 40 games because of the time limits, which I did not control because of my carelessness - the strategy ate at an average of 42ms per tick, while the allotted 30ms averaged at a tick. The first wave of cards in the final was different from all the others in that it was oh-so-very slow and most of the cards passed "right next" in the allotted ticks, and in my case even in the end it did not succeed, because running out of processor time. On the other maps, it was often possible to finish faster than the allotted time, because it was set aside "in reserve".

At KDPV just a screenshot of a typical case - the strategy did not reach the finish line. But in this game, I was practically shoved to the finish by the participant under the Antmsu nickname.

So I was very upset, angry and almost depressed due to the

Other

This time it was completely unclear how to test strategies and make decisions about whether it became better or worse. So, additional scripts were written to run local tests on all maps, as well as to continuously launch games with closest rivals via the site interface. Plus separate scripts for downloading and parsing the results. The strategy code was also slightly modified so that it was possible to “save cards from a repeater,” which were completely random in the final.

There was also a stupid attempt to rewrite the class-to-class completely the entire engine, notreal2d, it killed 10 hours and everything was removed.

There was also an attempt to remake the searchator for a genetic algorithm, where a gene is one action with a duration. But something working could not be achieved, as well as to come up with an adequate operation of crossing.

A little more offensive, but in MyStrategy there was a crutch code for reversing, if the machine didn’t move for a long time, as well as for smartgai style, if for some reason the selector didn’t find any good trajectories at all. It was not possible to make an honest account of such situations in the recruiter.

Total

As a result, a very large amount of time was spent on the whole competition. Not less than 100 hours, or rather even 150 or more. Of course, not all of this is coding time, but the brain was boiling and working continuously, even in a dream. In general, I am satisfied with the result and I am very glad that I managed to snatch the prize. My previous participation in the contest was only in 2013 and was limited to 30th place.

Many thanks to the organizers - in my opinion, it was the most spectacular and possibly the most interesting contest of the past. The rivals were very strong, they had to “run as fast just to stay in place,” thanks to them too. Well, some thanks to Mr.Smile for previously laying out the "physics of motion" of the car, JustAMan for a convenient visualizer on sockets, Romka for interesting video news and to a colleague Angor for the brainstorms.

Source: https://habr.com/ru/post/273745/

All Articles