Background segmentation in the Intel RealSense SDK

This document describes how developers can use background segmentation (BGS) in the Intel RealSense SDK to create exciting new collaboration applications. It describes the expected behavior of such applications and their performance in different scenarios, and lists the limitations developers should keep in mind when delivering products to customers. The primary audience for this article is development teams using BGS and OEMs.

The title image shows Cyberlink YouCam RX, an application that uses BGS.

Usage scenarios

Background segmentation (BGS) is an important distinguishing feature of the Intel RealSense camera for collaboration and content creation applications. The ability to separate the user from the background in real time, without special equipment or post-processing, is a compelling addition to existing teleconferencing applications.


There is great potential to enhance existing applications or create new ones with BGS technology. For example, during a video chat session, consumers can watch shared content on YouTube* with friends through another program. Colleagues in a virtual meeting can see each other's images superimposed on a shared workspace. Developers can integrate BGS to create new usage scenarios, such as replacing the background with an image or a video in applications that use a camera or screen sharing. The images above and below show applications that use an Intel RealSense camera. Developers can also invent other scenarios, such as taking a selfie with a changed background, using collaboration tools (browsers, office applications) to share and edit content together, or creating karaoke videos with different backgrounds.

Personify application using BGS

Creating a sample application with background segmentation

Requirements

- Platform with an Intel Core processor and an enabled USB 3.0 root port

- Memory: 4 GB

- Intel RealSense F200 camera

- Intel RealSense SDK

- Intel Depth Camera Manager software, or a system on which the OEM has already installed this component

- Microsoft Windows* 8.1, 64-bit

- Microsoft Visual Studio* 2010–2013 with the latest service pack

In this article, we explain how developers can replace the background with a video or another image in a sample application. We also provide a code snippet for blending the output image with any background image and discuss the impact on performance.

The current implementation of background segmentation supports the YUY2 and RGB formats. Supported resolutions range from 360p to 720p for the color image; the depth image is 480p.

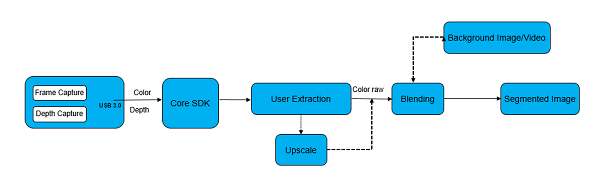

The figure below shows a high-level view of the BGS pipeline. The Intel RealSense camera captures depth and color frames and passes them to the core SDK (that is, the Intel RealSense SDK runtime). At the application's request, the frames are delivered to the User Extraction block, which produces a segmented image in RGBA format. You can alpha-blend this image with any other RGB image to produce the final output with the replaced background. Developers can use any mechanism to blend the images on screen, but the best performance is achieved on the GPU.

BGS pipeline
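The alpha-blending step in this pipeline can be illustrated with a plain CPU implementation of the standard "over" operation. This is a minimal sketch, not SDK code: the function name `BlendOverBackground` is hypothetical, and it assumes the segmented image has already been copied into a tightly packed buffer of 8-bit pixels in R, G, B, A order with straight (non-premultiplied) alpha, and that the background is a packed 8-bit RGB buffer of the same dimensions. If your buffer stores pixels as B, G, R, A, swap the channel offsets accordingly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical CPU sketch of "over" compositing: out = a * fg + (1 - a) * bg, per channel.
// Assumes tightly packed 8-bit buffers: fgRgba has 4 bytes per pixel (R, G, B, A with
// straight alpha) and bgRgb has 3 bytes per pixel (R, G, B). The result is packed RGB.
std::vector<std::uint8_t> BlendOverBackground(const std::vector<std::uint8_t>& fgRgba,
                                              const std::vector<std::uint8_t>& bgRgb,
                                              std::size_t pixelCount) {
    assert(fgRgba.size() == pixelCount * 4);
    assert(bgRgb.size() == pixelCount * 3);

    std::vector<std::uint8_t> out(pixelCount * 3);
    for (std::size_t i = 0; i < pixelCount; ++i) {
        // Alpha 0 keeps only the background, 255 keeps only the segmented foreground.
        const unsigned a = fgRgba[i * 4 + 3];
        for (std::size_t c = 0; c < 3; ++c) {
            const unsigned fg = fgRgba[i * 4 + c];
            const unsigned bg = bgRgb[i * 3 + c];
            // Integer blend with rounding; the result always fits in 0..255.
            out[i * 3 + c] = static_cast<std::uint8_t>((fg * a + bg * (255 - a) + 127) / 255);
        }
    }
    return out;
}
```

Even with integer arithmetic, this loop touches every pixel of every frame on the CPU; a GPU shader performs the same per-pixel computation in parallel, which is why blending on the GPU is recommended.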

The following steps explain how to integrate 3D segmentation into your application.

1. Install the following Intel RealSense SDK components.

- Core Intel RealSense SDK Runtime

- Background segmentation module

2. Use the web installer or the standalone installer to install only the core and the individual components you need. The runtime can be installed only in UAC (elevated) mode.

```
intel_rs_sdk_runtime_websetup_x.xxxxxxxx --silent --no-progress --accept-license=yes --finstall=core,personify --fnone=all
```

You can determine which runtime is installed on the system using the following Intel RealSense SDK API:

```cpp
// session is a PXCSession instance
PXCSession::ImplVersion sdk_version = session->QueryVersion();
```

3. Create a sense manager instance to use the 3D camera. This creates the pipeline in which any 3D algorithm runs.

```cpp
PXCSenseManager* pSenseManager = PXCSenseManager::CreateInstance();
```

4. Enable the required middleware module. Enable only the modules your application actually needs.

```cpp
pxcStatus result = pSenseManager->Enable3DSeg();
```

5. Select the capture profile your application needs. Higher resolutions and frame rates increase the processing load. Pass the chosen profile to the sense manager to get the right streams from the camera.

```cpp
PXC3DSeg* pSeg = pSenseManager->Query3DSeg();
PXCVideoModule::DataDesc VideoProfile = {};  // receives the stream description of the profile
pxcI32 profile = 0;                          // index of the capture profile to query
pSeg->QueryInstance<PXCVideoModule>()->QueryCaptureProfile(profile, &VideoProfile);
pSenseManager->EnableStreams(&VideoProfile);
```

6. Initialize the camera pipeline and pass the first frame to the middleware. This step is required for every middleware module; the pipeline cannot run without it.

```cpp
result = pSenseManager->Init();
```

7. Acquire the segmented camera image. The middleware outputs it in RGBA format, and it contains only the segmented (foreground) part.

```cpp
PXCImage* segmented_image = pSeg->AcquireSegmentedImage(...);
```

8. Blend the segmented image with your own background.

Note: Blending significantly affects performance when it is done on the CPU rather than the GPU. The sample application blends on the CPU.

- You can use any technique to blend the segmented RGBA image with another bitmap image.

- When you blend on the GPU instead of the CPU, you can use zero-copy access to the image data in system memory.

- You can use Direct3D* or OpenGL* for blending.

Here is a code snippet that transfers the segmented image to system memory, where srcData is of type pxcBYTE*.

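```cpp
PXCImage::ImageData segmented_image_data;
segmented_image->AcquireAccess(PXCImage::ACCESS_READ, PXCImage::PIXEL_FORMAT_RGB32, &segmented_image_data);
pxcBYTE* srcData = segmented_image_data.planes[0] + 0 * segmented_image_data.pitches[0];  // row 0 of plane 0
// ... blend using srcData, then release the image data:
segmented_image->ReleaseAccess(&segmented_image_data);
```

Blending and rendering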

- Capture: read the color and depth streams from the camera.

- Segmentation: classify pixels as background or foreground.

- Copy the color and segmented images (depth masks) into textures.

- Resize the segmented image (depth mask) to the resolution of the color image.

- (Optional) Load or update the background image in a texture (when the background is replaced).

- Compile or load the shader.

- Set the color, depth and (optionally) background textures as shader inputs.

- Run the shader and display the result.

- (In video conferencing applications) Copy the blended image to an NV12 or YUY2 surface.

- (In video conferencing applications) Pass the surface to the Intel Media SDK H.264 hardware encoder.
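Two of the CPU-side steps in this list, resizing the mask and preparing an NV12 frame for the encoder, can be sketched in plain C++. This is an illustrative sketch, not SDK or Intel Media SDK code: `ResizeMaskNearest` and `RgbToNv12` are hypothetical names, the mask is assumed to be one byte per pixel, the blended image is assumed to be packed 8-bit RGB with even width and height, and the conversion uses the common integer BT.601 limited-range coefficients. The resize ignores any depth-to-color registration and simply maps pixel coordinates proportionally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Nearest-neighbor resize of a single-channel mask to the color resolution.
std::vector<std::uint8_t> ResizeMaskNearest(const std::vector<std::uint8_t>& src,
                                            int srcW, int srcH, int dstW, int dstH) {
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(dstW) * dstH);
    for (int y = 0; y < dstH; ++y) {
        const int sy = y * srcH / dstH;  // source row for this destination row
        for (int x = 0; x < dstW; ++x) {
            const int sx = x * srcW / dstW;  // source column for this destination column
            dst[static_cast<std::size_t>(y) * dstW + x] = src[static_cast<std::size_t>(sy) * srcW + sx];
        }
    }
    return dst;
}

// Packed RGB to NV12 (BT.601, limited range): a full-resolution Y plane followed by
// one interleaved U/V plane at half resolution in each direction.
std::vector<std::uint8_t> RgbToNv12(const std::vector<std::uint8_t>& rgb, int w, int h) {
    assert(w > 0 && h > 0 && w % 2 == 0 && h % 2 == 0);
    assert(rgb.size() == static_cast<std::size_t>(w) * h * 3);
    const std::size_t ySize = static_cast<std::size_t>(w) * h;
    std::vector<std::uint8_t> nv12(ySize + ySize / 2);

    // Luma for every pixel; the +16 offset is folded into the constant (16 * 256 + 128).
    for (std::size_t i = 0; i < ySize; ++i) {
        const int r = rgb[i * 3], g = rgb[i * 3 + 1], b = rgb[i * 3 + 2];
        nv12[i] = static_cast<std::uint8_t>((66 * r + 129 * g + 25 * b + 4224) >> 8);
    }
    // Chroma from the average color of each 2x2 block. The +128 offset is folded into
    // the constant (128 * 256 + 128), so every shifted value is non-negative.
    std::uint8_t* uv = nv12.data() + ySize;
    for (int y = 0; y < h; y += 2) {
        for (int x = 0; x < w; x += 2) {
            int r = 0, g = 0, b = 0;
            for (int dy = 0; dy < 2; ++dy) {
                for (int dx = 0; dx < 2; ++dx) {
                    const std::size_t p = (static_cast<std::size_t>(y + dy) * w + (x + dx)) * 3;
                    r += rgb[p];
                    g += rgb[p + 1];
                    b += rgb[p + 2];
                }
            }
            r /= 4;
            g /= 4;
            b /= 4;
            const std::size_t o = static_cast<std::size_t>(y / 2) * w + x;  // U/V pair offset
            uv[o] = static_cast<std::uint8_t>((-38 * r - 74 * g + 112 * b + 32896) >> 8);     // U (Cb)
            uv[o + 1] = static_cast<std::uint8_t>((112 * r - 94 * g - 18 * b + 32896) >> 8);  // V (Cr)
        }
    }
    return nv12;
}
```

In a GPU implementation, the shader performs the resize implicitly through texture sampling, and the NV12 data would then be copied into the encoder's input surface.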

Performance

The following factors affect application performance:

- Frame rate

- Blending

- Resolution
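Because resolution and frame rate together determine how many pixels the pipeline must process, one way to act on the advice in step 5 is to pick the cheapest capture profile that still meets the application's needs. The sketch below is illustrative only: `CaptureProfile`, `PixelRate` and `ChooseCheapestProfile` are hypothetical names, not SDK types, and pixel rate is used as a rough proxy for load rather than a measured cost model.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical capture profile description (not an SDK type).
struct CaptureProfile {
    int width;
    int height;
    int fps;
};

// Pixels per second the pipeline must handle: a rough proxy for processing load.
std::int64_t PixelRate(const CaptureProfile& p) {
    return static_cast<std::int64_t>(p.width) * p.height * p.fps;
}

// Returns the index of the candidate with the lowest pixel rate that still meets the
// minimum height and frame rate, or -1 if no candidate qualifies.
int ChooseCheapestProfile(const std::vector<CaptureProfile>& candidates, int minHeight, int minFps) {
    assert(minHeight > 0 && minFps > 0);
    int best = -1;
    for (int i = 0; i < static_cast<int>(candidates.size()); ++i) {
        const CaptureProfile& p = candidates[i];
        if (p.height < minHeight || p.fps < minFps) continue;  // does not meet requirements
        if (best < 0 || PixelRate(p) < PixelRate(candidates[best])) best = i;
    }
    return best;
}
```

For the three configurations in the table that follows, the pixel rates are in the ratio 4 : 1 : 2 (720p at 30 fps : 360p at 30 fps : 720p at 15 fps), while the measured loads without rendering are 29.20%, 15.39% and 17.93%. Pixel rate therefore predicts the ordering of cost on that system but not its magnitude, so measure on your own hardware.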

The table below shows CPU utilization on a 5th generation Intel Core i5 processor.

| Resolution, frame rate | No rendering | CPU rendering | GPU rendering |
|---|---|---|---|
| 720p, 30 fps | 29.20% | 43.49% | 31.92% |
| 360p, 30 fps | 15.39% | 25.29% | 16.12% |
| 720p, 15 fps | 17.93% | 28.29% | 18.29% |

To measure the effect of rendering on your own computer, run the sample application with the -noRender parameter, then run it again without this parameter and compare the CPU load.
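Comparing the two runs reduces to a subtraction: the rendering overhead is the load with rendering minus the load with -noRender. The following sketch applies that to one configuration; `LoadSample`, `RenderingOverhead` and `ComputeOverhead` are hypothetical names, and the input percentages are whatever your own measurement reports.

```cpp
#include <cassert>

// CPU load for one configuration, in percent (hypothetical structure).
struct LoadSample {
    double noRender;   // sample run with -noRender
    double cpuRender;  // blending and rendering on the CPU
    double gpuRender;  // blending and rendering on the GPU
};

// Extra load, in percentage points, attributable to each rendering path.
struct RenderingOverhead {
    double cpu;
    double gpu;
};

RenderingOverhead ComputeOverhead(const LoadSample& s) {
    assert(s.noRender >= 0.0 && s.cpuRender >= 0.0 && s.gpuRender >= 0.0);
    return RenderingOverhead{s.cpuRender - s.noRender, s.gpuRender - s.noRender};
}
```

Applied to the table above, CPU rendering adds 14.29, 9.90 and 10.36 percentage points for 720p at 30 fps, 360p at 30 fps and 720p at 15 fps respectively, while GPU rendering adds only 2.72, 0.73 and 0.36 points.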

BGS technology limitations

User segmentation is still evolving, and with each new version of the SDK, the quality improves.

Keep the following in mind when assessing quality:

- Avoid clothing or objects on the body that are the same color as the background, for example a black T-shirt against a black background.

- Very bright lighting on the head can degrade the segmentation of the hair.

- The system may not work properly if the user is lying on a bed or a couch. For video conferencing, a sitting position works better.

- Transparent and translucent objects (such as a glass cup) are not segmented correctly.

- Accurate hand tracking is difficult, so quality may be unstable.

- Bangs covering the forehead can cause segmentation problems.

- Do not move your head too fast; camera limitations affect quality.

Feedback on Intel BGS technology

What is the best way to help improve the software? Provide feedback. Reproducing a test scenario in the same environment can be difficult when a developer wants to repeat testing with a new release of the Intel RealSense SDK.

To reduce variation between runs, we recommend recording the input sequences used to reproduce problems, so that you can replay them with each new release and check whether quality has improved.

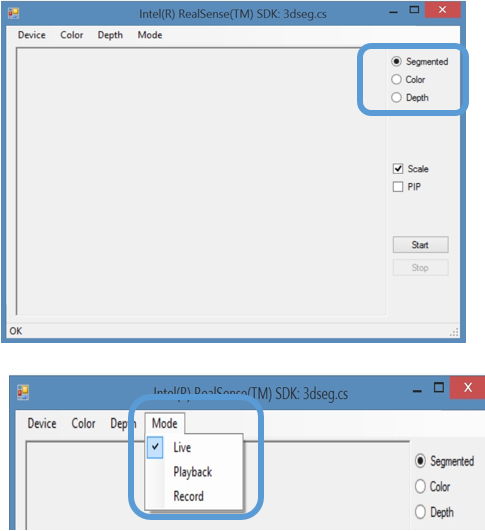

The Intel RealSense SDK comes with a sample application that helps collect sequences for playback.

- Useful for providing feedback on quality.

- Not intended for performance analysis.

With a default installation, the sample application is located at C:\Program Files (x86)\Intel\RSSDK\bin\win32\FF_3DSeg.cs.exe. Launch the application and follow the steps shown in the screenshots below.

You will see yourself on the screen with the background removed.

Recording and playing back sequences

Select Record mode to save a copy of your session. You can then open FF_3DSeg.cs.exe again and select Playback mode to view the recording.

Conclusion

The Intel RealSense background segmentation middleware module gives users interesting new capabilities. New usage models include replacing the background with a video or another image and taking selfies with a segmented image.

Reference materials

Source: https://habr.com/ru/post/273663/
