Accidents are not accidental?

annotation

The article is devoted to the systematization of the basic provisions on random and pseudo-random sequences (SP and SRP) numbers. A brief review of known approaches to testing the randomness of generated sequences is given. The applied significance of this topic is determined by the fact that the memory bandwidth is widely used in cryptographic information security systems for generating key and auxiliary information (random numbers, initialization vectors, etc.).

Introduction

Since ancient times, not only mathematics has been studying the randomness. Accident has become the subject of research in many mathematical disciplines - in statistics, in game theory, the theory of fuzzy sets, in physics and chemistry - in studying the properties of the microworld, in economics - in studying the influence of random factors on the origin and development of economic crises, on the trajectories of world currencies in history and sociology - in the study of the value of the factor of chance on the development of historical events and the change of epochs. The development of science in the 20th century showed that the chain of random, truly unpredictable events leads the researcher not to chaos, but to very tough logical conclusions of a global nature. This thesis can be easily illustrated with the help of the central limit theorem (and other theorems) of probability theory. It should be noted feature of systems of random objects, which may seem in a certain sense paradoxical. More often it is possible to describe, as a last resort, global predictions, rather than local laws of large systems. This defines two unconditionally demanded modern research directions: the search for global patterns of random systems and the rationale for a random system of the unpredictability of local events. Random sequences of numbers or elements of other sets are effectively used for experimental study of the properties of complex objects and systems.

')

Random sequences

SPs play an important role in cryptography, they are used to form key and initial parameters of cryptographic algorithms, as well as sequences of encryption substitutions in stream cryptosystems. Randomness and cryptography are closely interrelated: the main purpose of cryptographic systems is to convert non-random meaningful plaintext into a seemingly random sequence of ciphertext characters. This property of cryptosystems is used to generate PSP.

The best cryptographic properties of a cryptosystem are achieved by using the so-called ideal random sequences (ICP), whose mathematical model is represented by the implementation of a sequence of independent random variables with a uniform probability distribution on a given finite alphabet. Such sequences are also called uniformly distributed (on some finite set) random sequences (RRSP). Many studies have been devoted to the description of the properties of the RSTD, in particular, [1], [4], [5] and [7].

Approaches to the definition of the term "randomness"

There are various approaches to the formal definition of the term “randomness”, based on the concepts of computability and algorithmic complexity [2]. Historically, the first approach, the frequency one , was proposed by von Mises at the beginning of the 20th century and was developed by Church, Kolmogorov and Loveland. The idea of the frequency approach is that the frequency of occurrence of elements should be stable in the SP. For example, signs 0 and 1 must occur independently and with equal probabilities not only in the binary SP itself, but also in any of its subsequences chosen independently of the initial generation conditions.

Another approach, a complexity approach, proposed independently by Kolmogorov and Chaitin, is based on the fact that any description of the implementation of a joint venture cannot be significantly shorter than this implementation itself. That is, the joint venture must have a complex structure, and the entropy of its initial elements must be large. With this approach, it is shown that the sequence is random if its algorithmic complexity is close to the length of the sequence.

The third, quantitative , approach is developed by Martin-Lof. It consists in dividing the probabilistic space of sequences into nonrandom and random (relatively few of the latter). Sequences in which any patterns are observed are considered non-random. A sequence is considered random if it passes a set of specific tests designed to identify patterns. However, if you require that the sequence passes any statistical test, it turns out that the SP does not exist at all. In practice, a certain set of tests is used, where the proportion of SPs that do not satisfy them tends to 0 with an unlimited increase in the length of the sequences.

In recent decades, due to the requirements arising in cryptographic applications, a cryptographic approach has been formed to determine the SP, where the sequence is considered random if the computational complexity of finding patterns is not less than a given value. This approach is consistent with the existing systems approach to determining the strength of cryptographic systems.

These approaches to the definition of chance have disadvantages. The frequency approach is complicated in practice by the uncertainty of the rules for choosing subsequences, the complexity approach implies the need to reduce the redundancy of the programming language. The use of the quantitative approach is difficult to determine the set of tests applied to the sequences under study. Lower estimates of the complexity of the cryptographic approach are focused on a set of well-known tests, and it may eventually increase, which leads to a re-evaluation of complexity. At the same time, for cryptographic applications, a cryptographic approach that takes into account the statistical properties of sequences and their area of application is most appropriate.

PSP testing

It is proved (Herring, C., Palmore, JI Random Number Generators are Chaotic, 1995) that any algorithmic implementation of a sequence is imperfect, that is, a truly random sequence cannot be calculated by some rule and, therefore, it must be non-periodic infinite sequence. Sequences that are generated by a particular rule that mimic RRSP are called pseudo-random sequences (PSP). Successful imitation is understood as the closeness of a number of characteristics generated by the memory bandwidth to similar characteristics of ICP.

The generation of the SRP used in cryptographic applications is based on the implementation of multiple iterations of a certain transformation of a finite set X (often X is a set of binary n-dimensional vectors). This output sequence is periodic. However, the length of the period can be quite large, it is guaranteed, in particular, the use of linear shift registers with primitive characteristic polynomials. For many PSP generators, good statistical properties and high linear complexity, a long period, have been proved, but it is often not possible to prove the analytical and statistical properties. Then, statistical tests are used to justify many properties.

The variety of criteria for evaluating PSP is extremely large. Each of the approaches to sequence analysis can be attributed to one of two groups.

The first group is related to the search for patterns that allow reproducing a sequence along its segment of small length. At the same time, the main requirements for the sequence are reduced to the absence of relatively simple inter-character dependencies.

The second group of criteria is related to the evaluation of the statistical properties of a sequence: is there any frequency imbalance in the studied sequence that allows the analyst to predict the next (previous) sign from a known segment of the sequence, that is, to assume the value of the next (previous) bit with a probability greater than with a random choosing? The basic requirements for the CBS for the second group of criteria are to ensure that a number of its statistical properties correspond to the properties of the ICP, in particular, the frequency of occurrence of individual characters and the s-gram should be evenly distributed for sufficiently large values of s.

In the systems approach, well-known tests are used to analyze sequences and new tests are developed taking into account the characteristics of the object being analyzed. If, in the course of analysis, a new weakness is detected in a certain class of sequences, then a corresponding new test is developed, which supplements the used set of known tests.

Both groups of sequence analysis methods constitute a systematic approach to the analysis of the CBS, in particular, to the development of stream ciphers.

One of the first formulations of the fundamental requirements for the statistical properties of periodic binary SRP was given by S. Golomb. These requirements for sequences are known in cryptography as Golomb postulates (Golomb SW Shift Register Sequences, 1967). Golomb postulates are given, in particular, in the monograph [7]. The postulates proceed from the condition of preserving the positive statistical properties inherent in linear recurrent sequences with a maximum period length.

Golomb rules do not guarantee high quality bandwidth and are rather necessary. In practice, the use of the Golomb postulates for assessing the quality of the memory bandwidth is difficult, because of the complexity of the theoretical calculation of the length of the memory bandwidth, the s – gram frequency on the period, and the values of the autocorrelation function. Calculation on a computer is possible only for the SRP with a short period, which limits the use of Golomb's postulates in cryptographic applications.

The purpose and objectives of the statistical testing of PSP

Testing a random memory bandwidth test is a way to identify its specific properties by comparing the characteristics of the memory bandwidth with similar characteristics of the ICP.

With the help of statistical testing, the following problems are solved:

1) evaluation of the properties of the output sequence of the generator CAP in terms of use in a cryptographic algorithm (for example, as a secret key);

2) assessment of the quality of cryptographic primitives (hash functions, block and stream ciphers) by their output sequences, from which indistinguishability from ICP is required, in particular, testing of such cryptographic properties, such as the avalanche effect of changing the output of algorithms for distortions, correlation of intermediate and output sequences;

3) identification of PSP generators, issuing "non-random" sequences; development of new generators PSP; verification of the correctness of the implementation of generators PSP.

The essence of testing usually comes down to testing the so-called “null hypothesis” H0 with respect to the studied sequence x (k) = (x_1, ..., x_k) of length k, according to which x is obtained on the basis of k independent tests of a probability scheme with a uniform distribution.

In general, the statistical test T is a two-digit function.

,

,

where A * is the set of sequences in the alphabet A. The statistical test T divides the set V (k) of sequences of length k into the set V (k, 0) of “non-random” sequences (as a rule, relatively small) and the set V (k, 1) = V (k) / V (k, 0) of random sequences. The probability P that the test rejects the randomly selected binary sequence x (k) is V (k, 0) / 2 ^ k. As a rule, in real tests P does not exceed 0.01.

The complexity of the exact calculation of the function T is great due to the bulkiness of the domain of definition. Therefore, statistical testing for acceptance / rejection of the H0 hypothesis is performed using the f_T statistic, that is, a relatively simple computable function mapping the set of sequences into a set of real numbers, and the probability distribution of statistics for the H0 hypothesis, determined by probability-theoretic methods. The statistical test is a set of statistics calculated from the source data, and the decision rule, according to which the H0 hypothesis is determined by the statistical value.

The statistical test is probabilistic, that is, errors of two kinds occur, which are important characteristics of the test:

1) error a of the first kind, if the sequence is random, but H0 is rejected;

2) error b of the second kind, if the sequence is not random, but H0 is accepted.

The decision to pass a statistical test sequence is made on the basis of various criteria [7].

Based on the threshold . The approach is based on calculating for the given sequence x (k) statistics of the test f_T (x (k)) and its subsequent comparison with a certain threshold value c (k). According to the criterion, the binary sequence x (k) does not pass the statistical test if f_T (x (k)) <c (k). Such an approach gives erroneous decisions relatively often and is considered not entirely reliable.

Based on a fixed confidence interval . With this approach, the sequence x (k) does not pass the statistical test if f_T (x (k)) is outside the limits of the confidence interval of the statistics values calculated for a given level of significance a. At lower levels of significance, the width of the confidence interval is larger. This criterion is more reliable than the first.

Based on p-value calculation . The third class of criteria is based on the calculation of the characteristics of the test from the [0,1] segment, called the p-value (p-value). Statistics test, considered as a random variable with a known distribution law, is constructed so that large values indicate a certain defect in the randomness of the sequence. The probability value of p-value is the probability that test statistics will take on a value greater than that observed with experience assuming randomness of the sequence. That is, small values of p-value correspond to non-randomness of the sequence. Decision rule: at the significance level a, the sequence x (k) does not pass the statistical test if p-value <a. Values of a should be taken from the interval [10 ^ (- 3); 10 ^ (- 2)]. The advantage of this approach in comparison with the previous ones is that the once calculated probability of the p-value is compared with any chosen level of significance without additional calculations.

Thus, the main stages of PSP testing are as follows:

1. formulation of the hypothesis H0 randomness of the sequence;

2. setting the level of significance a (probability of error of the 1st kind), usually a is taken from the interval [10 ^ (- 3); 10 ^ (- 2)];

3. calculating the value of the statistics f_T (x (k)) for the studied sequence x (k);

4. calculation of p-value from the interval (0; 1) according to the formula, depending on the specific test;

5. p-value comparison with a value: if p-value> a, then the test is passed.

Existing tools for statistical PSP testing

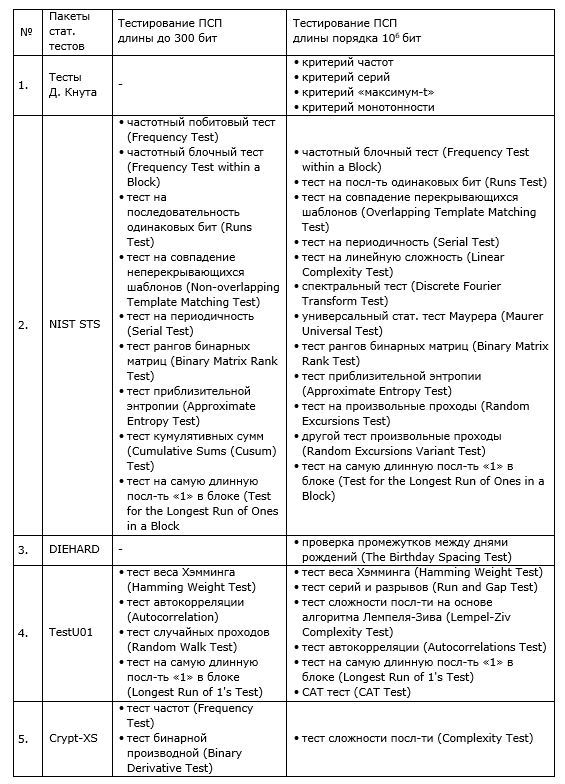

To identify patterns for the analyzed SRP (as well as to their segments of different lengths) a wide range of different statistical tests are applied. In recent decades, a large number of tests have been developed for PSP analysis. We give the well-known sets of statistical tests:

1. 11 tests: Donald Knuth (Stanford University), The Art Of Computer Programming Vol. 2 Seminumerical Algorithms, www-cs-faculty.stanford.edu/~uno/taocp.html

2. 15 tests: Andrew Rukhin, et. al. (NIST ITL), NIST Statistical Test Suite, csrc.nist.gov/groups/ST/toolkit/rng/documentation_software.html

3. 12 tests: George Marsaglia (Florida State University), DIEHARD, stat.fsu.edu/pub/diehard

4. 11 tests: Pierre L'Ecuyer, Richard Simard (Departement d'Informatique et de Recherche Operationnelle Universite de Montreal), TestU01, www.iro.umontreal.ca/~simardr/testu01/tu01.html

5. 5 tests: Helen Gustafson, et. al. (Queensland University of Technology), Crypt-XS, commercial version, www.qut.edu.au/institute-for-future-environments

There are other descriptions and implementations of statistical tests, largely repeating tests from the sets presented above:

1. Alfred Menezes et al., Handbook of Applied Cryptography

2. Peter Hellekalek (University of Salzburg), The pLab Project, random.mat.sbg.ac.at

3. John Walker (Autodesk, Inc.), ENT, www.fourmilab.ch/random

4. Robert G. Brown (Duke University), Dieharder, www.phy.duke.edu/~rgb/General/dieharder.php

5. George Marsaglia (Florida State University), Wai Wan Tsang (The University of Hong Kong), “Distilled” version of Diehard, www.jstatsoft.org/v07/i03

Despite a considerable number of existing implementations of statistical tests of the SRP, this direction is constantly evolving, and now new projects are actively emerging that offer new implementations of the tests examined, including in distributed computing systems.

The considered sets of statistical tests PSP constitute a convenient and flexible tool for studying PSP generators used in cryptographic applications.

The NIST STS package has greater flexibility, extensibility and efficiency (in terms of time spent on testing) and is the most comprehensive package available for statistical testing of binary sequences (for more details, see [5], [8], [9]) . Tests of the NIST STS package are discussed in detail in the article “Statistical testing of random numbers using NIST methods” habrahabr.ru/company/securitycode/blog/237695 [3]. Based on the NIST STS package, techniques for more complete statistical and structural analysis of sequences can be built, given that for a reliable assessment of the quality of the memory bandwidth generated by the generator, it is advisable to carry out not one but several tests.

. [8].

. , , . -, -. , -.

1 , .

1.

, .

1. .. . Tutorial. : - , 2005. – 116 .

2. .. . , , 2008. – 182 .

3. : NIST. – habrahabr.ru/company/securitycode/blog/237695

4. .., .., .. . . , .: , 2000. – 272 .

5. .., .., .. // . – www.tusur.ru/filearchive/reports-magazine/2012-25-2/108.pdf

6. .., .., .. NIST STS.

7. .. , .: -, 2010. – 424.

8. Rukhin, A., Soto, J., Nechvatal, J., Smid, M., Barker, E., Leigh, S., Levenson, M., Van- gel, M., Banks, D., Heckert, A., Dray, J., Vo, S. A Statistical Test Suite for Random and Pseudorandom Number Generators for Cryptographic Applications. – www.csrc.nist.gov/publications/nistpubs/800-22/sp-800-22-051501.pdf .

9. Soto Juan, Statistical Testing of Random Number Generators, National Institute of Standards & Technology. – csrc.nist.gov/groups/ST/toolkit/rng/documents/nissc-paper.pdf

The article is devoted to the systematization of the basic provisions on random and pseudo-random sequences (SP and SRP) numbers. A brief review of known approaches to testing the randomness of generated sequences is given. The applied significance of this topic is determined by the fact that the memory bandwidth is widely used in cryptographic information security systems for generating key and auxiliary information (random numbers, initialization vectors, etc.).

Introduction

Since ancient times, not only mathematics has been studying the randomness. Accident has become the subject of research in many mathematical disciplines - in statistics, in game theory, the theory of fuzzy sets, in physics and chemistry - in studying the properties of the microworld, in economics - in studying the influence of random factors on the origin and development of economic crises, on the trajectories of world currencies in history and sociology - in the study of the value of the factor of chance on the development of historical events and the change of epochs. The development of science in the 20th century showed that the chain of random, truly unpredictable events leads the researcher not to chaos, but to very tough logical conclusions of a global nature. This thesis can be easily illustrated with the help of the central limit theorem (and other theorems) of probability theory. It should be noted feature of systems of random objects, which may seem in a certain sense paradoxical. More often it is possible to describe, as a last resort, global predictions, rather than local laws of large systems. This defines two unconditionally demanded modern research directions: the search for global patterns of random systems and the rationale for a random system of the unpredictability of local events. Random sequences of numbers or elements of other sets are effectively used for experimental study of the properties of complex objects and systems.

')

Random sequences

SPs play an important role in cryptography, they are used to form key and initial parameters of cryptographic algorithms, as well as sequences of encryption substitutions in stream cryptosystems. Randomness and cryptography are closely interrelated: the main purpose of cryptographic systems is to convert non-random meaningful plaintext into a seemingly random sequence of ciphertext characters. This property of cryptosystems is used to generate PSP.

The best cryptographic properties of a cryptosystem are achieved by using the so-called ideal random sequences (ICP), whose mathematical model is represented by the implementation of a sequence of independent random variables with a uniform probability distribution on a given finite alphabet. Such sequences are also called uniformly distributed (on some finite set) random sequences (RRSP). Many studies have been devoted to the description of the properties of the RSTD, in particular, [1], [4], [5] and [7].

Approaches to the definition of the term "randomness"

There are various approaches to the formal definition of the term “randomness”, based on the concepts of computability and algorithmic complexity [2]. Historically, the first approach, the frequency one , was proposed by von Mises at the beginning of the 20th century and was developed by Church, Kolmogorov and Loveland. The idea of the frequency approach is that the frequency of occurrence of elements should be stable in the SP. For example, signs 0 and 1 must occur independently and with equal probabilities not only in the binary SP itself, but also in any of its subsequences chosen independently of the initial generation conditions.

Another approach, a complexity approach, proposed independently by Kolmogorov and Chaitin, is based on the fact that any description of the implementation of a joint venture cannot be significantly shorter than this implementation itself. That is, the joint venture must have a complex structure, and the entropy of its initial elements must be large. With this approach, it is shown that the sequence is random if its algorithmic complexity is close to the length of the sequence.

The third, quantitative , approach is developed by Martin-Lof. It consists in dividing the probabilistic space of sequences into nonrandom and random (relatively few of the latter). Sequences in which any patterns are observed are considered non-random. A sequence is considered random if it passes a set of specific tests designed to identify patterns. However, if you require that the sequence passes any statistical test, it turns out that the SP does not exist at all. In practice, a certain set of tests is used, where the proportion of SPs that do not satisfy them tends to 0 with an unlimited increase in the length of the sequences.

In recent decades, due to the requirements arising in cryptographic applications, a cryptographic approach has been formed to determine the SP, where the sequence is considered random if the computational complexity of finding patterns is not less than a given value. This approach is consistent with the existing systems approach to determining the strength of cryptographic systems.

These approaches to the definition of chance have disadvantages. The frequency approach is complicated in practice by the uncertainty of the rules for choosing subsequences, the complexity approach implies the need to reduce the redundancy of the programming language. The use of the quantitative approach is difficult to determine the set of tests applied to the sequences under study. Lower estimates of the complexity of the cryptographic approach are focused on a set of well-known tests, and it may eventually increase, which leads to a re-evaluation of complexity. At the same time, for cryptographic applications, a cryptographic approach that takes into account the statistical properties of sequences and their area of application is most appropriate.

PSP testing

It is proved (Herring, C., Palmore, JI Random Number Generators are Chaotic, 1995) that any algorithmic implementation of a sequence is imperfect, that is, a truly random sequence cannot be calculated by some rule and, therefore, it must be non-periodic infinite sequence. Sequences that are generated by a particular rule that mimic RRSP are called pseudo-random sequences (PSP). Successful imitation is understood as the closeness of a number of characteristics generated by the memory bandwidth to similar characteristics of ICP.

The generation of the SRP used in cryptographic applications is based on the implementation of multiple iterations of a certain transformation of a finite set X (often X is a set of binary n-dimensional vectors). This output sequence is periodic. However, the length of the period can be quite large, it is guaranteed, in particular, the use of linear shift registers with primitive characteristic polynomials. For many PSP generators, good statistical properties and high linear complexity, a long period, have been proved, but it is often not possible to prove the analytical and statistical properties. Then, statistical tests are used to justify many properties.

The variety of criteria for evaluating PSP is extremely large. Each of the approaches to sequence analysis can be attributed to one of two groups.

The first group is related to the search for patterns that allow reproducing a sequence along its segment of small length. At the same time, the main requirements for the sequence are reduced to the absence of relatively simple inter-character dependencies.

The second group of criteria is related to the evaluation of the statistical properties of a sequence: is there any frequency imbalance in the studied sequence that allows the analyst to predict the next (previous) sign from a known segment of the sequence, that is, to assume the value of the next (previous) bit with a probability greater than with a random choosing? The basic requirements for the CBS for the second group of criteria are to ensure that a number of its statistical properties correspond to the properties of the ICP, in particular, the frequency of occurrence of individual characters and the s-gram should be evenly distributed for sufficiently large values of s.

In the systems approach, well-known tests are used to analyze sequences and new tests are developed taking into account the characteristics of the object being analyzed. If, in the course of analysis, a new weakness is detected in a certain class of sequences, then a corresponding new test is developed, which supplements the used set of known tests.

Both groups of sequence analysis methods constitute a systematic approach to the analysis of the CBS, in particular, to the development of stream ciphers.

One of the first formulations of the fundamental requirements for the statistical properties of periodic binary SRP was given by S. Golomb. These requirements for sequences are known in cryptography as Golomb postulates (Golomb SW Shift Register Sequences, 1967). Golomb postulates are given, in particular, in the monograph [7]. The postulates proceed from the condition of preserving the positive statistical properties inherent in linear recurrent sequences with a maximum period length.

Golomb rules do not guarantee high quality bandwidth and are rather necessary. In practice, the use of the Golomb postulates for assessing the quality of the memory bandwidth is difficult, because of the complexity of the theoretical calculation of the length of the memory bandwidth, the s – gram frequency on the period, and the values of the autocorrelation function. Calculation on a computer is possible only for the SRP with a short period, which limits the use of Golomb's postulates in cryptographic applications.

The purpose and objectives of the statistical testing of PSP

Testing a random memory bandwidth test is a way to identify its specific properties by comparing the characteristics of the memory bandwidth with similar characteristics of the ICP.

With the help of statistical testing, the following problems are solved:

1) evaluation of the properties of the output sequence of the generator CAP in terms of use in a cryptographic algorithm (for example, as a secret key);

2) assessment of the quality of cryptographic primitives (hash functions, block and stream ciphers) by their output sequences, from which indistinguishability from ICP is required, in particular, testing of such cryptographic properties, such as the avalanche effect of changing the output of algorithms for distortions, correlation of intermediate and output sequences;

3) identification of PSP generators, issuing "non-random" sequences; development of new generators PSP; verification of the correctness of the implementation of generators PSP.

The essence of testing usually comes down to testing the so-called “null hypothesis” H0 with respect to the studied sequence x (k) = (x_1, ..., x_k) of length k, according to which x is obtained on the basis of k independent tests of a probability scheme with a uniform distribution.

In general, the statistical test T is a two-digit function.

,

,where A * is the set of sequences in the alphabet A. The statistical test T divides the set V (k) of sequences of length k into the set V (k, 0) of “non-random” sequences (as a rule, relatively small) and the set V (k, 1) = V (k) / V (k, 0) of random sequences. The probability P that the test rejects the randomly selected binary sequence x (k) is V (k, 0) / 2 ^ k. As a rule, in real tests P does not exceed 0.01.

The complexity of the exact calculation of the function T is great due to the bulkiness of the domain of definition. Therefore, statistical testing for acceptance / rejection of the H0 hypothesis is performed using the f_T statistic, that is, a relatively simple computable function mapping the set of sequences into a set of real numbers, and the probability distribution of statistics for the H0 hypothesis, determined by probability-theoretic methods. The statistical test is a set of statistics calculated from the source data, and the decision rule, according to which the H0 hypothesis is determined by the statistical value.

The statistical test is probabilistic, that is, errors of two kinds occur, which are important characteristics of the test:

1) error a of the first kind, if the sequence is random, but H0 is rejected;

2) error b of the second kind, if the sequence is not random, but H0 is accepted.

The decision to pass a statistical test sequence is made on the basis of various criteria [7].

Based on the threshold . The approach is based on calculating for the given sequence x (k) statistics of the test f_T (x (k)) and its subsequent comparison with a certain threshold value c (k). According to the criterion, the binary sequence x (k) does not pass the statistical test if f_T (x (k)) <c (k). Such an approach gives erroneous decisions relatively often and is considered not entirely reliable.

Based on a fixed confidence interval . With this approach, the sequence x (k) does not pass the statistical test if f_T (x (k)) is outside the limits of the confidence interval of the statistics values calculated for a given level of significance a. At lower levels of significance, the width of the confidence interval is larger. This criterion is more reliable than the first.

Based on p-value calculation . The third class of criteria is based on the calculation of the characteristics of the test from the [0,1] segment, called the p-value (p-value). Statistics test, considered as a random variable with a known distribution law, is constructed so that large values indicate a certain defect in the randomness of the sequence. The probability value of p-value is the probability that test statistics will take on a value greater than that observed with experience assuming randomness of the sequence. That is, small values of p-value correspond to non-randomness of the sequence. Decision rule: at the significance level a, the sequence x (k) does not pass the statistical test if p-value <a. Values of a should be taken from the interval [10 ^ (- 3); 10 ^ (- 2)]. The advantage of this approach in comparison with the previous ones is that the once calculated probability of the p-value is compared with any chosen level of significance without additional calculations.

Thus, the main stages of PSP testing are as follows:

1. formulation of the hypothesis H0 randomness of the sequence;

2. setting the level of significance a (probability of error of the 1st kind), usually a is taken from the interval [10 ^ (- 3); 10 ^ (- 2)];

3. calculating the value of the statistics f_T (x (k)) for the studied sequence x (k);

4. calculation of p-value from the interval (0; 1) according to the formula, depending on the specific test;

5. p-value comparison with a value: if p-value> a, then the test is passed.

Existing tools for statistical PSP testing

To identify patterns for the analyzed SRP (as well as to their segments of different lengths) a wide range of different statistical tests are applied. In recent decades, a large number of tests have been developed for PSP analysis. We give the well-known sets of statistical tests:

1. 11 tests: Donald Knuth (Stanford University), The Art Of Computer Programming Vol. 2 Seminumerical Algorithms, www-cs-faculty.stanford.edu/~uno/taocp.html

2. 15 tests: Andrew Rukhin, et. al. (NIST ITL), NIST Statistical Test Suite, csrc.nist.gov/groups/ST/toolkit/rng/documentation_software.html

3. 12 tests: George Marsaglia (Florida State University), DIEHARD, stat.fsu.edu/pub/diehard

4. 11 tests: Pierre L'Ecuyer, Richard Simard (Departement d'Informatique et de Recherche Operationnelle Universite de Montreal), TestU01, www.iro.umontreal.ca/~simardr/testu01/tu01.html

5. 5 tests: Helen Gustafson, et. al. (Queensland University of Technology), Crypt-XS, commercial version, www.qut.edu.au/institute-for-future-environments

There are other descriptions and implementations of statistical tests, largely repeating tests from the sets presented above:

1. Alfred Menezes et al., Handbook of Applied Cryptography

2. Peter Hellekalek (University of Salzburg), The pLab Project, random.mat.sbg.ac.at

3. John Walker (Autodesk, Inc.), ENT, www.fourmilab.ch/random

4. Robert G. Brown (Duke University), Dieharder, www.phy.duke.edu/~rgb/General/dieharder.php

5. George Marsaglia (Florida State University), Wai Wan Tsang (The University of Hong Kong), “Distilled” version of Diehard, www.jstatsoft.org/v07/i03

Despite a considerable number of existing implementations of statistical tests of the SRP, this direction is constantly evolving, and now new projects are actively emerging that offer new implementations of the tests examined, including in distributed computing systems.

The considered sets of statistical tests PSP constitute a convenient and flexible tool for studying PSP generators used in cryptographic applications.

The NIST STS package has greater flexibility, extensibility and efficiency (in terms of time spent on testing) and is the most comprehensive package available for statistical testing of binary sequences (for more details, see [5], [8], [9]) . Tests of the NIST STS package are discussed in detail in the article “Statistical testing of random numbers using NIST methods” habrahabr.ru/company/securitycode/blog/237695 [3]. Based on the NIST STS package, techniques for more complete statistical and structural analysis of sequences can be built, given that for a reliable assessment of the quality of the memory bandwidth generated by the generator, it is advisable to carry out not one but several tests.

. [8].

. , , . -, -. , -.

1 , .

1.

, .

1. .. . Tutorial. : - , 2005. – 116 .

2. .. . , , 2008. – 182 .

3. : NIST. – habrahabr.ru/company/securitycode/blog/237695

4. .., .., .. . . , .: , 2000. – 272 .

5. .., .., .. // . – www.tusur.ru/filearchive/reports-magazine/2012-25-2/108.pdf

6. .., .., .. NIST STS.

7. .. , .: -, 2010. – 424.

8. Rukhin, A., Soto, J., Nechvatal, J., Smid, M., Barker, E., Leigh, S., Levenson, M., Van- gel, M., Banks, D., Heckert, A., Dray, J., Vo, S. A Statistical Test Suite for Random and Pseudorandom Number Generators for Cryptographic Applications. – www.csrc.nist.gov/publications/nistpubs/800-22/sp-800-22-051501.pdf .

9. Soto Juan, Statistical Testing of Random Number Generators, National Institute of Standards & Technology. – csrc.nist.gov/groups/ST/toolkit/rng/documents/nissc-paper.pdf

Source: https://habr.com/ru/post/273629/

All Articles