Parallelizing the Strassen Algorithm on Intel® Xeon Phi (TM)

Intel Xeon Phi (TM) coprocessors are a PCI Express device and have x86 architecture, providing high peak performance - up to 1.2 teraflops (a trillion floating-point operations per second) of double precision per coprocessor. Xeon Phi (TM) can provide simultaneous operation of up to 244 threads, and this must be considered when programming for maximum efficiency.

Recently, together with Intel, we conducted a small study on the effectiveness of the implementation of the Strassen algorithm for the Intel Xeon Phi (TM) coprocessor. Who are interested in the subtleties of working with this device and just loving parallel programming, please under the cat.

')

The total number of operations of the standard multiplication algorithm for square matrices of size n (additions and multiplications):

The Strassen Algorithm (1969), which belongs to the fast matrix multiplication algorithms, requires:

Thus, the Strassen algorithm has less asymptotic complexity and is potentially faster on large matrix sizes. But the recursive nature of this algorithm presents a certain complexity for efficient parallelization on modern computing systems and the use of data from memory.

In this paper, we consider a combined approach in which the standard matrix multiplication algorithm is called when the threshold value is reached from the Strassen algorithm (we used Intel MKL DGEMM as the standard algorithm). This is due to the fact that with small matrix sizes (tens or hundreds, depending on the processor architecture), Strassen’s algorithm begins to lose to the standard algorithm both in the number of operations and due to a larger number of cash misses. Theoretical estimates for the matrix size threshold for the transition to the standard algorithm (excluding caching and pipelining) give various estimates - 8 for Hayem and 12 for Hass-Lederman; in practice, the threshold value depends on the architecture of the computing system and should be evaluated experimentally. For our system with Intel Xeon Phi (TM) 7120D, the value of 1024 turned out to be the most effective.

The results of computational experiments were compared with the DGEMM function from the Intel MKL library. Considered multiplication of square size matrices where

where  and

and  . M here means the threshold size of the matrices, after which the standard algorithm is called. The multiplication of two matrices is written as

. M here means the threshold size of the matrices, after which the standard algorithm is called. The multiplication of two matrices is written as  where a, b and c are matrix size

where a, b and c are matrix size  . The Strassen method for multiplying matrices is based on the recursive division of each multiplied matrix into 4 submatrices and performing operations on them. Matrix size requirement (

. The Strassen method for multiplying matrices is based on the recursive division of each multiplied matrix into 4 submatrices and performing operations on them. Matrix size requirement (  and

and  ) it is necessary that it was possible to split each matrix into 4 submatrices at the required recursion depth.

) it is necessary that it was possible to split each matrix into 4 submatrices at the required recursion depth.

The Strassen method is described by the following formula:

Where

Below is shown how 4 groups of operations can be distinguished; in each of them, all operations can be performed in parallel.

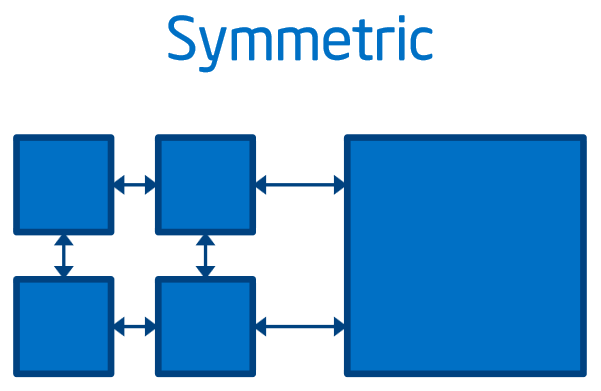

There is nothing difficult in the implementation of parallelization. OpenMP and the task mechanism ( omp task ) were used, the load distribution between tasks is shown in the figure. The first groups of operations were combined into one, so there are fewer synchronization points, since this is a very expensive operation.

To measure the time, a standard approach was used with a “warming up” start and averaging the time of several starts. As a timer, the Intel MKL dsecnd function is used.

After the implementation of parallelization, we conducted a series of tests. Tests were conducted in native mode on Intel Xeon Phi (TM) 7120D, 16 GB.

In order not to complicate the article, all tests will be carried out on 8192 × 8192 matrix sizes (this size is almost marginal in terms of memory consumption for the Strassen parallelized algorithm — about 10GB — and is large enough to experience the effect of a smaller asymptotic complexity of the algorithm).

On a small number of threads, the Strassen algorithm turned out to be faster than the Intel MKL DGEMM. It was also noted that a large number of threads experience a drop in performance (the task almost does not scale over 60 threads). For efficient use of Intel Xeon Phi (TM) resources in a multi-threaded application, it is recommended to use the number of threads, multiple of NP-1 , where NP is the number of processors in the device (61 in our case). You can read more here .

The idea arose that the use of parallelism within the DGEMM called from Strassen could help further scaling. There are several functions for managing the number of threads in MKL: mkl_set_num_threads , mkl_set_num_threads_local , mkl_domain_set_num_threads . When trying to use mkl_set_num_threads, we found that this function does not affect the number of threads in the MKL DGEMM called from the Strassen algorithm (the number of threads in the MKL outside of Straßen was affected by this function). Nested parallelism was enabled ( OMP_NESTED = true ).

As it turned out, MKL actively resists nested parallelism. MKL uses the environment variable MKL_DYNAMIC , which is true by default. This variable allows MKL to reduce the number of threads that the user sets, in particular, when calling MKL functions from the parallel area, the number of threads will be set to 1. To enable parallelism in MKL DGEMM, we used the mkl_set_dynamic (0) function.

The results of the Strassen algorithm for 120 threads using the multi-threaded MKL DGEMM became a little better, but we did not get much benefit.

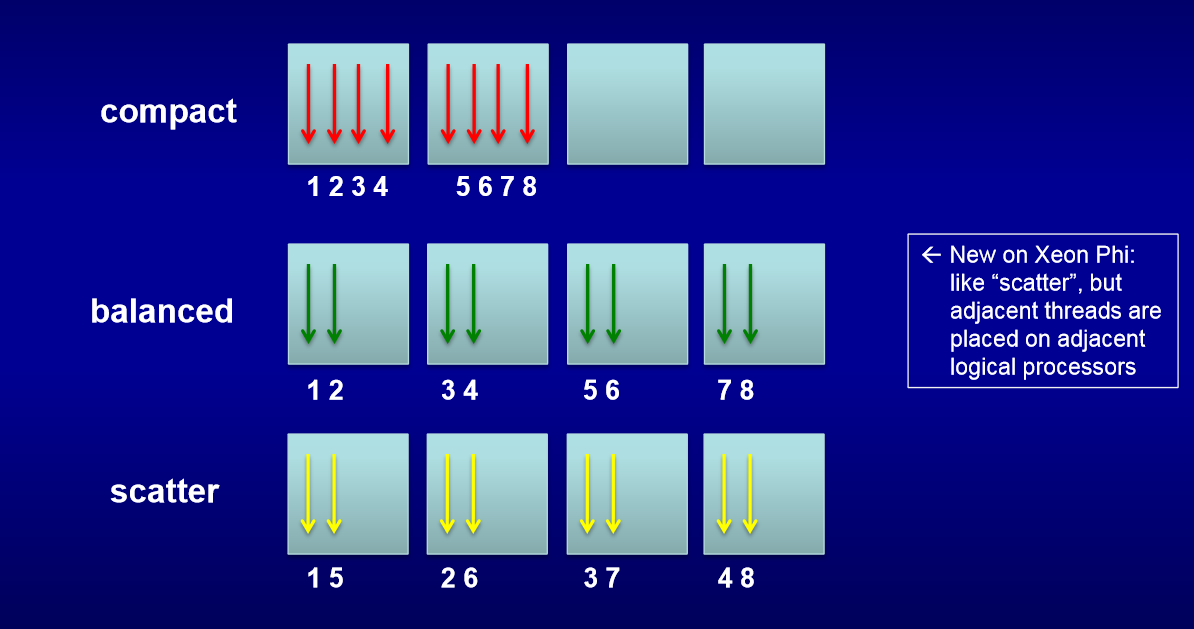

Our next step was to study the binding of OpenMP software threads to physical and logical cores (binding). On Xeon Phi, the environment variables KMP_AFFINITY and KMP_PLACE_THREADS are used to control the binding of threads to the kernels. A good description found here .

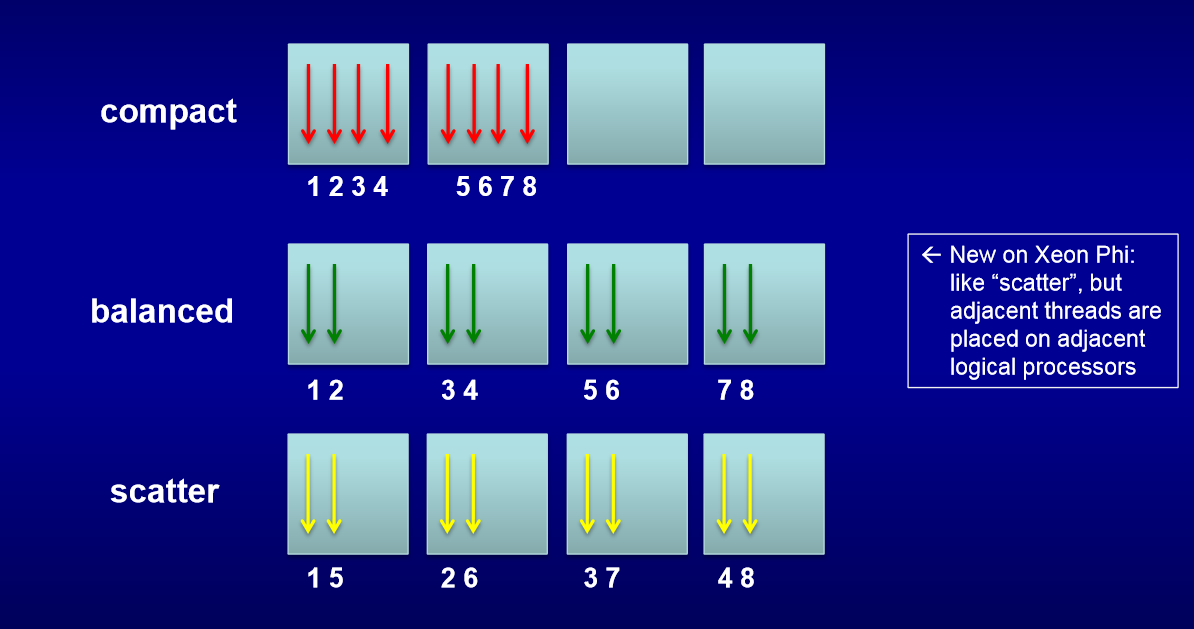

The most important among the KMP_AFFINITY parameters is type , which controls the order in which the threads are assigned to the kernels (see figure below).

The following KMP_AFFINITY values were used:

KMP_AFFINITY = granularity = fine, balanced

KMP_AFFINITY = granularity = fine, compact

By default, the variable KMP_AFFINITY = granularity = core, balanced . When testing, it turned out that the best parameters for matrix multiplication are KMP_AFFINITY = granularity = fine, balanced , and the parameter does not affect the results of the MKL DGEMM as much as the Strassen algorithm, where, compared to KMP_AFFINITY = granularity = fine, compact, there is a two-time reduction work. Also note that changing the environment variable KMP_AFFINITY from its default value ( KMP_AFFINITY = granularity = core, balanced ) reduced the running time of the MKL DGEMM from the minimum 1.90 s to 1.15 s (approximately 1.5 times). The MKL DGEMM results with compact and balanced differ in a predictable way, for example, with 120 threads, the compact option uses 30 cores with 4 threads each , and balanced - 60 through 2, due to a larger number of cores and almost double acceleration is obtained in the balanced version.

We also tried to profile the program on Xeon Phi, how to do this you can read here . To profile only the multiplication algorithm, we used the capabilities of the VTune API .

As a result, we could not catch up with the MKL DGEMM on the maximum number of threads, but learned a little more about programming such a powerful calculator like Intel Xeon Phi (TM).

Recently, together with Intel, we conducted a small study on the effectiveness of the implementation of the Strassen algorithm for the Intel Xeon Phi (TM) coprocessor. Who are interested in the subtleties of working with this device and just loving parallel programming, please under the cat.

')

The total number of operations of the standard multiplication algorithm for square matrices of size n (additions and multiplications):

The Strassen Algorithm (1969), which belongs to the fast matrix multiplication algorithms, requires:

Thus, the Strassen algorithm has less asymptotic complexity and is potentially faster on large matrix sizes. But the recursive nature of this algorithm presents a certain complexity for efficient parallelization on modern computing systems and the use of data from memory.

In this paper, we consider a combined approach in which the standard matrix multiplication algorithm is called when the threshold value is reached from the Strassen algorithm (we used Intel MKL DGEMM as the standard algorithm). This is due to the fact that with small matrix sizes (tens or hundreds, depending on the processor architecture), Strassen’s algorithm begins to lose to the standard algorithm both in the number of operations and due to a larger number of cash misses. Theoretical estimates for the matrix size threshold for the transition to the standard algorithm (excluding caching and pipelining) give various estimates - 8 for Hayem and 12 for Hass-Lederman; in practice, the threshold value depends on the architecture of the computing system and should be evaluated experimentally. For our system with Intel Xeon Phi (TM) 7120D, the value of 1024 turned out to be the most effective.

The results of computational experiments were compared with the DGEMM function from the Intel MKL library. Considered multiplication of square size matrices

where

where  and

and  . M here means the threshold size of the matrices, after which the standard algorithm is called. The multiplication of two matrices is written as

. M here means the threshold size of the matrices, after which the standard algorithm is called. The multiplication of two matrices is written as  where a, b and c are matrix size

where a, b and c are matrix size  . The Strassen method for multiplying matrices is based on the recursive division of each multiplied matrix into 4 submatrices and performing operations on them. Matrix size requirement (

. The Strassen method for multiplying matrices is based on the recursive division of each multiplied matrix into 4 submatrices and performing operations on them. Matrix size requirement (  and

and  ) it is necessary that it was possible to split each matrix into 4 submatrices at the required recursion depth.

) it is necessary that it was possible to split each matrix into 4 submatrices at the required recursion depth.The Strassen method is described by the following formula:

Where

Below is shown how 4 groups of operations can be distinguished; in each of them, all operations can be performed in parallel.

There is nothing difficult in the implementation of parallelization. OpenMP and the task mechanism ( omp task ) were used, the load distribution between tasks is shown in the figure. The first groups of operations were combined into one, so there are fewer synchronization points, since this is a very expensive operation.

To measure the time, a standard approach was used with a “warming up” start and averaging the time of several starts. As a timer, the Intel MKL dsecnd function is used.

function of measuring the operation time of multiplication algorithms

double run_method(MatrixMultiplicationMethod mm_method, int n, double *A, double *B, double *C) { int runs = 5; mm_method(n, A, B, C); double start_time = dsecnd(); for (int i = 0; i < runs; ++i) mm_method(n, A, B, C); double end_time = dsecnd(); double elapsed_time = (end_time - start_time) / runs; return elapsed_time; } After the implementation of parallelization, we conducted a series of tests. Tests were conducted in native mode on Intel Xeon Phi (TM) 7120D, 16 GB.

What are the Xeon Phi (TM) and usage modes?

| Name | TDP (WATTS) | Number of cores | Frequency (GHz) | Peak Performance (GFLOP) | Peak Bandwidth (GB / s) | Memory Capacity (GB) |

| 3120A | 300 | 57 | 1.1 | 1003 | 240 | 6 |

| 3120P | 300 | 57 | 1.1 | 1003 | 240 | 6 |

| 5110P | 225 | 60 | 1.053 | 1011 | 320 | eight |

| 5120D | 245 | 60 | 1.053 | 1011 | 352 | eight |

| 7120P | 300 | 61 | 1.238 | 1208 | 352 | sixteen |

| 7120X | 300 | 61 | 1.238 | 1208 | 352 | sixteen |

| 7120A | 300 | 61 | 1.238 | 1208 | 352 | sixteen |

| 7120D | 270 | 61 | 1.238 | 1208 | 352 | sixteen |

|  |  |

| Suitable for high-parallel and vectorized code, sequential code is slow | Suitable for serial code with large parallel regions, a potential problem is data transfer over PCIe | Suitable for high-parallel code that runs efficiently on both platforms, a potential problem is load imbalance |

In order not to complicate the article, all tests will be carried out on 8192 × 8192 matrix sizes (this size is almost marginal in terms of memory consumption for the Strassen parallelized algorithm — about 10GB — and is large enough to experience the effect of a smaller asymptotic complexity of the algorithm).

| Number of threads | one | four | eight | sixteen | 60 | 120 | 180 | 240 |

| Strassen, with | 114.89 | 29.9 | 15.75 | 8.85 | 3.68 | 3.58 | 4.22 | 8.17 |

| MKL DGEMM, c | 131.79 | 34.38 | 17.27 | 9 | 2.61 | 1.90 | 2.45 | 2.54 |

On a small number of threads, the Strassen algorithm turned out to be faster than the Intel MKL DGEMM. It was also noted that a large number of threads experience a drop in performance (the task almost does not scale over 60 threads). For efficient use of Intel Xeon Phi (TM) resources in a multi-threaded application, it is recommended to use the number of threads, multiple of NP-1 , where NP is the number of processors in the device (61 in our case). You can read more here .

The idea arose that the use of parallelism within the DGEMM called from Strassen could help further scaling. There are several functions for managing the number of threads in MKL: mkl_set_num_threads , mkl_set_num_threads_local , mkl_domain_set_num_threads . When trying to use mkl_set_num_threads, we found that this function does not affect the number of threads in the MKL DGEMM called from the Strassen algorithm (the number of threads in the MKL outside of Straßen was affected by this function). Nested parallelism was enabled ( OMP_NESTED = true ).

As it turned out, MKL actively resists nested parallelism. MKL uses the environment variable MKL_DYNAMIC , which is true by default. This variable allows MKL to reduce the number of threads that the user sets, in particular, when calling MKL functions from the parallel area, the number of threads will be set to 1. To enable parallelism in MKL DGEMM, we used the mkl_set_dynamic (0) function.

the code of what we got

mkl_set_dynamic(0); omp_set_nested(1); set_num_threads(num_threads); mkl_set_num_threads(mkl_num_threads); strassen( … ); … mkl_set_num_threads(num_threads * mkl_num_threads); dgemm( … ); | Total number of threads | Strassen, with | MKL DGEMM, with | ||

| Number of threads in MKL DGEMM | ||||

| one | 2 | four | ||

| 60 | 3.68 | 3.89 | 5.19 | 2.61 |

| 120 | 3.58 | 3.50 | 3.82 | 1.90 |

| 240 | 8.17 | 4.23 | 3.59 | 2.54 |

The results of the Strassen algorithm for 120 threads using the multi-threaded MKL DGEMM became a little better, but we did not get much benefit.

Our next step was to study the binding of OpenMP software threads to physical and logical cores (binding). On Xeon Phi, the environment variables KMP_AFFINITY and KMP_PLACE_THREADS are used to control the binding of threads to the kernels. A good description found here .

The most important among the KMP_AFFINITY parameters is type , which controls the order in which the threads are assigned to the kernels (see figure below).

The following KMP_AFFINITY values were used:

KMP_AFFINITY = granularity = fine, balanced

| Total number of threads | Strassen, with | MKL DGEMM, with | ||

| Number of threads in MKL DGEMM | ||||

| one | 2 | four | ||

| 60 | 3.67 | 4.07 | 5.53 | 2.64 |

| 120 | 3.54 | 3.51 | 3.95 | 1.51 |

| 240 | 7.11 | 4.33 | 3.41 | 1.15 |

KMP_AFFINITY = granularity = fine, compact

| Total number of threads | Strassen, with | MKL DGEMM, with | ||

| Number of threads in MKL DGEMM | ||||

| one | 2 | four | ||

| 60 | 9.29 | 9.96 | 10.27 | 4.58 |

| 120 | 6.23 | 6.79 | 6.04 | 2.31 |

| 240 | 9.32 | 5.21 | 4.21 | 1.15 |

By default, the variable KMP_AFFINITY = granularity = core, balanced . When testing, it turned out that the best parameters for matrix multiplication are KMP_AFFINITY = granularity = fine, balanced , and the parameter does not affect the results of the MKL DGEMM as much as the Strassen algorithm, where, compared to KMP_AFFINITY = granularity = fine, compact, there is a two-time reduction work. Also note that changing the environment variable KMP_AFFINITY from its default value ( KMP_AFFINITY = granularity = core, balanced ) reduced the running time of the MKL DGEMM from the minimum 1.90 s to 1.15 s (approximately 1.5 times). The MKL DGEMM results with compact and balanced differ in a predictable way, for example, with 120 threads, the compact option uses 30 cores with 4 threads each , and balanced - 60 through 2, due to a larger number of cores and almost double acceleration is obtained in the balanced version.

We also tried to profile the program on Xeon Phi, how to do this you can read here . To profile only the multiplication algorithm, we used the capabilities of the VTune API .

As a result, we could not catch up with the MKL DGEMM on the maximum number of threads, but learned a little more about programming such a powerful calculator like Intel Xeon Phi (TM).

Source: https://habr.com/ru/post/273585/

All Articles