Risk management of a Castaneda-style warrior

Want to know what risks are and how to handle them with ninja dexterity?

Then read on!

(This article presents thoughts inspired by the work of Tom DeMarco, and it will most likely be uninteresting to those who are already familiar with his books.)


In 1988, the city of Denver decided to build a new airport to replace the old one. The existing airport no longer met the needs of the growing city and could not be expanded. A budget was allocated and construction began. Unfortunately, the project was not completed on time. The cause of the delay was software that was not finished on time. The airport was equipped with low, narrow tunnels for transporting baggage. The software was supposed to scan each bag's barcode and route it through the network of tunnels. Because baggage could not be delivered without the software, the airport stood idle until the software was ready. Building an airport requires huge capital investment, and all that capital was frozen while the programmers scrambled to catch up. Time is money: lost profit plus direct losses came to half a billion dollars. The media destroyed the reputation of the software supplier, putting all the blame on it.

Now imagine that the managers of this project had spent half a day of their precious time on a brainstorming session and a risk assessment. Imagine that they had sat down, worked out what losses they would incur if the runway or the software were not ready on time, and thought about how to reduce those losses if things went wrong. By golly, a network of tiny tunnels is not the only way to move baggage! If a couple of million extra dollars had been spent on slightly more spacious tunnels, luggage could have been moved by cheap labor or small trucks. Two million is far less than half a billion. In fact, they could have skipped widening the tunnels altogether and used sled dogs! Hire a dog handler, make a deal with the nearest shelter for stray dogs, and start training. If the software were ready on time, the price of hot dogs at the airport shawarma stand would stay the same; if not, the sled dogs could save the day. Either way, it is at least some kind of safety net. There is one catch, though: you have to think about such things in advance. If the contractor comes running on delivery day shouting "Boss, all is lost!", it is already too late.

When you add to the project budget the potential loss from a risk multiplied by the probability of that risk, this is called risk containment. A risk either materializes or it does not. If it does not, the money stays with you. If it does, the reserve you set aside for it may not be enough. But if you assess all the risks, and some of them materialize while others do not, you will probably stay within the budget.
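The "you will probably stay within the budget" claim can be checked on a toy example. The sketch below is only an illustration: the four risks and their numbers are hypothetical (they are not from the article), and the risks are assumed to be independent. It computes the containment reserve as the sum of probability times loss, then enumerates every combination of "materialized / did not materialize" to find the chance that the actual losses fit into that reserve.

```python
from itertools import product

# Hypothetical risks, not from the article: (probability, loss if it materializes).
RISKS = [(0.5, 100.0), (0.2, 300.0), (0.1, 500.0), (0.3, 200.0)]


def containment_reserve(risks):
    """Containment reserve: sum of probability * loss over all risks."""
    return sum(p * loss for p, loss in risks)


def chance_reserve_is_enough(risks, reserve):
    """Probability that the losses that actually occur fit into the reserve.

    Enumerates every combination of outcomes, assuming independent risks.
    """
    total = 0.0
    for outcome in product([False, True], repeat=len(risks)):
        prob = 1.0
        loss = 0.0
        for occurred, (p, risk_loss) in zip(outcome, risks):
            prob *= p if occurred else 1.0 - p
            if occurred:
                loss += risk_loss
        if loss <= reserve:
            total += prob
    return total


reserve = containment_reserve(RISKS)
print(f"reserve: {reserve:.0f}")
print(f"chance the reserve is enough: {chance_reserve_is_enough(RISKS, reserve):.3f}")
```

With these made-up numbers the reserve covers the losses in somewhat more than half of the scenarios, and it is never enough once one of the large risks materializes. That is the weakness of containment discussed later in the article.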

When you spend money in advance to minimize the loss a risk would cause, this is called risk mitigation. Hiring a dog handler to train the dogs is an example. Whether or not the risk materializes, the handler will eat and enjoy himself at your expense.

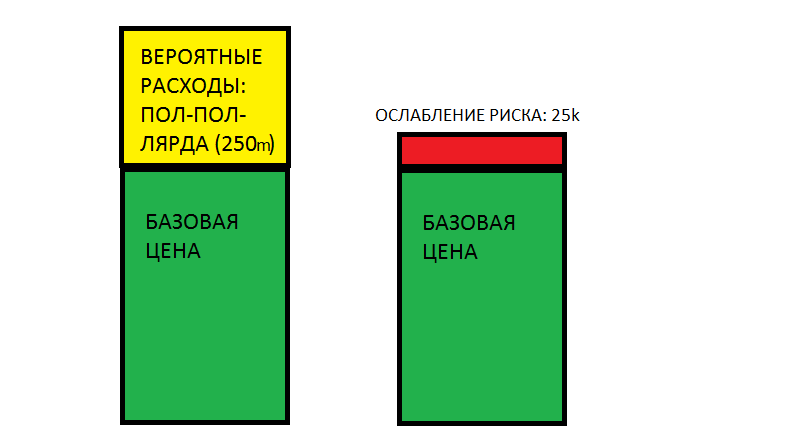

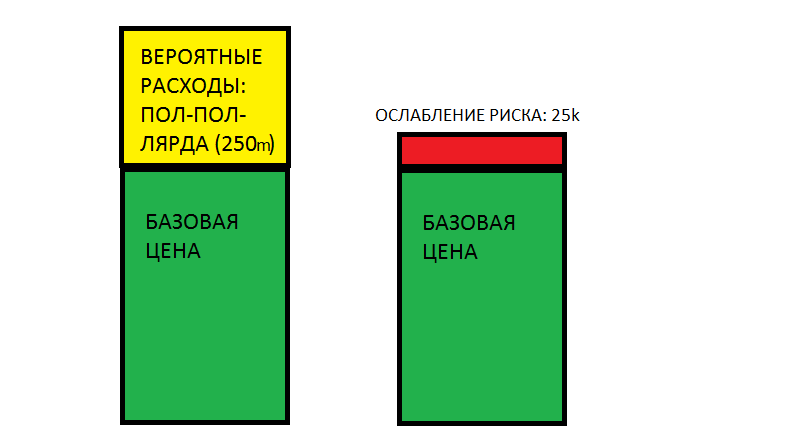

Let's compare the cost of mitigating and of containing the risk for the airport construction.

It is known that another team of programmers took twice as long to write such a program. In addition, the programmers constantly complain that they cannot keep up with the schedule, so we assume that the probability of meeting the deadline is 50%. In fact, if you are familiar with risk diagrams, you will agree that the probability of the most optimistic scenario is on the order of a nano-percent, but we, like the construction managers, will raise our expectations a little and assume a 50% chance of success. As in the old joke: either it happens or it doesn't, so 50%. In IT, time and probability estimates are usually given by intuition and plucked out of thin air anyway. So, the probability of the risk is 1/2 and its price is half a billion, which means we set aside half of half a billion, that is, 250 million, to contain the risk. Mitigating the risk takes a few months of salary for the dog handlers, a ton of dog food, plus the salary of a cleaner to tidy up after the dogs living in the unfinished airport. Or the price of more spacious tunnels, if you prefer. It would look something like this:
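The same arithmetic as a short sketch. The loss and the probability are the figures used in this article; for the mitigation cost the sketch assumes the "couple of million" for wider tunnels mentioned earlier, since no figure is given for the dogs.

```python
# Figures from the article's Denver example.
LOSS_IF_LATE = 500_000_000   # dollars lost if the software is late
PROBABILITY_LATE = 0.5       # the "either it happens or it doesn't" estimate

# Assumption: mitigation is priced as the wider tunnels (about two million).
MITIGATION_COST = 2_000_000

# Containment reserve = probability of the risk * loss from the risk.
containment_budget = PROBABILITY_LATE * LOSS_IF_LATE
ratio = containment_budget / MITIGATION_COST

print(f"containment reserve: ${containment_budget:,.0f}")
print(f"mitigation cost:     ${MITIGATION_COST:,.0f}")
print(f"containment costs {ratio:.0f} times more than mitigation")
```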

As you can see, mitigating the risk looks far more profitable in this particular case. Of course, if the risk does not materialize, you will have spent resources you could have kept. On the other hand, if it does materialize and you are not insured, the consequences will be catastrophic. Skipping mitigation here is like saving money on a safety cable before walking a tightrope. Mitigation is much cheaper than containment here, and its benefits are obvious. But let's consider the opposite situation: what if containing the risk is much cheaper than mitigating it? Does that mean the risk should be contained? Not always. This is where the philosophy of the warrior will help us.

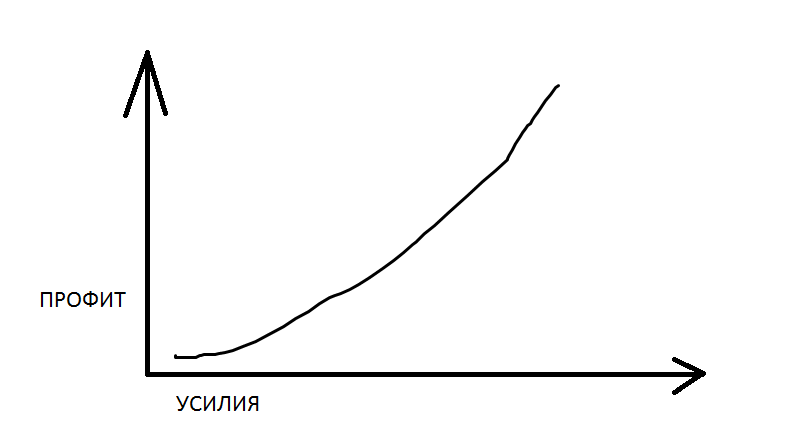

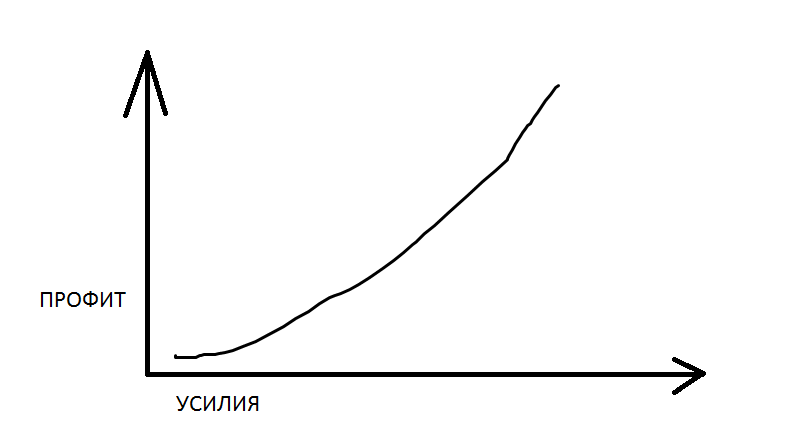

Meet the Carefree Farmer. He knows that the harder he waters and weeds his field, the richer his harvest will be. The Carefree Farmer's picture of the world looks like this:

The more effort I put in, the more I get. The dependency is exponential: over time the same effort brings a greater reward (growing qualifications and social standing, growing passive income, and so on) until some fixed ceiling is reached. Everything is predictable and simple.

And this is the Fearless Warrior. A warrior can take a thousand minor wounds and still stand firmly on his feet, until he takes a fatal blow. When two sides clash, each tries to maximize the enemy's fatal risks. Wearing the enemy down is far less effective than a calculated blow to the heart. For a warrior, the "more effort, more reward" formula does not work. He can lose everything because of a single mistake. Both philosophies, the farmer's and the warrior's, hold true for risk, but the warrior's is closer. A risk either materializes or it does not. It either leads to the failure of the project or it does not. With your shield or on it. On the other hand, we do not live in ancient Japan, and it is not our custom to chop off guilty heads left and right whenever something goes wrong. Not every failed project means the collapse of the organization and the end of a management career. You can try to extract at least some benefit from any seemingly hopeless situation: complete the project with reduced functionality, change the target audience, reuse what you built in the next project, or draw conclusions about the causes of the failure and try to prevent it from happening again. The failure will still be a failure, but at least some of your effort will pay off.

White's next move is knight to e5. If Black takes the queen, mate follows:

7... Bxd1 The black bishop captures the white queen.

8. Bxf7+ Ke7 The white bishop, protected by the knight, gives check. Black's only legal move is king to e7.

9. Nd5# The second knight goes to d5. Checkmate.

You get the chance to capture the opponent's queen at the cost of exposing yourself to check, but if the opponent sees the risk you have taken on, you lose the game. This is a fatal (or critical) risk for your game.

Consider a team strategy game, DotA, where each team consists of 5 people. The more local skirmishes a player wins, the stronger his character becomes and the better his team's chances of winning the game. However, even if one team wins fight after fight and the other seems to have no hope left, a single mistake by one player on the dominant team can mean defeat for the whole team. If in chess the goal is to take down the king, in DotA the goal is to take down the opposing team's source of power (the world tree of the "whites" or the magic ice throne of the "blacks"). Sometimes a player takes a risk by attacking a weaker player from the opposing team, or two players attack one together. The risk is that it may actually be a trap, with the target covered by teammates waiting in ambush. If two players are caught in a well-organized ambush, the nearer one will most likely be "killed" and out of the game until "resurrection", while the second can either try to save him (at the risk of also being out of the game for a while) or run while the opposing team is busy finishing off the first. If the second player takes the risk and also "dies", his team is left outnumbered. After that, the enemy team can launch a full-scale offensive and, using its numerical advantage, break through the defense and destroy the "source of power".

This is like the final battle of an action movie, when one character takes a beating throughout the fight and looks like a weakling next to the favorite, yet in the end, by some miracle, still knocks the enemy out. Yes, such is the philosophy of the warrior. It is not enough to throw farther, jump higher, hit harder and have more experience. It takes wisdom and courage to anticipate possible risks.

Let's see what the losing team did wrong. Maybe they should not have attacked and risked an ambush at all? In fact, the attack was worth it. Risk and benefit go hand in hand. Risks that pay off bring victory closer. He who takes no risks drinks no champagne. The point is that risks must be taken wisely. In this case, once the second player realized that things were going south, he should have saved his team rather than his partner. On the other hand, if you are forced to abandon a friend in need, it is a clear sign that you are doing something wrong. Here the risk should have been mitigated by scouting first. Yes, that would have cost additional resources and time, but it would have reduced the risk significantly. Moreover, the team was dominant and could afford to spend some resources on scouting. Apparently the scent of a quick victory went to their heads, and they completely forgot about the possible risks. Disrespecting the enemy is a fatal mistake. Remember, any relationship between people is built on mutual respect.

Now let us return to the question: if containing a risk is much cheaper than mitigating it, is it worth containing? Let me remind you that risk containment = probability of the risk * loss if the risk materializes. If the risk does materialize, you will have to pay the full cost, which can be many times greater than the amount set aside for containment. If you cannot pay that amount and this would mean the failure of the project, then, in my opinion, it is better to mitigate the risk. If mitigation is not possible, think carefully about whether the project is worth taking on at all. In my opinion, it is wiser to earn half of the possible profit than to risk losing everything. Of course, you cannot control every risk. Risk and profit go hand in hand, so sometimes it makes sense simply to take a chance. You cannot guarantee that a meteorite will not fall on your house tomorrow, but that is no reason not to buy a home. Maybe it will fall, maybe it won't. It's worth it anyway! On the other hand, that is no reason not to hedge against the case where it does fall. A small sum in the bank, enough to rent a place for several months, is quite capable of containing the risk of freezing outside in the cold.
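The reasoning above can be written down as a small decision rule. This is only one reading of the paragraph, not a universal algorithm: the function name, its parameters and the returned labels are made up for this sketch, and the "pick the cheaper option" branch for non-fatal risks is an assumption based on the earlier airport comparison.

```python
def choose_strategy(probability, loss, mitigation_cost, affordable_loss):
    """Pick a way to handle one risk (hypothetical helper for illustration).

    probability      -- estimated chance that the risk materializes
    loss             -- full loss if it does
    mitigation_cost  -- up-front cost of mitigation, or None if impossible
    affordable_loss  -- largest loss the project can survive
    """
    reserve = probability * loss  # containment reserve

    if loss > affordable_loss:
        # Fatal risk: the reserve will not save the project if it materializes.
        if mitigation_cost is not None:
            return "mitigate"
        return "reconsider the project"

    # Survivable risk: assumption, simply take the cheaper option.
    if mitigation_cost is not None and mitigation_cost < reserve:
        return "mitigate"
    return "contain"


# Denver-like case: half a billion at stake, two million for wider tunnels,
# and (assumed) the project cannot absorb more than 100 million.
print(choose_strategy(0.5, 500_000_000, 2_000_000, 100_000_000))
```

The meteorite case from the text corresponds to a survivable loss with no sensible mitigation: the rule returns "contain", which is the small sum in the bank.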

With innovative technologies, risk management becomes quite a subtle game. On the one hand, working with a new technology carries many risks; on the other hand, not taking the risk of using it means risking that competitors will overtake you. In this case, not taking risks is a much greater risk than taking them (sounds rather complicated, doesn't it?). As I said, in my opinion it is wiser to earn half of the possible profit than to risk losing everything. Of course, the specific situation has to be assessed. If you had no trump cards up your sleeve to begin with, lower profits may all but guarantee that competitors push you out of the market. There is no universal algorithm for this case. As Field Marshal Helmuth von Moltke said, no plan survives contact with the enemy. Understanding the current situation, combined with forecasting, is useful at every stage of the project.

In the Denver airport case, all the blame was placed on specific contractors, although the responsibility and the losses were actually borne by the city. How could the managers have realized that they were doing something wrong? I have noticed the following pattern: if a small failure leads to ever more critical consequences, then a mistake has been made somewhere in risk management. For example, when an entire airport stands idle because the software is not ready. Or when the signal lights go dark because of a power outage (backup generators exist for such cases). Or when half of the globe flies off into space in fragments because someone pressed the wrong button. You simply should not put the nuclear briefcase button next to the coffee button on the coffee machine in the lobby. To the manager it looks like this: some employee made a mistake, pressed the wrong button, and now there is no Earth, so all the blame lies with the employee. To the employee it seems that the mistake was a tiny one: still half asleep, he just hit the wrong button. If the error feels insignificant, and that feeling sounds plausible, it is a signal that risk management was done very badly. This, of course, does not excuse the employee who destroyed humanity. But you do not have to work in offices where corporate policy requires a nuclear briefcase button on every coffee machine. Or at least you do not have to come closer than 3 meters to those machines. You can also try to get the corporate policy changed or to fence those machines off. Unfortunately, in most companies "initiative is punishable", so you will have to install the fences on your own time, at your own expense, and also answer for all the risks associated with those fences, even if you are not a manager and have neither the authority nor the ability to manage those risks.

Another case is possible: if a risk lies on the surface and regularly causes a lot of problems, but everyone ignores it, the employee will come to feel that mistakes are inevitable, and so are the problems they bring. Because people get used to the problems, there will be no sense of small errors having outsized consequences, only a sense that what is happening is hell.

Once upon a time there were two teams in different cities working for one startup. The first team consisted of the product owner (the deputy director) and his assistant. Between them they combined the roles of managers, analysts and testers. The second team consisted of several programmers, a tester and a team leader. They worked on automated collection and processing of information from the Internet and on publishing it on their website. Because the sources had different formats and could change at the whim of their developers, it was not always possible to collect the information correctly. For this reason the tester compared the information in the sources with the information on the project site day and night. On finding an error, the tester created a bug card on the board, the programmers fixed it, and in the next version of the program that information was collected from the source correctly. In the other city, another tester, the owner's assistant, also checked quality. He would find an old, already fixed bug, but instead of creating a card he would report it to the product owner. The programmers would then cheerfully reply that the bug had already been fixed: they had just run the information for that particular case through the latest algorithm, and everything went through without errors. The owner did not trust the programmers and believed they were covering for themselves and for the tester who had failed to find the bug. Unfortunately, the specifics of the project made it impossible to reprocess all the information from every source for the entire period with each new release: first, the volumes were large, and second, the information lost its relevance and value over time.
In the end, to convey the seriousness of the situation to the remote team, the owner began threatening to fire the tester and punish the whole team, the team leader did not hesitate to badmouth the owner's assistant behind his back, and the working atmosphere was not very workable.

Could this situation have been predicted in advance? It would have been difficult, but possible. It was enough to put oneself in the place of each participant in turn, take their duties into account, and imagine what they would do and what difficulties they would face. The owner in the other city has to make sure that the remote team does not slack off or sit idle, and also set the overall strategic direction of the project. The assistant has to help him with this. The team has to work. Naturally, when the process is not fully transparent, suspicions creep in. And full transparency was impossible, because neither the owner nor his assistant had ever done any programming, so they could not objectively assess the productivity of the remote team. Accordingly, the only way to prevent the conflict was to make sure the "overseers" had nothing to find fault with. This risk should have been foreseen and acted upon. But even after the risk materialized, the team leader and the owner did not start looking for ways to solve the problem. They started looking for someone to blame. The problem was partially solved by a programmer, who suggested that the test site delete and reload the previous month's data every week, so that the owner's assistant would be looking for errors in data produced by the latest algorithm. The main problem here is the lack of trust and respect between the teams in the different cities, and that problem was never resolved. But at least the owner now had fewer reasons for discontent.

Moral: solve problems instead of shifting responsibility. (Responsibility for the project as a whole lies with the project manager in any case; you can read more about this in The Manager's Black Book.) Solving a problem means analyzing the situation, finding the root cause, assessing whether it is worth mitigating the risk so that the problem does not recur, assigning the mitigation measures to subordinates, and monitoring their implementation. Before all that, of course, you need to give instructions on how to deal with the consequences of the risk that has already materialized.

There was an accounting program; let's call it program A. It was decided to write a new program B that would pass data to program A through the database. More detailed data on the production process was entered into B than into the old program A; B then calculated the necessary values and passed them to A. Users were supposed to enter data into B and then carry on using the old program A. B was not only a way to feed data into A; it also gave users some new features. The developer of program B knew neither the business logic of program A nor Delphi, the language it was written in, so he asked the author of A which data to write to which table.

Unfortunately, this organization had no testing department, and the analyst who knew the business logic doubled as the boss and preferred to shift testing, and part of the analysis, onto subordinates, the programmers. If a programmer misunderstood the analyst's rather abstract specification, the incorrectly implemented program went to the users without being checked by the analyst.

To stop users from continuing to enter data in the old program, it was decided to take that option away by force, abruptly moving them to the new, insufficiently tested program. The boss told the author of the old program to change it so that data entry was disabled, and he promptly did so. I don't know, maybe the author of the old program naively believed that this time the testing had been done. Perhaps the habituation to inevitable problems described above played a role. Be that as it may, he said nothing about the risks. Or maybe he subconsciously understood that, as the only person who knew the business logic of the old program (apart from the boss-analyst, of course), he would have to do the testing himself and would then fail his current task. One way or another, the untested program was forced on the users. As expected, it turned out that some special cases had not been handled. Because of this, the users' work stalled for several hours on several occasions while the program was hastily patched. The boss held the developer of the new program responsible.

Let's analyze the management mistakes made in this case. While the team leader in the previous story could at least in theory have foreseen the risks and mitigated them, this boss, who had never worked in programming, had no such opportunity. Since she had to manage IT processes she did not understand, she tried to shift more responsibility onto those who did understand them: the people doing the work. But she did it implicitly. That is, after a couple of miscalculated risks the programmers were supposed to grasp the boss's quite clear and unconcealed position: you are a programmer, you do all sorts of magic I don't understand, so you are responsible for everything that happens on the project. Yet nobody was directly given the task of studying risk management and putting it into practice. It happens that a manager is incompetent in some area, but that does not matter, because there are subordinates to whom tasks can be assigned. You can't draw: assign it to a designer. You don't know how to build websites: assign it to a programmer. You don't know how to manage risk: assign it to a senior programmer. You don't know how to check whether risk management is effective: outsource it. The main thing is a clear understanding of who is responsible for what. It is considered good form to agree on job duties at the time of hiring. In this case there was virtually no risk management at all. And do not forget that most managers have a manager above them. If the project manager is responsible for the project as a whole, the superior is responsible for all the projects of the unit. Accordingly, the fact that an analyst who cannot lead is managing the project and, being their own boss, also does the analyst's job poorly is the superior's mistake. The Peter principle in action. Alas, good managers are even rarer than good programmers.

In the organization without a testing department from the previous story, there was a mechanism for rolling out emergency patches when users found bugs while testing in production. The user finds a bug and sends a request to first-line support. If first-line support cannot solve the problem on its own, it forwards the request to the project manager. [...]

[...] TeamCity. Changing the patch rollout process solves the problem and reduces the risks; searching for the guilty does not.

Thanks for your attention! :)

Then welcome under the cat!

(This article presents the thoughts inspired by the work of Tom DeMarco, and most likely the article will be uninteresting for those who are already familiar with his works)

')

In 1988, the city of Denver decided to build a new airport instead of the old one. The existing airport did not meet the needs of the growing city, and was unsuitable for expansion. A budget was allocated, construction began. But, unfortunately, the project could not be completed on time. The reason for the delay was not written in time software. The airport was equipped with low narrow tunnels designed to transport baggage. The software had to scan the baggage barcode and direct it in the right direction through the network of tunnels. Since, due to software incompleteness, baggage delivery was impossible, the airport was idle until the software was ready. Since the construction of the airport is associated with huge capital investments, all this capital was frozen while the programmers were in a hurry trying to play catch-up. And time is money. Loss of profits + losses amounted to half a billion dollars ... The media destroyed the reputation of the software supplier, putting all the blame on it.

Now let's imagine that the managers of this project spent half a day of their precious time and engaged in risk assessment after brainstorming. Imagine that they have gathered, and analyzed, and what losses they will incur if the runway or software is not ready on time, and also thought how to reduce the losses in the event of force majeure. By golly, a network of tiny tunnels is not the only way to transport baggage! If a couple extra million dollars were spent on building slightly more spacious tunnels, then luggage could be transported using cheap labor or small trucks. Two million - much less than half a billion. In the end, you could not even invest in the expansion of the tunnels, it would be possible to use sled dogs! Hire a dog handler, agree with the owner of the nearest kennel for stray dogs, and start training. If the software were ready on time, then the price of hot dogs would remain unchanged at the airport shawry-ball airport, but in case of difficulties, riding dogs could save the situation. In any case, this is at least some kind of safety net. But there is one thing. About such things you need to think in advance. If the contractor resorts on the appointed day of delivery of the project, shouting, “Chef, everything is gone!”

Terminology

When you put in the project budget potential losses from risk multiplied by the probability of risk, this is called risk containment . The risk is either there or not. If it does not come, then your money will remain with you. If it does, then perhaps the extra money that you put into the budget will not be enough. But if you appreciate all the risks, and some of them come, and some do not, then you will probably be in the budget.

When you spend money in advance to minimize the risk of loss, this is called risk mitigation . For example, when you hire a dog handler for dog training. It doesn’t matter whether the risk comes or not, the handler will eat and have fun at your expense.

Let's take a look at the ratio of easing and containing risks to airport construction.

It is known that another programmers team has done this program twice as long. In addition, programmers constantly complain that they fail to catch up with the schedule, so we assume that the probability of keeping up with the deadline is 50%. In fact, if you are familiar with risk diagrams, you will agree that the probability of the most optimal scenario occurring is equal to the nan interest, but we, like construction managers, will slightly raise expectations and assume that the success probability is 50%. As in one bearded joke: either it will, or not, therefore 50%. In IT in general, in most cases, temporal and probabilistic estimates are given intuitively and “from the ceiling.” So, the probability of risk is 1 \ 2, the price is half a billion, we’ll be mortgaging half of half a billion to contain the risk. To reduce the risk will require several monthly salaries dog handlers, a ton of dog food, + salary cleaner, tidying up for dogs living in an unfinished airport. Well, or the price of more spacious tunnels - to your taste. It would look something like this:

As you can see, reducing the risk in this particular case looks much more profitable. Of course, if the risk does not come, then you will spend resources that could not spend. But, on the other hand, if it comes, and you are not insured, the consequences will be catastrophic. This is how to spare money on a safety cable when you are going to walk a tightrope. Reducing risk is much cheaper than containing it, and its benefits are obvious. But let's consider the opposite situation - what if risk containment is much cheaper than loosening it? Does this mean that in this case it is necessary to contain the risk? In fact, not always. Here the philosophy of the warrior will help us.

Meet this Carefree Farmer. He knows that the harder he will water and weed his field, the richer he will harvest. The picture of the world of carefree farmer looks like this:

The more I tried, the more I got. Dependency is exponential: over time, for the same efforts you get more reward (increasing qualifications and position in society, increasing passive income, etc.), until some fixed ceiling is reached. Everything is predictable and simple.

And this is the Fearless Warrior. A warrior may receive a thousand minor wounds, but continue to stand firmly on his feet; until you get a fatal blow. During the confrontation of the two sides, each side is trying to maximize the fatal risks of the enemy. Tiring the enemy to death is much less effective than delivering a calculated blow to the heart. For a warrior, the “more effort, more reward” formula doesn't work. He can lose everything because of a single mistake. For the concept of risk, both philosophies are true - both the landowner and the warrior - but the concept of the warrior is nevertheless closer. The risk is either there or not. This will either lead to the failure of the project, or not. With a shield or shield. On the other hand, we do not live in ancient Japan, and it is not customary in our country for us to chop off guilty heads to the left and right in any incomprehensible situation. Not every failed project means the breakup of an organization and the end of a management career. From any seemingly hopeless situation, you can try to extract at least some benefit. You can complete the project with reduced functionality, change the target audience, use the created developments in the next project, draw conclusions about the reasons for the failure of the project and try to prevent this from happening in the future. From this failure will not cease to be a failure, but at least some of your efforts will pay off.

Examples of risks in the confrontation of two forces

White goes knight on e5 with the next move. If black beats the queen, then mate will follow:

7 ... C: d1 The black elephant chops the white queen

8. C: f7 + Kp: e7 White elephant, under the cover of a horse, puts the check to black. Black has the only possible move by the king on e7

9. K: d5 # Second horse on d5. Mat

There is an opportunity to cut down the opponent's queen and allow yourself to set a check, but if the opponent is able to realize the risks that have appeared, then you will lose the game. This is a fatal (or critical) risk to your party.

Consider an example from a team strategy game, DotA, where each team consists of 5 people. The more local skirmishes a player wins, the stronger his character becomes and the better his team's chances of winning. However, even if one team wins time after time and the other seems to have no hope left, a single error by one player from the dominant team can mean a loss for the whole team. If in chess the goal is to take down the king, then in DotA the goal is to take down the source of the opposing team's power (the world tree of the “white” side or the magic ice throne of the “black” side). It happens that a player takes a risk by attacking a weaker player from the opposing team, or that two players attack one together. The risk is that it may actually be a trap, and the target is backed up by teammates waiting in ambush. If two players are caught in a well-organized ambush, the nearer one will most likely be “killed” and temporarily out of the game until the “resurrection”, and the second player may either try to save him (at the risk of also being out of the game for some time) or run as fast as he can while the opposing team deals with the first player. If the second player takes the risk and also “dies”, his team will be outnumbered. After that, the enemy team can launch a full-scale offensive and, taking advantage of its numerical superiority, break the defense and destroy the enemy's “source of power”.

This is similar to the final battle of an action movie, when one character takes a beating throughout the fight and generally looks like a weakling next to the favorite, but at the end, by some miracle, still knocks the enemy out. Yes, such is the philosophy of the warrior. It is not enough to throw farther, jump higher, hit harder and have more experience. It takes wisdom and courage to anticipate possible risks.

Let's see what the losing team did wrong. Maybe they should not have attacked and risked being ambushed? In fact, attacking was worth it. Risk and benefit go hand in hand. Risks that pay off bring victory closer. He who does not take risks does not drink champagne. The point is that risks must be taken wisely. In this case, once the second player realized that things smelled of trouble, he should have saved his team rather than his partner. On the other hand, if you are forced to abandon a friend in need, this is a clear sign that you are doing something wrong. Here the risk should have been reduced by scouting first. Yes, that would have required additional resources and time, but it significantly reduces the risk. Moreover, the team was dominant and could afford to spend some resources on reconnaissance. Apparently the sense of a quick victory went to their heads, and they completely forgot about the possible risks. Disrespecting the enemy is a fatal mistake. Remember, any relationship between people is built on mutual respect.

And now let us return to the question: if containing a risk is much cheaper than mitigating it, is it enough just to contain the risk? Let me remind you that the cost of risk containment = probability of the risk * loss if the risk occurs. If the risk materializes, you will have to pay the full cost, which can be many times greater than the amount set aside for containment. If you cannot afford to pay this amount, and that would mean the failure of the project, then, in my opinion, it is better to mitigate the risk. If mitigation is not possible, then it is worth thinking carefully about whether to take on the project at all. In my opinion, it is wiser to get half of the possible profit than to risk losing everything. Of course, you cannot control all risks. Risk and profit go hand in hand, so sometimes it makes sense to simply take a chance. You cannot guarantee that a meteorite will not fall on your house tomorrow, but this is no reason not to buy your own home. Maybe it will fall, and maybe not. But it's worth it! On the other hand, this is also no reason not to hedge against the case that it does fall. A small amount of money in the bank, enough to rent a place for several months, is quite sufficient to contain the risk of freezing outside in the cold.
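The reasoning in the paragraph above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `containment_reserve` and `choose_strategy`, the `affordable_loss` threshold and all the numbers are hypothetical and do not come from DeMarco or from any real project.

```python
def containment_reserve(probability: float, loss: float) -> float:
    """Amount to set aside: probability of the risk times the loss if it occurs."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return probability * loss


def choose_strategy(loss: float, affordable_loss: float, can_mitigate: bool) -> str:
    """Pick a response following the reasoning in the text (illustrative names)."""
    if loss <= affordable_loss:
        # The full loss is survivable, so holding a reserve is enough.
        return "contain"
    if can_mitigate:
        # The full loss would sink the project: spend on reducing the risk.
        return "mitigate"
    # Fatal loss and no way to reduce it: question the project itself.
    return "reconsider the project"


# Hypothetical numbers: a 25% chance of losing 500 million,
# while the organization could survive a loss of at most 100 million.
reserve = containment_reserve(0.25, 500_000_000)
strategy = choose_strategy(
    loss=500_000_000, affordable_loss=100_000_000, can_mitigate=True
)
print(reserve, strategy)
```

With these made-up inputs the reserve is a quarter of the full loss, yet the full loss is more than the organization could survive, so the sketch suggests mitigation rather than containment alone.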

With innovative technologies, risk management becomes quite a subtle game. On the one hand, working with a new technology brings many risks; on the other hand, refusing to use it means risking that competitors will overtake you. In this case, not taking risks is a much greater risk than taking them (it sounds rather complicated, right?). As I said, in my opinion it is wiser to get half of the possible profit than to risk losing everything. Of course, the specific situation has to be assessed. If you had no trump cards up your sleeve to begin with, reduced profits may be enough for competitors to push you out of the market. It is impossible to create a universal algorithm for this case. As Field Marshal Helmuth von Moltke said, no plan survives contact with the enemy. Understanding the current situation, combined with forecasting, is useful at any stage of the project.

Learning from your own mistakes in the risk management process

In the Denver airport situation, all the blame was placed on specific contractors, although in fact the responsibility and the losses were borne by the city. How could the managers have understood that they did something wrong? I have noticed the following pattern: if a small failure leads to disproportionately critical consequences, then a mistake has been made somewhere in risk management. For example, if an entire airport stands idle because the software is not ready. Or if the signal lights do not work because of a power outage (for such cases you can stock up on backup generators). Or if, because the wrong button was pressed, half of the globe flies off into space in fragments. Well, you should not put the nuclear briefcase button next to the button that pours coffee in the lobby coffee machine. To the manager it looks like this: some employee made a mistake, pressed the wrong button, and now there is no Earth, so all the blame lies with the employee. To the employee, it seems that his mistake was tiny: half asleep, he simply hit the wrong button. If the error feels insignificant, and that feeling sounds plausible, it is a signal that risk management was done very badly. This, of course, does not excuse the employee who destroyed humanity. But you should not work in offices where corporate policy requires a nuclear briefcase button in every coffee machine. Or, at the very least, you should not come closer than 3 meters to these machines. You can also try to get the corporate policy changed, or to fence these machines off. Unfortunately, in most companies “initiative is punishable”, so you will have to install the fences in your own time and at your own expense, and also answer for all the risks associated with these fences, even if you are not a manager and have neither the authority nor the ability to manage these risks.

Another case is possible: if a risk lies on the surface and periodically causes a lot of problems, but everyone ignores it, then the employee will feel that mistakes are inevitable, as are the problems they bring. Since people get used to the problems, there will be no sense that the errors are minor while their consequences are significant, but there will be a feeling that what is happening is hell.

Consider a few stories from IT.

Story 1. The suspicious manager.

Once upon a time there were two teams working in different cities for the benefit of one startup. The first team consisted of the product owner (a deputy director) and his assistant. Both of them combined the roles of manager, analyst and tester. The second team consisted of several programmers, a tester and a team leader. They were engaged in the automated collection and processing of information taken from the Internet and in publishing it on their website. Since the sources had different formats, which could change at the whim of their developers, it was not always possible to collect information from them correctly. For this reason the tester compared the information from the sources with the information on the project site day and night. Having found an error, the tester created a bug card on the board, the programmers fixed it, and in the next version of the program this information was collected correctly. In the other city, another tester, the owner's assistant, also controlled the quality. He would find an old, already fixed bug, but instead of creating a card he would report it to the product owner. The programmers would then cheerfully report that this bug had already been fixed: we have just run the data for this particular case through our latest algorithm, and everything went through without errors. The owner did not trust the programmers and believed that they were covering for themselves and for the tester who had failed to find the bug. Unfortunately, due to the specifics of the project, it was not possible to re-import all the information from the sources for the entire period with each new release: first, the volumes of information were large, and second, the information lost its relevance and value over time.

In general, after some time, in order to convey the seriousness of the situation to the remote team, the manager started threatening to dismiss the tester and punish the entire team, the team leader did not hesitate to berate the owner's assistant behind his back, and the working atmosphere was not very conducive to work.

Was it possible to predict this situation in advance? It would have been difficult, but possible. It was enough to put oneself in the place of each participant in turn, take into account his official duties, and imagine what he would do and what difficulties he would face. The owner in the other city has to make sure that the remote team does not slack off or sit idle, and also has to set the overall strategic direction of the project. The assistant has to help him in this. The team has to work. Naturally, when the process is not fully transparent, suspicions can creep in. Moreover, full transparency could not be achieved, because neither the manager nor his assistant had ever done any programming, and therefore they could not objectively evaluate the productivity of the remote team. Accordingly, the only way to prevent the conflict was to make sure that the "overseers" had nothing to find fault with. This risk should have been predicted and acted upon. But even when the risk materialized, the team leader and the manager did not start looking for ways to solve the problem. They started looking for someone to blame. The problem was partially solved by a programmer, who suggested that the test site should delete and reload the data for the previous month every week, so that the owner's assistant would look for errors in data produced by the latest algorithm. The main problem here is the lack of trust and respect between the teams in different cities, and this problem was never resolved. But at least the owner now had fewer reasons for discontent.

Moral: solve problems instead of shifting responsibility. (Responsibility for the whole project in any case lies with the project manager; you can read more about this in the manager's black book.) Solving a problem means analyzing the situation, finding the root cause of the problem, assessing whether it makes sense to mitigate the risk so that the problem does not recur, assigning risk mitigation measures to subordinates, and monitoring the implementation of these measures. Of course, before that, it is necessary to give instructions on how to eliminate the consequences of the risk that has already occurred.

Story 2. An abrupt transition to a new program

There was an accounting program; let us call it program A. It was decided to write a new program B that would pass data to program A through the database. More detailed data on the production process was entered into the new program B than into the old program A; B then calculated the necessary data and passed it to A. Users had to enter data into B and then continue working in the old program A. B was used not only to pass data to A, but also gave users some new features. The developer of program B knew neither the business logic of program A nor the Delphi language in which it was written, so he asked the author of A which data to write to which tables.

Unfortunately, this organization did not have a testing department, and the analyst who knew the business logic was also the boss and preferred to shift testing, and partly analysis, to her subordinates, the programmers. If a programmer misunderstood the analyst's rather abstract specification, the incorrectly implemented program went to the users without being checked by the analyst.

To prevent users from continuing to enter data in the old program, it was decided to forcibly take this ability away from them, abruptly moving them to the new, insufficiently tested program. The boss gave the author of the old program the task of changing it to prohibit data entry, and he promptly completed the task. I don't know, maybe the author of the old program naively believed that this time testing had been carried out. Perhaps the problem described above, getting used to the inevitability of problems, played a role. Be that as it may, he said nothing about the risks. Or maybe he subconsciously understood that, as the only person who knew the business logic of the old program (apart from the boss-analyst, of course), he would have to do the testing himself and would miss the deadline for his current task. In any case, the untested program was forced on the users. As expected, it turned out that some special cases had not been taken into account. Because of this, the users' work stalled for several hours on several occasions while the program was hastily patched. The boss placed the responsibility for this on the developer of the new program.

Let's analyze what mistakes were made in this case from the point of view of management. If in the previous story the team leader could at least theoretically foresee the risks and mitigate them, this boss, who had never worked in programming, had no such opportunity. Since the boss had to lead IT processes that she did not understand, she tried to shift more responsibility to those who did understand them, the people actually doing the work. But she did it implicitly. That is, after a couple of miscalculated risks, the programmers were supposed to figure out the boss's quite clear and unconcealed position: you are a programmer, you do all sorts of magic things that I don't understand, so you are responsible for everything that happens on the project. But nobody was directly given the task of studying risk management and putting it into practice. It happens that a manager is incompetent in some matters, but that does not matter, because he has subordinates to whom specific tasks can be assigned. You can't draw: assign it to a designer. You don't know how to build websites: assign it to a programmer. You don't know how to manage risk: assign it to a senior programmer. You don't know how to check the effectiveness of risk management: outsource it. The main thing is to have a clear understanding of who is responsible for what. It is considered good form to agree on job duties at the time of hiring. In this case, practically no one was doing risk management. And do not forget that most managers have a manager above them. If the project manager is responsible for the project as a whole, then the supervisor is responsible for all the projects of the unit. Accordingly, the fact that an analyst who is unable to lead manages the project, and does the analyst's job poorly because she is her own boss, is the supervisor's mistake. The Peter principle in action. Alas, there are even fewer good managers than good programmers.

Story 3. “Continuous Integration” via “broken telephone”

In the organization without a testing department from the previous story, there was a mechanism for deploying emergency patches when users found bugs while testing in production. The user finds a bug and sends a request to the first line of support. The first line of support, if it cannot solve the problem on its own, forwards the request to the project manager.

Changing the patch deployment process solves the problem and reduces the risks; searching for the guilty does not.

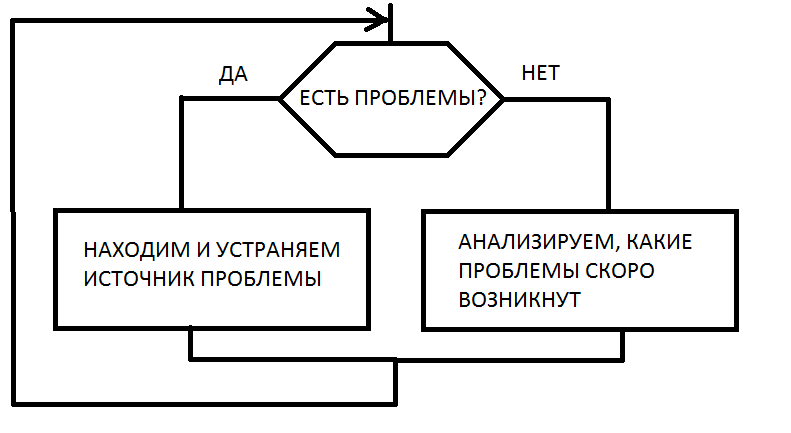

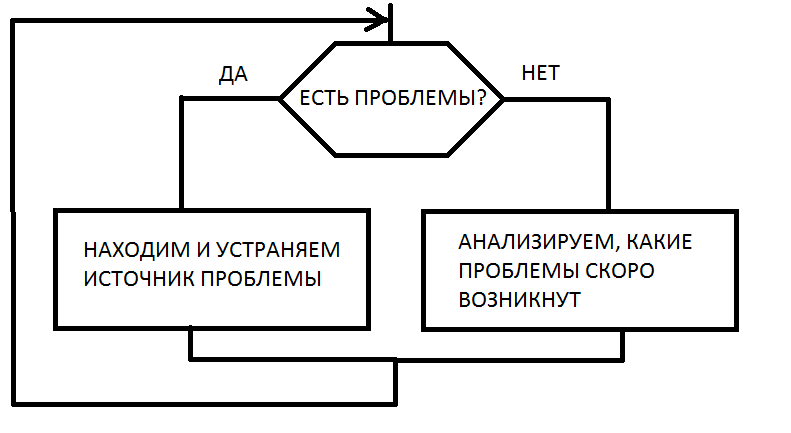

Consider a few simplified algorithms for the behavior of managers.

Algorithm 1.


Algorithm 2.

Thanks for your attention! :)

Source: https://habr.com/ru/post/273293/
