Evolution in the cloud: the experience of a service for working with social networks

Thanks to Artem Zhukovets, CTO of NovaPress Publisher, for preparing this article. NovaPress Publisher is a winning project of “Heroes of Russian startups”.

Hello! Today we will talk about the architecture of the NovaPress Publisher service and our experience of migrating it to the Microsoft Azure cloud.

NovaPress Publisher is a web service that makes it easier for companies to work with social networks. It lets you plan social media content days and months ahead. It can also pick up new content automatically as it appears on the company's website and post it to all of the company's social networks.

The service is currently used by more than 9,500 companies, including well-known brands: magazines, newspapers, television channels, and commercial and government organizations.


How it all began

Our service appeared in 2010, but we did not start working with Azure right away. In its first years, the service ran on virtual machines at various hosting providers.

Our task was to process and post several thousand records per minute to social networks, 24/7 and without delay.

At that time, the real problem was periodic interruptions in the operation of the virtual machines. They caused a huge number of unpublished entries to accumulate in the queue, or made user-created content temporarily unavailable.

In addition, during peak hours the load on the service was too high, and records were sent with delays.

At that time, the service had to send up to 6,000 entries per minute to social networks and synchronize up to 1,500 RSS feeds per minute. Today these figures are much higher.

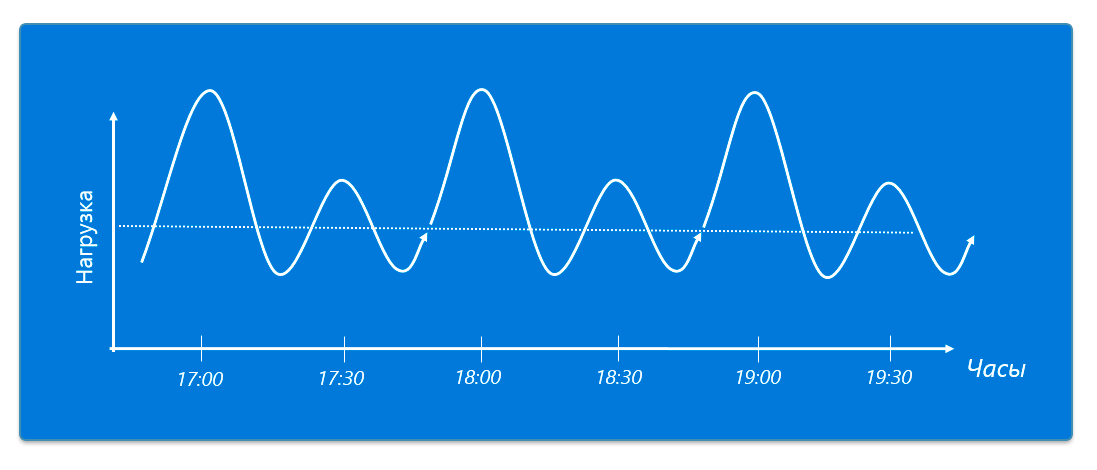

The load was highest in the morning and evening hours, especially on the hour and on the half hour (for example, 17:00, 17:30, 18:00, and so on).

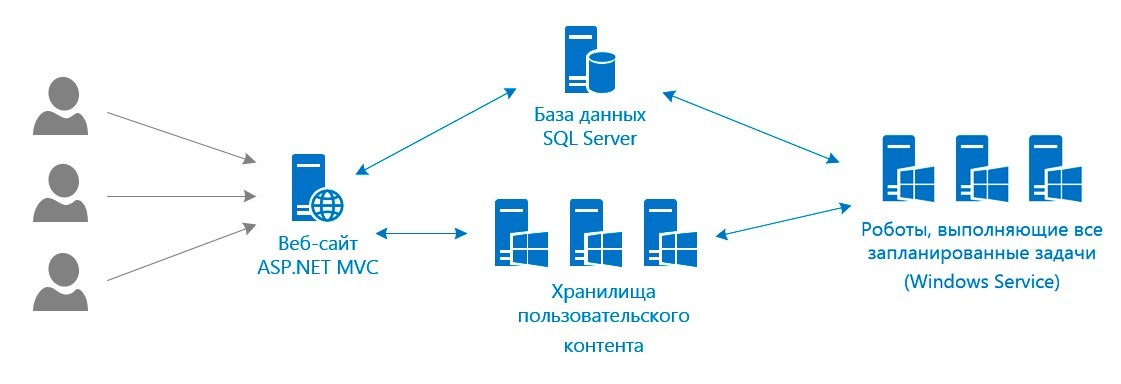

Our solution at that time was as follows:

The robots that perform scheduled tasks (publishing content to social networks) were duplicated on several servers, as was user-generated content. As the number of users grew, we added more servers.

Over time, however, scaling became more difficult and new bottlenecks appeared. In addition, periodic failures at hosting providers caused us significant problems. Instead of adding new features to the service, we spent a lot of time coping with the increased load and trying to keep the service highly available and publishing records without delay.

Therefore, we began to look for a new solution that could protect us from failures and provide flexible scaling depending on the load.

Finally, in 2013, we chose the Azure cloud, where scaling, replication and load balancing were available out of the box.

Switching to Azure

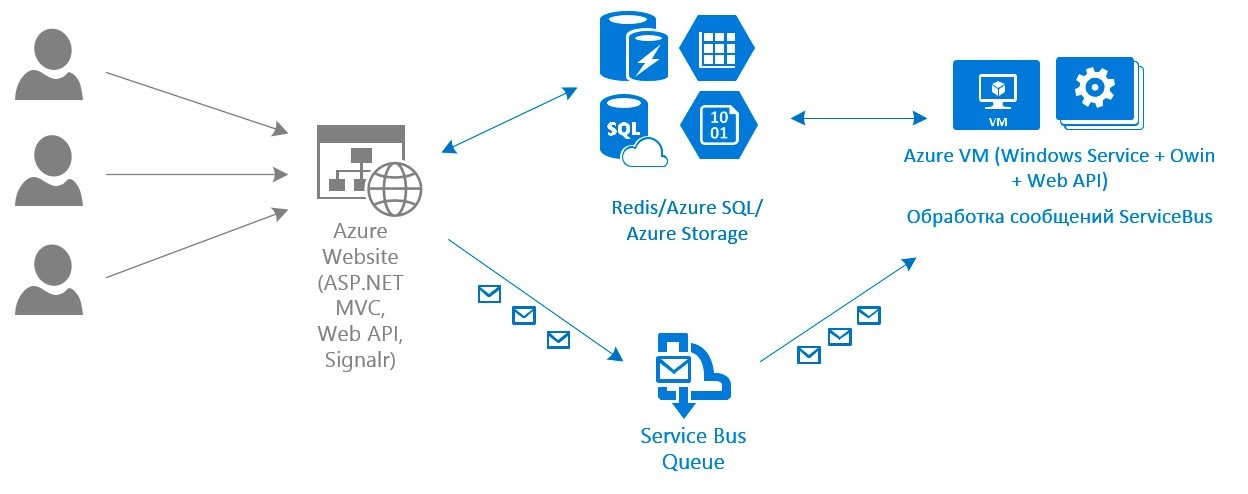

This is how our service was laid out after the move to the Azure cloud:

Users work with the service through the website (Azure Website, ASP.NET MVC). Data is stored in an Azure SQL database and in Azure Storage. A Redis cache in the cloud provides quick access to data.

All automation (posting to social networks, copying records from sites or other social networks) is performed by Windows services hosted on a large number of Azure virtual machines. These services run multiple threads and perform tasks as they arrive in the ServiceBus queue. When the number of tasks grows and the load increases, we add virtual machines.
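The worker model described above can be illustrated with a minimal sketch. It is not the service's actual code (the real workers are Windows services reading from a ServiceBus queue); here an in-process `queue.Queue` stands in for the message queue, and `run_workers` and `handler` are hypothetical names chosen for the example.

```python
import queue
import threading


def run_workers(tasks, handler, num_threads=4):
    """Process every task with a pool of worker threads and return the results.

    `tasks` stands in for messages in a queue; `handler` is a hypothetical
    callable that performs one task (for example, publishing one record).
    """
    task_queue = queue.Queue()
    for task in tasks:
        task_queue.put(task)

    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            try:
                # All tasks were enqueued up front, so an empty queue means
                # there is nothing left to do. A long-running service would
                # block and wait for new messages instead.
                task = task_queue.get_nowait()
            except queue.Empty:
                return
            outcome = handler(task)
            with results_lock:
                results.append(outcome)

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Because every worker pulls from the same queue, adding threads (or, in the cloud setup, virtual machines) increases throughput without changing the code that produces tasks. Results arrive in completion order, not submission order.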

In addition, at peak minutes (18:00, 18:30, and so on) the service reconfigures itself automatically: it reduces the number of robots that perform minor tasks and increases the number of robots that publish to social networks, so that content is published as quickly as possible and without delay.
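One way to picture this reconfiguration is the sketch below. The two-minute peak window and the 50% and 90% shares are assumptions made up for the example, as are the function names; the article does not state the actual values or how the service implements the switch.

```python
from datetime import datetime

# Assumption: a "peak" lasts for the first two minutes after :00 and :30.
PEAK_WINDOW_MINUTES = 2


def is_peak_minute(now, window=PEAK_WINDOW_MINUTES):
    """Return True if `now` falls within `window` minutes after :00 or :30."""
    return now.minute % 30 < window


def allocate_robots(total, now, normal_publish_percent=50, peak_publish_percent=90):
    """Split `total` robots between publishing and minor tasks.

    The percentages are illustrative assumptions, not the service's settings.
    """
    percent = peak_publish_percent if is_peak_minute(now) else normal_publish_percent
    publishers = total * percent // 100
    return {"publishing": publishers, "minor": total - publishers}
```

For example, `allocate_robots(20, datetime(2015, 12, 1, 18, 0))` assigns 18 robots to publishing and 2 to minor tasks, while the same call at 18:10 splits them 10 and 10.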

Transition process

We moved to Azure in several stages, starting with the most important:

- Database transfer. Before moving to the cloud, we used a SQL Server database. Migrating it to SQL Azure was quite simple with a special migration application. After the transition, we got a stable database without failures (availability 99.99%).

- Migrating user content. Before moving to the cloud, we ourselves duplicated user-generated content (photos, text) on several servers to keep it available in the event of a crash, but this required a lot of resources and disk space. When moving to the cloud, we transferred all content to Azure Storage with automatic geo-replication (all content is automatically duplicated in 3 Azure data centers). Availability level: 99.99%.

- Transferring the task queue to the ServiceBus. Previously, a queue of tasks (for example, records that need to be published) was tied to a database. After moving to Azure, the service sends all the tasks that need to be completed to the ServiceBus queue. This removed some of the load from the database and significantly increased the potential for further scaling up the service.

- Transferring the website to the cloud. The site was moved to Azure Website with automatic scaling turned on, so that it is always available and handles load better.

- Redis cache in the cloud. We use Redis to make posting to social networks as fast as possible. Content prepared for publication and its publication settings are stored in the cache so that, at the right moment, it can be sent to social networks as quickly as possible. Redis in the cloud has automatic replication out of the box and guarantees availability at least 99.99% of the time.
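The idea of keeping prepared content in the cache, described in the last step above, can be sketched as follows. This is an illustration under stated assumptions, not the service's code: `InMemoryCache` is a dictionary-backed stand-in for a Redis client, and the `post:<id>` key format, `prepare_post`, `load_post` and `load_from_db` are all hypothetical.

```python
import json


class InMemoryCache:
    """Stand-in for a Redis client: string keys mapped to string values."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


def prepare_post(cache, post_id, text, networks):
    """Store a ready-to-send post and its settings ahead of its scheduled time."""
    payload = json.dumps({"text": text, "networks": networks})
    cache.set(f"post:{post_id}", payload)


def load_post(cache, post_id, load_from_db):
    """Read a prepared post from the cache; fall back to the database on a miss.

    `load_from_db` is a hypothetical callable that returns the post as a dict.
    """
    raw = cache.get(f"post:{post_id}")
    if raw is not None:
        return json.loads(raw)
    post = load_from_db(post_id)
    cache.set(f"post:{post_id}", json.dumps(post))
    return post
```

With this layout, the publishing robot normally needs only one fast cache read at posting time, and the database is touched only when the post was not prepared in advance.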

Further steps

In December 2015, we released a new beta version that improved the internals of the service:

- The site of the service is now an AngularJS single-page application (SPA). All HTML forms, scripts and styles are minified and loaded at startup, so switching between forms afterwards is instant.

- Data access goes through a separate ASP.NET Web API web application. We made the Web API calls return the result of an operation as quickly as possible, and we actively use Redis caching.

We plan the following improvements:

- Working with the service in real time thanks to WebSocket (SignalR). Since several employees of a company often work with the service at the same time, we would like the changes made by each user to appear instantly for the other employees. The user will also see immediately when the service completes a task (for example, publishes entries to social networks or downloads new entries from the site).

- Monitoring and analytics in social networks. This will show how effectively the company works with social media and suggest how to improve these indicators. For this, we are looking at Stream Analytics and Machine Learning.

Results

As a result of the transition to Azure, we:

- ensured high availability of our service for customers. Everything runs like clockwork, and customers cannot help but like it.

- gained a performance margin for further growth of the service. The service now posts 23,000 entries per minute to social networks, and this is far from the limit.

- freed up our time for other important tasks by shifting the provision of high availability and scaling to Azure. So we were able to fully concentrate on improving the service and adding new features.

About the author

Artem Zhukovets - Technical Director, NovaPress Publisher

Source: https://habr.com/ru/post/273039/
