Deploying a complex Django project with Docker

Good afternoon, colleagues.

Today I will talk about the not quite simple concept of fast (up to an hour after several workouts) deploying a project for a team consisting of at least individual frontend and backend developers.

We have the baseline data: the development of the project begins, in which a “thin backend” is planned. Those. our back-up consists of cached pages (rendered by any template engine), three-dimensional models with accompanying logic (ORM), and a REST API acting as a controller. In fact, View in such a system is reduced and rendered to JS, since there are various reagents, angulyar and other things that allow front-line workers to consider themselves “white people”.

Our development environment looks like this: Ubuntu LTS (14.04), PyCharm, Python of any version (we will take 2.7 to launch a virtual environment on which there will be a similar version). Django (1.8)

')

We solve the following problems:

For our application, we will use Docker . About this tool on Habré said a lot. At once I will make a reservation that we are not planning to complicate the Production server yet. It is important for us to build a development environment with a basis for the subsequent application of the CI concept. But, in the framework of the current article we will work only with docker-compose and do not touch on the quick deployment methods. Fortunately, those of Docker are abundant.

Docker can be installed on Mac and Windows machines with varying success. But we will consider installing it on Ubuntu 14.04. There is an instruction for installing Docker on this system, but it can cause problems. From the part, you can write them off from this instruction note:

Therefore, we do not show off and set as recommended by another instruction :

And check the installation with the command:

Now let's create a virtual environment for running Docker:

Open PyCharm and create a project for work.

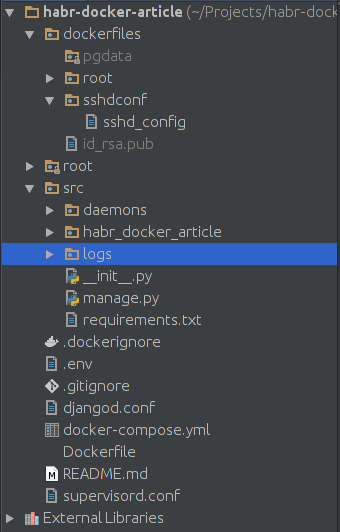

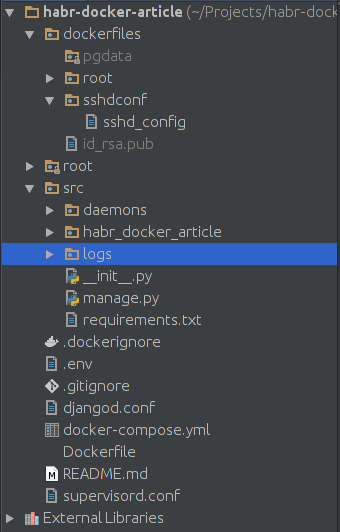

We create a project for any interpreter. Let it be a pure python project. In the diagram above, you see the minimum composition of the project. Supervisord will be responsible for starting the server. The .gitignore and .dockerignore files will allow you to specify those files that will not be committed to the project repository or will not be mounted in docker containers. Containers will be managed by the docker-compose.yml file, since it is simple as a stick and effective as a Kalashnikov rifle. For the main project, we will additionally create a Dockerfile to install the missing libraries.

The dockerfiles folder has a pgdata subfolder - in it we will have a PostgreSQL database in case we want to transfer data from one place to another. In dockerfiles / sshdconf let's put the settings for the SSH server. For the direct connection, we will not need it, but for setting up the environment in PyCharm - how. The id_rsa.pub key will allow PyCharm to connect to the container without dancing around the password. All you need is to create a bunch of SSH keys and copy (or transfer) the public key to the dockerfiles directory.

The src directory is the root of our project. Now our task is to expand the containers.

The docker-compose.yml file will look like this:

Notice the first container, postgresql. We uniquely give it the .env for the formation of primary data. The ports directive is responsible for port forwarding. The first digit before the colon is the port number by which this base will be available in our ubunt. The second digit is the port number that is forwarded from the container. Default PostgreSQL Port

We will collect the second container from the Dockerfile. Therefore, there is a build here. The launch command comes with a slight delay - in case we need time to launch the database and other tools inside the containers. Here we see all the connected directories and files. When forwarding ports, we have port 2225 - for SSH and 8005 - for the server. In sshd_config, we need to configure these directives for ourselves:

PermitRootLogin without-password

StrictModes no

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile% h / .ssh / authorized_keys

Remember that all this stuff will work only on the development team. On the production, we will not post it. Although, in principle, the ssh server will be available only locally.

/home/USERNAME/.pycharm_helpers/:/root/.pycharm_helpers/ - This mount command will allow us to run tests and debugs directly from PyCharm. Do not forget to register your USERNAME here.

In supervisord.conf we write the following:

[unix_http_server]

file = / opt / project / daemons / supervisor.sock; path to your socket file

[supervisord]

logfile = / opt / project / logs / supervisord.log; supervisord log file

logfile_maxbytes = 50MB; maximum size of logfile before rotation

logfile_backups = 10; number of backed up logfiles

loglevel = info; info, debug, warn, trace

pidfile = / opt / project / daemons / supervisord.pid; pidfile location

nodaemon = false; run supervisord as a daemon

minfds = 1024; number of startup file descriptors

minprocs = 200; number of process descriptors

user = root; default user

childlogdir = / opt / project / logs /; where child log files will live

[rpcinterface: supervisor]

supervisor.rpcinterface_factory = supervisor.rpcinterface: make_main_rpcinterface

[supervisorctl]

serverurl = unix: ///opt/project/daemons/supervisor.sock; use unix: // schem for a unix sockets.

[include]

# Uncomment this line for celeryd for Python

files = djangod.conf

In djangod.conf:

[program: django_project]

command = python /opt/project/manage.py runserver 0.0.0.0:8000

directory = / opt / project /

stopasgroup = true

stdout_logfile = / opt / project / logs / django.log

stderr_logfile = / opt / project / logs / django_err.log

Anyone who carefully reads configs, should pay attention to the fact that we have announced two folders that have not been created yet. So create the logs and daemons directories in src. Add. / Src / logs / * and / src / daemons / * respectively to .gitignore

Note that in django, usually, stdout_logfile is not written. All logs are showered in stderr_logfile. The setting was taken from some ready-made instruction, and you don’t really want to delete the line, because stdout_logfile is a fairly standard directive.

Now let's not forget about our .env file:

POSTGRES_USER = habrdockerarticle

POSTGRES_DB = habrdockerarticle

POSTGRES_PASSWORD = qwerty

POSTGRES_HOST = postgresql

POSTGRES_PORT = 5432

PGDATA = / var / lib / postgresql / data / pgdata

C_FORCE_ROOT = true

It can be added or not added to .gitignore - does not matter.

At the end, fill in the Dockerfile

FROM python: 2.7

RUN apt-get update && apt-get install -y openssh-server \

&& apt-get purge -y --auto-remove -o APT :: AutoRemove :: RecommendsImportant = false -o APT :: AutoRemove :: SuggestsImportant = false $ buildDeps

COPY ./src/requirements.txt ./requirements.txt

RUN pip install -r requirements.txt

Docker Hub does not hide from us the fact that our container will be serviced by Debian Jessie. In Dockerfile, we have planned the installation of the ssh server, the cleaning of unnecessary package lists and the installation requirements. By the way, the dependency file has not been created yet. We need to fix this bug and create requirements.txt in the src folder:

Django == 1.8

psycopg2

supervisor

The project is ready for the first launch! We will run one by one. First perform:

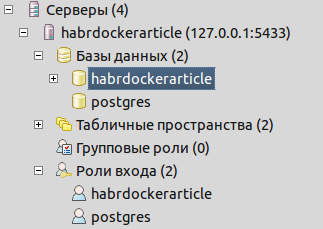

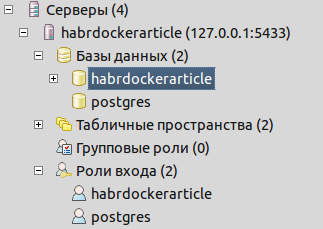

This operation will download the image we need to run the postgresql server. The server will start, the user and the database specified in .env will be created automatically. The team will block data entry for us, but we will not stop it yet. Make sure that the database and login roles are available by connecting via pgadmin

As we see, everything is already created for work:

Now, using the ctrl + C key combination in the console, stop the process. We need to collect the image of the project. So let's do it:

This command will compile a project for us, as well as execute all the commands from the Dockerfile. Those. we will have an ssh server installed, as well as dependencies from requirements.txt. Now we have the question of creating a Django project. You can create it in several ways. The most bulletproof is to put the required version of Django in our docker virtualenv on ubunt:

Django from venv can be removed or left for other projects. All that is left for us is to transfer the project internals to the root of the src folder.

Now we need to check our project and configure the connection to the database. First, change the settings in settings.py:

Then run the project containers:

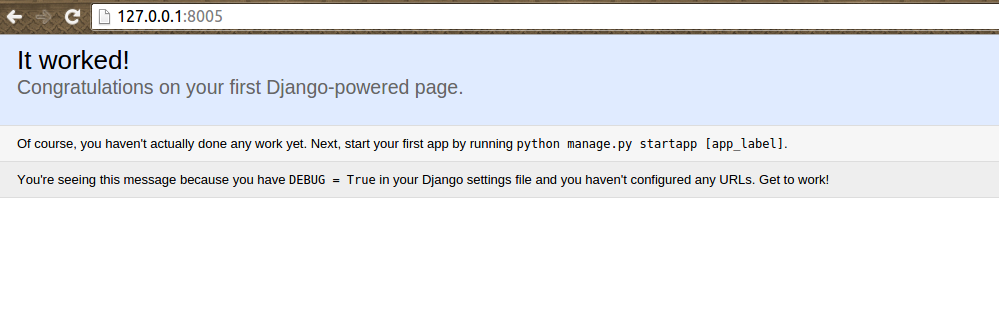

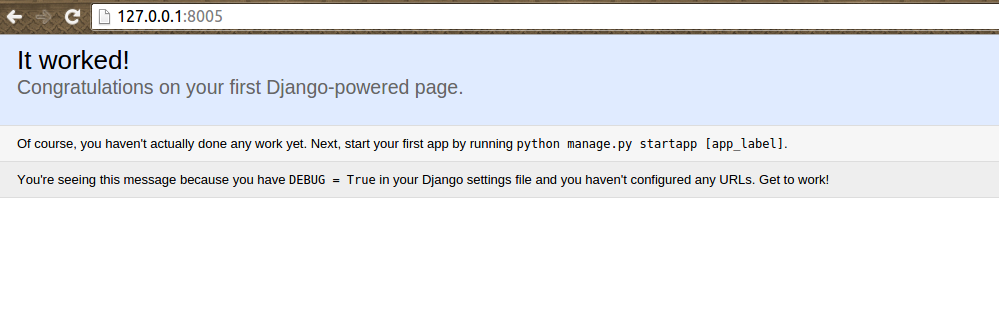

And make sure the positive result:

To stop a project and delete temporary files you can use:

If we have something changing in requirements.txt, use the following command to quickly reassemble

Let's check what structure of the project we have turned out:

The root folder in my code contains the ready helpers of PyCharm.

Now you can do something for which we started all this - connect Gulp to control the statics. The docker-compose.yml file will now look like this:

I added a new container and added it in the dependencies to the project.

Now I need to create a gulp folder in src for sources and static / scripts for compiled files. In the src / gulp folder, create a package.json file with the following contents:

Create gulpfile.js. in the src / gulp folder. I used my old sample file:

As we see from the config, we should upload some popular libraries to the src / gulp / front folder and create the src / gulp / front / project folder for scripts written by JS. Also, do not forget about creating a Dockerfile in src / gulp

FROM neo9polska / nodejs-bower-gulp

COPY package.json ./package.json

COPY node_modules ./node_modules

RUN npm install --verbose

Now a rather important question is node_modules. Without this folder, the container with Gulp will be frankly messed up. Here we have two options for obtaining this folder:

However, changing the rights is not necessary. Just developers will be forced to constantly execute the gulp rebuild command. However, all the code described in the article, I will lay out on Github and you can take the node_modules from there. This problem is related to docker-compose. But defeating her is easy.

So, as a result of the launch of containers

We should get this compiled file:

.

.

Done! The project can be poured into git and start working with it.

The complete restart command with rebuild looks like this:

To launch a project, a new participant in the process just needs to run:

To view the main log of the container running the application:

The Django logs from the project are written to the src / logs folder.

You can see the source code of the project in my GitHub .

PS Another important aspect is setting up the python interpreter in PyCharm. For this setting, just add the remote interpreter:

And note that PyCharm has a plugin for integrating with Docker. We use SSH connection, because we did not touch upon the issue of deploying the project to the docker-machine.

Today I will talk about a not-so-simple concept: fast deployment of a project (within an hour, after a few practice runs) for a team that includes at least separate frontend and backend developers.

Here is the starting point: development is beginning on a project that is planned to have a “thin backend”. That is, our backend consists of cached pages (rendered by any template engine), fat models with their accompanying logic (ORM), and a REST API acting as the controller. In effect, the View layer in such a system is reduced and moved into JS, since there are React, Angular, and other tools that let frontend developers work in comfort.

Our development environment looks like this: Ubuntu LTS (14.04), PyCharm, and any Python version (we will take 2.7 and run an environment with the same version), plus Django 1.8.


We solve the following problems:

- We must fully emulate the production environment and, better still, deliver the code to everyone involved in development together with its environment.

- We must isolate the project's runtime environment from the host operating system. We do not need problems with Python versions, node.js settings, or database deployment. Let our desktop system stay clean and bright.

- We must automate deployment of the project, which will also be worked on by a JS guru and possibly a skilled HTML/CSS coder. Moreover, a tester or a manager with basic technical knowledge should be able to bring the project up.

- We must be able to separate the dev version from production without any problems. Downtime should be minimal. Nobody will wait while the lead programmer fixes every variable in settings and sorts out other problems.

- Team members should not have to solve each other's problems. A JS developer should not dig into the subtleties of launching Celery, concatenating JS files, and so on. An HTML/CSS coder should not care what compiles their Sass code. This is part of deployment automation, but it is worth stressing: these problems are a nuisance, and writing detailed deployment instructions for a manual process takes time.

Docker installation

For our application we will use Docker. A lot has been written about this tool on Habr. I should say up front that we do not plan to complicate the production server yet. What matters to us is building a development environment that lays the groundwork for applying CI later. Within this article, however, we will work only with docker-compose and will not touch on fast deployment methods. Fortunately, Docker has plenty of them.

Docker can be installed on Mac and Windows machines with varying success, but we will look at installing it on Ubuntu 14.04. There are instructions for installing Docker on this system, but following them can cause problems. Part of the reason can be found in this note from the instructions:

Note: Ubuntu Utopic 14.10 is not officially supported.

Therefore, we do not show off and set as recommended by another instruction :

$ sudo apt-get update $ sudo apt-get install wget wget -qO- https://get.docker.com/ | sh And check the installation with the command:

$ docker run hello-world Now let's create a virtual environment for running Docker:

$ mkdir ~/venvs $ virtualenv ~/venvs/docker $ source ~/venvs/docker/bin/activate (docker) $ pip install docker-compose (docker) $ docker-compose -v Create a project

Open PyCharm and create a project for work.

We create the project for any interpreter. Let it be a pure Python project. In the diagram above, you can see the minimal project layout. Supervisord will be responsible for starting the server. The .gitignore and .dockerignore files list the files that should not be committed to the project repository or sent to Docker as part of the build context. The containers will be managed by the docker-compose.yml file, since it is as simple as a stick and as effective as a Kalashnikov rifle. For the main project, we will additionally create a Dockerfile to install the missing libraries.

The dockerfiles folder has a pgdata subfolder: it will hold the PostgreSQL database files in case we want to move the data from one place to another. The settings for the SSH server go into dockerfiles/sshdconf. We will not need SSH for connecting directly, but we certainly will for setting up the environment in PyCharm. The id_rsa.pub key lets PyCharm connect to the container without fiddling with passwords. All you need is to generate an SSH key pair and copy (or move) the public key into the dockerfiles directory.

The src directory is the root of our project. Now our task is to bring up the containers.
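Before bringing the containers up, it can help to confirm that the layout is complete. The following is only a sketch: the file names are the ones this article uses (some are introduced in later sections), while `EXPECTED_LAYOUT` and `missing_paths` are hypothetical names made up for the illustration.

```python
import os

# Paths named in the article, relative to the project root.
# Entries ending with "/" are directories; the rest are files.
EXPECTED_LAYOUT = [
    ".gitignore",
    ".dockerignore",
    ".env",
    "docker-compose.yml",
    "Dockerfile",
    "supervisord.conf",
    "djangod.conf",
    "dockerfiles/pgdata/",
    "dockerfiles/sshdconf/sshd_config",
    "dockerfiles/id_rsa.pub",
    "src/",
]


def missing_paths(root, layout=EXPECTED_LAYOUT):
    """Return the entries of `layout` that do not exist under `root`."""
    missing = []
    for entry in layout:
        path = os.path.join(root, entry.rstrip("/"))
        if entry.endswith("/"):
            exists = os.path.isdir(path)
        else:
            exists = os.path.isfile(path)
        if not exists:
            missing.append(entry)
    return missing
```

Calling `missing_paths(".")` from the project root returns an empty list once every file and folder is in place.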

Create containers

The docker-compose.yml file will look like this:

    postgresql:
      image: postgres:9.3
      env_file: .env
      volumes:
        - ./dockerfiles/pgdata:/var/lib/postgresql/data/pgdata
      ports:
        - "5433:5432"
    project:
      build: ./
      env_file: .env
      working_dir: /opt/project
      command: bash -c "sleep 3 && /etc/init.d/ssh start && supervisord -n"
      volumes:
        - ./src:/opt/project
        - ./dockerfiles/sshdconf/sshd_config:/etc/ssh/sshd_config
        - ./dockerfiles/id_rsa.pub:/root/.ssh/authorized_keys
        - /home/USERNAME/.pycharm_helpers/:/root/.pycharm_helpers/
        - ./supervisord.conf:/etc/supervisord.conf
        - ./djangod.conf:/etc/djangod.conf
      links:
        - postgresql
      ports:
        - "2225:22"
        - "8005:8000"

Look at the first container, postgresql. We pass it the .env file so that it can create the initial data. The ports directive is responsible for port forwarding. The number before the colon is the port on which the database will be reachable from our Ubuntu host. The number after the colon is the port forwarded from the container, which here is the default PostgreSQL port.
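To make the host/container order concrete, here is a small sketch that splits the three mappings from the compose file above. `parse_port_mapping` is a hypothetical helper, and it handles only the two-part `HOST:CONTAINER` form used in this article.

```python
def parse_port_mapping(mapping):
    """Split a compose-style "HOST:CONTAINER" string into two ints."""
    host_port, container_port = mapping.split(":")
    return int(host_port), int(container_port)


# The mappings from the docker-compose.yml in this article.
for service, mapping in [("postgresql", "5433:5432"),
                         ("project (ssh)", "2225:22"),
                         ("project (web)", "8005:8000")]:
    host_port, container_port = parse_port_mapping(mapping)
    print("%s: localhost:%d -> container port %d"
          % (service, host_port, container_port))
```

So pgAdmin on the host would connect to port 5433, while code inside the project container still talks to the database on 5432.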

We will build the second container from the Dockerfile, which is why it has a build directive. The launch command starts with a short delay in case the database and other tools inside the containers need time to start. Here we also see all the mounted directories and files. As for port forwarding, port 2225 is for SSH and 8005 is for the web server. In sshd_config, we need to set these directives:

    PermitRootLogin without-password
    StrictModes no
    RSAAuthentication yes
    PubkeyAuthentication yes
    AuthorizedKeysFile %h/.ssh/authorized_keys

Remember that all of this is meant for the development environment only. We will not deploy it to production. In any case, the SSH server will be reachable only locally.

/home/USERNAME/.pycharm_helpers/:/root/.pycharm_helpers/ is the mount that lets us run tests and the debugger directly from PyCharm. Do not forget to substitute your own USERNAME here.
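The compose file above delays the launch command with a fixed `sleep 3`. As an alternative (not what the article's setup does), one could poll the database port until it accepts connections. This is a minimal sketch; `wait_for_port` is a hypothetical helper, and a successful TCP connection does not guarantee that PostgreSQL is ready to serve queries.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds.

    Returns False if no connection could be made within `timeout` seconds.
    """
    deadline = time.time() + timeout
    while time.time() < deadline:
        try:
            conn = socket.create_connection((host, port), timeout=interval)
        except socket.error:
            # Not listening yet (or unreachable): wait and retry.
            time.sleep(interval)
        else:
            conn.close()
            return True
    return False
```

Inside the project container this might be called as `wait_for_port("postgresql", 5432)`, assuming the linked service is reachable under the name used in the compose file.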

In supervisord.conf we write the following:

    [unix_http_server]
    file=/opt/project/daemons/supervisor.sock ; path to your socket file

    [supervisord]
    logfile=/opt/project/logs/supervisord.log ; supervisord log file
    logfile_maxbytes=50MB ; maximum size of logfile before rotation
    logfile_backups=10 ; number of backed up logfiles
    loglevel=info ; info, debug, warn, trace
    pidfile=/opt/project/daemons/supervisord.pid ; pidfile location
    nodaemon=false ; run supervisord as a daemon
    minfds=1024 ; number of startup file descriptors
    minprocs=200 ; number of process descriptors
    user=root ; default user
    childlogdir=/opt/project/logs/ ; where child log files will live

    [rpcinterface:supervisor]
    supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface

    [supervisorctl]
    serverurl=unix:///opt/project/daemons/supervisor.sock ; use unix:// scheme for a unix socket

    [include]
    # Uncomment this line for celeryd for Python
    files = djangod.conf

In djangod.conf:

    [program:django_project]
    command=python /opt/project/manage.py runserver 0.0.0.0:8000
    directory=/opt/project/
    stopasgroup=true
    stdout_logfile=/opt/project/logs/django.log
    stderr_logfile=/opt/project/logs/django_err.log

Anyone who reads the configs carefully will notice that we have referenced two folders that have not been created yet. So create the logs and daemons directories in src, and add /src/logs/* and /src/daemons/* to .gitignore.

Note that with Django, stdout_logfile usually stays empty: all logs land in stderr_logfile. The setting was taken from a ready-made guide, and I would rather not delete the line, because stdout_logfile is a fairly standard directive.
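One way to avoid missing such folders is to list every directory the configs refer to. The sketch below assumes Python 3 on the host and uses the standard `configparser` module; `PATH_OPTIONS`, `DIR_OPTIONS`, `required_dirs`, and the shortened `SAMPLE` text are hypothetical names introduced for the illustration.

```python
import configparser
import posixpath

# Options in the configs above whose values are file paths.
PATH_OPTIONS = {"file", "logfile", "pidfile", "stdout_logfile", "stderr_logfile"}
# Options whose values are directories themselves.
DIR_OPTIONS = {"childlogdir"}


def required_dirs(config_text):
    """Return the sorted directories referenced by a supervisord-style config."""
    parser = configparser.ConfigParser(
        interpolation=None,              # keep '%' characters literal
        inline_comment_prefixes=(";",),  # strip trailing "; comment" parts
    )
    parser.read_string(config_text)
    dirs = set()
    for section in parser.sections():
        for option, value in parser.items(section):
            if option in PATH_OPTIONS:
                dirs.add(posixpath.dirname(value))
            elif option in DIR_OPTIONS:
                dirs.add(value.rstrip("/"))
    return sorted(dirs)


SAMPLE = """
[unix_http_server]
file=/opt/project/daemons/supervisor.sock ; path to your socket file

[supervisord]
logfile=/opt/project/logs/supervisord.log ; supervisord log file
childlogdir=/opt/project/logs/ ; where child log files will live
"""

print(required_dirs(SAMPLE))
```

These are paths inside the container. Since ./src is mounted at /opt/project, they correspond to src/daemons and src/logs on the host.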

Now let's not forget about our .env file:

    POSTGRES_USER=habrdockerarticle
    POSTGRES_DB=habrdockerarticle
    POSTGRES_PASSWORD=qwerty
    POSTGRES_HOST=postgresql
    POSTGRES_PORT=5432
    PGDATA=/var/lib/postgresql/data/pgdata
    C_FORCE_ROOT=true

You can add it to .gitignore or leave it out; it does not matter.
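Because every container reads its settings from this file, a quick sanity check can save debugging time. The sketch below is not how docker-compose itself parses the file; it is a deliberately strict reader that rejects spaces around `=`, on the assumption that it is safer not to rely on tools trimming them. `parse_env_file` is a hypothetical name.

```python
def parse_env_file(text):
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments.

    Raises ValueError for lines without '=' or with spaces around it.
    """
    env = {}
    for number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError("line %d: expected KEY=VALUE" % number)
        if key != key.strip() or value != value.strip():
            raise ValueError("line %d: remove spaces around '='" % number)
        env[key] = value
    return env
```

For example, `parse_env_file(open(".env").read())` returns a dictionary of the variables, or points at the first line that needs fixing.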

Finally, fill in the Dockerfile:

    FROM python:2.7
    RUN apt-get update && apt-get install -y openssh-server \
        && apt-get purge -y --auto-remove -o APT::AutoRemove::RecommendsImportant=false -o APT::AutoRemove::SuggestsImportant=false $buildDeps
    COPY ./src/requirements.txt ./requirements.txt
    RUN pip install -r requirements.txt

Docker Hub makes no secret of the fact that our container is based on Debian Jessie. In the Dockerfile we have planned the installation of the SSH server, the removal of unneeded packages, and the installation of the requirements. By the way, the dependency file has not been created yet. We need to fix that and create requirements.txt in the src folder:

    Django==1.8
    psycopg2
    supervisor

First start

The project is ready for the first launch! We will run one by one. First perform:

(docker) $ docker-compose run --rm --service-ports postgresql This operation will download the image we need to run the postgresql server. The server will start, the user and the database specified in .env will be created automatically. The team will block data entry for us, but we will not stop it yet. Make sure that the database and login roles are available by connecting via pgadmin

As we see, everything is already created for work:

Now, using the ctrl + C key combination in the console, stop the process. We need to collect the image of the project. So let's do it:

(docker) $ docker-compose build project This command will compile a project for us, as well as execute all the commands from the Dockerfile. Those. we will have an ssh server installed, as well as dependencies from requirements.txt. Now we have the question of creating a Django project. You can create it in several ways. The most bulletproof is to put the required version of Django in our docker virtualenv on ubunt:

(docker) $ pip install django==1.8 (docker) $ cd ./src (docker) $ django-admin startproject projectname (docker) $ cd ../ Django from venv can be removed or left for other projects. All that is left for us is to transfer the project internals to the root of the src folder.
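Moving the generated files up by hand works, but the step can also be scripted. The following is a sketch under the assumption that startproject produced src/projectname/manage.py and the package src/projectname/projectname; `flatten_startproject` is a hypothetical helper, and it does not handle name clashes with files already present in src.

```python
import os
import shutil
import tempfile


def flatten_startproject(src_dir, project_name):
    """Move the contents of src_dir/project_name up into src_dir."""
    outer = os.path.join(src_dir, project_name)
    # Rename the outer folder first: it contains a package with the same
    # name, which would otherwise collide when moved up one level.
    staging = tempfile.mkdtemp(prefix="startproject_", dir=src_dir)
    os.rmdir(staging)           # keep only the unique name
    os.rename(outer, staging)
    for entry in os.listdir(staging):
        shutil.move(os.path.join(staging, entry),
                    os.path.join(src_dir, entry))
    os.rmdir(staging)           # the staging folder is empty now
```

After `flatten_startproject("./src", "projectname")`, manage.py sits directly in src, which matches the /opt/project/manage.py path used in djangod.conf. Running `django-admin startproject projectname .` inside src should give the same layout without the extra move.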

Now we need to check our project and configure the connection to the database. First, change the settings in settings.py:

DATABASES = { 'default': { 'ENGINE': 'django.db.backends.postgresql_psycopg2', 'NAME': os.getenv('POSTGRES_DB'), 'USER': os.getenv('POSTGRES_USER'), 'PASSWORD': os.getenv('POSTGRES_PASSWORD'), 'HOST': os.getenv('POSTGRES_HOST'), 'PORT': int(os.getenv('POSTGRES_PORT')) } } Then run the project containers:

(docker) $ docker-compose up -d And make sure the positive result:

To stop a project and delete temporary files you can use:

(docker) $ docker-compose stop && docker-compose rm -f If we have something changing in requirements.txt, use the following command to quickly reassemble

(docker) $ docker-compose stop && docker-compose rm -f && docker-compose build --no-cache project && docker-compose up -d Let's check what structure of the project we have turned out:

The root folder in my code contains the ready helpers of PyCharm.
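One caveat about the settings.py fragment in this section: `int(os.getenv('POSTGRES_PORT'))` raises a TypeError when the variable is not set, for example if manage.py is run outside the container. A possible variation with fallbacks is sketched below. `database_settings` is a hypothetical helper, and the default values (`localhost`, `5432`, empty strings) are assumptions, not part of the original setup.

```python
import os


def database_settings(environ=os.environ):
    """Build a Django DATABASES dict from POSTGRES_* variables."""
    return {
        'default': {
            'ENGINE': 'django.db.backends.postgresql_psycopg2',
            'NAME': environ.get('POSTGRES_DB', ''),
            'USER': environ.get('POSTGRES_USER', ''),
            'PASSWORD': environ.get('POSTGRES_PASSWORD', ''),
            'HOST': environ.get('POSTGRES_HOST', 'localhost'),
            # int(None) raises TypeError, so fall back to the default port.
            'PORT': int(environ.get('POSTGRES_PORT', '5432')),
        }
    }
```

In settings.py this would be used as `DATABASES = database_settings()`.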

Connecting a container for the JS developer

Now we can do what all this was started for: connect Gulp to manage the static files. The docker-compose.yml file will now look like this:

    ...
    gulp:
      build: ./src/gulp
      command: bash -c "sleep 3 && gulp"
      volumes:
        - ./src/gulp:/app
        - ./src/static/scripts:/app/build
    project:
      ...
      links:
        - postgresql
        - gulp
      ...

I added a new container and listed it among the project's dependencies.

Now we need to create a gulp folder in src for the sources and a static/scripts folder for the compiled files. In the src/gulp folder, create a package.json file with the following contents:

    {
      "name": "front",
      "version": "3.9.0",
      "description": "",
      "main": "gulpfile.js",
      "scripts": {
        "test": "echo \"Error: no test specified\" && exit 1"
      },
      "author": "",
      "license": "BSD-2-Clause",
      "devDependencies": {
        "gulp": "~3.9.0",
        "gulp-uglify": "~1.4.2",
        "gulp-concat": "~2.6.0",
        "gulp-livereload": "~3.8.1",
        "gulp-jade": "~1.1.0",
        "gulp-imagemin": "~2.3.0",
        "tiny-lr": "0.2.1"
      }
    }

Create gulpfile.js in the src/gulp folder. I used an old sample file of mine:

    /**
     * Created by werevolff on 18.10.15.
     */
    var gulp = require('gulp'),
        uglify = require('gulp-uglify'),
        concat = require('gulp-concat'),
        refresh = require('gulp-livereload'),
        lr = require('tiny-lr'),
        server = lr();

    /**
     * Mainpage
     */
    gulp.task('mainpage', function () {
        gulp.src(['./front/jquery/*.js',
                  './front/bootstrap/*.js',
                  './front/angularjs/angular.min.js',
                  './front/angularjs/i18n/angular-locale_ru-ru.js',
                  './front/project/**/*.js'])
            .pipe(uglify())
            .pipe(concat('mainpage.js'))
            .pipe(gulp.dest('./build'))
            .pipe(refresh(server));
    });

    /**
     * Rebuild JS files
     */
    gulp.task('lr-server', function () {
        server.listen(35729, function (err) {
            if (err) return console.log(err);
        });
    });

    /**
     * Gulp Tasks
     */
    gulp.task('default', ['mainpage', 'lr-server'], function () {
        gulp.watch('./front/**/*.js', ['mainpage']);
    });

As the config shows, we should put some popular libraries into the src/gulp/front folder and create the src/gulp/front/project folder for the project's own JS scripts. Also, do not forget to create a Dockerfile in src/gulp:

    FROM neo9polska/nodejs-bower-gulp
    COPY package.json ./package.json
    COPY node_modules ./node_modules
    RUN npm install --verbose

Now a rather important matter: node_modules. Without this folder, the Gulp container will simply not work properly. There are two ways to obtain it:

- Build the project on the local machine and copy the modules folder from there.

- Remove everything below the FROM directive from the Dockerfile, run docker-compose run --rm gulp npm install --verbose, then change the permissions on the node_modules directory and restore what was below FROM.

Changing the permissions is optional, though; without it, developers will simply have to keep running the gulp rebuild command. In any case, I will publish all the code described in this article on GitHub, and you can take node_modules from there. The problem is related to docker-compose, but it is easy to overcome.

So, after launching the containers with

    (docker) $ docker-compose up -d

we should get the compiled file.

Done! The project can be pushed to git, and work on it can begin.

The complete restart command with rebuild looks like this:

    (docker) $ docker-compose stop && docker-compose rm -f && docker-compose build --no-cache gulp && docker-compose build --no-cache project && docker-compose up -d

To launch the project, a new team member just needs to run:

    (docker) $ docker-compose build --no-cache gulp && docker-compose build --no-cache project && docker-compose up -d

To view the main log of the container running the application:

    (docker) $ docker-compose logs CONTAINER_NAME

The Django logs from the project are written to the src/logs folder.

You can see the source code of the project on my GitHub.

P.S. Another important aspect is setting up the Python interpreter in PyCharm. To do this, just add a remote interpreter.

Note also that PyCharm has a plugin for Docker integration. We use an SSH connection because we have not covered deploying the project to docker-machine.

Source: https://habr.com/ru/post/272811/
