Beeline Data School: opening the curtain

Hi, Habr!

You have already heard many times that we run machine learning and data analysis courses at the Beeline Data School. Today we will open the curtain and tell you what our students learn and what problems they have to solve.


So, we have completed our first course. The second one is under way now, and the third starts on January 25th. In previous publications, we already began describing what we teach in our classes. Here we will talk in more detail about topics such as automatic text processing, recommender systems, Big Data analysis and successful participation in Kaggle competitions.

One of the lessons at the Beeline Data School is devoted to NLP: not neuro-linguistic programming, but Natural Language Processing, that is, automatic text processing.

Automatic text processing is a field with a high barrier to entry: to make an interesting business proposal or to take part in a text analysis competition such as SemEval or Dialogue, you need to understand machine learning methods, be able to use specialized text processing libraries so that you do not program routine operations from scratch, and have a basic understanding of linguistics.

Since it is impossible to cover every text processing task and the intricacies of solving it in a few classes, we concentrated on the most basic ones: tokenization, morphological analysis, keyword and keyphrase extraction, and measuring the similarity between texts.

We went through the main difficulties that arise when working with texts. For example, tokenization (splitting text into sentences and words) is far from as simple as it seems at first glance, because the notions of a word and a token are rather vague. The city name New York, for instance, formally consists of two separate words, yet for any reasonable processing these two words should be treated as one token rather than handled one by one. Likewise, a period does not always end a sentence, unlike question and exclamation marks: periods can also be part of an abbreviation or a number.
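To make these pitfalls concrete, here is a minimal pure-Python sketch of a rule-based tokenizer. It is an illustration only: the abbreviation list, the multi-word name list and the functions `split_sentences` and `tokenize` are hypothetical and are not NLTK's API. It shows why both a period after an abbreviation and the two words of "New York" need special handling.

```python
import re

# Hypothetical, deliberately tiny lists; real tokenizers ship or learn far larger ones.
ABBREVIATIONS = {"Dr.", "Mr.", "e.g.", "i.e.", "etc."}
MULTIWORD_NAMES = {("New", "York")}

# Try abbreviations first, then numbers with a decimal point, then words, then single punctuation marks.
_ABBREV = "|".join(re.escape(a) for a in sorted(ABBREVIATIONS, key=len, reverse=True))
TOKEN_RE = re.compile(rf"(?:{_ABBREV})|\d+(?:\.\d+)?|\w+|[^\w\s]")


def split_sentences(text):
    """Split after . ! ? followed by whitespace, unless the period ends a known abbreviation."""
    sentences, start = [], 0
    for match in re.finditer(r"[.!?](?=\s)", text):
        end = match.end()
        last_word = text[start:end].split()[-1]
        if match.group() == "." and last_word in ABBREVIATIONS:
            continue  # the period belongs to an abbreviation, not a sentence boundary
        sentences.append(text[start:end].strip())
        start = end
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)
    return sentences


def tokenize(sentence):
    """Split a sentence into tokens, then merge known multi-word names into one token."""
    raw = TOKEN_RE.findall(sentence)
    tokens, i = [], 0
    while i < len(raw):
        if i + 1 < len(raw) and (raw[i], raw[i + 1]) in MULTIWORD_NAMES:
            tokens.append(f"{raw[i]} {raw[i + 1]}")
            i += 2
        else:
            tokens.append(raw[i])
            i += 1
    return tokens


text = "Dr. Smith moved to New York. The rent rose by 2.5 percent! Why?"
for sentence in split_sentences(text):
    print(tokenize(sentence))
```

Note that "2.5" survives because the sentence rule requires whitespace after the period, and "Dr." survives because it is on the abbreviation list; anything not on the lists would still be split incorrectly, which is exactly why libraries rely on trained models.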

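Another example is morphological analysis (in its simplest form, determining parts of speech), which is also not easy because of morphological homonymy: different words can have coinciding forms, that is, be homonyms. For example, the Russian sentence translated as "He was surprised by a simple soldier" contains two homonyms at once: the word for "simple" can also be read as the noun "downtime", and the word for "soldier" can also be the genitive plural "of soldiers", which gives a second reading, "The soldiers' downtime surprised him".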
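Since the Russian homonymy does not survive translation, the sketch below uses the classic English sentence "Time flies like an arrow" instead. The toy lexicon and the function `candidate_analyses` are hypothetical; the point is only that before disambiguation a tagger faces the product of all per-word options, and its job is to choose one reading from context.

```python
from itertools import product

# Hypothetical toy lexicon: each word form maps to every part of speech it can have.
LEXICON = {
    "time": ["NOUN", "VERB"],
    "flies": ["NOUN", "VERB"],
    "like": ["VERB", "ADP"],  # ADP = adposition (preposition)
    "an": ["DET"],
    "arrow": ["NOUN"],
}


def candidate_analyses(words):
    """Enumerate every tag sequence the lexicon allows, before any disambiguation."""
    options = [LEXICON[w.lower()] for w in words]
    return [list(zip(words, tags)) for tags in product(*options)]


readings = candidate_analyses("Time flies like an arrow".split())
print(len(readings))  # 2 * 2 * 2 * 1 * 1 = 8
```

A real tagger would score these readings with a statistical model trained on an annotated corpus and keep the most probable one.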

Fortunately, students didn't have to implement these algorithms from scratch: they are all available in the Natural Language Toolkit (NLTK), a Python library for text processing. Thanks to this, our students could try all the methods in practice right away.
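Of the basic tasks listed earlier, keyword extraction and text similarity are easy to sketch without any library. The example below assumes TF-IDF weighting with cosine similarity, one common choice that the course may or may not have used; the function names are hypothetical. The highest-weighted terms of a document serve as keyword candidates, and the cosine of two TF-IDF vectors serves as a similarity score.

```python
import math
from collections import Counter


def tf_idf_vectors(docs):
    """Turn tokenized documents into sparse TF-IDF vectors (dicts term -> weight)."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors; 0.0 if either vector is all zeros."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_terms(vector, k=2):
    """Keyword candidates: the k terms with the highest TF-IDF weight."""
    return [term for term, _ in sorted(vector.items(), key=lambda item: -item[1])[:k]]


docs = [
    "the cat sat on the mat".split(),
    "the cat lay on the rug".split(),
    "stock prices fell sharply today".split(),
]
vecs = tf_idf_vectors(docs)
print(top_terms(vecs[0]), round(cosine(vecs[0], vecs[1]), 3), cosine(vecs[0], vecs[2]))
```

Terms that occur in every document get an IDF of zero, so frequent function words contribute little to either keywords or similarity.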

Next, we moved on to a popular topic: recommender systems.

Knowing how classic collaborative filtering algorithms based on user or item similarity work, and having studied SVD-style models such as Funk SVD, is not enough to build your own solution. So after the theory we moved on to practice and case studies.

Programming in Python, we learned how to turn a user-based algorithm into an item-based one with a few changes and tested both on the classic MovieLens benchmark. We studied how to evaluate models correctly with bimodal cross-validation (not only is a test set of users held out, but some of those users' ratings are hidden as well), and how the methods behave as the number n of top-n recommendations and the number k of nearest neighbors vary.
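A minimal sketch of the "few changes" idea, under simplifying assumptions: ratings are stored as `{user: {item: rating}}`, similarity is plain cosine without mean-centering (real implementations usually center ratings), and `predict`, `transpose` and the toy ratings are hypothetical. The item-based variant is just the user-based one run on the transposed matrix.

```python
import math


def transpose(ratings):
    """Turn {user: {item: rating}} into {item: {user: rating}}."""
    flipped = {}
    for user, items in ratings.items():
        for item, r in items.items():
            flipped.setdefault(item, {})[user] = r
    return flipped


def cosine(a, b):
    """Cosine similarity: dot product over shared keys, norms over each full vector."""
    common = a.keys() & b.keys()
    if not common:
        return 0.0
    dot = sum(a[key] * b[key] for key in common)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def predict(ratings, row, col, k=2):
    """Predict ratings[row][col] as a similarity-weighted mean over the k most similar rows."""
    neighbours = sorted(
        ((cosine(ratings[row], ratings[other]), other)
         for other in ratings if other != row and col in ratings[other]),
        reverse=True,
    )[:k]
    total = sum(sim for sim, _ in neighbours)
    if total == 0:
        return None
    return sum(sim * ratings[other][col] for sim, other in neighbours) / total


ratings = {
    "alice": {"matrix": 5, "titanic": 1, "alien": 4},
    "bob": {"matrix": 4, "titanic": 2, "alien": 5, "heat": 4},
    "carol": {"matrix": 1, "titanic": 5, "heat": 2},
}
user_based = predict(ratings, "alice", "heat")              # neighbours are other users
item_based = predict(transpose(ratings), "heat", "alice")   # neighbours are other movies
print(round(user_based, 2), round(item_based, 2))
```

Varying `k` here is exactly the knob the evaluation above explores.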

We learned about Boolean matrix factorization and singular value decomposition (SVD), and how these techniques can not only reduce the dimensionality of the data but also reveal hidden taste or thematic similarity between users and the objects being recommended.
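The SVD half of this can be illustrated in a few lines of NumPy (Boolean factorization has no standard NumPy routine and is not shown). The rating matrix is hypothetical, and treating unrated cells as 0 is a simplification made only for the sketch. Keeping the two largest singular values gives a rank-2 approximation, and users with similar tastes end up close together in the two-dimensional latent space.

```python
import numpy as np

# Hypothetical user x movie rating matrix; 0 means "not rated" (kept as 0 for simplicity).
R = np.array([
    [5, 4, 0, 1, 0],
    [4, 5, 1, 0, 1],
    [0, 1, 5, 4, 5],
    [1, 0, 4, 5, 4],
], dtype=float)

U, s, Vt = np.linalg.svd(R, full_matrices=False)

k = 2                                      # keep the two strongest latent "taste" factors
user_factors = U[:, :k] * s[:k]            # users in the latent space
item_factors = Vt[:k, :].T                 # movies in the same space
R_approx = user_factors @ item_factors.T   # best rank-k approximation of R

print(np.round(s, 2))
print(np.round(R_approx, 1))
```

Users 0 and 1 (who like the first two movies) land near each other, and far from users 2 and 3.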

As a case study of a hybrid recommender system for implicit-feedback data, we looked at recommending radio stations on an online radio hosting service.

On the one hand, even when a user's tastes are stable, their needs change with their activities (upbeat music for work or jogging, something calmer while cooking dinner). On the other hand, each station is run by a real DJ, so its repertoire can change too. At the same time, users are lazy, and there are few likes or additions to favorites.

It might seem that the only option is to use the implicit feedback and do collaborative filtering on listening frequency. But then new, little-known stations have almost no chance of being recommended, and a user whose tastes change abruptly (say, from classical to rock) will not instantly start to look like other rock fans.

Adaptive profiles built from track tags, which services such as last.fm provide for free, come to the rescue. If we build tag profiles for both the station and the user, they update automatically: each time a track tagged "indie rock" is played by the station or listened to by the user, one is added to the corresponding component of the station's and the listener's profile.

The more often tracks with a given tag are played or listened to, the larger that tag's contribution to the corresponding profile. Profile vectors can be normalized and compared with a vector similarity measure, or with the Kullback-Leibler divergence, which is considered more appropriate for probability distributions.
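A minimal sketch of such profiles, assuming tag counts stored in `collections.Counter` and additive smoothing with a small `eps` so that the KL divergence stays finite for tags a station has never played; the function names, tags and stations are hypothetical. KL divergence is asymmetric, and comparing the user's distribution against each station's (`D_KL(user || station)`) is an assumption of the sketch; smaller means a closer match.

```python
import math
from collections import Counter


def update_profile(profile, track_tags):
    """Add 1 to each tag of a track the station just played or the user just listened to."""
    profile.update(track_tags)


def to_distribution(profile, vocabulary, eps=1e-6):
    """Normalize tag counts into probabilities; eps keeps the KL divergence finite for unseen tags."""
    total = sum(profile[t] + eps for t in vocabulary)
    return {t: (profile[t] + eps) / total for t in vocabulary}


def kl_divergence(p, q):
    """D_KL(p || q) = sum over tags of p(t) * log(p(t) / q(t)); 0 means identical profiles."""
    return sum(p[t] * math.log(p[t] / q[t]) for t in p)


user, station_a, station_b = Counter(), Counter(), Counter()
for tags in (["indie rock", "guitar"], ["indie rock"], ["rock"]):
    update_profile(user, tags)
for tags in (["indie rock"], ["rock", "guitar"]):
    update_profile(station_a, tags)
for tags in (["classical"], ["classical", "piano"]):
    update_profile(station_b, tags)

vocabulary = set(user) | set(station_a) | set(station_b)
p_user = to_distribution(user, vocabulary)
stations = {"A": station_a, "B": station_b}
ranking = sorted(stations, key=lambda name: kl_divergence(p_user, to_distribution(stations[name], vocabulary)))
print(ranking)  # ['A', 'B']
```

Because profiles are just running counts, a user who switches from classical to rock shifts toward rock stations as soon as the new listens accumulate, with no retraining.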

The collaborative component need not be thrown out, though: it can (and should) be combined with profile-based ranking, at least as a linear combination, and the mixing coefficient can be learned.
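One simple way to learn the coefficient is a grid search on held-out users, sketched below. The names `blend`, `precision_at_k` and `fit_alpha`, the validation data, and the choice of precision@k as the objective are all assumptions for illustration; both score types are assumed to be "higher is better" and on a comparable 0..1 scale (a KL divergence would first have to be turned into a similarity).

```python
def blend(cf_scores, profile_scores, alpha):
    """alpha * collaborative score + (1 - alpha) * profile score, per station."""
    stations = cf_scores.keys() | profile_scores.keys()
    return {s: alpha * cf_scores.get(s, 0.0) + (1 - alpha) * profile_scores.get(s, 0.0)
            for s in stations}


def precision_at_k(scores, relevant, k):
    """Share of the top-k stations that the user actually listened to."""
    top = sorted(scores, key=scores.get, reverse=True)[:k]
    return len(set(top) & relevant) / k


def fit_alpha(validation, k=1, grid=None):
    """Grid-search the mixing coefficient that maximizes mean precision@k on held-out users."""
    grid = grid if grid is not None else [i / 10 for i in range(11)]

    def quality(alpha):
        return sum(precision_at_k(blend(cf, prof, alpha), relevant, k)
                   for cf, prof, relevant in validation) / len(validation)

    return max(grid, key=quality)


validation = [
    # (collaborative scores, profile scores, stations the user actually listened to)
    ({"a": 0.9, "b": 0.6, "c": 0.0}, {"a": 0.0, "b": 0.7, "c": 1.0}, {"b"}),
]
best_alpha = fit_alpha(validation)
print(best_alpha)
```

In this toy case neither component alone ranks the relevant station first, but a blend does, which mirrors the observation below that the hybrid beats its parts.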

In practice, the SVD-style model did not achieve good results on such implicit-feedback data, while the hybrid solution showed acceptable mean absolute error (MAE) and NDCG, as well as precision and recall.
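For reference, the metrics named above can be computed as follows. This is a sketch with binary relevance for NDCG (a station is either relevant or not); graded-relevance variants exist, and the function names and sample data are hypothetical.

```python
import math


def mae(true, pred):
    """Mean absolute error between true and predicted values."""
    return sum(abs(t - p) for t, p in zip(true, pred)) / len(true)


def precision_recall_at_k(ranked, relevant, k):
    """Precision@k: share of the top k that is relevant; recall@k: share of relevant found in the top k."""
    hits = len(set(ranked[:k]) & relevant)
    return hits / k, hits / len(relevant)


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: DCG of the ranking divided by the DCG of an ideal ranking."""
    dcg = sum(1 / math.log2(i + 2) for i, item in enumerate(ranked[:k]) if item in relevant)
    ideal = sum(1 / math.log2(i + 2) for i in range(min(k, len(relevant))))
    return dcg / ideal if ideal else 0.0


ranked = ["s1", "s2", "s3", "s4"]  # stations in recommended order
relevant = {"s1", "s3"}            # stations the user actually listened to
print(mae([4, 3], [3.5, 3.5]), precision_recall_at_k(ranked, relevant, 3), round(ndcg_at_k(ranked, relevant, 3), 3))
```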

The best part is that the final hybrid model turned out to be better than each of its individual components.

We also talked about the specifics of analyzing large amounts of data and how intuition that works well on small samples can fail when you move to large volumes. We got acquainted with Apache Spark, a system for parallel in-memory computing, learned how it differs from Hadoop, and discussed what is specific about implementing scalable machine learning algorithms. In the practical part, students counted words in the works of Shakespeare and analyzed server logs with Apache Spark.
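The word-count exercise is the classic map/reduce pattern. So that the sketch runs without a Spark cluster, it imitates the two steps in plain Python; the PySpark pipeline it mirrors is shown in the comment (`textFile`, `flatMap`, `map` and `reduceByKey` are real RDD methods, while the path and helper names here are hypothetical). In Spark, the lines are partitioned across machines and `reduceByKey` merges partial counts per word after a shuffle.

```python
import re
from collections import defaultdict
from itertools import chain

# Roughly equivalent PySpark pipeline (path is hypothetical):
#   sc.textFile("shakespeare.txt") \
#     .flatMap(lambda line: re.findall(r"[a-z']+", line.lower())) \
#     .map(lambda word: (word, 1)) \
#     .reduceByKey(lambda a, b: a + b)


def flat_map(lines):
    """flatMap step: each line becomes many lowercase words (punctuation dropped)."""
    return chain.from_iterable(re.findall(r"[a-z']+", line.lower()) for line in lines)


def reduce_by_key(pairs):
    """reduceByKey step with +: sum the counts for each word."""
    counts = defaultdict(int)
    for word, n in pairs:
        counts[word] += n
    return dict(counts)


lines = ["To be, or not to be: that is the question.", "Whether 'tis nobler in the mind to suffer"]
counts = reduce_by_key((word, 1) for word in flat_map(lines))
top = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:3]
print(top)
```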

We discussed Apache Spark's MLlib library of scalable machine learning algorithms in more detail: how to train linear and logistic regression, decision trees and random forests on several machines, and how to do matrix factorization. The practical task was to improve a movie recommender using the parallel version of the ALS (alternating least squares) algorithm for collaborative filtering.
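The core of ALS fits on one machine in NumPy, as sketched below under assumptions of our own (plain L2 regularization `reg`, rank 2, a toy matrix, hypothetical function name); this is not MLlib's implementation. With the item factors fixed, each user's factors are a small independent ridge regression on that user's observed ratings, and vice versa. That independence is what lets Spark solve the per-user and per-item problems in parallel.

```python
import numpy as np


def als(R, mask, rank=2, reg=0.1, n_iters=50, seed=0):
    """Alternating least squares fitted on observed entries only (mask is True where rated)."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = rng.normal(scale=0.1, size=(n_users, rank))
    V = rng.normal(scale=0.1, size=(n_items, rank))
    I = reg * np.eye(rank)
    for _ in range(n_iters):
        # Fix V: each user's factors solve an independent ridge regression.
        for u in range(n_users):
            idx = mask[u]
            Vu = V[idx]
            U[u] = np.linalg.solve(Vu.T @ Vu + I, Vu.T @ R[u, idx])
        # Fix U: each item's factors solve an independent ridge regression.
        for i in range(n_items):
            idx = mask[:, i]
            Ui = U[idx]
            V[i] = np.linalg.solve(Ui.T @ Ui + I, Ui.T @ R[idx, i])
    return U, V


# Hypothetical ratings; 0 marks a missing rating.
R = np.array([
    [5, 4, 0, 1, 0],
    [4, 5, 1, 0, 1],
    [0, 1, 5, 4, 5],
    [1, 0, 4, 5, 4],
], dtype=float)
mask = R > 0
U, V = als(R, mask)
pred = U @ V.T  # predictions for every cell, including the missing ones
rmse = np.sqrt(np.mean((pred[mask] - R[mask]) ** 2))
print(round(float(rmse), 3))
```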

Finally, we looked at data analysis competitions: why they are needed, what technology stack is used, and how the research process goes. We examined the Caterpillar Tube Pricing competition in detail: predicting the price of a tube assembly from its dimensions and materials. The solution relied mainly on generating new features from the existing categorical ones. We considered various methods based on one-hot encoding, label encoding and aggregating several features, and explained how to build validation when the data contains repeated objects.
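The sketch below shows these encodings with pandas on a hypothetical toy table; the column names and values are illustrative assumptions, not the competition's actual schema. The last step shows one way to validate with repeated objects: split by assembly ID, so every row of an assembly lands on the same side and the model is never validated on an assembly it has already seen.

```python
import pandas as pd

# Hypothetical toy slice of a tube-pricing table; column names are illustrative.
df = pd.DataFrame({
    "tube_assembly_id": ["TA-1", "TA-1", "TA-2", "TA-3", "TA-3", "TA-3"],
    "material_id": ["SP-1", "SP-1", "SP-2", "SP-1", "SP-1", "SP-1"],
    "supplier": ["S-A", "S-A", "S-B", "S-A", "S-A", "S-A"],
    "quantity": [1, 10, 1, 1, 5, 20],
})

# One-hot encoding: one 0/1 column per category.
one_hot = pd.get_dummies(df["material_id"], prefix="material")

# Label encoding: each category becomes an integer code (often fine for tree models).
df["supplier_code"], _ = pd.factorize(df["supplier"])

# Aggregation: per-assembly statistics copied back onto every row of that assembly.
df["n_quotes"] = df.groupby("tube_assembly_id")["quantity"].transform("count")
df["max_quantity"] = df.groupby("tube_assembly_id")["quantity"].transform("max")
df = pd.concat([df, one_hot], axis=1)

# Group-aware validation split: an assembly's rows never straddle train and validation.
ids = df["tube_assembly_id"].unique()
valid_ids = set(ids[::3])  # hypothetical rule: every third assembly goes to validation
is_valid = df["tube_assembly_id"].isin(valid_ids)
train, valid = df[~is_valid], df[is_valid]
print(len(train), len(valid))
```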

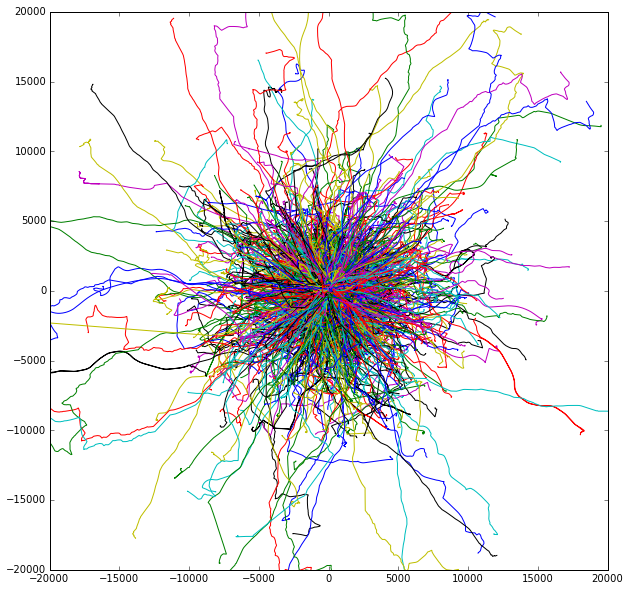

We also analyzed the AXA Driver Telematics Analysis task: identifying a driver's unique driving style. Such tasks come up more and more often as insurance telematics grows in popularity. The setup was as follows: tracks were given as (x, y) coordinates recorded at regular time intervals, 200 tracks for each of 2,300 drivers. It was also known that about 5-10% of the tracks are erroneous and do not belong to the driver they are assigned to. The task was to determine, for each driver, which tracks are really theirs and which are not. The same problem arises in real life, for example, to detect that someone else is driving the car.

The data was obfuscated, and all the tracks started from the origin.

To solve such a problem, you first need to come up with features for machine learning algorithms: something that distinguishes one driver from another, such as average speed or average acceleration. Descriptive statistics worked well for this task, for example, the 0th, 10th, 20th, ..., 100th percentiles of the distributions of speed, its first derivative (acceleration), its second and third derivatives, and the velocity along the main direction of motion. The dimensions of the track itself, the minimum and maximum x and y coordinates, are also worth including.

This unsupervised classification task can be reduced to a supervised one by constructing a training sample. We solve the problem separately for each driver, determining which tracks belong to them and which do not. We know that most of a driver's tracks really are theirs, and we can confidently say the opposite about the tracks of other drivers: they belong to other people. So we label the driver's own tracks with ones (1) and other drivers' tracks with zeros (0). This gives us a target variable on which we can train a classifier to separate the driver's tracks from everyone else's.
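A minimal NumPy sketch of the feature extraction, assuming each track is an `(n, 2)` array of x, y points sampled every `dt` seconds with at least 5 points; `track_features` is a hypothetical name. It covers the percentile statistics of speed and its first three derivatives plus the bounding box; the velocity along the main direction of motion is left out to keep it short.

```python
import numpy as np


def track_features(xy, dt=1.0):
    """Feature vector for one track: percentiles of speed and its derivatives, plus bounding box."""
    velocity = np.diff(xy, axis=0) / dt
    speed = np.linalg.norm(velocity, axis=1)
    series = [speed]
    for _ in range(3):  # 1st (acceleration), 2nd and 3rd derivatives of speed
        series.append(np.diff(series[-1]) / dt)
    percentiles = np.arange(0, 101, 10)  # 0th, 10th, ..., 100th
    stats = [np.percentile(s, percentiles) for s in series]
    return np.concatenate(stats + [xy.min(axis=0), xy.max(axis=0)])


# A straight track at constant speed 2: speed percentiles are all 2, derivatives all 0.
xy = np.column_stack([np.arange(10.0) * 2.0, np.zeros(10)])
features = track_features(xy)
print(features.shape)  # (48,)
```

For one driver, stacking `track_features(t)` for each of their 200 tracks gives the feature matrix used for classification.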

Then we build a random forest model (RandomForest) and use it as a binary classifier with two classes, 0 and 1. The trained model is then applied to the 200 tracks of the driver in question, and the predicted probability that each track belongs to class 1 is used as the answer. This procedure is repeated for every driver.
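A sketch of this per-driver step with scikit-learn's `RandomForestClassifier`. The function name `score_driver_tracks`, the number of trees and the synthetic data are assumptions. As described above, the model scores the same tracks it was trained on; out-of-fold predictions (for example via cross-validation) would give less optimistic probabilities, but even in-sample the mislabelled tracks stand out because the trees that did not see them in their bootstrap sample vote 0.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def score_driver_tracks(own_features, other_features, n_estimators=200, seed=0):
    """Probability that each of a driver's tracks really belongs to that driver.

    own_features:   (n_own, d) features of the driver's tracks, labelled 1.
    other_features: (n_other, d) features of tracks sampled from other drivers, labelled 0.
    """
    X = np.vstack([own_features, other_features])
    y = np.concatenate([np.ones(len(own_features)), np.zeros(len(other_features))])
    model = RandomForestClassifier(n_estimators=n_estimators, random_state=seed)
    model.fit(X, y)
    # Column 1 of predict_proba is P(class 1), i.e. "this track is the driver's".
    return model.predict_proba(own_features)[:, 1]


# Synthetic example: 10 of the driver's 200 tracks actually come from someone else.
rng = np.random.default_rng(0)
own = rng.normal(0.0, 1.0, size=(200, 5))
own[:10] = rng.normal(3.0, 1.0, size=(10, 5))
others = rng.normal(3.0, 1.0, size=(400, 5))
scores = score_driver_tracks(own, others)
print(scores[:10].mean() < scores[10:].mean())
```

In the full task this function would be called once per driver, with `other_features` drawn from the remaining drivers.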

So the absence of a target variable in the data does not mean that we cannot use supervised learning algorithms. Depending on the task, we can formulate formal criteria for defining the target ourselves and construct it. This is what data analysis is about: careful, thoughtful work with the data and an understanding of its nature.

So, the first course is over, the second is in full swing, and we are recruiting for the third, which starts on January 25, 2016. Enrollment is open now. Details, as usual, are on our official page, bigdata.beeline.digital.

Source: https://habr.com/ru/post/272799/
