VMware NSX Demo Review

Here is an overview of the VMware NSX solution, based on a demo given at the MUK-Expo 2015 exhibition. The video is in good quality (720p), so everything is clearly visible. For those who do not want to watch it, a transcript of the demo with screenshots follows below.

Disclaimers:

1. The transcript has been lightly edited.

2. Please keep in mind that spoken language differs from written language.

3. Screenshots are full size; otherwise nothing would be legible.


Colleagues, good afternoon. We are starting a demo of VMware NSX. So, what would we like to show you today? Our demo infrastructure is deployed at our distributor, MUK. It is rather small: three servers running ESXi 6.0, managed by a vCenter updated to Update 1, the freshest versions we can offer.

Before we used NSX in this infrastructure, we received several VLANs from the MUK network engineers. In them we could place a server segment, virtual desktops, a segment with Internet access (so that the virtual desktops can reach the Internet), and transit networks so that MUK colleagues can connect from their internal network. Any change, for example if I want to create a few more port groups for a demo or a pilot, or to build a more complex configuration, means contacting my MUK colleagues and asking them to configure something on their equipment.

If I need traffic between segments, I either negotiate with the network engineers again or simply deploy a small VM acting as a router, with two interfaces passing traffic back and forth, as we all used to do.

So using NSX here has two goals. First, it shows that NSX works on real hardware, not just in nested virtual machines, and that it is genuinely convenient. Second, it really does simplify some of our tasks. So what do we see at the moment? We see which virtual machines appeared in this infrastructure after NSX was deployed. Some of them are mandatory: NSX cannot work without them. Others appeared as a result of how this particular infrastructure was set up.

NSX Manager is mandatory. It is the main server through which we interact with NSX: it provides the graphical interface in the vCenter web client, and it exposes a REST API so that actions can be automated, either with scripts or from various cloud portals, for example VMware vRealize Automation or portals from other vendors. To work, NSX also needs a cluster of NSX Controllers, servers that play a service role. They keep track of which physical ESXi server currently hosts which IP and MAC addresses, which new servers have been added and which have dropped off, and they distribute this information to the ESXi servers. In practice, when we create an L2 segment with NSX that must be available on several ESXi servers, and a virtual machine sends an IP packet to another virtual machine in the same L2 segment, the NSX Controllers know exactly which host the packet must be delivered to. They regularly push this information to the hosts, so each host holds a table of which IP and MAC addresses are located on which hosts, that is, where packets physically need to be sent.
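To make the controllers' role more concrete, here is a minimal Python sketch of the lookup described above. It assumes a simplified table keyed by (segment ID, VM MAC address) that maps to the IP address of the VXLAN interface on the ESXi host running that VM. The class and function names, MAC addresses, and IP addresses are hypothetical, and the real controller protocol and data structures are not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Key = Tuple[int, str]  # (VXLAN segment ID, VM MAC address)


@dataclass
class ControllerTable:
    """Toy model of the state the controller cluster keeps (hypothetical structure):
    (segment ID, VM MAC) -> IP address of the VTEP on the ESXi host running that VM."""
    entries: Dict[Key, str] = field(default_factory=dict)

    def vm_reported(self, vni: int, mac: str, vtep_ip: str) -> None:
        # A host reports that a VM with this MAC is now running on it
        # (new VM, power-on or vMotion); the latest report wins.
        self.entries[(vni, mac.lower())] = vtep_ip

    def host_removed(self, vtep_ip: str) -> None:
        # A host dropped out: forget every VM that was located on it.
        self.entries = {k: v for k, v in self.entries.items() if v != vtep_ip}

    def push_to_hosts(self) -> Dict[Key, str]:
        # Each host receives its own copy of the table.
        return dict(self.entries)


def destination_vtep(host_table: Dict[Key, str], vni: int, dst_mac: str) -> Optional[str]:
    """On the sending host: which hypervisor must the encapsulated frame go to?
    None means the location is unknown to this host."""
    return host_table.get((vni, dst_mac.lower()))


if __name__ == "__main__":
    ctrl = ControllerTable()
    ctrl.vm_reported(18001, "00:50:56:AA:00:01", "192.168.50.11")
    ctrl.vm_reported(18001, "00:50:56:AA:00:02", "192.168.50.12")
    table = ctrl.push_to_hosts()
    print(destination_vtep(table, 18001, "00:50:56:aa:00:02"))  # 192.168.50.12
```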

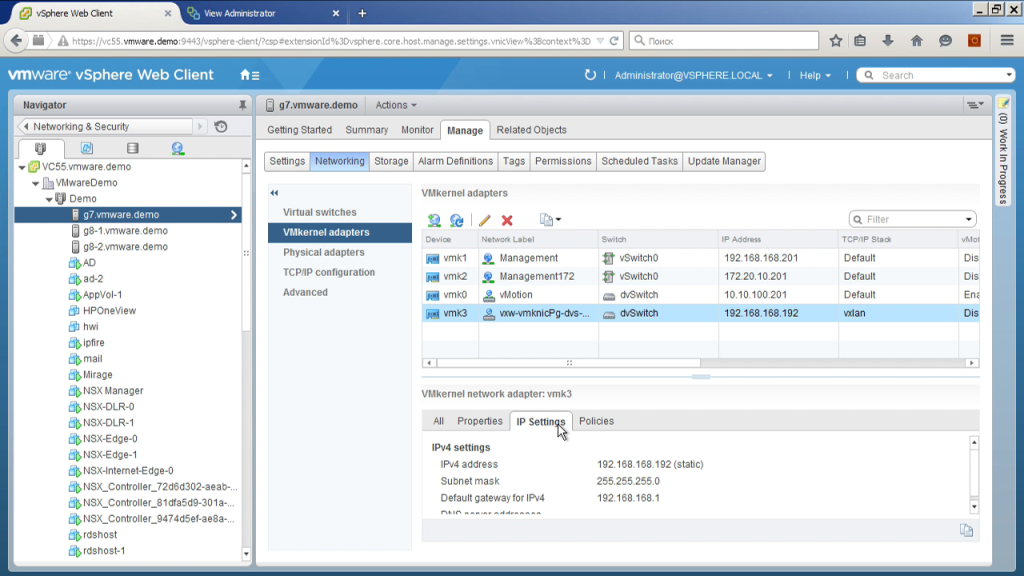

If we open the configuration of a host, we can see the interface through which these packets are transmitted over the network. These are the parameters of the VMkernel adapter: this host will use this IP address in its VXLAN packets. The monitor here is a bit small, so I have to scroll.

We also see that a separate TCP/IP stack is used to transmit these packets, so it can have its own default gateway, different from the one used by the ordinary VMkernel interfaces. From the physical network's point of view, to deploy this for the demo I needed one VLAN in which to place these interfaces. I also checked that this VLAN extends beyond these three servers, so that if a fourth or fifth server appears, for example not in the same chassis as these blades but somewhere separate, I can add it. The new server may not even be in this VLAN, just in a network routed to it: it will sit on another network, but the hosts will still be able to communicate, and I will be able to stretch my NSX L2 networks from these three servers to the new fourth one as well.
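A separate TCP/IP stack with its own gateway is what lets a host in another, routed subnet join. The sketch below shows the next-hop decision a host makes for an encapsulated packet, under the assumption that the VXLAN stack has a single connected subnet plus its own default gateway; all addresses are hypothetical.

```python
import ipaddress


def vtep_next_hop(local_vtep: str, vxlan_gateway: str, remote_vtep: str) -> str:
    """Pick the next hop for an encapsulated packet leaving this host.

    Assumes the VXLAN TCP/IP stack has one connected subnet (the VTEP
    VMkernel interface, given in CIDR form) and its own default gateway,
    independent of the gateway of the default stack.
    """
    local_net = ipaddress.ip_interface(local_vtep).network
    remote = ipaddress.ip_address(remote_vtep)
    if remote in local_net:
        return str(remote)   # same VLAN/subnet: deliver directly
    return vxlan_gateway     # routed subnet: hand the packet to the VXLAN stack's gateway


if __name__ == "__main__":
    # Hypothetical addressing: the three demo hosts share 192.168.50.0/24,
    # a fourth host sits in a different, routed subnet.
    print(vtep_next_hop("192.168.50.11/24", "192.168.50.1", "192.168.50.12"))  # 192.168.50.12
    print(vtep_next_hop("192.168.50.11/24", "192.168.50.1", "10.20.30.14"))    # 192.168.50.1
```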

Now let's move on to what NSX itself looks like: what we can do with it using the mouse and keyboard. We are not talking about the REST API now, which we would use to automate something.

The Installation section (let's go there now, I hope the screen switches) shows us the Manager and how many controllers we have; the controllers themselves are deployed from here. We download the Manager from the VMware website as a virtual appliance template and deploy it into vCenter. Next, we configure the Manager, giving it a login and password for the vCenter server so that it can register its plugin there. After that, we connect to this interface and say: “We need to deploy controllers”.

At a minimum, for example on a test bench, even a single controller will work, but this is not recommended. The recommended configuration is three controllers, preferably on different servers and different datastores, so that the failure of any one component cannot take out all three controllers at once.
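The placement recommendation can be expressed as a simple check. This is a hypothetical helper, not an NSX feature: it takes a mapping of controller names to (host, datastore) pairs and reports where a single failure could take out more than one controller. All names are made up.

```python
from collections import Counter
from typing import Dict, List, Tuple


def placement_warnings(controllers: Dict[str, Tuple[str, str]]) -> List[str]:
    """controllers: controller name -> (ESXi host, datastore).
    Returns warnings; an empty list means the placement follows the
    recommendation of three controllers on separate hosts and datastores."""
    warnings = []
    if len(controllers) < 3:
        warnings.append(f"only {len(controllers)} controller(s), 3 are recommended")
    hosts = Counter(host for host, _ in controllers.values())
    datastores = Counter(ds for _, ds in controllers.values())
    # Any host or datastore carrying two controllers is a shared point of failure.
    warnings += [f"{n} controllers share host {h}" for h, n in hosts.items() if n > 1]
    warnings += [f"{n} controllers share datastore {d}" for d, n in datastores.items() if n > 1]
    return warnings


if __name__ == "__main__":
    good = {"ctrl-1": ("esx-01", "ds-01"), "ctrl-2": ("esx-02", "ds-02"), "ctrl-3": ("esx-03", "ds-03")}
    print(placement_warnings(good))  # []
```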

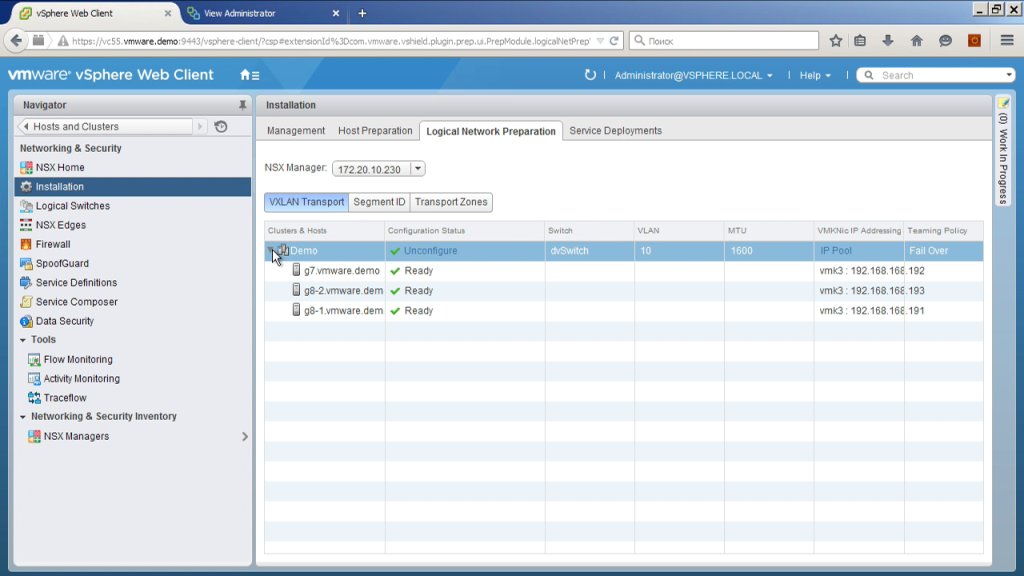

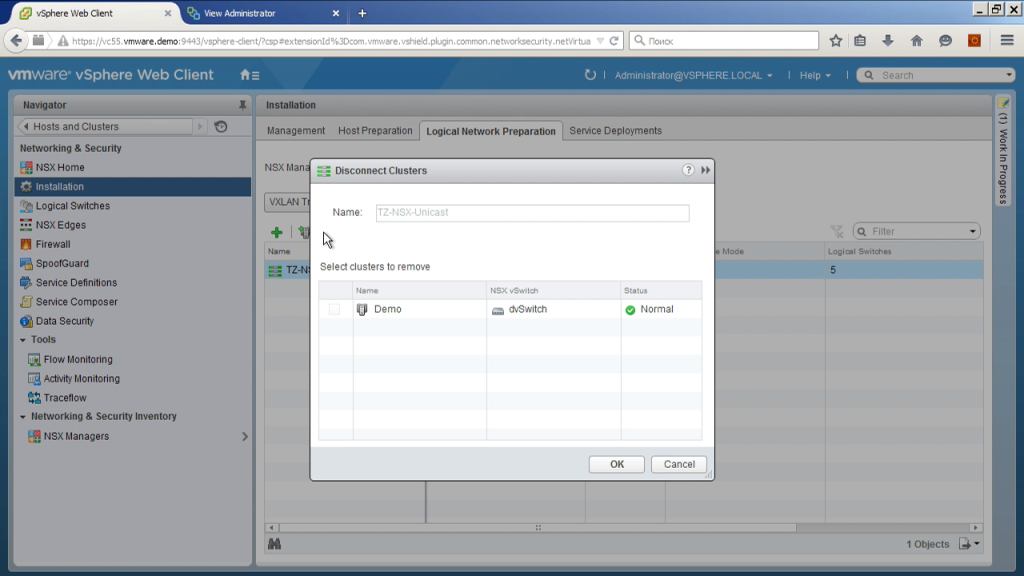

We can also choose which hosts and clusters managed by this vCenter server will work with NSX. The entire infrastructure managed by a single vCenter server does not have to use it; it can be limited to selected clusters. Once the clusters are chosen, we need to install additional modules on them that allow the ESXi server to encapsulate and decapsulate VXLAN packets, and this is again done from this interface. You don't need to SSH anywhere or copy any modules manually.

Next, we choose how the VMkernel interface will be set up: which distributed switch and which uplinks to use, and which load-balancing policy to apply when there are several links. Depending on that policy, a host gets one IP address of this type or sometimes several. Here we use the mode with one address per host.
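How many VXLAN VMkernel interfaces a host gets depends on the teaming policy. The sketch below encodes our understanding of NSX for vSphere: the source-port-ID and source-MAC load-balancing policies create one VTEP per uplink, while failover, static EtherChannel, and LACP use a single VTEP. The policy names and this mapping are assumptions to verify against the documentation for your NSX version.

```python
# Assumed teaming policy names and VTEP behaviour (verify for your NSX version).
MULTI_VTEP = {"Load Balance - SRCID", "Load Balance - SRCMAC"}
SINGLE_VTEP = {"Fail Over", "Static EtherChannel", "Enhanced LACP"}


def vteps_per_host(teaming_policy: str, uplinks: int) -> int:
    """Number of VTEP VMkernel interfaces (and IP addresses) a host gets."""
    if uplinks < 1:
        raise ValueError("a host needs at least one uplink")
    if teaming_policy in MULTI_VTEP:
        return uplinks      # one VTEP per uplink, traffic spread across them
    if teaming_policy in SINGLE_VTEP:
        return 1            # a single VTEP; redundancy comes from the teaming itself
    raise ValueError(f"unknown teaming policy: {teaming_policy!r}")


if __name__ == "__main__":
    print(vteps_per_host("Fail Over", 2))             # 1
    print(vteps_per_host("Load Balance - SRCID", 2))  # 2
```

With two uplinks and a single-VTEP policy, each host consumes one address from the VTEP pool; a multi-VTEP policy doubles that, which matters when sizing the pool.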

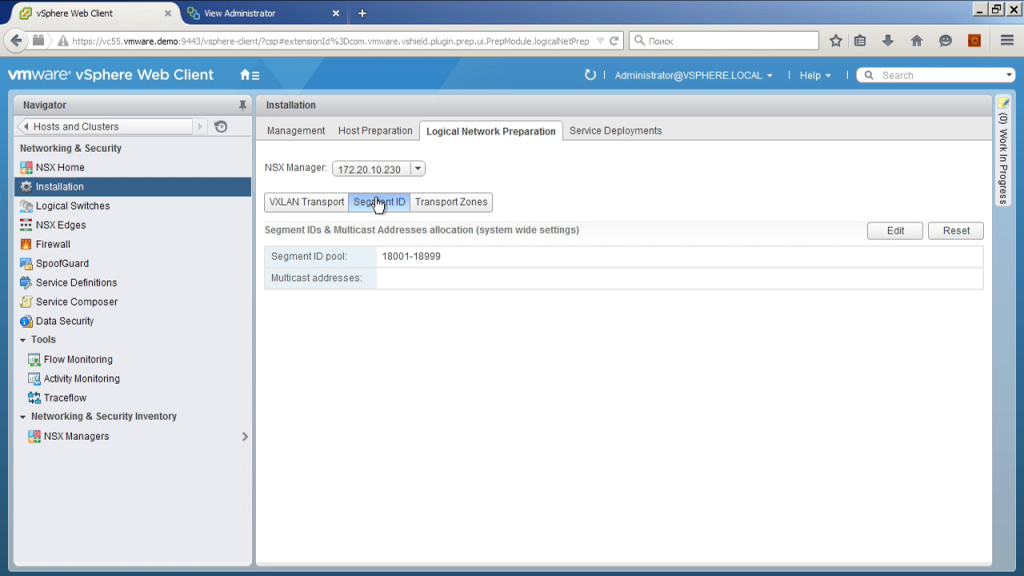

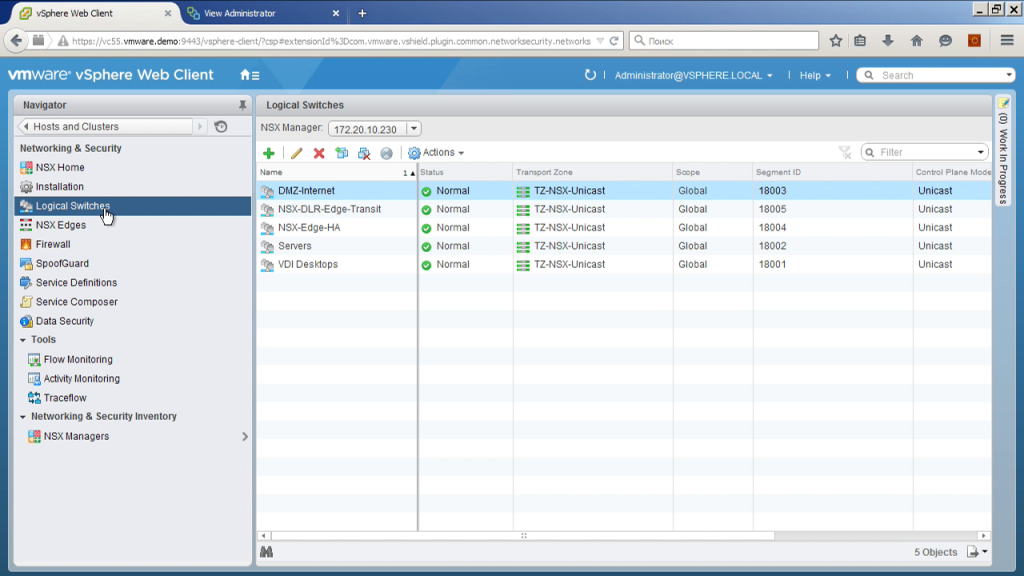

Next, we select the VXLAN IDs. VXLAN is a technology reminiscent of VLANs: additional tags that let you isolate different types of traffic within one physical segment. While there are about 4 thousand VLAN IDs, there are about 16 million VXLAN IDs. Here we choose a range of VXLAN segment IDs that will be assigned automatically as logical switches are created.

How do you choose them? Basically, as you wish; if you have a large infrastructure with several NSX deployments, pick the ranges so that they do not overlap. It's quite simple, the same as with VLANs. I use the range from 18001 to 18999.
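The arithmetic behind "4 thousand versus 16 million" is the width of the ID field: 12 bits for a VLAN ID, 24 bits for a VXLAN segment ID. The sketch below is a simplified allocator, not NSX Manager's real one. It shows the size of the demo's 18001 to 18999 range, sequential assignment as logical switches are created, and the overlap check that matters when several NSX deployments coexist. The second range is hypothetical.

```python
VLAN_ID_BITS = 12  # 802.1Q VLAN ID field: 4096 values, 4094 usable
VNI_BITS = 24      # VXLAN Network Identifier field (RFC 7348)


class SegmentIdPool:
    """Sketch of handing out segment IDs from an inclusive range as logical
    switches are created; not NSX Manager's actual allocator."""

    def __init__(self, first: int, last: int):
        if not 0 < first <= last < 2 ** VNI_BITS:
            raise ValueError("range must lie inside the 24-bit VNI space")
        self.first, self.last = first, last
        self._next = first

    def size(self) -> int:
        return self.last - self.first + 1

    def overlaps(self, other: "SegmentIdPool") -> bool:
        # Two inclusive ranges overlap unless one ends before the other starts.
        return self.first <= other.last and other.first <= self.last

    def allocate(self) -> int:
        if self._next > self.last:
            raise RuntimeError("segment ID pool exhausted")
        vni = self._next
        self._next += 1
        return vni


if __name__ == "__main__":
    print(2 ** VLAN_ID_BITS, 2 ** VNI_BITS)               # 4096 16777216
    demo = SegmentIdPool(18001, 18999)                    # the range used in this demo
    print(demo.size(), demo.allocate(), demo.allocate())  # 999 18001 18002
    other_site = SegmentIdPool(19000, 19999)              # hypothetical second deployment
    print(demo.overlaps(other_site))                      # False
```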

Next, we can create a so-called transport zone. What is a transport zone? Imagine a fairly large infrastructure, say about a hundred ESXi servers, about 10 servers per cluster, so 10 clusters. We may not use all of them with NSX. The ones we do use can be treated as one infrastructure, or we can divide them into several groups: say the first three clusters are one island and clusters four through ten are another. By creating transport zones, we define how far a VXLAN segment can spread. I only have three servers here, so there is not much to play with, and everything is simple: all the hosts go into this one zone.
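A transport zone effectively bounds the set of hosts on which a logical switch exists. This sketch models the ten-cluster example with two hypothetical islands; the zone, cluster, and host names are made up.

```python
from typing import Dict, List, Set


def hosts_in_zone(zones: Dict[str, List[str]], clusters: Dict[str, List[str]], zone: str) -> Set[str]:
    """All ESXi hosts a logical switch in `zone` can reach: the hosts of every
    cluster that belongs to that transport zone."""
    return {host for cluster in zones[zone] for host in clusters[cluster]}


def can_attach(vm_host: str, zones: Dict[str, List[str]], clusters: Dict[str, List[str]], zone: str) -> bool:
    # A VM can join a segment only if its host is inside the segment's zone.
    return vm_host in hosts_in_zone(zones, clusters, zone)


if __name__ == "__main__":
    # Hypothetical layout from the example: 10 clusters of 10 hosts each.
    clusters = {f"cl{c}": [f"esx-{c:02d}-{h:02d}" for h in range(1, 11)] for c in range(1, 11)}
    zones = {
        "island-a": ["cl1", "cl2", "cl3"],
        "island-b": [f"cl{c}" for c in range(4, 11)],
    }
    print(len(hosts_in_zone(zones, clusters, "island-a")))       # 30
    print(can_attach("esx-05-01", zones, clusters, "island-a"))  # False
```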

One more important point. When setting up a zone, we choose how information about IP and MAC addresses will be exchanged. It can be unicast, L2 multicast, or unicast routed over L3, again depending on the network topology.

These are preliminary steps. To repeat: all that was required of the network infrastructure is that all the hosts running NSX can communicate with each other over IP, including through ordinary routing if necessary. The second requirement is that the MTU in the segment where they interact is 1600 bytes rather than the usual 1500. If I can't get 1600 bytes, I'll have to lower the MTU, for example to 1400, on everything I create in NSX, so that the traffic fits into the 1500-byte physical transport.
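The 1600-byte figure follows from the encapsulation overhead. Counting against the IP MTU of the physical network (the outer Ethernet header is not included in the MTU), VXLAN over IPv4 adds a 20-byte outer IP header, an 8-byte UDP header, an 8-byte VXLAN header, and the VM's own 14-byte Ethernet header, which travels as payload: 50 bytes in total, plus 4 if the VM's frames carry a VLAN tag. A small worked sketch:

```python
OUTER_IPV4 = 20   # outer IPv4 header between the two VTEPs
OUTER_UDP = 8     # outer UDP header
VXLAN_HDR = 8     # VXLAN header carrying the segment ID
INNER_ETH = 14    # the VM's own Ethernet header travels inside as payload
INNER_DOT1Q = 4   # only if the VM's frame itself carries an 802.1Q tag


def overhead(inner_vlan_tag: bool = False) -> int:
    extra = INNER_DOT1Q if inner_vlan_tag else 0
    return OUTER_IPV4 + OUTER_UDP + VXLAN_HDR + INNER_ETH + extra


def required_transport_mtu(vm_mtu: int, inner_vlan_tag: bool = False) -> int:
    """IP MTU the physical network must carry for a given VM MTU."""
    return vm_mtu + overhead(inner_vlan_tag)


def max_vm_mtu(transport_mtu: int, inner_vlan_tag: bool = False) -> int:
    """Largest VM MTU that fits into a given physical IP MTU."""
    return transport_mtu - overhead(inner_vlan_tag)


if __name__ == "__main__":
    print(required_transport_mtu(1500))  # 1550: why a 1500-byte transport is not enough
    print(max_vm_mtu(1600))              # 1550: 1600 leaves headroom for a standard 1500
    print(max_vm_mtu(1500))              # 1450: 1400 inside NSX fits with margin
```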

Next, with NSX I can create a logical switch. This is the easiest equivalent of cutting a new VLAN on a traditional network. Here I know where my physical servers are connected: they are all on the same switches. In theory, the network could be more complex, with some servers connected to one switch, some to another, L2 in some places and L3 in others. Creating a logical switch gives us the equivalent of a VLAN cut on every switch the traffic will pass through, without touching those switches. Why? Because we are actually creating a VXLAN segment, and the physical traffic the switches see is UDP traffic from the IP address of one hypervisor to the IP address of another, with the VXLAN content inside.
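What the physical switches see is ordinary UDP between two VTEP addresses. The payload starts with the 8-byte VXLAN header defined in RFC 7348, which carries the 24-bit segment ID, followed by the VM's original Ethernet frame. The sketch below builds and parses that header with the standard `struct` module; it covers only the VXLAN header, not the outer Ethernet, IP, or UDP headers, and the dummy frame is made up.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned port (RFC 7348); a deployment may be configured with another
FLAG_I = 0x08          # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """8-byte header: flags (1 byte), reserved (3), VNI (3), reserved (1)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # The VNI occupies the upper 3 bytes of the final 32-bit word.
    return struct.pack("!B3xI", FLAG_I, vni << 8)


def vni_of(payload: bytes) -> int:
    flags, word = struct.unpack("!B3xI", payload[:8])
    if not flags & FLAG_I:
        raise ValueError("I flag not set")
    return word >> 8


def udp_payload(inner_frame: bytes, vni: int) -> bytes:
    """What travels inside the UDP datagram from one hypervisor's VTEP IP to
    another's: the VXLAN header followed by the VM's untouched Ethernet frame."""
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    print(vxlan_header(18001).hex())                 # 0800000000465100
    print(vni_of(udp_payload(b"\x00" * 60, 18001)))  # 18001
```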

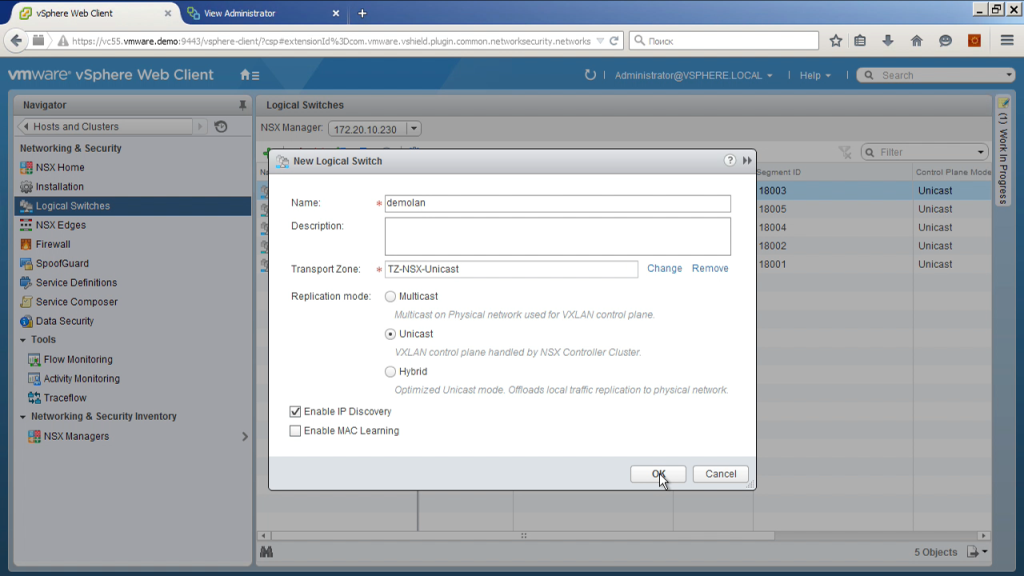

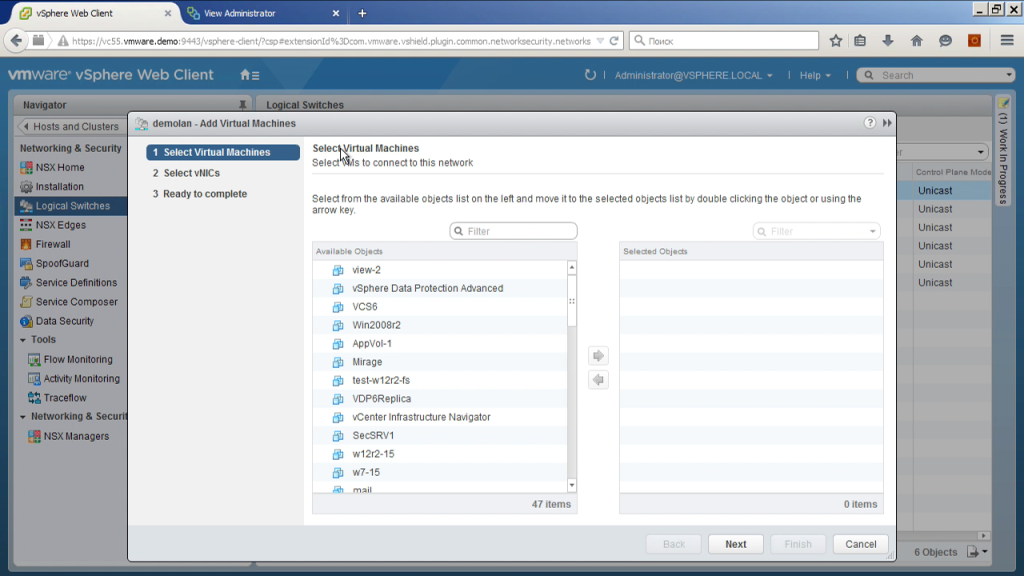

So cutting networks this way is very easy. We simply say “Create a new segment”, choose unicast transport, and choose the transport zone, that is, which ESXi clusters this segment will be available on. We wait a moment, and the segment appears. What actually happens when we create these segments, and how do we connect a virtual machine to one? There are two options.

Option number one.

We connect a virtual machine to the network right from here. Some of our customers said, “Oh, this is exactly what our network engineers want.” They go to the network settings, and it is like plugging a cable from the machine into a port of the logical switch: here we pick the machine, and here its interface.

Or the second option. When a logical switch is created, NSX Manager contacts the vCenter server and creates a port group on the distributed switch. So we can simply open the properties of the virtual machine, select the desired port group, and connect the virtual machine there. Since the name is generated programmatically, it includes the name of the logical switch and the VXLAN segment number, so even from the ordinary vCenter client it is quite clear that you are connecting the virtual machine to the logical segment.
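Because NSX generates the port group name, the segment ID can be read back from it. The sketch below assumes names of the form `vxw-dvs-<N>-virtualwire-<N>-sid-<segment ID>-<logical switch name>`, which is what we recall NSX for vSphere producing; treat the pattern as an assumption and check it against the port groups in your own vCenter. The example names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Assumed naming pattern for NSX-created port groups, e.g.
# "vxw-dvs-35-virtualwire-4-sid-18003-LS-Web" (hypothetical example).
PORTGROUP_RE = re.compile(
    r"^vxw-dvs-(?P<dvs>\d+)-virtualwire-(?P<wire>\d+)-sid-(?P<sid>\d+)-(?P<name>.+)$"
)


def parse_portgroup(name: str) -> Optional[Tuple[int, str]]:
    """Return (segment ID, logical switch name), or None for an ordinary port group."""
    m = PORTGROUP_RE.match(name)
    if m is None:
        return None
    return int(m["sid"]), m["name"]


if __name__ == "__main__":
    print(parse_portgroup("vxw-dvs-35-virtualwire-4-sid-18003-LS-Web"))  # (18003, 'LS-Web')
    print(parse_portgroup("VM Network"))                                 # None
```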

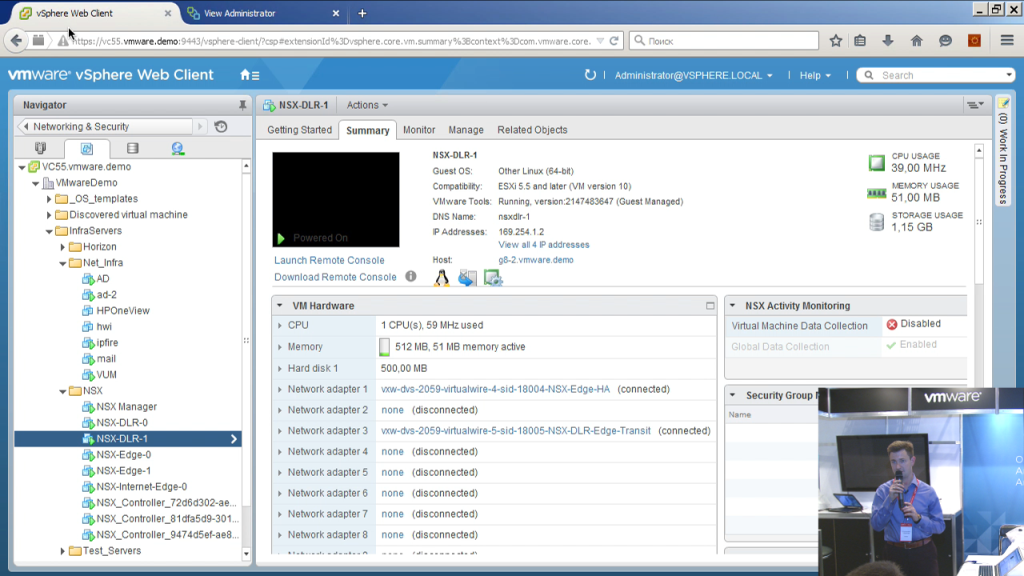

Next, a few more machines that were visible at the very beginning, and this is where they come from. Some NSX functions are implemented directly as modules in the ESXi kernel, for example routing between these segments and the firewall for traffic between segments or even within one segment. Other functions are implemented by additional virtual machines, the so-called Edge gateways, or by additional services when they are needed. What do we see here? There are three of them in my infrastructure. One is called NSX-dlr; DLR stands for distributed logical router. This is a service virtual machine that lets the distributed router work in NSX. Network traffic does not go through it; it is not the data plane. But if the distributed router uses dynamic routing protocols such as BGP or OSPF, the routes have to come from somewhere: something has to talk to other routers and exchange this information, and something has to be responsible for the state of the distributed router, whether it is running or not. So it is essentially the control module of the distributed router. When configuring it, we can specify that it should be highly available, in which case two virtual machines are deployed as an HA pair. If one becomes unavailable for some reason, the second takes over. The other two, of type NSX Edge, are virtual machines through which traffic is routed to external networks that NSX does not control. In my scenario they serve two tasks. The first, NSX edge, is simply connected to the internal networks of the MUK data center, where, for example, my vCenter lives on an ordinary standard port group. For a virtual machine on an NSX logical switch to reach vCenter, something has to connect the two, and this virtual machine does that.

It has one interface connected to a logical switch and the other connected to a regular port group on a standard switch on ESXi. The second one is called NSX Internet edge. Guess what is different? Roughly the same setup, but the port group it connects to leads to the DMZ network, where real public Internet addresses are used. One of its interfaces is configured with a public IP address, and that is how you can connect to this demo environment. Additional services are configured here: if we want firewalling, NAT, load balancing or, for example, a VPN with an external connection, we open the properties of the Internet edge.

We are a little short on time, so I will not show everything I wanted to show.

In the Edge properties we can manage the firewall, which is applied to traffic passing through this virtual machine, so it effectively acts as our perimeter firewall. It can also run a DHCP server or act as a DHCP relay (IP helper). It can do NAT, which is what I need here for the edge that faces the public Internet on one side and the internal networks on the other. It has a load balancer. It can act as an endpoint for a VPN tunnel or as a terminator for client connections. There are two tabs here: VPN and SSL VPN-Plus. VPN is site-to-site: between this edge and another edge, between the edge and our cloud, or between the edge and a provider's cloud that uses our vCNS or NSX technologies. SSL VPN-Plus comes with a client for various operating systems that you or your users can install on a laptop to connect to the infrastructure over VPN.
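As an illustration of what NAT on the Internet-facing edge does, here is a toy model with hypothetical addresses taken from documentation ranges. It is not the edge's configuration format: real source NAT also rewrites ports and tracks connections, while this sketch rewrites addresses only.

```python
import ipaddress
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


@dataclass
class EdgeNat:
    """Toy NAT on an edge facing the Internet on one side and internal
    networks on the other (addresses only, no port or connection tracking)."""
    public_ip: str
    internal_net: str
    port_forwards: Dict[int, str]  # public port -> internal server IP

    def outbound(self, pkt: Packet) -> Packet:
        # SNAT: internal sources leave with the edge's public address.
        if ipaddress.ip_address(pkt.src) in ipaddress.ip_network(self.internal_net):
            return replace(pkt, src=self.public_ip)
        return pkt

    def inbound(self, pkt: Packet) -> Optional[Packet]:
        # DNAT: only explicitly published ports reach an internal server.
        if pkt.dst == self.public_ip and pkt.dport in self.port_forwards:
            return replace(pkt, dst=self.port_forwards[pkt.dport])
        return None  # nothing published on this port: drop


if __name__ == "__main__":
    edge = EdgeNat("203.0.113.10", "172.16.10.0/24", {443: "172.16.10.20"})
    print(edge.outbound(Packet("172.16.10.5", "198.51.100.7", 80)).src)  # 203.0.113.10
    print(edge.inbound(Packet("198.51.100.7", "203.0.113.10", 443)).dst) # 172.16.10.20
```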

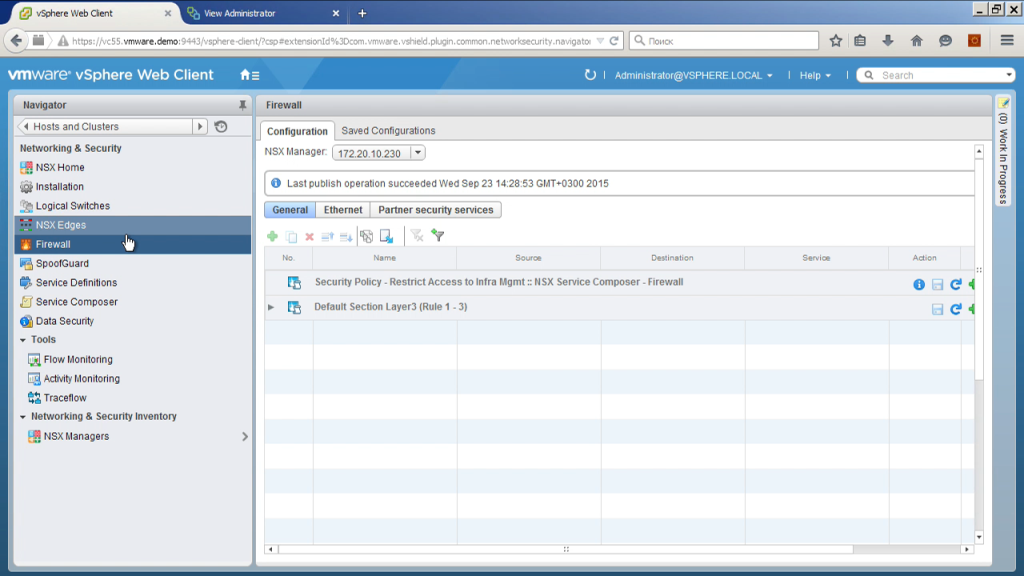

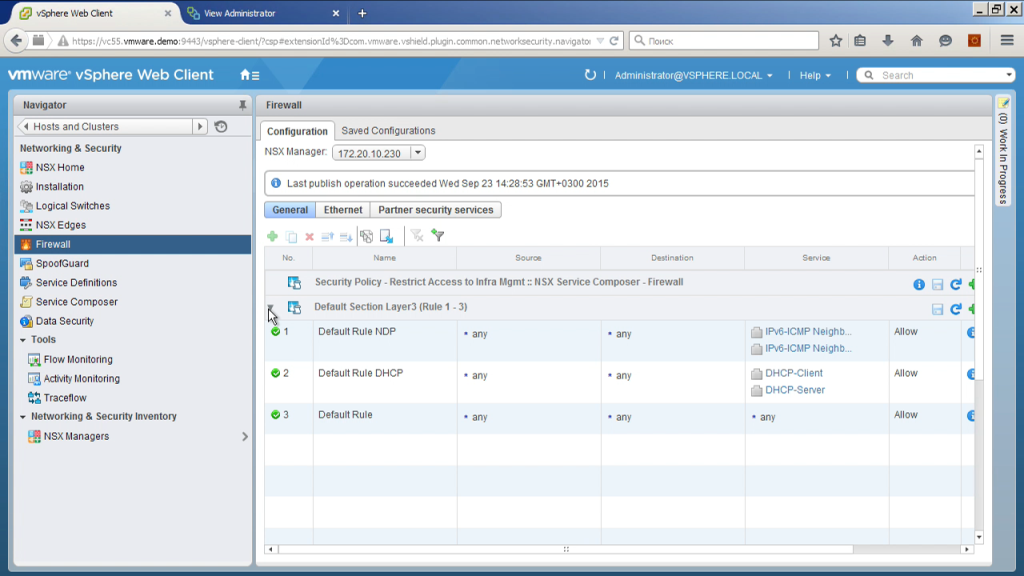

Just a few last points. The distributed firewall is applied on every host. In rules we can specify IP addresses, protocols, port numbers, and virtual machine names, including masks. For example, we can make a rule that allows all machines whose names begin with app to reach port 1433 on all machines whose names begin with db. Or allow traffic from machines in one vCenter folder to machines in another folder. Or connect to Active Directory and say that if a machine is in a certain AD group, this traffic is allowed, and otherwise it is denied. And various other options.

And what can we do with the traffic? There are three actions: allow, block, and reject. What is the difference between block and reject? Both stop the traffic, but block does it silently, while reject sends back a message that the traffic was refused, which makes troubleshooting much easier.
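The name-based rule from the example and the three actions can be modeled as a first-match rule list. This is a simplified, hypothetical sketch: VM names are matched with shell-style globs, the default action is a parameter we chose for the example, and nothing here reflects how the distributed firewall is actually implemented in the ESXi kernel.

```python
from dataclasses import dataclass
from enum import Enum
from fnmatch import fnmatchcase
from typing import List, Optional


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"    # drop silently
    REJECT = "reject"  # drop and send a refusal back to the sender


@dataclass
class Rule:
    src_vm: str            # glob on the source VM name, e.g. "app*"
    dst_vm: str            # glob on the destination VM name
    port: Optional[int]    # destination port; None matches any port
    action: Action


def evaluate(rules: List[Rule], src_vm: str, dst_vm: str, port: int,
             default: Action = Action.BLOCK) -> Action:
    """Return the action of the first matching rule, else the default."""
    for r in rules:
        if (fnmatchcase(src_vm, r.src_vm) and fnmatchcase(dst_vm, r.dst_vm)
                and r.port in (None, port)):
            return r.action
    return default


def sender_is_told(action: Action) -> bool:
    # Both BLOCK and REJECT stop the traffic; only REJECT lets the sender know.
    return action is Action.REJECT


if __name__ == "__main__":
    rules = [
        Rule("app*", "db*", 1433, Action.ALLOW),   # the example from the demo
        Rule("*", "db*", None, Action.REJECT),     # everything else to db* is refused
    ]
    print(evaluate(rules, "app01", "db01", 1433).value)  # allow
    print(evaluate(rules, "web01", "db01", 1433).value)  # reject
```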

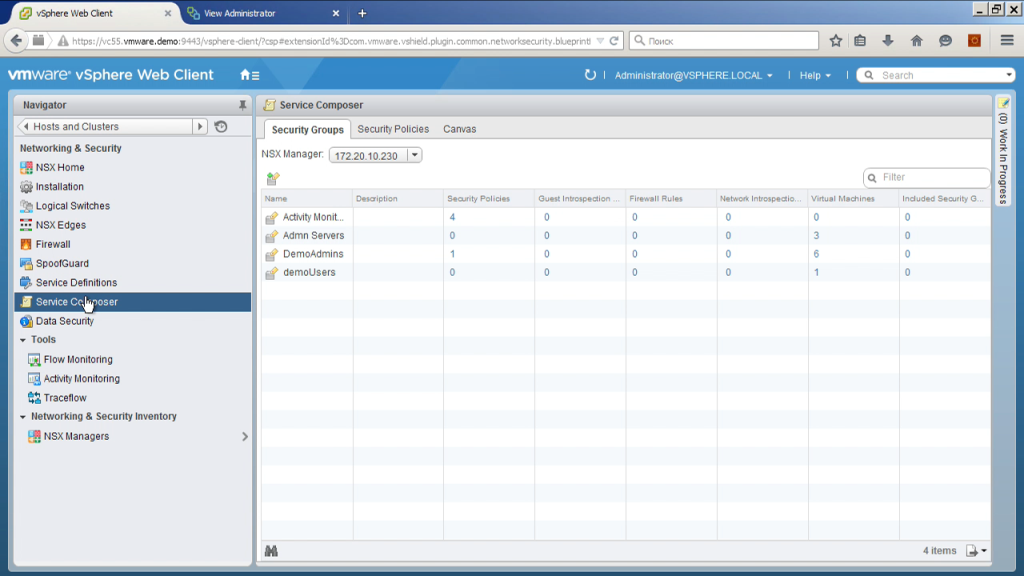

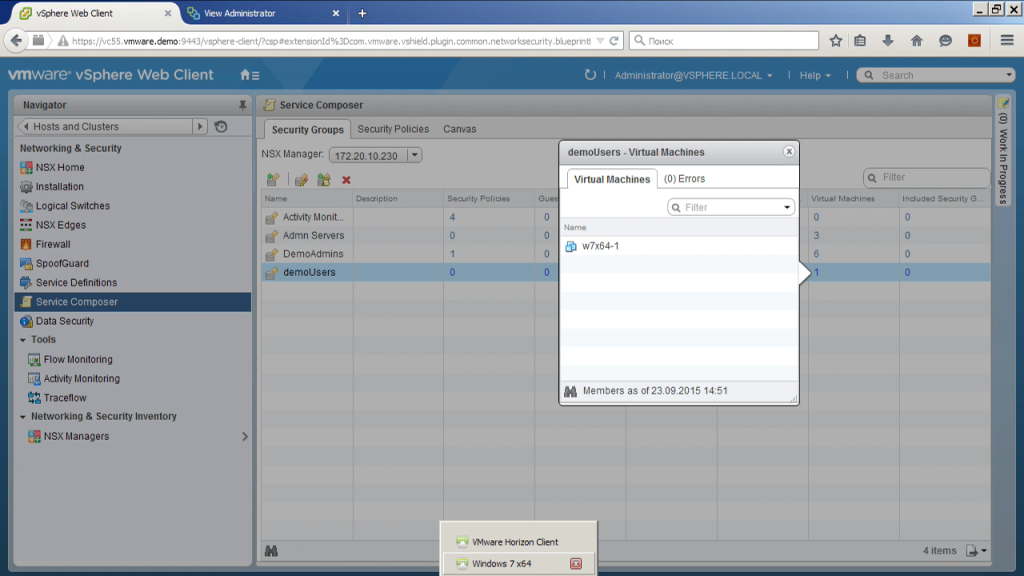

And, finally, one important addition: a component called Service Composer. Here we can integrate NSX with additional modules, such as an external load balancer, antivirus, or an IDS/IPS system, by registering them. We can see what configurations exist and define those same security groups. For example, demoUsers is a security group that contains the machines on which a user from the demoUsers group is logged on. What do we see now? Right now one virtual machine falls into this group. Where is it? Here it is.

It is a virtual desktop with the user connected. I can create a firewall rule that allows users of one group to access certain file servers and users of another group to access other file servers. Even if two such users access the same VDI desktop, for example, at different times, the firewall will dynamically apply different policies to each of them. This lets you build a much more flexible infrastructure: there is no need to allocate separate network segments or separate machines for different types of users. Network policies are reconfigured dynamically depending on who is using the network at the moment.
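The key idea is that group membership is evaluated from who is logged on right now, not from where the machine sits in the network. A minimal sketch under that assumption; apart from demoUsers, the user, group, desktop, and file server names are hypothetical, and the real AD integration is not modeled.

```python
from typing import Dict, Optional, Set


def group_members(sessions: Dict[str, Optional[str]], ad_groups: Dict[str, Set[str]], group: str) -> Set[str]:
    """Dynamic security group: VMs where a member of the AD group is logged on now."""
    users = ad_groups.get(group, set())
    return {vm for vm, user in sessions.items() if user in users}


def reachable_file_servers(vm: str, sessions: Dict[str, Optional[str]],
                           ad_groups: Dict[str, Set[str]], policy: Dict[str, Set[str]]) -> Set[str]:
    """policy: AD group -> file servers that group may reach. Re-evaluated on every
    call, so the answer changes when a different user logs on to the same VM."""
    allowed: Set[str] = set()
    for group, servers in policy.items():
        if vm in group_members(sessions, ad_groups, group):
            allowed |= servers
    return allowed


if __name__ == "__main__":
    ad_groups = {"demoUsers": {"alice"}, "otherUsers": {"bob"}}
    policy = {"demoUsers": {"fs-01"}, "otherUsers": {"fs-02"}}
    sessions = {"vdi-01": "alice"}
    print(reachable_file_servers("vdi-01", sessions, ad_groups, policy))  # {'fs-01'}
    sessions["vdi-01"] = "bob"  # same desktop, different user later in the day
    print(reachable_file_servers("vdi-01", sessions, ad_groups, policy))  # {'fs-02'}
```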

Distribution of VMware solutions in Ukraine, Belarus, Georgia, and CIS countries

VMware training courses

MUK-Service - all types of IT repair: warranty, non-warranty repair, sale of spare parts, contract service

Source: https://habr.com/ru/post/272791/
