Postgres NoSQL is better than MongoDB?

In general, relational database management systems have been conceived as a “one-size-fits-all solution for storing and retrieving data” for decades. But the growing need for scalability and new application requirements have created new challenges for traditional RDBMS management systems, including some dissatisfaction with the one-size-fits-all approach in a number of scalable applications.

The answer was a new generation of lightweight, high-performance databases created to challenge the dominance of relational databases.

A big reason for the movement of NoSQL was the fact that different implementations of web, corporate and cloud applications have different requirements for their bases.

Example: for such large sites as eBay, Amazon, Twitter, or Facebook, scalability and high availability are basic requirements that cannot be compromised. For these applications, even the slightest disabling can have significant financial implications and an impact on customer confidence.

')

Thus, a ready-made database solution often has to address not only transaction integrity, but, moreover, higher data volumes, increased data speed and performance, and a growing variety of formats. New technologies have emerged that specialize in optimizing one or two of the above aspects, sacrificing others. Postgres with JSON takes a more holistic approach to user needs, successfully solving most NoSQL workloads.

A smart new technology approach relies on a close assessment of your needs, with the tools available to meet those needs. The table below compares the characteristics of a non-relational document-oriented database (such as MongoDB) and the characteristics of the Postgres relational / document-oriented database to help you find the right solution for your needs.

Source: EnterpriseDB site.

A document in MongoDB is automatically supplied with the _id field if it is not present. When you want to receive this document, you can use _id - it behaves exactly like the primary key in relational databases. PostgreSQL stores data in the fields of tables, MongoDB stores them in the form of JSON documents. On the one hand, MongoDB looks like a great solution, since you can have all the various data from several tables in PostgreSQL in one JSON document. This flexibility is achieved by the absence of restrictions on the data structure, which can be really attractive at the first moment and really terrifying on a large database, in which some records have incorrect values, or empty fields.

PostgreSQL 9.3 comes bundled with excellent functionality that allows you to turn it into a NoSQL database, with full transaction support and storage of JSON documents with restrictions on data fields.

I will show how to do this using a very simple example of the Servants table. Each Employee has a name, description, id number and salary.

PostgreSQL Version

A simple table in PostgreSQL might look like this:

This table allows us to add employees like this:

Alas, the above table allows you to add blank lines without some important values:

This can be avoided by adding restrictions to the database. Suppose we always want to have a non-empty unique name, a non-empty description, not a negative salary. Such a table with restrictions will look like:

Now all operations, such as adding or updating a record, which contradict any of these restrictions, will fall off with an error. Let's check:

NoSQL version

In MongoDB, the record from the table above will look like the following JSON document:

similarly, in PostgreSQL we can save this entry as a row in the emp table:

This works like in most non-relational databases, no checks, no errors with bad fields. As a result, you can convert the data as you wish, problems begin when your application expects a salary to be this number, but in reality it is either a string or it is completely absent.

Checking json

PostgreSQL 9.2 has a good data type for this, it is called JSON. This type can store only the correct JSON in itself; before converting to this type, validation is checked.

Let's change the table description to:

We can add some valid JSON to this table:

This will work, but adding incorrect JSONa will fail:

The problem with formatting can be difficult to notice (I added a comma to the last line, JSON doesn't like it).

Checking fields

So, we have a solution that looks almost like the first pure PostgreSQL solution: we have data that is validated. This does not mean that the data makes sense. Let's add checks to validate the data. PostgreSQL 9.3 has new powerful functionality for managing JSON objects. There are certain operators for the JSON type that will give you easy access to fields and values. I will only use the " - >> " operator, but you can find more information in the Postgres documentation .

Also, I need to check the field types, including the id field. This is what Postgres simply checks for the definition of data types. I will use a different syntax for the checks, since I want to give it a name. It will be much easier to search for a problem in a specific field, and not across a huge JSON document.

The table with restrictions will look like this:

The operator " - >> " allows me to extract a value from the desired JSON'a field, check whether it exists and its validity.

Let's add JSON without a description:

There is one more problem. The name and id fields must be unique. This is easily achieved as follows:

Now, if you try to add a JSON document to the database, the id of which is already contained in the database, the following error will appear:

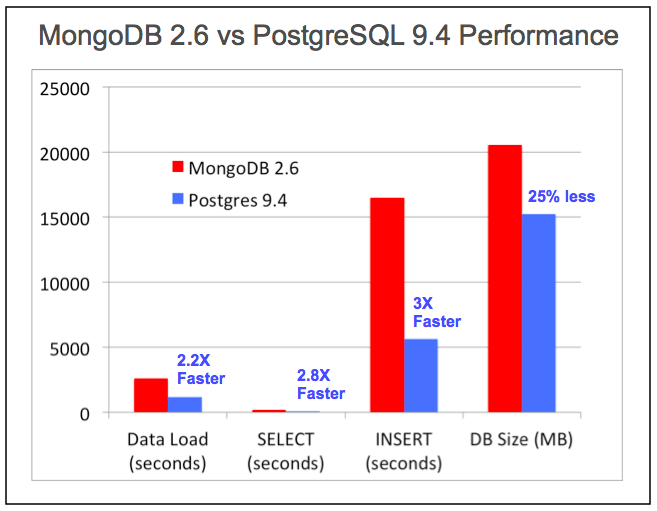

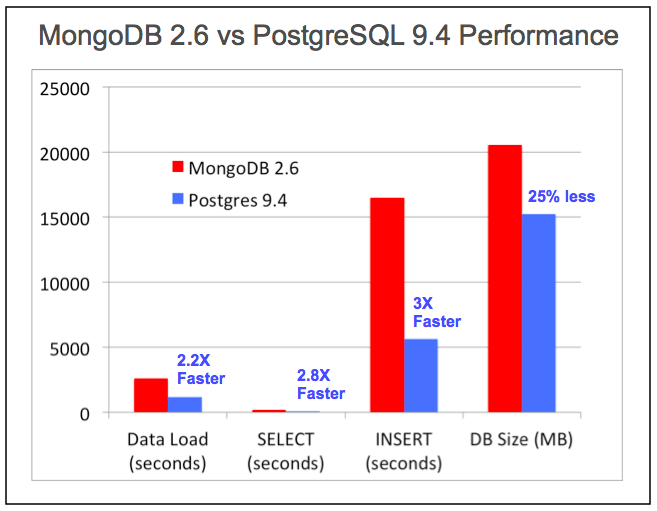

Performance

PostgreSQL copes with the most demanding needs of the largest insurance companies, banks, brokers, government agencies, and defense contractors in the world today, as well as coping over the years. PostgreSQL performance improvements are continuous with the annual release of versions, and include improvements for its unstructured data types as well.

Source: EnterpriseDB White Paper: Using NoSQL capabilities in Postgres

To personally test the performance of NoSQL in PostgreSQL, download pg_nosql_benchmark from GitHub.

The answer was a new generation of lightweight, high-performance databases created to challenge the dominance of relational databases.

A big reason for the movement of NoSQL was the fact that different implementations of web, corporate and cloud applications have different requirements for their bases.

Example: for such large sites as eBay, Amazon, Twitter, or Facebook, scalability and high availability are basic requirements that cannot be compromised. For these applications, even the slightest disabling can have significant financial implications and an impact on customer confidence.

')

Thus, a ready-made database solution often has to address not only transaction integrity, but, moreover, higher data volumes, increased data speed and performance, and a growing variety of formats. New technologies have emerged that specialize in optimizing one or two of the above aspects, sacrificing others. Postgres with JSON takes a more holistic approach to user needs, successfully solving most NoSQL workloads.

Comparison of document-oriented / relational databases

A smart new technology approach relies on a close assessment of your needs, with the tools available to meet those needs. The table below compares the characteristics of a non-relational document-oriented database (such as MongoDB) and the characteristics of the Postgres relational / document-oriented database to help you find the right solution for your needs.

| Special features | MongoDB | PostgreSQL |

| Start Open Source Development | 2009 | 1995 |

| Scheme | Dynamic | Static and dynamic |

| Hierarchical data support | Yes | Yes (since 2012) |

| Support key-event data | Yes | Yes (since 2006) |

| Relational data / normalized storage support | Not | Yes |

| Data limitations | Not | Yes |

| Data Association and Foreign Keys | Not | Yes |

| Powerful query language | Not | Yes |

| Transaction Support and Managing Competitive Access with Multi-Versioning | Not | Yes |

| Atomic transaction | Inside the document | Throughout the base |

| Supported web development languages | JavaScript, Python, Ruby, and others ... | JavaScript, Python, Ruby, and others ... |

| Support for common data formats | JSON (Document), Key-Value, XML | JSON (Document), Key-Value, XML |

| Spatial data support | Yes | Yes |

| The easiest way to scale | Horizontal scaling | Vertical scaling |

| Sharding | Plain | Complicated |

| Server-side programming | Not | Many procedural languages like Python, JavaScript, C, C ++, Tcl, Perl and many, many others. |

| Easy integration with other data sources. | Not | External data collectors from Oracle, MySQL, MongoDB, CouchDB, Redis, Neo4j, Twitter, LDAP, File, Hadoop and others ... |

| Business logic | Distributed by client applications | Centralized with triggers and stored procedures, or distributed across client applications. |

| Availability of learning resources | Difficult to find | Easy to find |

| Primary use | Big data (billions of records) with a large number of parallel updates, where integrity and consistency of data is not required. | Transactional and operational applications whose benefits are in normalized form, associations, data limitations, and transaction support. |

Source: EnterpriseDB site.

A document in MongoDB is automatically supplied with the _id field if it is not present. When you want to receive this document, you can use _id - it behaves exactly like the primary key in relational databases. PostgreSQL stores data in the fields of tables, MongoDB stores them in the form of JSON documents. On the one hand, MongoDB looks like a great solution, since you can have all the various data from several tables in PostgreSQL in one JSON document. This flexibility is achieved by the absence of restrictions on the data structure, which can be really attractive at the first moment and really terrifying on a large database, in which some records have incorrect values, or empty fields.

PostgreSQL 9.3 comes bundled with excellent functionality that allows you to turn it into a NoSQL database, with full transaction support and storage of JSON documents with restrictions on data fields.

Simple example

I will show how to do this using a very simple example of the Servants table. Each Employee has a name, description, id number and salary.

PostgreSQL Version

A simple table in PostgreSQL might look like this:

CREATE TABLE emp ( id SERIAL PRIMARY KEY, name TEXT, description TEXT, salary DECIMAL(10,2) ); This table allows us to add employees like this:

INSERT INTO emp (name, description, salary) VALUES ('raju', ' HR', 25000.00); Alas, the above table allows you to add blank lines without some important values:

INSERT INTO emp (name, description, salary) VALUES (null, -34, 'sdad'); This can be avoided by adding restrictions to the database. Suppose we always want to have a non-empty unique name, a non-empty description, not a negative salary. Such a table with restrictions will look like:

CREATE TABLE emp ( id SERIAL PRIMARY KEY, name TEXT UNIQUE NOT NULL, description TEXT NOT NULL, salary DECIMAL(10,2) NOT NULL, CHECK (length(name) > 0), CHECK (description IS NOT NULL AND length(description) > 0), CHECK (salary >= 0.0) ); Now all operations, such as adding or updating a record, which contradict any of these restrictions, will fall off with an error. Let's check:

INSERT INTO emp (name, description, salary) VALUES ('raju', 'HR', 25000.00); --INSERT 0 1 INSERT INTO emp (name, description, salary) VALUES ('raju', 'HR', -1); --ERROR: new row for relation "emp" violates check constraint "emp_salary_check" --DETAIL: Failing row contains (2, raju, HR, -1). NoSQL version

In MongoDB, the record from the table above will look like the following JSON document:

{ "id": 1, "name": "raju", "description": "HR, "salary": 25000.00 } similarly, in PostgreSQL we can save this entry as a row in the emp table:

CREATE TABLE emp ( data TEXT ); This works like in most non-relational databases, no checks, no errors with bad fields. As a result, you can convert the data as you wish, problems begin when your application expects a salary to be this number, but in reality it is either a string or it is completely absent.

Checking json

PostgreSQL 9.2 has a good data type for this, it is called JSON. This type can store only the correct JSON in itself; before converting to this type, validation is checked.

Let's change the table description to:

CREATE TABLE emp ( data JSON ); We can add some valid JSON to this table:

INSERT INTO emp(data) VALUES('{ "id": 1, "name": "raju", "description": "HR", "salary": 25000.00 }'); --INSERT 0 1 SELECT * FROM emp; { + "id": 1, + "name": "raju", + "description": "HR",+ "salary": 25000.00 + } --(1 row) This will work, but adding incorrect JSONa will fail:

INSERT INTO emp(data) VALUES('{ "id": 1, "name": "raju", "description": "HR", "price": 25000.00, }'); --ERROR: invalid input syntax for type json The problem with formatting can be difficult to notice (I added a comma to the last line, JSON doesn't like it).

Checking fields

So, we have a solution that looks almost like the first pure PostgreSQL solution: we have data that is validated. This does not mean that the data makes sense. Let's add checks to validate the data. PostgreSQL 9.3 has new powerful functionality for managing JSON objects. There are certain operators for the JSON type that will give you easy access to fields and values. I will only use the " - >> " operator, but you can find more information in the Postgres documentation .

Also, I need to check the field types, including the id field. This is what Postgres simply checks for the definition of data types. I will use a different syntax for the checks, since I want to give it a name. It will be much easier to search for a problem in a specific field, and not across a huge JSON document.

The table with restrictions will look like this:

CREATE TABLE emp ( data JSON, CONSTRAINT validate_id CHECK ((data->>'id')::integer >= 1 AND (data->>'id') IS NOT NULL ), CONSTRAINT validate_name CHECK (length(data->>'name') > 0 AND (data->>'name') IS NOT NULL ) ); The operator " - >> " allows me to extract a value from the desired JSON'a field, check whether it exists and its validity.

Let's add JSON without a description:

INSERT INTO emp(data) VALUES('{ "id": 1, "name": "", "salary": 1.0 }'); --ERROR: new row for relation "emp" violates check constraint "validate_name" There is one more problem. The name and id fields must be unique. This is easily achieved as follows:

CREATE UNIQUE INDEX ui_emp_id ON emp((data->>'id')); CREATE UNIQUE INDEX ui_emp_name ON emp((data->>'name')); Now, if you try to add a JSON document to the database, the id of which is already contained in the database, the following error will appear:

--ERROR: duplicate key value violates unique constraint "ui_emp_id" --DETAIL: Key ((data ->> 'id'::text))=(1) already exists. --ERROR: current transaction is aborted, commands ignored until end of transaction block Performance

PostgreSQL copes with the most demanding needs of the largest insurance companies, banks, brokers, government agencies, and defense contractors in the world today, as well as coping over the years. PostgreSQL performance improvements are continuous with the annual release of versions, and include improvements for its unstructured data types as well.

Source: EnterpriseDB White Paper: Using NoSQL capabilities in Postgres

To personally test the performance of NoSQL in PostgreSQL, download pg_nosql_benchmark from GitHub.

Source: https://habr.com/ru/post/272735/

All Articles