Recording and processing video on Android

Writing Android applications that record and process video is quite a challenge. Using standard tools such as MediaRecorder is not particularly difficult, but as soon as you try to do something beyond the ordinary, the real “fun” begins.

What's wrong with video on Android

Before version 4.3, Android's video capabilities were very limited: you could record video from the camera with Camera and MediaRecorder, apply the standard camera color filters (sepia, black and white, etc.), and that was about it.

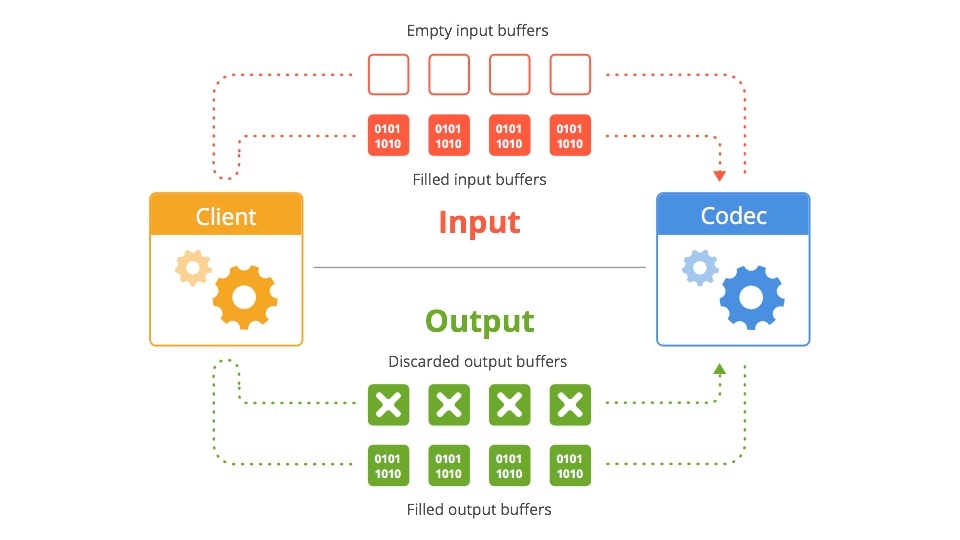

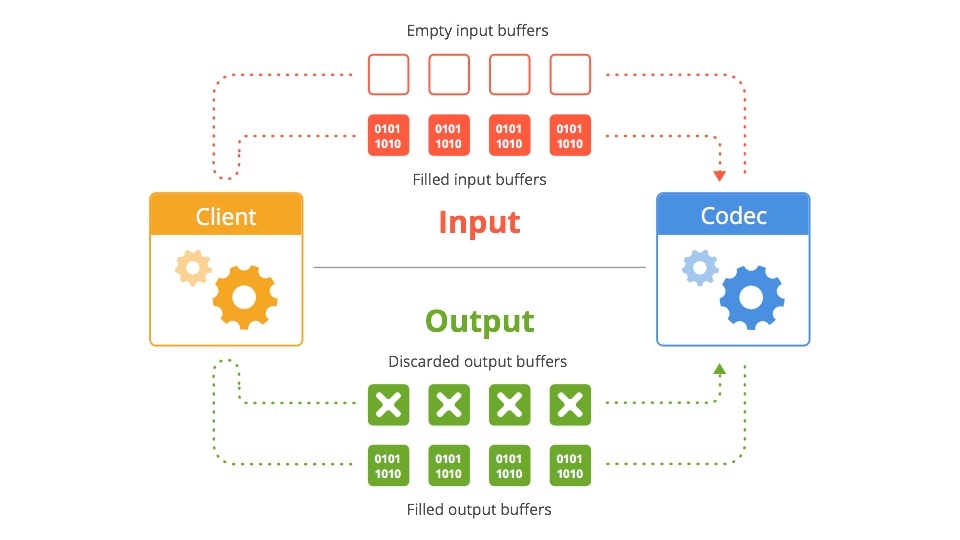

Starting with Android 4.1 (API 16), you can use the MediaCodec class, which gives access to low-level codecs, and the MediaExtractor class, which extracts encoded media data from a source.


Diagram

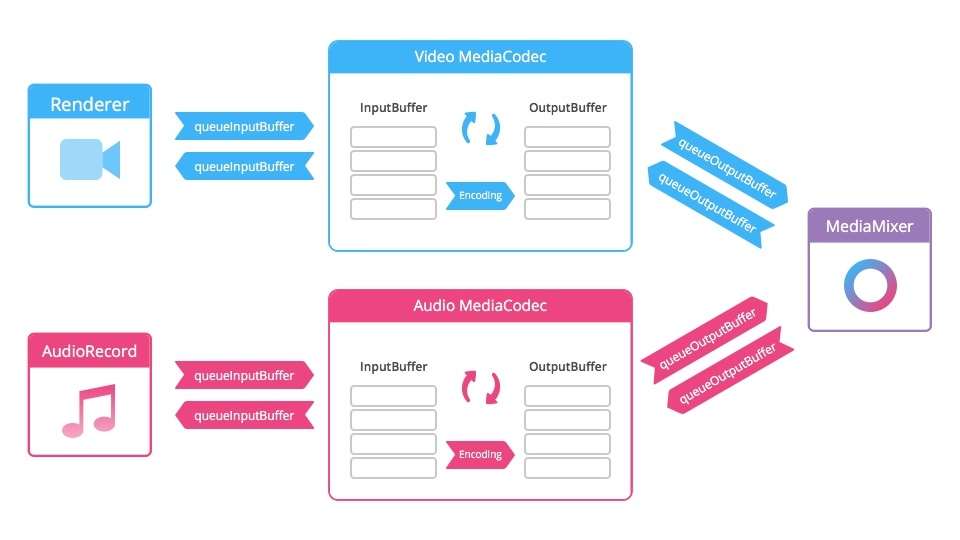

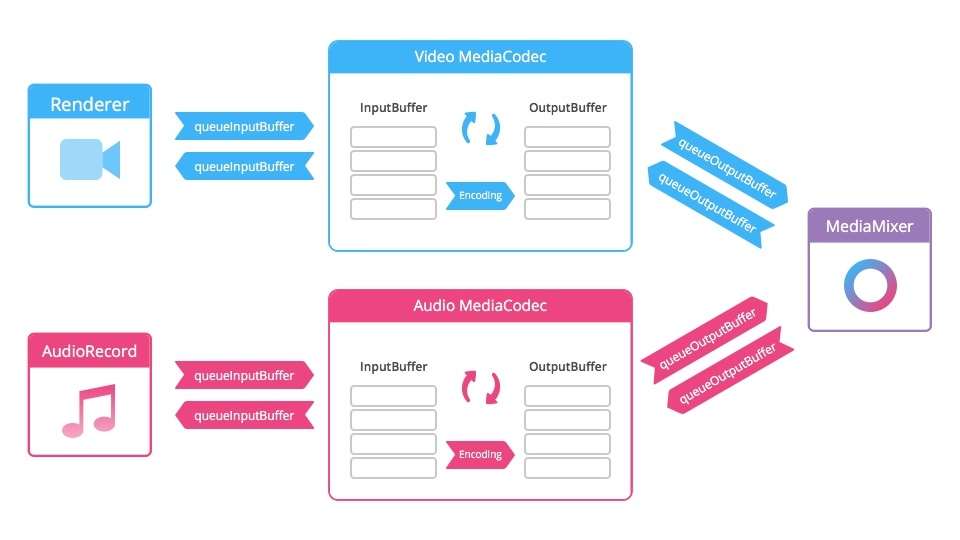

Android 4.3 (API 18) introduced the MediaMuxer class, which can mux encoded audio and video streams into a single file.


This opens up more room for creativity: you can not only encode and decode video streams but also process the video while recording.

The project I was working on had several requirements:

- Recording multiple video chunks totaling up to 15 seconds

- “Gluing” the recorded chunks into a single file

- “Fast Motion”: an accelerated (time-lapse) effect

- “Slow Motion”: a slow-motion effect

- “Stop Motion”: recording very short clips (a couple of frames), almost a photo in video format

- Cropping the video and overlaying a watermark for uploading to social networks

- Overlaying music on the video

- Reversing the video

Tools

Initially, video was recorded with MediaRecorder. This is the simplest approach: it has been around for a long time, there are many examples, and it is supported by all Android versions. But it resists customization. In addition, with MediaRecorder, recording starts with a delay of about 700 milliseconds. For recording short pieces of video, a delay of almost a second is unacceptable.

Therefore, we decided to raise the minimum supported Android version to 4.3 and record video with MediaCodec and MediaMuxer. This eliminated the delay before recording starts. OpenGL with shaders was used to render and modify the frames captured from the camera.

The examples were taken from Google's Grafika project, which is a compilation of examples of

FFmpeg was used for post-processing the video. The main difficulty with FFmpeg is building the necessary modules and integrating them into your project. This is a long process that requires certain skills, so we used a ready-made build for Android. Most such FFmpeg builds have to be used as a command-line executable: you pass a command string with the input parameters and the parameters to apply to the output video. The lack of debugging, and even of any way to find out what the error is when something goes wrong, is also very frustrating. The only source of information is the log that FFmpeg writes while it runs. So at first it takes a lot of time to figure out how a particular command works, how to compose commands that perform several actions at once, and so on.

Slow motion

We have dropped Slow Motion for now, because the vast majority of Android devices lack hardware support for recording video at a high enough frame rate. Even on the small fraction of devices that do have such hardware support, there is no proper way to “activate” this feature.

You can implement slow motion in software; there are two options:

- Duplicate frames while recording, or extend their duration (the time each frame is shown).

- Record the video and then post-process it, again duplicating or extending each frame.

But the resulting quality is quite low.
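Both software options come down to changing how long each source frame stays on screen. A minimal plain-Java sketch, assuming presentation timestamps in microseconds (the unit of MediaCodec.BufferInfo.presentationTimeUs); the class and method names are hypothetical and not part of any Android API:

```java
import java.util.Arrays;

/** Hypothetical helpers illustrating software slow motion; not an Android API. */
public final class SlowMotion {
    private SlowMotion() {}

    /**
     * "Extend the duration": scale a frame's presentation timestamp (in
     * microseconds) relative to the first frame, so every frame stays on
     * screen `factor` times longer.
     */
    public static long stretchPtsUs(long ptsUs, long firstPtsUs, double factor) {
        return firstPtsUs + Math.round((ptsUs - firstPtsUs) * factor);
    }

    /**
     * "Duplicate frames": for each output frame, returns the index of the
     * source frame to show. Each source frame is repeated `copies` times,
     * so at an unchanged output frame rate the clip plays `copies` times slower.
     */
    public static int[] duplicateFrames(int frameCount, int copies) {
        int[] order = new int[frameCount * copies];
        for (int i = 0; i < order.length; i++) {
            order[i] = i / copies;
        }
        return order;
    }

    public static void main(String[] args) {
        // A 30 fps frame 1/30 s after the first one, slowed down 2x
        System.out.println(stretchPtsUs(33_333, 0, 2.0)); // 66666
        System.out.println(Arrays.toString(duplicateFrames(3, 2))); // [0, 0, 1, 1, 2, 2]
    }
}
```

Neither option creates new motion information; they only show the existing frames for longer, which is why the result looks choppy.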

Fast motion

Recording time-lapse video, however, causes no problems. With MediaRecorder you can set the capture rate to, say, 10 frames per second (the standard video frame rate is 30), so only every third frame is recorded. As a result, the video plays back 3 times faster.
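The speed-up follows directly from the two rates: frames are captured at the capture rate but played back at the video frame rate. A small plain-Java sketch of that arithmetic; the helper names are hypothetical:

```java
/** Hypothetical helpers for time-lapse arithmetic; not an Android API. */
public final class TimeLapse {
    private TimeLapse() {}

    /** How many times faster than real time the recorded video plays. */
    public static double speedUp(double captureFps, double playbackFps) {
        return playbackFps / captureFps;
    }

    /** Length of the resulting clip for a given amount of real recording time. */
    public static double outputSeconds(double realSeconds, double captureFps, double playbackFps) {
        // Frames captured = realSeconds * captureFps; each is shown for 1 / playbackFps s
        return realSeconds * captureFps / playbackFps;
    }

    public static void main(String[] args) {
        System.out.println(speedUp(10, 30));           // 3.0
        System.out.println(outputSeconds(45, 10, 30)); // 15.0
    }
}
```

So with a capture rate of 10 and a frame rate of 30, 45 seconds of real time fit into a 15-second clip.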

Code

```java
private boolean prepareMediaRecorder() {
    if (camera == null) {
        return false;
    }
    // The camera must be unlocked before MediaRecorder can use it
    camera.unlock();
    if (mediaRecorder == null) {
        mediaRecorder = new MediaRecorder();
        mediaRecorder.setCamera(camera);
    }
    // Call order matters: source, then output format, then encoder settings
    mediaRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);
    mediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
    CamcorderProfile profile = getCamcorderProfile(cameraId);
    // Capture 10 frames per second but play them back at 30 fps: a 3x time-lapse
    mediaRecorder.setCaptureRate(10);
    // mediaRecorder.setVideoSize(profile.videoFrameWidth, profile.videoFrameHeight);
    mediaRecorder.setVideoFrameRate(30);
    mediaRecorder.setVideoEncodingBitRate(profile.videoBitRate);
    mediaRecorder.setOutputFile(createVideoFile().getPath());
    mediaRecorder.setPreviewDisplay(cameraPreview.getHolder().getSurface());
    mediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H264);
    try {
        mediaRecorder.prepare();
    } catch (Exception e) {
        // Clean up the recorder if preparation fails
        releaseMediaRecorder();
        return false;
    }
    return true;
}
```

Stop motion

For instantly recording a few frames, the standard MediaRecorder approach is unsuitable because of the long delay before recording starts. Using MediaCodec and MediaMuxer solves this performance problem.

Joining recorded chunks into one file

This is one of the main features of the application: after recording several chunks, the user must end up with one solid video file.

Initially we used FFmpeg for this, but had to abandon the idea because FFmpeg re-encoded the video when joining it, and the process was quite slow (on a Nexus 5, joining 7-8 chunks into one 15-second video took more than 15 seconds, and for 100 chunks the time grew to a minute or more). Using a higher bitrate, or codecs that give better quality at the same bitrate, made the process take even longer.

Therefore, we now use the mp4parser library, which essentially extracts the encoded data from the container files, creates a new container, and appends the data one after another into it. It then writes the metadata into the container's header, and that's it: we get a single solid video as output. The only limitation of this approach is that all chunks must be encoded with the same parameters (codec type, resolution, aspect ratio, etc.). This approach takes 1-4 seconds, depending on the number of chunks.
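Because appending only works when every chunk shares the same encoding parameters, it can help to check them before merging. A minimal plain-Java sketch, assuming each chunk's parameters are already known to the app (the `ChunkMerge` class, the `ChunkInfo` type and its fields are hypothetical, not part of mp4parser). It also computes where each chunk starts in the merged timeline, which is what appending the samples one after another amounts to:

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pre-merge helpers; not part of mp4parser. */
public final class ChunkMerge {
    private ChunkMerge() {}

    /** Encoding parameters and duration of one recorded chunk. */
    public static final class ChunkInfo {
        final String mimeType; // e.g. "video/avc"
        final int width;
        final int height;
        final long durationUs;

        public ChunkInfo(String mimeType, int width, int height, long durationUs) {
            this.mimeType = mimeType;
            this.width = width;
            this.height = height;
            this.durationUs = durationUs;
        }

        boolean sameEncoding(ChunkInfo other) {
            return mimeType.equals(other.mimeType)
                    && width == other.width
                    && height == other.height;
        }
    }

    /** True if all chunks share the first chunk's codec and resolution. */
    public static boolean canAppend(List<ChunkInfo> chunks) {
        for (ChunkInfo c : chunks) {
            if (!c.sameEncoding(chunks.get(0))) {
                return false;
            }
        }
        return true;
    }

    /** Start time of each chunk in the merged file, in microseconds. */
    public static List<Long> startOffsetsUs(List<ChunkInfo> chunks) {
        List<Long> offsets = new ArrayList<>();
        long t = 0;
        for (ChunkInfo c : chunks) {
            offsets.add(t);
            t += c.durationUs;
        }
        return offsets;
    }

    public static void main(String[] args) {
        List<ChunkInfo> chunks = new ArrayList<>();
        chunks.add(new ChunkInfo("video/avc", 1280, 720, 2_000_000));
        chunks.add(new ChunkInfo("video/avc", 1280, 720, 3_500_000));
        System.out.println(canAppend(chunks));      // true
        System.out.println(startOffsetsUs(chunks)); // [0, 2000000]
    }
}
```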

An example of using mp4parser to join several video files into one:

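```java
// Uses mp4parser classes: Movie, Track, MovieCreator, AppendTrack,
// DefaultMp4Builder, BasicContainer
public void merge(List<File> parts, File outFile) {
    try {
        Movie finalMovie = new Movie();
        Track[] tracks = new Track[parts.size()];
        for (int i = 0; i < parts.size(); i++) {
            Movie movie = MovieCreator.build(parts.get(i).getPath());
            // Takes the first track of each chunk; assumes it is the video track
            tracks[i] = movie.getTracks().get(0);
        }
        // AppendTrack concatenates the samples of all chunks without re-encoding
        finalMovie.addTrack(new AppendTrack(tracks));
        BasicContainer container = (BasicContainer) new DefaultMp4Builder().build(finalMovie);
        // try-with-resources closes the output stream even if writing fails
        try (FileOutputStream fos = new FileOutputStream(outFile)) {
            container.writeContainer(fos.getChannel());
        }
    } catch (IOException e) {
        Log.e(TAG, "Merge failed", e);
    }
}
```

Overlaying music on video, cropping video and overlaying a watermark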

Here there is no way around FFmpeg. For example, here is a command that adds a soundtrack to a video:

```shell
ffmpeg -y -ss 00:00:00.00 -t 00:00:02.88 -i input.mp4 -ss 00:00:00.00 -t 00:00:02.88 -i tune.mp3 -map 0:v:0 -map 1:a:0 -vcodec copy -r 30 -b:v 2100k -acodec aac -strict experimental -b:a 48k -ar 44100 output.mp4
```

-y: overwrite the output file without asking

-ss 00:00:00.00: the position from which to start processing (here, the beginning of each input)

-t 00:00:02.88: the duration of the segment to process from each input

-i input.mp4: the input video file

-i tune.mp3: the input audio file

-map 0:v:0 -map 1:a:0: take the video stream from the first input and the audio stream from the second

-vcodec copy: copy the video stream as-is, without re-encoding

-r 30: set the frame rate

-b:v 2100k: set the video bitrate

-acodec aac: encode the audio as AAC (-strict experimental enables the experimental AAC encoder in older FFmpeg builds)

-ar 44100: audio sample rate

-b:a 48k: audio bitrate
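Since most Android FFmpeg builds are driven by a command string or an argument array, building the arguments in code can be less error-prone than concatenating one long string, and file paths with spaces stay intact as single arguments. A minimal sketch that reproduces the command above as a `List<String>`; the class and method names are hypothetical, and how the list is passed to FFmpeg depends on the wrapper you use:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical builder for the "add a soundtrack" FFmpeg command. */
public final class SoundtrackCommand {
    private SoundtrackCommand() {}

    public static List<String> build(String videoIn, String audioIn, String out,
                                     String start, String duration) {
        List<String> args = new ArrayList<>();
        args.add("-y"); // overwrite the output file
        // -ss / -t placed before each -i trim that input
        args.addAll(Arrays.asList("-ss", start, "-t", duration, "-i", videoIn));
        args.addAll(Arrays.asList("-ss", start, "-t", duration, "-i", audioIn));
        // Video from input 0, audio from input 1
        args.addAll(Arrays.asList("-map", "0:v:0", "-map", "1:a:0"));
        args.addAll(Arrays.asList("-vcodec", "copy", "-r", "30", "-b:v", "2100k"));
        args.addAll(Arrays.asList("-acodec", "aac", "-strict", "experimental",
                "-b:a", "48k", "-ar", "44100"));
        args.add(out);
        return args;
    }

    public static void main(String[] args) {
        List<String> cmd = build("input.mp4", "tune.mp3", "output.mp4",
                "00:00:00.00", "00:00:02.88");
        System.out.println(String.join(" ", cmd));
    }
}
```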

A command that applies a watermark and crops the video:

```shell
ffmpeg -y -i input.mp4 -strict experimental -r 30 -vf "movie=watermark.png, scale=1280*0.1094:720*0.1028 [watermark]; [in][watermark] overlay=main_w-overlay_w:main_h-overlay_h, crop=in_w:in_w:0:in_h*in_h/2 [out]" -b:v 2100k -vcodec mpeg4 -acodec copy output.mp4
```

movie=watermark.png: the path to the watermark image

scale=1280*0.1094:720*0.1028: scale the watermark to the given size

[in][watermark] overlay=main_w-overlay_w:main_h-overlay_h: place the watermark in the bottom-right corner of the video

crop=in_w:in_w:0:in_h*in_h/2 [out]: crop the video

Reverse video

To create a reversed video, you need to perform several steps:

- Extract all frames from the video file and write them to internal storage (for example, as JPEG files)

- Rename the frame files to reverse their order

- Assemble the frames back into a video

The solution is neither elegant nor efficient, but there is no alternative.

A command that splits a video into frame files:

```shell
ffmpeg -y -i input.mp4 -strict experimental -r 30 -qscale 1 -f image2 -vcodec mjpeg %03d.jpg
```

After that, rename the frame files so that they are in reverse order (i.e., the first frame becomes the last, the last becomes the first, the second becomes the second-to-last, the second-to-last becomes the second, and so on).
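The renaming step can be done with java.nio.file. A minimal sketch, assuming the frames are numbered contiguously from 001.jpg (as the %03d.jpg pattern above produces) and are the only such files in the directory; the `FrameReverser` class is hypothetical. Renaming happens in two passes through temporary names, so no frame overwrites another that has not been moved yet:

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

/** Hypothetical helper that reverses the order of numbered frame files. */
public final class FrameReverser {
    private FrameReverser() {}

    /** Reverses frames 001.jpg..NNN.jpg in {@code dir}; returns the frame count. */
    public static int reverseFrames(Path dir) throws IOException {
        List<Path> frames = new ArrayList<>();
        try (DirectoryStream<Path> stream =
                     Files.newDirectoryStream(dir, "[0-9][0-9][0-9].jpg")) {
            for (Path p : stream) {
                frames.add(p);
            }
        }
        Collections.sort(frames); // zero-padded names sort in frame order

        int n = frames.size();
        List<Path> temps = new ArrayList<>(n);
        // Pass 1: frame i (0-based) moves to a temporary name carrying its
        // mirrored number n - i, so nothing is overwritten.
        for (int i = 0; i < n; i++) {
            Path tmp = dir.resolve(String.format(Locale.ROOT, "%03d.jpg.tmp", n - i));
            Files.move(frames.get(i), tmp);
            temps.add(tmp);
        }
        // Pass 2: strip the ".tmp" suffix to get the final names.
        for (Path tmp : temps) {
            String name = tmp.getFileName().toString();
            String finalName = name.substring(0, name.length() - ".tmp".length());
            Files.move(tmp, dir.resolve(finalName));
        }
        return n;
    }
}
```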

Then the following command assembles a video from the frames:

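```shell
ffmpeg -y -f image2 -r 30 -i %03d.jpg -vcodec mpeg4 -b:v 2100k output.mp4
```

Placing -r 30 before -i sets the input frame rate; otherwise the image2 demuxer assumes 25 fps and the reversed clip would play slower than the original.

Video GIFs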

Another feature of the app is creating short videos of just a few frames which, when looped, look like GIFs. This is a popular topic right now: Instagram even recently launched Boomerang, a dedicated app for creating such “GIFs”.

The process is quite simple: we take 8 photos at equal intervals (125 milliseconds in our case), then append all the frames again in reverse order, except the first and the last, to get a smooth back-and-forth effect, and assemble the frames into a video.
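The frame ordering is easy to get off by one. A minimal plain-Java sketch of the back-and-forth (“ping-pong”) sequence, generic over whatever represents a frame (file names, bitmaps, etc.); the helper is hypothetical:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper building the back-and-forth frame order; not a library API. */
public final class PingPong {
    private PingPong() {}

    /**
     * Returns the frames followed by the same frames in reverse order,
     * skipping the last and the first, so the sequence loops smoothly:
     * 0 1 2 3 2 1 | 0 1 2 3 2 1 | ...
     */
    public static <T> List<T> pingPong(List<T> frames) {
        List<T> out = new ArrayList<>(frames);
        for (int i = frames.size() - 2; i >= 1; i--) {
            out.add(frames.get(i));
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(pingPong(Arrays.asList(0, 1, 2, 3))); // [0, 1, 2, 3, 2, 1]
        System.out.println(pingPong(Arrays.asList(1, 2, 3, 4, 5, 6, 7, 8)).size()); // 14
    }
}
```

With 8 photos this yields 14 frames per loop.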

For example, with FFmpeg:

```shell
ffmpeg -y -f image2 -i %02d.jpg -r 15 -filter:v setpts=2.5*PTS -vcodec libx264 output.mp4
```

-f image2: the format of the input files (a sequence of images)

-i %02d.jpg: input files with a numbered name pattern (01.jpg, 02.jpg, etc.)

-filter:v setpts=2.5*PTS: extend the duration of each frame 2.5 times

Currently, to improve the UX (so that the user does not have to wait for lengthy video processing), we create the actual video file only when the video is saved or shared. Until then, we work with photos that are loaded into RAM and drawn on a TextureView's Canvas.

Drawing process

```java
private long drawGif(long startTime) {
    Canvas canvas = null;
    try {
        // Loop back to the first frame after the last one
        if (currentFrame >= gif.getFramesCount()) {
            currentFrame = 0;
        }
        Bitmap bitmap = gif.getFrame(currentFrame++);
        if (bitmap == null) {
            handler.notifyError();
            return startTime;
        }
        destRect(frameRect, bitmap.getWidth(), bitmap.getHeight());
        canvas = lockCanvas();
        if (canvas == null) {
            return startTime; // the surface cannot be locked for drawing
        }
        canvas.drawBitmap(bitmap, null, frameRect, framePaint);
        handler.notifyFrameAvailable();
        if (showFps) {
            // Draw the FPS overlay and refresh its value once per second
            canvas.drawBitmap(overlayBitmap, 0, 0, null);
            frameCounter++;
            if ((System.currentTimeMillis() - startTime) >= 1000) {
                makeFpsOverlay(frameCounter + "fps");
                frameCounter = 0;
                startTime = System.currentTimeMillis();
            }
        }
    } catch (Exception e) {
        Timber.e(e, "drawGif failed");
    } finally {
        if (canvas != null) {
            unlockCanvasAndPost(canvas);
        }
    }
    return startTime;
}

public class GifViewThread extends Thread {
    @Override
    public void run() {
        long startTime = System.currentTimeMillis();
        try {
            if (isPlaying()) {
                gif.initFrames(); // load all frames into memory
            }
        } catch (Exception e) {
            Timber.e(e, "initFrames failed");
        } finally {
            Timber.d("Loading bitmaps in " + (System.currentTimeMillis() - startTime) + "ms");
        }
        long drawTime = 0;
        while (running) {
            try {
                if (!paused && surfaceDone
                        && (System.currentTimeMillis() - drawTime) > FRAME_RATE_BOUND) {
                    // Enough time has passed since the previous frame: draw the next one
                    startTime = drawGif(startTime);
                    drawTime = System.currentTimeMillis();
                } else {
                    // Paused or waiting for the next frame: sleep instead of busy-waiting
                    Thread.sleep(paused ? 10 : 1);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }
}
```

Conclusion

Overall, working with video on the Android platform is still a pain. Implementing even moderately advanced applications takes a lot of time, the

Source: https://habr.com/ru/post/272705/
