Implementing automatic reruns of failed tests within a single build, and dealing with the problems that come with it

This article discusses the TestNG framework, and specifically three of its interfaces that are rarely used in practice: IRetryAnalyzer, ITestListener and IReporter. But first things first.

The eternal problem every tester faces when running autotests is individual scenarios failing at random from one run to the next. I don't mean tests that fail for objective reasons (a genuine bug in the functionality under test, or a test that is written incorrectly), but cases where a previously failed test miraculously passes after a rerun. Such random failures can have many causes: a dropped internet connection, CPU overload or a lack of free RAM on the machine, timeouts, and so on. The question is how to eliminate, or at least reduce, the number of these spurious failures.

For me, this challenge arose under the following circumstances:


1) it was decided to move the existing autotest application to a server (CI);

2) multithreading in the project went from nice-to-have to must-have (we needed to reduce the time of regression testing of the service).

I was personally very pleased with the second point, because I believe any process that can take less time should (whether it is an autotest run or a supermarket checkout queue: the faster these processes finish, the more time we have for something really interesting). So, by moving our tests to the server (here the admins and their knowledge of Jenkins helped) and running them in parallel threads (here our persistence and experiments with testng.xml paid off), we cut the test run time from 100 minutes to 18, but at the same time the number of failed tests more than doubled. So a third point was added to the first two (this is the actual challenge this article is about):

3) implement rerunning of failed tests within a single build.

The scope of the third point, and the requirements for it, gradually grew, but again, first things first.

TestNG supports rerunning a failed test out of the box, through the IRetryAnalyzer interface. It declares a boolean method, retry, which receives the result of the test (ITestResult result): if it returns true, the test is run again; if it returns false, it is not.
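The contract boils down to a bounded counter. Below is a minimal, dependency-free sketch of that decision, written so it runs without TestNG; RetryBudget, shouldRetry and retriesUsed are hypothetical names. In a real project this logic would sit inside a class implementing org.testng.IRetryAnalyzer, whose retry() TestNG consults after a failed run.

```java
// Hypothetical helper, not part of TestNG: the bounded-retry decision that an
// IRetryAnalyzer.retry() implementation makes after each failed attempt.
final class RetryBudget {
    private final int maxRetries; // reruns allowed after the first failure
    private int used = 0;         // reruns granted so far

    RetryBudget(int maxRetries) {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must be >= 0");
        }
        this.maxRetries = maxRetries;
    }

    // Called once per failed attempt; true means "run the test again".
    boolean shouldRetry() {
        if (used < maxRetries) {
            used++;
            return true;
        }
        return false; // budget exhausted: this failure is final
    }

    int retriesUsed() {
        return used;
    }
}
```

With a budget of 3, a test that keeps failing runs four times in total: the original run plus three reruns.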

Failed tests were now being rerun, but an unpleasant side effect appeared: every failed attempt inevitably ends up in the report (since the same test runs several times in a row, it produces that many results, and all of them honestly go into the report). This may seem like a far-fetched problem to some testers, especially if nobody else sees the report (technical staff, managers, customers). In that case you can indeed use the maven-surefire-report-plugin and occasionally get annoyed while straining your eyes to work out whether a test actually failed or not.
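What the report should show instead is one entry per test, holding the outcome of its last attempt. The sketch below illustrates that target by collapsing recorded attempts keyed by class and method name; Attempt and FinalOutcomes are hypothetical types, and the attempts are assumed to be listed in the order they ran. It only illustrates the goal: the approach described in this article avoids duplicates by recording final outcomes in the first place.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical type: one recorded run of a test method.
final class Attempt {
    final String className;
    final String testName;
    final boolean passed;

    Attempt(String className, String testName, boolean passed) {
        this.className = className;
        this.testName = testName;
        this.passed = passed;
    }
}

final class FinalOutcomes {
    // Collapses repeated attempts of the same test into one entry with its last outcome.
    static Map<String, Boolean> of(List<Attempt> attempts) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (Attempt a : attempts) {
            // A later attempt overwrites an earlier one; LinkedHashMap keeps first-seen order.
            result.put(a.className + "." + a.testName, a.passed);
        }
        return result;
    }
}
```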

The prospect of a skewed report obviously did not suit me, so the search for a solution continued.

We considered parsing the HTML report to remove duplicate failed tests. Merging the results of several reports into a final one was also suggested. Fearing that such workarounds would come back to haunt us whenever the structure of the HTML/XML reports changed with the next update of the reporting plugins, we decided to build our own custom report. The only drawback of this approach is the time needed to develop and test it. I saw many more advantages, the main one being flexibility: the report can be generated however you need or like, and you can always add extra parameters, fields and metrics.

So, it was clear where failed tests would be added to the report: in the branch of the retry method that runs once all rerun attempts have been exhausted. Next we decided where to add the successful ones: the ITestListener interface. Of its seven methods, onTestSuccess suited us perfectly, since every successful test passes through it. In total, our application has two points from which successful and failed tests are added to the report.
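Since the tests run in parallel threads, both entry points may be hit concurrently, so whatever stores the results has to be thread-safe. A minimal sketch, assuming a ConcurrentLinkedQueue as the store; ResultCollector and its methods are hypothetical names, and the comments only mark where the TestNG callbacks would call in (the article's own ReportCreator keeps results in a static list instead).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical collector fed from the two entry points described above.
final class ResultCollector {
    // One row of the future report.
    static final class Row {
        final String className, testName, status, stackTrace;

        Row(String className, String testName, String status, String stackTrace) {
            this.className = className;
            this.testName = testName;
            this.status = status;
            this.stackTrace = stackTrace;
        }
    }

    // Lock-free queue: safe to add to from many test threads at once.
    private final ConcurrentLinkedQueue<Row> rows = new ConcurrentLinkedQueue<>();

    // Entry point 1: would be called from ITestListener.onTestSuccess
    void recordSuccess(String className, String testName) {
        rows.add(new Row(className, testName, "Success", ""));
    }

    // Entry point 2: would be called from IRetryAnalyzer.retry once the reruns are used up
    void recordFinalFailure(String className, String testName, String stackTrace) {
        rows.add(new Row(className, testName, "Failure", stackTrace));
    }

    // Copy of all rows for the report generator, taken after every test has finished.
    List<Row> snapshot() {
        return new ArrayList<>(rows);
    }
}
```

snapshot() copies the queue, so the report generator works on a stable list that later additions cannot change.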

The next question: at what point should the report be generated, so that all tests have already finished by then? This is where the next interface comes in: IReporter and its generateReport method.

So now we have:

- a method from which successful tests are added to the report;

- a similar method for failed tests;

- a method that knows when all tests have completed and can trigger the report generator itself (which we have not written yet).
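By the time generateReport runs, every result has been recorded, so the summary at the top of the report can be computed in a single pass. A sketch under the article's convention that each result is labelled "Success" or "Failure" and that anything which is not a failure counts as passed; RunSummary is a hypothetical name.

```java
import java.util.List;

// Hypothetical summary computed once all results are in (what generateReport would trigger).
final class RunSummary {
    final int total, passed, failed;

    private RunSummary(int total, int passed, int failed) {
        this.total = total;
        this.passed = passed;
        this.failed = failed;
    }

    // statuses: one "Success" or "Failure" label per test
    static RunSummary of(List<String> statuses) {
        int failed = 0;
        for (String s : statuses) {
            if ("Failure".equals(s)) { // equals(), not ==, for comparing strings
                failed++;
            }
        }
        // As in the article: every result that is not a failure counts as passed
        return new RunSummary(statuses.size(), statuses.size() - failed, failed);
    }
}
```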

To generate HTML from Java, we chose the gagawa library. With it you can lay out the report however you like, based on the parameters you have and the metrics you need. After that, add a simple CSS file to the project to style the report and make it easier to read.
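To keep the sketch dependency-free, it builds the same kind of markup with plain string building instead of gagawa, so it shows the idea rather than gagawa's API. The CSS class names AllTestsFailed and AllTestsSuccess are taken from the report code in this article; the escape() helper is an assumption added here, because test names and stack traces may contain characters such as <, > or &. HtmlRow is a hypothetical name.

```java
// Dependency-free stand-in for the gagawa-based generator.
final class HtmlRow {
    // Minimal HTML escaping for text content and double-quoted attribute values.
    static String escape(String s) {
        StringBuilder out = new StringBuilder(s.length());
        for (char ch : s.toCharArray()) {
            switch (ch) {
                case '<': out.append("&lt;"); break;
                case '>': out.append("&gt;"); break;
                case '&': out.append("&amp;"); break;
                case '"': out.append("&quot;"); break;
                default: out.append(ch);
            }
        }
        return out.toString();
    }

    // One report row: a styled div holding class name, test name and status.
    static String render(String className, String testName, String status) {
        String css = "Failure".equals(status) ? "AllTestsFailed" : "AllTestsSuccess";
        return "<div class=\"" + css + "\">"
                + "<div>" + escape(className) + "</div>"
                + "<div>" + escape(testName) + "</div>"
                + "<div>" + escape(status) + "</div>"
                + "</div>";
    }

    // A minimal page that links an external stylesheet.
    static String page(String cssHref, String bodyHtml) {
        return "<!DOCTYPE html><html><head><link rel=\"stylesheet\" type=\"text/css\" href=\""
                + escape(cssHref) + "\"/></head><body>" + bodyHtml + "</body></html>";
    }
}
```

Keeping the styling in CSS classes means the look of the report can be changed in the stylesheet without touching the generator.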

Now directly about the implementation of these features from me (comments for readability).

The variables retryCount and retryMaxCount allow you to control the number of restarts required in the event of a test failure. For the rest, I think the code is quite readable.

Here we catch successful tests, as you already know.

We pull our report, because we understand that all tests have been completed.

The generator of our html report.

You also need to add the following tag to the testng.xml file with the path to the Reporter class:

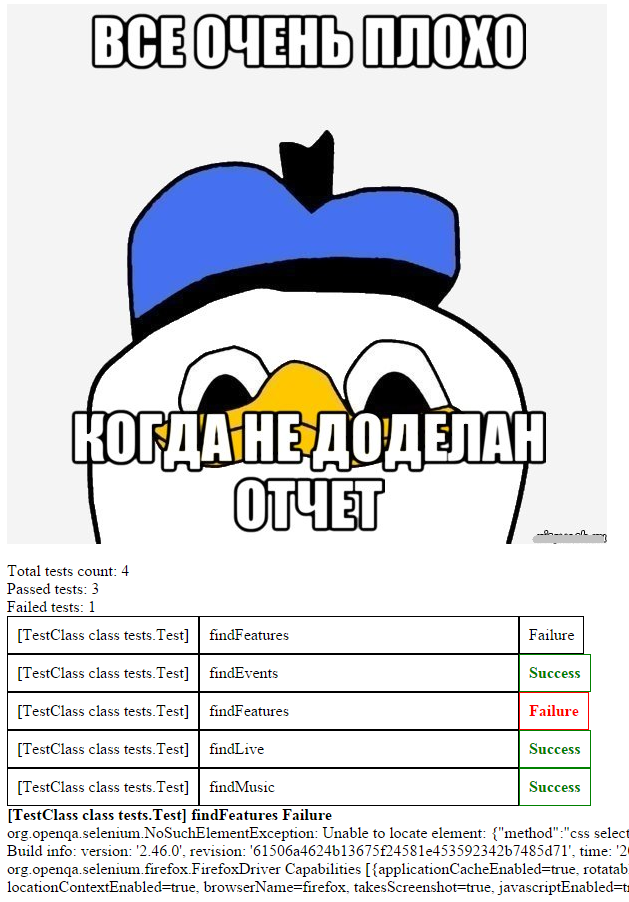

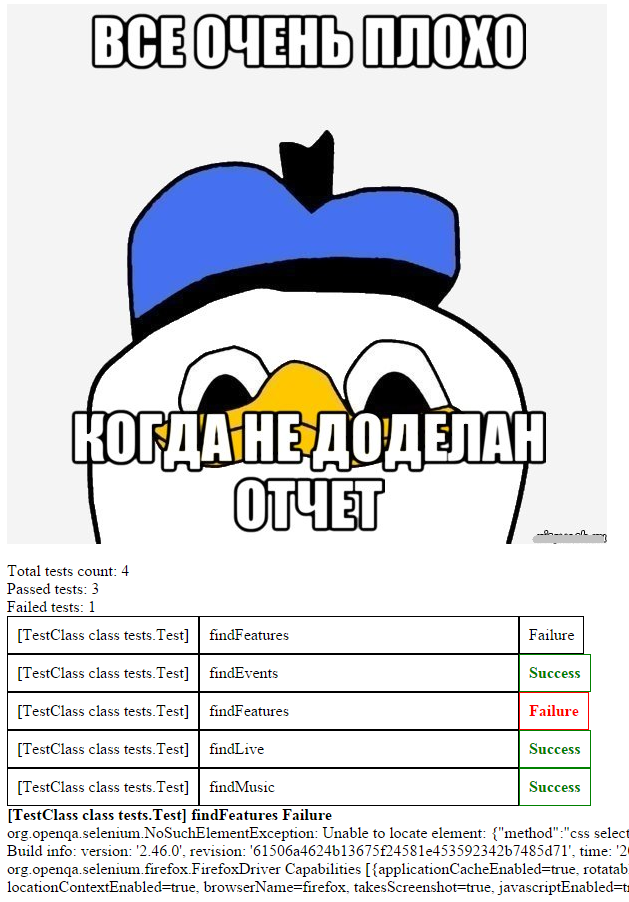


RetryAnalyzer:

The variables retryCount and retryMaxCount control how many times a failed test is rerun. Otherwise, I think the code is quite readable.

```java
import java.io.PrintWriter;
import java.io.StringWriter;

import org.testng.IRetryAnalyzer;
import org.testng.ITestResult;

public class RetryAnalyzer implements IRetryAnalyzer {
    private int retryCount = 0;
    private int retryMaxCount = 3; // maximum number of reruns for a failed test

    @Override
    public boolean retry(ITestResult testResult) {
        boolean result = false;
        // Skip the retry logic for results explicitly flagged with a "retry" attribute
        if (!testResult.getAttributeNames().contains("retry")) {
            System.out.println("retry count = " + retryCount + "\n" + "max retry count = " + retryMaxCount);
            if (retryCount < retryMaxCount) {
                System.out.println("Retrying " + testResult.getName() + " with status " + testResult.getStatus()
                        + " for the try " + (retryCount + 1) + " of " + retryMaxCount + " max times.");
                retryCount++;
                result = true; // true tells TestNG to run the test again
            } else if (retryCount == retryMaxCount) {
                // All reruns are used up: this failure is final, so record it for the report
                String testName = testResult.getName();
                String className = testResult.getTestClass().getName();
                String resultOfTest = resultOfTest(testResult);
                String stackTrace = stackTraceOf(testResult.getThrowable());
                System.out.println(stackTrace);
                ReportCreator.addTestInfo(testName, className, resultOfTest, stackTrace);
            }
        }
        return result;
    }

    // Maps the TestNG status code to the report label: Success / Failure
    public String resultOfTest(ITestResult testResult) {
        switch (testResult.getStatus()) {
            case ITestResult.SUCCESS:
                return "Success";
            case ITestResult.FAILURE:
                return "Failure";
            default:
                return "not interested for other results";
        }
    }

    // Full stack trace as a string (fillInStackTrace() would overwrite the original trace)
    private static String stackTraceOf(Throwable t) {
        if (t == null) {
            return "";
        }
        StringWriter sw = new StringWriter();
        t.printStackTrace(new PrintWriter(sw));
        return sw.toString();
    }
}
```

TestListener:

Here we catch successful tests, as described above.

```java
import org.testng.ITestResult;
import org.testng.TestListenerAdapter;

public class TestListener extends TestListenerAdapter {
    // TestNG calls this for every test that passes
    @Override
    public void onTestSuccess(ITestResult testResult) {
        super.onTestSuccess(testResult); // keep TestListenerAdapter's own bookkeeping
        System.out.println("on success");
        // Collect the test data for the report; a passed test has no stack trace
        String testName = testResult.getName();
        String className = testResult.getTestClass().getName();
        String resultOfTest = resultOfTest(testResult);
        String stackTrace = "";
        ReportCreator.addTestInfo(testName, className, resultOfTest, stackTrace);
    }

    // Maps the TestNG status code to the report label: Success / Failure
    public String resultOfTest(ITestResult testResult) {
        switch (testResult.getStatus()) {
            case ITestResult.SUCCESS:
                return "Success";
            case ITestResult.FAILURE:
                return "Failure";
            default:
                return "not interested for other results";
        }
    }
}
```

Reporter:

Here the report is generated, because at this point we know that all tests have completed.

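```java
import java.io.FileNotFoundException;
import java.io.PrintWriter;
import java.util.List;

import org.testng.IReporter;
import org.testng.ISuite;
import org.testng.xml.XmlSuite;

public class Reporter implements IReporter {
    // Called once after all suites have finished: write the HTML string from getReport() to a file
    @Override
    public void generateReport(List<XmlSuite> xmlSuites, List<ISuite> suites, String outputDirectory) {
        // try-with-resources closes the writer even if writing fails
        try (PrintWriter saver = new PrintWriter("report.html")) {
            saver.write(ReportCreator.getReport());
        } catch (FileNotFoundException e) {
            e.printStackTrace();
        }
    }
}
```

ReportCreator: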

The HTML report generator itself.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

import com.hp.gagawa.java.Document;
import com.hp.gagawa.java.DocumentType;
import com.hp.gagawa.java.elements.*;

public class ReportCreator {
    public static Document document;
    public static Body body;
    // Results from all test threads; synchronized because the tests run in parallel
    public static List<TestData> list = Collections.synchronizedList(new ArrayList<TestData>());

    // Header image at the top of the report
    public static void headerImage() {
        Img headerImage = new Img("", "src/main/resources/baad.jpeg");
        headerImage.setCSSClass("headerImage");
        body.appendChild(headerImage);
    }

    // One test row (class name + test name + status), styled by its result
    public static void addTestReport(String className, String testName, String status) {
        String cssClass = "Failure".equals(status) ? "AllTestsFailed" : "AllTestsSuccess";
        Div rowDiv = new Div().setCSSClass(cssClass);
        rowDiv.appendChild(new Div().appendText(className));
        rowDiv.appendChild(new Div().appendText(testName));
        rowDiv.appendChild(new Div().appendText(status));
        body.appendChild(rowDiv);
    }

    // Run summary: total, passed and failed counts
    public static void addCommonRunMetrics(int totalCount, int successCount, int failureCount) {
        Div total = new Div().setCSSClass("HeaderTable");
        total.appendText("Total tests count: " + totalCount);
        Div success = new Div().setCSSClass("HeaderTable");
        success.appendText("Passed tests: " + successCount);
        Div failure = new Div().setCSSClass("HeaderTable");
        failure.appendText("Failed tests: " + failureCount);
        body.appendChild(total);
        body.appendChild(success);
        body.appendChild(failure);
    }

    // Short list of failed tests, right after the summary
    public static void addFailedTestsBlock(String className, String testName, String status) {
        Div failed = new Div().setCSSClass("AfterHeader");
        failed.appendChild(new Div().appendText(className));
        failed.appendChild(new Div().appendText(testName));
        failed.appendChild(new Div().appendText(status));
        body.appendChild(failed);
    }

    // Failed test followed by its stack trace, at the bottom of the report
    public static void addfailedWithStacktraces(String className, String testName, String status, String stackTrace) {
        Div failedWithStackTraces = new Div().setCSSClass("Lowest");
        failedWithStackTraces.appendText(className + " " + testName + " " + status + "\n");
        Div stackTraceDiv = new Div();
        stackTraceDiv.appendText(stackTrace);
        body.appendChild(failedWithStackTraces);
        body.appendChild(stackTraceDiv);
    }

    // Called from RetryAnalyzer and TestListener: store the result of one test
    public static void addTestInfo(String testName, String className, String status, String stackTrace) {
        TestData testData = new TestData();
        testData.setTestName(testName);
        testData.setClassName(className);
        testData.setTestResult(status);
        testData.setStackTrace(stackTrace);
        list.add(testData);
    }

    // Builds the whole report and returns it as an HTML string.
    // Iterating without a lock is safe here: all test threads have finished.
    public static String getReport() {
        // No results were collected: nothing to report
        if (list.isEmpty()) {
            System.out.println("ERROR: TEST LIST IS EMPTY");
            return "";
        }
        document = new Document(DocumentType.XHTMLTransitional);
        Head head = document.head;
        Link cssStyle = new Link().setType("text/css").setRel("stylesheet").setHref("src/main/resources/site.css");
        head.appendChild(cssStyle);
        body = document.body;

        // Counts: all tests, failed tests, and passed tests (everything else)
        int totalCount = list.size();
        int failedCount = 0;
        for (TestData data : list) {
            if ("Failure".equals(data.getTestResult())) {
                failedCount++;
            }
        }
        int successCount = totalCount - failedCount;

        headerImage();
        addCommonRunMetrics(totalCount, successCount, failedCount);

        // List of failed tests
        for (TestData data : list) {
            if ("Failure".equals(data.getTestResult())) {
                addFailedTestsBlock(data.getClassName(), data.getTestName(), data.getTestResult());
            }
        }

        // All tests grouped by class: each class is rendered once, with all of its tests
        List<String> constructedClasses = new ArrayList<String>();
        for (TestData current : list) {
            String currentTestClass = current.getClassName();
            if (!constructedClasses.contains(currentTestClass)) {
                for (TestData data : list) {
                    if (currentTestClass.equals(data.getClassName())) {
                        addTestReport(data.getClassName(), data.getTestName(), data.getTestResult());
                    }
                }
                constructedClasses.add(currentTestClass);
            }
        }

        // Failed tests with their stack traces
        for (TestData data : list) {
            if ("Failure".equals(data.getTestResult())) {
                addfailedWithStacktraces(data.getClassName(), data.getTestName(),
                        data.getTestResult(), data.getStackTrace());
            }
        }
        return document.write();
    }

    // Data holder for one test result, with getters and setters
    public static class TestData {
        String testName;
        String className;
        String testResult;
        String stackTrace;

        public String getTestName() { return testName; }
        public String getClassName() { return className; }
        public String getTestResult() { return testResult; }
        public String getStackTrace() { return stackTrace; }
        public void setTestName(String testName) { this.testName = testName; }
        public void setClassName(String className) { this.className = className; }
        public void setTestResult(String testResult) { this.testResult = testResult; }
        public void setStackTrace(String stackTrace) { this.stackTrace = stackTrace; }
    }
}
```

The test class itself:

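```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.firefox.FirefoxDriver;
import org.testng.annotations.AfterClass;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.Listeners;

@Listeners(TestListener.class) // register TestListener for this class
public class Test {
    private static WebDriver driver;

    @BeforeClass
    public static void init() {
        driver = new FirefoxDriver();
        driver.get("http://www.last.fm/ru/");
    }

    @AfterClass
    public static void close() {
        driver.close();
    }

    // Fully qualified annotation because this class is itself named Test
    @org.testng.annotations.Test(retryAnalyzer = RetryAnalyzer.class) // attach RetryAnalyzer
    public void findLive() {
        driver.findElement(By.cssSelector("[href=\"/ru/dashboard\"]")).click();
    }
}
```

You also need to add the following tag, with the path to the Reporter class, to the testng.xml file: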

```xml
<listeners>
    <listener class-name="retry.Reporter" />
</listeners>
```

The visualization of the final result is entirely up to you; the screenshots earlier in this article show the report produced by the code above.

In conclusion: a problem that looked rather trivial at first glance ended up needing a far from trivial solution.

Perhaps the solution is not elegant or simple enough; if so, criticism is welcome in the comments. For me, its main advantage is universality: the same code can be reused in any future Java + TestNG project.

The project is available on my GitHub.

Source: https://habr.com/ru/post/272643/
