Is it easy to recognize information on a bank card?

When we talk to our customers, we, as specialists in this field, make active use of the appropriate terminology, in particular the word “recognition”. Yet listeners raised on Cuneiform and FineReader often take this term to mean exactly one task: matching a cut-out fragment of the image to a particular character code. Today that task is solved with neural networks, and it is far from the first step of information recognition. First, the card must be localized in the image, the information fields must be found, and each field must be segmented into characters. Formally, each of these subtasks is an independent recognition problem. And while proven approaches and tools exist for training neural networks, orientation and segmentation require an individual approach every time. If you are curious about the approaches we used to recognize bank cards, read on below the cut!

Recognizing data on a credit card is both a highly topical task and a very interesting one algorithmically. A well-implemented card recognition program can spare a person from entering most of the data manually when paying online or in mobile applications. From the recognition point of view, a bank card is a complex document of standard size (85.6 × 53.98 mm), made on a typical form and containing a specific set of fields, both mandatory and optional: card number, cardholder name, issue date, expiration date, account number, and the CVV2 code or its equivalent. Some fields are on the front side, the rest on the back. Although only the card number is strictly needed to complete a payment transaction, almost all payment systems additionally require the cardholder name, the expiration date, and the CVV2 code for authentication. In what follows, we focus on recognizing the information fields on the front side of the card, which is objectively many times harder.


So, to make an online payment, in most cases the card number, the cardholder name, and the expiration date must be recognized in the image.

The first step is to find the coordinates of the card's corners. Since the geometric characteristics of the card are known (all cards are made strictly in accordance with the ISO/IEC 7810 standard), we find the card's quadrilateral with the straight-line search algorithm that we described in one of our earlier publications on Habré.

Given the quadrilateral, it is easy to compute a projective transformation and apply it to the image, bringing the card to an orthogonal view at a fixed resolution. We assume that this rectified image is the input to the subsequent stages: orientation and recognition of the specific information fields.
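A minimal sketch of the rectification step, assuming the four corners have already been found and ordered top-left, top-right, bottom-right, bottom-left. It solves the standard linear system for the 3×3 projective matrix (with its last entry fixed to 1). The function names, the example corner coordinates, and the 10 px/mm target resolution are hypothetical choices for illustration, not the authors' code.

```python
import numpy as np

def homography_from_corners(src, dst):
    """Solve for the 3x3 projective matrix H (H[2, 2] = 1) mapping 4 src points to 4 dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and similarly for v:
        # each correspondence yields two linear equations in the 8 unknowns.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h = np.linalg.solve(np.array(a, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(h, point):
    """Map a single (x, y) point through H, including the perspective division."""
    x, y, w = h @ np.array([point[0], point[1], 1.0])
    return x / w, y / w

# Hypothetical example: corners detected in a photo, mapped onto an 856 x 540 px card
# (85.6 x 53.98 mm at an assumed 10 px/mm).
src = [(112, 80), (940, 130), (905, 650), (70, 600)]
dst = [(0, 0), (855, 0), (855, 539), (0, 539)]
H = homography_from_corners(src, dst)
```

To warp the whole image, each output pixel is mapped back through the inverse matrix and the source is sampled there; OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` perform the same two steps.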

From an architectural point of view, the recognition of each of the three target fields consists of the same stages:

- Pre-filtering the image (to suppress the card background, which is surprisingly diverse).

- Searching for the zone (line) of the target information field.

- Segmenting the found line into “character boxes”.

- Recognizing the found “character boxes” with an artificial neural network (ANN).

- Post-processing (the Luhn algorithm to detect recognition errors, dictionaries of first names and surnames, date validity checks, etc.).

Although the recognition stages are the same, their difficulty varies greatly. The card number is the easiest field to recognize (no wonder there are plenty of SDKs for various mobile platforms, including freely available ones), for a number of reasons:

- the card number consists of digits only;

- the number format is strictly defined for each payment card type;

- the position of the number varies little from one manufacturer to another;

- the Luhn algorithm makes it possible to check whether the number was recognized correctly.
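For reference, here is a minimal Python sketch of the Luhn (mod 10) check mentioned in the last item. `luhn_valid` is a hypothetical helper name, and non-digit characters such as spaces are simply skipped.

```python
def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn (mod 10) checksum."""
    digits = [int(c) for c in number if c.isdigit()]  # ignore spaces and separators
    if not digits:
        return False
    total = 0
    # Walk from the rightmost digit and double every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9  # same as summing the two digits of the product
        total += d
    return total % 10 == 0
```

For example, `luhn_valid("4111 1111 1111 1111")` returns `True`, while changing the last digit to 2 makes it return `False`, so a single misread digit is caught.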

The two remaining fields, the expiration date and the cardholder name, are harder. In this article we describe how the expiration date is recognized (the name is recognized in the same way).

Expiration date recognition algorithm

Suppose the card image has already been rectified as described above. The algorithm must output 4 decimal digits: two for the month and two for the year of expiration. The answer is considered correct if these 4 digits match those printed on the card; the separator between them is ignored and may be any symbol. A failure to recognize counts as an incorrect answer.
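The acceptance rule above fits in a few lines. This is an illustrative sketch only: it assumes the algorithm's output is a 4-digit string (or `None` when recognition fails) and that the ground truth is the date as printed, for example `12/25`; the function name is hypothetical.

```python
def expiry_answer_correct(result, printed_date):
    """Apply the evaluation rule: 4 digits must match, the separator is ignored."""
    if result is None:  # a failure to recognize counts as an incorrect answer
        return False
    expected = "".join(c for c in printed_date if c.isdigit())  # drop '/', '-', etc.
    return len(result) == 4 and result.isdigit() and result == expected
```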

The first step is to localize the field on the card (unlike the number, its location is not standardized). Brute-force search over the entire card area is not very promising: the text fragment is very short (usually 5 characters), its syntactic redundancy is small, and the probability of a false detection on an arbitrary text fragment, or even on a variegated background area, is unacceptably high. So we use a trick: instead of searching for the date itself, we search for an information zone that lies below the card number and has a stable geometric structure.

Figure 1. Examples of the three-line information zone being sought

The zone is divided into three lines, one of which is often empty. Importantly, the position of the date line within the zone is well defined. This seemingly paradoxical statement means the following: when the zone contains only two non-empty lines, the gap between them either equals the interline gap of three-line zones or is approximately twice that gap plus one line height.

Finding the zone and dividing it into 3 lines is complicated by the card background, which, as we said, is diverse. To deal with it, we apply a combination of filters designed to emphasize the vertical edges of letters and suppress the remaining image details. The filter sequence is as follows:

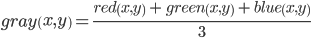

- Conversion to grayscale by averaging the color channels, $I(x, y) = \frac{1}{3}\big(R(x, y) + G(x, y) + B(x, y)\big)$, see Figure 2b.

- Computation of the vertical-edge image, see Figure 2c.

- Suppression of short vertical edges with mathematical morphology (namely, erosion with a rectangular window), see Figure 2d.

Figure 2. Pre-filtering a credit card image: a) source image, b) grayscale image, c) vertical-edge image, d) filtered vertical-edge image
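A NumPy sketch of the three pre-filtering steps. The article does not give the vertical-edge formula or the erosion window size, so both are assumptions here: the edge image is taken as the absolute central difference along $x$, and the erosion uses a tall, one-pixel-wide window (`win_h` × `win_w`, hypothetical defaults), which wipes out vertical edge segments shorter than `win_h` pixels. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def prefilter(rgb, win_h=5, win_w=1):
    """Grayscale -> vertical-edge map -> erosion with a rectangular window.
    The edge operator and the window size are illustrative assumptions."""
    gray = rgb.astype(float).mean(axis=2)  # average of the color channels
    edges = np.zeros_like(gray)
    # |I(x+1, y) - I(x-1, y)| responds to vertical borders such as letter strokes.
    edges[:, 1:-1] = np.abs(gray[:, 2:] - gray[:, :-2])
    # Grayscale erosion = minimum over a win_h x win_w window (borders padded by replication).
    pad_y, pad_x = win_h // 2, win_w // 2
    padded = np.pad(edges, ((pad_y, win_h - 1 - pad_y), (pad_x, win_w - 1 - pad_x)), mode="edge")
    return sliding_window_view(padded, (win_h, win_w)).min(axis=(-2, -1))
```

A tall letter stroke survives the erosion, while a short blob of background texture disappears, which is the intended effect of the last filter.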

To save time, our implementation computes morphological operations with the van Herk algorithm. It computes morphological operations with a rectangular structuring element in time independent of the element's size, which makes it possible to use complex large-area morphological filters in real-time document recognition.
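The core of the van Herk algorithm is a one-dimensional running minimum (erosion) that costs a constant number of comparisons per element, whatever the window length $k$. The signal is split into blocks of length $k$, prefix minima and suffix minima are computed inside each block, and every window, which overlaps at most two blocks, is answered with one more comparison. A rectangular erosion follows by applying the filter along rows and then along columns. The sketch below is pure Python for readability, not the authors' implementation.

```python
def van_herk_min(values, k):
    """Running minimum over windows of length k with O(1) work per element.
    Output i is min(values[i : i + k]) for i = 0 .. len(values) - k."""
    n = len(values)
    if k < 1 or k > n:
        raise ValueError("window length must be between 1 and len(values)")
    g = list(values)  # g[i]: minimum from the start of i's block up to i
    h = list(values)  # h[i]: minimum from i up to the end of i's block
    for i in range(1, n):
        if i % k != 0:  # i is not the first element of a block
            g[i] = min(g[i - 1], values[i])
    for i in range(n - 2, -1, -1):
        if (i + 1) % k != 0:  # i is not the last element of a block
            h[i] = min(h[i + 1], values[i])
    # Window [i, i + k - 1] = suffix of one block + prefix of the next one.
    return [min(h[i], g[i + k - 1]) for i in range(n - k + 1)]
```

Dilation is obtained the same way with `max` in place of `min`.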

After filtering, the pixel intensities of the processed image are projected onto the vertical axis:

$$P(y) = \sum_{x=0}^{w-1} \min\big(F(x, y),\; q_\alpha\big),$$

where $F$ is the filtered image, $w$ is the image width, and $q_\alpha$ is the $\alpha$-level quantile of the values of $F$. The quantile serves as a clipping threshold that suppresses the influence of sharp noisy edges, which usually come from static text printed in paint, such as “valid thru”.

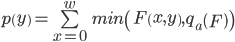

From this projection we can now find the most probable positions of the lines, assuming there are no horizontal edges in the interline gaps. To do this, we minimize the sum of the projection values at $n$ equally spaced cuts over all periods $T$ and initial phases $\varphi$ from predetermined intervals:

$$(T^*, \varphi^*) = \arg\min_{T,\,\varphi} \sum_{k=0}^{n-1} P(\varphi + kT).$$

Since the local minima of $P$ are, as a rule, also quite pronounced at the outer borders of the text, the optimal value is $n = 4$ (the zone has 3 lines and therefore 4 local minima). As a result, we find the parameters $(T^*, \varphi^*)$, which specify the centers of the interline gaps as well as the outer boundaries of the text (see Figure 3).
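The row projection with quantile clipping and the search over period and phase described above can be sketched in NumPy as follows. The quantile level `alpha` and the search ranges for the period and phase are hypothetical parameters (the article does not state them), and the search is a plain brute force over integer values.

```python
import numpy as np

def row_projection(filtered, alpha=0.9):
    """P(y): row sums of the filtered image, each pixel clipped at the alpha-quantile
    of the whole image (alpha = 0.9 is an assumed value)."""
    q = np.quantile(filtered, alpha)
    return np.minimum(filtered, q).sum(axis=1)

def find_line_cuts(projection, periods, phases, n_cuts=4):
    """Brute-force the period T and phase phi minimizing sum_k P(phi + k*T),
    k = 0 .. n_cuts - 1; returns (T, phi, cut positions)."""
    best = None
    for t in periods:
        for phi in phases:
            cuts = [phi + k * t for k in range(n_cuts)]
            if cuts[-1] >= len(projection):
                continue  # the last cut would fall outside the zone
            score = float(sum(projection[c] for c in cuts))
            if best is None or score < best[0]:
                best = (score, t, phi, cuts)
    if best is None:
        raise ValueError("no (T, phi) pair fits inside the projection")
    return best[1], best[2], best[3]
```

With four cuts, the first and last land on the outer text borders and the two middle ones on the interline gaps.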

Figure 3. The projection and the optimal cuts separating the three lines

The date search area can now be narrowed considerably by intersecting the a priori shape of the zone with the line positions found in the image. For this intersection, a set of candidate substring positions is generated, and all further processing works with these candidates.

Each candidate line is segmented into characters, taking into account that all characters on such cards are monospaced. This makes it possible to find the inter-character cuts with a dynamic programming algorithm, without recognizing the characters (all that is needed is the allowable range of character widths). Here are the main ideas of the algorithm.

- Let $F$ be the filtered vertical-edge image of the line containing the date. Project it onto the horizontal axis to obtain $p(x)$. The projection has local maxima at vertical edges (that is, within letters) and minima between letters. Let $T$ be the expected period (character pitch) and $\Delta$ the maximum deviation of the period.

- Move from left to right along the projection, keeping an additional accumulator array $A$ in which each element stores the accumulated penalty. At each step $x$, consider the accumulator segment $[x - T - \Delta,\; x - T + \Delta]$ and set $A(x) = p(x) + \min_{x - T - \Delta \le x' \le x - T + \Delta} A(x')$, that is, the sum of the projection value at $x$ and the minimum penalty over that segment. Also store the index $x'$ of the previous step that delivers this minimum.

- After passing the whole projection in this way, analyze the penalty accumulator and, thanks to the stored indices of the previous steps, restore all the cuts.
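A pure-Python sketch of the dynamic-programming segmentation described in the list. The article does not say how the first and last cuts are anchored, so this is an assumption: the first cut must lie within one pitch of the left edge of the line and the last within one pitch of the right edge. Projection values are assumed to be non-negative, and `segment_monospaced` is a hypothetical name.

```python
import math

def segment_monospaced(projection, period, delta):
    """Return cut positions between characters of a monospaced line.

    projection -- column sums of the vertical-edge image (low between letters)
    period     -- expected character pitch T, in pixels
    delta      -- maximum deviation of the pitch
    """
    if not 0 <= delta < period:
        raise ValueError("need 0 <= delta < period")
    n = len(projection)
    if n < period:
        raise ValueError("line is shorter than one character pitch")
    acc = [math.inf] * n  # accumulated penalty of the best chain of cuts ending at x
    prev = [-1] * n       # index of the previous cut in that chain
    for x in range(n):
        if x < period:
            acc[x] = projection[x]  # assumed: a chain may start within one pitch of the left edge
            continue
        lo, hi = max(0, x - period - delta), x - period + delta
        j = min(range(lo, hi + 1), key=acc.__getitem__)  # cheapest predecessor in the segment
        if acc[j] < math.inf:
            acc[x] = projection[x] + acc[j]
            prev[x] = j
    # Assumed: the last cut lies within one pitch of the right edge; take the cheapest one.
    x = min(range(n - period, n), key=acc.__getitem__)
    cuts = []
    while x != -1:  # follow the stored back-pointers
        cuts.append(x)
        x = prev[x]
    return cuts[::-1]
```

The pitch is allowed to drift by up to `delta` between neighboring cuts, so a slightly uneven print still yields a consistent chain of cuts.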

After segmentation, it is time to recognize the characters with an artificial neural network (ANN). Unfortunately, a detailed description of this process is beyond the scope of this article. We note only a couple of facts:

- Recognition uses convolutional neural networks trained with the cuda-convnet tool.

- The alphabet of the trained network contains digits, punctuation marks, a space, and a special non-character (“garbage”) class.

Thus, for each character image we obtain an array of pseudo-probability estimates that the corresponding alphabet character is present in that image. It might seem that the answer is simply the string of the best options (those with the highest pseudo-probability). However, the ANN sometimes makes mistakes. Some of these errors can be corrected during post-processing thanks to the known constraints on the expected values of the date (for example, there is no 13th month). For this we use the so-called “roulette” algorithm, which iteratively enumerates all possible readings of the line in descending order of total pseudo-probability. The first reading that satisfies the constraints is taken as the answer.
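The article does not detail the “roulette” algorithm, so the following is only a sketch of one standard way to enumerate readings in descending order of score: a best-first search over per-box rank vectors with a priority queue. It assumes that the total pseudo-probability of a reading is the product of its per-box values and that a date occupies five boxes laid out as MM?YY; `roulette` and `valid_expiry` are hypothetical names.

```python
import heapq

def roulette(char_probs, is_valid, max_candidates=10000):
    """Enumerate readings in descending order of total score; return the first
    one accepted by is_valid, or None.

    char_probs -- one dict {symbol: pseudo-probability} per character box
    """
    # Per box, candidate symbols sorted from most to least probable.
    ranked = [sorted(p.items(), key=lambda kv: kv[1], reverse=True) for p in char_probs]

    def score(ranks):  # assumed: total score = product of per-box pseudo-probabilities
        s = 1.0
        for box, r in zip(ranked, ranks):
            s *= box[r][1]
        return s

    start = (0,) * len(ranked)  # every box at its best symbol
    heap = [(-score(start), start)]
    seen = {start}
    for _ in range(max_candidates):
        if not heap:
            break
        _, ranks = heapq.heappop(heap)  # highest remaining score
        text = "".join(box[r][0] for box, r in zip(ranked, ranks))
        if is_valid(text):
            return text
        # Successors: demote exactly one box to its next-best symbol.
        for i, r in enumerate(ranks):
            if r + 1 < len(ranked[i]):
                nxt = ranks[:i] + (r + 1,) + ranks[i + 1:]
                if nxt not in seen:
                    seen.add(nxt)
                    heapq.heappush(heap, (-score(nxt), nxt))
    return None

def valid_expiry(text):
    """MM?YY with a month in 01..12; the separator may be any symbol."""
    return (len(text) == 5 and text[:2].isdigit() and text[3:].isdigit()
            and 1 <= int(text[:2]) <= 12)
```

Because demoting a box never raises the score, each reading is popped only after all readings with a higher score. So if the network's top reading has an impossible month such as 18, the search falls through to the most probable reading with a valid one.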

Of course, in addition to the elementary post-processing described here, our system uses further context-sensitive methods, which are beyond the scope of this article.

Results

To evaluate the quality of our SDK, we collected a database of 750 images of cards from various payment systems issued by different banks (60 unique cards). On this material we obtained the following results:

- card number recognition quality: 99%;

- expiration date recognition quality: 99%;

- cardholder name recognition quality: 90%;

- total card recognition time on an iPhone 4S: 0.6 seconds.

List of useful sources

- van Herk M. A. 517-521.

Source: https://habr.com/ru/post/272607/
