How to monitor all layers of infrastructure

Once I figured out that 1 minute of idle time on hh.ru in the daytime affects about 30,000 users. We constantly solve the problem of reducing the number of incidents and their duration. We can reduce the number of problems with the right infrastructure, application architecture - this is a separate issue, we will not take it into account yet. Let's talk better about how to quickly understand what is happening in our infrastructure. This is where monitoring helps us.

In this article, using the example of hh.ru, I will tell and show you how to monitor all layers of the infrastructure:

- client-side metrics

- metrics from frontend (nginx logs)

- network (which can be obtained from TCP)

- application (logs)

- database metrics (postgresql in our case)

- operating system (cpu usage may also be useful)

Formulate the task

I formulated for myself the tasks that monitoring should solve:

- find out what's broken

- quickly find out where it broke

- see what is fixed

- capacity planning: do we have enough resources to grow

- optimization planning: choose where you need to optimize for the effect

- control of optimizations: if after the release of optimization in the production effect is not visible, then the release should be rolled back, and the code should be thrown out (or reformulated)

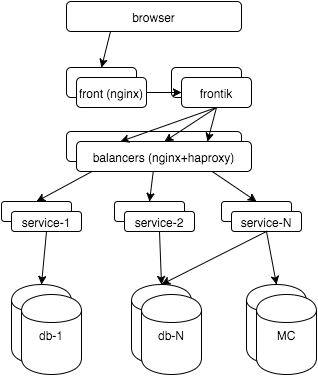

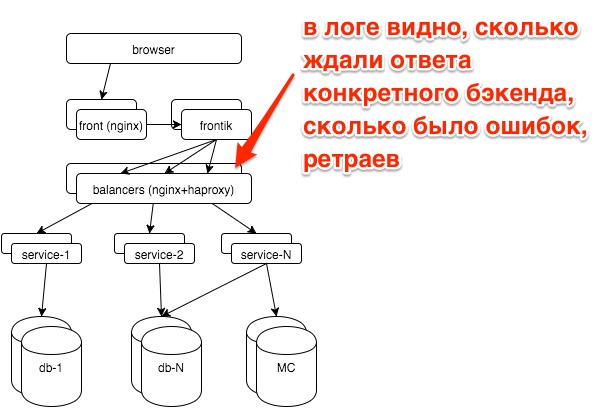

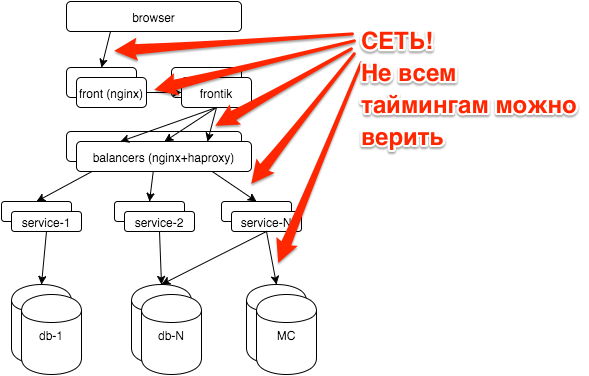

First, a few words about the hh.ru architecture: all logic is distributed among several dozens of services on java / python, pages for users are “collected” by our frontik collection service, the main database is postgresql, on frontends and internal balancers nginx + haproxy is used.

')

Now let's start monitoring all layers. In the end, I want:

- see how the system works from the user's point of view

- see what happens in each subsystem

- know what resources go

- look at it all in time: as it was a minute ago, yesterday, last Monday

That is, we will talk about graphs. About alerts, workflow, KPI, you can view my slides from RootConf 2015 (found the same “ screen ”).

Client-side metrics

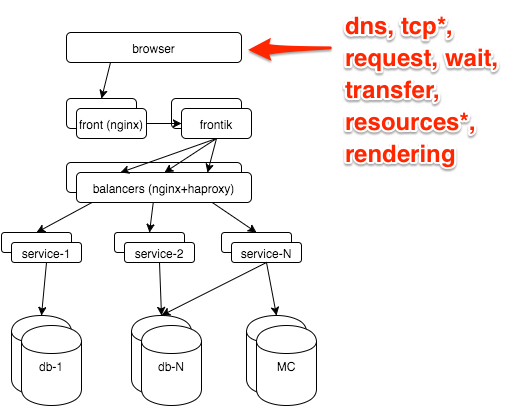

The most reliable information about how the user sees the site is in the browser. It can be obtained through the Navigation timing API , our js snapshot hits the metrics to the GET server with a request with parameters, then it is accepted by nginx:

location = /stat { return 204; access_log /var/log/nginx/clientstat.access.log; } then we just parse the resulting log and get the following graph:

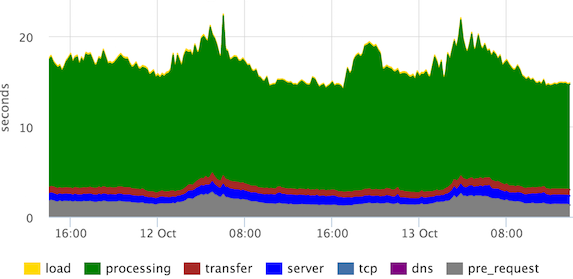

These are stacked 95th percentiles since the main stages of rendering the page. "Processing" can be defined differently, in this case, the user sees the page much earlier, but our frontend developers decided that they need to see it that way.

We see that the channels are normal (in the “transfer” stage), there are no emissions in the “server”, “tcp” stages.

What to remove from the frontend

Our main monitoring is based on the metrics we remove from the frontends. On this layer we want to find out:

- Are there any bugs? How many?

- Fast or slow? One user or all?

- How many requests? As usual / failure / bots?

- Is the channel tupit to customers?

All this is in the nginx log, you only need to slightly expand its default format:

- $ request_time - time from receiving the first byte of the request to writing the last byte of the response to the socket (we can assume that we take into account the transmission of the response through the network)

- $ upstream_response_time - how much the backend thought

- Optional: $ upstream_addr, $ upstream_status, $ upstream_cache_status

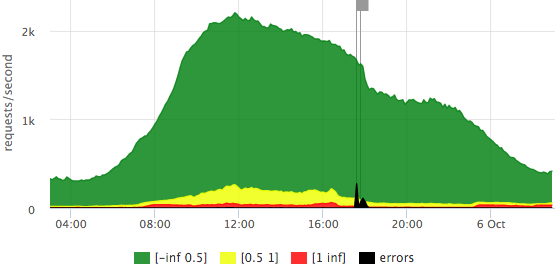

The histogram is well suited for visualizing the entire request flow.

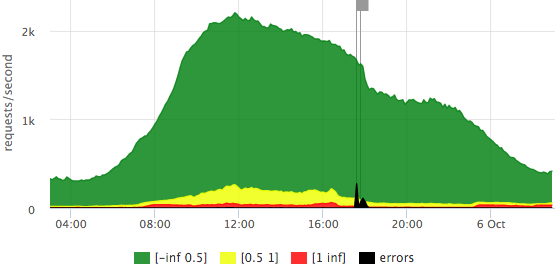

We have already determined what a quick request is (up to 500ms), medium (500ms-1s), slow (1s +).

We draw requests per second along the Y axis, the response time is reflected in color, and we also added errors (HTTP-5xx).

Here is an example of our “traffic light” based on $ request_time:

We see that we have a bit more than 2krps at the peak, the majority of requests are fast (there is an exact legend in the tooltip), on this day there were 2 error shots affecting 10-15% of requests.

Let's see how the graph differs if we take $ upstream_response_time as a basis:

It can be seen that fewer requests reach the backends (some are given from the nginx cache), there are almost no “slow” requests, that is, in the previous graph red is mainly the contribution of the transmission over the network to the user, rather than waiting for the backend response.

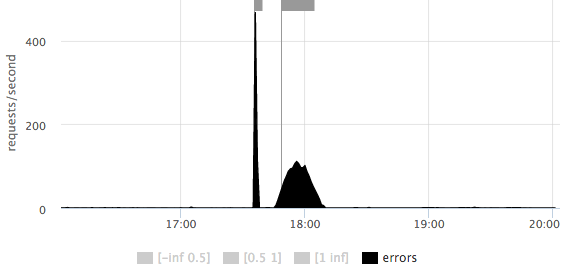

On any of the graphs, you can consider in more detail the scale of the number of errors:

It is immediately clear that there were 2 outliers: the first short ~ 500rps, the second longer ~ 100rps.

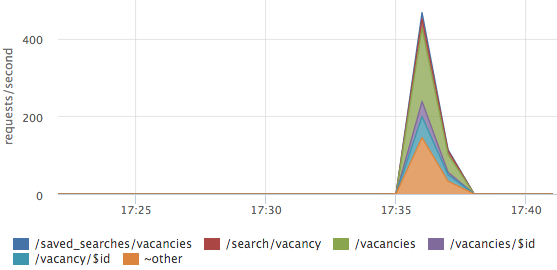

Errors sometimes need to be expanded to url:

In this case, the errors are not on any one URL, but are distributed across all the main pages.

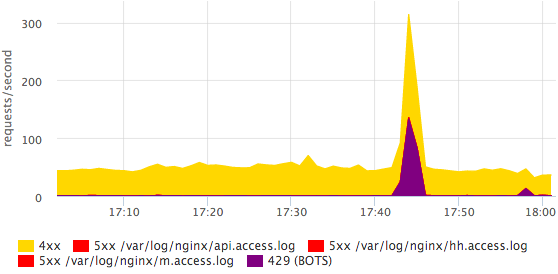

On the main dashboard, we also have a schedule with HTTP-4xx errors, we separately look at the number of HTTP-429 (we give this status if the limit on the number of requests from one IP is exceeded).

Here you can see that some bot came. More detailed information on bots is easier to see in the log with your eyes, in monitoring, it is enough for us to simply have the fact that something has changed. All errors can be broken down by specific statuses; this is done quite simply and quickly.

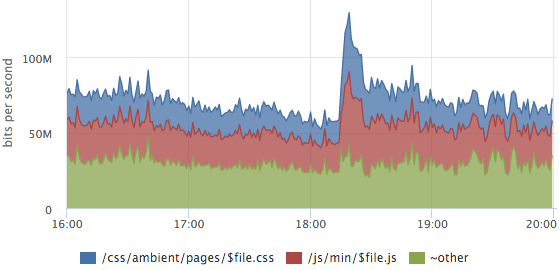

Sometimes it is useful to look at the traffic structure, which URLs generate how much traffic.

On this graph, the layout of the static content traffic, the outburst is the release of the new release, the users had css and js caches.

About the breakdown of metrics by urls should be mentioned separately:

- manual rules for parsing basic urls become obsolete

- you can try to somehow normalize urls:

throw out query arguments, id and other parameters in the URL itself

/ vacancy / 123? arg = value -> / vacancy / $ id - after all normalizations, the dynamic top of the URLs works well (by rps or the sum of $ upstream_response_time), you detail only the most significant requests, for the rest, total metrics are considered

- You can also make a separate top for errors, but with a cutoff from the bottom, so that there is no garbage

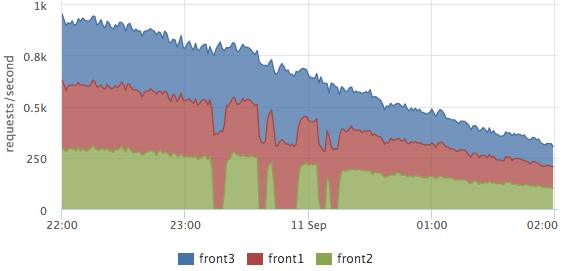

Our logs are parsed on each server, on the charts we usually see the final picture, but you can look at the server section too. For example, this is convenient for balancing evaluation:

Here you can clearly see how they were withdrawing from the front2 cluster, at which time requests were processed by 2 neighboring servers. The total number of requests did not fail, that is, the users did not affect these works.

Similar graphs based on metrics from the nginx log allow you to see well how our site works, but they do not reveal the cause of the problems.

Collector layer

Instant monitoring of any program gives an answer to the question: where is the control now (calculations, waiting for data from other subsystems, working with the disk, memory)? If we consider this task in time, we need to understand at what stages (actions) it took time.

In our collector service, all significant stages of each request are logged, for example:

2015-10-14 15:12:00,904 INFO: timings for xhh.pages.vacancy.Page: prepare=0.83 session=4.99 page=123.84 xsl=36.63 postprocess=13.21 finish=0.23 flush=0.49 total=180.21 code=200 During the processing of the vacancy page (handler = "xhh.pages.vacancy.Page") we spent:

- ~ 5ms per user session processing

- ~ 124ms waiting for all requests to services behind the page data

- ~ 37ms for templating

- ~ 13ms to localize

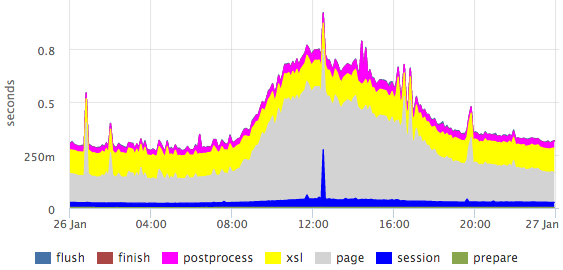

From this log, we take the 95th percentile for each stage of each handler (as we parse the logs, I will tell on a more illustrative example just below), we get graphs for all pages, for example, for a vacancy page:

There are clearly visible emissions within specific stages.

Percentiles have a number of drawbacks (for example, it is difficult to combine data from several measurements: servers / files / etc), but in this case the picture is much clearer than the histogram.

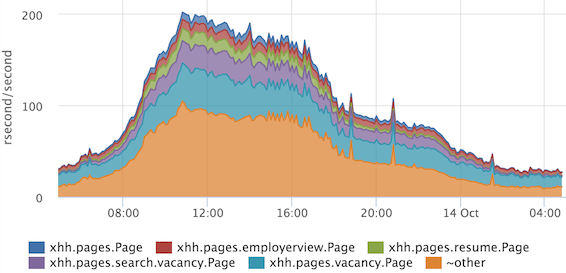

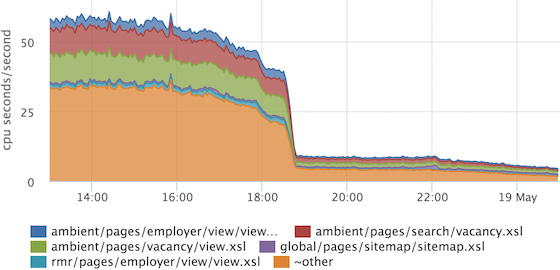

If we need, for example, to get a more or less exact ratio of any stages, then we can look at the sum of times. Summing up the response times with a breakdown by handlers, we can estimate how to occupy our entire cluster.

On the Y axis, we have the so-called “resource” seconds spent per second. It is very convenient that the ratio immediately takes into account the frequency of requests, and if we have one very heavy handler rarely called, then it will not hit the top. If you do not take into account the parallelization of processing of some stages, then we can assume that at the peak we spend 200 processor cores for all requests.

We also applied this technique to the profiling problem of templating.

The collector logs which template was rendered for each request:

2015-10-14 15:12:00,865 INFO: applied XSL ambient/pages/vacancy/view.xsl in 22.50ms The monitoring service agent that we use can parse logs, for this we need to write something like this config:

plugin: logparser config: file: /var/log/hh-xhh.log regex: '(?P<datetime>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d+ [^:]+: applied XSL (?P<xsl_file>\S+) in (?P<xsl_time>\d+\.\d+)ms' time_field: datetime time_field_format: 2006-01-02 15:04:05 metrics: - type: rate name: xhh.xsl.calls.rate labels: xsl_file: =xsl_file - type: rate name: xhh.xsl.cpu_time.rate value: ms2sec:xsl_time labels: xsl_file: =xsl_file - type: percentiles name: xhh.xsl.time.percentiles value: ms2sec:xsl_time args: [50, 75, 95, 99] labels: xsl_file: =xsl_file From the received metrics we draw the schedule:

If suddenly with the release we will have the time to overlay one template (or all at once), we will see it. We can also choose what exactly makes sense to optimize ...

... and check that the optimization has brought results. In this example, a generic piece was optimized, which is included in all other templates.

What happens on the collectors, we now understand quite well, moving on.

Internal balancer

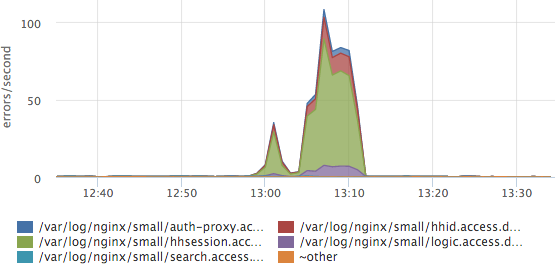

In case of breakdowns, as a rule, the question arises: if a specific service broke down (if yes, which one) or all at once (for example, if there are problems with databases, other storages, a network). Each service is behind the balancers with nginx, the logs for each service are written in a separate access log.

A simple schedule of top-5 services by the number of errors greatly simplified our lives:

Here you can see that the most errors occur in the session service log. If we have a new service / log, then it will be distributed automatically and the new data will be immediately taken into account in this top. We also have histograms of response time for all services, but more often we look at this graph.

Services

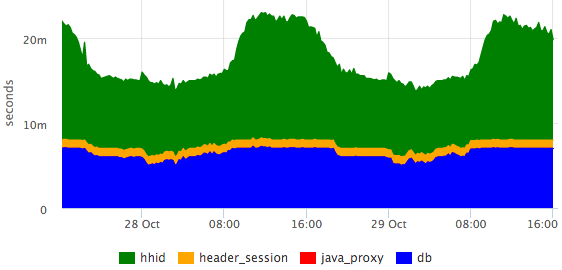

In the service logs we also have the request processing stages:

2015-10-21 10:47:10.532 [Grizzly-worker(14)] INFO r.hh.health.monitoring.TimingsLogger: response: 200; context: GET /hh-session/full; total time 10 ms; jersey#beforeHandle=+0; HHid#BeforeClient=+0; HHid#Client=+6; DB#getHhSession=+3; pbMappers=+0; makeHeaderSession=+1; currentSessionInBothBodyAndHeader=+0; jersey#afterHandle=+0; Visualize:

It can be seen that the database is responsible for the constant time, and the hhid service at attendance peaks slows down a bit.

Database

All the major databases we are working on postgresql. We outsource database administration to postgresql-consulting, but we ourselves also need to understand what is happening with the database, since it is not advisable to pull the DBA for all the problems.

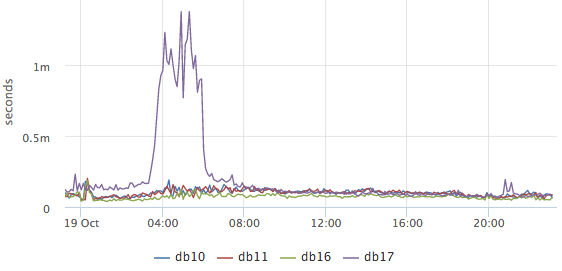

The main question that worried us is: is everything OK now with the database? Based on pg_stat_statements, we draw a graph of the average query execution time:

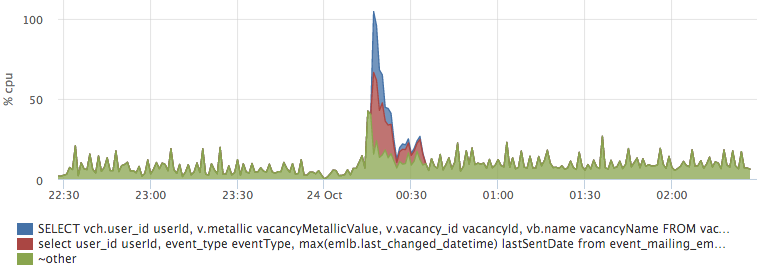

According to it, it is clear whether something unusual is happening in the database now or not (unfortunately nothing can be done on the available data except the average). Then we want to know what the base is doing at the moment, while we are primarily interested in what requests load CPU and disks:

These are top-2 requests for CPU usage (we remove top50 + other). Here you can clearly see the release of a specific query to the hh_tests database. If necessary, it can be completely copied and viewed.

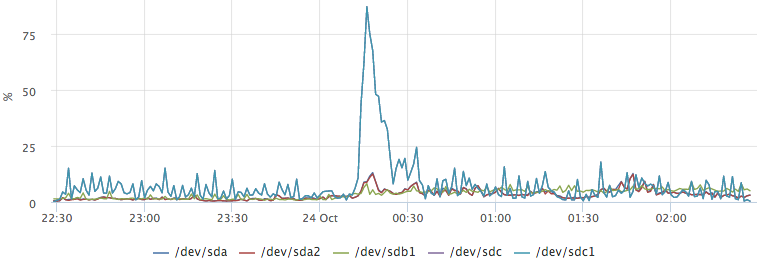

Postgresql also has statistics about how many requests are waiting for disk operations. For example, we want to know what caused this outlier on / dev / sdc1:

Having built a graph of top requests for io, we easily get an answer to our question:

Network

The network is a rather unstable environment. Often, when we do not understand what is happening, we can write off the brakes of services to problems with the network. For example:

- hhsession waited for a response from hhid 150ms

- the hhid service thinks that it responded to this request in 5ms (we all requests have the identifier $ request_id, we can recognize specific requests from the logs)

- between them is only a network

You do not have ping results for yesterday between these hosts. How to exclude a network?

TCP RTT

TCP Round Trip Time - the time from sending a packet to receiving an ACK. A monitoring agent on each server removes rtt for all current connections. For ip from our network, we aggregate the times separately, we get something like these metrics:

{ "name": "netstat.connections.inbound.rtt", "plugin": "netstat", "source_hostname": "db17", "listen_ip": "0.0.0.0", "listen_port": "6503", "remote_ip: "192.168.1.48", "percentile": "95", } Based on these metrics, you can look backwards at how the network worked between any two hosts on the network.

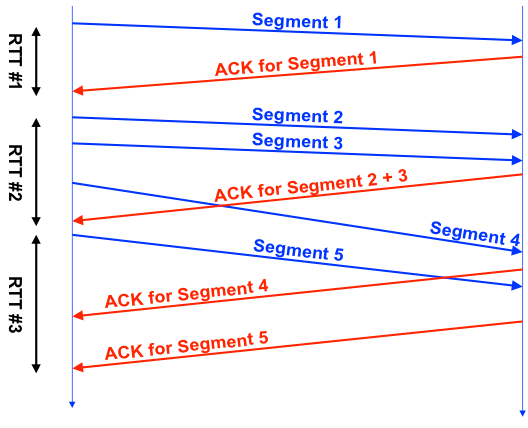

But TCP RTT values don't even closely match ICMP RTT (what ping shows). The fact is that TCP is quite complex, it uses many different optimizations (SACK, etc.), well illustrated by the picture from this presentation :

Each TCP connection has one RTT counter, it is clear that RTT # 1 is more or less honest, in the second case we sent 3 segments during the measurement, in the third case we sent 1 segment, we received several ACKs.

In practice, TCP RTT is fairly stable between the selected hosts.

This is a TCP RTT between the master database and the replica in another data center. You can clearly see how the connection disappeared while working on the channel, how the network was slowed down after recovery. At the same time TCP RTT ~ 7ms with ping ~ 0.8ms.

OS metrics

We, like everyone, are looking at CPU, memory, disk usage, disk IO utilization, traffic on network interfaces, packet rate, swap usage. Separately, we need to mention swap i / o - a very useful metric, which allows us to understand whether swap was actually used or if it just contains unused pages.

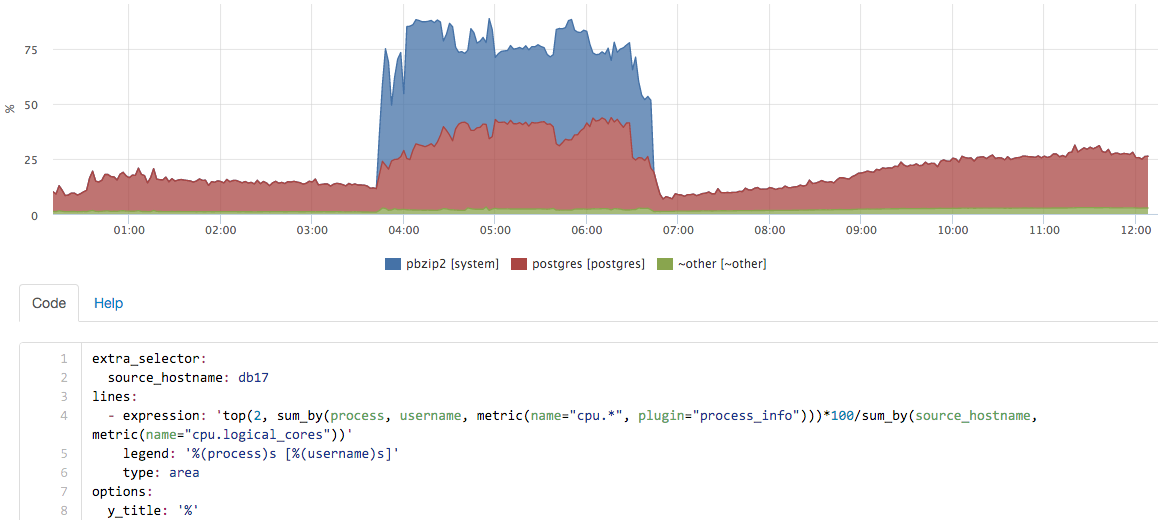

But these metrics are not enough if you want to retroactively understand what was happening on the server. For example, look at the CPU graph on the database master server:

What was it?

To answer such questions, we have metrics for all running processes:

- CPU per process + user

- Memory per process + user

- Disk I / O per process + user

- Swap per process + user

- Swap I / O per process + user

- FD usage per process + user

Around this interface, we can “play” with metrics in real time:

In this case, we run a backup on the server, almost never used pg_dump, but the contribution of pbzip2 is clearly visible.

We summarize

By monitoring you can understand a lot of different tools. For example, you can use an external service to check the availability of the main page once a minute, so you can learn about the main failures. This was not enough for us, we wanted to learn to see a complete picture of the quality of the site’s work. With the current decision, we see all the meaningful variations from the usual picture.

The second part of the task is to reduce the duration of incidents. Here, the carpet carpet helped us a lot by monitoring all the components. Separately, we had to be confused with the simple visualization of a large number of metrics, but after we made good dashboards, life became much simpler.

-

The article was written based on my report on Highload ++ 2015, slides are available here .

I can not fail to mention the monitoring service okmeter.io , which we have been using for more than a year.

Source: https://habr.com/ru/post/272263/

All Articles