Following up on the news: an ultrafast, energy-efficient optical coprocessor for big data

Last week, Phys.org broke the news: the startup LightOn has proposed an alternative to central processing units (CPUs) and graphics processing units (GPUs) for big data analysis. The team is based at Pierre and Marie Curie University, the Sorbonne, and all the other right places in France. The solution relies on optical analog data processing "at the speed of light." Sounds interesting. Since the press release contained no scientific or technical details, I had to dig through patent databases and university websites. The results of the investigation are below the cut.

The LightOn solution builds on a relatively new class of algorithms that use random projections of data. Mixing data in a reproducible and controlled manner makes it possible to extract useful information, for example for classification or compressive sensing. When working with large data volumes, the limiting factor is the computation of the random projections. LightOn has developed an optical scheme for computing random projections and is now receiving funding to develop a coprocessor that can do all the hard work of extracting essential features from raw data in streaming mode with minimal energy consumption.

This would make it possible, for example, to replace graphics processors for video and audio processing in mobile devices. The optical coprocessor consumes only a few watts and can therefore run continuously, for example to recognize the magic phrase “OK GOOGLE” without any extra action from the user. In big data, the optical coprocessor will help cope with exponentially growing volumes of information in areas such as the Internet of Things, genome research, and video recognition.


How does it work?

The authors' article considers a ridge regression problem with a simple kernel function, in which epsilon denotes elliptic integrals.

Despite its intimidating look, this function is bell-shaped; it describes a measure of similarity between feature vectors and was obtained experimentally from an analysis of the optical system.

Ridge regression is one of the simplest linear classifiers. Its closed-form solution depends on the matrix of inner products of the feature vectors, $XX^T$, of size n × n:

$$Y' = X' X^T \left(X X^T + \lambda I\right)^{-1} Y$$

Here $X$ is the matrix of training features, $Y$ is the matrix of class labels for the training data, $X'$ is the matrix of test features, $Y'$ is the sought classification matrix for the test data, $I$ is the identity matrix, and $\lambda$ is the regularization coefficient.
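The closed-form solution above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: the function name `ridge_predict`, the synthetic two-class data, and the value of `lam` are all assumptions made for the example.

```python
import numpy as np

def ridge_predict(X, Y, X_test, lam):
    """Dual-form ridge regression: Y' = X' X^T (X X^T + lam*I)^-1 Y."""
    n = X.shape[0]
    gram = X @ X.T                      # n x n matrix of inner products
    # Solve (X X^T + lam*I) A = Y instead of forming the inverse explicitly.
    alpha = np.linalg.solve(gram + lam * np.eye(n), Y)
    return X_test @ X.T @ alpha

# Synthetic toy data (illustration only): two Gaussian blobs, one-hot labels.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 1.0, (50, 5)), rng.normal(2.0, 1.0, (50, 5))])
Y = np.eye(2)[np.repeat([0, 1], 50)]
X_test = np.vstack([rng.normal(-2.0, 1.0, (20, 5)), rng.normal(2.0, 1.0, (20, 5))])
test_labels = np.repeat([0, 1], 20)

scores = ridge_predict(X, Y, X_test, lam=1.0)   # one column of scores per class
pred = scores.argmax(axis=1)                    # predicted class = largest score
accuracy = (pred == test_labels).mean()
```

The only expensive step is the n × n linear solve, which is exactly the part that stops scaling as the number of training samples grows.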

For example, the authors trained a linear ridge regression classifier on MNIST with the standard split of n = 60,000 training and 10,000 test samples; the classification error was 12%. This required inverting a 60,000 × 60,000 matrix. When working with big data, of course, the number of samples can reach billions, and inverting (or even just storing) matrices of that size is infeasible.

We now replace $X$ with a nonlinear mapping of random projections of the original features into a space of dimension $N < n$:

$$K_{ij} = \varphi\big((W X_i)_j + b_j\big), \quad i = 1,\dots,n, \quad j = 1,\dots,N$$

Here $W$ is a matrix of random weights, $b$ is a bias vector, and $\varphi$ is a nonlinear function. Then, mapping the test features $X'$ to $K'$ in the same way, we get

$$Y' = K' K^T \left(K K^T + \lambda I\right)^{-1} Y$$

When training the MNIST classifier, the authors took N = 10,000 random projections; $W$ contained random complex weights with Gaussian-distributed real and imaginary parts, and the modulus served as the nonlinear function. The classification error was 2% (versus 12% for the linear classifier), and the matrix to be inverted was 10,000 × 10,000, a size that does not depend on n. Using the elliptic kernel function reduces the error to 1.3%. Of course, more advanced classifiers such as convolutional neural networks achieve higher accuracy on MNIST, but neural networks are not always an option in big data problems or mobile applications.

The mathematical machinery described above requires storing a potentially huge random matrix, multiplying the original features by it, and applying a nonlinear function to the result. The authors built an experimental setup that performs these computations in analog form "at the speed of light."
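The random-feature construction can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the sizes, the zero bias, the synthetic labels, and the helper name `random_features` are all hypothetical. The sketch also checks one plausible reading of the N × N claim: the formula as written inverts an n × n matrix, while the algebraically equivalent form $K'(K^TK + \lambda I)^{-1}K^TY$ inverts an N × N one.

```python
import numpy as np

def random_features(X, W, b):
    """K_ij = phi((W X_i)_j + b_j), with phi = modulus of a complex number."""
    return np.abs(X @ W.T + b)

rng = np.random.default_rng(0)
n, d, N = 200, 20, 50    # toy sizes; the text uses n = 60,000 and N = 10,000
lam = 1.0                # regularization coefficient (arbitrary choice)

X = rng.normal(size=(n, d))
# Synthetic labels that are not linearly separable in X: inside/outside a sphere.
Y = np.eye(2)[(np.linalg.norm(X, axis=1) > np.sqrt(d)).astype(int)]
X_test = rng.normal(size=(30, d))

# Complex weights with Gaussian real and imaginary parts, as in the text.
W = rng.normal(size=(N, d)) + 1j * rng.normal(size=(N, d))
b = np.zeros(N)          # zero bias is an assumption of this sketch

K = random_features(X, W, b)             # shape (n, N)
K_test = random_features(X_test, W, b)   # shape (30, N)

# Form written in the text: solves an n x n system.
Y_dual = K_test @ K.T @ np.linalg.solve(K @ K.T + lam * np.eye(n), Y)
# Equivalent form: solves an N x N system, independent of n.
Y_primal = K_test @ np.linalg.solve(K.T @ K + lam * np.eye(N), K.T @ Y)

max_diff = np.abs(Y_dual - Y_primal).max()
```

Both forms give the same predictions up to rounding, so with N fixed the cost of the solve stays the same however many training samples are added.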

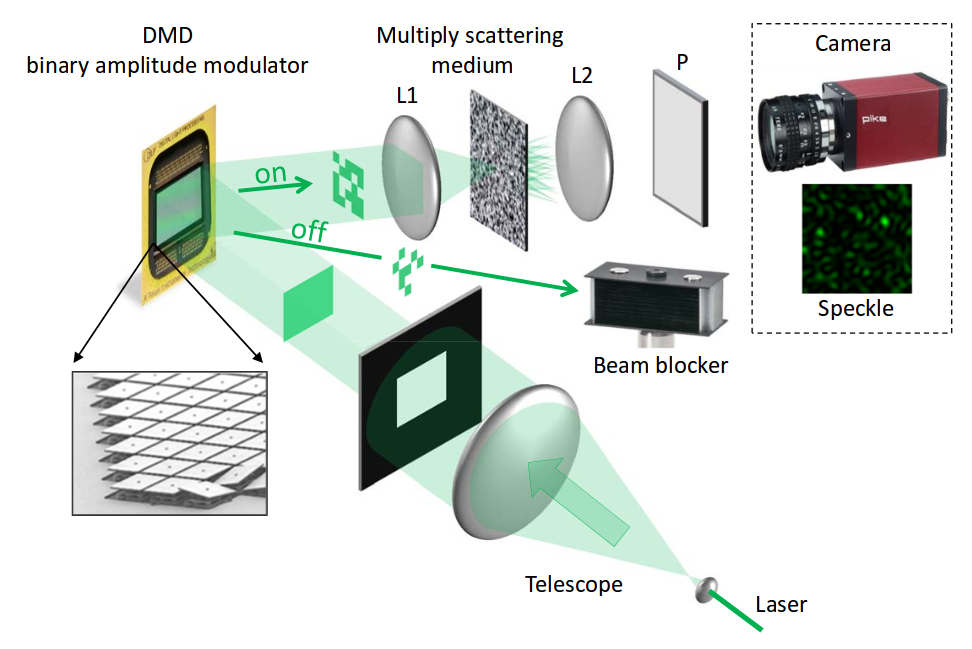

A monochromatic 532 nm laser beam is expanded with a “telescope” so that a square beam illuminates a digital micromirror device (DMD). The DMD spatially encodes the light beam with the input data, that is, the features. For example, for MNIST handwritten digit recognition, a commercially available 1920 × 1080 DMD encodes the 28 × 28 pixel grayscale MNIST images so that each MNIST pixel is assigned a 4 × 4 segment of micromirrors, i.e. 16 brightness levels.

Next, the light beam carrying the amplitude-modulated input image is directed onto a material that plays the role of the random projection weight matrix. This is a thick layer (tens of microns) of titanium dioxide nanoparticles, that is, white pigment. The huge number of opaque particles scrambles the light beam so thoroughly that its properties can be considered completely random, yet at the same time deterministic and repeatable (i.e., the matrix $W$ does not change).

Because the light comes from a laser, it is coherent and produces an interference pattern on the sensor of an ordinary video camera. This interference pattern is a set of high-dimensional random projections (on the order of $10^4$ to $10^6$) and can therefore be used to build a linear classifier, for example an SVM. Thanks to the high dimensionality and nonlinearity, the data are more likely to be linearly separable, and linear classifiers built on such interference patterns can reach accuracy comparable to that of neural networks. The computation speed and energy cost, however, are in a different league. Throughput is limited only by the switching rate of the DMD micromirrors and by the read-out of the interference pattern from the camera. Modern arrays reach rates of 20 kHz. Implementing such an optical system on a single chip would make it possible to build a truly universal coprocessor for efficiently extracting features from all kinds of real-world data.
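The 4 × 4 micromirror encoding can be illustrated with a short sketch. It assumes binary (on/off) mirrors and a simple fill order inside each patch; the function name `encode_for_dmd` and the synthetic test image are hypothetical, and the actual mapping used in the experiment may differ. Note that counting mirrors from 0 to 16 inclusive gives 17 possible values, whereas the text quotes 16 levels.

```python
import numpy as np

def encode_for_dmd(image, block=4):
    """Turn a grayscale image (values in [0, 1]) into a binary mirror pattern.

    Each pixel becomes a block x block patch in which the number of "on"
    mirrors is proportional to the pixel brightness.
    """
    levels = block * block
    # Number of mirrors switched on for each pixel, from 0 to levels inclusive.
    on_counts = np.rint(np.clip(image, 0.0, 1.0) * levels).astype(int)
    # Mirror k of a patch is on when k < on_count (fill order is an assumption).
    order = np.arange(levels).reshape(block, block)
    patches = order[None, None, :, :] < on_counts[:, :, None, None]
    h, w = image.shape
    # Rearrange (h, w, block, block) into one (h*block, w*block) mirror array.
    return patches.transpose(0, 2, 1, 3).reshape(h * block, w * block)

# Synthetic stand-in for a 28 x 28 MNIST digit: a brightness ramp from 0 to 1.
image = np.linspace(0.0, 1.0, 28 * 28).reshape(28, 28)
pattern = encode_for_dmd(image)   # boolean array of shape (112, 112)
```

A 28 × 28 image encoded this way occupies only 112 × 112 mirrors, a small corner of a 1920 × 1080 device.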
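To get a feel for the numbers, here is a back-of-envelope estimate of the multiply-accumulate rate a digital processor would need to match the optics. It is only a sketch under assumptions: the camera is taken to keep up with the DMD, each random projection is counted as one multiply-accumulate per input value, and the function name is hypothetical.

```python
def equivalent_macs_per_second(frame_rate_hz, input_dim, output_dim):
    """Multiply-accumulates per second needed to compute W @ x digitally."""
    return frame_rate_hz * input_dim * output_dim

# 20 kHz frame rate from the text; 784 inputs = 28 x 28 MNIST pixels;
# 10**4 outputs = the lower end of the projection count quoted in the text.
rate = equivalent_macs_per_second(20_000, 28 * 28, 10**4)
print(f"{rate:.3e} MAC/s")   # prints 1.568e+11 MAC/s
```

Larger inputs or more projections scale this figure linearly, while the optical setup's frame rate stays the same.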

The authors have patented the optical scheme for compressive sensing (publication WO2013068783) and possibly for classification as well (that patent application has not been published yet). In any case, I hope the technique described here will spark new ideas among Habr readers.

If you would like to work on things like this, I invite you to an internship or a permanent position at NIKFI, the Research and Development Film and Photo Institute.

Source: https://habr.com/ru/post/272255/
