Accidents at server farms in Azerbaijan and the UK

Data center downtime is extremely expensive: an outage of just a few seconds can cause serious financial and reputational losses. Two recent accidents proved this once again. Two large server farms were hit, one in the UK and the other in Azerbaijan.

Almost the entire population of Azerbaijan lost access to the Internet

A fire broke out in one of Delta Telecom's data centers. Downtime lasted eight hours. After the incident, Internet services were reachable only through the channels of the local mobile operators Bakcell and Azerfon.

The outage was caused by a fire at Delta Telecom's server farm in Baku. According to the company's official statement, several cables in its old data center caught fire. Firefighters and emergency services took part in extinguishing it. The incident practically paralyzed the banks: no transactions were processed, and ATMs and payment terminals stopped working. Mobile service was unavailable in many regions.

The shutdown occurred on November 16 at 16:00 local time. It was the first Internet outage of this scale in Azerbaijan. It took 5 hours to eliminate the consequences, and user service was restored only close to midnight local time.

According to Renesys, a company that tracks Internet connectivity, 78% of Azerbaijani networks went down, which is more than 600 networks. These networks relied on the key connection between Delta Telecom and Telecom Italia Sparkle. Renesys experts argue that Azerbaijan is among the countries at high risk of being cut off from the Internet, because few networks connect the country to external traffic exchange points. A similar situation is typical of many states in the region, such as Iran, Georgia, Armenia and Saudi Arabia.

That said, in recent years Azerbaijan has been actively developing its telecommunications infrastructure using revenues from oil and gas sales, and it is also taking part in the creation of the Trans-Eurasian Information Highway (TASIM).

Telecity colocation data center and UPS problems

According to a number of studies, 65 to 85% of unplanned data center downtime is caused by UPS malfunctions. That is why periodic monitoring of this part of the data center infrastructure, along with timely maintenance and battery replacement, deserves special attention.

Perhaps the engineers at the European colocation provider Telecity Group do not pay enough attention to their uninterruptible power supplies. Almost two weeks ago, the company twice “upset” the clients renting space in the computer halls of its commercial data center in London. Two power outages in quick succession at the Sovereign House data center angered many tenants, including the London Internet Exchange and AWS Direct Connect (a service that lets third-party companies connect to the Amazon cloud over private network connections).

The utility company was to blame. The problems at the data center, which is located in the Docklands area east of central London and handles approximately 10% of UK Internet traffic, began with a failure of the utility grid. After this first failure, the data center infrastructure could not switch to the backup generators automatically. Power was later restored for a while, and on Wednesday morning repairs to the UPS system began. Then the power went out again, and once more the infrastructure did not switch to the diesel generators automatically. The problems did not go unnoticed by British businesses and ordinary users, who complained about disruptions to VoIP services, web hosting and the AWS platform.

About accidents in server farms

Many mid-level specialists are willing to talk off the record, but data center management, as a rule, imposes the strictest ban on discussing what happened.

A data center that has been on the market for three to five years without a single accident is most likely unique. Accidents happen everywhere; the only difference is in the consequences. In the Western market, a server farm manager who has lived through an accident becomes more valuable, because he already has experience in overcoming difficulties and will be more cautious and more motivated to prevent the next one. In our market, managers are most often ready to hold out to the last and keep incidents from becoming public, even when the consequences are severe and the service disruption cannot be hidden from customers. It turns out that some international organizations do collect databases of data center incidents. However, access to them is available only through membership in closed clubs, and even there members are not particularly willing to share this invaluable information.

An analysis of the main causes of accidents at server farms shows two leading types: human error and equipment component failure. Even a project with high reliability requirements, built with redundant equipment and engineering systems, is not immune to an accident caused by human error at the design stage or during operation with failed equipment. Even the slightest mistake, a brief stoppage or an accident can cost a company billions of dollars. For this reason, many companies that respect themselves and their customers commission an independent engineering review of the documentation before construction begins, in order to identify critical points of failure and work out ways to eliminate them early. There is also a stage of comprehensive pre-operational testing.

The causes of accidents, taken from the example of an existing data center, were presented by I. Schwartz, Head of the System Integration Department of the Trinity Group of Companies, in the article “Data Center Infrastructure Security” (Security Algorithm magazine, No. 3, 2015).

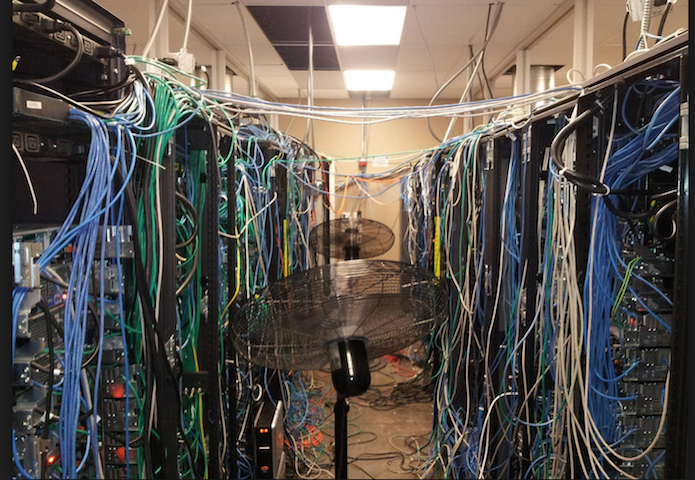

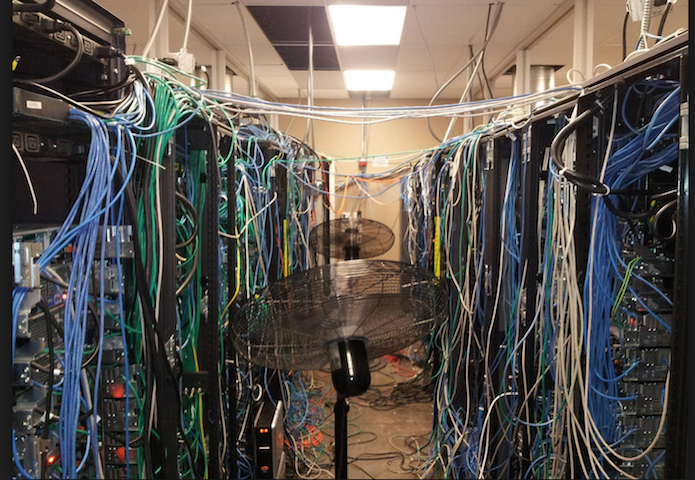

In 80% of cases I hear complaints that servers are hard to cool, that something is overheating, or that something has gone wrong with the power supply. Here is one such case:

The data center of a restricted-access enterprise has a capacity of more than 1 MW and houses a computing cluster; the project cost more than $10 million. It uses in-row cooling, and the components of the power supply, cooling and gas fire suppression systems are redundant, with N+1 and 2N reliability levels. Trinity was invited as an independent expert to analyze the causes of an accident at this data center.

The visible nature of the equipment damage (significant deformation of plastic parts, boiling and swelling of battery cells) indicates exposure to elevated temperature for a long time, from tens of hours to several days.

Appearance of damage

Given how long the equipment was exposed to heat, the unambiguous conclusion is that the complex kept working actively while the cooling subsystem was stopped. Analysis of the logs of the UPS, the in-row air conditioners, the chillers and the external power stabilizer established the following: before and during the accident there were no interruptions in the external power supply, and there were no power interruptions on the clean lines (those fed by the UPS), despite the battery pack being disconnected and numerous transfers to bypass power (without stabilization). When the air temperature rose above 50 °C, the valve pressure threshold was exceeded and the automatic gas fire suppression system discharged the extinguishing agent from its cylinders, which left the suppression system inoperable while the temperature kept rising.

As it turned out, the accident was preceded by 20 hours of simultaneous operation of the two chillers; in normal mode, such overlap lasts no more than 25 seconds, during chiller rotation. The prolonged simultaneous operation of the two outdoor cooling units overcooled the coolant, and as a result both units shut down with the error “Freeze threat protection” and stopped the main circulation pumps. The additional circulation pump located in the machine room cannot circulate the coolant on its own.
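The 25-second rotation overlap gives a natural alarm condition: two chillers running together for much longer than that is abnormal. The following is a minimal sketch of such a check over logged samples. The `ChillerSample` record, the function names and the 2x safety margin are assumptions made for illustration; they are not taken from the audited system.

```python
from dataclasses import dataclass
from typing import Iterable

# The 25 s figure is the normal rotation overlap quoted in the text.
# The 2x margin before raising an alarm is an illustrative assumption.
ROTATION_OVERLAP_S = 25.0
ALARM_AFTER_S = 2 * ROTATION_OVERLAP_S


@dataclass
class ChillerSample:
    """One hypothetical log record: timestamp and run state of each chiller."""
    t: float            # seconds since the start of the log
    chiller1_on: bool
    chiller2_on: bool


def longest_overlap(samples: Iterable[ChillerSample]) -> float:
    """Return the longest continuous span (in seconds) with both chillers on."""
    longest = 0.0
    start = None  # timestamp at which the current overlap began
    for s in samples:
        if s.chiller1_on and s.chiller2_on:
            if start is None:
                start = s.t
            longest = max(longest, s.t - start)
        else:
            start = None
    return longest


def overlap_alarm(samples: Iterable[ChillerSample]) -> bool:
    """True when the overlap exceeds the allowed rotation window."""
    return longest_overlap(samples) > ALARM_AFTER_S
```

Fed a log in which both chillers stay on for 20 hours, `overlap_alarm` returns `True` long before the coolant could be overcooled, provided the result reaches someone who can act on it.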

The lack of circulation caused an emergency stop of the in-row air conditioners and, as a consequence, a sharp rise in temperature in the hot aisle. A study of all available logging systems established that the root cause of the accident was a problem in the power automation panel. The second chiller started and ran at the same time as the first because chiller No. 1 was operating incorrectly, which in turn was due to the loss of the first phase in its power supply.
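A lost phase of this kind is simple to detect in principle. The sketch below shows the logic only: it flags any phase whose voltage falls below a threshold and blocks the chiller from running. The 230 V nominal voltage, the 85% threshold and the function names are assumptions for illustration, not values from the incident report.

```python
# Assumed values for illustration only.
NOMINAL_V = 230.0      # nominal phase-to-neutral voltage
MIN_FRACTION = 0.85    # a phase below 85% of nominal counts as lost


def lost_phases(l1, l2, l3, nominal=NOMINAL_V, min_fraction=MIN_FRACTION):
    """Return the names of the phases whose voltage is below the threshold."""
    limit = nominal * min_fraction
    readings = {"L1": l1, "L2": l2, "L3": l3}
    return [name for name, volts in readings.items() if volts < limit]


def chiller_may_run(l1, l2, l3):
    """Allow the chiller to run only when all three phases are present."""
    return not lost_phases(l1, l2, l3)
```

With readings of 0 V, 231 V and 229 V, `lost_phases` reports `["L1"]` and `chiller_may_run` returns `False`, so the unit would be stopped and flagged instead of being left to misbehave.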

The reasons that allowed events to develop further, and for so long, were:

1) The design specification contained no requirements for the monitoring and warning system that took the facility's security regime into account. The “Automatic Shutdown and Alert System (SAOO)” was designed to work with the operator on duty, alerting through two channels: SMS (text alerts over public GSM networks) and email (alerts over the public Internet). Neither channel was connected, because of the facility's security regime.

2) During commissioning, the SAOO was not switched to automatic operation, even though no accident notification channels were available.

3) The “accident” signal line between the NetBotz hardware monitoring system and the UPS, normally provided by the manufacturer (APC), was disconnected.

4) No additional circuit for monitoring environmental parameters, with its alarm signal routed to the guard post, was designed or installed.

5) The accident was detected only when the volumetric motion sensors of the security alarm system, which are routed to the guard post, registered the fall of melted blanking panels and cabinet side walls.
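Points 1 and 2 come down to one missing rule: if no alert channel can reach an operator, the system must act on its own. A minimal sketch of that rule follows. The `Mode` enum, the function names and the channel dictionary are hypothetical and do not describe the real SAOO interface.

```python
from enum import Enum


class Mode(Enum):
    NOTIFY_OPERATOR = "notify_operator"
    AUTOMATIC_SHUTDOWN = "automatic_shutdown"


def select_mode(channels):
    """Pick the operating mode.

    channels maps a channel name to True if it is connected and tested.
    With no working channel, nobody can be alerted, so fall back to
    automatic shutdown.
    """
    if any(channels.values()):
        return Mode.NOTIFY_OPERATOR
    return Mode.AUTOMATIC_SHUTDOWN


def on_over_temperature(temp_c, limit_c, channels):
    """Decide what to do for one temperature reading."""
    if temp_c <= limit_c:
        return "ok"
    if select_mode(channels) is Mode.NOTIFY_OPERATOR:
        active = sorted(name for name, up in channels.items() if up)
        return "alert via " + ", ".join(active)
    return "automatic shutdown"
```

In the situation described above, where both SMS and email were disconnected, `on_over_temperature(55, 35, {"sms": False, "email": False})` yields `"automatic shutdown"` rather than an alert that nobody receives.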

The conclusion from this case applies to the construction of any server room. The design specification should state the requirements for monitoring environmental parameters and power supply, for routing the alarm to the security post, for the communication channels used for alerting, and for keeping the monitoring circuit for the main critical parameters independent of the health of the LAN, servers, PBX and other monitored equipment. The project should include a detailed test program for the commissioning stage that covers as many combinations of abnormal events as possible. The as-built documentation should contain instructions for emergency situations. Operators should be trained. Three-phase equipment should be powered through phase control relays.

There are also errors from the “I can't believe my eyes” category:

Simple ignorance or inattention of personnel: two power supplies or switchgear units connected to the same power line instead of two independent lines; a server installed in the rack backwards, so that its fans draw air from the hot aisle rather than the cold one; an emergency power-off button without proper labeling and protection, pressed by a new employee who thought it just turned off the lights... These errors might raise a smile if they were not so expensive and time-consuming.
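The first of these mistakes can be caught by a simple audit of the cabling inventory. The sketch below assumes a hypothetical inventory that maps each device to the power feed behind each of its power supplies; the device names, feed labels and function name are invented for the example.

```python
def single_feed_devices(inventory):
    """Return devices whose power supplies do not span two independent feeds.

    inventory maps a device name to a list of feed names,
    one entry per power supply.
    """
    return sorted(
        name for name, feeds in inventory.items()
        if len(set(feeds)) < 2
    )


# Hypothetical inventory: srv-02 has both supplies on feed A,
# and sw-01 has only one supply.
inventory = {
    "srv-01": ["A", "B"],
    "srv-02": ["A", "A"],
    "sw-01": ["B"],
}
at_risk = single_feed_devices(inventory)
```

Here `at_risk` lists `srv-02` and `sw-01`, the two devices that would go dark if a single feed failed.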

Source: https://habr.com/ru/post/272131/