Inconvenient questions about the RDMA architecture

We have accumulated a body of material from studying the architecture of Remote Direct Memory Access (RDMA). While gathering it, a number of points became clearer, but the mechanisms behind some implementations still exist only as assumptions. Unfortunately, the whole subject of remote direct memory access tends to be reduced to a simplified model: the elimination of unnecessary data copies. In reality, RDMA is an entity that brings a new quality to cross-platform interaction, founded on such key concepts as InfiniBand and NUMA. (Hereinafter, by RDMA we mean RoCE, one of the current zero-copy implementations of direct memory access, adapted to ordinary Ethernet transport.)

To understand where RDMA fits among the current problems of high-performance computing (and the related prospects for server design), we would like answers to some questions that have remained "behind the scenes" of the available documentation. (We mean, of course, the texts produced by the leading manufacturers of RNIC controllers: Broadcom, Intel, and Mellanox.)

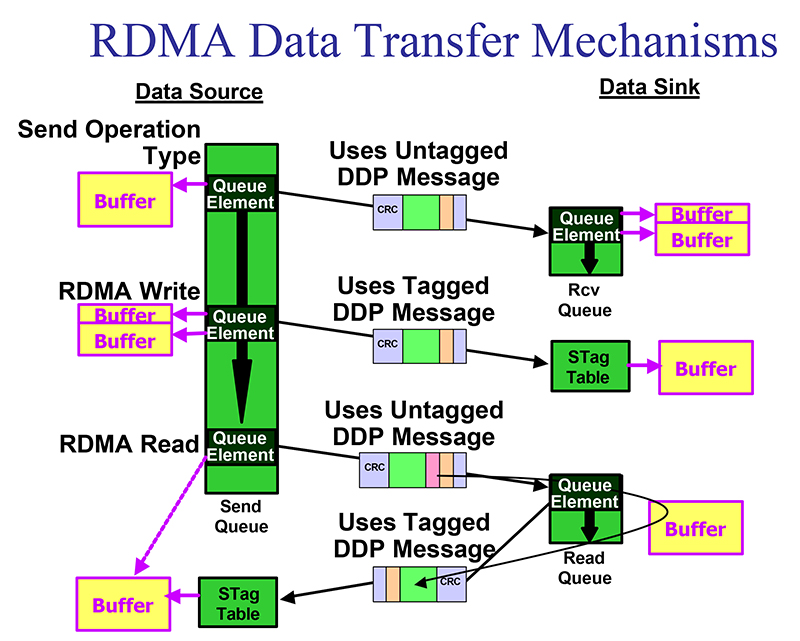

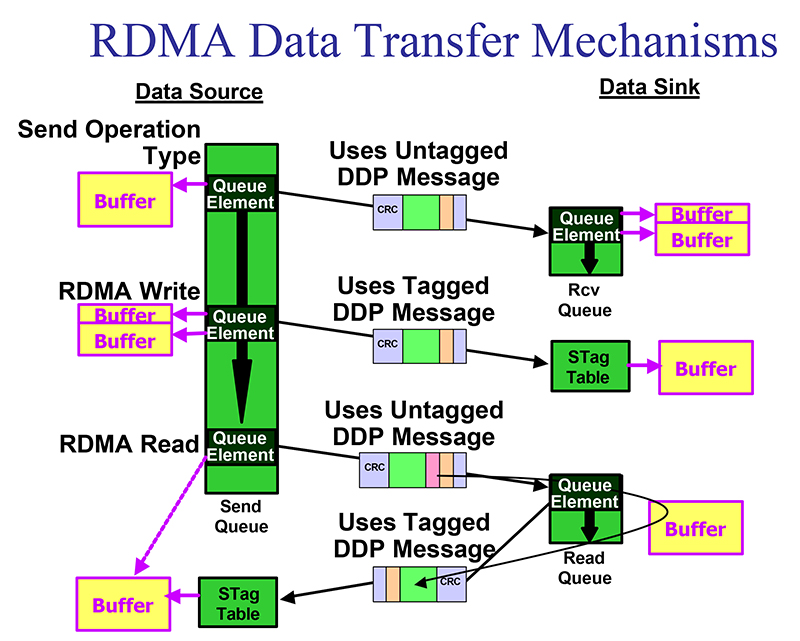

Operation classification and performance

At present, two things remain unclear. The most important of these is the memory access model. As is known, exchange over the network can be carried out using tagged requests (Read and Write operations) and untagged requests (Send operations). How good is each model in terms of performance? And can the user, if not control the choice, then at least analyze the statistics of RDMA transactions broken down by this classification?

Open sources make it reasonable to assume that tagged requests give the initiator control over memory addressing on the remote platform. With their help, a single address space is formed from the physically separate local memories that make up a cluster. Untagged requests appear to be oriented toward more traditional block data transfers.
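The difference between the two request types can be sketched as a toy model. This is only an illustration under stated assumptions: the class `ToyTarget` and all of its methods are hypothetical names invented here, not part of any verbs library, and the model ignores the network, permissions, and completions. It shows one thing: a tagged request names the remote region and offset itself, while an untagged request lands wherever the target has posted a receive buffer.

```python
from collections import deque


class ToyTarget:
    """Hypothetical model of the responder side; not a real RDMA API."""

    def __init__(self):
        self.regions = {}          # key -> bytearray (registered memory)
        self.recv_queue = deque()  # buffers posted in advance by the target

    def register(self, rkey, size):
        """Expose a memory region under a key the initiator may use."""
        self.regions[rkey] = bytearray(size)

    def post_recv(self, size):
        """Post a receive buffer for a future untagged message."""
        self.recv_queue.append(bytearray(size))

    def _check(self, rkey, offset, length):
        region = self.regions[rkey]  # KeyError if the key is unknown
        if offset < 0 or offset + length > len(region):
            raise ValueError("access outside the registered region")
        return region

    # Tagged: the initiator chooses the region and the offset.
    def rdma_write(self, rkey, offset, data):
        region = self._check(rkey, offset, len(data))
        region[offset:offset + len(data)] = data

    def rdma_read(self, rkey, offset, length):
        region = self._check(rkey, offset, length)
        return bytes(region[offset:offset + length])

    # Untagged: the target decides where the data lands.
    def send(self, data):
        if not self.recv_queue:
            raise RuntimeError("receiver not ready: no posted receive buffer")
        if len(data) > len(self.recv_queue[0]):
            raise ValueError("message larger than the posted buffer")
        buf = self.recv_queue.popleft()
        buf[:len(data)] = data
        return bytes(buf[:len(data)])
```

In this model a Send fails unless the target has prepared for it, whereas a Write or Read needs no per-message cooperation from the target once the region is registered. That asymmetry is what makes the performance question above worth asking.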

This is not idle curiosity: the results of a series of experiments indicate that the advantages and capabilities of the "black RDMA box" are, as expected, not endless. Massive data transfers become an unbearable burden both for the advanced technology and for the ever-living classics. See the Mellanox training video (note the fragment that begins at 29:04).

Here deep understanding and fine tuning, the key to efficiency, come to the fore. In such a situation it is pertinent to ask whether performance criteria conflict with compatibility requirements. If they do, then using RDMA effectively will require a more radical redesign of software, from low-level drivers to user applications.
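As for analyzing transaction statistics, one possible starting point on Linux is the set of per-port counters that the RDMA subsystem exposes in sysfs under `/sys/class/infiniband/<device>/ports/<port>/`, in the `counters` and `hw_counters` subdirectories. The sketch below is a minimal example under stated assumptions: the helper names `read_counters` and `delta` are hypothetical, and the code assumes nothing about which counters exist, because the contents of `hw_counters` are vendor-specific. Whether a given RNIC reports Read, Write, and Send operations separately has to be checked on the actual hardware.

```python
from pathlib import Path


def read_counters(port_dir):
    """Return {"<subdir>/<name>": int} for one port directory.

    port_dir is expected to look like
    /sys/class/infiniband/<device>/ports/<port> on Linux.
    """
    result = {}
    for sub in ("counters", "hw_counters"):
        directory = Path(port_dir) / sub
        if not directory.is_dir():
            continue  # this driver does not provide the subdirectory
        for entry in sorted(directory.iterdir()):
            try:
                result[f"{sub}/{entry.name}"] = int(entry.read_text().strip())
            except (OSError, ValueError):
                continue  # unreadable or non-numeric entry: skip it
    return result


def delta(before, after):
    """Per-counter growth between two snapshots taken around a workload."""
    return {name: after[name] - before[name] for name in after if name in before}
```

A typical use would be to take one snapshot before a test run and one after it, then inspect `delta(before, after)` to see which counters moved.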

Bus Master vs DMA Engine

Another sacramental question: what, in fact, performs direct memory access locally? Recall that modern platforms have two models for operations of this kind:

- The decentralized model implies that the RNIC controller, in Bus Master mode, interacts with RAM on its own, performing read and write operations. In this case the DMA controller can be said to be part of the RNIC.

- The centralized model uses the DMA Engine block that is part of the Intel Xeon processor. It is a kind of I/O processor that provides hardware support for moving huge amounts of data quickly, with the elements of intelligent processing needed by a number of devices, such as disk RAID arrays and network controllers (NICs). This block replaced the "ancient" Intel 8237 DMA controller, whose architecture was developed before the ISA bus appeared.

Although the DMA Engine is physically part of the CPU chip, using it frees the core or, more correctly, the cores of the central processor from routine I/O operations.

The architects of the RDMA protocol encapsulated the low-level aspects so effectively that we could not reach an unequivocal conclusion about which method (decentralized or centralized) the developers preferred. Today there are weighty arguments in favor of Bus Master, since the scenario for memory access operations is driven by information arriving over the network. Nevertheless, a tentative answer does exist: it is reasonable to assume that both options are supported and that everything depends on the platform configuration...
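Part of the platform configuration can be inspected directly on Linux. The sketch below is a minimal example under stated assumptions: the function names are hypothetical, and the PCI address in the docstring is only a placeholder. It reads the Command register in a device's PCI configuration space (offset 0x04, where bit 2 is Bus Master Enable) and lists the channels registered with the kernel's dmaengine framework under `/sys/class/dma`. Neither check proves which path RDMA traffic actually takes. A set bit only shows that the device is allowed to master the bus, and a listed channel only shows that a DMA engine is present.

```python
import struct
from pathlib import Path

PCI_COMMAND_OFFSET = 0x04      # 16-bit Command register in PCI config space
PCI_COMMAND_BUS_MASTER = 0x04  # bit 2: Bus Master Enable


def bus_master_enabled(config):
    """True if Bus Master Enable is set in raw config-space bytes."""
    if len(config) < PCI_COMMAND_OFFSET + 2:
        raise ValueError("need at least the first 6 bytes of config space")
    (command,) = struct.unpack_from("<H", config, PCI_COMMAND_OFFSET)
    return bool(command & PCI_COMMAND_BUS_MASTER)


def device_bus_master(bdf, sysfs="/sys/bus/pci/devices"):
    """Check one PCI function; bdf is a placeholder such as '0000:3b:00.0'."""
    config = (Path(sysfs) / bdf / "config").read_bytes()
    return bus_master_enabled(config[:64])


def dma_engine_channels(sysfs="/sys/class/dma"):
    """Names of dmaengine channels registered with the kernel, if any."""
    root = Path(sysfs)
    return sorted(p.name for p in root.iterdir()) if root.is_dir() else []
```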

Source: https://habr.com/ru/post/271393/
