Virtual quadrocopter on Unity + OpenCV (Part 3)

Hello!

Today I would like to continue the series on how to make Unity, C++ and OpenCV work together, and how to build a Unity-based virtual environment for testing computer vision and drone navigation algorithms. In the previous articles I described how to make a virtual quadrocopter in Unity, how to connect a C++ plugin, and how to pass the image from the virtual camera to the plugin and process it with OpenCV. In this article I will explain how to make a stereo pair from two virtual cameras on the quadrocopter and how to get a disparity map, which can be used to estimate the depth of image pixels.

Idea

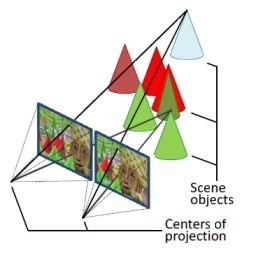

Quite a lot has been written about 3D reconstruction. For example, there is a wonderful article on Habr. I strongly advise you to read it if you are new to the subject. A more rigorous mathematical treatment can be read here. Here I will greatly simplify the basic idea. The technique used to obtain the disparity map is called dense 3D reconstruction. It relies on the fact that two cameras placed side by side with the same orientation see the same scene from slightly different points of view. Such a pair of cameras is called a stereo pair. We will use the usual horizontal stereo pair, that is, cameras shifted relative to each other perpendicular to their viewing direction. If we find the same scene point in the first and second images, that is, two projections of that point, we will see that in general their coordinates do not match: when the images are overlaid, the projections are shifted relative to each other. The magnitude of this shift makes it possible to calculate the depth of the scene point (simplified: points with a larger shift are closer than points with a smaller shift).


image from www.adept.net.au/news/newsletter/201211-nov/article_3D_stereo.shtml
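The "larger shift means closer" rule can be written down for an ideal rectified horizontal stereo pair as Z = f * B / d, where f is the focal length in pixels, B is the distance between the cameras and d is the shift (disparity) in pixels. Below is a minimal sketch of that relation; the function name and the sample numbers are my own illustration, not part of the project code.

```cpp
#include <cassert>
#include <limits>

// Depth of a scene point seen by an ideal rectified horizontal stereo pair.
// focalPx   - focal length in pixels (taken from the camera matrix)
// baseline  - distance between the two camera centres, in any length unit
// disparity - horizontal shift of the point between the images, in pixels
// Returns the depth in the same unit as `baseline`;
// a zero disparity corresponds to a point at infinity.
double depthFromDisparity(double focalPx, double baseline, double disparity)
{
    assert(focalPx > 0.0 && baseline > 0.0 && disparity >= 0.0);
    if (disparity == 0.0)
        return std::numeric_limits<double>::infinity();
    return focalPx * baseline / disparity;
}
```

Doubling the disparity halves the depth, which is why nearby objects are resolved much more finely than distant ones.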

To do this, you first need to calibrate each camera. Then you need to calibrate the stereo pair, remove the distortion and rectify the images, so that the two projections of a scene point lie on the same horizontal line. This is a requirement of the dense 3D reconstruction algorithm from OpenCV, and it speeds up the search for corresponding points. We will use the simplest and fastest algorithm from OpenCV, StereoBM. The description of the API is here. Let's get started.
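To show why rectification speeds up the search, here is a minimal sketch of block matching along a single image row using the sum of absolute differences (SAD). It is only an illustration of the idea under my own assumptions (the `GrayImage` type and function names are hypothetical); OpenCV's StereoBM is far more elaborate.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Grayscale image stored row by row (hypothetical helper type).
struct GrayImage {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;

    std::uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

// Sum of absolute differences between a block centred at (xl, y) in the
// left image and a block centred at (xr, y) in the right image.
long blockSad(const GrayImage& left, const GrayImage& right,
              int xl, int xr, int y, int half)
{
    long sad = 0;
    for (int dy = -half; dy <= half; ++dy)
        for (int dx = -half; dx <= half; ++dx)
            sad += std::abs(int(left.at(xl + dx, y + dy)) -
                            int(right.at(xr + dx, y + dy)));
    return sad;
}

// Best disparity for pixel (x, y) of the left image. Because the images are
// rectified, candidates are taken only from the same row of the right image.
int matchAlongRow(const GrayImage& left, const GrayImage& right,
                  int x, int y, int half, int maxDisparity)
{
    assert(left.width == right.width && left.height == right.height);
    assert(y - half >= 0 && y + half < left.height);
    assert(x - half >= 0 && x + half < left.width);

    int best = 0;
    long bestSad = std::numeric_limits<long>::max();
    for (int d = 0; d <= maxDisparity && x - d - half >= 0; ++d) {
        const long sad = blockSad(left, right, x, x - d, y, half);
        if (sad < bestSad) {
            bestSad = sad;
            best = d;
        }
    }
    return best;
}
```

Without rectification the matching point could lie anywhere along a slanted epipolar line, and the inner loop would have to walk that line instead of a single row.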

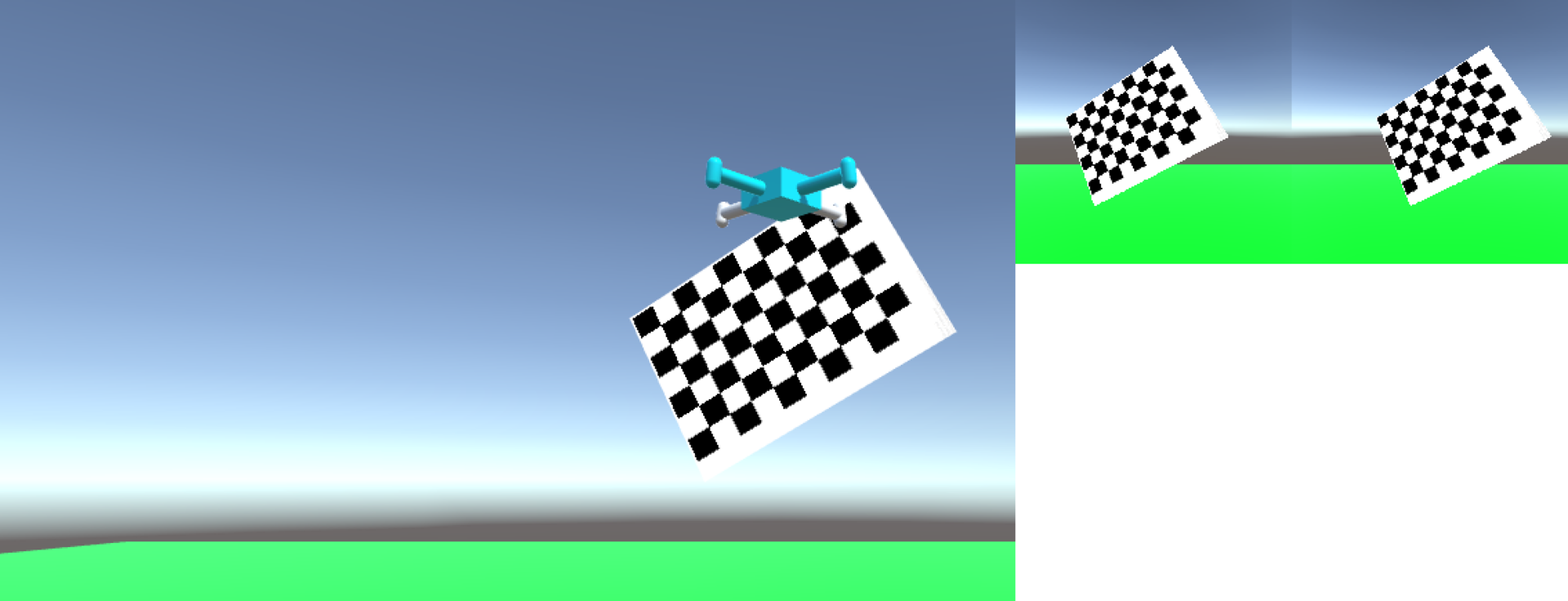

Camera Calibration

How to add another camera to the quadrocopter and get an image from it is described in the previous article, so let's start with camera calibration right away. I use two cameras with a field of view of 70 degrees; the camera image textures have a resolution of 512 x 512 pixels. Calibrating a camera means obtaining its 3x3 matrix of intrinsic parameters and its vector of distortion coefficients.

Calibration is done on a set of calibration samples. One calibration sample is the coordinates of the points of a calibration pattern with known geometry, expressed in the two-dimensional coordinate system of the camera image. The quality of every algorithm that uses the calibration depends heavily on it, so it is very important to calibrate the camera well. I calibrate on 40-50 samples, and their variability matters: whenever possible, get as wide a spread as possible in the orientations and positions of the pattern relative to the camera. Fifty almost identical samples will give a poor calibration.

I use this calibration pattern. It can simply be dragged and dropped into Unity and set as the texture of a 2D sprite. I specify all dimensions in the program in pixels; the side of a square of this pattern is 167 pixels. OpenCV already has a function that finds this pattern in an image. For inspiration, you can use the example from opencv-source/samples/cpp/calibration.cpp. That is what I did.
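Since the camera here is virtual, the ideal intrinsic parameters can be estimated in advance from the field of view and the texture size, which gives a handy sanity check for the calibration result. The sketch below assumes an ideal pinhole camera with a square image and no distortion; the type and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Ideal pinhole intrinsics of a distortion-free virtual camera.
struct Intrinsics {
    double fx, fy;  // focal lengths in pixels
    double cx, cy;  // principal point in pixels
};

// fovDegrees is the full field of view along an image side of `sizePx`
// pixels; the image is assumed to be square, as in the 512 x 512 setup.
Intrinsics idealIntrinsics(double fovDegrees, int sizePx)
{
    assert(fovDegrees > 0.0 && fovDegrees < 180.0 && sizePx > 0);
    const double pi = std::acos(-1.0);
    const double halfFov = 0.5 * fovDegrees * pi / 180.0;
    const double f = 0.5 * sizePx / std::tan(halfFov);
    return {f, f, 0.5 * sizePx, 0.5 * sizePx};
}
```

For 70 degrees and 512 pixels this gives a focal length of about 365.6 pixels and a principal point at (256, 256). The calibrated values quoted later in this article (about 355 and about 256-258) are within a few percent of that, which is the kind of agreement I would expect from a plausible calibration.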

Code for basic calibration functions

    /** @brief Looks for the calibration pattern in the image.
        boardSize is Size(9, 6), the number of inner corners of the pattern;
        the result of the search is stored in sampleFound */
    void CameraCalibrator::findSample (const cv::Mat& img)
    {
        currentSamplePoints.clear();
        int chessBoardFlags = CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE;
        sampleFound = findChessboardCorners(
            img, boardSize, currentSamplePoints, chessBoardFlags);
        // only the address is kept, so img must outlive the call to acceptSample()
        currentImage = &img;
    }

    bool CameraCalibrator::isSampleFound ()
    {
        return sampleFound;
    }

    /** @brief Refines the found corners and adds the sample to the calibration set */
    void CameraCalibrator::acceptSample ()
    {
        // refine the corner coordinates to sub-pixel accuracy
        Mat viewGray;
        cvtColor(*currentImage, viewGray, COLOR_BGR2GRAY);
        cornerSubPix(
            viewGray, currentSamplePoints, Size(11,11), Size(-1,-1),
            TermCriteria( TermCriteria::EPS+TermCriteria::COUNT, 30, 0.1 ));
        // draw the found corners on the image (for a visual check)
        drawChessboardCorners(*currentImage, boardSize, Mat(currentSamplePoints), sampleFound);
        // store the sample
        samplesPoints.push_back(currentSamplePoints);
    }

    /** @brief Calibrates the camera on the accepted samples */
    void CameraCalibrator::makeCalibration ()
    {
        vector<Mat> rvecs, tvecs;
        vector<float> reprojErrs;
        double totalAvgErr = 0;
        // keep the fx/fy ratio fixed at aspectRatio during the optimisation
        float aspectRatio = 1.0;
        int flags = CALIB_FIX_ASPECT_RATIO;
        bool ok = runCalibration(samplesPoints, imageSize, boardSize, squareSize,
            aspectRatio, flags, cameraMatrix, distCoeffs,
            rvecs, tvecs, reprojErrs, totalAvgErr);
        // report the result
        stringstream sstr;
        sstr << "--- calib result: "
             << (ok ? "Calibration succeeded" : "Calibration failed")
             << ". avg reprojection error = " << totalAvgErr;
        DebugLog(sstr.str());
        saveCameraParams(imageSize, boardSize, squareSize, aspectRatio, flags,
            cameraMatrix, distCoeffs, rvecs, tvecs, reprojErrs,
            samplesPoints, totalAvgErr);
    }

    /** @brief Fills in the 3D coordinates of the pattern corners (plane z = 0) */
    void calcChessboardCorners(Size boardSize, float squareSize, vector<Point3f>& corners)
    {
        corners.resize(0);
        for( int i = 0; i < boardSize.height; ++i )
            for( int j = 0; j < boardSize.width; ++j )
                corners.push_back(Point3f(j*squareSize, i*squareSize, 0));
    }

    /** @brief Runs the OpenCV calibration and checks the result */
    bool runCalibration( vector<vector<Point2f> > imagePoints,
        Size imageSize, Size boardSize, float squareSize,
        float aspectRatio, int flags,
        Mat& cameraMatrix, Mat& distCoeffs,
        vector<Mat>& rvecs, vector<Mat>& tvecs,
        vector<float>& reprojErrs, double& totalAvgErr )
    {
        // initial camera matrix
        cameraMatrix = Mat::eye(3, 3, CV_64F);
        if( flags & CALIB_FIX_ASPECT_RATIO )
            cameraMatrix.at<double>(0,0) = aspectRatio;
        // initial distortion coefficients
        distCoeffs = Mat::zeros(8, 1, CV_64F);
        // the pattern geometry is the same for every sample,
        // so compute it once and repeat it for each sample
        vector<vector<Point3f> > objectPoints(1);
        calcChessboardCorners(boardSize, squareSize, objectPoints[0]);
        objectPoints.resize(imagePoints.size(),objectPoints[0]);
        // the OpenCV calibration itself
        // objectPoints - pattern corners in the pattern coordinate system
        // imagePoints  - the same corners found in the images
        // imageSize    - image size in pixels
        double rms = calibrateCamera(objectPoints, imagePoints, imageSize,
            cameraMatrix, distCoeffs, rvecs, tvecs,
            flags|CALIB_FIX_K4|CALIB_FIX_K5);
        // rms is the RMS reprojection error in pixels;
        // for a good calibration it should be below 1
        printf("RMS error reported by calibrateCamera: %g\n", rms);
        bool ok = checkRange(cameraMatrix) && checkRange(distCoeffs);
        totalAvgErr = computeReprojectionErrors(objectPoints, imagePoints,
            rvecs, tvecs, cameraMatrix, distCoeffs, reprojErrs);
        return ok;
    }

Stereo pair

Now we need to make a stereo pair from our two cameras. The stereoCalibrate function is used for this. It needs samples from both cameras, and we will reuse the samples collected during the camera calibration step. Therefore, it is important to save only those samples in which the calibration pattern was found in both images at once. The camera matrices and distortion coefficients obtained earlier serve as good initial approximations.
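The requirement to keep only samples seen by both cameras can be sketched as follows. This is a simplified illustration with hypothetical types (`Point2`, `FramePair`), not the project's code; in the real plugin the corner lists would come from findChessboardCorners.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point2 { float x, y; };
using Corners = std::vector<Point2>;

// One captured frame: the corners detected by each camera
// (the list is empty or incomplete if the pattern was not found).
struct FramePair {
    Corners camera1;
    Corners camera2;
};

// Keeps only the frames where both cameras saw the complete pattern, so that
// sample i of camera 1 always corresponds to sample i of camera 2.
void keepPairedSamples(const std::vector<FramePair>& frames,
                       std::size_t cornersPerPattern,
                       std::vector<Corners>& camera1Samples,
                       std::vector<Corners>& camera2Samples)
{
    camera1Samples.clear();
    camera2Samples.clear();
    for (const FramePair& frame : frames) {
        const bool both = frame.camera1.size() == cornersPerPattern &&
                          frame.camera2.size() == cornersPerPattern;
        if (!both)
            continue;
        camera1Samples.push_back(frame.camera1);
        camera2Samples.push_back(frame.camera2);
    }
    assert(camera1Samples.size() == camera2Samples.size());
}
```

For a 9 x 6 board, `cornersPerPattern` would be 54. The two output vectors stay index-aligned, which is what a paired calibration needs.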

Stereo calibration code

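    /** @brief Calibrates the stereo pair on samples where both cameras
        found the pattern; the camera matrices and distortion coefficients
        are used as the initial guess and are refined in place */
    void StereoCalibrator::makeCalibration (
        const std::vector<std::vector<cv::Point2f>>& camera1SamplesPoints,
        const std::vector<std::vector<cv::Point2f>>& camera2SamplesPoints,
        cv::Mat& camera1Matrix, cv::Mat& camera1DistCoeffs,
        cv::Mat& camera2Matrix, cv::Mat& camera2DistCoeffs )
    {
        // the pattern geometry is the same for every sample
        std::vector<vector<Point3f>> objectPoints;
        for( size_t i = 0; i < camera1SamplesPoints.size(); i++ )
        {
            objectPoints.push_back(chessboardCorners);
        }
        double rms = stereoCalibrate(
            objectPoints, camera1SamplesPoints, camera2SamplesPoints,
            // input/output: intrinsics of both cameras
            camera1Matrix, camera1DistCoeffs,
            camera2Matrix, camera2DistCoeffs,
            imageSize,
            rotationMatrix,     // output: rotation between the cameras
            translationVector,  // output: translation between the cameras
            essentialMatrix,    // output: essential matrix
            fundamentalMatrix,  // output: fundamental matrix
            // start from the intrinsics found by the single-camera calibration
            CALIB_USE_INTRINSIC_GUESS,
            TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 100, 1e-5) );
        // rms is the RMS reprojection error;
        // as with a single camera, a good value is below 1
        stringstream outs;
        outs << "--- stereo calib: done with RMS error=" << rms;
        DebugLog(outs.str());
    }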
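A quick plausibility check on the output of stereoCalibrate is the length of the translation vector, which is the distance between the cameras expressed in the same unit as the pattern's square size. The helper names below are hypothetical and only sketch this check.

```cpp
#include <cassert>
#include <cmath>

// Length of the translation vector between the two cameras, in the same
// unit that was used for the square size of the calibration pattern.
double baselineLength(double tx, double ty, double tz)
{
    return std::sqrt(tx * tx + ty * ty + tz * tz);
}

// The same baseline expressed in pattern squares.
double baselineInSquares(double baseline, double squareSize)
{
    assert(squareSize > 0.0);
    return baseline / squareSize;
}
```

With the translation vector listed in the next section and the square size of 167, the baseline comes out at roughly 498.3 units, or about three pattern squares. If that does not match how the cameras are placed in the scene, the calibration is probably off.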

The calibration parameters I got

    // 512, 70 deg
    Mat cam1 = (Mat_<double>(3, 3) <<
        355.3383989449604, 0, 258.0008490063121,
        0, 354.5068750418187, 255.7252273330564,
        0, 0, 1);
    Mat dist1 = (Mat_<double>(5, 1) <<
        -0.02781875153957544, 0.05084431574408409,
        0.0003262438299225566, 0.0005420218184546293,
        -0.06711413339515834);
    Mat cam2 = (Mat_<double>(3, 3) <<
        354.8366825622115, 0, 255.7668702403205,
        0, 353.9950515096826, 254.3218524455621,
        0, 0, 1);
    // five coefficients are listed, so the vector is 5 x 1
    Mat dist2 = (Mat_<double>(5, 1) <<
        -0.03429254591232522, 0.04304840389703278,
        -0.0005799461588668822, 0.0005396568753307817,
        -0.01867317550268149);
    Mat R = (Mat_<double>(3, 3) <<
        0.9999698145104303, 3.974878365893637e-06, 0.007769816740176146,
        -3.390471048492443e-05, 0.9999925806915616, 0.003851936175643478,
        -0.00776974378253147, -0.003852083336451321, 0.9999623955607145);
    Mat T = (Mat_<double>(3, 1) <<
        498.2890078004688, 0.3317087752736566, -6.137837861924672);

Disparity map

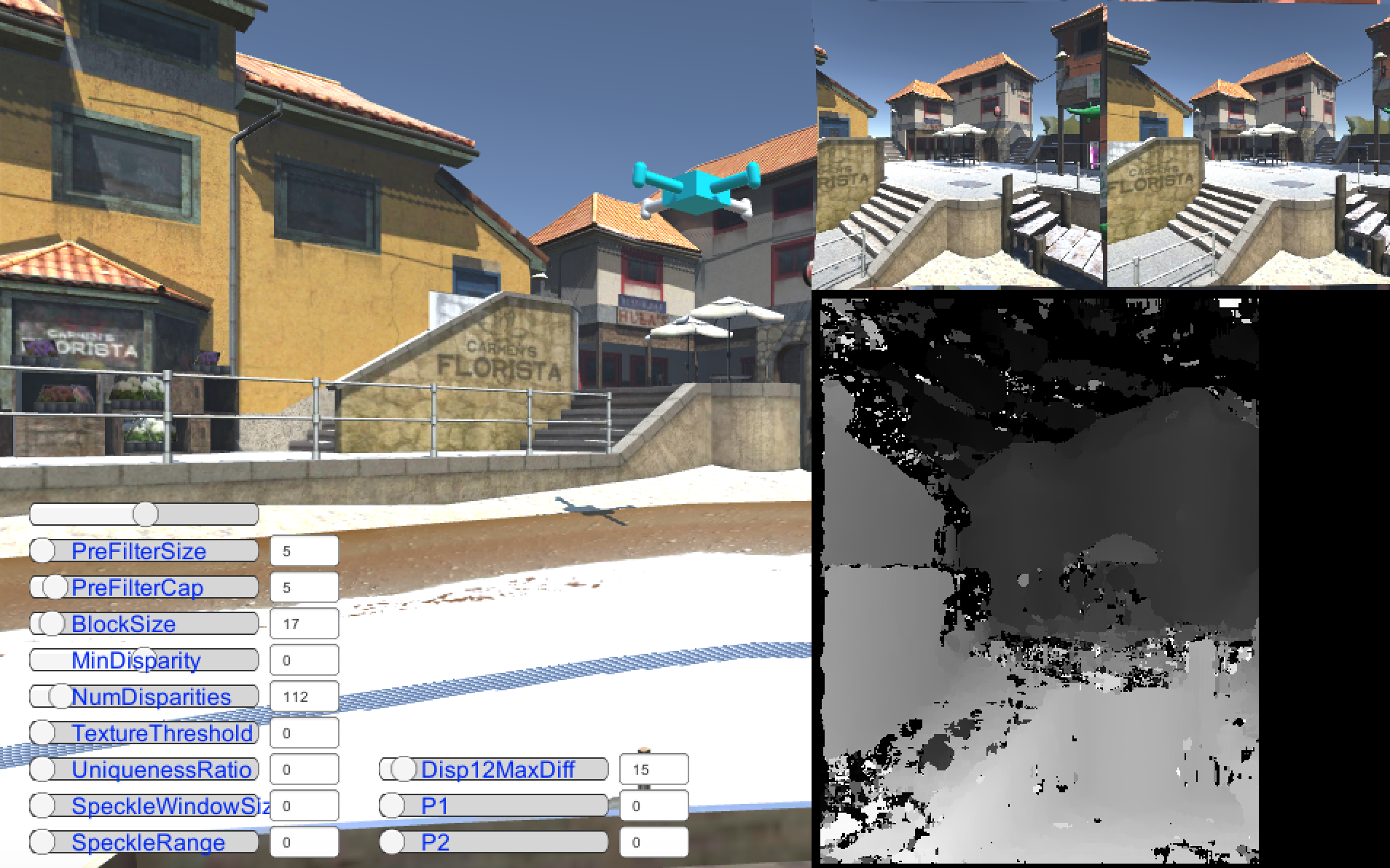

Now it is time to compute the disparity map. Even if you did all the previous steps correctly, there is no guarantee that it will work right away. The algorithm that computes the map has about 10 parameters, which have to be chosen well to get a good picture. This video, taken from this article, helps to understand what is going on here. As for choosing the parameters, I also advise against setting TextureThreshold above 50, since the StereoBM algorithm is known to start crashing spontaneously.
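Because a bad combination of parameters can make the matcher fail, it may help to validate them before passing them on. The sketch below uses a hypothetical `BMParams` struct; the numeric ranges are the argument checks as I understand them from OpenCV's StereoBM, so treat them as assumptions and verify them against your OpenCV version. The limit of 50 for the texture threshold is this article's own advice, not an OpenCV rule.

```cpp
#include <cassert>
#include <string>

// Hypothetical bundle of the block-matching settings discussed above.
struct BMParams {
    int preFilterSize;
    int preFilterCap;
    int blockSize;
    int numDisparities;
    int textureThreshold;
    int uniquenessRatio;
};

// Returns an empty string when the settings look usable, otherwise a short
// description of the first problem found.
std::string checkBMParams(const BMParams& p, int imageWidth, int imageHeight)
{
    assert(imageWidth > 0 && imageHeight > 0);
    const int minSide = imageWidth < imageHeight ? imageWidth : imageHeight;

    if (p.preFilterSize < 5 || p.preFilterSize > 255 || p.preFilterSize % 2 == 0)
        return "preFilterSize must be odd and within 5..255";
    if (p.preFilterCap < 1 || p.preFilterCap > 63)
        return "preFilterCap must be within 1..63";
    if (p.blockSize < 5 || p.blockSize > 255 || p.blockSize % 2 == 0 ||
        p.blockSize >= minSide)
        return "blockSize must be odd, within 5..255 and smaller than the image";
    if (p.numDisparities <= 0 || p.numDisparities % 16 != 0)
        return "numDisparities must be positive and divisible by 16";
    if (p.textureThreshold < 0 || p.uniquenessRatio < 0)
        return "textureThreshold and uniquenessRatio must be non-negative";
    // Not an OpenCV rule: the article's own advice to stay at or below 50.
    if (p.textureThreshold > 50)
        return "textureThreshold above 50 is discouraged in this article";
    return "";
}
```

A check like this could be called on the values coming from the Unity side before they reach the matcher, so that a bad slider position produces a log message instead of an exception inside the plugin.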

Code for computing the disparity map

    // constructor: numDisparities = 16, blockSize = 9
    DisparityMapCalculator::DisparityMapCalculator ()
    {
        bm = StereoBM::create(16,9);
    }

    /** @brief Stores the calibration and prepares the rectification maps */
    void DisparityMapCalculator::set (
        cv::Mat camera1Matrix, cv::Mat camera2Matrix,
        cv::Mat camera1distCoeff, cv::Mat camera2distCoeff,
        cv::Mat rotationMatrix, cv::Mat translationVector,
        cv::Size imageSize )
    {
        this->camera1Matrix = camera1Matrix;
        this->camera2Matrix = camera2Matrix;
        this->camera1distCoeff = camera1distCoeff;
        this->camera2distCoeff = camera2distCoeff;
        this->rotationMatrix = rotationMatrix;
        this->translationVector = translationVector;
        // compute the rectification transforms (image straightening)
        stereoRectify(
            camera1Matrix, camera1distCoeff,
            camera2Matrix, camera2distCoeff,
            imageSize, rotationMatrix, translationVector,
            R1, R2, P1, P2, Q,
            /*CALIB_ZERO_DISPARITY*/0, -1, imageSize, &roi1, &roi2 );
        // build the pixel maps that remove distortion
        // and rectify the images of both cameras
        initUndistortRectifyMap(camera1Matrix, camera1distCoeff, R1, P1,
            imageSize, CV_16SC2, map11, map12);
        initUndistortRectifyMap(camera2Matrix, camera2distCoeff, R2, P2,
            imageSize, CV_16SC2, map21, map22);
        bm->setROI1(roi1);
        bm->setROI2(roi2);
    }

    /** @brief Sets the parameters of the StereoBM algorithm */
    void DisparityMapCalculator::setBMParameters (
        int preFilterSize, int preFilterCap, int blockSize,
        int minDisparity, int numDisparities, int textureThreshold,
        int uniquenessRatio, int speckleWindowSize, int speckleRange,
        int disp12maxDiff )
    {
        bm->setPreFilterSize(preFilterSize);
        bm->setPreFilterCap(preFilterCap);
        bm->setBlockSize(blockSize);
        bm->setMinDisparity(minDisparity);
        bm->setNumDisparities(numDisparities);
        bm->setTextureThreshold(textureThreshold);
        bm->setUniquenessRatio(uniquenessRatio);
        bm->setSpeckleWindowSize(speckleWindowSize);
        bm->setSpeckleRange(speckleRange);
        bm->setDisp12MaxDiff(disp12maxDiff);
    }

    /** @brief Rectifies both images and computes the disparity map */
    void DisparityMapCalculator::compute (
        const cv::Mat& image1, const cv::Mat& image2,
        cv::Mat& image1recified, cv::Mat& image2recified,
        cv::Mat& disparityMap )
    {
        // remove distortion and rectify both images
        remap(image1, image1recified, map11, map12, INTER_LINEAR);
        remap(image2, image2recified, map21, map22, INTER_LINEAR);
        // OpenGL and OpenCV lay out the image differently,
        // so mirror both images (flipCode = 1) before matching
        flip(image1recified, L, 1);
        flip(image2recified, R, 1);
        // StereoBM, unlike SGBM, works only with
        // single-channel grayscale images
        cv::cvtColor(L, image1gray, COLOR_RGBA2GRAY, 1);
        cv::cvtColor(R, image2gray, COLOR_RGBA2GRAY, 1);
        int numberOfDisparities = bm->getNumDisparities();
        // compute the disparity map
        bm->compute(image1gray, image2gray, disp);
        // scale it to 8 bits so that it can be displayed
        disp.convertTo(disp8bit, CV_8U, 255/(numberOfDisparities*16.));
        // mirror it back for Unity
        flip (disp8bit, disp, 1);
        // the Unity texture has 4 channels,
        // so convert the map to 4 channels
        cv::cvtColor(disp, disparityMap, COLOR_GRAY2RGBA, 4);
    }

You can also watch a video of how it all works for me.
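The scaling factor 255/(numberOfDisparities*16.) in the code above comes from the output format of StereoBM: a 16-bit fixed-point map in which each value has 4 fractional bits, that is, the disparity multiplied by 16. A minimal sketch of the two conversions follows; the function names are hypothetical, and the rounding only approximates what convertTo does.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Disparity in pixels from the raw fixed-point value (4 fractional bits).
double disparityInPixels(std::int16_t raw)
{
    return raw / 16.0;
}

// Maps the raw value to an 8-bit gray level using the same scale factor,
// 255 / (numDisparities * 16), with clamping to the range 0..255.
std::uint8_t disparityToGray(std::int16_t raw, int numDisparities)
{
    assert(numDisparities > 0);
    const double scaled = raw * 255.0 / (numDisparities * 16.0);
    const double clamped = std::min(255.0, std::max(0.0, scaled));
    return static_cast<std::uint8_t>(clamped + 0.5);
}
```

The 8-bit map is only suitable for display. For depth estimation it is better to keep the raw values and divide them by 16, otherwise the sub-pixel part of the disparity is lost.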

The algorithm used to compute the disparity map is quite old, so the result is not very pretty. I think it can be improved by using a more modern algorithm, but OpenCV alone is not enough for that.

Thanks to ThomasKole for the scene.

The code is available on GitHub, branch habr_part3_disparity_map_opencv_stereobm.

Thank you for your attention.

Source: https://habr.com/ru/post/271337/
