HP P2000 MSA G3 Performance Testing

In one of our past articles on the performance of server disk systems, we talked about testing methodology and tool selection.

This time we decided to compare the performance of an entry-level storage system with that of a local array on the P410 controller. As a reminder, the parameters we are interested in are IOPS, the number of disk operations per second (the more, the better), and latency, the time it takes to process an operation (the less, the better).

Test bench configuration:

HP C7000 Chassis

Blade BL460C G6

HP Proliant DL360 Gen7 Server

A pair of AE372A switches

HP StorageWorks P2000 G3 MSA Shelf

A pair of AP837B controllers

2.5" HP 146GB SAS 15k 6G HDD disks (512547-B21, 512544-001)


We tested with the same fio utility under Debian GNU/Linux, following the methodology described in the previous article and using the same toolkit. As before, we access the raw device via libaio.

    $ cat ~/fio/oltp.conf
    [oltp-db]
    blocksize=8k
    rwmixread=70
    rwmixwrite=30
    rw=randrw
    percentage_random=100
    ioengine=libaio
    direct=1
    buffered=0
    time_based
    runtime=2400

The configuration is as follows: each LUN is the same size as its Vdisk, RAID10 is used in all configurations, multipathing is enabled, and the connection is via Fibre Channel.

    # multipath -ll
    3600c0ff000199b069319335601000000 dm-1 HP,P2000G3 FC/iSCSI
    size=956G features='1 queue_if_no_path' hwhandler='0' wp=rw
    |-+- policy='service-time 0' prio=50 status=active
    | |- 0:0:0:8 sdc 8:32 active ready running
    | `- 2:0:0:8 sdg 8:96 active ready running
    `-+- policy='service-time 0' prio=10 status=enabled
      |- 0:0:1:8 sde 8:64 active ready running
      `- 2:0:1:8 sdi 8:128 active ready running

We ran the tests with the number of threads increasing from 16 to 256 in order to find the point where performance stops scaling. Each test ran for 40 minutes.
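A sweep like this can be scripted. The sketch below is only an illustration, not the script used for these tests: it builds one fio command line per thread count from the same parameters as `oltp.conf` and reads total IOPS and mean latency from fio's JSON report. Several things are assumptions: that "threads" maps to fio's `numjobs`, that the thread count doubles at each step (the article gives only the range 16 to 256), the output file names, and the JSON field names, which follow fio 3.x (`clat_ns`; older releases report `clat` in microseconds). The device `/dev/dm-1` is taken from the multipath listing; a write test against a raw device destroys its contents.

```python
import shlex

# Thread counts for the sweep. Only the range 16..256 comes from the article;
# the doubling step is an assumption.
THREAD_COUNTS = [16, 32, 64, 128, 256]


def build_fio_cmd(device, threads, runtime_s=2400):
    """Return the fio argv for one step of the sweep (nothing is executed)."""
    return [
        "fio",
        "--name=oltp-db",
        f"--filename={device}",
        "--blocksize=8k",
        "--rw=randrw",
        "--rwmixread=70",
        "--percentage_random=100",
        "--ioengine=libaio",
        "--direct=1",
        "--time_based",
        f"--runtime={runtime_s}",
        f"--numjobs={threads}",  # assumption: "threads" means numjobs
        "--group_reporting",
        "--output-format=json",
        f"--output=oltp-db-{threads}.json",  # hypothetical file name
    ]


def summarize(result):
    """Return (total IOPS, mean completion latency in ms) from fio 3.x JSON."""
    iops = 0.0
    weighted_ns = 0.0
    for job in result["jobs"]:
        for direction in ("read", "write"):
            stats = job[direction]
            iops += stats["iops"]
            # Weight each direction's mean latency by its operation rate.
            weighted_ns += stats["iops"] * stats["clat_ns"]["mean"]
    mean_ms = weighted_ns / iops / 1e6 if iops else 0.0
    return iops, mean_ms


if __name__ == "__main__":
    # Print the commands instead of running them: the test overwrites the device.
    for n in THREAD_COUNTS:
        print(shlex.join(build_fio_cmd("/dev/dm-1", n)))
```

Each report written by fio can then be loaded with `json.load` and passed to `summarize` to get one (IOPS, latency) point per thread count.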

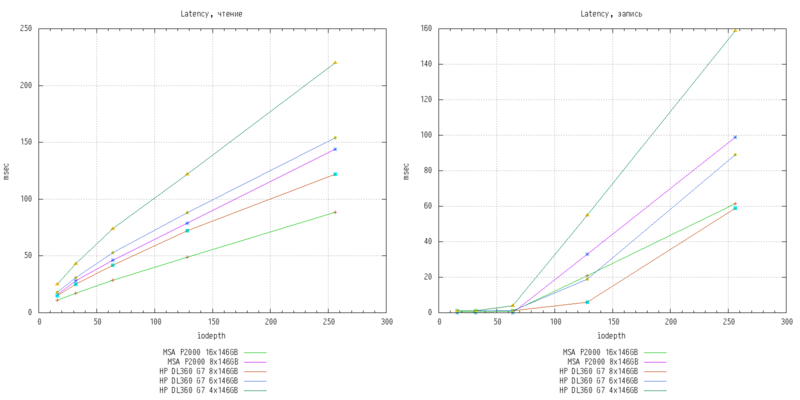

So, let's look at the results for the OLTP-DB profile, which show how IOPS and latency depend on the number of threads:

Block size 4KB, 70% / 30% read / write, 100% random access

The upper graph shows how to evaluate system performance independently of latency: once the number of IOPS stops growing, we have the result, and from that point on only latency keeps increasing.
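The same reasoning can be written down as a small sketch. The function `saturation_point` and its 5% growth threshold are hypothetical choices for illustration, not part of the original methodology. `expected_latency_ms` applies Little's law (requests in flight = throughput × latency) and assumes each thread keeps exactly one request outstanding; it shows why latency roughly doubles with every doubling of threads once IOPS have stopped growing. The numbers in the example are made up and are not measurements from this test.

```python
def saturation_point(threads, iops, min_gain=0.05):
    """Return the thread count after which IOPS stop growing.

    Growth counts as stalled when the next step adds less than
    min_gain (relative) to the current IOPS value.
    """
    for i in range(len(threads) - 1):
        if iops[i + 1] < iops[i] * (1.0 + min_gain):
            return threads[i]
    return threads[-1]  # never stalled within the tested range


def expected_latency_ms(outstanding, iops):
    """Little's law: mean latency = requests in flight / throughput."""
    return outstanding / iops * 1000.0


if __name__ == "__main__":
    # Illustrative values only, NOT results from the article.
    threads = [16, 32, 64, 128, 256]
    iops = [1500.0, 2600.0, 3400.0, 3450.0, 3460.0]

    print("IOPS stall at", saturation_point(threads, iops), "threads")
    for n, x in zip(threads, iops):
        # Assumes one outstanding request per thread.
        print(n, "threads:", round(expected_latency_ms(n, x), 1), "ms")
```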

Unexpectedly, the local array of eight disks shows much higher performance than the storage system with the same number of drives, and the gap becomes especially noticeable as the load increases. The MSA P2000 stalled at 64 threads, while IOPS on the P410 controller stopped growing at 128.

The dependence of system performance on the number of hard drives in the array is also clearly visible and needs no comment.

The conclusion is as follows: this storage system makes sense when you need more flexibility in allocating disk space among virtual or physical servers and the ability to connect a large number of hard drives, including via expansion shelves. However, do not expect it to work faster than a local disk array in the server with the same number of disks.

And, as always, we are posting a detailed file with the results.

Source: https://habr.com/ru/post/271229/
