Rutube 2009-2015: the history of our hardware

Seven years have passed since Rutube became part of Gazprom-Media Holding and a new stage of the project's development began. In this article we describe the state in which we received the project at the end of 2008 and how its hardware changed over those seven years. Below the cut you will find a fascinating story and many, many pictures (careful, traffic!), so give Ficha (our office cat) a poke and read on!

Start

At the end of 2008, Gazprom-Media Holding acquired the Rutube code and infrastructure. The technical team, which at that time consisted of a technical director, a system administrator and a technical specialist (“The computer asks me to press ‘any key’. Where is it?”), had several racks of equipment in the M10, COMSTAR-Direct and Kurchatnik data centers.

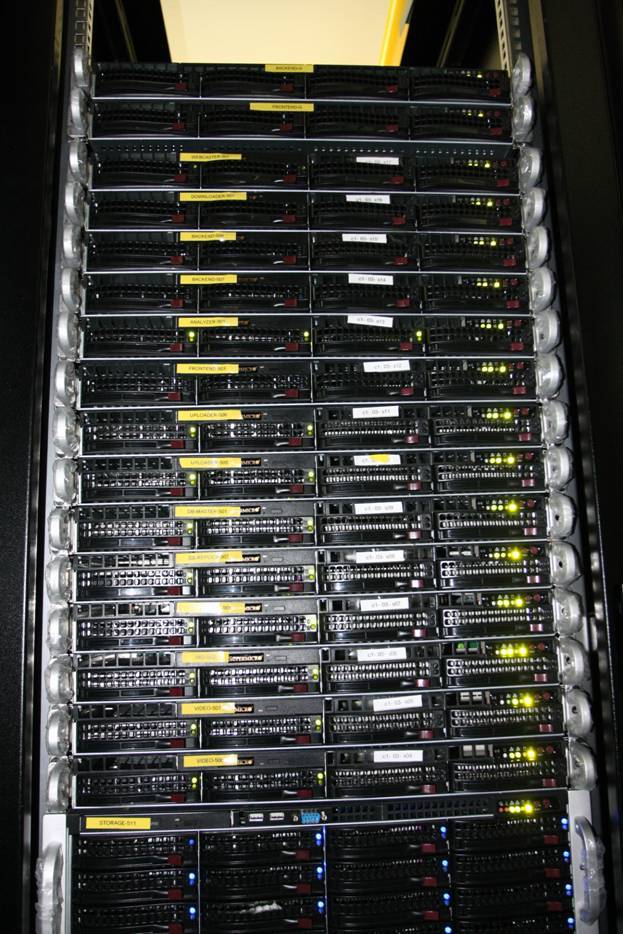

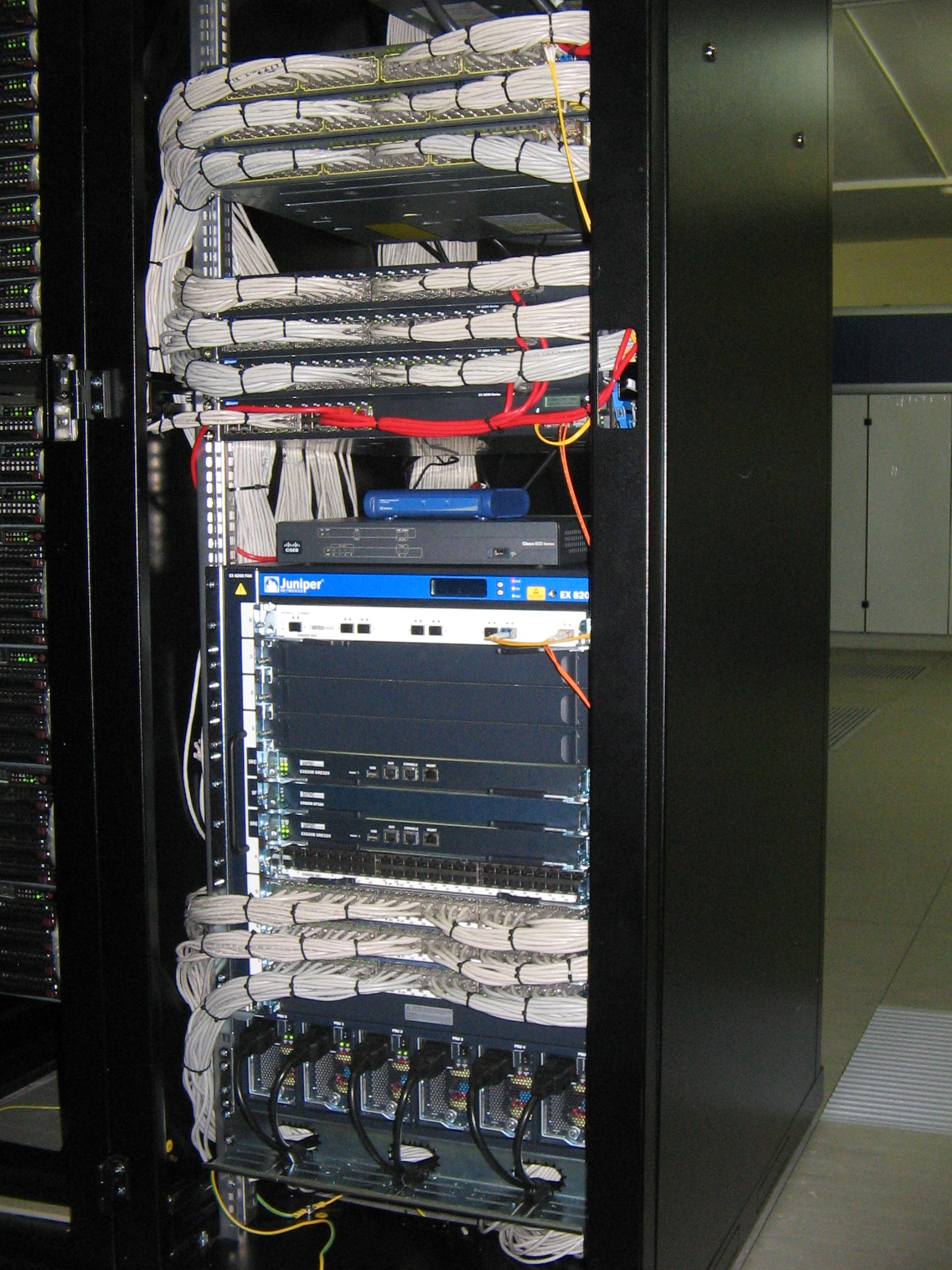

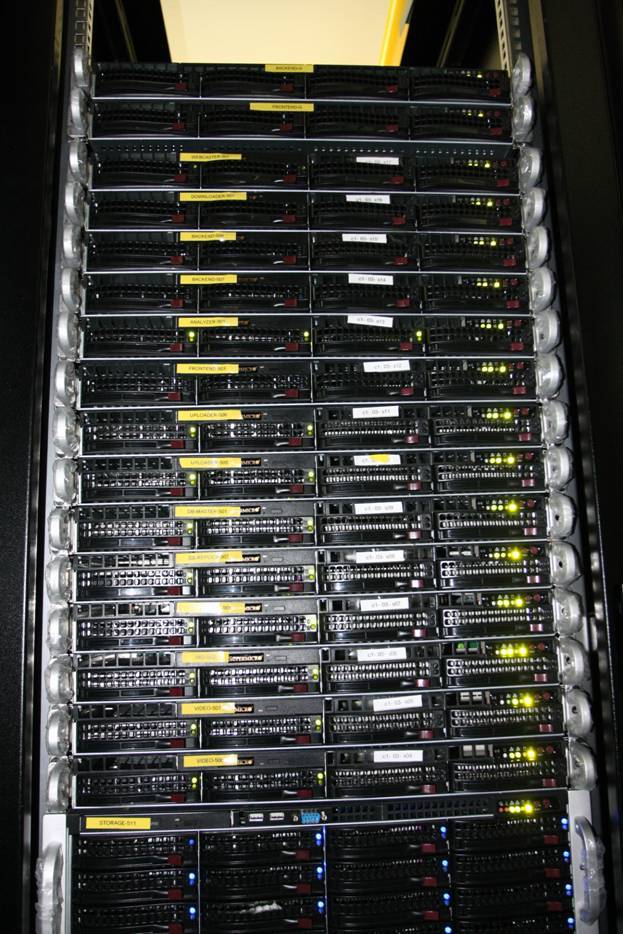

The racks looked like this:

We remember the M10 data center with some wistfulness: quick-release rails could only be installed there with pliers and light taps of a hammer. Bolt-on Supermicro rails, on the other hand, sat firmly in the racks, and the racks themselves were sturdy enough to be filled completely with UPS units.

Then there was the rack placement in the COMSTAR-Direct data center: the rear door hit the wall and could not open fully, so we had to take the door off to reach the rails from the hinge side of the rack. We even feel some nostalgia for this valuable experience!

The equipment consisted of HP ProLiant DL140 G3 and HP ProLiant DL320 G5 servers, as well as Supermicro servers based on PDSMU and X7SBi motherboards. Switching was handled by Allied Telesis and D-Link devices.

By the way, we have already decommissioned and sold part of this equipment, and some of it is still for sale. Get in touch!

Development

Almost immediately it became clear that the existing capacity was not enough for the project to grow, so we decided to purchase several dozen Supermicro servers based on the X7DWU motherboard. Cisco Catalyst 3750 switches handled the network side. From the beginning of 2009 we installed this equipment in the Synterra and M10 data centers.

We began moving content storage onto enterprise-grade storage systems. The choice fell on NetApp: FAS3140 controllers with DS14 disk shelves. Later the storage system was expanded with FAS3170 and FAS3270 controllers and the more advanced DS4243 shelves.

By the summer of 2009 an “unexpected” problem had arisen: since no one in particular was responsible for looking after the data centers, everyone who installed hardware or did cabling there felt like a guest rather than a host. The result was a jungle of wires and servers scattered at random.

We decided to assign responsibility for this area (hundreds of servers, dozens of racks and switches) to a dedicated employee. Since then the infrastructure has grown to five hundred servers and several dozen switches and racks, and the employee has become a department of three people.

At the same time we purchased new network equipment. The choice fell on Juniper: EX8208, EX4200, EX3200 and EX2200 switches and MX480 routers. In the fall of 2009, once the new equipment arrived, we carried out large-scale work to restore order at the Synterra data center and to bring the new equipment into service with minimal interruption.

We installed the new network equipment and laid elements of the new structured cabling system (SCS); at that time we were still terminating patch panels ourselves.

We hung a garland of temporary patch cords to minimize service interruptions during the work.

In the end we arrived at this kind of order. The End-of-Row scheme works, but it has clear disadvantages. A few years later, after expanding our fleet of network equipment, we switched to a Top-of-Rack scheme.

The final cutover to the new equipment took place on November 4, National Unity Day.

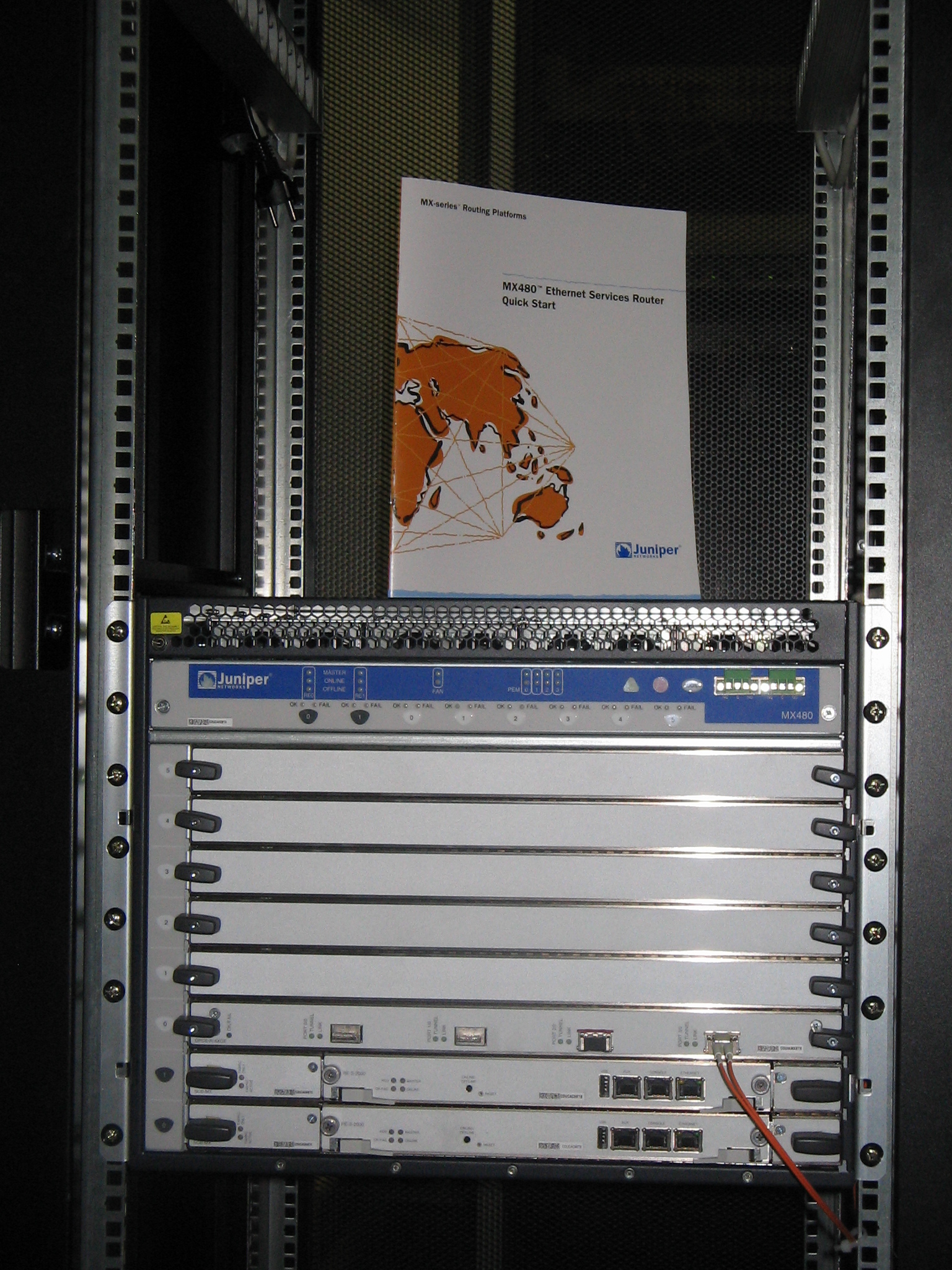

At the end of 2009 we launched our site in the M9 data center. The main goal was access to the hundreds of operators present at the “Nine” (even now Moscow has no real alternative to this facility). Here we installed a Juniper MX480 router, Juniper EX4200 and EX2200 switches and new Dell PowerEdge R410 servers.

Juniper MX480

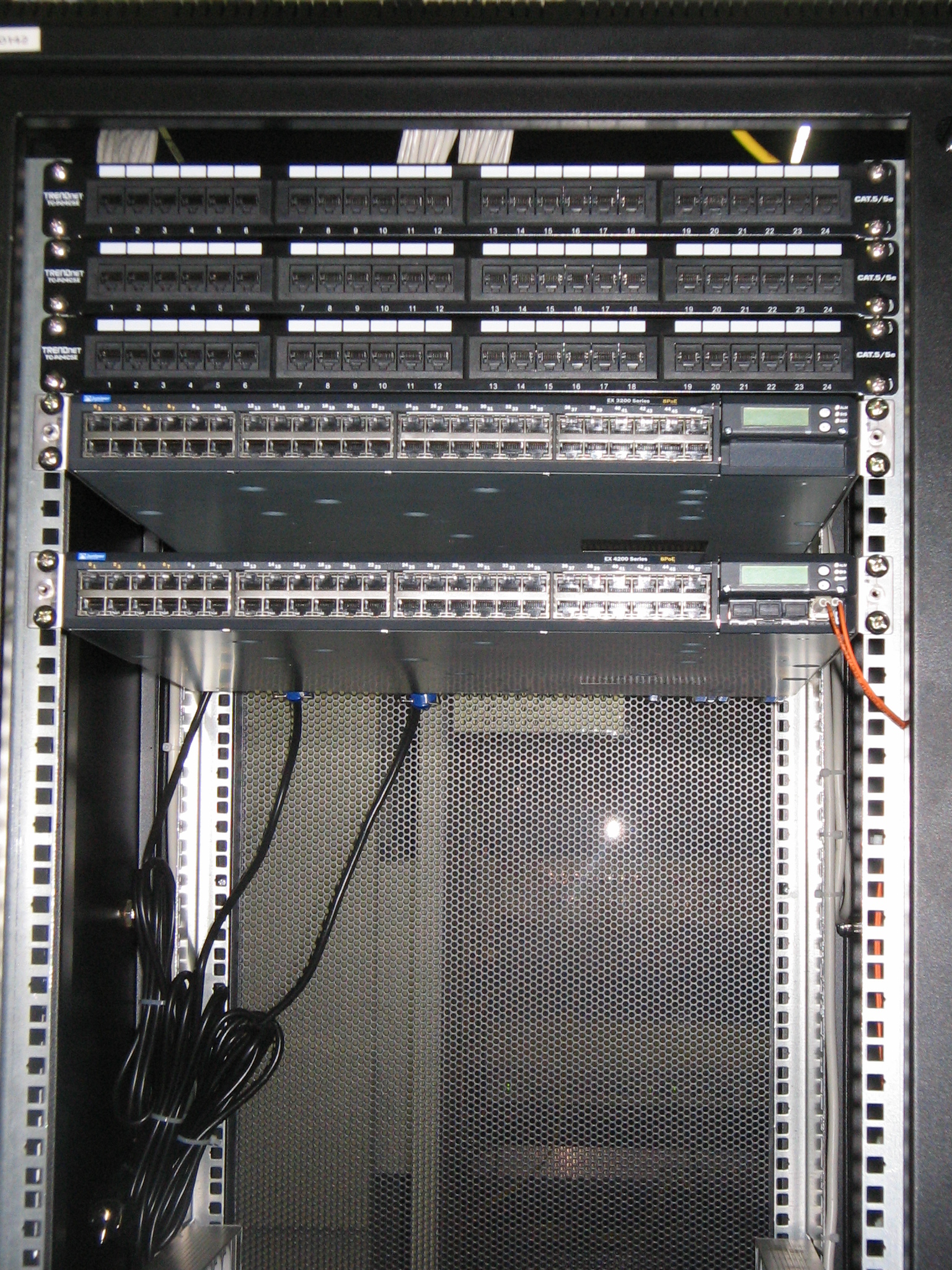

Juniper EX2200, EX4200

Back then the 52U racks at M9 seemed bottomless; now we can barely fit into them.

In those days we did not take servers straight to the data center: they first went to the office, where we checked them and did the initial setup before sending them on.

A cozy, spacious server room with no windows but with air conditioning, where, as a bonus, a certain facilities manager resided and constantly invited people to join him for lunch.

Since 2010 we have been growing actively: new projects, new equipment, new racks in the data centers. By mid-2011 colleagues noticed that the employee responsible for hardware and the data centers no longer showed up at the office even on advance and salary days (the money goes straight to the card). We missed you!

A minute of fame (I realized that I was writing more for myself than for Habr)!

But nobody was going to slow down. In the new M77 data center we launched a new project (NTVPLUS.TV) and started building a second core for RUTUBE.RU, so that Rutube would keep working if its main data center went down.

A small batch of Sun Fire X4170 × 64 servers.

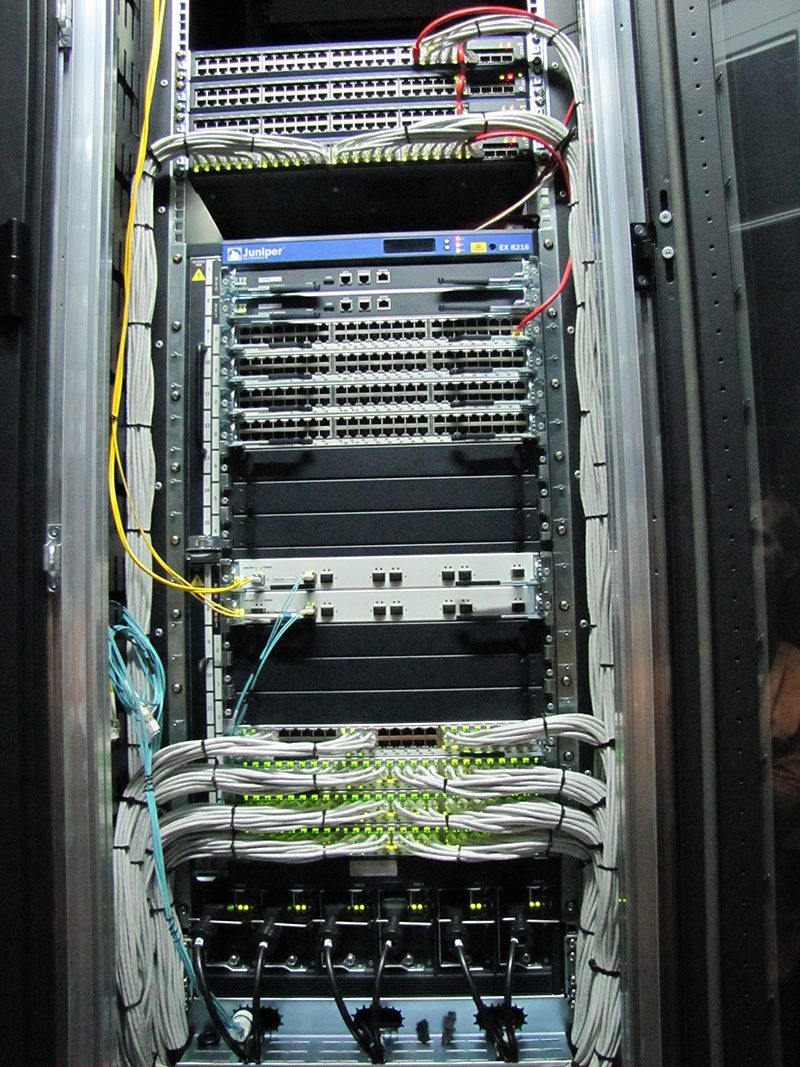

Juniper EX8216, EX4200 and EX2200 switches, plus a bit of NetApp.

Yet another round of the “crimp 100500 patch cords before the project launches” contest.

The SCS is finished and the data center is launched.

And here the NetApp FAS3170 with DS4243 shelves is gradually filling up with content.

Meanwhile, our system administrators are finishing the setup of the Sun Fire X4170 × 64.

The “main wiring” completes the beauty (a.k.a. order).

2011 began with the continued expansion of the second core in the M77 data center: we received a new batch of Dell PowerEdge R410 servers and, as part of a new project, servers on the Quanta platform from a technology partner.

More and more 10G switches appeared in the network infrastructure. The first sign was the Extreme Summit X650-24x, followed by the more interesting Extreme Summit X670-48x.

Just what we lacked in childhood for building a cardboard house.

With no time to catch our breath after finishing the work at the M77 data center, we moved on to the Synterra data center, where the Juniper EX8208 had to be replaced with an EX8216 (we needed room for more line cards to connect operators and servers).

At the same time we began installing our first DWDM system (the active variety), which connects the three main data centers, M9, Synterra and M77, over dark fiber. Here we were helped by a domestic manufacturer, T8.

Juniper EX8216 and DWDM

In 2012 a department responsible for the data centers and hardware appeared (that is, one employee became two). Before that, of course, the work was not done by one person alone: network and system administrators actively helped him. Since then the department has tried to strike a balance between order, standardization, beauty and the day-to-day operational work that project development demands.

Project nowadays

A new stage of development began in 2014, when we started replacing the storage system and optimizing the server infrastructure by launching new caching servers, and then (in 2015) replaced all the main network equipment, since the old equipment no longer met our needs.

The NetApp storage system served us faithfully for five years. Over that time we realized that maintaining and expanding it required spending out of proportion to the other subsystems. We began looking for a more rational solution, and the search ended with the phased rollout of a storage system of our own design (the transition began in early 2014 and ended in autumn 2015). The storage system now consists of 12 disk servers (Supermicro, Quanta) and software written by our developers. For us this has been an excellent solution; NetApp is now off vendor support, and we use part of it as storage for various internal needs.

In early 2014 we decided to upgrade the caching system, which at that time consisted of a hundred servers, each with four Gigabit interfaces and a hybrid disk subsystem (SAS + SSD).

We decided to move the servers that deliver “hot” (actively viewed) content into a separate cluster. These were Supermicro servers on the X9DRD-EF motherboard with two Intel Xeon E5-2660 v2 processors, 128 GB of RAM, 480 GB of SSD and four Intel X520-DA2 network cards. We established experimentally that such a server delivers 65-70 Gbit/s without any problems (the maximum was 77 Gbit/s).

In mid-2014 we replaced the active DWDM with a passive one. This greatly increased its capacity and let us start “landing” operators connected in one data center at other sites, reducing our dependence on the failure of any particular piece of border equipment.

By the end of 2014 we launched a new cluster for “cold” content, which replaced the remaining servers with 4 Gbit/s aggregates. Once again we chose Supermicro on the X9DRD-EF motherboard, this time with two Intel Xeon E5-2620 v2 processors, 128 GB of RAM, 12 × 960 GB SSDs and two Intel X520-DA2 network cards. Each node in this cluster can sustain a load of up to 35 Gbit/s.
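As a rough sanity check on these throughput figures, here is a minimal sketch that compares the measured numbers with the theoretical line rate of each node. It assumes that every Intel X520-DA2 card provides two 10 Gbit/s ports and that all ports carry traffic; the function and variable names are illustrative and are not part of any Rutube tooling.

```python
# Assumption: each Intel X520-DA2 card exposes two 10 Gbit/s ports,
# and every port on every card is used for serving content.
PORTS_PER_CARD = 2
PORT_SPEED_GBIT = 10


def line_rate_gbit(cards: int) -> int:
    """Theoretical aggregate line rate of a node, in Gbit/s."""
    return cards * PORTS_PER_CARD * PORT_SPEED_GBIT


def utilization(measured_gbit: float, cards: int) -> float:
    """Fraction of the theoretical line rate actually delivered."""
    return measured_gbit / line_rate_gbit(cards)


# Figures quoted in the text: (network cards per node, measured Gbit/s).
nodes = {
    "hot, typical low": (4, 65),
    "hot, typical high": (4, 70),
    "hot, peak": (4, 77),
    "cold": (2, 35),
}

for name, (cards, measured) in nodes.items():
    share = utilization(measured, cards)
    print(f"{name}: {measured} of {line_rate_gbit(cards)} Gbit/s ({share:.1%})")
```

Under these assumptions a hot node has 80 Gbit/s of line rate and a cold node 40 Gbit/s, so the measured figures sit between about 81% and 96% of what the network cards can carry.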

Naturally, the credit goes not only to well-chosen hardware but also to the remarkable in-house segmentation modules written by our wonder-architect and to the wonderful video balancer created by the development team. Work on finding the limits of this platform continues: there are still free slots for SSDs and network cards.

2015 was marked by the replacement of all the main network equipment, including the move from hardware load balancers to software ones (Linux + x86). The Juniper EX8216 switches, most of the EX4200s and the Extreme Summit X650-24x and X670-48x were replaced by Cisco ASR 9912 routers and Cisco Nexus 9508, Cisco Nexus 3172PQ and Cisco Nexus 3048 switches. The development of our network subsystem, of course, deserves a separate large article.

After all the work on replacing old servers and network equipment, the racks do not look as good as we would like. In the foreseeable future we will finish restoring order and publish a colorful article with photos as we enter 2016.

Start

At the end of 2008, Gazprom-Media Holding acquired the Rutube code and infrastructure. The technical team, which at that time consisted of a technical director, system administrator and technical specialist (“Computer asks to click“ Enikey ”, where is it?), Had several racks with equipment in data centers“ M10 ”,“ COMSTAR-Direct "And" Kurchatnik. "

Racks looked like this:

')

With a longing we recall the data center "M10", in which the quick-detachable sled can only be installed with the help of pliers and lightly tapping with a hammer. But the Supermicro slide, fastened to the bolts, perfectly fixed in the racks, and the racks themselves were ready to withstand the full filling of the UPS devices.

That only cost the location of racks in the COMSTAR-Direct data center when the rear door could not open completely, resting against the wall, and had to remove the door in order to crawl to the sled from the hinge side of the rack. Even some nostalgia remained for this valuable experience!

The equipment consisted of HP ProLiant DL140 G3 and HP ProLiant DL320 G5 servers, as well as Supermicro servers based on PDSMU, X7SBi motherboards. The role of switches was performed by Allied Telesis and D-Link.

By the way, we have already decommissioned and sold a part of this equipment, and some are still on sale - please contact us!

Development

Almost immediately it became clear that the current capacity is not enough for the development of the project, and it was decided to purchase several dozen Supermicro servers based on the X7DWU motherboard. Cisco Catalyst 3750 switches used the network component. Since the beginning of 2009, we installed this equipment in the Synterra data center and in the “M10”.

Storage of content began to translate into industrial data storage. The choice fell on NetApp: FAS3140 controllers with DS14 disk shelves. Subsequently, the storage system was expanded with the FAS3170 and FAS3270 controllers using more advanced DS4243 shelves.

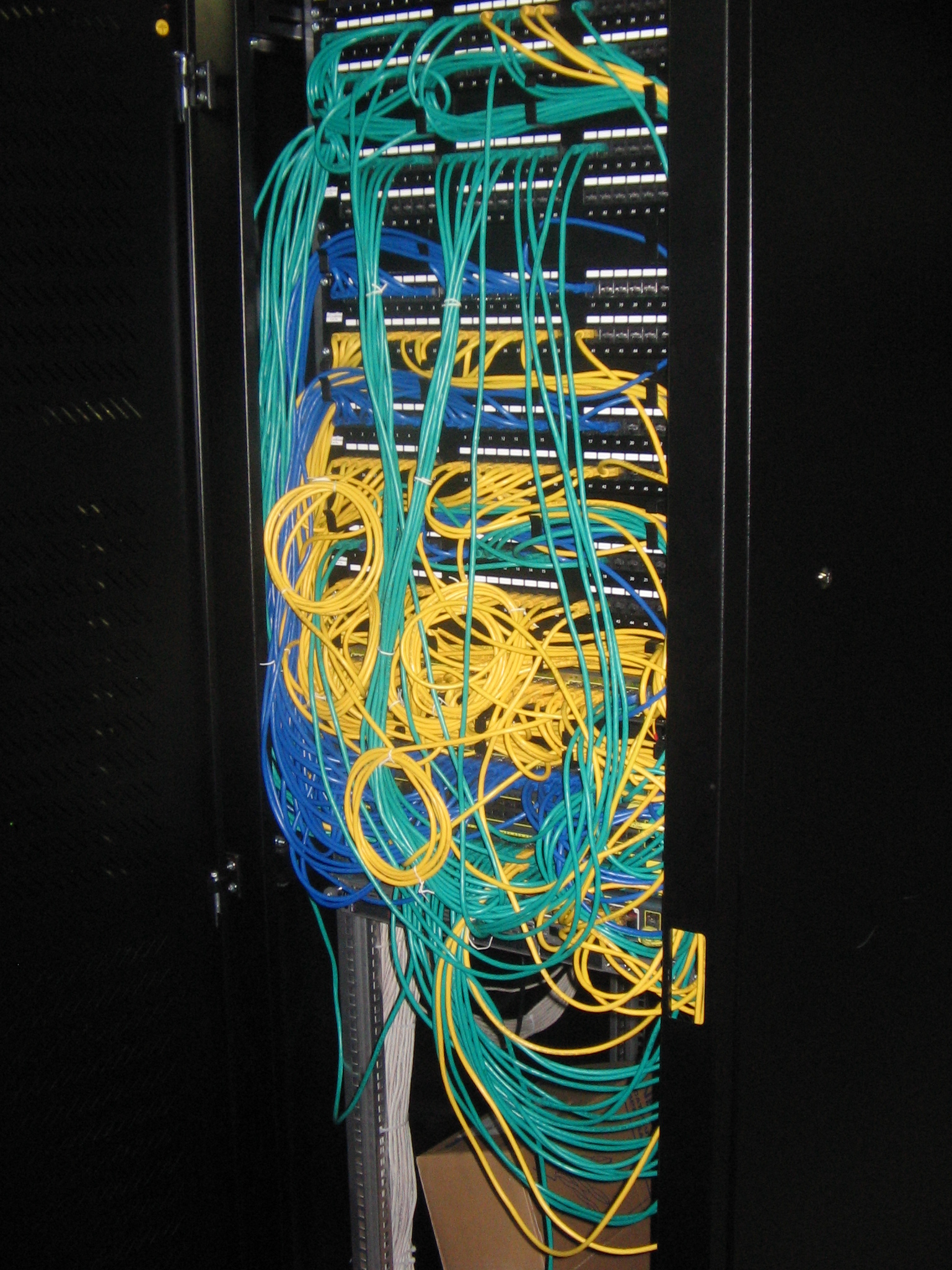

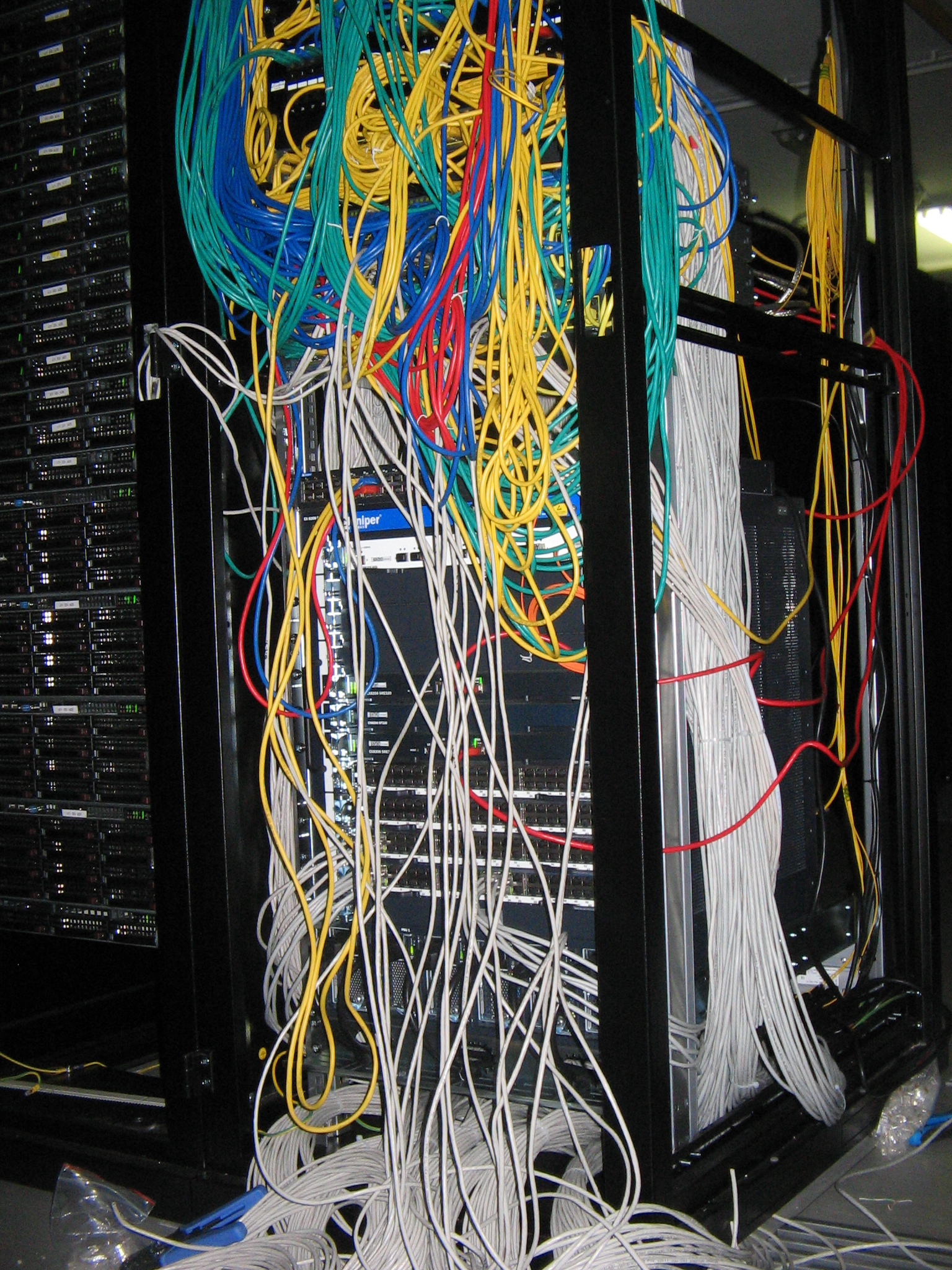

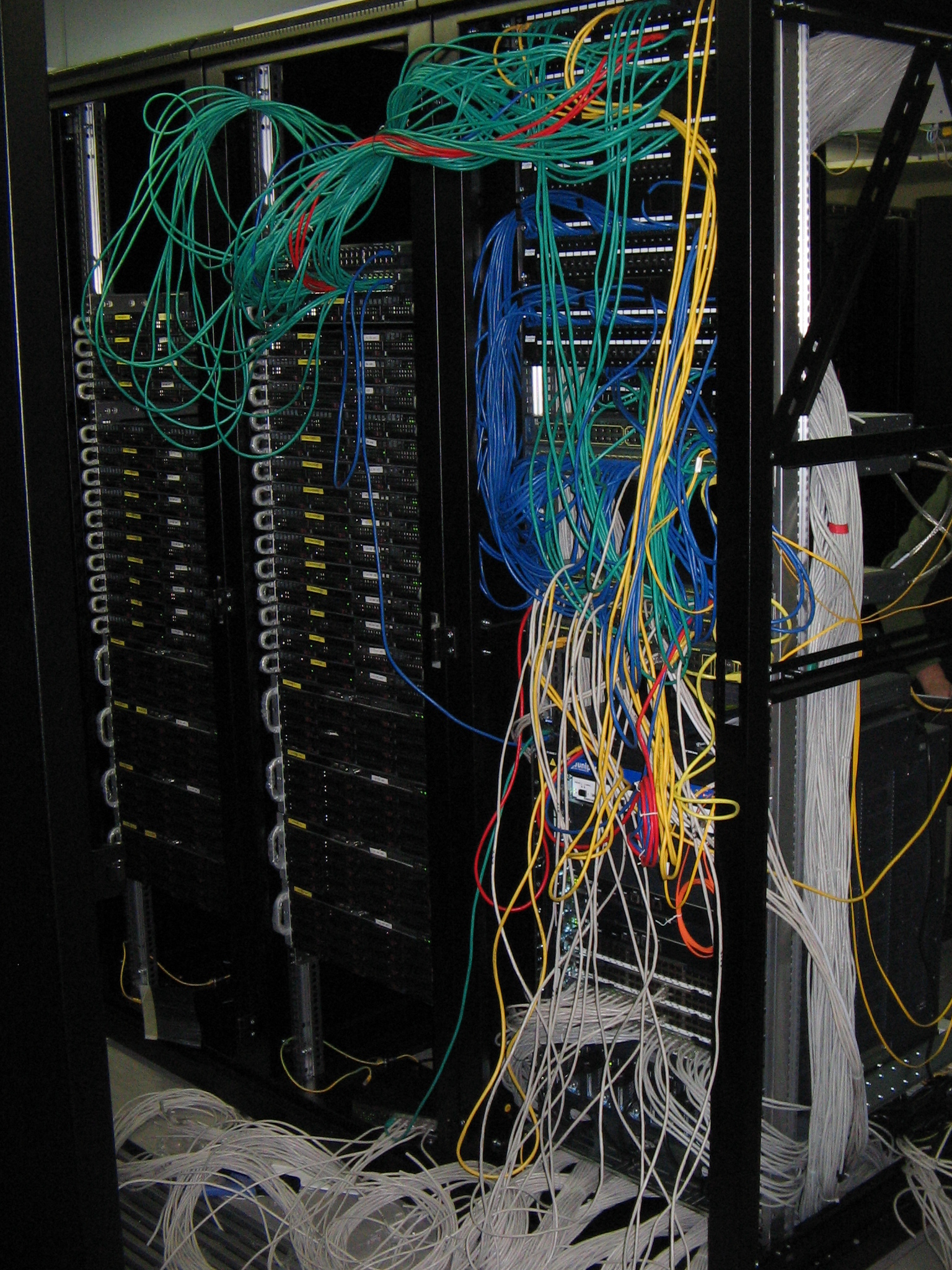

By the summer of 2009, an “unexpected” problem had arisen - since no one was specifically responsible for servicing the data centers, everyone who put iron there or performed switching did not feel like a host, but a guest. From here drew jungle of wires and randomly scattered servers.

It was decided to assign responsibility for this direction (hundreds of servers, dozens of racks and switches) to the dedicated employee. Since then, the infrastructure has grown to five hundred servers, several dozen switches and racks, the employee has become a department of three people.

At the same time, the purchase of new network equipment took place - the choice was made on Juniper (Juniper EX8208, EX4200, EX3200, EX2200 switches and MX480 switches). And in the fall of 2009, when we received new equipment, we carried out large-scale work to restore order (at the Synterra data center) and to commission new equipment with a minimum interruption of service.

We installed new network equipment, let down elements of the new SCS (at that time we were still embroidering patch panels).

Decorated the garland with temporary patch cords to minimize service interruptions during operation.

As a result, came to this order. The End-of-Row scheme is working, but has its own clear disadvantages. A few years later, having expanded the network equipment fleet, they switched to the Top-of-Rack scheme.

The final transfer to the new equipment took place on November 4 - the Day of National Unity.

At the end of 2009, we launched our site in the M9 data center. The main goal was to gain access to the hundreds of operators that are present at the Nine (even now in Moscow there is no real alternative to this institution). Here we installed the Juniper MX480 router, the Juniper EX4200, EX2200 switches and the new Dell PowerEdge R410 servers.

Juniper MX480

Juniper EX2200, EX4200

Then it seemed that the 52U racks on the “M9” were dimensionless, and now we can hardly fit them.

Previously, we took the servers not immediately to the data center, but in the office where the servers were checked and the initial setup of the servers before being sent to the data center.

A cozy spacious server room with no windows and an air-conditioning system, in which, as a bonus, there was a certain farm manager, who constantly offered to dine for the company.

Since 2010, we have been actively growing: new projects, new equipment, new racks in the data center. In mid-2011, colleagues noticed that the employee responsible for hardware and the data center does not appear in the office even on the day of the advance payment and salary (they come to the card). We missed you!

A minute of glory (I realized that I was writing more for myself than for a habr)!

But nobody was going to slow down the pace. In the new data center M77, we launched a new project (NTVPLUS.TV) and started building the second core RUTUBE.RU so that when the main data center RUTUB falls, it continues to work.

A small batch of servers Sun Fire X4170 × 64.

Juniper EX8216, EX4200, EX2200 and a bit of NetApp switches.

Another competition "manage to compress 100500 patch cords before launching the project."

With SCS completed and data center launched.

Here and NetApp FAS3170 with shelves DS4243 gradually filled with content.

In the meantime, our system administrators are finishing setting up the Sun Fire X4170 × 64.

A “main posting” completes the beauty (AKA order).

The year 2011 began with the continuation of the expansion of the second core in the M77 data center, when we received a new batch of Dell PowerEdge R410 servers and, within the framework of the new project (from the technology partner), servers on the Quanta platform.

In the network infrastructure, 10G switches appeared more and more - the first swallow was Extreme Summit X650-24x. Then there were more interesting Extreme Summit X670-48x.

That's what was lacking in childhood to build a cardboard house.

Not having time to exhale after finishing work at the data center, the M77 was relocated to the Synterra data center, where it was necessary to commission the Juniper EX8216 instead of the EX8208 (it was necessary to install more boards for connecting operators and servers).

At the same time, we started the installation of our first DWDM complex (the active version), which connects the three main data centers "M9", "Synterra" and "M77" over dark optics. Here we were helped by a domestic manufacturer - T8.

Juniper EX8216 and DWDM

In 2012, we had a department responsible for the data center and hardware (that is, instead of one employee, there were two). Before that, of course, more than one person did all the work — his network and system administrators actively helped him. Since then, the department has tried to balance between order, unification, beauty and operational work within the framework of project development tasks.

Project nowadays

A new stage of development began in 2014, when they began to change the storage system, optimize the server infrastructure, launching new caching servers, and also (in 2015) replaced all the main network equipment, since the old one did not meet current needs.

NetApp storage system faithfully served us for 5 years. During this time, we realized that the maintenance and expansion of storage systems requires expenditures that are not commensurate with the rest of the subsystems. We began the search for a more rational solution, which ended with the phased implementation of our own developed storage systems (the transition began in early 2014 and ended in autumn 2015). Now the storage system consists of 12 disk servers (Supermicro, Quanta) and software written by our developers. For us, this was a great solution, and at the moment NetApp has been removed from support and we use some of it as storage systems for various technological needs.

In early 2014, they decided to upgrade the caching system, which at that time represented a hundred servers with 4 Gigabit interfaces and a hybrid disk subsystem (SAS + SSD).

We decided to separate the servers that will give the “hot” (actively viewed) content into a separate cluster. These servers were Supermicro on an X9DRD-EF motherboard with two Intel Xeon E5-2660 v2 processors, 128 GB of RAM, 480 GB of SSD and 4 Intel X520-DA2 network cards. Experienced to establish that such a server without any problems gives 65-70 Gbit / s (the maximum was 77 Gbit / s).

In mid-2014, we replaced active DWDM with passive. This allowed us to greatly increase its resources and begin to "plant" operators connected in one data center to other sites, reducing dependence on the failure of a specific border equipment.

By the end of 2014, they launched a new cluster for “cold” content, which replaced the remaining servers with an aggregate of 4 Gbit / s. And again our choice fell on Supermicro on the X9DRD-EF motherboard, this time with two Intel Xeon E5-2620 v2 processors, 128 GB of RAM, 12 × 960 GB SSD and 2 Intel X520-DA2 network cards. Each node in this cluster is capable of holding a load of up to 35 Gbit / s.

Naturally, it is not only a matter of well-chosen hardware, but also remarkable self-written segmentation modules written by our system wonder-architect and a wonderful video balancer created by the development team. Work on finding out the limits of this platform continues - there are slots for SSD and network cards.

2015 was marked by the replacement of all major network equipment, including the transition from hardware load balancers to software (Linux + x86). Instead of Juniper EX8216 switches, most of the EX4200, Extreme Summit X650-24x and X670-48x have been taken over by Cisco ASR 9912 routers and Cisco Nexus 9508, Cisco Nexus 3172PQ and Cisco Nexus 3048 switches. Of course, the development of our network subsystem is a reason for a separate large article .

After working on replacing the old server hardware and network, the racks do not look as good as we would like. In the foreseeable future, we will finish restoring order and publish a colorful article with photos as we enter 2016.

Source: https://habr.com/ru/post/271143/
