Library of machine learning Google TensorFlow - first impressions and comparison with its own implementation

More recently, Google has made available to all its library for machine learning, called TensorFlow. For us, it turned out to be interesting by the fact that the structure includes the most modern neural network models for text processing, in particular, sequence-to-sequence learning. Since we have several projects related to this technology, we decided that this is a great opportunity to stop reinventing the wheel (probably it’s time) and quickly improve the results. Having imagined the satisfied faces of our customers, we started to work. And that's what came out of it ...

First you need to clarify that we have our own neural network library. Of course, far from being so extensive and ambitious, but intended for solving certain word processing tasks. At one time I decided to go by writing my own solution, instead of using a ready-made one. For various reasons, including cross-platform, compactness, ease of integration into the rest of the code, aesthetic reluctance to have dozens of dependencies on the generally unnecessary means of various third-party authors. The result was a kind of tool written in F #, convenient, taking about 2mb of space, and doing what was required of him. On the other hand, it is rather slow in terms of speed, not supporting computations on the GPU, truncated in functionality, and leaving doubt in its correspondence to modern realities.

The question to reinvent the wheel or use it ready, in general, is eternal. And the worm of doubt all the time gnawed that the maintenance of its own means is an unjustified thing, costly in resources and limiting in possibilities. With periodic aggravations, ideas came to use, for example, Theano or Torch, as all normal people do. But hands did not reach. And with the release of TensorFlow, another extra motivation arose.

It was in this context that I began to deal with this system.

')

Short notes on the installation process

TensorFlow is probably easier to install than a number of other modern neural network libraries. If you are lucky, the case may be limited to one line entered in the line of the terminal Linux. Yes, Windows is traditionally not supported, but such a trifle certainly will not stop the real developer.

We were not lucky, on Ubuntu 11 TensorFlow refused to be installed. But after the upgrade to 14.04 and some dances with a tambourine, something still worked. At least, it was possible to execute a code fragment from the section getting started. Therefore, you can safely write that installing TensorFlow is simple and does not cause difficulties, especially if you have a fresh distribution, and the version of python 2 is at least 2.7.9. For Linux, this is normal if a complex software package is not installed immediately (well, maybe not normal, but this is a common situation, as often happens).

Check work.

Here it is necessary to say the following. All of the following should be considered as a private example from personal experience, made in order to satisfy personal curiosity in a fairly narrow area. The author does not claim that the findings have a global value, or even some value. Arguments about the shortcomings in the results should not be attributed to the TensorFlow system itself (which basically works perfectly and most importantly quickly), but to specific models and training examples.

Chat bot

I left the introductory lessons on the recognition of numbers from the MNIST set for the future, and immediately opened a section on sequence-to-sequence learning. To make it clear what is at stake, I will describe the essence of the matter in a little more detail.

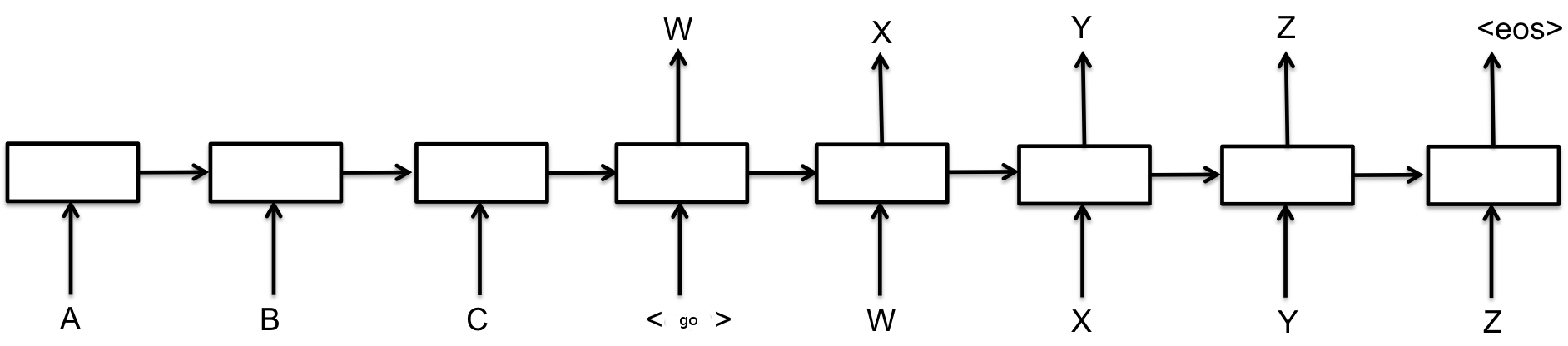

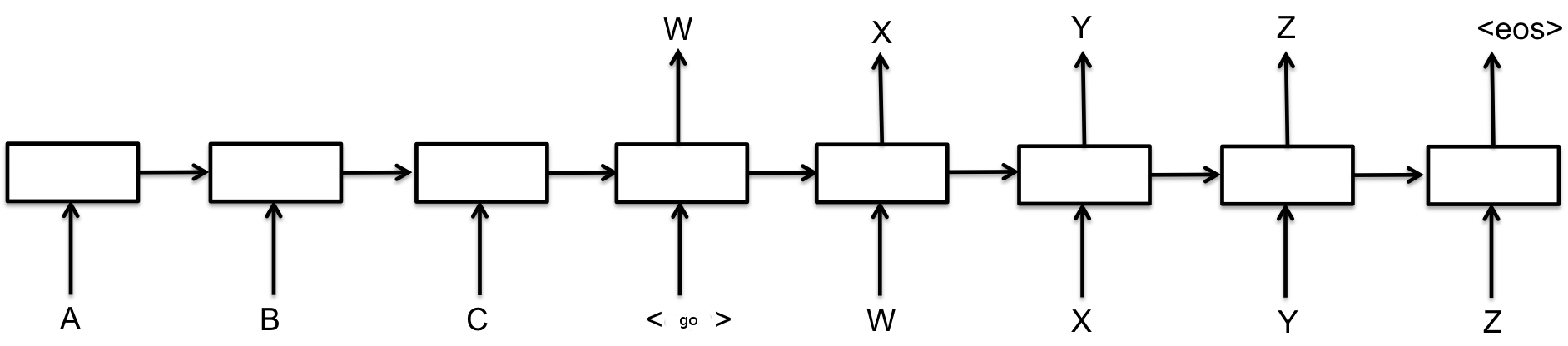

The task of sequence-to-sequence learning in general is to generate a new sequence of characters based on the input sequence of characters. In our particular case, the characters are words. The most famous application of this problem is probably machine translation - when a sentence is submitted in one language to the input of the model, and we receive a translation to another language at the output. As shown in the figures from the introductory lessons, there are two main classes of such models. The first uses a variant encoder-decoder. The original sequence is encoded using a neural network into a fixed-length representation, and then the second neural network decodes this representation into a new sequence (Fig. 1) [1]. It is as if someone were given a text, asked to remember it, and then selected and asked to write a translation into another language. The second class uses the model of selective attention, when the decoder can “peep” into the original sequence during operation (Fig. 2) [2].

Figure 1. The basic model for learning the neural model for sequence-to-sequence. On the left (before the character) the original sequence, on the right - the generated one. Image from tensorflow.org

Figure 2. The basic model for learning the neural model for sequence-to-sequence. Image from tensorflow.org

Chat bot is a special case of the task of learning sequence-to-sequence (question at the entrance, the answer at the exit). Earlier we wrote about our implementation of the chat bot in this way . Somewhat later, an article by Google employees [3] appeared on the same topic, but using a more advanced (as we thought) model, similar to that shown in Fig. one.

The model from Google uses LSTM cells, while I, while designing a chat bot, used a conventional recurrent network, screwing only one modification, for better performance. The dialogs shown in article [3] look impressive and more interesting than I was able to get (In addition, it is said that a bot trained in user support dialogs could provide intelligent help). But Google's chat bot is trained on a much larger collection of data than my old sample.

Modifying the standard example from the TensorFlow kit intended for machine translation, I downloaded data from a collection of dialogs used to train my chat bot (there are only 3000 examples, in contrast to the hundreds of thousands in which the Google chat bot studied). in the lesson from Google, it is argued that the example implements a model from [2], plus selective softmax [4], that is, almost all the most recent results from this field are applied.

My chatbot, as described earlier, uses a convolution network as an encoder (one layer 16 with 2 and 3 word filters + a maximum merge layer), and the decoder uses a simple recurrent network of Ellman. Neither one nor the other, according to other researchers, did well in the tasks of sequence-to-sequence learning. Therefore, one modification has been applied to the system, which I invented myself more than a year ago for another task (generating reviews). Instead of one convolutional network, an encoder, two convolutional networks are used — one to encode the source text and the other to encode the new, just generated. The outputs of the last layers of the union are connected in pairs in the next layer (i.e., one neuron receives one input from the neuron of the first network and one input from the neuron of the second). The idea was that when something is generated that corresponds to the input signal, this signal will be suppressed at the expense of the second network, and the system will continue to generate the rest of the text. As far as I know, this solution is not described anywhere, and it was not exactly described at the time of implementation. It worked as it seemed to me badly (although the chat bot was getting better than with one coder), and I abandoned it when I saw an article about the mechanism of selective attention, so I decided that the solution used there was more “cool” and there was nothing to invent any nonsense.

Here are the results:

Figure 3. Dialog with a chat bot using the seq2seq.embedding_attention_seq2seq model from the TensorFlow suite

Conversations with chat bots, with an approximate translation:

Both models work, but, alas, it was not possible to get a great improvement. Moreover, the dialogue on the right looks like, in my subjective view, the dialogue on the right looks even more interesting and more correct. Most likely, it's about the volume of data. Model from fig. 1. It has considerable representative power, but it needs a lot of data to start producing meaningful results (the situation we discussed in the previous article). My chat bot model is probably not as good, but it can produce meaningful results in the face of data shortages. That allows, for example, to create models of communication of different people in limited selections of dialogues. For example, if from my set of 3000 pairs of phrases I take the phrases of the answers of only one of the interlocutors, the following is obtained:

Figure 4.

I (again, subjectively) have a feeling of more positive and friendly communication. And you? From the model from the TensorFlow kit I was not able to achieve the best dialogue shown in the table, although I checked only five different configurations, perhaps a person with extensive experience with it can do better.

Reconstruction of phrases from a set of words

Reconstruction of phrases from a set of words is a synthetic task that I use here instead of a practical task that one of the customers has set for us. The customer was against the publication of both the task itself and the examples with its own data, so for this article I came up with another task similar in form.

As phrases, I used user queries to search engines, because they are a good source of short meaningful phrases and besides, they were handy in sufficient quantity. The essence of the problem is as follows. Suppose we have a request “To make a diploma to order” and its spoiled version “Diploma to make an order”. It is necessary to make a sensible request from the corrupted version again, while retaining the meaning of the original. That is, “make windows to order” will be considered an incorrect result. Corrupted versions were generated automatically by rearranging words, changing gender, number, case, and deleting all words shorter than four letters long. A total of 120,000 training examples were produced in this way, of which 1000 were set aside for testing. The task seems simpler than the problem of machine translation, but at the same time it has something in common with it.

To solve the original problem, we had to develop a special model based on the idea described above in the chat bot section. Since the quality for the needs of the customer was insufficient, I also added a tool for working with very large dictionaries, somewhat reminiscent of selective softmax. By the way, I learned about selective softmax for the first time four days ago, from a lesson on TensorFlow. Before that, I reviewed the article about him, as it turned out, fortunately, for the results ... but about the results a little later. The model is also equipped with a means for controlling the degree of “fancy” of a neural network and a tool, not that accounting for morphology, but rather a means of circumventing the problem of accounting for morphology, in which each word form is also represented by a separate vector, as in systems without morphology, but not there are related problems. However, this solution lacks the normal mechanism of selective attention, has a primitive mechanism for presenting the original sequence and does not use LSTM or GRU modules. Due to this, the speed of its work in my implementation is quite adequate.

So the results:

Figure 5.

This is what the model from the TensorFlow kit did. The generated search queries have something to do with the original keywords, and, in general, are made according to the rules of the Russian language. And with the meaning of trouble. One "nanny for cutting the roof" is worth something. On the other hand, what fascinates me about these constructions is the model's understanding of the principles of language, and the creative approach. For example, the “hematite monitor” device sounds plausible and somehow even medically, if you do not know that hematite is an “iron ore mineral Fe2O3” and there is no apparatus for a hematite monitor. But for practical purposes this is not suitable. Of the 100 tested test examples, there was not one correct.

My model has created the following options:

Figure 6.

Here, the original is the corrupted version, the generated is the generated one, the human is the original search query. Of the 100 proven examples, the correct 72%. Improvements from the model from the TensorFlow kit did not work out.

Work speed and breadth of functionality

By these parameters, the package from Google certainly surpasses my library by an order of magnitude. It also has convolutional networks for image analysis with all modern methods, LSTM and GRU, automatic differentiation, and in general the ability to easily create all sorts of models, and not just neural networks. Everything is done quite intuitively, and well documented. It can be recommended, especially for beginners involved in machine learning, of course, if you have Linux or MacOs (you may compile the source code on Windows using cygwin or mingw or something else, but this is not officially supported).

In terms of speed, I haven’t done accurate measurements yet, but the feeling is that TensorFlow models run on the CPU two or three times faster than my implementations, with approximately the same number of parameters (one would expect a greater difference in performance). And consume significantly less memory. And the GPU version is faster than the CPU implementation ten times (again, this is a general impression, so far without accurate measurements). All this is natural, because Google has a lot of resources and programmers engaged in code optimization (on the about TensorFlow page in the list of 40 developers of names), and I have no such opportunity - it works and it’s okay.

On the other hand, my library takes up little space, works on Windows and under Linux using mono. In certain situations this can be a plus.

As for the results, of course, they must be interpreted with great care. They relate to certain particular cases, and besides these are the results of specific models within an entire library, the functionality of which is much wider. Therefore, if you transfer my models to TensorFlow, the results should be the same, and everything will be executed much faster. In this sense, knowledge of the correct neural network architecture is more important than knowledge of a specific technology stack.

The truth is one philosophical question. If I had access to TensorFlow initially or worked with a similar off-the-shelf tool, would you be able to make the same models and get the same results? Does system programming from scratch help to understand deeper than the basis of neural networks or is it a waste of time? Are performance constraints an incentive to develop new models or annoying hindrances?

Conclusion

Conclusions I suggest everyone to make their own. I have not yet received any obvious answers to my questions from these experiments, the problem of the invention / non-invention of bicycles remains, but the information seems to be quite instructive.

Bibliography

1. Sutskever, Ilya, Oriol Vinyals, and Quoc VV Le. “Sequence to sequence learning with neural networks.” A dvances in neural information processing systems . 2014

2. Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation and translation." ArXiv preprint arXiv: 1409.0473 (2014).

3. Vinyals, Oriol, and Quoc Le. “A neural conversational model.” ArXiv preprint arXiv: 1506.05869 (2015).

4. Jean, Sébastien, et al. “On using very large vocal for neural machine translation.” ArXiv preprint arXiv: 1412.2007 (2014).

PS

All trademarks mentioned in the article are the property of their respective owners.

First you need to clarify that we have our own neural network library. Of course, far from being so extensive and ambitious, but intended for solving certain word processing tasks. At one time I decided to go by writing my own solution, instead of using a ready-made one. For various reasons, including cross-platform, compactness, ease of integration into the rest of the code, aesthetic reluctance to have dozens of dependencies on the generally unnecessary means of various third-party authors. The result was a kind of tool written in F #, convenient, taking about 2mb of space, and doing what was required of him. On the other hand, it is rather slow in terms of speed, not supporting computations on the GPU, truncated in functionality, and leaving doubt in its correspondence to modern realities.

The question to reinvent the wheel or use it ready, in general, is eternal. And the worm of doubt all the time gnawed that the maintenance of its own means is an unjustified thing, costly in resources and limiting in possibilities. With periodic aggravations, ideas came to use, for example, Theano or Torch, as all normal people do. But hands did not reach. And with the release of TensorFlow, another extra motivation arose.

It was in this context that I began to deal with this system.

')

Short notes on the installation process

TensorFlow is probably easier to install than a number of other modern neural network libraries. If you are lucky, the case may be limited to one line entered in the line of the terminal Linux. Yes, Windows is traditionally not supported, but such a trifle certainly will not stop the real developer.

We were not lucky, on Ubuntu 11 TensorFlow refused to be installed. But after the upgrade to 14.04 and some dances with a tambourine, something still worked. At least, it was possible to execute a code fragment from the section getting started. Therefore, you can safely write that installing TensorFlow is simple and does not cause difficulties, especially if you have a fresh distribution, and the version of python 2 is at least 2.7.9. For Linux, this is normal if a complex software package is not installed immediately (well, maybe not normal, but this is a common situation, as often happens).

Check work.

Here it is necessary to say the following. All of the following should be considered as a private example from personal experience, made in order to satisfy personal curiosity in a fairly narrow area. The author does not claim that the findings have a global value, or even some value. Arguments about the shortcomings in the results should not be attributed to the TensorFlow system itself (which basically works perfectly and most importantly quickly), but to specific models and training examples.

Chat bot

I left the introductory lessons on the recognition of numbers from the MNIST set for the future, and immediately opened a section on sequence-to-sequence learning. To make it clear what is at stake, I will describe the essence of the matter in a little more detail.

The task of sequence-to-sequence learning in general is to generate a new sequence of characters based on the input sequence of characters. In our particular case, the characters are words. The most famous application of this problem is probably machine translation - when a sentence is submitted in one language to the input of the model, and we receive a translation to another language at the output. As shown in the figures from the introductory lessons, there are two main classes of such models. The first uses a variant encoder-decoder. The original sequence is encoded using a neural network into a fixed-length representation, and then the second neural network decodes this representation into a new sequence (Fig. 1) [1]. It is as if someone were given a text, asked to remember it, and then selected and asked to write a translation into another language. The second class uses the model of selective attention, when the decoder can “peep” into the original sequence during operation (Fig. 2) [2].

Figure 1. The basic model for learning the neural model for sequence-to-sequence. On the left (before the character) the original sequence, on the right - the generated one. Image from tensorflow.org

Figure 2. The basic model for learning the neural model for sequence-to-sequence. Image from tensorflow.org

Chat bot is a special case of the task of learning sequence-to-sequence (question at the entrance, the answer at the exit). Earlier we wrote about our implementation of the chat bot in this way . Somewhat later, an article by Google employees [3] appeared on the same topic, but using a more advanced (as we thought) model, similar to that shown in Fig. one.

The model from Google uses LSTM cells, while I, while designing a chat bot, used a conventional recurrent network, screwing only one modification, for better performance. The dialogs shown in article [3] look impressive and more interesting than I was able to get (In addition, it is said that a bot trained in user support dialogs could provide intelligent help). But Google's chat bot is trained on a much larger collection of data than my old sample.

Modifying the standard example from the TensorFlow kit intended for machine translation, I downloaded data from a collection of dialogs used to train my chat bot (there are only 3000 examples, in contrast to the hundreds of thousands in which the Google chat bot studied). in the lesson from Google, it is argued that the example implements a model from [2], plus selective softmax [4], that is, almost all the most recent results from this field are applied.

My chatbot, as described earlier, uses a convolution network as an encoder (one layer 16 with 2 and 3 word filters + a maximum merge layer), and the decoder uses a simple recurrent network of Ellman. Neither one nor the other, according to other researchers, did well in the tasks of sequence-to-sequence learning. Therefore, one modification has been applied to the system, which I invented myself more than a year ago for another task (generating reviews). Instead of one convolutional network, an encoder, two convolutional networks are used — one to encode the source text and the other to encode the new, just generated. The outputs of the last layers of the union are connected in pairs in the next layer (i.e., one neuron receives one input from the neuron of the first network and one input from the neuron of the second). The idea was that when something is generated that corresponds to the input signal, this signal will be suppressed at the expense of the second network, and the system will continue to generate the rest of the text. As far as I know, this solution is not described anywhere, and it was not exactly described at the time of implementation. It worked as it seemed to me badly (although the chat bot was getting better than with one coder), and I abandoned it when I saw an article about the mechanism of selective attention, so I decided that the solution used there was more “cool” and there was nothing to invent any nonsense.

Here are the results:

Figure 3. Dialog with a chat bot using the seq2seq.embedding_attention_seq2seq model from the TensorFlow suite

Conversations with chat bots, with an approximate translation:

| Model from the TensorFlow kit | My model |

| D: Hello (hello) K: hey there (hello) W: how are you? (how are you) K: i'm fine (excellent) D: where are you? (where are you) K: i'm at fine (I'm in fine) D: Tell me something (say something) K: yes (yes) R: ok, see you soon (see you soon) K: see you nice clean are nice (I'll see you well clean it's good) H: bye (for now) K: bye (for now) | D: Hello (hello) K: hi (hi) W: how are you? (how are you) K: i feel strange (I feel strange) D: where are you? (where are you) K: I'm at home now (I'm at home) D: Tell me something (say something) To: world (world) R: ok, see you soon (see you soon) K: I'm not sure ... (not sure) H: bye (for now) K: ok |

Both models work, but, alas, it was not possible to get a great improvement. Moreover, the dialogue on the right looks like, in my subjective view, the dialogue on the right looks even more interesting and more correct. Most likely, it's about the volume of data. Model from fig. 1. It has considerable representative power, but it needs a lot of data to start producing meaningful results (the situation we discussed in the previous article). My chat bot model is probably not as good, but it can produce meaningful results in the face of data shortages. That allows, for example, to create models of communication of different people in limited selections of dialogues. For example, if from my set of 3000 pairs of phrases I take the phrases of the answers of only one of the interlocutors, the following is obtained:

Figure 4.

I (again, subjectively) have a feeling of more positive and friendly communication. And you? From the model from the TensorFlow kit I was not able to achieve the best dialogue shown in the table, although I checked only five different configurations, perhaps a person with extensive experience with it can do better.

Reconstruction of phrases from a set of words

Reconstruction of phrases from a set of words is a synthetic task that I use here instead of a practical task that one of the customers has set for us. The customer was against the publication of both the task itself and the examples with its own data, so for this article I came up with another task similar in form.

As phrases, I used user queries to search engines, because they are a good source of short meaningful phrases and besides, they were handy in sufficient quantity. The essence of the problem is as follows. Suppose we have a request “To make a diploma to order” and its spoiled version “Diploma to make an order”. It is necessary to make a sensible request from the corrupted version again, while retaining the meaning of the original. That is, “make windows to order” will be considered an incorrect result. Corrupted versions were generated automatically by rearranging words, changing gender, number, case, and deleting all words shorter than four letters long. A total of 120,000 training examples were produced in this way, of which 1000 were set aside for testing. The task seems simpler than the problem of machine translation, but at the same time it has something in common with it.

To solve the original problem, we had to develop a special model based on the idea described above in the chat bot section. Since the quality for the needs of the customer was insufficient, I also added a tool for working with very large dictionaries, somewhat reminiscent of selective softmax. By the way, I learned about selective softmax for the first time four days ago, from a lesson on TensorFlow. Before that, I reviewed the article about him, as it turned out, fortunately, for the results ... but about the results a little later. The model is also equipped with a means for controlling the degree of “fancy” of a neural network and a tool, not that accounting for morphology, but rather a means of circumventing the problem of accounting for morphology, in which each word form is also represented by a separate vector, as in systems without morphology, but not there are related problems. However, this solution lacks the normal mechanism of selective attention, has a primitive mechanism for presenting the original sequence and does not use LSTM or GRU modules. Due to this, the speed of its work in my implementation is quite adequate.

So the results:

Figure 5.

This is what the model from the TensorFlow kit did. The generated search queries have something to do with the original keywords, and, in general, are made according to the rules of the Russian language. And with the meaning of trouble. One "nanny for cutting the roof" is worth something. On the other hand, what fascinates me about these constructions is the model's understanding of the principles of language, and the creative approach. For example, the “hematite monitor” device sounds plausible and somehow even medically, if you do not know that hematite is an “iron ore mineral Fe2O3” and there is no apparatus for a hematite monitor. But for practical purposes this is not suitable. Of the 100 tested test examples, there was not one correct.

My model has created the following options:

Figure 6.

Here, the original is the corrupted version, the generated is the generated one, the human is the original search query. Of the 100 proven examples, the correct 72%. Improvements from the model from the TensorFlow kit did not work out.

Work speed and breadth of functionality

By these parameters, the package from Google certainly surpasses my library by an order of magnitude. It also has convolutional networks for image analysis with all modern methods, LSTM and GRU, automatic differentiation, and in general the ability to easily create all sorts of models, and not just neural networks. Everything is done quite intuitively, and well documented. It can be recommended, especially for beginners involved in machine learning, of course, if you have Linux or MacOs (you may compile the source code on Windows using cygwin or mingw or something else, but this is not officially supported).

In terms of speed, I haven’t done accurate measurements yet, but the feeling is that TensorFlow models run on the CPU two or three times faster than my implementations, with approximately the same number of parameters (one would expect a greater difference in performance). And consume significantly less memory. And the GPU version is faster than the CPU implementation ten times (again, this is a general impression, so far without accurate measurements). All this is natural, because Google has a lot of resources and programmers engaged in code optimization (on the about TensorFlow page in the list of 40 developers of names), and I have no such opportunity - it works and it’s okay.

On the other hand, my library takes up little space, works on Windows and under Linux using mono. In certain situations this can be a plus.

As for the results, of course, they must be interpreted with great care. They relate to certain particular cases, and besides these are the results of specific models within an entire library, the functionality of which is much wider. Therefore, if you transfer my models to TensorFlow, the results should be the same, and everything will be executed much faster. In this sense, knowledge of the correct neural network architecture is more important than knowledge of a specific technology stack.

The truth is one philosophical question. If I had access to TensorFlow initially or worked with a similar off-the-shelf tool, would you be able to make the same models and get the same results? Does system programming from scratch help to understand deeper than the basis of neural networks or is it a waste of time? Are performance constraints an incentive to develop new models or annoying hindrances?

Conclusion

Conclusions I suggest everyone to make their own. I have not yet received any obvious answers to my questions from these experiments, the problem of the invention / non-invention of bicycles remains, but the information seems to be quite instructive.

Bibliography

1. Sutskever, Ilya, Oriol Vinyals, and Quoc VV Le. “Sequence to sequence learning with neural networks.” A dvances in neural information processing systems . 2014

2. Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation and translation." ArXiv preprint arXiv: 1409.0473 (2014).

3. Vinyals, Oriol, and Quoc Le. “A neural conversational model.” ArXiv preprint arXiv: 1506.05869 (2015).

4. Jean, Sébastien, et al. “On using very large vocal for neural machine translation.” ArXiv preprint arXiv: 1412.2007 (2014).

PS

All trademarks mentioned in the article are the property of their respective owners.

Source: https://habr.com/ru/post/271053/

All Articles