Website Testing Automation: Pytest, Allure, Selenium + Some Secret Ingredients

Acronis has had a new logo for more than a year now; the story of how it was created can be read here. As part of the rebranding, the www.acronis.com site was also updated. We chose the Drupal CMS to build the site. The site turned out attractive on the outside and complicated on the inside: 28 locales (!), responsive web design, carousels, wizards, pop-up windows... In the end we had to use many different Drupal modules, customizing some of them and writing our own. In such a dynamic, complex system, mistakes are inevitable. Preventing and detecting them is the job of the team that monitors and ensures the quality of the website. In this article we want to share our successful recipe for automating the testing of our site.

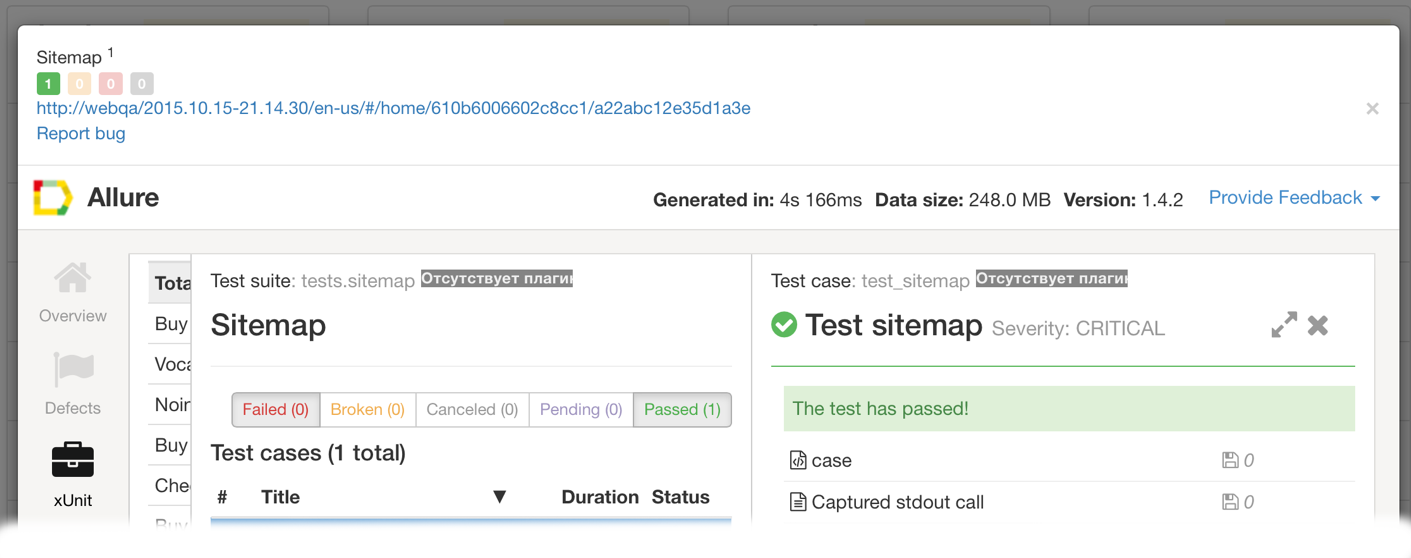

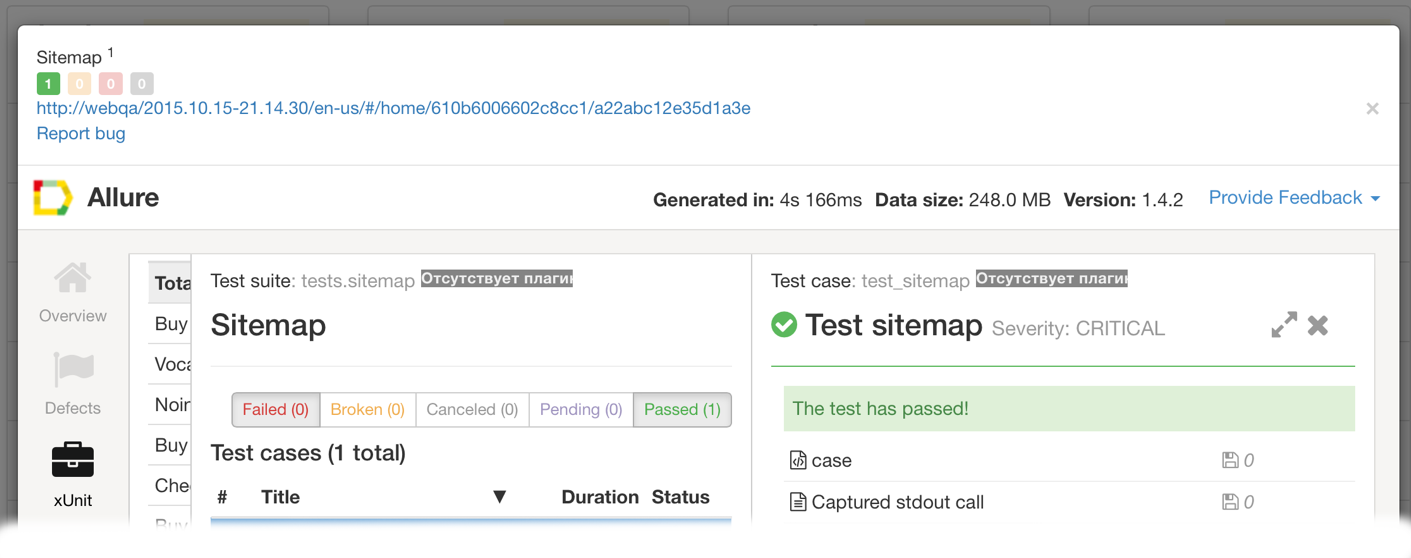

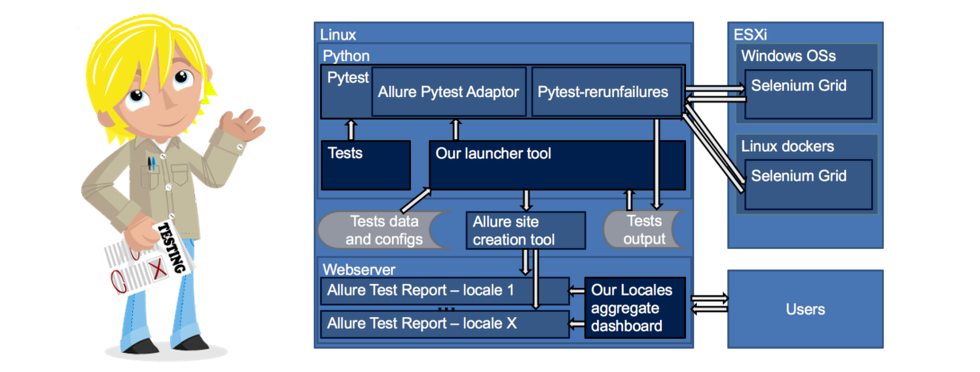

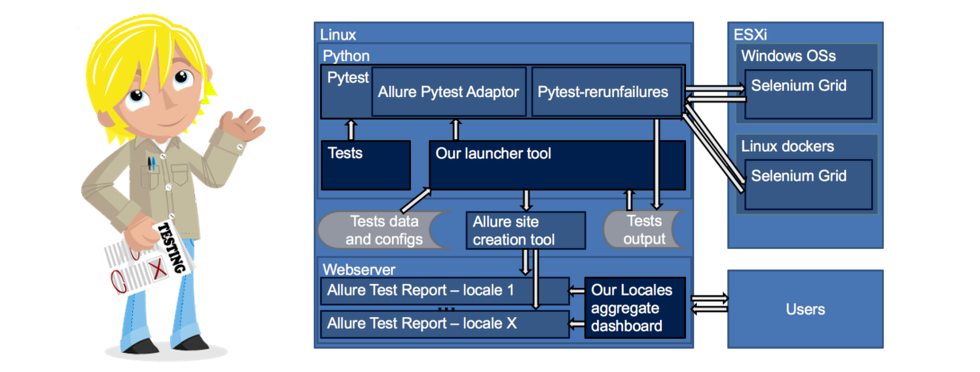

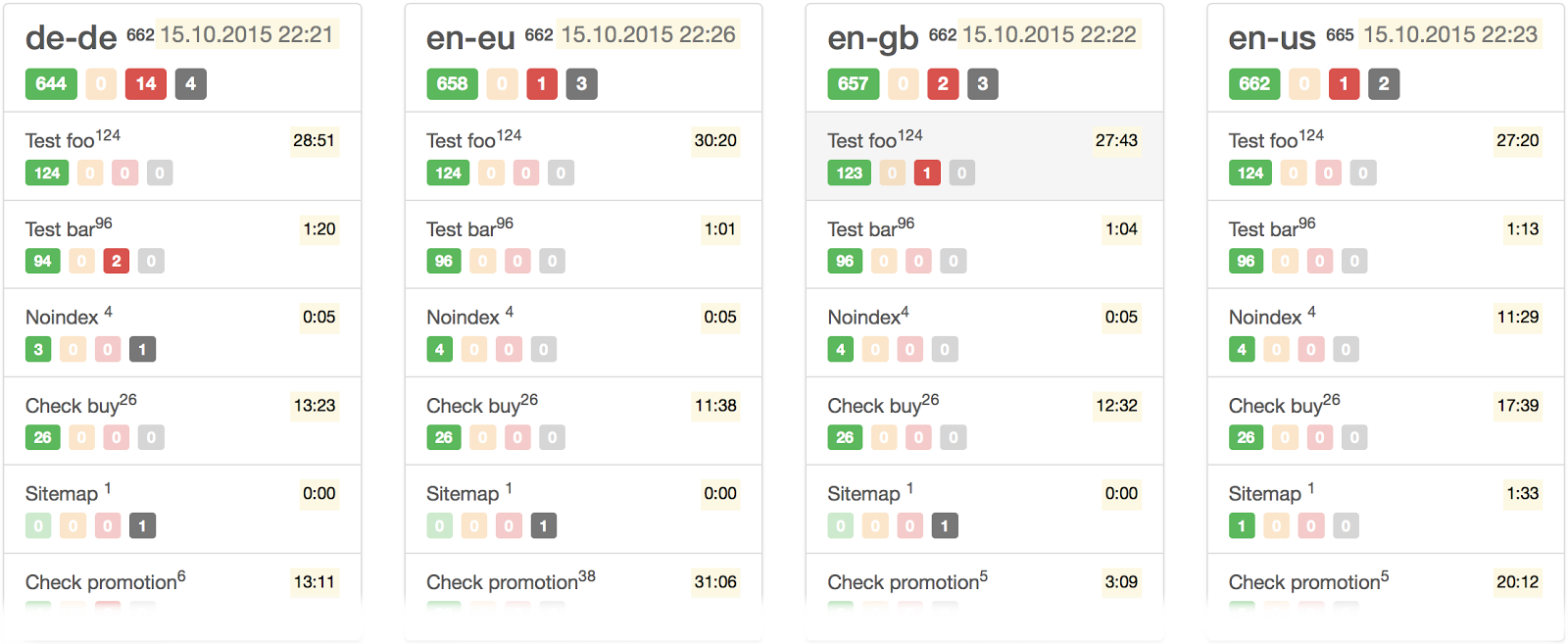

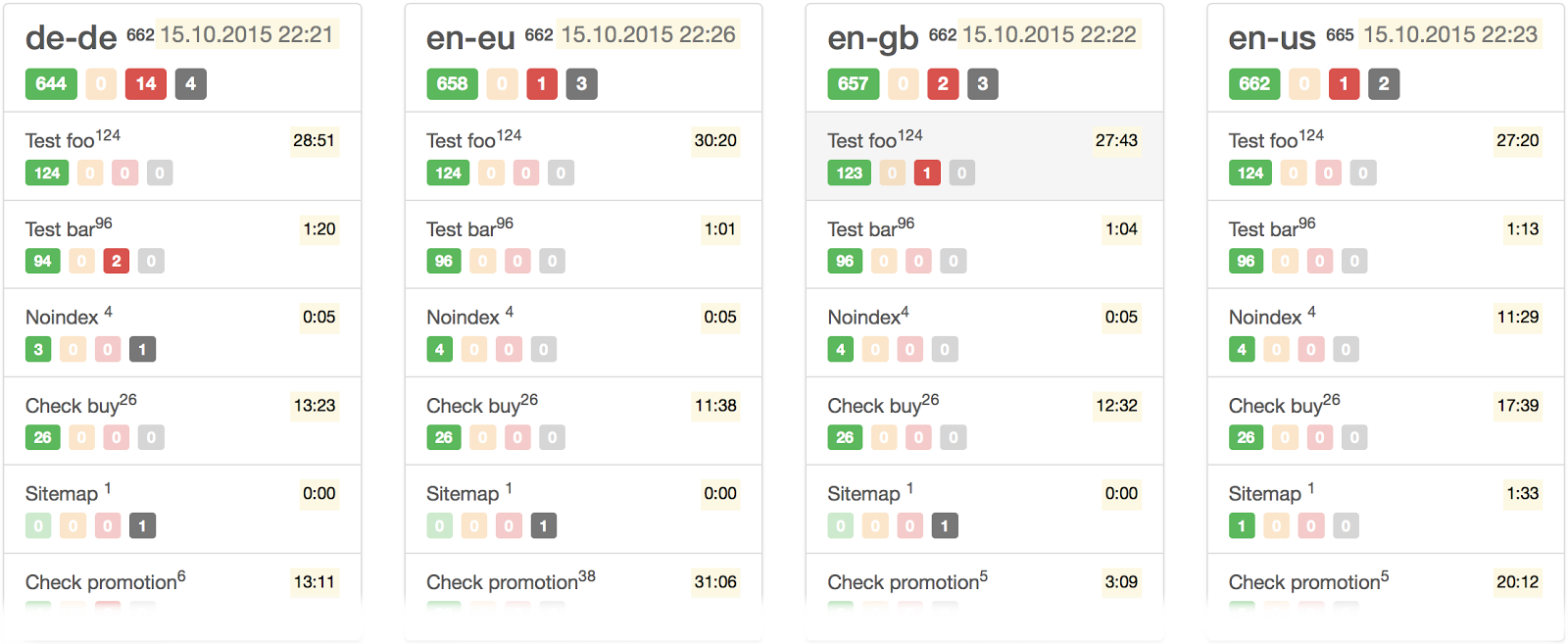

Why and what for?

Unfortunately, in the early days of the site, the quality team had little more than manual testing at its disposal. With short release cycles, regression tests had to be run often, and the large number of locales made things worse, since every check was multiplied by the number of locales. Manual testing was expensive and the benefits of automating it were obvious, so it was time to act.

What do we want?

So we started drawing up requirements for the automated testing system. We noticed that precious time was being wasted on processing fairly simple defects. We wanted to minimize the number of defects filed in the bug tracker without losing sight of such errors, so we decided to hand the work with these defects over to the autotest reporting system. The reports had to be convenient, clear, informative and pleasant to use, so that any member of our friendly web team could look at a report, quickly understand what was wrong, and fix the defect. Everyone keeps an eye on the reports: developers fix the site, testers fix the tests. The result is a "green light" and a GO for production.

For historical reasons there was one more requirement: Python as the development language. Skipping the details of the remaining wishes, we ended up with the following list of requirements for the future automated testing system:

- Convenient, understandable, informative reporting;

- Parallel test run;

- Test parameterization;

- Inheritance of test data by locale;

- Testing in the environment closest to the user;

- Minimization of false positives;

- Convenient maintenance and delivery of the test environment;

- A minimum of in-house development and customization;

- Open source;

- Python.

The agony of choice

First we looked at Robot Framework. It is a popular solution that has already been mentioned in Acronis publications. We installed it and started experimenting. It certainly has many advantages, but they are not the subject here. The main drawback for us was how RF handles parameterized tests and how its standard reports present their results. The vast majority of the tests we had in mind are parameterized, and in the RF report, if one case of a parameterized test fails, the entire test is marked as failed. In addition, most of our team found RF's out-of-the-box reporting inconvenient: analyzing the results of a test run took a lot of time. And the supposedly "simple" keyword-based way of writing tests turned out, for our tests, to be a rather difficult task. Around the same time, thanks in part to Habr, we noticed Allure from Yandex.

We took a look and everyone liked it; everyone wanted to work with this system, which, it should be said, matters a lot. We studied the tool and made sure that the project is actively developed and has many ready-made adapters for popular test frameworks, including ones for Python. Unfortunately (or perhaps fortunately), it turned out that for Python there is an adapter only for the pytest framework. We looked, read, tried, and found it to be a great tool. Pytest can do a lot, is easy to use, can be extended with ready-made and custom plugins, has a large online community, and its test parameterization is exactly what we need! We ran it, wrote a couple of trial tests, and everything worked. Next came running the tests in parallel, where the choice is small: only pytest-xdist. We installed it, launched it, and ...

A fly in the ointment

It turned out that pytest-xdist conflicts with the Allure Pytest Adapter. We looked into it: the problem is known, has been discussed for a long time, and remains unsolved. We also could not find a ready-made solution for inheriting test data by locale.

Secret ingredients

To get around these problems, we decided to write a tool (a wrapper) in Python. The wrapper prepares test data with inheritance taken into account, passes it to the tests, passes test data to the test environment (the browser, for example), and runs the tests in the specified number of parallel threads. When the tests finish, it merges the reports produced by the different threads into one and publishes the final data on the website.

We implemented parallelization quite simply, as parallel command-line invocations, one per test. With this approach we had to implement passing test data to a test ourselves; thanks to pytest fixtures, that takes only a few lines. Important: you also need to comment out a couple of lines in allure-python/allure/common.py that are responsible for deleting "old" files from the adapter's Allure report directory.
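The "one command-line call per test" idea can be sketched roughly as follows. This is only an illustration and not the wrapper itself: the function names `build_command` and `run_all` are hypothetical, and the only adapter-specific detail assumed here is the `--alluredir` option that the Allure pytest adapter uses to choose its report directory.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def build_command(test_id, report_dir):
    """Build the command line that runs one test in its own pytest process."""
    return [sys.executable, "-m", "pytest", test_id, "--alluredir", report_dir]


def run_all(commands, workers=4, runner=subprocess.call):
    """Run every command as a separate process, at most `workers` at a time.

    Each worker thread only waits for its child process, so threads are
    enough here. Returns a dict mapping each command (as a tuple) to the
    exit code of its process.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        exit_codes = list(pool.map(runner, commands))
    return {tuple(cmd): code for cmd, code in zip(commands, exit_codes)}
```

A caller would build one command per test case, for example `build_command("tests/test_links.py::test_link", "reports/en-us")` (a made-up test id and directory), and pass the whole list to `run_all`. A non-zero exit code marks a test process that failed.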

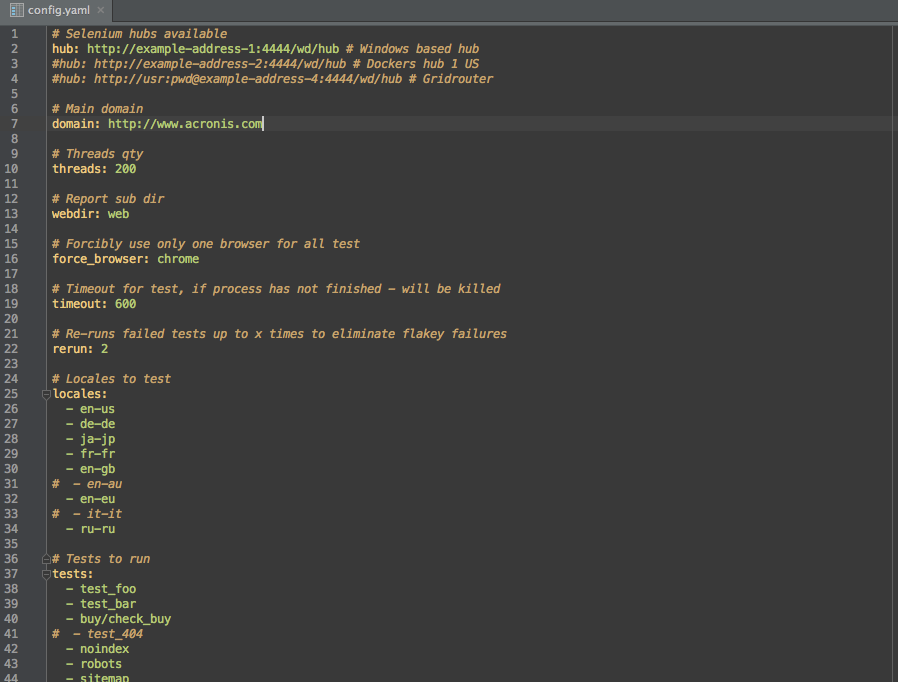

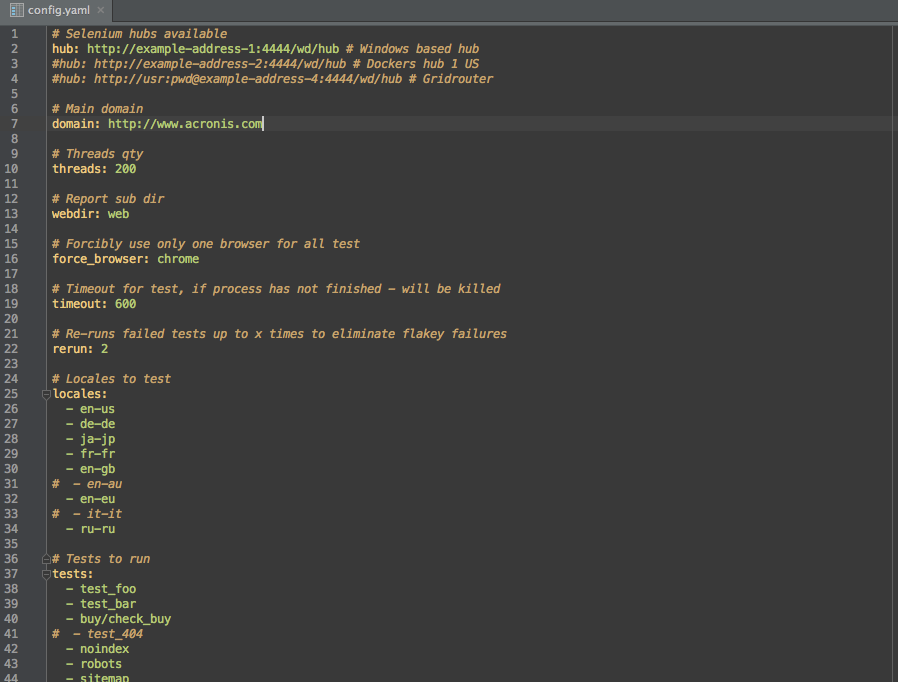

We decided to store test data for parameterized tests in TSV files and static test data in YAML. Which test and which locale a piece of test data belongs to is determined by the names and hierarchy of the directories in which the data is located. Data is inherited from the base locale "en-us" by deleting or adding locale-specific data. The test data can also contain the keywords 'skip' and 'comment' to cancel the run of a specific test case and state the reason. If, for example, the same data has to be used for all site localizations, inheritance happens automatically without any additional parameters. We also implemented inheritance for test configurations (environment, timeouts, etc.), based not on localization but on test configs inheriting from the global configuration file.
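Inheritance from the base locale could look roughly like the sketch below. The column layout (`case`, `url`, `skip`, `comment`), the sample rows, and the function names are assumptions made for illustration; the article does not show the real file format, and reading the files from the directory hierarchy is left out.

```python
import csv
import io


def read_tsv(text):
    """Parse TSV text into a dict of rows keyed by the 'case' column."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    return {row["case"]: row for row in reader}


def inherit(base_rows, locale_rows):
    """Overlay locale-specific rows on the rows of the base locale.

    A locale row with an existing key replaces the base row; a new key adds
    a case. Rows with a non-empty 'skip' column are excluded from the run
    and returned separately together with their 'comment'.
    """
    merged = dict(base_rows)
    merged.update(locale_rows)
    to_run, skipped = [], {}
    for key, row in merged.items():
        if row.get("skip"):
            skipped[key] = row.get("comment", "")
        else:
            to_run.append(row)
    return to_run, skipped


def effective_config(global_cfg, test_cfg):
    """Test-level settings override the global configuration."""
    return {**global_cfg, **test_cfg}


# Hypothetical sample data: the base locale and one localized override.
EN_US = "case\turl\tskip\tcomment\nhome\t/en-us/\t\t\nbuy\t/en-us/buy/\t\t\n"
DE_DE = (
    "case\turl\tskip\tcomment\n"
    "buy\t/de-de/buy/\tyes\tstore not localized yet\n"
    "impressum\t/de-de/impressum/\t\t\n"
)

to_run, skipped = inherit(read_tsv(EN_US), read_tsv(DE_DE))
```

With this sample data the German locale inherits the `home` case unchanged, adds `impressum`, and skips `buy` with the stated reason. A locale with no file of its own would simply run the base rows.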

Another nuance

When we got the first reports, we began to think about the most convenient way to display test results by locale. We decided it was most convenient to split the results so that each locale has its own copy of the Allure report. To aggregate the general information across locales, we quickly wrote a simple but nice wrapper.

The last thing that clouded our joy was individual runs hanging in the test environment. I forgot to mention that we chose classic Selenium as the test environment, as the one closest to a real user's. With a large number of checks, failures in Selenium cannot be avoided. Together with everyone's "favorite" false positives, they seriously get in the way of continuous integration and of a "green light" for production.

We thought it over and found a way out. We overcame the hangs by refining our wrapper: we added the ability to specify a maximum run time for an individual test, and if the test does not finish within that time, we forcibly restart it. The false positives were eliminated with the pytest rerun-xfails plugin. This plugin automatically reruns all failed tests; the number of attempts is again set in the YAML configuration file, per test or globally.
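The hang protection can be sketched with the standard library alone. This is a minimal illustration under assumptions, not the wrapper's actual code: `run_with_timeout` and its parameters are hypothetical names, and in the real system the limits come from the YAML configuration.

```python
import subprocess
import sys


def run_with_timeout(command, timeout_s, max_attempts=2):
    """Run a command, restarting it if it exceeds `timeout_s` seconds.

    Returns (exit_code, attempts_used). exit_code is None when every
    attempt hung and had to be killed.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            completed = subprocess.run(command, timeout=timeout_s)
            return completed.returncode, attempt
        except subprocess.TimeoutExpired:
            # subprocess.run has already killed the child process here,
            # so the loop can simply start the next attempt.
            continue
    return None, max_attempts
```

Note that this only restarts runs that hang. A test that finishes with a failure is returned as-is, which leaves rerunning failed tests to the plugin.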

Epilogue

And finally, here it is, the happiness of a novice automation engineer: a stable, convenient, working system. It is easy to maintain, lets us test as quickly as possible and without false positives, and provides very convenient reporting of test results.

PS

Friends, from your feedback here on Habr we would like to understand how interesting our experience is. We have an idea to publish the resulting ready-made solution as a Docker container.

Source: https://habr.com/ru/post/271049/
